Advances in Hyperspectral Image Classification Methods with Small Samples: A Review

Abstract

1. Introduction

- Existing reviews invariably categorize methods for hyperspectral image classification with small samples (HIC-SS) by learning paradigm. However, some learning paradigms lack a precise definition, especially those developed in recent years. Their boundaries are ambiguous, and the meaning of some has shifted as research has progressed. A taxonomy based on learning paradigms is therefore itself ambiguous in these cases.

- Most current reviews focus on deep learning methods. Although deep models dominate current research, several non-deep models have also been proposed and have achieved remarkable results. A comprehensive overview of research progress therefore needs to take non-deep models into account.

- Because HIC-SS research is developing rapidly, many methods have been proposed in the past two years that earlier reviews do not cover. The number of articles in this area is substantial, and only by including them can a more comprehensive picture of current developments be formed.

2. Taxonomy

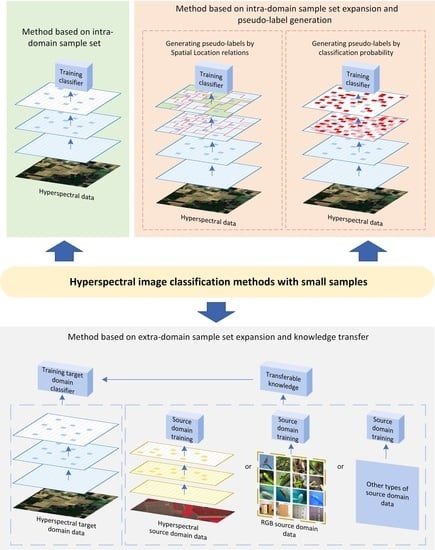

- Method based on intra-domain sample set (IS): This method uses only the labeled samples in the current operational domain to train the model. Because the available data are limited, it aims to extract as much useful information from them as possible. Common approaches include developing better feature extraction techniques and enlarging the training set with more effective sample augmentation.

- Method based on intra-domain sample set expansion and pseudo-label generation (ISE-PG): The key difference from the first method is that it uses not only the labeled data in the current operational domain but also part of the unlabeled data in the same domain. Specifically, a portion of the unlabeled data is selected and assigned pseudo-labels, which enlarges the training set. Both the selection of unlabeled samples and the generation of their pseudo-labels rely on the labeled samples and prior knowledge.

- Method based on extra-domain sample set expansion and knowledge transfer (ESE-KT): Like the second method, this method uses data beyond the labeled samples of the current domain for auxiliary training. Here, however, the auxiliary data come from other domains rather than the current operational domain. Representative works are the various transfer learning methods, including few-shot learning. In practice, even when little data is available in the current domain, data from other domains may be easier to obtain. Finding similarities between domains and applying the transferable knowledge to model training in the current domain is therefore an important research direction.
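To make the ISE-PG idea concrete, the following is a minimal sketch of training-set expansion with pseudo-labels. It is not one of the reviewed methods: the nearest-centroid rule, the function name `expand_with_pseudo_labels`, and the `margin` parameter are all assumptions chosen for illustration. Each unlabeled spectrum receives the label of its nearest class centroid, and it is kept only when that centroid is clearly closer than the runner-up.

```python
import numpy as np

def expand_with_pseudo_labels(x_lab, y_lab, x_unlab, margin=0.5):
    """Illustrative pseudo-labeling (hypothetical helper, not a reviewed method).

    x_lab:   (n_lab, n_bands) labeled spectra
    y_lab:   (n_lab,) integer class labels (at least two classes)
    x_unlab: (n_unlab, n_bands) unlabeled spectra from the same image
    margin:  assumed confidence rule; a sample is kept when its distance
             to the nearest centroid is <= margin * distance to the
             second-nearest centroid
    """
    classes = np.unique(y_lab)
    # One centroid per class, computed from the labeled samples only.
    centroids = np.stack([x_lab[y_lab == c].mean(axis=0) for c in classes])
    # Euclidean distance from every unlabeled sample to every centroid.
    dist = np.linalg.norm(x_unlab[:, None, :] - centroids[None, :, :], axis=2)
    pseudo = classes[np.argmin(dist, axis=1)]
    ranked = np.sort(dist, axis=1)
    keep = ranked[:, 0] <= margin * ranked[:, 1]
    # Enlarged training set: original labeled samples plus confident ones.
    x_new = np.concatenate([x_lab, x_unlab[keep]])
    y_new = np.concatenate([y_lab, pseudo[keep]])
    return x_new, y_new, keep

# Toy two-band example with two classes.
x_lab = np.array([[0.0, 0.0], [1.0, 0.0], [10.0, 10.0], [11.0, 10.0]])
y_lab = np.array([0, 0, 1, 1])
x_unlab = np.array([[0.5, 0.5], [5.5, 5.0], [10.0, 11.0]])
x_new, y_new, keep = expand_with_pseudo_labels(x_lab, y_lab, x_unlab)
print(keep)   # the middle sample is equidistant from both centroids and is rejected
print(y_new)
```

The margin test stands in for the "prior knowledge" mentioned above; published ISE-PG methods typically use richer cues such as spatial neighborhoods or superpixels.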

3. Methods

3.1. Methods Based on Intra-Domain Sample Set

3.2. Method Based on Intra-Domain Sample Set Expansion and Pseudo-Label Generation

3.3. Method Based on Extra-Domain Sample Set Expansion and Knowledge Transfer

4. Performance

4.1. Datasets

4.2. Sampling Strategy

4.3. Performance Analysis

4.4. Performance with Different Numbers of Training Samples

4.5. Running Time

5. Perspectives

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ghamisi, P.; Yokoya, N.; Li, J.; Liao, W.; Liu, S.; Plaza, J.; Rasti, B.; Plaza, A. Advances in Hyperspectral Image and Signal Processing: A Comprehensive Overview of the State of the Art. IEEE Geosci. Remote Sens. Mag. 2017, 5, 37–78.

- Patro, R.N.; Subudhi, S.; Biswal, P.K.; Dell’Acqua, F. A Review of Unsupervised Band Selection Techniques: Land Cover Classification for Hyperspectral Earth Observation Data. IEEE Geosci. Remote Sens. Mag. 2021, 9, 72–111.

- Liang, L.; Di, L.; Zhang, L.; Deng, M.; Qin, Z.; Zhao, S.; Lin, H. Estimation of crop LAI using hyperspectral vegetation indices and a hybrid inversion method. Remote Sens. Environ. 2015, 165, 123–134.

- Yang, X.; Yu, Y. Estimating Soil Salinity Under Various Moisture Conditions: An Experimental Study. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2525–2533.

- Yokoya, N.; Chan, J.C.W.; Segl, K. Potential of Resolution-Enhanced Hyperspectral Data for Mineral Mapping Using Simulated EnMAP and Sentinel-2 Images. Remote Sens. 2016, 8, 172.

- Chang, C.I. Hyperspectral Data Exploitation: Theory and Applications; John Wiley and Sons: Hoboken, NJ, USA, 2007.

- Cao, X.; Wang, X.; Wang, D.; Zhao, J.; Jiao, L. Spectral–Spatial Hyperspectral Image Classification Using Cascaded Markov Random Fields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4861–4872.

- Cao, X.; Wang, D.; Wang, X.; Zhao, J.; Jiao, L. Hyperspectral imagery classification with cascaded support vector machines and multi-scale superpixel segmentation. Int. J. Remote Sens. 2020, 41, 4530–4550.

- Ghamisi, P.; Maggiori, E.; Li, S.; Souza, R.; Tarabalka, Y.; Moser, G.; De Giorgi, A.; Fang, L.; Chen, Y.; Chi, M.; et al. New Frontiers in Spectral-Spatial Hyperspectral Image Classification: The Latest Advances Based on Mathematical Morphology, Markov Random Fields, Segmentation, Sparse Representation, and Deep Learning. IEEE Geosci. Remote Sens. Mag. 2018, 6, 10–43.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Cubero-Castan, M.; Chanussot, J.; Achard, V.; Briottet, X.; Shimoni, M. A Physics-Based Unmixing Method to Estimate Subpixel Temperatures on Mixed Pixels. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1894–1906.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Paoletti, M.; Haut, J.; Plaza, J.; Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317.

- Cen, Y.; Zhang, L.F.; Zhang, X.; Wang, Y.M.; Qi, W.C.; Tang, S.L.; Zhang, P. Aerial hyperspectral remote sensing classification dataset of Xiongan New Area (Matiwan Village). J. Remote Sens. 2020, 24, 1299–1306.

- Yang, J.M.; Yu, P.T.; Kuo, B.C.; Huang, H.Y. A novel non-parametric weighted feature extraction method for classification of hyperspectral image with limited training samples. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–27 July 2007; pp. 1552–1555.

- Prasad, S.; Bruce, L.M. Overcoming the Small Sample Size Problem in Hyperspectral Classification and Detection Tasks. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 6–11 July 2008; Volume 5, pp. V-381–V-384.

- Imani, M.; Ghassemian, H. Feature Extraction Using Attraction Points for Classification of Hyperspectral Images in a Small Sample Size Situation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1986–1990.

- Imani, M.; Ghassemian, H. Band Clustering-Based Feature Extraction for Classification of Hyperspectral Images Using Limited Training Samples. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1325–1329.

- Sami ul Haq, Q.; Tao, L.; Sun, F.; Yang, S. A Fast and Robust Sparse Approach for Hyperspectral Data Classification Using a Few Labeled Samples. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2287–2302.

- Li, F.; Xu, L.; Siva, P.; Wong, A.; Clausi, D.A. Hyperspectral Image Classification with Limited Labeled Training Samples Using Enhanced Ensemble Learning and Conditional Random Fields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2427–2438.

- Li, F.; Wong, A.; Clausi, D.A. Combining rotation forests and adaboost for hyperspectral imagery classification using few labeled samples. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 4660–4663.

- Xia, J.; Chanussot, J.; Du, P.; He, X. Rotation-Based Support Vector Machine Ensemble in Classification of Hyperspectral Data with Limited Training Samples. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1519–1531.

- Chen, J.; Xia, J.; Du, P.; Chanussot, J.; Xue, Z.; Xie, X. Kernel Supervised Ensemble Classifier for the Classification of Hyperspectral Data Using Few Labeled Samples. Remote Sens. 2016, 8, 601.

- Yu, S.; Jia, S.; Xu, C. Convolutional neural networks for hyperspectral image classification. Neurocomputing 2017, 219, 88–98.

- Pan, B.; Shi, Z.; Xu, X. MugNet: Deep learning for hyperspectral image classification using limited samples. ISPRS J. Photogramm. Remote Sens. 2018, 145, 108–119.

- Liu, B.; Yu, X.; Yu, A.; Zhang, P.; Wan, G.; Wang, R. Deep Few-Shot Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2290–2304.

- Zhao, W.; Li, S.; Li, A.; Zhang, B.; Li, Y. Hyperspectral images classification with convolutional neural network and textural feature using limited training samples. Remote Sens. Lett. 2019, 10, 449–458.

- Fang, B.; Li, Y.; Zhang, H.; Chan, J.C.W. Collaborative learning of lightweight convolutional neural network and deep clustering for hyperspectral image semi-supervised classification with limited training samples. ISPRS J. Photogramm. Remote Sens. 2020, 161, 164–178.

- Gao, K.; Liu, B.; Yu, X.; Qin, J.; Zhang, P.; Tan, X. Deep Relation Network for Hyperspectral Image Few-Shot Classification. Remote Sens. 2020, 12, 923.

- Gao, K.; Guo, W.; Yu, X.; Liu, B.; Yu, A.; Wei, X. Deep Induction Network for Small Samples Classification of Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3462–3477.

- Feng, Y.; Zheng, J.; Qin, M.; Bai, C.; Zhang, J. 3D Octave and 2D Vanilla Mixed Convolutional Neural Network for Hyperspectral Image Classification with Limited Samples. Remote Sens. 2021, 13, 4407.

- Huang, L.; Chen, Y. Dual-Path Siamese CNN for Hyperspectral Image Classification with Limited Training Samples. IEEE Geosci. Remote Sens. Lett. 2021, 18, 518–522.

- Li, Z.; Liu, M.; Chen, Y.; Xu, Y.; Li, W.; Du, Q. Deep Cross-Domain Few-Shot Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

- Bai, J.; Yuan, A.; Xiao, Z.; Zhou, H.; Wang, D.; Jiang, H.; Jiao, L. Class Incremental Learning with Few-Shots Based on Linear Programming for Hyperspectral Image Classification. IEEE Trans. Cybern. 2022, 52, 5474–5485.

- Xue, Z.; Zhou, Y.; Du, P. S3Net: Spectral–Spatial Siamese Network for Few-Shot Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.

- Liu, B.; Yu, X. Patch-Free Bilateral Network for Hyperspectral Image Classification Using Limited Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10794–10807.

- Gao, K.; Liu, B.; Yu, X.; Zhang, P.; Tan, X.; Sun, Y. Small sample classification of hyperspectral image using model-agnostic meta-learning algorithm and convolutional neural network. Int. J. Remote Sens. 2021, 42, 3090–3122.

- Zhao, L.; Luo, W.; Liao, Q.; Chen, S.; Wu, J. Hyperspectral Image Classification with Contrastive Self-Supervised Learning Under Limited Labeled Samples. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Qu, Y.; Baghbaderani, R.K.; Qi, H. Few-Shot Hyperspectral Image Classification Through Multitask Transfer Learning. In Proceedings of the 2019 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019; pp. 1–5.

- Li, X.; Cao, Z.; Zhao, L.; Jiang, J. ALPN: Active-Learning-Based Prototypical Network for Few-Shot Hyperspectral Imagery Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Thoreau, R.; Achard, V.; Risser, L.; Berthelot, B.; Briottet, X. Active Learning for Hyperspectral Image Classification: A comparative review. IEEE Geosci. Remote Sens. Mag. 2022, 10, 256–278.

- Zhou, F.; Zhang, L.; Wei, W.; Bai, Z.; Zhang, Y. Meta Transfer Learning for Few-Shot Hyperspectral Image Classification. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3681–3684.

- Gao, K.; Liu, B.; Yu, X.; Yu, A. Unsupervised Meta Learning with Multiview Constraints for Hyperspectral Image Small Sample set Classification. IEEE Trans. Image Process. 2022, 31, 3449–3462.

- Jia, S.; Jiang, S.; Lin, Z.; Li, N.; Xu, M.; Yu, S. A survey: Deep learning for hyperspectral image classification with few labeled samples. Neurocomputing 2021, 448, 179–204.

- Li, X.; Li, Z.; Qiu, H.; Hou, G.; Fan, P. An overview of hyperspectral image feature extraction, classification methods and the methods based on small samples. Appl. Spectrosc. Rev. 2021, 58, 367–400.

- Wambugu, N.; Chen, Y.; Xiao, Z.; Tan, K.; Wei, M.; Liu, X.; Li, J. Hyperspectral image classification on insufficient-sample and feature learning using deep neural networks: A review. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102603.

- Doersch, C.; Gupta, A.; Zisserman, A. CrossTransformers: Spatially-aware few-shot transfer. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 21981–21993.

- Luo, X.; Wei, L.; Wen, L.; Yang, J.; Xie, L.; Xu, Z.; Tian, Q. Rectifying the shortcut learning of background for few-shot learning. Adv. Neural Inf. Process. Syst. 2021, 34, 13073–13085.

- Luo, X.; Xu, J.; Xu, Z. Channel importance matters in few-shot image classification. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 14542–14559.

- Karaca, A.C. Spatial aware probabilistic multi-kernel collaborative representation for hyperspectral image classification using few labelled samples. Int. J. Remote Sens. 2021, 42, 839–864.

- Karaca, A.C. Domain Transform Filter and Spatial-Aware Collaborative Representation for Hyperspectral Image Classification Using Few Labeled Samples. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1264–1268.

- Jia, S.; Zhu, Z.; Shen, L.; Li, Q. A Two-Stage Feature Selection Framework for Hyperspectral Image Classification Using Few Labeled Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1023–1035.

- Wang, A.; Liu, C.; Xue, D.; Wu, H.; Zhang, Y.; Liu, M. Depthwise Separable Relation Network for Small Sample Hyperspectral Image Classification. Symmetry 2021, 13, 1673.

- Pan, H.; Liu, M.; Ge, H.; Wang, L. One-Shot Dense Network with Polarized Attention for Hyperspectral Image Classification. Remote Sens. 2022, 14, 2265.

- Zhang, C.; Yue, J.; Qin, Q. Deep Quadruplet Network for Hyperspectral Image Classification with a Small Number of Samples. Remote Sens. 2020, 12, 647.

- Dong, S.; Quan, Y.; Feng, W.; Dauphin, G.; Gao, L.; Xing, M. A Pixel Cluster CNN and Spectral-Spatial Fusion Algorithm for Hyperspectral Image Classification with Small-Size Training Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4101–4114.

- Pal, D.; Bundele, V.; Banerjee, B.; Jeppu, Y. SPN: Stable Prototypical Network for Few-Shot Learning-Based Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Ding, C.; Chen, Y.; Li, R.; Wen, D.; Xie, X.; Zhang, L.; Wei, W.; Zhang, Y. Integrating Hybrid Pyramid Feature Fusion and Coordinate Attention for Effective Small Sample Hyperspectral Image Classification. Remote Sens. 2022, 14, 2355.

- Feng, F.; Zhang, Y.; Zhang, J.; Liu, B. Small Sample Hyperspectral Image Classification Based on Cascade Fusion of Mixed Spatial-Spectral Features and Second-Order Pooling. Remote Sens. 2022, 14, 505.

- Wu, H.; Wang, L.; Shi, Y. Convolution neural network method for small-sample classification of hyperspectral images. J. Image Graph. 2021, 26, 2009–2020.

- Liu, B.; Yu, A.; Gao, K.; Wang, Y.; Yu, X.; Zhang, P. Multiscale nested U-Net for small sample classification of hyperspectral images. J. Appl. Remote Sens. 2022, 16, 016506.

- Liu, J.; Zhang, K.; Wu, S.; Shi, H.; Zhao, Y.; Sun, Y.; Zhuang, H.; Fu, E. An Investigation of a Multidimensional CNN Combined with an Attention Mechanism Model to Resolve Small-Sample Problems in Hyperspectral Image Classification. Remote Sens. 2022, 14, 785.

- Cao, Z.; Li, X.; Jiang, J.; Zhao, L. 3D convolutional siamese network for few-shot hyperspectral classification. J. Appl. Remote Sens. 2020, 14, 048504.

- Li, N.; Zhou, D.; Shi, J.; Zheng, X.; Wu, T.; Yang, Z. Graph-Based Deep Multitask Few-Shot Learning for Hyperspectral Image Classification. Remote Sens. 2022, 14, 2246.

- Wei, W.; Zhang, L.; Li, Y.; Wang, C.; Zhang, Y. Intraclass Similarity Structure Representation-Based Hyperspectral Imagery Classification with Few Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1045–1054.

- Ding, C.; Li, Y.; Wen, Y.; Zheng, M.; Zhang, L.; Wei, W.; Zhang, Y. Boosting Few-Shot Hyperspectral Image Classification Using Pseudo-Label Learning. Remote Sens. 2021, 13, 3539.

- Cui, B.; Ma, X.; Zhao, F.; Wu, Y. A novel hyperspectral image classification approach based on multiresolution segmentation with a few labeled samples. Int. J. Adv. Robot. Syst. 2017, 14, 1729881417710219.

- Zheng, C.; Wang, N.; Cui, J. Hyperspectral Image Classification with Small Training Sample Size Using Superpixel-Guided Training Sample Enlargement. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7307–7316.

- Romaszewski, M.; Głomb, P.; Cholewa, M. Semi-supervised hyperspectral classification from a small number of training samples using a co-training approach. ISPRS J. Photogramm. Remote Sens. 2016, 121, 60–76.

- Zhang, S.; Kang, X.; Duan, P.; Sun, B.; Li, S. Polygon Structure-Guided Hyperspectral Image Classification with Single Sample for Strong Geometric Characteristics Scenes. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Hu, X.; Si, H. Hyperspectral Image Classification Method with Small Sample Set Based on Adaptive Dictionary. Trans. Chin. Soc. Agric. Mach. 2021, 52, 154–161.

- Feng, W.; Huang, W.; Dauphin, G.; Xia, J.; Quan, Y.; Ye, H.; Dong, Y. Ensemble Margin Based Semi-Supervised Random Forest for the Classification of Hyperspectral Image with Limited Training Data. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 971–974.

- Feng, W.; Quan, Y.; Dauphin, G.; Li, Q.; Gao, L.; Huang, W.; Xia, J.; Zhu, W.; Xing, M. Semi-supervised rotation forest based on ensemble margin theory for the classification of hyperspectral image with limited training data. Inf. Sci. 2021, 575, 611–638.

- Yang, J.; Zhao, Y.Q.; Chan, J.C.W. Learning and Transferring Deep Joint Spectral–Spatial Features for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4729–4742.

- Liang, H.; Fu, W.; Yi, F. A survey of recent advances in transfer learning. In Proceedings of the 2019 IEEE 19th international conference on communication technology (ICCT), Xi’an, China, 16–19 October 2019; pp. 1516–1523.

- Liu, B.; Yu, X.; Yu, A.; Wan, G. Deep convolutional recurrent neural network with transfer learning for hyperspectral image classification. J. Appl. Remote Sens. 2018, 12, 026028.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to Compare: Relation Network for Few-Shot Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching networks for one shot learning. Adv. Neural Inf. Process. Syst. 2016, 29, 3637–3645.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. Adv. Neural Inf. Process. Syst. 2017, 30, 4080–4090.

- Chen, W.Y.; Liu, Y.C.; Kira, Z.; Wang, Y.C.F.; Huang, J.B. A closer look at few-shot classification. arXiv 2019, arXiv:1904.04232.

- Ren, M.; Triantafillou, E.; Ravi, S.; Snell, J.; Swersky, K.; Tenenbaum, J.B.; Larochelle, H.; Zemel, R.S. Meta-learning for semi-supervised few-shot classification. arXiv 2018, arXiv:1803.00676.

- Andrychowicz, M.; Denil, M.; Gomez, S.; Hoffman, M.W.; Pfau, D.; Schaul, T.; Shillingford, B.; De Freitas, N. Learning to learn by gradient descent by gradient descent. Adv. Neural Inf. Process. Syst. 2016, 29, 3988–3996.

- Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-sgd: Learning to learn quickly for few-shot learning. arXiv 2017, arXiv:1707.09835.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Liu, B.; Zuo, X.; Tan, X.; Yu, A.; Guo, W. A Deep few-shot learning algorithm for hyperspectral image classification. Acta Geod. Cartogr. Sin. 2020, 49, 1331–1342.

- Zhang, C.; Yue, J.; Qin, Q. Global Prototypical Network for Few-Shot Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4748–4759.

- Liang, X.; Zhang, Y.; Zhang, J. Attention Multisource Fusion-Based Deep Few-Shot Learning for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8773–8788.

- Bai, J.; Huang, S.; Xiao, Z.; Li, X.; Zhu, Y.; Regan, A.C.; Jiao, L. Few-Shot Hyperspectral Image Classification Based on Adaptive Subspaces and Feature Transformation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Wang, Y.; Liu, M.; Yang, Y.; Li, Z.; Du, Q.; Chen, Y.; Li, F.; Yang, H. Heterogeneous Few-Shot Learning for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Zuo, X.; Yu, X.; Liu, B.; Zhang, P.; Tan, X. FSL-EGNN: Edge-Labeling Graph Neural Network for Hyperspectral Image Few-Shot Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

- Xinyi, T.; Jihao, Y.; Bingnan, H.; Hui, Q. Few-Shot Learning with Attention-Weighted Graph Convolutional Networks For Hyperspectral Image Classification. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 1686–1690.

- Huang, K.; Deng, X.; Geng, J.; Jiang, W. Self-Attention and Mutual-Attention for Few-Shot Hyperspectral Image Classification. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 2230–2233.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Melgani, F.; Bruzzone, L. Support vector machines for classification of hyperspectral remote-sensing images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; Volume 1, pp. 506–508.

- Liu, B.; Yu, A.; Yu, X.; Wang, R.; Gao, K.; Guo, W. Deep Multiview Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7758–7772.

- Xu, B.; Hou, W.; Wei, Y.; Wang, Y.; Li, X. Minimalistic fully convolution networks (MFCN): Pixel-level classification for hyperspectral image with few labeled samples. Opt. Express 2022, 30, 16585–16605.

- Audebert, N.; Le Saux, B.; Lefevre, S. Deep Learning for Classification of Hyperspectral Data: A Comparative Review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 159–173.

- Cao, X.; Liu, Z.; Li, X.; Xiao, Q.; Feng, J.; Jiao, L. Nonoverlapped Sampling for Hyperspectral Imagery: Performance Evaluation and a Cotraining-Based Classification Strategy. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

| Category | Method | Year |
|---|---|---|
| Based on intra-domain sample set (IS) | Support vector machine (SVM) [95] | 2002 |
| | Deep multiview learning CNN (DMVL) [96] | 2021 |
| | Integrating hybrid pyramid feature fusion and coordinate attention CNN (IHP-CA) [58] | 2022 |
| | Minimalistic fully convolutional network (MFCN) [97] | 2022 |
| | S3Net: Spectral–spatial siamese network (S3Net) [35] | 2022 |
| Based on intra-domain sample set expansion and pseudo-label generation (ISE-PG) | Spectral–spatial region growing co-training approach and SVM (CTA) [69] | 2016 |
| | Superpixel-guided training sample enlargement and distance-weighted linear regression classifier (STSE-DWLR) [68] | 2019 |
| | Polygon structure-guided training sample enlargement and SVM (PSG) [70] | 2022 |
| Based on extra-domain sample set expansion and knowledge transfer (ESE-KT) | Deep relation network (RN-FSC) [29] | 2020 |
| | Unsupervised Meta Learning CNN With Multiview Constraints (UM2L) [43] | 2022 |
| | Deep cross-domain few-shot learning CNN (DCFSL) [33] | 2022 |
| | Heterogeneous few-shot learning CNN (HFSL) [90] | 2022 |

| Indian Pines land-cover type | Samples | Salinas Valley land-cover type | Samples | Pavia University land-cover type | Samples | WHU-Hi-LongKou land-cover type | Samples |
|---|---|---|---|---|---|---|---|
| Background | 10,776 | Background | 56,975 | Background | 164,624 | Background | 15,458 |
| Alfalfa | 46 | Brocoli-green-weeds-1 | 2009 | Asphalt | 6631 | Corn | 34,511 |
| Corn-notill | 1428 | Brocoli-green-weeds-2 | 3726 | Meadows | 18,649 | Cotton | 8374 |
| Corn-mintill | 830 | Fallow | 1976 | Gravel | 2099 | Sesame | 3031 |
| Corn | 237 | Fallow-rough-plow | 1394 | Trees | 3064 | Broad-leaf soybean | 63,212 |
| Grass-pasture | 483 | Fallow-smooth | 2678 | Painted metal sheets | 1345 | Narrow-leaf soybean | 4151 |
| Grass-trees | 730 | Stubble | 3959 | Bare Soil | 5029 | Rice | 11,854 |
| Grass-pasture-mowed | 28 | Celery | 3579 | Bitumen | 1330 | Water | 67,056 |
| Hay-windrowed | 478 | Grapes-untrained | 11,271 | Self-Blocking Bricks | 3682 | Roads and houses | 7124 |
| Oats | 20 | Soil-vinyard-develop | 6203 | Shadows | 947 | Mixed weed | 5229 |
| Soybean-notill | 972 | Corn-senesced-green-weeds | 3278 | | | | |
| Soybean-mintill | 2455 | Lettuce-romaine-4wk | 1068 | | | | |
| Soybean-clean | 593 | Lettuce-romaine-5wk | 1927 | | | | |
| Wheat | 205 | Lettuce-romaine-6wk | 916 | | | | |
| Woods | 1265 | Lettuce-romaine-7wk | 1070 | | | | |
| Buildings-Grass-Trees-Drives | 386 | Vinyard-untrained | 7268 | | | | |
| Stone-Steel-Towers | 93 | Vinyard-vertical-trellis | 1807 | | | | |
| Total samples | 21,025 | Total samples | 111,104 | Total samples | 207,400 | Total samples | 220,000 |

| Class | Methods | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL | |

| Alfalfa | 28.19 | 32.78 | 61.62 | 26.51 | 99.76 | 99.14 | 98.05 | 71.76 | 25.62 | 63.63 | 94.63 | 99.27 |

| Corn-notill | 39.74 | 77.49 | 79.12 | 46.47 | 47.38 | 77.13 | 57.81 | 28.53 | 59.10 | 60.01 | 44.26 | 57.62 |

| Corn-mintill | 37.05 | 68.86 | 55.64 | 41.70 | 58.82 | 71.96 | 74.10 | 39.66 | 30.24 | 67.55 | 44.28 | 70.19 |

| Corn | 26.06 | 65.47 | 90.98 | 46.16 | 89.61 | 71.82 | 97.24 | 29.15 | 57.44 | 67.41 | 76.47 | 91.42 |

| Grass-pasture | 42.93 | 81.54 | 91.78 | 64.19 | 78.97 | 92.76 | 85.75 | 70.86 | 52.87 | 82.01 | 75.31 | 72.03 |

| Grass-trees | 80.92 | 66.07 | 33.52 | 77.75 | 95.45 | 90.89 | 92.36 | 90.33 | 84.54 | 43.46 | 84.70 | 79.86 |

| Grass-pasture-mowed | 23.70 | 20.30 | 99.11 | 24.16 | 100 | 58.77 | 96.52 | 41.69 | 38.29 | 89.40 | 98.26 | 100 |

| Hay-windrowed | 92.34 | 93.95 | 24.68 | 91.77 | 83.30 | 97.39 | 100 | 95.08 | 93.58 | 20.72 | 84.33 | 97.55 |

| Oats | 12.92 | 15.09 | 74.42 | 7.58 | 100 | 81.21 | 98.67 | 3.53 | 15.58 | 50.36 | 100 | 100 |

| Soybean-notill | 38.63 | 69.37 | 84.86 | 55.44 | 57.23 | 69.32 | 73.96 | 43.98 | 37.98 | 78.08 | 61.74 | 57.96 |

| Soybean-mintill | 56.25 | 79.28 | 59.51 | 64.40 | 58.13 | 88.50 | 66.73 | 59.48 | 54.70 | 56.18 | 61.40 | 60.86 |

| Soybean-clean | 22.23 | 64.15 | 74.22 | 38.82 | 60.41 | 75.66 | 73.18 | 27.55 | 56.37 | 64.83 | 45.60 | 69.90 |

| Wheat | 83.20 | 53.13 | 93.93 | 56.21 | 99.05 | 83.99 | 100 | 78.58 | 52.75 | 84.44 | 97.95 | 98.40 |

| Woods | 83.40 | 89.63 | 62.06 | 87.18 | 83.16 | 97.91 | 92.94 | 89.87 | 88.00 | 61.74 | 84.90 | 93.75 |

| Buildings-Grass-Trees-Drives | 27.96 | 72.82 | 59.21 | 60.61 | 76.27 | 94.07 | 99.74 | 42.97 | 50.55 | 43.50 | 66.61 | 90.10 |

| Stone-Steel-Towers | 85.20 | 23.72 | 52.16 | 52.71 | 99.43 | 90.61 | 91.93 | 99.74 | 26.83 | 41.17 | 98.86 | 95.80 |

| OA (%) | 47.98 ± 2.60 | 67.52 ± 5.07 | 75.24 ± 3.11 | 57.59 ± 3.44 | 67.52 ± 3.41 | 82.87 ± 1.94 | 77.70 ± 4.24 | 48.94 ± 6.63 | 55.05 ± 5.22 | 63.08 ± 4.04 | 64.89 ± 2.57 | 72.21 ± 4.05 |

| AA (%) | 48.79 ± 1.81 | 60.86 ± 4.45 | 68.86 ± 3.63 | 52.61 ± 3.56 | 80.43 ± 1.35 | 83.82 ± 3.42 | 87.43 ± 1.86 | 57.05 ± 3.98 | 51.53 ± 3.21 | 60.87 ± 3.16 | 76.21 ± 2.13 | 83.42 ± 2.39 |

| Kappa × 100 | 41.91 ± 2.57 | 63.67 ± 5.36 | 72.22 ± 3.35 | 52.37 ± 3.61 | 63.57 ± 3.58 | 80.56 ± 2.18 | 74.96 ± 4.60 | 43.43 ± 6.44 | 50.02 ± 5.53 | 58.83 ± 4.29 | 60.37 ± 2.89 | 68.75 ± 4.43 |
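The three summary rows in these tables (OA, AA, and Kappa × 100) can all be derived from a confusion matrix. The following is a minimal sketch using the standard definitions of overall accuracy, average per-class accuracy, and Cohen's kappa; the function name `classification_metrics` and the toy matrix are illustrative assumptions and are not taken from any of the compared methods.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa, per-class accuracy) as fractions in [0, 1].

    confusion[i][j] is the number of test samples whose true class is i
    and whose predicted class is j. Every class is assumed to have at
    least one test sample (otherwise per-class accuracy is undefined).
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)

    # Overall accuracy: correctly classified samples over all samples.
    correct = sum(confusion[i][i] for i in range(n_classes))
    oa = correct / total

    # Per-class accuracy (recall of each class) and its unweighted mean.
    row_totals = [sum(row) for row in confusion]
    per_class = [confusion[i][i] / row_totals[i] for i in range(n_classes)]
    aa = sum(per_class) / n_classes

    # Cohen's kappa: agreement corrected for chance agreement p_e.
    col_totals = [sum(confusion[i][j] for i in range(n_classes))
                  for j in range(n_classes)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (oa - p_e) / (1 - p_e)

    return oa, aa, kappa, per_class


# Toy two-class example (hypothetical counts, not from the tables).
oa, aa, kappa, per_class = classification_metrics([[8, 2], [1, 9]])
print(f"OA = {100 * oa:.2f}%, AA = {100 * aa:.2f}%, Kappa x 100 = {100 * kappa:.2f}")
```

Because AA weights every class equally while OA weights every sample equally, a method can score a much higher AA than OA when it does well on small classes such as Oats or Alfalfa but poorly on large ones, which is the pattern several columns above show.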

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Brocoli-green-weeds-1 | 89.94 | 97.46 | 99.92 | 86.27 | 99.91 | 100 | 99.40 | 97.90 | 83.09 | 94.28 | 99.32 | 99.27 |

| Brocoli-green-weeds-2 | 97.36 | 99.89 | 99.63 | 92.43 | 94.24 | 99.93 | 99.85 | 99.71 | 95.35 | 94.80 | 99.26 | 93.03 |

| Fallow | 84.33 | 95.35 | 84.03 | 91.50 | 96.94 | 93.66 | 96.91 | 96.42 | 91.24 | 84.32 | 88.98 | 96.41 |

| Fallow-rough-plow | 99.31 | 69.46 | 99.00 | 91.99 | 98.80 | 92.01 | 99.82 | 98.96 | 90.29 | 93.31 | 99.61 | 99.59 |

| Fallow-smooth | 92.13 | 93.10 | 99.26 | 93.79 | 97.52 | 99.39 | 98.68 | 92.09 | 94.19 | 97.83 | 91.67 | 96.45 |

| Stubble | 97.13 | 94.89 | 97.47 | 99.78 | 99.28 | 99.78 | 99.92 | 97.08 | 99.77 | 95.19 | 99.34 | 99.65 |

| Celery | 96.58 | 97.98 | 94.37 | 90.91 | 98.89 | 99.66 | 99.80 | 99.42 | 93.08 | 88.90 | 99.34 | 97.70 |

| Grapes-untrained | 66.48 | 95.45 | 98.93 | 83.63 | 81.66 | 92.39 | 86.16 | 79.22 | 87.73 | 96.83 | 75.39 | 76.59 |

| Soil-vinyard-develop | 97.41 | 99.99 | 97.65 | 94.97 | 99.71 | 98.74 | 96.75 | 93.01 | 97.98 | 91.77 | 99.39 | 93.12 |

| Corn-senesced-green-weeds | 76.63 | 98.97 | 93.61 | 83.28 | 92.57 | 96.41 | 93.77 | 91.14 | 91.85 | 94.55 | 81.41 | 90.90 |

| Lettuce-romaine-4wk | 76.39 | 93.79 | 98.74 | 79.56 | 99.53 | 97.64 | 97.76 | 66.28 | 91.01 | 89.67 | 98.17 | 98.86 |

| Lettuce-romaine-5wk | 88.41 | 99.39 | 93.92 | 88.47 | 88.67 | 99.45 | 97.13 | 78.33 | 93.44 | 91.04 | 99.34 | 98.13 |

| Lettuce-romaine-6wk | 91.20 | 88.24 | 84.93 | 78.45 | 97.96 | 89.18 | 97.65 | 72.47 | 84.11 | 86.07 | 99.28 | 99.09 |

| Lettuce-romaine-7wk | 79.13 | 67.43 | 78.56 | 79.44 | 91.57 | 85.53 | 94.75 | 63.24 | 85.73 | 63.13 | 98.08 | 96.35 |

| Vinyard-untrained | 46.37 | 84.52 | 99.79 | 64.50 | 75.39 | 91.98 | 94.60 | 45.34 | 62.53 | 88.32 | 75.11 | 57.86 |

| Vinyard-vertical-trellis | 91.23 | 96.75 | 99.23 | 78.43 | 96.10 | 99.84 | 99.17 | 99.39 | 83.38 | 87.76 | 89.92 | 95.27 |

| OA (%) | 79.50 ± 3.67 | 92.33 ± 2.52 | 93.36 ± 1.49 | 83.89 ± 2.64 | 90.85 ± 1.29 | 95.67 ± 0.60 | 95.09 ± 2.47 | 82.02 ± 7.02 | 85.14 ± 4.30 | 85.90 ± 4.43 | 88.89 ± 2.16 | 88.54 ± 2.11 |

| AA (%) | 85.63 ± 2.23 | 92.04 ± 1.93 | 94.93 ± 1.20 | 86.09 ± 2.16 | 94.29 ± 1.20 | 95.98 ± 0.82 | 97.01 ± 1.59 | 85.63 ± 3.69 | 89.04 ± 2.07 | 89.86 ± 2.32 | 93.35 ± 1.37 | 93.71 ± 1.09 |

| Kappa × 100 | 77.26 ± 4.47 | 91.48 ± 2.78 | 92.63 ± 1.65 | 82.17 ± 2.89 | 89.82 ± 1.44 | 95.18 ± 0.45 | 94.54 ± 2.75 | 80.01 ± 7.74 | 87.63 ± 3.67 | 84.42 ± 4.84 | 87.66 ± 2.36 | 87.26 ± 3.80 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Asphalt | 72.42 | 71.16 | 98.03 | 81.15 | 81.76 | 97.35 | 84.19 | 84.49 | 86.15 | 93.81 | 73.99 | 75.46 |

| Meadows | 82.96 | 93.94 | 64.11 | 87.59 | 75.51 | 92.96 | 73.72 | 86.92 | 97.43 | 55.88 | 84.80 | 90.49 |

| Gravel | 40.71 | 81.41 | 60.73 | 46.70 | 70.08 | 61.96 | 99.58 | 67.29 | 43.66 | 54.64 | 60.03 | 75.86 |

| Trees | 67.90 | 47.77 | 92.29 | 65.85 | 85.83 | 45.43 | 85.89 | 73.02 | 71.61 | 79.33 | 93.01 | 95.28 |

| Painted metal sheets | 99.99 | 86.21 | 78.12 | 98.21 | 99.93 | 73.21 | 99.80 | 90.56 | 95.18 | 54.86 | 99.23 | 99.81 |

| Bare soil | 27.83 | 94.21 | 90.53 | 42.44 | 64.17 | 72.87 | 99.95 | 40.32 | 46.00 | 70.56 | 75.14 | 86.65 |

| Bitumen | 41.89 | 82.98 | 64.25 | 54.08 | 95.14 | 83.83 | 99.98 | 51.30 | 44.50 | 75.41 | 79.54 | 91.84 |

| Self-blocking bricks | 54.84 | 81.75 | 71.63 | 66.05 | 72.45 | 70.81 | 82.95 | 62.14 | 75.04 | 66.91 | 68.25 | 96.28 |

| Shadows | 81.75 | 23.81 | 87.46 | 91.09 | 94.90 | 71.31 | 64.16 | 89.83 | 76.27 | 88.08 | 97.73 | 99.06 |

| OA (%) | 59.54 ± 2.13 | 77.48 ± 7.24 | 80.46 ± 6.22 | 67.29 ± 5.37 | 77.15 ± 8.04 | 77.07 ± 5.13 | 82.78 ± 7.72 | 66.12 ± 8.55 | 71.94 ± 4.54 | 73.41 ± 6.23 | 80.51 ± 2.64 | 88.35 ± 3.58 |

| AA (%) | 63.36 ± 3.19 | 73.69 ± 4.77 | 78.84 ± 5.35 | 70.35 ± 3.92 | 82.19 ± 3.15 | 74.41 ± 3.52 | 87.80 ± 3.92 | 72.21 ± 7.09 | 70.93 ± 3.38 | 71.01 ± 3.78 | 81.30 ± 1.64 | 90.08 ± 2.26 |

| Kappa × 100 | 49.49 ± 2.13 | 71.14 ± 8.12 | 75.30 ± 7.45 | 59.21 ± 6.07 | 71.02 ± 8.89 | 70.88 ± 5.90 | 78.49 ± 8.84 | 58.02 ± 8.85 | 64.92 ± 5.11 | 66.66 ± 7.05 | 74.80 ± 3.12 | 84.83 ± 4.42 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Corn | 79.49 | 82.11 | 85.39 | 92.87 | 69.47 | 95.36 | 94.51 | 93.74 | 96.02 | 91.00 | 98.34 | 98.17 |

| Cotton | 26.58 | 91.56 | 91.20 | 61.56 | 87.28 | 72.26 | 97.04 | 51.42 | 86.79 | 24.33 | 87.96 | 93.85 |

| Sesame | 27.27 | 60.29 | 91.01 | 52.55 | 95.87 | 65.65 | 99.09 | 59.75 | 32.16 | 95.46 | 87.22 | 83.34 |

| Broad-leaf soybean | 93.11 | 86.58 | 58.25 | 96.06 | 54.63 | 96.88 | 87.22 | 97.30 | 97.07 | 50.17 | 88.40 | 82.73 |

| Narrow-leaf soybean | 27.30 | 44.23 | 97.26 | 35.12 | 93.80 | 56.83 | 94.23 | 66.72 | 55.25 | 77.10 | 89.56 | 94.27 |

| Rice | 78.71 | 76.33 | 99.07 | 95.74 | 78.64 | 98.71 | 95.57 | 94.05 | 72.51 | 99.32 | 91.94 | 92.46 |

| Water | 99.99 | 97.69 | 66.02 | 99.78 | 47.86 | 99.75 | 97.58 | 98.80 | 99.71 | 77.36 | 99.87 | 99.64 |

| Roads and houses | 50.51 | 30.80 | 41.49 | 86.28 | 41.99 | 74.12 | 67.97 | 81.64 | 74.06 | 62.77 | 76.18 | 84.20 |

| Mixed weed | 21.65 | 23.46 | 97.75 | 64.27 | 40.07 | 88.80 | 72.72 | 59.47 | 59.61 | 94.25 | 72.09 | 84.51 |

| OA (%) | 74.94 ± 2.85 | 75.82 ± 2.23 | 89.49 ± 4.51 | 87.19 ± 5.61 | 58.23 ± 4.82 | 92.92 ± 1.62 | 92.01 ± 2.39 | 89.64 ± 3.21 | 86.83 ± 2.89 | 84.67 ± 4.91 | 93.19 ± 2.66 | 92.24 ± 4.29 |

| AA (%) | 56.07 ± 2.36 | 65.90 ± 2.01 | 80.83 ± 2.71 | 76.03 ± 5.28 | 67.73 ± 2.42 | 83.15 ± 2.96 | 89.55 ± 1.38 | 78.10 ± 5.10 | 74.80 ± 3.29 | 74.64 ± 3.73 | 87.95 ± 2.91 | 90.35 ± 3.45 |

| Kappa × 100 | 68.39 ± 3.37 | 69.57 ± 2.59 | 86.29 ± 5.41 | 83.65 ± 6.89 | 48.70 ± 4.70 | 90.82 ± 2.07 | 89.70 ± 2.98 | 86.59 ± 4.03 | 83.23 ± 3.50 | 80.61 ± 6.00 | 91.15 ± 3.39 | 90.23 ± 5.53 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Alfalfa | 15.18 | 8.07 | 60.15 | 7.32 | 97.56 | 82.02 | 97.56 | 65.78 | 15.59 | 41.10 | 89.02 | 96.34 |

| Corn-notill | 37.80 | 73.47 | 37.67 | 27.16 | 25.81 | 62.18 | 31.19 | 39.18 | 42.57 | 34.74 | 18.95 | 33.31 |

| Corn-mintill | 24.54 | 64.02 | 33.26 | 29.41 | 30.05 | 51.27 | 37.05 | 26.13 | 31.22 | 39.40 | 28.22 | 23.04 |

| Corn | 18.20 | 49.21 | 87.56 | 21.99 | 58.75 | 41.98 | 72.89 | 24.10 | 30.20 | 30.27 | 41.90 | 53.84 |

| Grass-pasture | 51.48 | 68.29 | 77.49 | 22.62 | 49.81 | 70.37 | 53.45 | 68.01 | 45.36 | 57.28 | 41.86 | 42.97 |

| Grass-trees | 78.98 | 58.88 | 18.13 | 47.44 | 83.53 | 76.53 | 62.83 | 91.58 | 64.93 | 13.60 | 66.18 | 41.75 |

| Grass-pasture-mowed | 10.63 | 14.07 | 98.44 | 6.26 | 100 | 56.09 | 99.13 | 28.17 | 12.68 | 63.30 | 92.61 | 96.52 |

| Hay-windrowed | 89.04 | 77.28 | 4.94 | 60.94 | 42.18 | 88.51 | 88.60 | 95.53 | 90.19 | 5.45 | 42.77 | 71.78 |

| Oats | 8.46 | 13.83 | 61.68 | 3.05 | 98.67 | 36.08 | 100 | 0.91 | 4.34 | 38.00 | 98.04 | 94.75 |

| Soybean-notill | 37.53 | 63.20 | 64.76 | 25.62 | 46.69 | 48.09 | 54.87 | 46.09 | 34.36 | 62.89 | 45.69 | 39.80 |

| Soybean-mintill | 51.72 | 82.17 | 47.08 | 47.96 | 32.97 | 75.47 | 31.19 | 59.03 | 53.43 | 28.32 | 38.89 | 46.79 |

| Soybean-clean | 16.51 | 53.99 | 51.93 | 13.87 | 29.22 | 46.92 | 32.59 | 25.28 | 34.62 | 32.12 | 15.07 | 30.09 |

| Wheat | 76.07 | 33.92 | 84.00 | 23.24 | 98.55 | 62.30 | 100 | 68.42 | 18.11 | 59.80 | 79.50 | 85.55 |

| Woods | 77.63 | 82.27 | 51.11 | 69.46 | 70.47 | 86.14 | 79.38 | 91.90 | 85.76 | 43.71 | 75.48 | 74.10 |

| Buildings-Grass-Trees-Drives | 21.89 | 49.71 | 52.37 | 40.44 | 56.40 | 70.23 | 85.51 | 31.47 | 40.78 | 25.03 | 48.19 | 64.28 |

| Stone-Steel-Towers | 85.81 | 14.70 | 29.00 | 13.81 | 96.48 | 43.71 | 90.00 | 97.92 | 18.62 | 8.97 | 90.91 | 92.50 |

| OA (%) | 38.33 ± 3.68 | 38.14 ± 9.94 | 50.24 ± 6.16 | 27.79 ± 7.00 | 46.10 ± 5.86 | 58.24 ± 9.05 | 51.31 ± 4.95 | 40.49 ± 7.38 | 34.66 ± 7.66 | 31.96 ± 6.79 | 43.38 ± 5.71 | 47.67 ± 5.38 |

| AA (%) | 43.84 ± 2.52 | 50.44 ± 10.70 | 53.72 ± 3.48 | 28.79 ± 3.80 | 63.57 ± 3.12 | 62.36 ± 4.26 | 69.77 ± 2.59 | 53.72 ± 4.07 | 38.92 ± 2.79 | 36.50 ± 2.64 | 57.08 ± 3.21 | 61.70 ± 4.43 |

| Kappa × 100 | 32.29 ± 3.55 | 34.50 ± 9.36 | 45.14 ± 6.04 | 21.95 ± 6.44 | 40.61 ± 5.89 | 53.48 ± 9.30 | 46.48 ± 4.86 | 34.88 ± 6.77 | 29.03 ± 7.57 | 26.66 ± 6.67 | 37.12 ± 5.49 | 41.77 ± 5.52 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Brocoli-green-weeds-1 | 86.03 | 78.32 | 98.69 | 66.17 | 98.56 | 99.98 | 99.40 | 94.57 | 41.16 | 64.30 | 96.84 | 71.44 |

| Brocoli-green-weeds-2 | 86.26 | 91.15 | 90.52 | 71.90 | 84.40 | 99.57 | 98.63 | 93.39 | 61.30 | 49.96 | 89.78 | 73.76 |

| Fallow | 71.71 | 72.68 | 74.97 | 62.75 | 61.72 | 88.31 | 60.39 | 88.93 | 66.79 | 53.45 | 48.21 | 55.84 |

| Fallow-rough-plow | 99.27 | 46.10 | 86.55 | 86.85 | 90.23 | 84.59 | 99.73 | 98.94 | 63.95 | 78.52 | 99.34 | 96.02 |

| Fallow-smooth | 81.30 | 82.83 | 99.66 | 81.50 | 96.30 | 85.97 | 97.55 | 85.20 | 72.46 | 87.48 | 84.01 | 87.36 |

| Stubble | 91.40 | 83.69 | 96.17 | 99.53 | 98.69 | 99.63 | 99.92 | 93.17 | 98.59 | 77.66 | 99.34 | 97.55 |

| Celery | 92.48 | 92.05 | 80.64 | 82.47 | 90.97 | 99.60 | 86.54 | 94.71 | 72.55 | 68.62 | 98.19 | 77.01 |

| Grapes-untrained | 60.75 | 88.51 | 95.69 | 58.70 | 59.42 | 87.89 | 69.69 | 59.23 | 63.23 | 88.49 | 52.99 | 58.63 |

| Soil-vinyard-develop | 90.63 | 99.93 | 97.78 | 89.67 | 97.12 | 97.60 | 95.12 | 90.80 | 92.12 | 69.54 | 96.00 | 79.97 |

| Corn-senesced-green-weeds | 72.05 | 82.02 | 84.84 | 70.04 | 61.19 | 93.54 | 72.03 | 84.57 | 70.34 | 52.35 | 51.83 | 59.15 |

| Lettuce-romaine-4wk | 55.62 | 69.25 | 76.35 | 45.50 | 95.93 | 69.43 | 95.78 | 62.92 | 49.09 | 63.34 | 78.02 | 95.54 |

| Lettuce-romaine-5wk | 77.05 | 85.82 | 57.61 | 70.83 | 67.26 | 91.37 | 70.43 | 65.59 | 62.95 | 63.91 | 95.29 | 80.00 |

| Lettuce-romaine-6wk | 81.12 | 59.47 | 62.82 | 43.87 | 75.93 | 79.14 | 96.36 | 56.33 | 59.44 | 48.90 | 96.84 | 90.96 |

| Lettuce-romaine-7wk | 66.39 | 51.45 | 58.39 | 60.40 | 78.37 | 72.19 | 84.28 | 68.27 | 61.80 | 43.54 | 96.08 | 79.16 |

| Vinyard-untrained | 41.68 | 71.68 | 97.72 | 46.36 | 67.28 | 64.20 | 69.76 | 33.56 | 54.53 | 41.93 | 58.67 | 51.19 |

| Vinyard-vertical-trellis | 61.50 | 70.86 | 99.70 | 51.51 | 81.37 | 99.53 | 92.37 | 95.06 | 61.57 | 48.27 | 78.89 | 62.86 |

| OA (%) | 70.62 ± 4.61 | 70.69 ± 15.06 | 81.51 ± 5.59 | 65.76 ± 4.56 | 78.12 ± 4.92 | 86.11 ± 3.70 | 83.02 ± 4.70 | 74.47 ± 9.26 | 62.17 ± 5.23 | 57.56 ± 6.93 | 76.15 ± 3.61 | 70.54 ± 2.83 |

| AA (%) | 75.95 ± 4.40 | 76.61 ± 6.27 | 84.88 ± 2.38 | 68.01 ± 5.91 | 81.55 ± 3.53 | 88.28 ± 2.59 | 86.75 ± 2.36 | 79.08 ± 7.30 | 65.74 ± 3.45 | 62.52 ± 5.22 | 82.52 ± 3.96 | 76.03 ± 4.76 |

| Kappa × 100 | 67.48 ± 5.00 | 68.16 ± 16.05 | 72.02 ± 6.27 | 62.14 ± 4.92 | 75.76 ± 5.39 | 84.62 ± 4.06 | 81.15 ± 5.17 | 71.64 ± 10.20 | 68.19 ± 4.86 | 53.85 ± 7.06 | 73.58 ± 4.01 | 67.97 ± 3.53 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Asphalt | 56.84 | 51.33 | 96.31 | 51.03 | 41.96 | 82.45 | 60.04 | 94.86 | 56.04 | 78.51 | 65.57 | 52.69 |

| Meadows | 79.89 | 89.90 | 50.44 | 77.24 | 61.50 | 85.28 | 54.72 | 83.01 | 82.76 | 22.04 | 64.40 | 77.76 |

| Gravel | 33.46 | 39.30 | 62.73 | 17.25 | 47.38 | 42.89 | 87.60 | 48.73 | 38.03 | 27.04 | 49.32 | 24.57 |

| Trees | 51.26 | 24.09 | 77.03 | 50.80 | 80.37 | 37.04 | 74.00 | 55.46 | 37.74 | 47.39 | 87.40 | 82.12 |

| Painted metal sheets | 100 | 54.45 | 51.18 | 74.30 | 99.59 | 56.52 | 85.77 | 89.52 | 75.79 | 39.17 | 98.31 | 99.94 |

| Bare soil | 33.21 | 59.93 | 62.49 | 40.77 | 41.32 | 40.67 | 91.00 | 31.20 | 36.51 | 21.10 | 33.39 | 43.48 |

| Bitumen | 39.13 | 52.64 | 34.68 | 29.42 | 97.67 | 62.36 | 99.46 | 44.88 | 42.24 | 47.70 | 71.58 | 52.61 |

| Self-blocking bricks | 54.82 | 52.51 | 42.15 | 46.74 | 69.67 | 56.92 | 61.75 | 58.19 | 57.74 | 40.67 | 48.77 | 69.03 |

| Shadows | 86.99 | 14.32 | 54.05 | 71.06 | 92.10 | 55.78 | 57.86 | 94.18 | 42.47 | 54.64 | 98.81 | 99.99 |

| OA (%) | 51.01 ± 9.70 | 49.22 ± 4.22 | 47.45 ± 5.23 | 50.83 ± 9.85 | 60.45 ± 9.44 | 54.12 ± 9.11 | 65.84 ± 8.91 | 59.17 ± 11.63 | 49.16 ± 13.39 | 46.09 ± 10.30 | 62.54 ± 6.38 | 67.20 ± 5.75 |

| AA (%) | 59.51 ± 2.76 | 48.72 ± 3.16 | 59.01 ± 4.72 | 50.96 ± 6.04 | 70.17 ± 6.31 | 57.77 ± 4.62 | 74.69 ± 4.18 | 66.67 ± 6.48 | 52.15 ± 8.89 | 42.03 ± 6.68 | 68.62 ± 2.44 | 66.91 ± 2.71 |

| Kappa × 100 | 40.65 ± 9.55 | 40.12 ± 4.08 | 39.18 ± 5.26 | 38.50 ± 9.57 | 51.57 ± 9.37 | 45.09 ± 9.26 | 58.71 ± 9.59 | 50.40 ± 11.15 | 38.56 ± 12.73 | 34.00 ± 9.69 | 53.10 ± 6.56 | 57.44 ± 6.35 |

| Class | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Corn | 79.31 | 50.29 | 65.49 | 72.66 | 32.24 | 86.99 | 55.57 | 85.79 | 78.05 | 58.31 | 92.31 | 88.92 |

| Cotton | 15.09 | 95.30 | 62.78 | 32.48 | 72.93 | 51.39 | 91.20 | 37.36 | 58.35 | 7.24 | 67.67 | 97.16 |

| Sesame | 15.45 | 7.48 | 72.38 | 6.68 | 89.09 | 28.02 | 92.08 | 43.66 | 14.45 | 87.15 | 81.76 | 58.47 |

| Broad-leaf soybean | 79.64 | 72.70 | 13.36 | 86.33 | 31.21 | 89.99 | 51.76 | 83.67 | 87.01 | 26.69 | 76.24 | 60.15 |

| Narrow-leaf soybean | 18.95 | 17.36 | 85.27 | 20.07 | 68.38 | 37.56 | 94.95 | 40.50 | 29.62 | 36.47 | 60.33 | 63.42 |

| Rice | 61.40 | 61.97 | 91.51 | 48.35 | 28.82 | 95.77 | 61.04 | 76.09 | 33.66 | 99.16 | 73.86 | 51.18 |

| Water | 100 | 98.63 | 57.96 | 99.92 | 20.60 | 99.75 | 98.83 | 97.30 | 87.81 | 54.74 | 99.78 | 99.61 |

| Roads and houses | 62.16 | 48.02 | 27.27 | 48.95 | 31.50 | 61.56 | 27.94 | 76.33 | 55.12 | 60.78 | 41.57 | 49.80 |

| Mixed weed | 22.38 | 35.13 | 75.65 | 41.70 | 30.52 | 52.28 | 38.00 | 30.35 | 32.03 | 67.20 | 45.83 | 52.20 |

| OA (%) | 55.69 ± 8.23 | 49.08 ± 13.34 | 57.22 ± 10.47 | 63.40 ± 7.10 | 31.08 ± 6.81 | 75.27 ± 8.92 | 70.28 ± 4.10 | 73.00 ± 13.25 | 64.37 ± 9.01 | 65.88 ± 7.62 | 83.96 ± 6.78 | 78.42 ± 6.96 |

| AA (%) | 50.49 ± 5.84 | 54.10 ± 5.46 | 61.26 ± 5.59 | 50.79 ± 6.78 | 45.03 ± 5.13 | 67.03 ± 3.86 | 67.93 ± 4.60 | 63.45 ± 8.77 | 52.9 ± 5.44 | 55.31 ± 5.05 | 71.04 ± 6.22 | 68.99 ± 7.95 |

| Kappa × 100 | 46.83 ± 9.21 | 40.41 ± 11.82 | 48.66 ± 10.54 | 55.41 ± 7.83 | 20.20 ± 4.50 | 69.37 ± 10.42 | 63.24 ± 4.60 | 66.73 ± 14.47 | 59.11 ± 14.82 | 58.09 ± 8.50 | 78.56 ± 8.07 | 72.78 ± 8.29 |
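The `a ± b` entries in the OA, AA, and Kappa rows read as a mean and a spread over repeated trials. The sketch below shows one way such an entry could be produced, assuming (this excerpt does not state it) that each trial uses a different random draw of training samples and that the spread is the sample standard deviation. The helper name `summarize_runs` and the example values are hypothetical.

```python
import statistics


def summarize_runs(values, digits=2):
    """Format repeated-trial scores as 'mean ± std'.

    Uses the sample standard deviation (n - 1 in the denominator);
    this choice is an assumption, since the tables do not say whether
    the sample or population form was used.
    """
    mean = statistics.mean(values)
    # A single run has no spread; report 0 rather than raising.
    std = statistics.stdev(values) if len(values) > 1 else 0.0
    return f"{mean:.{digits}f} ± {std:.{digits}f}"


# Hypothetical OA values (%) from three trials, not taken from the tables.
print(summarize_runs([80.0, 82.0, 84.0]))  # prints "82.00 ± 2.00"
```

Read this way, a large spread such as the double-digit values in some columns indicates that the result depends strongly on which few labeled samples were drawn, which matters as much as the mean when the training set is this small.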

| Dataset | SVM | DMVL | IHP-CA | MFCN | S3Net | CTA | STSE-DWLR | PSG | RN-FSC | UM2L | DCFSL | HFSL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| IP | 3.61 | 2557.72 | 222.57 | 12.56 | 11.00 | 863.64 | 1.29 | 1.03 | 104.47 | 95.50 | 2316.97 | 916.69 |

| SV | 5.80 | 13,432.13 | 697.00 | 62.69 | 27.33 | 12,171.80 | 15.52 | 10.34 | 386.06 | 203.33 | 2428.09 | 1836.22 |

| UP | 4.86 | 10,721.28 | 407.73 | 125.70 | 18.70 | 8506.83 | 9.58 | 2.24 | 222.71 | 148.11 | 1369.20 | 749.72 |

| LK | 16.53 | 251,305.68 | 1869.83 | 17.46 | 289.54 | 13,547.5 | 237.11 | 4.03 | 406.75 | 225.21 | 6095.68 | 65,261.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Liu, J.; Chi, W.; Wang, W.; Ni, Y. Advances in Hyperspectral Image Classification Methods with Small Samples: A Review. Remote Sens. 2023, 15, 3795. https://doi.org/10.3390/rs15153795

Wang X, Liu J, Chi W, Wang W, Ni Y. Advances in Hyperspectral Image Classification Methods with Small Samples: A Review. Remote Sensing. 2023; 15(15):3795. https://doi.org/10.3390/rs15153795

Wang, Xiaozhen, Jiahang Liu, Weijian Chi, Weigang Wang, and Yue Ni. 2023. "Advances in Hyperspectral Image Classification Methods with Small Samples: A Review" Remote Sensing 15, no. 15: 3795. https://doi.org/10.3390/rs15153795