Abstract

It has been demonstrated that color imagery can have a profound impact on search when generated prior to it, whereas perceptual cues have a more limited influence. Given this discrepancy, the present study evaluated the processes affected by imagery and perception using a singleton search task in which participants had to find an oddball colored target among homogeneously colored distractors. Prior to each trial, a perceptual color cue was displayed or imagery was generated that could match the target, the distractors, or neither item in the search array. It was revealed that, relative to perceptual cues, color imagery led to both a larger benefit when it matched the target and a larger cost when it matched the distractors. By parsing response times into pre-search, search, and response phases based on eye movements, it was revealed that, while imagery and perceptual cues both influenced the search phase, imagery had a significantly greater influence than perceptual cues. Further, imagery also influenced the pre-search and response phases. Overall, the present findings reveal that the influence of imagery is profound, as it affects multiple processes in the vision-perception pipeline, whereas perceptual cues appeared to affect only the search phase.

Introduction

There is little doubt that searching for an object in the visual environment is enhanced by the knowledge of what the object looks like. This phenomenon is often investigated using cueing procedures where information about the target is presented prior to a search task where the observer must locate it amongst an array of distractors. A particularly potent aspect of these cueing procedures is the representational basis of the cue. For example, it has been robustly demonstrated that cues that perceptually depict the target have a more profound impact on search relative to semantic descriptions of it (Baier & Ansorge, 2019; Leonard & Egeth, 2008; Müller & Krummenacher, 2006; Müller et al., 2003; Theeuwes et al., 2006; Wolfe et al., 2004; also, cf. Kawashima & Matsumoto, 2017). The underlying notion is that the attentional guidance system can utilize perceptual representations of the cue to make salient congruent representations in the visual environment (Serences & Yantis, 2006; Wolfe, 1994, 2014).

While perceptual representations appear to have a reliable influence when presented prior to search, their influence appears quite tenuous compared to that of representations generated with visual imagery (Cochrane et al., 2019; Cochrane, Nwabuike, et al., 2018; Cochrane, Townsend, et al., 2021; Cochrane, Zhu, & Milliken, 2018; Moriya, 2018; Reinhart et al., 2015). In one study, participants were cued to generate color imagery prior to a color singleton search task, similar to the perceptual cueing procedures described above (Cochrane et al., 2019). It was revealed that responses were faster when imagery was congruent than when it was incongruent with the search target. Further, by introducing a neutral condition (in which neither the target nor the distractor items matched imagery), it was revealed that imagery-perception congruency led to both a benefit when imagery matched the target and a cost when it matched the distractors, supporting the notion that imagery influenced the processes underlying attentional guidance (Cochrane, Townsend, et al., 2021; Moriya, 2018). Imagery-perception congruency effects were also powerful enough to override the selection history effects produced by the previous target color (Cochrane, Ng, & Milliken, 2021; Cochrane, Nwabuike, et al., 2018; Cochrane, Zhu, & Milliken, 2018). In particular, participants imagined the opposite color of the previous target in the interval between trials of singleton search, which put the color imagery and selection history processes in opposition (see Maljkovic & Nakayama, 1994, 2000). Despite the robust and automatic nature of the selection history system, it was revealed that color imagery prevailed over it, suggesting that representations constructed with visual imagery may have a greater influence on search than recently viewed perceptual representations.

It is unclear why perceptual cues have a relatively tenuous impact compared to imagery. This is particularly striking given evidence indicating that imagery and perception rely on many of the same brain regions (Dijkstra et al., 2019; Pearson & Kosslyn, 2015). One possibility is that imagery and perception have different influences on the different processes underlying search. Indeed, in addition to the processes responsible for attentional guidance, it has been revealed that imagery can influence other processes in the vision-perception pipeline, such as those responsible for object discrimination (Cochrane & Milliken, 2019, 2020; Cue et al., 2007; Grindell et al., 2020; Wantz et al., 2015). That is, imagery-perception congruency effects also emerge in tasks where a centrally located stimulus has to be identified, which in principle cannot be due to attentional guidance. Instead, the preponderance of the influence here appears to occur at the response stage of processing (Cochrane et al., 2019; although see Cochrane & Milliken, 2020). Further, the imagery congruency effects observed in binocular rivalry studies are purported to have a retinotopic basis, which is neither clearly attentional nor clearly response based (Chang et al., 2013).

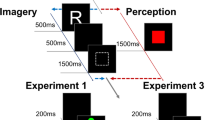

Given this discrepancy, the purpose of the present study was to evaluate the processes underlying imagery and perceptual cueing in search. To do so, participants performed a color singleton search task in which they had to locate the oddball colored target diamond amongst homogeneously colored distractor diamonds and then indicate whether a chunk was missing from its left or right side. In the perception group, a centrally presented colored diamond preceded the search task, and in the imagery group, participants imagined a colored diamond instead. These representations could match the target, the distractors, or neither item in the search display. In Experiments 1a and 1b, our goal was simply to evaluate whether imagery cues did indeed have a more profound influence than perceptual cues. Experiment 2 conceptually replicated Experiment 1a with the exception that eye movements were monitored. By doing so, we were able to partition response times (RTs) into pre-search, search, and response phases (see Zhou et al., 2012). In particular, the duration from search display onset until the first saccade constituted the pre-search phase, the duration from the first saccade until the last fixation constituted the search phase, and the duration from the last fixation until the manual response constituted the response phase. This method allowed us to pinpoint the processes responsible for imagery and perception congruency effects and to evaluate whether they differed between the two.

Experiments 1a and 1b

The purpose of Experiment 1a was to evaluate whether imagery did indeed have a more profound influence on search than perceptual cues. If so, imagery should lead to both a larger benefit when congruent with the target and a larger cost when congruent with the distractors relative to perception. If not, these benefits and costs should not differ statistically between the imagery and perception groups. The purpose of Experiment 1b was to assess whether perceptual decay of the cue affected the magnitude of the congruency effect in the perception group. If perceptual decay affects the congruency effect, there should be a larger congruency effect for cues that remain on-screen until search display onset than for those that offset prior to it. If not, comparable congruency effects should be observed for the two cue offsets.

Method

Participants

Forty undergraduates at McMaster University participated in Experiment 1a (32 female, 8 male; Mage = 18.1 years) in exchange for course credit, and 40 participants recruited through Prolific (prolific.co) participated in Experiment 1b (15 female, 25 male; Mage = 26.5 years) in exchange for monetary compensation. All participants reported normal color vision and normal or corrected-to-normal visual acuity. While no formal power analysis was conducted, we decided a priori on a sample size of 40 participants based on similar studies conducted in the lab.

Apparatus and stimuli

For Experiment 1a, stimuli were presented using PsychoPy v1.84 on a BenQ 24-in. LED monitor connected to a Mac mini computer. For Experiment 1b, stimuli were presented using PsychoPy v2020.2.3 via Pavlovia (pavlovia.org), and the experiment was run on the participants' own web browser, computer, and monitor. All displays were presented on a black background. Cues were presented centrally on the screen and were approximately 2 cm in size. In the perception group, the cue was a colored diamond (i.e., red, green, blue, or yellow), and in the imagery group, the cue was the first two letters of the color name (i.e., ‘re’, ‘gr’, ‘bl’, or ‘ye’). The search displays contained one colored target diamond and three homogeneously colored distractor diamonds, each approximately 2 cm in size. The target and distractor diamonds were positioned at the corners of an invisible square, approximately 10 cm per side, centered on the screen. Each target and distractor diamond was missing a chunk of approximately .5 cm from either its left or its right corner.

Procedure

Each trial was initiated with a press of the spacebar. The cue was then presented on-screen for 750 ms, followed by a blank display containing a central fixation cross for 2,000 ms. The one exception was the no-interval block of Experiment 1b, in which the cue remained on-screen throughout the 2,000-ms interval. The search display was then presented until participants made a keypress response. Participants were instructed to indicate the side of the missing corner on the oddball colored target diamond among the homogeneously colored distractor diamonds. They indicated that the corner was missing on the left by pressing the ‘Z’ key and on the right by pressing the ‘M’ key on a standard QWERTY keyboard. Trials were identical for the imagery and perception groups with the following exceptions. Participants in the imagery group were instructed to imagine a colored diamond corresponding to a letter cue (i.e., ‘re’, ‘gr’, ‘bl’, or ‘ye’), whereas in the perception group, the cue was a colored diamond (i.e., red, green, blue, or yellow). Following the search display on each trial, participants in the imagery group were instructed to indicate the vividness of their visual imagery on a 4-point scale, where a keypress of ‘1’ indicated no imagery, ‘2’ indicated low vividness, ‘3’ indicated moderate vividness, and ‘4’ indicated high vividness.

Prior to the experimental session, participants performed 15 practice trials. For the first five practice trials, participants performed only the search task. For the second set of five practice trials, the cue was displayed prior to the search task. At this point, participants in the imagery group were instructed to imagine a solid diamond in the color corresponding to the letter cue, and participants in the perception group were instructed to attend to the perceptual cue. For the last set of five practice trials, participants in the imagery group were instructed on how to rate their vividness, while participants in the perception group performed trials as in the previous training phase. Specifically, participants in the imagery group were instructed that the “no imagery” rating constituted the situation when “they did not generate any imagery,” the “low vividness” rating constituted the situation when their imagery was “vague and dim,” the “moderate vividness” rating constituted the situation when their imagery was “reasonably clear and vivid,” and the “high vividness” rating constituted the situation when their “imagery was clear and vivid like that of normal vision.” Participants in both the imagery and perception groups were informed that trials on which the cue color matched the target were the most common trial type.

Following this practice phase, participants in Experiment 1a performed 300 experimental trials and participants in Experiment 1b performed 400 experimental trials. On each trial, the side of the missing chunk on the target and distractor diamonds was randomized such that it appeared on the left or right side with equal likelihood. The cue color was randomized on a trial-by-trial basis such that the probability that it matched the target color was 50%, the probability that it matched the distractor color was 25%, and the probability that it matched neither was 25%. This contingency was introduced to encourage participants in both the perception and imagery groups to use the cue. In Experiment 1b, trials were presented across two blocks: an interval block, in which a blank interval was presented between the cue and search displays (identical to the perception group of Experiment 1a), and a no-interval block, in which the cue remained on-screen until the search display was presented. The order of these blocks was counterbalanced across participants. Following the experimental session, participants in the imagery group estimated the percentage of trials on which they had followed the imagery instruction. See Fig. 1 for a depiction of a typical trial.
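The cue contingency described above can be illustrated with a short trial-list generator. This is a minimal sketch, not the authors' PsychoPy script. Several details the text leaves open are assumptions here. Target and distractor colors are drawn fresh on each trial from the four colors. A neutral cue is one of the two colors absent from the display. The missing-chunk side is drawn independently for each diamond. The helper names `make_trial_list` and `CUE_PROPORTIONS` are hypothetical.

```python
import random
from collections import Counter

COLORS = ["red", "green", "blue", "yellow"]
# Cue-type proportions stated in the Procedure: 50% target-match, 25% distractor-match, 25% neutral.
CUE_PROPORTIONS = {"target-match": 0.50, "distractor-match": 0.25, "neutral": 0.25}


def make_trial_list(n_trials, seed=None):
    """Return a shuffled list of trial dicts with an exact 50/25/25 cue-type split."""
    if n_trials % 4 != 0:
        raise ValueError("n_trials must be divisible by 4 for an exact 50/25/25 split")
    rng = random.Random(seed)
    cue_types = [cue for cue, p in CUE_PROPORTIONS.items() for _ in range(int(n_trials * p))]
    rng.shuffle(cue_types)
    trials = []
    for cue_type in cue_types:
        # Assumption: target and distractor colors are drawn fresh on each trial.
        target, distractor = rng.sample(COLORS, 2)
        if cue_type == "target-match":
            cue = target
        elif cue_type == "distractor-match":
            cue = distractor
        else:
            # Neutral: one of the two colors absent from the search display.
            cue = rng.choice([c for c in COLORS if c not in (target, distractor)])
        target_pos = rng.randrange(4)  # corner of the invisible square holding the target
        # Assumption: each diamond's missing-chunk side is drawn independently.
        sides = [rng.choice(["left", "right"]) for _ in range(4)]
        trials.append({
            "cue_type": cue_type,
            "cue_color": cue,
            "target_color": target,
            "distractor_color": distractor,
            "target_pos": target_pos,
            "chunk_sides": sides,
            # 'Z' for a missing left corner, 'M' for a missing right corner on the target.
            "correct_key": "z" if sides[target_pos] == "left" else "m",
        })
    return trials


trials = make_trial_list(300, seed=1)
print(Counter(t["cue_type"] for t in trials))
```

Fixing exact counts per cue type, instead of sampling each trial with 50/25/25 probabilities, guarantees that every participant receives the stated proportions. For 300 trials this gives 150, 75, and 75.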

Fig. 1 A target-match trial in Experiments 1 and 2. The cue was a colored diamond in the perception group and the first two letters of a color name in the imagery group. In the no-interval block of Experiment 1b, the perceptual cue remained on-screen until search display onset.

Results

An absolute cut-off outlier removal procedure was used to exclude RTs shorter than 200 ms or longer than 2,000 ms from analyses, which excluded 5.1% of observations from Experiment 1a and 2.8% of observations from Experiment 1b. The remaining correct RTs were submitted to the non-recursive outlier elimination procedure of Van Selst and Jolicoeur (1994), which uses a standard deviation exclusion criterion that varies systematically with the number of observations in each cell. This procedure removed an additional 2.4% of observations from Experiment 1a and 2.6% of observations from Experiment 1b. Mean RTs were computed from the remaining observations. In Experiment 1a, the mean RTs and corresponding error percentages were submitted to a mixed-factor ANOVA that treated cue (target-match/neutral/distractor-match) as a within-subject factor and group (imagery/perception) as a between-subjects factor. In Experiment 1b, the mean RTs and corresponding error percentages were submitted to a within-subject ANOVA that treated cue (target-match/neutral/distractor-match) and block (interval/no-interval) as within-subject factors. An alpha criterion of .05 was used to determine statistical significance in all analyses reported here. Mean RTs and error percentages are depicted in Fig. 2.
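The two-stage trimming described above can be sketched as follows. First comes a fixed 200 to 2,000 ms window. Then each cell gets a single-pass SD trim whose multiplier depends on the cell's size. This sketch does not reproduce the published moving-criterion table of Van Selst and Jolicoeur (1994). The caller must supply those values through the hypothetical `criterion_for_n` argument, and the 2.0-SD lambda in the usage is only a placeholder. Leaving cells with fewer than four observations untouched is also an assumption.

```python
import statistics


def absolute_cutoff(rts, low=200.0, high=2000.0):
    """Drop RTs shorter than `low` or longer than `high` (ms), as in the absolute cut-off step."""
    return [rt for rt in rts if low <= rt <= high]


def nonrecursive_trim(rts, criterion_for_n):
    """One-pass trim of a single design cell with a sample-size-dependent SD criterion.

    `criterion_for_n(n)` must return the SD multiplier for a cell of n observations.
    The published moving-criterion values are tabulated in Van Selst and Jolicoeur
    (1994) and are NOT reproduced here; the caller has to supply them.
    """
    n = len(rts)
    if n < 4:
        # Assumption: cells this small are left untouched.
        return list(rts)
    mean = statistics.mean(rts)
    sd = statistics.stdev(rts)
    limit = criterion_for_n(n) * sd
    return [rt for rt in rts if abs(rt - mean) <= limit]


# Usage with made-up RTs and a PLACEHOLDER criterion of 2.0 SD (not the published table).
cell = [150, 512, 640, 655, 700, 710, 730, 745, 760, 1950]
kept = absolute_cutoff(cell)                      # removes the 150-ms RT
trimmed = nonrecursive_trim(kept, lambda n: 2.0)  # 1950 ms lies > 2 SD from the cell mean
print(statistics.mean(trimmed))
```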

Fig. 2 Mean response times and error percentages for the target-match, neutral, and distractor-match cues for the imagery and perception groups of Experiment 1a and the interval and no-interval blocks of Experiment 1b. Error bars represent the standard error of the mean corrected to remove between-subject variability (Cousineau, 2005; Morey, 2008).
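The within-subject error bars cited in the caption follow the Cousineau (2005) normalization with the Morey (2008) correction. The sketch below shows that computation for one group of participants. Because Experiment 1a is a mixed design, applying it to each group separately is an assumption about how the figure was built. The input format (a dict of per-participant condition means) is hypothetical.

```python
import math
import statistics


def cousineau_morey_se(data):
    """Within-subject standard errors, one per condition.

    `data` maps each participant to a list of condition means, all in the same
    condition order (e.g., target-match, neutral, distractor-match).
    """
    rows = list(data.values())
    n = len(rows)        # participants
    j = len(rows[0])     # within-subject conditions
    grand_mean = statistics.mean(x for row in rows for x in row)
    # Cousineau (2005): remove each participant's mean, then add back the grand mean.
    normed = [[x - statistics.mean(row) + grand_mean for x in row] for row in rows]
    # Morey (2008): inflate by sqrt(J / (J - 1)) to correct the downward bias.
    correction = math.sqrt(j / (j - 1))
    return [
        correction * statistics.stdev([row[k] for row in normed]) / math.sqrt(n)
        for k in range(j)
    ]


# Made-up condition means (ms) for three participants.
example = {
    "p1": [780, 810, 820],
    "p2": [700, 735, 740],
    "p3": [860, 880, 905],
}
print([round(se, 1) for se in cousineau_morey_se(example)])
```

Participants who differ only by a constant offset contribute no within-subject variability. In that case the corrected error bars shrink to zero, which is exactly the between-subject variance the correction removes.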

Experiment 1a

The RT analysis revealed a significant interaction of cue and group, F(2,76) = 15.0, p < .001, η2p = .28. The main effect of group was not significant (p = .08). First, we explored this interaction by conducting a separate planned ANOVA that treated cue and group as factors for the target-match and neutral levels of cue. This analysis revealed a significant interaction, F(1,38) = 16.4, p < .001, η2p = .23, reflecting a larger facilitation effect in the imagery than perception group. Second, we explored this interaction further by conducting a separate planned ANOVA that treated cue and group as factors for the neutral and distractor-match levels of cue. This analysis revealed no interaction of cue and group (p = .08), although there was a numerical trend towards a larger inhibition effect in the imagery than perception group. The analysis of error percentages did not reveal a significant effect of group or an interaction of group and cue (all p > .09). We conducted separate one-way ANOVAs of RTs and error percentages that treated cue as a factor for each group separately – see below.
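The partial eta squared values reported alongside each F ratio can be recovered from the F statistic and its degrees of freedom alone: η2p = (F × df_effect) / (F × df_effect + df_error). The small helper below (the name is illustrative) applies that identity. Readers can use it to relate the effect sizes in this section to their F tests.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Cue x group interaction reported above: F(2,76) = 15.0.
print(round(partial_eta_squared(15.0, 2, 76), 2))  # 0.28
```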

Perception group

The analysis of RTs revealed a main effect of cue, F(2,38) = 8.83, p < .001, η2p = .32. This effect was explored further by conducting planned paired t-tests for the target-match and neutral, and distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(19) = 2.63, p = .02, d = .15, reflecting faster responses when the cue matched the target (786 ms) than when it was neutral (811 ms). The analysis of the distractor-match and neutral cues revealed no effect (p = .17), reflecting similar RTs when the cue matched the distractors (822 ms) and when it was neutral (811 ms). There were no significant effects in the analyses of error percentages (all p > .12).
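The planned comparisons in this section are paired-samples t-tests on per-participant condition means. The sketch below computes t, its degrees of freedom, and d_z (mean difference divided by the SD of the differences). The source does not state which standardized effect size its d values use, so d_z is only one common convention, not necessarily the authors' d. A p-value would require a t-distribution CDF (e.g., from SciPy), which this dependency-free sketch omits. The data are made up.

```python
import math
import statistics


def paired_t(cond_a, cond_b):
    """Paired-samples t, degrees of freedom, and d_z from per-participant means."""
    if len(cond_a) != len(cond_b):
        raise ValueError("conditions must contain the same participants")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)
    t = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff  # one common effect-size convention; not necessarily the source's d
    return t, n - 1, d_z


# Made-up per-participant means (ms): neutral versus target-match.
neutral = [811, 820, 805, 830]
target_match = [786, 800, 790, 801]
t, df, d_z = paired_t(neutral, target_match)
print(f"t({df}) = {t:.2f}, d_z = {d_z:.2f}")
```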

Imagery group

The analysis of RTs revealed a main effect of cue, F(2,38) = 28.2, p < .001, η2p = .60. This effect was explored further by conducting planned paired t-tests that evaluated the difference between the target-match and neutral, and distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(19) = 5.98, p < .001, d = .37, reflecting faster responses when the cue matched the target (853 ms) than when it was neutral (955 ms). The analysis of the distractor-match and neutral cues also revealed a significant effect, t(19) = 2.43, p = .03, d = .15, reflecting faster responses when the cue was neutral (955 ms) than when it matched the distractors (998 ms). There were no significant effects in the analyses of error percentages (all p > .19). The mean post-experiment estimate of imagery use was 78.0%.

Imagery ratings

In a separate analysis, we evaluated whether the cueing effects in the imagery group were modulated by the imagery vividness ratings. Imagery ratings of ‘1’, ‘2’, and ‘3’ were collapsed together into the “other” imagery category and imagery ratings of ‘4’ into the “high” imagery category. We categorized the imagery ratings this way since we were most interested in the situation when visual imagery was most vivid. This led to the removal of two participants due to empty cells (i.e., they did not provide responses to all cue types for both the “other” and “high” categories). Mean RTs and error percentages were submitted to within-subject analyses that treated cue (target-match/neutral/distractor-match) and imagery rating (other/high) as factors. The mean RTs when the imagery rating was treated as a factor are depicted in the left panel of Fig. 3.
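The rating-based analysis above involves two steps. First, the 4-point vividness scale is collapsed into "other" (1 to 3) and "high" (4). Second, any participant missing a cue × rating cell is dropped. A minimal sketch of both steps follows. The tuple-based trial format and the function names are hypothetical.

```python
from collections import defaultdict

CUE_TYPES = ("target-match", "neutral", "distractor-match")
RATING_CATEGORIES = ("other", "high")


def rating_category(rating):
    """Collapse the 4-point vividness scale: 4 -> 'high'; 1, 2, 3 -> 'other'."""
    return "high" if rating == 4 else "other"


def complete_participants(trials):
    """IDs of participants with at least one trial in every cue x rating cell.

    `trials` is an iterable of (participant_id, cue_type, vividness_rating) tuples.
    """
    seen = defaultdict(set)
    for pid, cue, rating in trials:
        seen[pid].add((cue, rating_category(rating)))
    needed = {(cue, cat) for cue in CUE_TYPES for cat in RATING_CATEGORIES}
    return sorted(pid for pid, cells in seen.items() if needed <= cells)


# Made-up data: p2 never gave a 'high' rating on a neutral trial, so it is dropped.
data = [("p1", cue, r) for cue in CUE_TYPES for r in (2, 4)]
data += [("p2", cue, r) for cue in ("target-match", "distractor-match") for r in (3, 4)]
data += [("p2", "neutral", 1)]
print(complete_participants(data))  # ['p1']
```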

Fig. 3 Mean response times and error percentages for the target-match, neutral, and distractor-match cues when imagery rating was treated as a factor for Experiments 1 and 2. Error bars represent the standard error of the mean corrected to remove between-subject variability (Cousineau, 2005; Morey, 2008).

This analysis revealed a significant interaction of cue and imagery rating, F(2,34) = 5.61, p = .008, η2p = .25. This interaction was explored further by conducting planned paired t-tests that treated vividness rating as a factor for each level of cue separately. For the target-match cues, there was a significant effect of imagery rating, t(17) = 3.70, p = .002, d = .36, reflecting that responses were faster for the high imagery ratings (807 ms) than the other imagery ratings (908 ms) when the cue matched the target. No significant effects were revealed in the analyses of imagery rating for the neutral (p = .77) and distractor-match (p = .66) cues.

There was also a significant interaction of cue and imagery rating in the analysis of error percentages, F(2,34) = 4.70, p = .02, η2p = .22. An ANOVA restricted to the target-match and distractor-match levels of cue revealed an interaction of cue and imagery rating, F(1,17) = 7.38, p = .02, η2p = .30. This reflected a trend towards lower error percentages for high relative to other imagery ratings when the cue matched the target, and the opposite pattern when the cue matched the distractors. There were no other significant effects in follow-up analyses (all p > .08).

Experiment 1b

The RT analysis did not reveal a significant interaction of cue and block (p = .62). The main effect of block was not significant (p = .13). The analysis of error percentages did not reveal a significant effect of block or an interaction of block and cue (all p > .07). Given a priori interests, we conducted separate planned one-way ANOVAs of RTs and error percentages that treated cue as a factor for each block separately.

Interval block

The analysis of RTs revealed a marginally significant main effect of cue, F(2,78) = 2.93, p = .059, η2p = .07. This effect was explored further by conducting planned paired t-tests for the target-match and neutral, and distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(39) = 2.10, p = .04, d = .11, reflecting faster responses when the cue matched the target (797 ms) than when it was neutral (812 ms). The analysis of the distractor-match and neutral cues revealed no effect (p = .86), reflecting similar RTs when the cue matched the distractors (814 ms) and when it was neutral (812 ms). The analyses of error percentages did not reveal any significant effects (all p > .09).

No-interval block

The analysis of RTs revealed a significant main effect of cue, F(2,78) = 4.95, p = .009, η2p = .11. This effect was explored further by conducting planned paired t-tests for the target-match and neutral, and distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(39) = 2.50, p = .02, d = .15, reflecting faster responses when the cue matched the target (808 ms) than when it was neutral (830 ms). The analysis of the distractor-match and neutral cues revealed no effect (p = .38), reflecting similar RTs when the cue matched the distractors (837 ms) and when it was neutral (830 ms). The analyses of error percentages did not reveal any significant effects (all p > .15).

Discussion

Experiment 1a revealed that both perceptual and imagined cues benefited performance when they matched the target of the singleton search task; however, the benefit of imagery was significantly larger than that of perception. Further, imagery led to a performance cost when it matched the distractors, whereas perceptual cues did not. The magnitude of the imagery congruency effect also increased with imagery vividness, suggesting that imagery was indeed responsible for the observed pattern of results (see also Cochrane, Ng, & Milliken, 2021; Cochrane, Ng, et al., 2021; Dijkstra et al., 2017). Experiment 1b revealed similar congruency effects in the perceptual condition regardless of cue offset, suggesting that the larger congruency effects with imagery than with perception were not due to passive decay of the perceptual cue. Overall, these findings indicate that imagery has a more profound impact on search than perceptual depictions of the target.

Experiment 2

While the results of Experiment 1 revealed that imagery had a greater impact on search performance than perceptual cues, it remains unclear what processes produced this difference. To evaluate this issue in Experiment 2, we replicated Experiment 1a while measuring eye movements. This allowed us to evaluate whether imagery produced greater attention capture than perception, but importantly, we were able to partition RTs into pre-search, search, and response phases based on eye movements. Partitioning RTs this way allowed us to determine whether imagery and perception had a different influence on pre-search, search, and response processes.

Method

Participants

Sixty undergraduates at McMaster University participated in Experiment 2 (48 female, 12 male; Mage = 18.5 years) in exchange for course credit. All participants had normal color vision and normal or corrected-to-normal visual acuity. Participants performed one-third fewer trials than in Experiment 1a (200 vs. 300), and accordingly, the sample size was increased by half (from 40 to 60) to maintain the same total number of observations (40 × 300 = 60 × 200 = 12,000 trials).

Apparatus and stimuli

Eye movements were recorded with an EyeLink II system (SR Research, Canada, Version 2.31) connected to a Dell computer, with a sampling rate of 250 Hz and a spatial resolution of 0.2°. Otherwise, the apparatus and stimuli were identical to those of Experiment 1a.

Procedure

The procedure was identical to Experiment 1a with the following exceptions. First, participants were required to place their chin on a chinrest that was positioned 57 cm from the computer monitor. Second, the experimenter now initiated the start of each trial once participants fixated on the central fixation cross. Third, the eye tracker was calibrated prior to the experimental session and was re-calibrated any time a central fixation at the beginning of the trial sequence did not align with the central fixation cross. Fourth, participants now performed 200 experimental trials; the number of experimental trials was reduced relative to Experiment 1a due to the lengthening of the experimental session resulting from the addition of the eye-monitoring procedure.
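At the 57-cm viewing distance used in Experiment 2, 1 cm on the screen subtends approximately 1° of visual angle. That correspondence relates the stimulus sizes given in centimeters to the 2° fixation criterion used in the eye-movement analyses. The sketch below applies the standard visual-angle formula to the approximate sizes reported for the stimuli. It assumes a flat screen viewed at its center.

```python
import math


def visual_angle_deg(size_cm, distance_cm=57.0):
    """Visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Stimulus sizes from the Apparatus and stimuli section (approximate, in cm).
for label, size_cm in [("diamond", 2.0), ("square side", 10.0), ("missing chunk", 0.5)]:
    print(f"{label}: {visual_angle_deg(size_cm):.2f} deg")
```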

Results

Overall analyses

The same absolute cut-off outlier removal procedure as in Experiment 1 removed 1.5% of observations. The remaining correct RTs were submitted to the non-recursive outlier elimination procedure of Van Selst and Jolicoeur (1994), which removed an additional 2.3% of observations. Mean RTs were computed from the remaining observations, and these mean RTs and corresponding error percentages were submitted to a mixed-factor ANOVA that treated cue (target-match/neutral/distractor-match) as a within-subject factor and group (imagery/perception) as a between-subjects factor. An alpha criterion of .05 was used to determine statistical significance in all analyses. Mean RTs and error percentages are depicted in Fig. 4.

The RT analysis revealed a significant interaction of cue and group, F(2,116) = 38.6, p < .001, η2p = .40. There was also a significant main effect of group, F(1,58) = 13.6, p < .001, η2p = .19, reflecting overall faster responses in the perception than in the imagery group. First, we explored this interaction by conducting a separate planned ANOVA that treated cue and group as factors for the target-match and neutral levels of cue. This analysis revealed a significant interaction, F(1,58) = 31.6, p < .001, η2p = .42, reflecting a larger facilitation effect in the imagery than in the perception group. Second, we explored this interaction further by conducting a separate planned analysis that treated cue and group as factors for the neutral and distractor-match levels of cue. This analysis also revealed a significant interaction, F(1,58) = 11.1, p = .001, d = .18, reflecting that distractor-match cues led to a greater RT cost in the imagery than in the perception group. In the analysis of error percentages, neither the interaction of group and cue nor the main effect of group was significant (all p > .76). We conducted separate one-way ANOVAs of RTs and error percentages that treated cue as a factor for each group separately – see below.

Perception group

The analysis of RTs revealed a main effect of cue, F(2,58) = 8.15, p < .001, η2p = .22. This effect was explored further with planned paired t-tests that evaluated the difference between the target-match and neutral, and distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(29) = 3.26, p = .003, d = .11, reflecting faster responses when the cue matched the target (714 ms) than when it was neutral (730 ms). The analysis of the distractor-match and neutral cues revealed a non-significant effect (p = .17), reflecting no difference in RTs for the distractor-match (738 ms) and neutral (730 ms) cues. There were no significant effects in the analysis of error rates (all p > .31).

Imagery group

The analysis of RTs revealed a main effect of cue, F(2,58) = 56.0, p < .001, η2p = .66. This effect was explored further with planned paired t-tests that evaluated the difference between the target-match and neutral, and the distractor-match and neutral levels of cue separately. The analysis of the target-match and neutral cues revealed a significant effect, t(29) = 6.56, p < .001, d = .70, reflecting faster responses when the cue matched the target (774 ms) than when it was neutral (904 ms). The analysis of the distractor-match and neutral cues also revealed a significant effect, t(29) = 2.43, p = .03, d = .15, reflecting faster responses when the cue was neutral (904 ms) than when it matched the distractors (967 ms). There were no significant effects in the analysis of error rates (all p > .19). The mean post-experiment estimate of imagery use was 76.3%.

Imagery ratings

Separate analyses were conducted on the imagery ratings, as in Experiment 1. This led to the removal of one participant due to empty cells. A within-subject ANOVA was conducted that treated cue (target-match/neutral/distractor-match) and imagery rating (other/high) as factors. This analysis revealed a significant interaction of cue type and imagery rating, F(2,56) = 3.77, p = .01, η2p = .12. The interaction was explored further by conducting planned paired t-tests that treated imagery rating as a factor for each cue type separately. For the target-match cues, there was a significant effect of imagery rating, t(28) = 5.09, p < .001, d = .57, reflecting faster responses for the high (750 ms) than the other (853 ms) imagery ratings. No significant effects of imagery rating were revealed for the neutral (p = .12) and distractor-match (p = .25) cues. There were no significant effects in the analysis of error percentages (all p > .18). The mean RTs and error percentages are depicted in the right panel of Fig. 3.

Eye-movement analyses

In all eye-movement analyses, trials identified as outliers by the absolute cut-off and Van Selst and Jolicoeur (1994) procedures, as well as trials culminating in an incorrect response, were excluded. Further, trials on which gaze fell more than 2° of visual angle from central fixation prior to search display onset were removed from analyses, which excluded an additional 10.5% of observations.
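The 2° fixation criterion can be expressed as a simple filter. The source does not specify the exact pre-search window over which gaze was checked, so this sketch checks every sample supplied to it. It also assumes gaze positions have already been converted to degrees of visual angle relative to the fixation cross. The field name `pre_search_gaze` is hypothetical.

```python
import math


def fixation_held(gaze_deg, max_deg=2.0):
    """True if every pre-search gaze sample lies within max_deg of central fixation.

    `gaze_deg` holds (x, y) gaze positions in degrees of visual angle relative to
    the fixation cross (assumption: pixel-to-degree conversion already done).
    """
    return all(math.hypot(x, y) <= max_deg for x, y in gaze_deg)


def keep_fixated_trials(trials, max_deg=2.0):
    """Filter trials; each trial is a dict with a 'pre_search_gaze' list (hypothetical field name)."""
    return [t for t in trials if fixation_held(t["pre_search_gaze"], max_deg)]


trials = [
    {"id": 1, "pre_search_gaze": [(0.1, -0.2), (0.4, 0.3)]},
    {"id": 2, "pre_search_gaze": [(0.0, 0.1), (2.6, 0.5)]},  # drifted > 2 deg
]
print([t["id"] for t in keep_fixated_trials(trials)])  # [1]
```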

First saccade

The search display was partitioned into quadrants, and the percentage of trials on which the first saccade was directed into the quadrant of the search target was the primary dependent variable. These percentages were submitted to a mixed-factor ANOVA that treated group (imagery/perception) as a between-subjects factor and cue (target-match/neutral/distractor-match) as a within-subject factor. The analysis revealed a significant interaction of group and cue, F(2,116) = 18.4, p < .001, η2p = .24. This interaction was explored further by conducting separate planned independent-samples t-tests that treated group as a factor for each level of cue. The analysis of the target-match cue revealed a significant effect of group, t(58) = 4.41, p = .001, d = .94, reflecting a greater percentage of first saccades to the target in the imagery (70.8%) than in the perception (56.8%) group. The analysis of the neutral cues revealed no effect of group (p = .29), reflecting a similar percentage of first saccades to the target in the imagery (58.4%) and perception (53.5%) groups. The analysis of the distractor-match cues revealed a significant effect of group, t(58) = 2.16, p = .04, d = .09, reflecting a greater percentage of first saccades to the target in the perception (49.8%) than in the imagery (37.8%) group. The mean percentage of trials on which the first saccade was directed to the target is depicted in Fig. 5.
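Because the four diamonds sit at the corners of a square centered on the screen, the first-saccade measure reduces to checking whether the saccade endpoint falls in the same quadrant as the target. The sketch below assumes screen-centered coordinates with positive y pointing up. Points lying exactly on an axis are assigned arbitrarily. The `(endpoint, target)` pair format is hypothetical.

```python
def quadrant(x, y):
    """Screen quadrant of a point in centre-based coordinates (y positive = up)."""
    horizontal = "right" if x >= 0 else "left"
    vertical = "upper" if y >= 0 else "lower"
    return f"{vertical}-{horizontal}"


def percent_first_saccade_to_target(trials):
    """Percentage of trials whose first-saccade endpoint lands in the target's quadrant.

    `trials` is an iterable of (saccade_endpoint_xy, target_xy) pairs.
    """
    trials = list(trials)
    hits = sum(quadrant(*end) == quadrant(*target) for end, target in trials)
    return 100.0 * hits / len(trials)


# Made-up trials: endpoints and target positions in degrees from screen centre.
example = [
    ((3.1, 4.0), (5.0, 5.0)),      # hit
    ((-3.0, 4.2), (5.0, 5.0)),     # miss: wrong side
    ((-2.0, -2.5), (-5.0, -5.0)),  # hit
    ((1.0, -1.2), (5.0, -5.0)),    # hit
]
print(percent_first_saccade_to_target(example))  # 75.0
```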

Partitioned RTs

RTs were partitioned into pre-search, search, and response phases based on the eye-movement data. Phase durations were submitted to a mixed-factor ANOVA that treated phase (pre-search/search/response) and cue (target-match/neutral/distractor-match) as within-subject factors, and group (imagery/perception) as a between-subjects factor. This analysis revealed a significant three-way interaction of phase, cue, and group, F(4,232) = 6.09, p < .001, η2p = .09. This interaction was explored further by conducting a separate planned ANOVA for each phase. Mean RTs are depicted in Fig. 6.
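The phase boundaries are not spelled out in this paragraph. Based on the later descriptions of the search phase (from the first saccade until the last fixation) and of the response phase (responding after the last fixation), one plausible reading is sketched below. The function and field names are hypothetical, times are assumed to be in ms from search-display onset, and trials without a saccade, which this scheme cannot partition, are rejected rather than handled.

```python
from dataclasses import dataclass


@dataclass
class Phases:
    pre_search: float  # display onset -> first saccade onset
    search: float      # first saccade onset -> onset of the last fixation
    response: float    # onset of the last fixation -> manual response


def partition_rt(rt, first_saccade_onset, last_fixation_onset):
    """Split one trial's RT into pre-search, search, and response phases.

    All times are in ms from search-display onset (assumed convention).
    Raises ValueError if the events are not ordered within the trial,
    e.g. for trials on which no saccade occurred.
    """
    if not 0 <= first_saccade_onset <= last_fixation_onset <= rt:
        raise ValueError("event times must be ordered within the trial")
    return Phases(
        pre_search=first_saccade_onset,
        search=last_fixation_onset - first_saccade_onset,
        response=rt - last_fixation_onset,
    )
```

By construction the three phases sum to the trial's RT, so any cue effect on overall RT must show up in at least one phase.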

Fig. 6. The mean response times of the target-match, neutral, and distractor-match cues partitioned into pre-search, search, and response phases for the imagery and perception groups of Experiment 2. Error bars represent the standard error of the mean corrected to remove between-subject variability (Cousineau, 2005; Morey, 2008)
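The error bars described above use the Cousineau (2005) normalization with the Morey (2008) correction. A minimal sketch of that computation for one group of participants is shown below, assuming each participant contributes one mean per within-subject condition, in the same condition order. In a mixed design like this one, it would be applied separately within the imagery and perception groups. The function name is ours.

```python
import math
from statistics import mean, stdev


def cousineau_morey_se(data):
    """Within-subject standard errors, one per condition.

    data: dict mapping participant -> list of condition means (same order
    for everyone). Follows Cousineau (2005) normalization with the
    Morey (2008) correction factor sqrt(M / (M - 1)).
    """
    rows = list(data.values())
    n = len(rows)
    m = len(rows[0])
    if n < 2 or m < 2:
        raise ValueError("need at least two participants and two conditions")
    grand = mean(v for row in rows for v in row)
    # Remove each participant's own mean and add back the grand mean.
    normed = [[v - mean(row) + grand for v in row] for row in rows]
    correction = math.sqrt(m / (m - 1))  # Morey (2008)
    # zip(*normed) yields one column (condition) at a time.
    return [correction * stdev(col) / math.sqrt(n) for col in zip(*normed)]
```

If participants differ only by a constant offset, the normalized scores are identical across participants and every corrected standard error is zero, which is exactly the between-subject variability this procedure is meant to remove.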

Pre-search phase

The analysis of the pre-search phase revealed a significant interaction of cue and group, F(2,116) = 3.11, p = .05, η2p = .05. There was also a significant main effect of group, F(1,58) = 708.5, p < .001, η2p = .92, reflecting overall shorter durations in the perception than in the imagery group. The interaction was explored further by conducting separate planned ANOVAs that treated cue as a factor for the imagery and perception groups. The analysis of the imagery group revealed a significant effect of cue, F(2,58) = 7.85, p = .001, η2p = .21. This effect was explored further by conducting separate planned paired t-tests comparing the target-match with the neutral cues and the distractor-match with the neutral cues. The comparison of the target-match and neutral cues revealed a significant effect, t(29) = 2.33, p = .03, d = .33, reflecting shorter durations when the cue matched the target (364 ms) than when it was neutral (373 ms). The comparison of the distractor-match and neutral cues revealed no effect (p = .18), reflecting similar durations for the distractor-match (378 ms) and neutral (373 ms) conditions. The analysis of the perception group did not reveal a significant effect of cue (p = .48), reflecting similar durations for the target-match (220 ms), neutral (224 ms), and distractor-match (223 ms) conditions in the pre-search phase.
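Each planned comparison in these phase analyses is a paired-samples t-test across the participants of one group (30 per group, hence df = 29). A minimal sketch is shown below. It also returns d_z (the mean difference divided by the SD of the differences), which is only one of several Cohen's d variants; this section does not state which variant the reported d values use, so the sketch should not be expected to reproduce them.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t-test for two conditions measured on the same participants.

    Returns (t, df, d_z), where d_z = mean difference / SD of the differences.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1, mean(diffs) / sd
```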

Search phase

The analysis of the search phase revealed a significant interaction of cue and group, F(2,116) = 12.8, p < .001, η2p = .18. The main effect of group was not significant (p = .16). The interaction was explored further by conducting separate planned ANOVAs that treated cue as a factor for the imagery and perception groups. The analysis of the imagery group revealed a significant effect of cue, F(2,58) = 27.3, p < .001, η2p = .48. This effect was explored further by conducting separate planned paired t-tests comparing the target-match with the neutral cues and the distractor-match with the neutral cues. The comparison of the target-match and neutral cues revealed a significant effect, t(29) = 4.94, p < .001, d = .63, reflecting a shorter duration when the cue matched the target (195 ms) than when it was neutral (304 ms). The comparison of the distractor-match and neutral cues revealed a significant effect, t(29) = 2.52, p = .02, d = .24, reflecting a shorter duration when the cue was neutral (304 ms) than when it matched the distractors (352 ms). The analysis of the perception group revealed a significant effect of cue, F(2,58) = 4.94, p = .01, η2p = .15. This effect was explored further with the same planned paired t-tests. The comparison of the target-match and neutral cues revealed a significant effect, t(29) = 2.63, p = .01, d = .23, reflecting shorter durations when the cue matched the target (321 ms) than when it was neutral (341 ms). The comparison of the neutral and distractor-match cues revealed no effect (p = .18), reflecting similar durations for the neutral (341 ms) and distractor-match (358 ms) cues.

Response phase

The analysis of the response phase revealed a significant interaction of cue and group, F(2,116) = 12.1, p < .001, η2p = .17. There was also a significant main effect of group, F(1,58) = 6.56, p = .01, η2p = .10, reflecting overall shorter durations in the perception than in the imagery group. The interaction was explored further by conducting separate planned ANOVAs that treated cue as a factor for the imagery and perception groups. The analysis of the imagery group revealed a significant effect of cue, F(2,58) = 12.8, p < .001, η2p = .31. This effect was explored further by conducting separate planned paired t-tests comparing the target-match with the neutral cues and the distractor-match with the neutral cues. The comparison of the target-match and neutral cues revealed a significant effect, t(29) = 2.84, p = .008, d = .63, reflecting shorter durations when the cue matched the target (217 ms) than when it was neutral (247 ms). The comparison of the distractor-match and neutral cues did not reveal a significant effect (p = .08), although there was a trend towards shorter durations when the cue was neutral (247 ms) than when it matched the distractors (264 ms). The analysis of the perception group did not reveal a significant effect of cue (p = .33), reflecting similar durations for the target-match (187 ms), neutral (184 ms), and distractor-match (177 ms) conditions in the response phase.

Discussion

First, Experiment 2 showed that the overall RT and imagery rating findings of Experiment 1 replicated; that is, imagery once again had a greater influence on performance than perceptual cues did. The only notable difference was that the effect of group, which was non-significant in Experiment 1a (p = .08), was now highly significant (p < .001), indicating that perceptual cues led to faster overall responses than imagery. Second, imagery led to greater attention capture than perceptual cues, as indicated by the direction of the first saccade: following imagery, the first saccade was more likely than following a perceptual cue to be directed toward the item that was congruent with the cue. Third, when we partitioned RTs based on eye movements, imagery and perceptual cues produced markedly different patterns of results. While both influenced the search phase, imagery also influenced the pre-search and response phases, whereas perception did not. Further, first saccades were initiated much more slowly following imagery than following perceptual cues (see the General discussion for more on this issue), and imagery produced clear response biases. Overall, the present findings revealed that imagery influenced all phases in the vision-perception pipeline, whereas perceptual cues influenced only search.

General discussion

The purpose of the present study was to evaluate the influence of imagery and perceptual cues on visual search. In all experiments, imagery had a more profound influence on search performance than perceptual cues. That is, imagery produced both a greater benefit when the cue matched the target and a greater cost when the cue matched the distractors than perception did. Further, the magnitude of the imagery effect increased with ratings of imagery vividness, supporting the notion that the imagery effects were due to representations that were visual in nature (see also Cochrane, Ng, et al., 2021; Dijkstra et al., 2017). Why imagery and perception differed was explored in Experiment 2, which revealed that imagery led to greater attention capture than perceptual cues, as indicated by a greater proportion of first saccades to the target when the cue matched the target and a smaller proportion when it matched the distractors. Lastly, RTs were partitioned based on eye movements into pre-search, search, and response phases, which revealed that, while imagery and perceptual cues both influenced the search phase, imagery had a more profound influence. In further contrast with perception, imagery also influenced the pre-search and response phases, suggesting that it affected covert attention and the response aspects of search as well.

It is an open question why vividness ratings affected performance when imagery matched the target, but not when it matched the distractors. That is, when imagery matched the target, responses were faster when vividness was rated as high than when it was rated lower. However, when imagery matched the distractors, response speed did not vary as a function of vividness rating. Contrary to our findings, it is reasonable to predict that, when imagery matched the distractors, responses should be slower for high than for lower vividness ratings, since attention ought to be drawn more frequently to the distractor locations. We suspect the primary reason we did not observe this result is that vividness ratings were not a pure measure of imagery vividness, as participants sometimes used them to rate their performance more generally (see Cochrane, Ng, & Milliken, 2021; Cochrane, Ng, et al., 2021; Cochrane, Townsend, et al., 2021). Accordingly, the mean RTs of the distractor-match condition likely reflected both trials on which participants used the scale to rate vividness (which would slow responding for high relative to other ratings) and trials on which they used it to rate general performance (which would slow responding for other relative to high ratings), and these opposing influences cancelled out. In the target-match condition, no such cancellation occurred, because using the rating to report imagery vividness and using it to report performance both predict faster responses for high ratings.

Related to the above issue, a limitation of the present study is that it is not clear whether the imagery vividness ratings influenced performance above and beyond imagery itself. That is, participants in the imagery group had to rate the vividness of their imagery following each trial, whereas participants in the perception group performed no such task. The rating procedure was included in the imagery group because preliminary work suggested that it encouraged imagery use, and it was omitted in the perception group because doing so better approximated other perceptual cueing studies in the literature. The issue is that, if participants rarely generate imagery when cued, imagery cueing becomes tantamount to semantic cueing, as participants are left to use the letter cues to inform their search. Also, it is unclear how perceptual cue use could be encouraged without changing its phenomenological basis. For example, if participants had to rate how well they encoded the perceptual cue following each trial, high ratings might reflect instances in which participants held the representation in working memory, and thus reflect a phenomenological experience more akin to imagery than the passive influence elicited by perceptual cues. Ultimately, more work is needed to fully unpack this issue, but we suspect that any intervention that inspires active engagement with the cue should produce larger congruency effects in search than passive engagement with the cue.

A notable finding in the present study was that perceptual cues led to faster overall performance than imagery in Experiment 2 (p < .001) and a trend towards faster performance in Experiment 1a (p = .08). There is no doubt that imagery can have a profound impact on performance, but at the same time this finding calls into question its utility more generally. Intuitively, one would think that imagery helps the observer locate a target, since producing greater attention capture for features congruent with what you are looking for should facilitate search. However, the present study revealed that generating imagery led to an additive slowing of performance, suggesting otherwise. In other words, generating imagery does not appear to be useful for search, as its benefits do not outweigh its costs. That said, it remains an open question whether imagery was not useful here because a new image had to be generated on every trial of a simple search task. It may be that the benefit of generating imagery emerges only when search is sufficiently difficult, at which point the benefit outweighs the slowing produced by generating the image. It is also important to acknowledge that perception may have led to a benefit above and beyond imagery, while recognizing that the perception manipulation is the less ecologically valid of the two. For example, if you were looking for your car in a crowded parking lot, you likely would not consult a picture of it to facilitate your search; the practical alternative is to generate a representation from memory.

While imagery may have little practical utility for search, it certainly has a profound impact on the processes underlying it. That is, when we compare the search phase (the time from the first saccade until the last fixation) across imagery and perception, it is clear that imagery has a more profound influence. Indeed, this finding is complemented by the first-saccade analyses, which demonstrated that imagery played a greater role in the automatic orienting of eye movements than perceptual cues. These findings have important implications for the nature of the processes governing attentional guidance. Top-down attentional guidance effects (in cueing procedures, at least) depend on the formation of an internal representation of what you are looking for. Attention (and subsequent eye movements) is then presumed to be guided to locations in the visual environment based on neural activity in an internal spatiotopic map, and activity within this map depends on the congruency between what is in the world and what you are internally representing (Serences & Yantis, 2006; Wolfe, 2014). The present study reveals that these attentional guidance processes are better engaged by imagery, suggesting that actively maintaining a representation can benefit top-down guidance.

Central to the issue of why imagery profoundly impacted attentional guidance but hindered performance overall is the somewhat surprising finding that imagery was especially slow in the pre-search phase relative to perception. Given this finding, we considered whether it may have been due to some participants in the imagery group not moving their eyes at all, as these search tasks can be performed while the eyes remain fixated (see Cochrane, Townsend, et al., 2021). While no formal analyses were conducted, visual inspection of the data suggested that this was not the reason, as the pre-search durations of all participants were of similar magnitude (SD = 29 ms). Given that this finding does not seem to be spurious, it is worth asking what could produce such a pattern of results. One possibility is that imagery led to greater use of covert attention than perception; that is, imaginers may have been doing more searching while their eyes remained fixated. This interpretation is supported by the significant effect of cue in the analysis of the pre-search phase, but that effect was strikingly small (a 9-ms target-match benefit in the imagery group, compared with 109 ms in the search phase). If covert search were the whole story, one would expect the cueing effect in the pre-search phase to be comparable in magnitude to that in the search phase. The more likely possibility is that imagery impaired initiation of the search task, as imaginers had to disengage from their imagery before performing search. In contrast, those in the perception group were likely spending this same interval readying themselves for search, which produced the short pre-search phase durations.

In addition to influencing the pre-search and search phases, imagery also influenced the processes in the response phase, as indicated by a clear performance benefit when imagery matched the target and a trend towards a cost when it matched the distractors. We suspect that this result reflects an influence on the processes governing decision-making; that is, congruent imagery led participants to quickly decide that the target was indeed the target, and the reverse held when imagery matched the distractors. If our interpretation is correct, it is curious why a similar effect was not revealed in the perception group. Recently, using a color singleton search task in which participants were given knowledge of the upcoming target color, we found that verbally indicating the target prior to the search display influenced performance, whereas passive knowledge of it did not (Cochrane & Pratt, 2020; see also Maljkovic & Nakayama, 1994). While verbal representations are not nearly as impactful as imagined ones (see Cochrane, Nwabuike, et al., 2018), what they have in common with imagery is that they require the participant to be aware of the upcoming target. That is, it could be that participants typically do not use passive perceptual cues to prepare a response. Another possibility is that perceptual cues do produce response biases, but these were overridden by a different response process working in opposition. Indeed, while not significant, there was a trend towards faster responding when perceptual cues were congruent with the distractors rather than the target, which aligns with the pattern of results produced by the stimulus-location binding processes that pervade these types of cognitive tasks (Hommel, 2004; Kahneman et al., 1992).
Overall, we suspect that there is some truth to both notions: passive knowledge has a weak influence on responding, and stimulus-location binding processes were operative and working in opposition.

An important caveat is that the present findings do not suggest that imagery has a more profound impact than perception when the two are directly compared. For example, if you were to imagine a red fire hydrant, this representation ought to appear less clear and vivid than if you were to view a picture of one. What we ought to conclude from the present findings is that imagined representations have a more profound residual influence than perceptual representations. Indeed, this finding has been demonstrated in other cognitive tasks, such as cueing imagery and perception prior to binocular rivalry (Chang et al., 2013; Pearson et al., 2008, 2011; Sherwood & Pearson, 2010), and imagined and perceptual training prior to visual search (Reinhart et al., 2015). While it remains an open question why imagined representations have a greater influence than perceptual ones when cued, we suspect that the reason for this cueing difference is not simply that imagined representations remained passively viewable until the search display onset whereas perceptual representations did not. Indeed, Experiment 1b revealed that how long the perceptual cue was displayed relative to the search task did not influence the magnitude of the congruency effect. Instead, we suspect that the need to actively maintain and engage with imagined representations is critical to this phenomenon, similar to how actively maintained working-memory representations drive attention to congruent representations in the environment (Soto et al., 2005, 2006, 2008; Woodman & Luck, 2007).

Conclusion

The present study revealed that color imagery can have a more profound impact on visual search than perceptual cues. Imagery not only led to greater attentional guidance, as indicated by a larger congruency effect in the search phase of the task relative to perceptual cues, but also influenced covert attentional shifts that occurred prior to the first eye movement and responding after the last fixation. A cautionary note is that, while imagery had a more profound influence on the processes underlying search, perceptual cues led to faster overall performance, suggesting that imagery may have limited utility for search more generally.

References

Baier, D., & Ansorge, U. (2019). Investigating the role of verbal templates in contingent capture by color. Attention, Perception, & Psychophysics, 81(6), 1846-1879.

Chang, S., Lewis, D. E., & Pearson, J. (2013). The functional effects of color perception and color imagery. Journal of Vision, 13(10), 1-10.

Cochrane, B. A., & Milliken, B. (2019). Imagined event-files: An interplay between imagined and perceived objects. Psychonomic Bulletin & Review, 26(2), 538-544.

Cochrane, B. A., & Milliken, B. (2020). The representational basis of positive and negative repetition effects. Journal of Experimental Psychology: Human Perception and Performance, 46(3), 252-263.

Cochrane, B. A., & Pratt, J. (2020). Re-examining Maljkovic and Nakayama (1994): Conscious expectancy does affect the Priming of Pop-out effect. Attention, Perception, & Psychophysics, 82(5), 2693-2702.

Cochrane, B. A., Ng, V., & Milliken, B. (2021). Top-down imagery overrides the influence of the selection history effects. Consciousness and Cognition. Advance online publication. https://doi.org/10.1016/j.concog.2021.103153

Cochrane, B. A., Ng, V., Khosla, A., & Milliken, B. (2021). Looking into the Mind’s Eye: Directed and evaluated imagery vividness modulates imagery-perception congruency effects. Psychonomic Bulletin & Review. Advance online publication. https://doi.org/10.3758/s13423-020-01868-8

Cochrane, B. A., Nwabuike, A. A., Thomson, D. R., & Milliken, B. (2018). An imagery-induced reversal of intertrial priming in visual search. Journal of Experimental Psychology: Learning, Memory, and Cognition, 44(4), 572-587.

Cochrane, B. A., Siddhpuria, S., & Milliken, B. (2019). Cueing color imagery: A critical analysis of imagery-perception congruency effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(8), 1410-1421.

Cochrane, B. A., Townsend, B., Lee, R. C., Legere, J. K., Milliken, B., & Shedden, J. M. (2021). Visual imagery influences attentional guidance during visual search: behavioral and electrophysiological evidence. Attention, Perception, & Psychophysics, 83(1), 58-66.

Cochrane, B. A., Zhu, H., & Milliken, B. (2018). Strategic visual imagery and automatic priming effects in pop-out visual search. Consciousness and Cognition, 65, 59-70.

Cousineau, D. (2005). Confidence intervals in within-subject design: a simpler solution to Loftus and Masson’s method. Tutorials in Quantitative Methods for Psychology, 1(1), 42-45.

Cui, X., Jeter, C. B., Yang, D., Montague, P. R., & Eagleman, D. M. (2007). Vividness of mental imagery: Individual variability can be measured objectively. Vision Research, 47(4), 474-478.

Dijkstra, N., Bosch, S. E., & van Gerven, M. A. J. (2017). Vividness of visual imagery depends on the neural overlap with perception in visual areas. Journal of Neuroscience, 37(5), 1367-1373.

Dijkstra, N., Bosch, S. E., & van Gerven, M. A. J. (2019). Shared neural mechanisms of visual perception and imagery. Trends in Cognitive Sciences, 23(5), 423-434.

Grindell, J., Liao, M. R., & Anderson, B. A. (2020). Mind’s Eye vs. Mine eye: Effects of imagery and perceptual priming on singleton search [Poster presentation]. The Object Perception, Attention, and Memory 28th Annual Meeting. (Online Conference)

Hommel, B. (2004). Event files: feature binding in and across perception and action. Trends in Cognitive Sciences, 8(11), 494-500.

Kahneman, D., Treisman, A. M., & Gibbs, B. J. (1992). The reviewing of object files: object-specific integration of information. Cognitive Psychology, 24, 175-219.

Kawashima, T., & Matsumoto, E. (2017). Cognitive control of attentional guidance by visual and verbal working memory representations. Japanese Psychological Research, 59(1), 49-57.

Leonard, C. J., & Egeth, H. E. (2008). Attentional guidance in singleton search: An examination of top-down, bottom-up, and intertrial factors. Visual Cognition, 16(8), 1078-1091.

Maljkovic, V., & Nakayama, K. (1994). Priming of pop-out: I. Role of features. Memory & Cognition, 22(6), 657-672.

Maljkovic, V., & Nakayama, K. (2000). Priming of popout: III. A short-term implicit memory system beneficial for rapid target selection. Visual Cognition, 7(5), 571-595.

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4(2), 61-64.

Moriya, J. (2018). Visual mental imagery influences attentional guidance. Attention, Perception, & Psychophysics, 80(5), 1127-1142.

Müller, H. J., & Krummenacher, J. (2006). Locus of dimension weighting: preattentive or postselective. Visual Cognition, 14(4-8), 490-513.

Müller, H. J., Reimann, B., & Krummenacher, J. (2003). Visual search for singleton feature targets across dimensions: Stimulus- and expectancy-driven effects in dimensional weighting. Journal of Experimental Psychology: Human Perception and Performance, 29(5), 1021-1035.

Pearson, J., & Kosslyn, S. M. (2015). The heterogeneity of mental representations: Ending the imagery debate. Proceedings of the National Academy of Sciences of the United States of America, 112(33), 10089-10092.

Pearson, J., Clifford, C. W. G., & Tong, F. (2008). The functional impact of mental imagery on conscious perception. Current Biology, 18(13), 982-986.

Pearson, J., Rademaker, R. L., & Tong, F. (2011). Evaluating the Mind’s Eye: The metacognition of visual imagery. Psychological Science, 22(12), 1535-1542.

Reinhart, R. M. G., McClenahan, L. J., & Woodman, G. F. (2015). Visualizing trumps vision in training attention. Psychological Science, 26(7), 1114-1122.

Serences, J. T., & Yantis, S. (2006). Selective visual attention and perceptual coherence. Trends in Cognitive Sciences, 10(1), 38-45.

Sherwood, R., & Pearson, J. (2010). Closing the mind’s eye: Incoming luminance signals disrupt visual imagery. PLoS One, 5(12), e15217.

Soto, D., Heinke, D., Humphreys, G. W., & Blanco, M. J. (2005). Early involuntary top-down guidance of attention from working memory. Journal of Experimental Psychology: Human Perception and Performance, 31(2), 248-261.

Soto, D., Hodsoll, J., Rotshtein, P., & Humphreys, G. W. (2008). Automatic guidance of attention from working memory. Trends in Cognitive Sciences, 12(9), 342-348.

Soto, D., Humphreys, G. W., & Heinke, D. (2006). Working memory can guide pop-out search. Vision Research, 46(6-7), 1010-1018.

Theeuwes, J., Reimann, B., & Mortier, K. (2006). Visual search for featural singletons: No top-down modulation, only bottom-up priming. Visual Cognition, 14(4-8), 466-489.

Van Selst, M., & Jolicoeur, P. (1994). A solution to the effect of sample size on outlier elimination. The Quarterly Journal of Experimental Psychology, 47(3), 631-650.

Wantz, A. L., Borst, G., Mast, F. W., & Lobmaier, J. S. (2015). Colors in mind: A novel paradigm to investigate pure color imagery. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(4), 1152-1161.

Wolfe, J. M. (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1(2), 202-238.

Wolfe, J. M. (2014). Approaches to visual search: Feature integration theory and guided search. In A. C. Nobre & S. Kastner (Eds.), The Oxford handbook of attention (pp. 11-55). Oxford University Press.

Wolfe, J. M., Horowitz, T. S., Kenner, N., Hyle, M. F., & Vasan, N. (2004). How fast can you change your mind? The speed of top-down guidance in visual search. Vision Research, 44, 1411-1426.

Woodman, G. F., & Luck, S. J. (2007). Do the contents of visual working memory automatically influence attentional selection during visual search? Journal of Experimental Psychology: Human Perception and Performance, 33(2), 363-377.

Zhou, G., Liu, Q., Jiao, J., Zhou, P., Li, H., & Sun, H. J. (2012). Dual-state modulation of the contextual cueing effect: Evidence from eye movement recordings. Journal of Vision, 12(6), 1-13.

Acknowledgements

Financial support for this study was provided by Natural Sciences and Engineering Research Council of Canada Discovery Grants awarded to Bruce Milliken and Jay Pratt. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report. The authors report no conflicts of interest.

Additional information

Open Practices Statement

The experiments reported in this article were not preregistered. The data for all experiments are publicly available at the Center of Open Science website (osf.io/xn97e). Requests for materials can be sent via email to the corresponding author at brett.cochrane@utoronto.ca.

Cite this article

Cochrane, B.A., Wang, C., Pratt, J. et al. Comparing imagery and perception: Using eye movements to dissociate mechanisms in search. Atten Percept Psychophys 83, 2879–2890 (2021). https://doi.org/10.3758/s13414-021-02336-8

DOI: https://doi.org/10.3758/s13414-021-02336-8