Abstract

Recent behavioral studies have shown that color imagery can benefit visual search when it is congruent with an upcoming target. In the present study we investigated whether this color imagery benefit was due to the processes underlying attentional guidance, as indicated by the electrophysiological marker known as the N2pc component. Participants were instructed to imagine a color prior to each trial of a singleton search task. On some trials, the imagined color was congruent with the target, and on other trials, it was congruent with the distractors. The analyses revealed that the N2pc was present when color imagery was congruent with the search target, and absent when it was congruent with the distractors. Further, there was preliminary evidence that attentional guidance depended on the vividness of color imagery and the frequency at which participants implemented the imagery instruction. Overall, the results of the present study indicate that color imagery can influence the attentional guidance processes underlying visual search.


Introduction

If you were to close your eyes and imagine a color, you may have a phenomenological experience much like seeing that color in the real world. One explanation for this experience is that the brain regions necessary for color perception are activated when a color is represented in the “mind’s eye.” While this interpretation has long been debated (Kosslyn, 1996; Pylyshyn, 1973, 2003; Tye, 1991), it has become increasingly evident that visual imagery feels like a perceptual experience because imagery and perception depend on many of the same underlying processes (Pearson & Kosslyn, 2015).

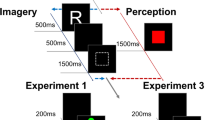

While there is a long history of studying color imagery as a phenomenological experience in and of itself, recent studies have focused on the impact of color imagery on behavior (Chang, Lewis, & Pearson, 2013; Cochrane & Milliken, 2019, 2020; Wantz, Borst, Mast, & Lobmaier, 2015). A particularly noteworthy behavior that color imagery has been shown to affect is visual search. While there is some debate about whether perceptual and semantic cues have a similar (or any) influence on visual search performance (Baier & Ansorge, 2019; Kawashima & Matsumoto, 2017; Müller & Krummenacher, 2006; Theeuwes, Reimann, & Mortier, 2006; Wolfe, Butcher, Lee, & Hyle, 2003), color imagery has consistently been shown to have a profound impact on search (Cochrane, Siddhpuria, & Milliken, 2019; Cochrane, Zhu, & Milliken, 2018; Moriya, 2018) that goes beyond the influence of semantic and perceptual representations (Cochrane, Nwabuike, Thomson, & Milliken, 2018; Reinhart, McClenahan, & Woodman, 2015). For example, Moriya (2018) reported that color imagery led to efficient attentional guidance when it was congruent with a perceptually colored target. In that study, participants performed a search task in which they indicated the side of a gap in a target square while a singleton distractor was presented in the opposite visual hemifield. Prior to each trial of the search task, participants were cued to imagine a color that matched the target, matched the distractor, or matched neither item in the display. Response times indicated that visual search was most efficient when color imagery matched the target and least efficient when it matched the distractor, which led Moriya to conclude that color imagery affected the processes responsible for attentional guidance.

Given the findings of Moriya (2018) and other studies of this nature, we felt it worthwhile to take this a step further by investigating whether color imagery also influences the electrophysiological component known as the N2pc, a well-established marker of attentional guidance (Luck & Hillyard, 1990; for a review, see Luck, 2011). The N2pc is a negative deflection recorded over posterior scalp sites contralateral to the visual hemifield to which attention has been oriented. For example, if a target is presented in the left visual hemifield and a distractor in the right, greater negativity emerges over the right posterior scalp, that is, contralateral to the target and ipsilateral to the distractor. While there is some debate over the precise nature of the N2pc, it is widely agreed that this activity reflects the guidance of attention to the target (Eimer, 1996; Luck, 2011; Luck & Hillyard, 1994b).

Accordingly, the goal of the present study was to evaluate whether color imagery affects the processes responsible for attentional guidance, as indicated by the N2pc component. Participants were instructed to imagine a color prior to each trial of a singleton search task, in which the target was defined as the color singleton amongst homogeneously colored distractors. Following each search trial, participants reported the vividness of their color imagery on a 3-point scale. If color imagery can indeed affect the processes responsible for attentional guidance, we should observe an N2pc when imagery and target colors are congruent; that is, there should be greater negativity at posterior electrode sites contralateral than ipsilateral to the target. In contrast, an N2pc should not be observed when imagery matches the distractors. In that case attention should be guided to the distractor locations, and because the imagery-congruent distractors appear in both visual hemifields, there is no reason to expect greater negativity contralateral than ipsilateral to the target. A secondary goal of the present study was to evaluate whether attention was influenced by the vividness of the color imagery generated and the frequency with which the imagery instruction was used, which may offer additional support for the notion that color imagery indeed influences the attentional guidance system.

Method

Participants

Twenty-two undergraduates at McMaster University (18 female; mean age = 19.1 years) took part in exchange for course credit or monetary compensation. All participants reported normal or corrected-to-normal visual acuity and normal color vision. Although imagery-based congruency effects are quite robust (a power analysis on pilot data revealed that six participants were sufficient to detect an imagery effect; Cohen’s f = 1.80, power = .90, alpha = .05), we were unsure of the sample size needed to detect a significant N2pc. Accordingly, we made the a priori decision to test approximately 20 participants, based on the assumption that N2pc effects would not be as robust as response time effects.

Apparatus and stimuli

Stimuli were presented using PsychoPy v1.82 against a black background on a CRT monitor with a 100-Hz refresh rate. The search array consisted of four colored diamonds positioned at the corners of an invisible 5.6° × 5.6° square centered on the screen. Each search array contained one target diamond that differed in color from three homogeneously colored distractor diamonds. Target and distractor diamonds were presented in red and green. Each diamond subtended 1.5° of visual angle horizontally and vertically and had a notch missing from either the top or the bottom that subtended .33°. The imagery cues (‘R’ or ‘G’) were presented in white and subtended approximately 1.9° vertically and horizontally. The central fixation cross was presented in white and subtended .33° vertically and horizontally.
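Stimulus sizes above are given in degrees of visual angle. As a rough aid for anyone reproducing the display, the sketch below converts between degrees and physical size on the screen using the standard relation size = 2 · d · tan(θ / 2). It assumes the approximate 60-cm viewing distance reported in the Procedure; the centimeter values it produces are derived here, not reported in the study, and converting to pixels would additionally require monitor dimensions that are not given.

```python
import math

# Approximate viewing distance reported in the Procedure (assumed fixed).
VIEWING_DISTANCE_CM = 60.0


def deg_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def cm_to_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by `size_cm` at `distance_cm`."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


if __name__ == "__main__":
    # Sizes taken from the Apparatus and stimuli section.
    for label, deg in [("diamond", 1.5), ("array square", 5.6),
                       ("notch / fixation", 0.33), ("cue letter", 1.9)]:
        print(f"{label}: {deg} deg ~ {deg_to_cm(deg):.2f} cm")
```

At 60 cm, for example, a 1.5° diamond works out to roughly 1.57 cm on the screen.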

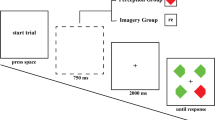

Procedure

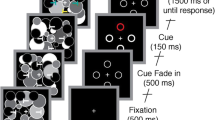

Participants were seated approximately 60 cm from the monitor in a dimly lit room. Each trial was initiated by pressing the spacebar. Participants were instructed to minimize unnecessary physical movements and eye blinks during the trial sequence and, if needed, to make these movements before initiating the trial. Following a black screen presented for 500 ms, the imagery cue (‘R’ or ‘G’) was displayed centrally for 500 ms. Participants imagined a red or green square in response to the ‘R’ and ‘G’ imagery cues, respectively. A black screen with the central fixation cross was then presented for 2,000 ms, during which participants maintained their color imagery. The search display was then presented, and participants had to locate the color singleton target diamond and indicate whether its notch was positioned on the top or the bottom. Participants pressed either the ‘z’ or ‘m’ key on a standard QWERTY keyboard with their left or right index finger to indicate the notch location. The position of the notch varied randomly from trial to trial, and the assignment of keys to notch locations was counterbalanced across participants. Imagery cues were randomized from trial to trial such that there was a .75 probability that the cue was congruent with the target and a .25 probability that it was incongruent with the target. This probability manipulation was implemented to incentivize participants to use the imagery instruction (see Cochrane, Nwabuike, et al., 2018a), and such probability manipulations have been reported not to affect the N2pc (Luck & Hillyard, 1994a). Once participants responded to the search display, the imagery vividness probe was presented and participants rated the vividness of their color imagery on a 3-point scale, where a keypress response of 1 indicated no imagery, 2 indicated low/moderate vividness, and 3 indicated high vividness. See Fig. 1 for an example of a typical congruent trial.
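The trial-level randomization described above can be sketched as follows. This is a hypothetical illustration, not the authors’ PsychoPy script. It assumes that exactly 75% of trials in a session are congruent (shuffled), rather than each trial being an independent .75 draw, that target color, target corner, and notch position are drawn uniformly at random, and that the key counterbalancing alternates by participant number. The names `make_trials` and `response_key` are invented for this example.

```python
import random

COLORS = ("red", "green")
CUE_LETTER = {"red": "R", "green": "G"}


def make_trials(n_trials=300, p_congruent=0.75, seed=None):
    """Hypothetical trial list: a shuffled, fixed proportion of congruent cues."""
    rng = random.Random(seed)
    n_congruent = round(n_trials * p_congruent)
    congruency = (["congruent"] * n_congruent
                  + ["incongruent"] * (n_trials - n_congruent))
    rng.shuffle(congruency)

    trials = []
    for cong in congruency:
        target_color = rng.choice(COLORS)
        distractor_color = COLORS[1] if target_color == COLORS[0] else COLORS[0]
        # Congruent cues name the target color; incongruent cues name the distractor color.
        imagined = target_color if cong == "congruent" else distractor_color
        trials.append({
            "cue": CUE_LETTER[imagined],
            "target_color": target_color,
            "target_corner": rng.randrange(4),      # one of the four array corners
            "notch": rng.choice(("top", "bottom")),
            "congruency": cong,
        })
    return trials


def response_key(notch, participant_id):
    """Hypothetical counterbalancing: even IDs press 'z' for top, odd IDs press 'm' for top."""
    mapping = {"top": "z", "bottom": "m"}
    if participant_id % 2 == 1:
        mapping = {"top": "m", "bottom": "z"}
    return mapping[notch]
```

Fixing the proportion guarantees the 75/25 split in every session; drawing each trial independently would also match the text but would let the realized proportion drift slightly from session to session.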

The experiment began with 15 practice trials to ensure that participants fully understood the instructions before the experimental trials. For the first five trials, participants performed only the singleton search task, and it was emphasized that they should remain fixated on the central fixation cross from the time they initiated the trial until they made a response. For the next five trials, participants implemented the imagery instruction and performed the singleton search task without the trial-by-trial imagery vividness probe. Here, it was emphasized that targets would not necessarily match the imagery generated prior to the search task. The last five practice trials were identical to the experimental trials. Participants then performed 300 experimental trials. At the conclusion of the experimental trials, participants provided a percentage estimate of how frequently they had implemented the imagery instruction across the experimental session.

EEG data acquisition and pre-processing

EEG data were recorded using the BioSemi ActiveTwo system (www.biosemi.com) with 128 sintered Ag/AgCl scalp electrodes. Four additional electrodes recorded eye movements (two below the eyes on the upper cheeks and two lateral to the outer canthi). Continuous signals were recorded with an open pass band from direct current to 150 Hz and digitized at 1,024 Hz. All processing was performed in MATLAB R2018a using functions from EEGLAB and ERPLAB (Delorme & Makeig, 2004; Lopez-Calderon & Luck, 2014). The signal was re-referenced to the average voltage across all electrodes. Infinite impulse response (IIR) Butterworth filters were used to high-pass filter the continuous EEG data at 0.1 Hz and to low-pass filter the ERP data at 30 Hz. Data were epoched from 100 ms before to 600 ms after search array onset. Eye blinks and other movement artifacts were removed using independent component analysis (ICA; see Drisdelle, Aubin, & Jolicoeur, 2017). Following ICA, four participants were removed from further analysis due to abnormally high electrical activity at a substantial number of electrodes.
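For readers working outside MATLAB, the re-referencing, filtering, and epoching steps can be approximated in Python with SciPy. This is a minimal sketch under stated assumptions, not a reproduction of the EEGLAB/ERPLAB pipeline: the filter order (2) and the use of zero-phase forward-backward filtering (`sosfiltfilt`) are assumptions, ICA is omitted entirely, and no baseline correction is applied because none is described. Data are arrays of shape channels × samples at 1,024 Hz.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 1024  # sampling rate in Hz, from the text


def average_reference(data):
    """Re-reference channels x samples data to the mean across all electrodes."""
    return data - data.mean(axis=0, keepdims=True)


def highpass_continuous(data, cutoff_hz=0.1, order=2, fs=FS):
    """Butterworth high-pass on continuous data (order and zero-phase are assumptions)."""
    sos = butter(order, cutoff_hz, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)


def lowpass_erp(erp, cutoff_hz=30.0, order=2, fs=FS):
    """Butterworth low-pass applied to averaged ERP data along the time axis."""
    sos = butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, erp, axis=-1)


def epoch(data, onsets, fs=FS, tmin=-0.1, tmax=0.6):
    """Cut epochs from -100 ms to +600 ms around each onset (sample indices)."""
    start = int(round(tmin * fs))   # -102 samples at 1,024 Hz
    stop = int(round(tmax * fs))    # +614 samples at 1,024 Hz
    out = []
    for onset in onsets:
        if onset + start < 0 or onset + stop > data.shape[-1]:
            raise ValueError(f"epoch around sample {onset} falls outside the recording")
        out.append(data[:, onset + start:onset + stop])
    return np.stack(out)  # shape: epochs x channels x samples
```

Usage on a recording `raw` with search-onset samples `onsets` might look like `erp = lowpass_erp(epoch(highpass_continuous(average_reference(raw)), onsets).mean(axis=0))`. Second-order sections are used because very low cutoffs such as 0.1 Hz at 1,024 Hz are numerically fragile in the transfer-function (b, a) form.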

Results and discussion

Overall results

Behavioral analyses

Correct response times (RTs) less than 200 ms or greater than 2,000 ms were excluded from analysis, which led to the removal of 9.8% of observations (7.9% in the congruent and 15.4% in the incongruent color conditions). Mean correct RTs were computed from the remaining observations, and these mean RTs and corresponding error rates were submitted to a paired t-test that treated color congruency (congruent/incongruent) as a factor. An alpha level of .05 was used to determine statistical significance in all analyses here. Mean RTs are displayed in the left panel of Fig. 2.
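The trimming rule and the paired comparison above can be expressed compactly. The sketch below assumes per-trial arrays of RTs (in ms) and accuracy flags for one participant and condition; the function names are hypothetical. It keeps correct trials with 200 ≤ RT ≤ 2,000 ms, averages them, and runs SciPy’s paired t-test on the per-participant condition means, whose degrees of freedom are N − 1.

```python
import numpy as np
from scipy.stats import ttest_rel

RT_MIN_MS, RT_MAX_MS = 200.0, 2000.0  # trimming bounds from the text


def mean_correct_rt(rts, correct):
    """Mean RT over correct trials within the trimming bounds (one participant, one condition)."""
    rts = np.asarray(rts, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    keep = correct & (rts >= RT_MIN_MS) & (rts <= RT_MAX_MS)
    return rts[keep].mean()


def congruency_ttest(congruent_means, incongruent_means):
    """Paired t-test on per-participant condition means; returns (t, p, df)."""
    res = ttest_rel(congruent_means, incongruent_means)
    return res.statistic, res.pvalue, len(congruent_means) - 1
```

With 18 analyzed participants this yields a t statistic on 17 degrees of freedom; a negative t means that congruent responses were faster.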

The left panel depicts the overall mean response times and error percentages for the congruent and incongruent color conditions. Error bars represent the standard error of the mean corrected for between-subjects variability (Cousineau, 2005; Morey, 2008). The right panel depicts the mean electrical activity at the PO7/PO8 electrodes ipsilateral and contralateral to the target location across the epoched time frame
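The within-subject error bars cited in the caption above (Cousineau, 2005; Morey, 2008) remove between-participant variability before computing the standard error. A minimal sketch, assuming a participants × conditions array of condition means: each participant’s scores are centered on their own mean and shifted to the grand mean (Cousineau normalization), and the resulting standard error is scaled by √(J / (J − 1)) for J conditions (Morey’s correction). The function name is hypothetical.

```python
import numpy as np


def cousineau_morey_sem(data):
    """Within-subject SEM per condition for a participants x conditions array."""
    data = np.asarray(data, dtype=float)
    n, j = data.shape
    # Cousineau normalization: remove each participant's mean, restore the grand mean.
    normalized = data - data.mean(axis=1, keepdims=True) + data.mean()
    sem = normalized.std(axis=0, ddof=1) / np.sqrt(n)
    # Morey correction for the bias introduced by normalization.
    return sem * np.sqrt(j / (j - 1))
```

If every participant shows exactly the same congruency effect and differs only in overall speed, these error bars shrink to zero, which is the intended behavior for a repeated-measures comparison.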

The analysis of RTs revealed a significant effect of color congruency, t(17) = 6.32, p < .001, d = 1.0, reflecting faster responses for congruent colors (992 ms) than incongruent colors (1,179 ms).¹ The analysis of error rates revealed no difference between the congruent (5.1%) and incongruent (4.7%) colors (p = .73). The effect on RTs constitutes the imagery congruency effect, demonstrating that color imagery can indeed affect visual search performance.

N2pc analyses

Electrical activity was measured at the lateral posterior electrodes PO7 and PO8, contralateral and ipsilateral to the location of the target. These waveforms were averaged across participants to compute grand-average waveforms. From the grand average, the N2pc was identified as the second negative inflection of the contralateral waveform, that is, the first negative inflection following the N1 component. A 100-ms window was centered on the grand-average N2pc peak, and each participant’s N2pc was quantified as the mean amplitude within this window for the contralateral and ipsilateral waveforms. These mean amplitudes were then submitted to a within-subject ANOVA that treated color congruency (congruent/incongruent) and laterality (contralateral/ipsilateral) as factors. The grand-average waveforms are depicted in the right panel of Fig. 2.
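The windowing procedure can be sketched as follows, with one simplification: instead of locating “the first negative inflection after the N1” by inspection, the code takes the most negative point of the grand-average contralateral waveform within a hypothetical search range of 150 to 350 ms. That range is an assumption and should be checked against the actual waveform. Times are in seconds, and waveforms are arrays whose last axis is time (e.g., participants × samples).

```python
import numpy as np


def n2pc_window(times, grand_contra, search=(0.15, 0.35), width=0.100):
    """(start, end) of a `width`-s window centered on the most negative point
    of the grand-average contralateral waveform inside `search` (assumed range)."""
    times = np.asarray(times, dtype=float)
    grand_contra = np.asarray(grand_contra, dtype=float)
    idx = np.flatnonzero((times >= search[0]) & (times <= search[1]))
    t_peak = times[idx[np.argmin(grand_contra[idx])]]
    return t_peak - width / 2, t_peak + width / 2


def window_mean(times, waveforms, window):
    """Mean amplitude inside `window` along the last (time) axis."""
    times = np.asarray(times, dtype=float)
    sel = (times >= window[0]) & (times <= window[1])
    return np.asarray(waveforms, dtype=float)[..., sel].mean(axis=-1)
```

Given participant-level `contra` and `ipsi` arrays, one would compute `win = n2pc_window(times, contra.mean(axis=0))` and then `window_mean(times, contra, win)` and `window_mean(times, ipsi, win)`, which yields one contralateral and one ipsilateral value per participant for the ANOVA.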

This analysis revealed a significant interaction of color congruency and laterality, F(1,17) = 5.66, p = .029, ηp² = .25. This interaction was examined further by analyzing the effect of laterality at each level of color congruency. For congruent colors, a paired t-test revealed that mean amplitudes were significantly more negative contralateral than ipsilateral to the target, constituting the N2pc, t(17) = 2.94, p = .009, d = .24. For incongruent colors, there was no significant difference between the contralateral and ipsilateral amplitudes (p = .52). This finding suggests that color imagery influenced the processes responsible for attentional guidance: when color imagery matched the color of the target, attention was efficiently guided to the target location. In contrast, when color imagery matched the distractors, attention was no longer efficiently guided to the target location.
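Because both factors have two levels, the congruency × laterality interaction in this within-subject ANOVA is equivalent to a paired t-test on the difference scores (contralateral − ipsilateral) for congruent versus incongruent colors, with F(1, N − 1) = t². The sketch below makes that equivalence explicit and adds the follow-up laterality t-tests; the function name and argument layout (one value per participant in each array) are assumptions for illustration.

```python
import numpy as np
from scipy.stats import ttest_rel


def congruency_by_laterality(contra_con, ipsi_con, contra_inc, ipsi_inc):
    """2 x 2 within-subject interaction via difference scores, plus simple effects."""
    contra_con, ipsi_con, contra_inc, ipsi_inc = (
        np.asarray(x, dtype=float) for x in (contra_con, ipsi_con, contra_inc, ipsi_inc)
    )
    n2pc_con = contra_con - ipsi_con   # negative values indicate an N2pc
    n2pc_inc = contra_inc - ipsi_inc
    inter = ttest_rel(n2pc_con, n2pc_inc)
    return {
        "interaction_F": inter.statistic ** 2,   # equals the ANOVA interaction F
        "interaction_p": inter.pvalue,
        "df": (1, len(n2pc_con) - 1),
        # Follow-up tests of laterality within each congruency condition.
        "congruent": ttest_rel(contra_con, ipsi_con),
        "incongruent": ttest_rel(contra_inc, ipsi_inc),
    }
```

The identity F = t² holds only for single-degree-of-freedom effects like this one; designs with more than two levels per factor need a full repeated-measures ANOVA.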

Subjective estimates of visual imagery

We conducted further exploratory analyses to evaluate whether the imagery congruency effect was modulated by subjective estimates of visual imagery. First, we assessed whether the trial-by-trial vividness ratings affected the imagery effect and attentional guidance. To do so, the “no imagery” and “low/moderate imagery” vividness ratings were combined into an “other” vividness category, which was compared with the “high” vividness category. The ratings were collapsed in this way to ensure a sufficient number of observations per cell. Even so, five participants were removed from this analysis due to empty cells (i.e., they did not provide both other and high vividness ratings in both the congruent and incongruent color conditions). Second, we evaluated whether the post-experiment estimates of the frequency with which participants implemented the imagery instruction affected attentional guidance. To do this, we performed a split-half analysis that separated the half of participants with the lowest reported imagery use from the half with the highest, and compared these groups.
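The rating collapse and the empty-cell exclusion rule can be sketched in plain Python. The sketch assumes trial records of the form (participant, congruency, rating), with ratings coded 1 = no imagery, 2 = low/moderate, and 3 = high as in the Procedure; the function names are hypothetical. A participant is retained only if all four congruency × vividness cells contain at least one trial.

```python
from collections import defaultdict

CONGRUENCY = ("congruent", "incongruent")
VIVIDNESS = ("high", "other")


def collapse_rating(rating):
    """Map the 3-point rating onto the two analysis categories."""
    return "high" if rating == 3 else "other"


def complete_participants(trials):
    """IDs of participants with at least one trial in every congruency x vividness cell."""
    seen = defaultdict(set)
    for pid, congruency, rating in trials:
        seen[pid].add((congruency, collapse_rating(rating)))
    needed = {(c, v) for c in CONGRUENCY for v in VIVIDNESS}
    return sorted(pid for pid, cells in seen.items() if needed <= cells)
```

Applied to the present data, this rule is what reduces the sample for the vividness analyses, hence the F(1,12) degrees of freedom below.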

Vividness rating behavioral analyses

Mean RTs and corresponding error rates were submitted to a within-subject ANOVA that treated color congruency (congruent/incongruent) and vividness rating (other/high) as factors. The analysis of RTs revealed no interaction of color congruency and vividness rating (p = .90). There was, however, a significant main effect of color congruency, F(1,12) = 37.1, p < .001, ηp² = .76, reflecting faster responses in the congruent (1,043 ms) than incongruent (1,223 ms) color condition. There was also a significant main effect of vividness rating, F(1,12) = 24.2, p < .001, ηp² = .67, reflecting faster responses when high (1,154 ms) rather than other (1,202 ms) vividness ratings were reported. The left panel of Fig. 3 depicts the mean RTs for the high and other vividness ratings.

The left panel depicts the mean response times and error percentages for the congruent and incongruent color conditions for each of the high and other vividness rating categories. The right panel depicts the mean N2pc amplitudes, which were computed by subtracting the mean ipsilateral electrical activity from the mean contralateral electrical activity within the designated N2pc time window. Error bars in both panels represent the standard error of the mean corrected for between-subjects variability (Cousineau, 2005; Morey, 2008).

Interestingly, there was a significant interaction of color congruency and vividness rating in the analysis of error rates, F(1,12) = 8.06, p < .016, ηp² = .42. This interaction was explored further by evaluating the effect of color congruency for the high and other vividness ratings separately. For the high vividness ratings, there was no significant difference between the error percentages of the congruent (2.8%) and incongruent (3.8%) color conditions (p = .38). For the other vividness ratings, there was a significant effect of color congruency, t(12) = 3.30, p = .007, d = .92, reflecting higher error percentages in the congruent (9.1%) than incongruent (3.3%) color condition. Together, the results of the RT and error rate analyses indicate a speed-accuracy trade-off. That is, when participants generated imagery with relatively low vividness, there appeared to be a criterion shift toward responding quickly at the risk of making errors in the imagery-congruent relative to the imagery-incongruent condition. It may be that imagery with relatively low vividness hindered responding to imagery-congruent targets by producing a form of habituation like that underlying some negative repetition effects (see Cochrane & Milliken, 2020). Alternatively, and not mutually exclusively, participants may have used the vividness ratings to reflect their performance more generally; that is, making an error in the congruent color condition may have led participants to report relatively low imagery vividness. While both possibilities are viable, it is noteworthy that we have consistently observed larger imagery congruency effects for high than for low vividness ratings in published and unpublished studies (e.g., Cochrane & Milliken, 2019; Cochrane, Nwabuike, et al., 2018). Further, this pattern of results was present when imagery vividness was cued prior to a visual search task, when the ratings could not be used to reflect performance (Cochrane, Ng, Khosla, & Milliken, submitted; see also Pearson, Rademaker, & Tong, 2011).

Vividness rating N2pc analyses

Mean N2pc amplitudes (computed as described above) were submitted to a within-subject ANOVA that treated color congruency (congruent/incongruent), laterality (contralateral/ipsilateral), and vividness rating (other/high) as factors. The three-way interaction of color congruency, laterality, and vividness rating was not significant (p = .09). Given our a priori interests, we conducted additional exploratory analyses to evaluate whether N2pc amplitudes differed between the high and other vividness ratings. For the high vividness ratings, although the interaction of congruency and laterality was not significant (p = .10), follow-up analyses revealed a significant N2pc for congruent colors, t(12) = 2.62, p = .02, d = .63, and a non-significant pattern in the opposite direction for incongruent colors (p = .46). In other words, when color imagery was vivid and matched the target, attention was guided to the target location. When color imagery was vivid and matched the distractors, attention appeared to be guided mostly to the visual hemifield opposite the target (i.e., the hemifield containing two of the three distractors). This pattern was not observed for the other vividness ratings (p = .30); instead, there was a non-significant trend toward an N2pc in the congruent color condition (p = .11) and a slight numerical trend toward an N2pc in the incongruent color condition (p = .74). The right panel of Fig. 3 depicts the N2pc amplitudes for the high and other vividness ratings.

Estimates of imagery use N2pc analyses

Here we explored whether the amplitude of the N2pc (computed as described above) was associated with the post-experiment estimates of the frequency with which participants implemented the imagery instruction. Participants were split into high and low groups based on their percentage estimates of imagery use. The mean estimate was 82.5% for the high group and 54.4% for the low group. Separate mixed-factor ANOVAs were then conducted for the congruent and incongruent colors, treating laterality (contralateral/ipsilateral) as a within-subject factor and imagery group (high/low) as a between-subjects factor. For the congruent colors, the interaction of laterality and imagery group was not significant (p = .09). Given our a priori interest in whether N2pc amplitude was associated with the frequency of imagery use, we examined this interaction further by analyzing the simple main effects of laterality for the high and low imagery groups separately. For the high imagery group, there was a significant N2pc, that is, a greater negative amplitude contralateral than ipsilateral to the target, t(8) = 4.04, p = .004, d = .24. For the low imagery group, there was no effect of laterality (p = .42). The analysis of incongruent colors revealed no significant effects (all Fs < 1). In other words, the strength with which color imagery guided attention was associated with the post-experiment estimates of imagery instruction use, much as it was with the trial-by-trial vividness ratings. Assuming that the low imagery participants were as aware of the color imagery cues as the high imagery participants, this finding suggests that the attentional guidance effects observed here were due to color imagery rather than to a color expectancy that was not visual in nature.
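The split-half grouping and the group × laterality interaction can be sketched as below. With two laterality levels, the interaction in this mixed ANOVA is equivalent to a Student’s (pooled-variance) independent-samples t-test on the contralateral − ipsilateral difference scores, with F(1, N − 2) = t². The tie-breaking rule (stable sort by original order) and placing the extra participant in the upper half when N is odd are assumptions not stated in the text, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import ttest_ind, ttest_rel


def split_half(estimates):
    """Indices of the lower and upper halves of the imagery-use estimates."""
    order = np.argsort(np.asarray(estimates, dtype=float), kind="stable")
    half = len(order) // 2
    return order[:half], order[half:]  # (low group, high group)


def laterality_by_group(contra, ipsi, low_idx, high_idx):
    """Group x laterality interaction via difference scores, plus per-group N2pc tests."""
    contra = np.asarray(contra, dtype=float)
    ipsi = np.asarray(ipsi, dtype=float)
    diff = contra - ipsi
    inter = ttest_ind(diff[high_idx], diff[low_idx])  # Student's t, pooled variance
    return {
        "interaction_F": inter.statistic ** 2,
        "interaction_p": inter.pvalue,
        "high_n2pc": ttest_rel(contra[high_idx], ipsi[high_idx]),
        "low_n2pc": ttest_rel(contra[low_idx], ipsi[low_idx]),
    }
```

With 18 participants, this yields two groups of nine, so each simple-effect test has 8 degrees of freedom.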

General discussion

The purpose of the present study was to evaluate whether color imagery affected the processes underlying attentional guidance. There were three key results. First, color imagery prior to a singleton search task resulted in faster responses when it was congruent than when it was incongruent with the target, supporting the notion that color imagery can affect visual search performance. Second, and most importantly, congruent color imagery elicited an N2pc, suggesting that color imagery affected the processes underlying attentional guidance. Further, the N2pc was absent when color imagery was incongruent with the target (i.e., congruent with the distractors), suggesting that attention was guided to the distractor locations instead. Third, there was preliminary evidence that subjective estimates of visual imagery were associated with the N2pc; that is, the N2pc was present only when color imagery vividness was reported as high and for participants who reported frequently implementing the imagery instruction across the experimental session. Overall, this study demonstrates the novel finding that color imagery can affect attentional guidance.

A finding of the present study that warrants further discussion is the absence of the N2pc in the color incongruent condition. We suspect that the N2pc was absent here because, when participants generated color imagery that matched the distractors, attention was guided to one of the distractor locations. Importantly, two of the three distractors were located in the visual hemifield opposite the target, meaning that the negativity typically constituting the N2pc would tend to emerge ipsilateral rather than contralateral to the target. It is also likely that on some incongruent trials attention was guided to the distractor in the target’s hemifield or to the singleton target itself, which may explain why we observed no overall lateralized difference rather than a reversed pattern. It is also conceivable that incongruent trials reflected the summed electrical activity of distractor and target selection; that is, participants may have selected a distractor and then the target, producing roughly equivalent contralateral and ipsilateral activity.

The present findings have important implications for another phenomenon known as the Priming of Pop-out (PoP) effect. The PoP effect refers to the robust finding that observers respond faster when the target and distractor colors are repeated rather than switched across trials of a color singleton search task (Maljkovic & Nakayama, 1994). While there are competing theories on the precise nature of the PoP effect (for a review, see Kristjánsson & Campana, 2010), it is typically suggested that the effect is due to the formation of a memory trace that incorporates target and distractor representations (although see Cochrane & Pratt, 2020). When the target and distractor colors are repeated across trials, search performance is enhanced because the memory trace of the previous trial is automatically retrieved and matches the current display. Responding is slowed when the target and distractor colors are switched because the retrieved trace is uninformative (or misleading) about the identities of the target and distractors.

While at a surface level the PoP and imagery congruency effects are quite different phenomena, it may be the case that the process responsible for storing these color representations is one and the same. A study supporting this notion is that of Eimer, Kiss, and Cheung (2010), which showed larger N2pc amplitudes when the target and distractor colors were repeated than when they were switched across trials (see also Feldmann-Wüstefeld & Schubö, 2016; Kristjánsson, Vuilleumier, Schwartz, Macaluso, & Driver, 2007; Töllner, Gramann, Müller, Kiss, & Eimer, 2008). In other words, the representations underlying the PoP effect and the imagery congruency effect appear to affect attentional guidance in a similar manner. These similar findings raise the question: are the representations driving the PoP effect and the imagery congruency effect one and the same? We suspect that the answer to this question is “yes.” While the representations underlying the PoP and imagery congruency effects clearly emerge in different ways, we suspect that they are subserved by the same memory system. That is, how an object comes to be represented in the brain (whether through a passive memory trace or actively generated with imagery) appears to be unimportant to attentional guidance; what matters is that identity information is sufficiently represented in this memory system (see also Wolfe, 1994; Wolfe, Cave, & Franzel, 1989). This is to say that the frequently formulated dichotomy between selection history and top-down attentional guidance may be rather arbitrary; selection history and top-down biases may simply reflect different ways of affecting a single memory system that influences attentional guidance.

A separate but related issue concerns the automaticity with which color imagery influences attentional guidance. While generating visual imagery (at least in the context of the present experiment) is clearly a willful and deliberate action, it is possible that once color imagery is sufficiently represented, it automatically primes the observer, much like perceptual objects do (see also Soto, Heinke, Humphreys, & Blanco, 2005; Soto, Humphreys, & Heinke, 2006). To illustrate, the imagery procedure used here could plausibly aid visual search in two ways: by clarifying the target and by heightening the saliency of relevant features. For example, if you were looking for your friend in a crowd, knowing what they were wearing may help you find them. Alternatively, imagery may increase the saliency of congruent features independent of the observer’s specific goals; for example, visualizing your friend’s red hat may automatically direct your attention to all red items in the crowd. While the present study did not directly evaluate this issue, a provocative finding was that, when queried, the majority of participants reported that they could not help but attend to stimuli that matched their color imagery, supporting the notion that imagery automatically heightens the saliency of imagery-congruent features in the search array. However, this finding neither rules out the possibility that imagery also helped to clarify the target goal nor establishes whether such target clarification depends on imagery at all. That is, while imagery appears to affect performance above and beyond semantic and verbal color representations (Cochrane & Milliken, 2019, 2020; Cochrane, Nwabuike, et al., 2018), it is possible that semantic representations (via the imagery cue) contributed to the search performance benefit observed in the present study.

A parallel issue concerns the integration of imagery into the perceptual environment. Chang, Lewis, and Pearson (2013) explored this issue using a binocular rivalry task in which different perceptual colors were presented to each eye following the generation of color imagery. While it has been robustly demonstrated that ocular dominance is biased toward the eye in which the imagery-congruent perceptual color is presented (Pearson, Clifford, & Tong, 2008; Pearson, Rademaker, & Tong, 2011; Sherwood & Pearson, 2010), Chang, Lewis, and Pearson showed that this effect depended on imagery being generated at the same location as the perceptual stimulus. That is, perception was biased toward imagery-congruent colors only when imagery was localized there, suggesting that these imagery effects had a retinotopic basis. Interestingly, while these imagery-based ocular dominance effects appear to depend on the overlap of imagery and perception in the same visual space, we have observed that imagery-based search effects do not depend on this overlap. In a series of experiments, imagery congruency effects (like those reported here) were present when color imagery was generated in a central box that was never the location of the target (Cochrane, Siddhpuria, & Milliken, 2019). Accordingly, we suspect that imagery can affect a variety of different processes along the visual processing pipeline. That is, while location-specific priming effects (like those of Chang, Lewis, & Pearson, 2013) may depend on imagery being spatially localized, those associated with attentional guidance likely do not.

Conclusions

The present study demonstrated that color imagery can affect attentional guidance during visual search, as reflected by the N2pc component. There was also preliminary evidence that attentional guidance depended on the vividness of color imagery and the frequency with which participants implemented the imagery instruction. Overall, the present study showed that representations in the “mind’s eye” can have a profound impact on our ability to search visual environments.

Open practices statement

The experiments reported in this article were not preregistered. The data for all experiments are publicly available on the Center for Open Science website (osf.io/ntbf6). Requests for materials can be sent via email to the corresponding author at brett.cochrane@utoronto.ca.

Notes

All Cohen’s d values reported in this manuscript were based on an aggregate measure of performance in each condition for each participant.
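The note does not state which standardizer was used for Cohen’s d. As a hedged illustration of what “an aggregate measure of performance in each condition for each participant” involves, the sketch below assumes Cohen’s d_z, one common choice for within-subject designs: trial-level response times are first averaged into one value per participant per condition, and d_z is the mean of the per-participant difference scores divided by their standard deviation. The data, condition labels, and function names are hypothetical and are not taken from the study.

```python
from statistics import mean, stdev

# Hypothetical trial-level data: (participant, condition, RT in ms).
# Illustrative values only; these are NOT the study's data.
trials = [
    ("p1", "congruent", 520), ("p1", "congruent", 540),
    ("p1", "incongruent", 600), ("p1", "incongruent", 620),
    ("p2", "congruent", 500), ("p2", "congruent", 520),
    ("p2", "incongruent", 560), ("p2", "incongruent", 580),
    ("p3", "congruent", 550), ("p3", "congruent", 570),
    ("p3", "incongruent", 650), ("p3", "incongruent", 670),
]


def participant_means(trials, condition):
    """Aggregate trial-level RTs into one mean RT per participant for a condition."""
    by_participant = {}
    for pid, cond, rt in trials:
        if cond == condition:
            by_participant.setdefault(pid, []).append(rt)
    return {pid: mean(rts) for pid, rts in by_participant.items()}


def cohens_dz(trials, cond_a, cond_b):
    """Assumed d_z: mean of per-participant differences (cond_b - cond_a) / their SD."""
    a = participant_means(trials, cond_a)
    b = participant_means(trials, cond_b)
    # Only participants with data in both conditions contribute a difference score.
    diffs = [b[pid] - a[pid] for pid in sorted(a) if pid in b]
    return mean(diffs) / stdev(diffs)  # sample SD (n - 1 denominator)


print(round(cohens_dz(trials, "congruent", "incongruent"), 2))  # 4.0
```

Because each participant contributes a single aggregate per condition, trial counts do not inflate the effect size; other standardizers (e.g., the pooled SD of condition means) would yield different values from the same aggregates.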

References

Baier, D., & Ansorge, U. (2019). Investigating the role of verbal templates in contingent capture by color. Attention, Perception, & Psychophysics, 81(6), 1846-1879.

Chang, S., Lewis, D. E., & Pearson, J. (2013). The functional effects of color perception and color imagery. Journal of Vision, 13(10), 1-10.

Cochrane, B. A., & Milliken, B. (2019). Imagined event files: An interplay between imagined and perceived objects. Psychonomic Bulletin & Review, 26(2), 538-544.

Cochrane, B. A., & Milliken, B. (2020). The representational basis of positive and negative repetition effects. Journal of Experimental Psychology: Human Perception and Performance, 46(3), 252-263.

Cochrane, B. A., & Pratt, J. (2020). Re-examining Maljkovic and Nakayama (1994): Conscious expectancy does affect the priming of pop-out effect. Attention, Perception, & Psychophysics, 82(5), 2693-2702.

Cochrane, B. A., Ng, V., Khosla, A., & Milliken, B. (submitted). Looking into the Mind’s Eye: Directed and evaluated imagery vividness modulates imagery-perception congruency effects. Psychonomic Bulletin & Review, PBR-BR-20-330.

Cochrane, B. A., Nwabuike, A. A., Thomson, D. R., & Milliken, B. (2018). An imagery-induced reversal of intertrial priming in visual search. Journal of Experimental Psychology: Learning, Memory, and Cognition, 44(4), 572-587.

Cochrane, B. A., Siddhpuria, S., & Milliken, B. (2019). Cueing color imagery: A critical analysis of imagery-perception congruency effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(8), 1410-1421.

Cochrane, B. A., Zhu, H., & Milliken, B. (2018). Strategic visual imagery and automatic priming effects in pop-out visual search. Consciousness and Cognition, 65, 59-70.

Cousineau, D. (2005). Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson’s method. Tutorials in Quantitative Methods for Psychology, 1(1), 42-45.

Delorme, A., & Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9-21.

Drisdelle, B. L., Aubin, S., & Jolicoeur, P. (2017). Dealing with ocular artifacts on lateralized ERPs in studies of visual-spatial attention and memory: ICA correction versus epoch rejection. Psychophysiology, 54(1), 83-99.

Eimer, M. (1996). The N2pc component as an indicator of attentional selectivity. Electroencephalography and Clinical Neurophysiology, 99(3), 225-234.

Eimer, M., Kiss, M., & Cheung, T. (2010). Priming of pop-out modulates attentional target selection in visual search: Behavioural and electrophysiological evidence. Vision Research, 50(14), 1353-1361.

Feldmann-Wüstefeld, T., & Schubö, A. (2016). Intertrial priming due to distractor repetition is eliminated in homogenous contexts. Attention, Perception, & Psychophysics, 78(7), 1935-1947.

Kawashima, T., & Matsumoto, E. (2017). Cognitive control of attentional guidance by visual and verbal working memory representations. Japanese Psychological Research, 59(1), 49-57.

Kosslyn, S. M. (1996). Image and Brain: The Resolution of the Imagery Debate. Cambridge, MA: MIT Press.

Kristjánsson, Á., & Campana, G. (2010). Where perception meets memory: A review of repetition priming in visual search tasks. Attention, Perception, & Psychophysics, 72(1), 5-18.

Kristjánsson, Á., Vuilleumier, P., Schwartz, S., Macaluso, E., & Driver, J. (2007). Neural basis for priming of pop-out during visual search revealed with fMRI. Cerebral Cortex, 17(7), 1612-1624.

Lopez-Calderon, J., & Luck, S. J. (2014). ERPLAB: An open-source toolbox for the analysis of event-related potentials. Frontiers in Human Neuroscience, 8, 213.

Luck, S. J. (2011). Electrophysiological correlates of the focusing of attention within complex visual scenes: N2pc and the related ERP components. In S. J. Luck & E. S. Kappenman (Eds.), The Oxford Handbook of Event-Related Potential Components (pp. 329-360). New York, NY: Oxford University Press.

Luck, S. J., & Hillyard, S. A. (1990). Electrophysiological evidence for parallel and serial processing during visual search. Perception & Psychophysics, 48(6), 603-617.

Luck, S. J., & Hillyard, S. A. (1994a). Electrophysiological correlates of feature analysis during visual search. Psychophysiology, 31(3), 291-308.

Luck, S. J., & Hillyard, S. A. (1994b). Spatial filtering during visual search: Evidence from human electrophysiology. Journal of Experimental Psychology: Human Perception and Performance, 20(5), 1000-1014.

Maljkovic, V., & Nakayama, K. (1994). Priming of pop-out: I. Role of features. Memory & Cognition, 22(6), 657-672.

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4(2), 61-64.

Moriya, J. (2018). Visual mental imagery influences attentional guidance. Attention, Perception, & Psychophysics, 80(5), 1127-1142.

Müller, H. J., & Krummenacher, J. (2006). Locus of dimension weighting: Preattentive or postselective? Visual Cognition, 14(4-8), 490-513.

Pearson, J., & Kosslyn, S. M. (2015). The heterogeneity of mental representation: Ending the imagery debate. Proceedings of the National Academy of Sciences of the United States of America, 112(33), 10089-10092.

Pearson, J., Clifford, C. W. G., & Tong, F. (2008). The functional impact of mental imagery on conscious perception. Current Biology, 18(13), 982-986.

Pearson, J., Rademaker, R. L., & Tong, F. (2011). Evaluating the Mind’s Eye: The metacognition of visual imagery. Psychological Science, 22(12), 1535-1542.

Pylyshyn, Z. W. (1973). What the mind’s eye tells the mind’s brain: A critique of mental imagery. Psychological Bulletin, 80(1), 1-24.

Pylyshyn, Z. W. (2003). Return of the mental image: Are there really pictures in the brain? Trends in Cognitive Sciences, 7(3), 113-118.

Reinhart, R. M. G., McClenahan, L. J., & Woodman, G. F. (2015). Visualizing trumps vision in training attention. Psychological Science, 26(7), 1114-1122.

Sherwood, R., & Pearson, J. (2010). Closing the mind’s eye: Incoming luminance signals disrupt visual imagery. PLoS One, 5(12), e15217.

Soto, D., Heinke, D., Humphreys, G. W., & Blanco, M. J. (2005). Early, involuntary top-down guidance of attention from working memory. Journal of Experimental Psychology: Human Perception and Performance, 31(2), 248-261.

Soto, D., Humphreys, G. W., & Heinke, D. (2006). Working memory can guide pop-out search. Vision Research, 46(6-7), 1010-1018.

Theeuwes, J., Reimann, B., & Mortier, K. (2006). Visual search for featural singletons: No top-down modulation, only bottom-up priming. Visual Cognition, 14(4-8), 466-489.

Töllner, T., Gramann, K., Müller, H. J., Kiss, M., & Eimer, M. (2008). Electrophysiological markers of visual dimension changes and response changes. Journal of Experimental Psychology: Human Perception and Performance, 34(3), 531-542.

Tye, M. (1991). The Imagery Debate. Cambridge, MA: MIT Press.

Wantz, A. L., Borst, G., Mast, F. W., & Lobmaier, J. S. (2015). Colors in mind: A novel paradigm to investigate pure color imagery. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(4), 1152-1161.

Wolfe, J. M. (1994). Guided search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1(2), 202-238.

Wolfe, J. M., Butcher, S. J., Lee, C., & Hyle, M. (2003). Changing your mind: On the contributions of top-down and bottom-up guidance in visual search for feature singletons. Journal of Experimental Psychology: Human Perception and Performance, 29(2), 483-502.

Wolfe, J. M., Cave, K. R., & Franzel, S. L. (1989). Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance, 15(3), 419-433.

Acknowledgements

Financial support for this study was provided in part by a Natural Sciences and Engineering Research Council of Canada Discovery Grant awarded to Bruce Milliken. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report. The authors report no conflicts of interest.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Cite this article

Cochrane, B.A., Townsend, B., Lee, R.C. et al. Visual imagery influences attentional guidance during visual search: Behavioral and electrophysiological evidence. Atten Percept Psychophys 83, 58–66 (2021). https://doi.org/10.3758/s13414-020-02208-7


DOI: https://doi.org/10.3758/s13414-020-02208-7