TAG-Net: Target Attitude Angle-Guided Network for Ship Detection and Classification in SAR Images

Abstract

1. Introduction

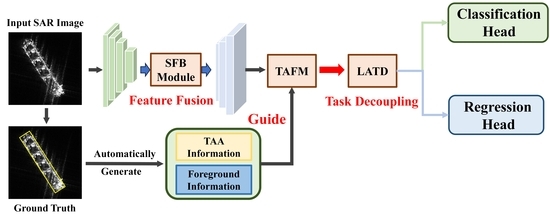

- To address the challenge of detecting and classifying targets whose SAR appearance varies considerably with the target attitude angle (TAA), we propose a TAA-aware feature modulation (TAFM) module. It uses TAA and foreground information as guidance and applies an adaptive feature-level fusion strategy to dynamically learn more representative features. This module reduces intra-class variation, increases inter-class separability, and improves ship localization accuracy under various imaging conditions.

- Because detection and classification place different demands on scattering information, we design a layer-wise attention-based task decoupling detection head (LATD). It extracts multi-level features through stacked convolutional layers and uses layer attention to adaptively select the most suitable features for each task, thereby improving overall accuracy.

- We introduce a salient-enhanced feature balance (SFB) module that uses adaptive dynamic fusion to balance multi-size features, providing high-resolution and semantically rich features for ships of different scales. It also highlights ship targets by extracting global context from inter-channel dependencies, which mitigates the impact of background interference.

2. Related Work

2.1. Traditional Ship Detection and Classification Methods in SAR Images

2.2. Deep Learning-Based Ship Detection and Classification Methods in SAR Images

3. Proposed Method

3.1. Overall Scheme of the Proposed Method

3.2. TAA-Aware Feature Modulation Module (TAFM)

3.3. Layer-Wise Attention-Based Task Decoupling Detection Head (LATD)

3.4. Salient-Enhanced Feature Balance Module (SFB)

3.5. Loss Function

4. Experiments and Results

4.1. Dataset and Settings

4.2. Evaluation Metrics

4.3. Ablation Studies

4.4. Comparison with CNN-Based Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ma, X.; Hou, S.; Wang, Y.; Wang, J.; Wang, H. Multiscale and dense ship detection in SAR images based on key-point estimation and attention mechanism. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5221111.

- Ke, X.; Zhang, X.; Zhang, T. GCBANET: A global context boundary-aware network for SAR ship instance segmentation. Remote Sens. 2022, 14, 2165.

- Jung, J.; Yun, S.H.; Kim, D.J.; Lavalle, M. Damage-Mapping Algorithm Based on Coherence Model Using Multitemporal Polarimetric–Interferometric SAR Data. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1520–1532.

- Akbari, V.; Doulgeris, A.P.; Eltoft, T. Monitoring Glacier Changes Using Multitemporal Multipolarization SAR Images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3729–3741.

- Sun, Y.; Hua, Y.; Mou, L.; Zhu, X.X. CG-Net: Conditional GIS-Aware Network for Individual Building Segmentation in VHR SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5201215.

- Tan, W.; Li, J.; Xu, L.; Chapman, M.A. Semiautomated Segmentation of Sentinel-1 SAR Imagery for Mapping Sea Ice in Labrador Coast. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1419–1432.

- Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense Attention Pyramid Networks for Multi-Scale Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8983–8997.

- Zhang, T.; Zhang, X. High-speed ship detection in SAR images based on a grid convolutional neural network. Remote Sens. 2019, 11, 1206.

- Joshi, S.K.; Baumgartner, S.V.; da Silva, A.B.; Krieger, G. Range-Doppler based CFAR ship detection with automatic training data selection. Remote Sens. 2019, 11, 1270.

- Ai, J.; Qi, X.; Yu, W.; Deng, Y.; Liu, F.; Shi, L. A new CFAR ship detection algorithm based on 2-D joint log-normal distribution in SAR images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 806–810.

- Weiss, M. Analysis of some modified cell-averaging CFAR processors in multiple-target situations. IEEE Trans. Geosci. Remote Sens. 1982, 1, 102–114.

- Hansen, V.G. Constant false alarm rate processing in search radars. IEEE Radar-Present Future 1973, 20, 325–332.

- Knapskog, A.O. Classification of ships in TerraSAR-X images based on 3D models and silhouette matching. In Proceedings of the 8th European Conference on Synthetic Aperture Radar, Aachen, Germany, 7–10 June 2010; pp. 1–4.

- Wang, C.; Zhang, H.; Wu, F.; Jiang, S.; Zhang, B.; Tang, Y. A novel hierarchical ship classifier for COSMO-SkyMed SAR data. IEEE Geosci. Remote Sens. Lett. 2013, 11, 484–488.

- Lang, H.; Zhang, J.; Zhang, X.; Meng, J. Ship classification in SAR image by joint feature and classifier selection. IEEE Geosci. Remote Sens. Lett. 2015, 13, 212–216.

- Goldstein, G. False-alarm regulation in log-normal and Weibull clutter. IEEE Trans. Geosci. Remote Sens. 1973, 9, 84–92.

- Novak, L.M.; Owirka, G.J.; Brower, W.S.; Weaver, A.L. The automatic target-recognition system in SAIP. Linc. Lab. J. 1997, 10, 187–202.

- Zhu, M.; Hu, G.; Zhou, H.; Wang, S. Multiscale ship detection method in SAR images based on information compensation and feature enhancement. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5117913.

- Tang, G.; Zhao, H.; Claramunt, C.; Zhu, W.; Wang, S.; Wang, Y.; Ding, Y. PPA-Net: Pyramid Pooling Attention Network for Multi-Scale Ship Detection in SAR Images. Remote Sens. 2023, 15, 2855.

- Sun, Z.; Dai, M.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. An anchor-free detection method for ship targets in high-resolution SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7799–7816.

- Shang, Y.; Pu, W.; Wu, C.; Liao, D.; Xu, X.; Wang, C.; Huang, Y.; Zhang, Y.; Wu, J.; Yang, J.; et al. HDSS-Net: A Novel Hierarchically Designed Network With Spherical Space Classifier for Ship Recognition in SAR Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5222420.

- Guan, Y.; Zhang, X.; Chen, S.; Liu, G.; Jia, Y.; Zhang, Y.; Gao, G.; Zhang, J.; Li, Z.; Cao, C. Fishing Vessel Classification in SAR Images Using a Novel Deep Learning Model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5215821.

- Alonso, M.T.; López-Martínez, C.; Mallorquí, J.J.; Salembier, P. Edge enhancement algorithm based on the wavelet transform for automatic edge detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2010, 49, 222–235.

- Baselice, F.; Ferraioli, G. Unsupervised coastal line extraction from SAR images. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1350–1354.

- Zhou, W.; Xie, J.; Li, G.; Du, Y. Robust CFAR detector with weighted amplitude iteration in nonhomogeneous sea clutter. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 1520–1535.

- Ao, W.; Xu, F.; Li, Y.; Wang, H. Detection and discrimination of ship targets in complex background from spaceborne ALOS-2 SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 536–550.

- Leng, X.; Ji, K.; Xing, X.; Zhou, S.; Zou, H. Area ratio invariant feature group for ship detection in SAR imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2376–2388.

- Chen, W.t.; Ji, K.f.; Xing, X.w.; Zou, H.x.; Sun, H. Ship recognition in high resolution SAR imagery based on feature selection. In Proceedings of the 2012 International Conference on Computer Vision in Remote Sensing (CVRS 2012), Xiamen, China, 16–18 December 2012; pp. 301–305.

- Yin, D.; Hu, L.; Li, B.; Zhang, Y. Adapter is All You Need for Tuning Visual Tasks. arXiv 2023, arXiv:2311.15010.

- Ke, X.; Zhang, T.; Shao, Z. Scale-aware dimension-wise attention network for small ship instance segmentation in synthetic aperture radar images. J. Appl. Remote Sens. 2023, 17, 046504.

- Zhu, Y.; Guo, P.; Wei, H.; Zhao, X.; Wu, X. Disentangled Discriminator for Unsupervised Domain Adaptation on Object Detection. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 5685–5691.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1440–1448.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29, 379–387.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 9627–9636.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise separable convolution neural network for high-speed SAR ship detection. Remote Sens. 2019, 11, 2483.

- Wang, S.; Cai, Z.; Yuan, J. Automatic SAR Ship Detection Based on Multi-Feature Fusion Network in Spatial and Frequency Domain. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4102111.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An anchor-free method based on feature balancing and refinement network for multiscale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1331–1344.

- Zhang, T.; Zhang, X.; Liu, C.; Shi, J.; Wei, S.; Ahmad, I.; Zhan, X.; Zhou, Y.; Pan, D.; Li, J.; et al. Balance learning for ship detection from synthetic aperture radar remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 182, 190–207.

- Zhang, T.; Zhang, X. A polarization fusion network with geometric feature embedding for SAR ship classification. Pattern Recognit. 2022, 123, 108365.

- He, J.; Wang, Y.; Liu, H. Ship classification in medium-resolution SAR images via densely connected triplet CNNs integrating Fisher discrimination regularized metric learning. IEEE Trans. Geosci. Remote Sens. 2020, 59, 3022–3039.

- Wang, C.; Pei, J.; Luo, S.; Huo, W.; Huang, Y.; Zhang, Y.; Yang, J. SAR Ship Target Recognition via Multiscale Feature Attention and Adaptive-Weighed Classifier. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4003905.

- Zhu, H. Ship classification based on sidelobe elimination of SAR images supervised by visual model. In Proceedings of the 2021 IEEE Radar Conference (RadarConf21), Atlanta, GA, USA, 7–14 May 2021; pp. 1–6.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering realistic texture in image super-resolution by deep spatial feature transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 606–615.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 510–519.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS–improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

- Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding Vertex on the Horizontal Bounding Box for Multi-Oriented Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1452–1459.

- Han, J.; Ding, J.; Li, J.; Xia, G.S. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602511.

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9759–9768.

| TAFM Module | LATD | SFB Module | mAP | Params (M) |
|---|---|---|---|---|
| ✕ | ✕ | ✕ | 0.6792 | 28.40 |
| ✓ | ✕ | ✕ | 0.7179 | 30.77 |
| ✕ | ✓ | ✕ | 0.7207 | 32.83 |
| ✕ | ✕ | ✓ | 0.7079 | 30.18 |
| ✓ | ✓ | ✕ | 0.7296 | 34.93 |
| ✓ | ✓ | ✓ | 0.7391 | 36.71 |
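The ablation table can be read as incremental gains over the first row, which matches the Oriented CenterNet baseline (0.6792 mAP, 28.40 M parameters) in the comparison table. The short Python sketch below copies the table values and computes each configuration's mAP gain and parameter overhead; it only re-derives numbers already reported and introduces no new results.

```python
# Ablation results copied from the table above.
# Key: (TAFM, LATD, SFB) enabled flags -> (mAP, parameters in millions).
ABLATION = {
    (False, False, False): (0.6792, 28.40),
    (True, False, False): (0.7179, 30.77),
    (False, True, False): (0.7207, 32.83),
    (False, False, True): (0.7079, 30.18),
    (True, True, False): (0.7296, 34.93),
    (True, True, True): (0.7391, 36.71),
}
BASELINE = (False, False, False)
MODULES = ("TAFM", "LATD", "SFB")


def gain_over_baseline(config):
    """Return (mAP gain, extra parameters in M) of `config` relative to the baseline."""
    base_map, base_params = ABLATION[BASELINE]
    cfg_map, cfg_params = ABLATION[config]
    return round(cfg_map - base_map, 4), round(cfg_params - base_params, 2)


def label(config):
    """Human-readable name such as 'TAFM + LATD'."""
    enabled = [name for name, on in zip(MODULES, config) if on]
    return " + ".join(enabled) if enabled else "baseline"


if __name__ == "__main__":
    for config in ABLATION:
        d_map, d_params = gain_over_baseline(config)
        print(f"{label(config):<20} mAP {d_map:+.4f}  params {d_params:+.2f} M")
```

For example, the full model gains 0.0599 mAP for 8.31 M extra parameters, while the TAFM + LATD gain (0.0504) is smaller than the sum of the individual TAFM and LATD gains (0.0387 + 0.0415 = 0.0802), so the module contributions are not additive.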

| Models | BC | CS | OT | WS | RV | T0 | T1 | T2 | T3 | T4 | T5 | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.6651 | 0.5313 | 0.7941 | 0.4937 | 0.5318 | 0.6948 | 0.7617 | 0.8161 | 0.8335 | 0.5738 | 0.8875 | 0.6792 |
| TAFM | 0.6464 | 0.5479 | 0.7994 | 0.5110 | 0.5573 | 0.8136 | 0.7651 | 0.8474 | 0.8878 | 0.6746 | 0.8459 | 0.7179 |
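The mAP column of the per-class tables appears to be the unweighted mean of the eleven per-class AP values: averaging the TAFM row gives 0.7179, and the LATD and SFB per-class rows in this section likewise round to their reported 0.7207 and 0.7079. The Python sketch below makes that assumed definition explicit; the class abbreviations are copied from the table header and are not expanded here.

```python
# Per-class AP values copied from the per-class tables in this section.
CLASSES = ["BC", "CS", "OT", "WS", "RV", "T0", "T1", "T2", "T3", "T4", "T5"]
PER_CLASS_AP = {
    "TAFM": [0.6464, 0.5479, 0.7994, 0.5110, 0.5573, 0.8136,
             0.7651, 0.8474, 0.8878, 0.6746, 0.8459],
    "LATD": [0.6931, 0.5497, 0.7788, 0.5010, 0.5987, 0.7859,
             0.7682, 0.8632, 0.9039, 0.6333, 0.8519],
    "SFB": [0.6361, 0.6043, 0.7861, 0.4907, 0.5691, 0.7762,
            0.7498, 0.8362, 0.8321, 0.6844, 0.8216],
}


def mean_average_precision(per_class_ap):
    """Unweighted mean of per-class AP values (assumed definition of mAP)."""
    if len(per_class_ap) != len(CLASSES):
        raise ValueError("expected one AP value per class")
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    for model, ap in PER_CLASS_AP.items():
        print(f"{model}: mAP = {mean_average_precision(ap):.4f}")
```

Under this definition every class weighs equally, so a large gain on one class (e.g. T0, +0.1188 for TAFM) can offset small drops elsewhere (e.g. BC, -0.0187).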

| Supervised | Unsupervised | mAP |
|---|---|---|
| ✕ | ✕ | 0.6792 |
| ✕ | ✓ | 0.6892 |
| ✓ | ✕ | 0.7179 |

| First Step | Second Step | mAP |
|---|---|---|
| ⊕ | ⊕ | 0.7053 |
| ⊗ | ⊗ | 0.7080 |
| ⊗ | ⊕ | 0.7179 |
| ⊕ | ⊗ | 0.7123 |
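In the table above, the ⊗-then-⊕ ordering scores best (0.7179). Assuming ⊗ and ⊕ denote element-wise multiplication and addition, that ordering amounts to an affine modulation of the features, in the spirit of the spatial feature transform of Wang et al. [48]: features are first scaled by γ and then shifted by β, both predicted from guidance information. The Python sketch below illustrates only that idea. The guidance encoding (cosine and sine of the TAA plus a foreground probability), the linear predictors, and all names (`encode_guidance`, `modulate`) are hypothetical and are not the authors' TAFM implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(weight: Matrix, bias: Vector, x: Vector) -> Vector:
    """Affine map y = W x + b for one guidance vector x."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def encode_guidance(taa_deg: float, foreground: float) -> Vector:
    """Hypothetical guidance vector: (cos TAA, sin TAA, foreground probability).

    The sin/cos encoding maps angles that differ by 360 degrees to the same vector.
    """
    t = math.radians(taa_deg)
    return [math.cos(t), math.sin(t), foreground]


def modulate(features: Matrix, guidance: List[Vector],
             w_gamma: Matrix, b_gamma: Vector,
             w_beta: Matrix, b_beta: Vector) -> Matrix:
    """Two-step modulation: first multiply by gamma, then add beta.

    features: C x N feature map (channels x flattened positions).
    guidance: N guidance vectors, one per position.
    gamma and beta (length C each) are predicted from the guidance at each position.
    """
    channels, positions = len(features), len(features[0])
    out = [[0.0] * positions for _ in range(channels)]
    for i, g in enumerate(guidance):
        gamma = linear(w_gamma, b_gamma, g)  # first step: element-wise scale
        beta = linear(w_beta, b_beta, g)     # second step: element-wise shift
        for c in range(channels):
            out[c][i] = features[c][i] * gamma[c] + beta[c]
    return out


if __name__ == "__main__":
    feats = [[1.0, 2.0], [0.5, -1.0]]  # 2 channels, 2 positions
    guide = [encode_guidance(30.0, 0.9), encode_guidance(120.0, 0.1)]
    # Toy, untrained predictor weights (3 guidance inputs -> 2 channels).
    w_g, b_g = [[0.1, 0.0, 0.5], [0.0, 0.2, 0.5]], [1.0, 1.0]
    w_b, b_b = [[0.0, 0.0, 0.3], [0.0, 0.0, -0.3]], [0.0, 0.0]
    print(modulate(feats, guide, w_g, b_g, w_b, b_b))
```

With zero predictor weights, unit γ bias and zero β bias, the modulation reduces to the identity, which is a convenient initialization when such a module is inserted into a pretrained detector.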

| Loss | mAP |
|---|---|
| L1 Loss | 0.7113 |
| Smooth L1 Loss | 0.7179 |
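The loss comparison above favors Smooth L1 over L1 (0.7179 vs. 0.7113). This extract does not state which quantity is regressed; presumably it is the TAA supervision used by TAFM. For reference, the standard Smooth L1 loss with threshold β is quadratic for residuals smaller than β and linear beyond it, so its gradient shrinks as the residual approaches zero while large residuals keep L1's bounded gradient. A minimal Python sketch, with β = 1.0 as an assumed default:

```python
from typing import Sequence


def l1_loss(pred: float, target: float) -> float:
    """Absolute error |pred - target|."""
    return abs(pred - target)


def smooth_l1_loss(pred: float, target: float, beta: float = 1.0) -> float:
    """Smooth L1: 0.5 * d^2 / beta if d < beta, else d - 0.5 * beta, with d = |pred - target|."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    d = abs(pred - target)
    if d < beta:
        return 0.5 * d * d / beta
    return d - 0.5 * beta


def mean_loss(loss_fn, preds: Sequence[float], targets: Sequence[float]) -> float:
    """Average a per-element loss over a batch."""
    if len(preds) != len(targets) or not preds:
        raise ValueError("preds and targets must be non-empty and equal in length")
    return sum(loss_fn(p, t) for p, t in zip(preds, targets)) / len(preds)


if __name__ == "__main__":
    preds, targets = [0.1, 0.5, 3.0], [0.0, 0.0, 0.0]
    print("L1:", mean_loss(l1_loss, preds, targets))
    print("Smooth L1:", mean_loss(smooth_l1_loss, preds, targets))
```

Both branches meet at d = β with value 0.5β and slope 1, so the loss and its gradient are continuous; plain L1 instead has a constant-magnitude gradient even for tiny residuals.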

|  | 0.3 | 0.5 | 0.7 | 1 | 2 |
|---|---|---|---|---|---|
| mAP | 0.7076 | 0.7179 | 0.7120 | 0.7153 | 0.7106 |

| Models | BC | CS | OT | WS | RV | T0 | T1 | T2 | T3 | T4 | T5 | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.6651 | 0.5313 | 0.7941 | 0.4937 | 0.5318 | 0.6948 | 0.7617 | 0.8161 | 0.8335 | 0.5738 | 0.8875 | 0.6792 |
| LATD | 0.6931 | 0.5497 | 0.7788 | 0.5010 | 0.5987 | 0.7859 | 0.7682 | 0.8632 | 0.9039 | 0.6333 | 0.8519 | 0.7207 |

| Dilated Convolutional Layer | mAP |
|---|---|
| ✕ | 0.7091 |
| ✓ | 0.7207 |
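LATD is described as extracting multi-level features through stacked convolutional layers and letting each task select the most suitable levels through layer attention; the table above indicates that using dilated convolutions in that stack raises mAP from 0.7091 to 0.7207. The one-dimensional Python sketch below illustrates the general mechanism under our own assumptions: the dilation rates, the sigmoid-gated weighted sum over layers, and the per-task weight matrices are hypothetical choices for a generic layer-attention scheme, not the authors' LATD.

```python
import math
from typing import List

Signal = List[float]


def dilated_conv1d(x: Signal, kernel: Signal, dilation: int) -> Signal:
    """'Same'-length 1-D dilated cross-correlation with zero padding (odd kernel)."""
    if len(kernel) % 2 == 0 or dilation < 1:
        raise ValueError("kernel length must be odd and dilation >= 1")
    center = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + (j - center) * dilation  # taps spaced `dilation` apart
            if 0 <= idx < len(x):
                acc += w * x[idx]
        out.append(acc)
    return out


def stacked_features(x: Signal, kernel: Signal, dilations: List[int]) -> List[Signal]:
    """Apply conv + ReLU layers in sequence and keep every layer's output."""
    feats, h = [], x
    for d in dilations:
        h = [max(0.0, v) for v in dilated_conv1d(h, kernel, d)]
        feats.append(h)
    return feats


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def layer_attention(feats: List[Signal], weight: List[List[float]],
                    bias: List[float]):
    """Task-specific layer attention: one sigmoid gate per layer, then a weighted sum.

    weight/bias map the pooled layer descriptors (one per layer) to one logit per layer.
    Each task (e.g. classification, localization) owns its own weight/bias.
    """
    pooled = [sum(f) / len(f) for f in feats]  # global average pooling per layer
    logits = [sum(w * p for w, p in zip(row, pooled)) + b
              for row, b in zip(weight, bias)]
    gates = [sigmoid(z) for z in logits]
    fused = [sum(g * f[i] for g, f in zip(gates, feats)) for i in range(len(feats[0]))]
    return fused, gates


if __name__ == "__main__":
    x = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
    feats = stacked_features(x, [0.5, 1.0, 0.5], dilations=[1, 2, 4])
    # Toy, untrained per-task parameters (3 layers -> 3 gates).
    cls_feat, cls_gates = layer_attention(feats, [[0.0] * 3] * 3, [2.0, 0.0, -2.0])
    reg_feat, reg_gates = layer_attention(feats, [[0.0] * 3] * 3, [-2.0, 0.0, 2.0])
    print("classification gates:", [round(g, 3) for g in cls_gates])
    print("localization gates:", [round(g, 3) for g in reg_gates])
```

Increasing the dilation along the stack enlarges the receptive field without adding kernel taps, so the shared layers span several context sizes; the two tasks then read different mixtures of those same layers through their own gates.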

| Models | BC | CS | OT | WS | RV | T0 | T1 | T2 | T3 | T4 | T5 | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.6651 | 0.5313 | 0.7941 | 0.4937 | 0.5318 | 0.6948 | 0.7617 | 0.8161 | 0.8335 | 0.5738 | 0.8875 | 0.6792 |
| SFB | 0.6361 | 0.6043 | 0.7861 | 0.4907 | 0.5691 | 0.7762 | 0.7498 | 0.8362 | 0.8321 | 0.6844 | 0.8216 | 0.7079 |

| CFB Stage | SFA Stage | mAP |
|---|---|---|
| ✕ | ✕ | 0.6792 |
| ✓ | ✕ | 0.7029 |
| ✕ | ✓ | 0.6920 |
| ✓ | ✓ | 0.7079 |
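Both SFB stages contribute: the CFB stage alone raises mAP from 0.6792 to 0.7029, the SFA stage alone to 0.6920, and both together to 0.7079. The Python sketch below is a toy one-dimensional illustration of the two operations attributed to SFB, under assumptions stated plainly: we take the CFB stage to be the adaptive fusion of multi-size features (nearest-neighbor resizing plus softmax-normalized learnable level weights) and the SFA stage to be a squeeze-and-excitation-style channel attention [50]. The stage names are not expanded in this extract, and the code is not the authors' implementation.

```python
import math
from typing import List

FeatureMap = List[List[float]]  # [channels][positions]


def resize_nearest(fmap: FeatureMap, length: int) -> FeatureMap:
    """Nearest-neighbour resize of every channel to `length` positions."""
    n = len(fmap[0])
    return [[row[min(n - 1, i * n // length)] for i in range(length)] for row in fmap]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def balance_levels(levels: List[FeatureMap], level_logits: List[float],
                   length: int) -> FeatureMap:
    """Assumed CFB-like stage: resize all levels to one size and mix them
    with softmax-normalised (learnable) level weights."""
    weights = softmax(level_logits)
    resized = [resize_nearest(f, length) for f in levels]
    channels = len(levels[0])
    return [[sum(w * r[c][i] for w, r in zip(weights, resized)) for i in range(length)]
            for c in range(channels)]


def squeeze_excite(fmap: FeatureMap, w1: List[List[float]],
                   w2: List[List[float]]) -> FeatureMap:
    """Assumed SFA-like stage: squeeze-and-excitation channel attention.

    w1: hidden x C (reduction), w2: C x hidden (expansion); biases omitted.
    """
    pooled = [sum(row) / len(row) for row in fmap]  # squeeze: global average pool
    hidden = [max(0.0, sum(a * p for a, p in zip(r, pooled))) for r in w1]  # FC + ReLU
    scales = [1.0 / (1.0 + math.exp(-sum(a * h for a, h in zip(r, hidden))))
              for r in w2]  # excite: FC + sigmoid, one scale per channel
    return [[s * v for v in row] for s, row in zip(scales, fmap)]


if __name__ == "__main__":
    fine = [[0.2, 0.4, 0.6, 0.8], [1.0, 0.0, 1.0, 0.0]]  # 2 channels, 4 positions
    coarse = [[0.5, 0.7], [0.1, 0.9]]                     # 2 channels, 2 positions
    fused = balance_levels([fine, coarse], level_logits=[0.3, -0.3], length=4)
    out = squeeze_excite(fused, w1=[[1.0, -1.0]], w2=[[2.0], [-2.0]])
    print(out)
```

The softmax keeps the level weights positive and summing to one, so no single resolution can dominate unless training pushes its logit up; the channel scales then reweight the balanced map globally, which is how channel attention can suppress clutter-dominated channels.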

| Method | Framework | mAP | Params (M) |
|---|---|---|---|
| Oriented R-CNN [53] | Two-Stage | 0.7146 | 41.37 |
| RoI Trans [54] | Two-Stage | 0.6972 | 55.13 |
| Gliding Vertex [55] | Two-Stage | 0.5911 | 41.14 |
| Rotated Faster R-CNN [32] | Two-Stage | 0.6553 | 41.14 |
| S²A-Net [56] | Single-Stage | 0.6722 | 38.60 |
| Oriented CenterNet (Baseline) [45] | Single-Stage | 0.6792 | 28.40 |
| Rotated ATSS [57] | Single-Stage | 0.6978 | 36.03 |
| Rotated FCOS [35] | Single-Stage | 0.6510 | 31.92 |
| TAG-Net (Ours) | Single-Stage | 0.7391 | 36.71 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, D.; Wu, Y.; Dai, W.; Miao, T.; Zhao, W.; Gao, X.; Sun, X. TAG-Net: Target Attitude Angle-Guided Network for Ship Detection and Classification in SAR Images. Remote Sens. 2024, 16, 944. https://doi.org/10.3390/rs16060944


Chicago/Turabian Style: Pan, Dece, Youming Wu, Wei Dai, Tian Miao, Wenchao Zhao, Xin Gao, and Xian Sun. 2024. "TAG-Net: Target Attitude Angle-Guided Network for Ship Detection and Classification in SAR Images" Remote Sensing 16, no. 6: 944. https://doi.org/10.3390/rs16060944