1. Introduction

Land cover reflects essential information about the Earth’s surface characteristics and is one of the critical variables for monitoring the Sustainable Development Goals (SDGs). It is of great significance for research in natural resource management, ecological and environmental protection, and urban planning [1,2,3]. With rapid economic and social development, problems such as soil degradation, environmental pollution, and urban expansion have increased noticeably. Accurate and detailed land-cover information is therefore urgently needed to provide a scientific basis for policy formulation and sustainable development research.

At present, high-resolution, fine-scale land-cover products covering large areas are limited. Over recent decades, the spatial resolution and quality of global and regional land-cover products have gradually evolved from coarse to fine. Land-cover products based on coarse-resolution data, such as AVHRR and MODIS [4,5,6,7,8], are widely regarded by users as lacking spatial detail, having low classification accuracy, and being poorly consistent with one another, which makes them inadequate for modern applications [9,10]. The freely available high-resolution remote sensing data from Landsat and Sentinel-2 support the production of more fine-grained land-cover products. For instance, Gong et al. [11] generated the 2015 global 30 m land-cover product FROM_GLC30 based on Landsat data, which includes 28 land-cover types, but the overall accuracy was only 52.76%. Zhang et al. [12] produced the 2015 global 30 m land-cover product GLC_FCS30 by combining a multi-temporal random forest model, time series of Landsat imagery, and global training data. It contains 16 global land-cover types and 14 regional fine-grained land-cover types, with overall accuracies of 71.4% and 68.7%, respectively. However, because the classification accuracy of fine-grained land-cover types is low, these products struggle to capture the spatial detail of land cover, which hinders their fine-scale application. With the rapid growth of data storage and computing power, it has become feasible to produce land-cover products with finer resolution [13,14], such as FROM_GLC10 [15], ESA World Cover [16], and ESRI Land Cover [17], which can support the fine-grained monitoring of global land cover and its changes. In general, the existing large-scale, coarse-resolution products offer a wide range of categories but suffer from low mapping accuracy. For high-resolution products, on the other hand, limitations in classification systems and algorithms mean that the improvement in data quality has not yielded a larger number of fine-grained classes.

Establishing a scientific classification system is important for achieving fine-grained land-cover classification. In 1996, the Food and Agriculture Organization of the United Nations (FAO) [18] established a standard and comprehensive land-cover classification system, the Land Cover Classification System (LCCS), which is suitable for research at various scales and with different data sources. Some classification systems, such as the European Union Joint Research Center GLC2000 [6] and the Basic Geographic National Conditions Monitoring Classification Standard (CH/T 9029-2019) [19], drew upon the design concepts of the LCCS. However, these classification systems differ significantly in their compatibility across scenarios and often require additional data processing and conversion before use [20]. Subsequently, in 2010, China initiated essential research on global land-cover remote sensing mapping techniques [21]. Chen et al. [22] used the pixel-object-knowledge method to divide the world’s land cover into ten types, and this classification system is currently one of the most widely applied at global and regional levels. Nevertheless, such predefined categories struggle to encompass all natural land-cover types. For land-cover mapping, it is therefore advisable to explore the finest category system that the data and target scenarios can support, which ultimately leads to better land-cover products.

The classification method is a critical factor influencing the accuracy of large-scale land-cover mapping. Deep learning approaches, exemplified by convolutional neural networks (CNNs), exhibit strong feature extraction capabilities and are widely used in large-scale land-cover mapping tasks [23,24,25,26]. However, because land features vary significantly in scale and shape, the convolutional layers used in these studies have a limited receptive field, which hinders the comprehensive learning of contextual information [27,28]. This limitation produces slight patch effects in the transition zones between features with similar characteristics and breaks the continuity of some slender land features in the classification results, thereby impeding the practical application of land-cover products. Transformers, in contrast, use multi-head self-attention modules to capture long-range feature relationships and thus make fuller use of global context information, offering new ideas for large-scale land-cover mapping [29,30]. Liu et al. [31] proposed the Swin Transformer in 2021, which uses shifted windows and a hierarchical structure to extract multi-scale features. Building on the Swin Transformer, Cao et al. [32] proposed Swin-UNet in 2021, a U-shaped encoder–decoder architecture based on transformers that has achieved great success in medical image segmentation. In addition, because sample sizes in the training data are imbalanced [33], models tend to favor the majority classes during training while neglecting rare categories, which lowers the overall classification accuracy [34]. Existing research has attempted to rebalance sample quantities through re-sampling and re-weighting [35]. However, these methods have shortcomings in particular use cases: under-sampling can discard essential feature information, while over-sampling can cause overfitting to minority-class data [36]. In multi-class tasks, potential correlations between categories make it harder for re-weighting methods to determine an appropriate weight for each category [37].
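To make the re-weighting idea concrete, the following minimal sketch computes inverse-frequency class weights and applies them in a per-sample cross-entropy term. It illustrates generic re-weighting only and is not the combination loss proposed in this paper; the function names and the toy class names are assumptions introduced for illustration.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class, proportional to 1 / class frequency.

    Uses the common "balanced" heuristic: total / (n_classes * count).
    With this scaling, the mean weight over all samples equals 1.
    """
    counts = Counter(labels)
    n_classes = len(counts)
    total = sum(counts.values())
    return {cls: total / (n_classes * n) for cls, n in counts.items()}


def weighted_cross_entropy(probs, target, weights):
    """Cross-entropy of one sample, scaled by the weight of its true class.

    `probs` maps each class to the predicted probability for this sample.
    """
    return -weights[target] * math.log(probs[target])


# Toy, hypothetical label set: 90 cropland samples and 10 road samples.
labels = ["cropland"] * 90 + ["road"] * 10
weights = inverse_frequency_weights(labels)
# The rare class "road" gets weight 5.0; "cropland" gets about 0.56.
```

With such weights, a misclassified road sample contributes roughly nine times more to the loss than an equally misclassified cropland sample. As noted above, choosing the weights becomes harder when categories are correlated, which this simple frequency-based rule ignores.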

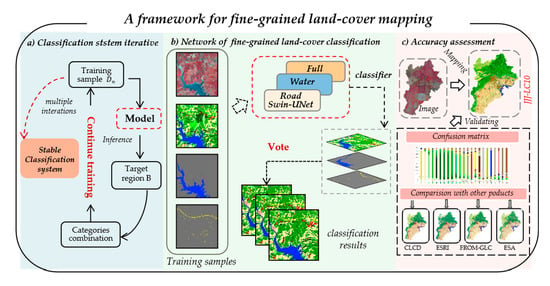

In general, the limitations of classification systems and classification algorithms restrict the production of fine-grained land-cover products for large-scale scenarios. We propose a mapping framework for fine-grained land-cover classification, and its main contributions are as follows:

We propose an iterative algorithm for deriving a fine-grained classification system, which aims to find a stable classification system tailored to a specific sensor and target region. We applied this method to derive a classification system for Sentinel-2 data: we first trained the model on the sample dataset and constructed the initial classification system, and then used the trained model to predict the target region and merged land-cover types that could not be discriminated sufficiently at 10 m resolution. Through multiple iterations and refinement of the classification system, we ultimately obtained a stable classification system encompassing 23 land-cover types and achieved optimal mapping results.

To address challenges in large-scale scenes, such as varying scales of target features, imbalanced sample quantities, and the weak connectivity of slender features, we introduce a pyramid pooling module into Swin-UNet to enhance the model’s perception of features at different scales. We also designed a class-balanced combination loss function to balance the model’s learning across different features. Models for roads and water were trained independently. Using spatial relationships among natural features as clues, we applied a voting algorithm to integrate the predictions of these independent models with those of the overall classification model, improving the generalization to slender features in complex scenarios.

Based on this framework, we produced the 2017 fine-grained land-cover product JJJLC-10 for the Beijing–Tianjin–Hebei region. We quantitatively assessed the accuracy of JJJLC-10 using a validation set of 4254 samples and visually compared it with four mainstream large-scale land-cover products to demonstrate its advantages more intuitively. To the best of our knowledge, JJJLC-10 is the product with the largest number of land-cover types at the 10 m scale.
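The class-system iteration in the first contribution can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the procedure used in this paper: the merge criterion (a pairwise confusion rate compared against a hypothetical `threshold`) and all function names are introduced here for illustration, and the sketch aggregates an existing confusion matrix after each merge, whereas in practice the model would be retrained on the merged classes.

```python
from itertools import combinations


def pair_confusion(cm, a, b):
    """Share of the samples of classes a and b that are confused with each other.

    `cm[true][pred]` is a complete confusion matrix stored as nested dicts.
    """
    total = sum(cm[a].values()) + sum(cm[b].values())
    return (cm[a][b] + cm[b][a]) / total if total else 0.0


def merge_pair(cm, a, b):
    """Return a new confusion matrix in which classes a and b form one class."""
    name = f"{a}+{b}"

    def relabel(c):
        return name if c in (a, b) else c

    merged = {}
    for true_cls, row in cm.items():
        out_row = merged.setdefault(relabel(true_cls), {})
        for pred_cls, n in row.items():
            key = relabel(pred_cls)
            out_row[key] = out_row.get(key, 0) + n
    return merged


def refine_class_system(cm, threshold=0.2):
    """Merge the most confused class pair until every pair is below `threshold`.

    Assumption: a real workflow would retrain and re-predict after each merge;
    here the matrix is only aggregated to keep the sketch self-contained.
    """
    while len(cm) > 1:
        a, b = max(combinations(sorted(cm), 2),
                   key=lambda pair: pair_confusion(cm, *pair))
        if pair_confusion(cm, a, b) < threshold:
            break  # every remaining pair is sufficiently discriminable
        cm = merge_pair(cm, a, b)
    return sorted(cm)
```

The loop stops once no pair of classes exceeds the confusion threshold, which corresponds to the notion of a stable classification system used above.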

6. Conclusions

This paper proposes a mapping framework for fine-grained land-cover classification that aims to produce large-scale land-cover products with fine-grained land-cover types. First, we proposed an iterative method for deriving a fine-grained classification system, which seeks a stable classification system tailored to a specific sensor and target area. Through multiple iterations and optimization of the classification system, we ultimately constructed a stable classification system for Sentinel-2 data, encompassing 23 land-cover types. Second, we enhanced the model by introducing a pyramid pooling module into Swin-UNet and designed a class-balanced combination loss function. Third, using spatial relationships among natural features as clues, we applied a voting algorithm to integrate the predictions of the overall classification model with those of the independent models for slender features. This approach addresses the challenges encountered in large-scale scenes, such as varying scales of target features, imbalanced sample quantities, and the weak connectivity of slender features.
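As a rough illustration of how predictions for a slender feature might be fused with the overall map, the sketch below applies one possible neighbourhood voting rule. The rule itself, the function name `fuse_slender`, and the `min_votes` parameter are assumptions made for this example; the voting scheme actually used in the framework is not specified in this section.

```python
def fuse_slender(overall, mask, label, min_votes=3):
    """Fuse a dedicated slender-feature mask into the overall label map.

    overall : 2D list of class labels from the overall classification model
    mask    : 2D list of truthy/falsy flags from the dedicated model
    label   : class name that the dedicated model detects (e.g. "road")

    Hypothetical rule: a pixel flagged by the dedicated model takes `label`
    only if at least `min_votes` pixels in its 3x3 neighbourhood (itself
    included) support that class in either prediction. Isolated detections
    are therefore rejected, while gaps along connected features are filled.
    """
    rows, cols = len(overall), len(overall[0])
    fused = [row[:] for row in overall]  # copy; the input map stays unchanged
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            votes = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        if mask[rr][cc] or overall[rr][cc] == label:
                            votes += 1
            if votes >= min_votes:
                fused[r][c] = label
    return fused
```

In this sketch, a road that the overall model interrupts in the middle is reconnected when the dedicated model detects a continuous line, whereas a single flagged pixel surrounded by other classes is left unchanged.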

To evaluate the performance of the proposed framework, we selected 2017 Sentinel-2 imagery covering the Beijing–Tianjin–Hebei region as our experimental data and produced the fine-grained land-cover product JJJLC-10 for this region, which covers 23 land-cover types. We then validated JJJLC-10 using a dataset of 4254 visually interpreted validation samples. Within the I-level validation system, which covers seven land-cover types, JJJLC-10 achieved an overall accuracy of 80.3% and a kappa coefficient of 0.7602. Within the II-level validation system, which covers 23 land-cover types, it achieved an overall accuracy of 72.2% and a kappa coefficient of 0.706. A comparison with four land-cover products covering the Beijing–Tianjin–Hebei region shows that JJJLC-10 accurately represents the spatial distribution of the various land-cover types and excels in the classification of slender features such as roads and water. Overall, JJJLC-10 exhibits significant advantages over existing 10 m resolution land-cover products in terms of the number of classes, classification accuracy, and spatial detail. In future work, we will explore land-cover products with higher accuracy and more refined categories.
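For reference, the two reported metrics can be computed from a confusion matrix as sketched below, using the standard definitions of overall accuracy and Cohen's kappa. The function name and the 2 × 2 toy matrix are illustrative assumptions and are not taken from the JJJLC-10 validation data.

```python
def overall_accuracy_and_kappa(cm):
    """Compute overall accuracy and Cohen's kappa from a square confusion matrix.

    cm[i][j] is the number of validation samples with reference class i
    that were mapped as class j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    # Observed agreement: share of samples on the diagonal.
    po = sum(cm[i][i] for i in range(k)) / n
    # Chance agreement: product of row and column marginals, summed over classes.
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    pe = sum(r * c for r, c in zip(row_tot, col_tot)) / (n * n)
    kappa = (po - pe) / (1 - pe)
    return po, kappa


# Toy matrix (not from the paper): 100 samples, two classes.
oa, kappa = overall_accuracy_and_kappa([[40, 10], [5, 45]])
# oa is 0.85 and kappa is 0.70 for this toy matrix.
```

Kappa discounts the agreement expected by chance, which is why it is lower than the overall accuracy in both validation systems reported above.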