Cotton is the most widely produced and used natural fiber worldwide. It is not only an important raw material for the textile industry but also an oil crop and a raw material for fine chemicals. As the largest producer and consumer of cotton in the world, China plays an important role in global cotton production, consumption, and trade. Cotton is one of the most important cash crops in China and is directly tied to the national economy and people’s livelihood. Cotton cultivation also depends heavily on irrigation and has a serious impact on total nitrogen emissions during the growing season. Xinjiang is the largest cotton-producing region in China, but it lies in an arid and semiarid zone with severe water scarcity. Moreover, global warming intensifies and accelerates potential evaporation from crop surfaces, which reduces the soil moisture available to plants and indirectly increases agricultural water demand [1]. As society develops, rising industrial and domestic water consumption has reduced the water available for agriculture, exacerbated the supply–demand imbalance in agricultural water use, and seriously affected the sustainable development of agriculture [2]. In addition, the flowering and boll stage is the period in which cotton is most sensitive to water and fertilizer supply, and it is also when cotton irrigation is concentrated. Optimizing irrigation scheduling, managing water resources intensively and efficiently, and improving cotton water productivity are urgent challenges in arid and semiarid agricultural regions [3]. Therefore, accurately mapping and analyzing the spatial distribution of early-season cotton is a basic prerequisite for agricultural water management and optimization in Xinjiang, and it provides strong support for adjusting the regional economic structure, industrial structure, and water resource scheduling.

Traditionally, information on crop planting area, yield, and related variables has been obtained mainly through on-the-spot sampling surveys, whose reports are then gathered and submitted level by level. Such surveys involve a tremendous amount of work, lack quality control, and cannot provide spatial distribution information, so they are unsuitable for large-scale agricultural monitoring and struggle to meet the needs of modern management and decision-making. Satellite remote sensing, an advanced technique for recognizing ground objects, can acquire real-time information over large areas and has played an important role in cotton recognition [4,5]. With the steady improvement of sensor performance, cotton recognition has evolved from using low-spatial-resolution multispectral imagery [6,7,8] to using high-spatial-resolution and hyperspectral imagery [9,10], and recognition accuracy has improved steadily. Images acquired by camera-equipped drones offer very high recognition accuracy [11] and flexible acquisition times, but their observation range is smaller than that of satellites, field operations are costly, and large-scale agricultural monitoring takes a long time, which makes drones unsuitable for early crop recognition. In recent years, researchers have conducted extensive work on extracting cotton planting areas from multitemporal or multi-source remote sensing images [12,13]. Although these approaches achieve good recognition results, the recognition process is complex, and early-season cotton cannot be identified in time. Moreover, cloud cover and the rather long revisit cycles of high-resolution satellites make it difficult to obtain multitemporal remote sensing data during the cotton growth period, which complicates cotton recognition research [14].

At present, various pixel-based and object-based classification methods have been used for remote sensing recognition of crops [15], such as the maximum likelihood method [16], nearest neighbor sampling method [17], spectral angle mapping method [18], decision tree algorithm [19], tasseled cap transformation method [20], support vector machine [21], random forest [22], neural network [23], and biomimetic algorithm [24]. For example, Ji Xusheng et al. [25] compared the recognition accuracy of different algorithms on single-date high-resolution remote sensing images from specific periods: SPOT-6 (May), Pleiades-1 (September), and WorldView-3 (October). With the rapid development of remote sensing data mining and information extraction technology, a series of crop classification algorithms and studies that do not rely on field sampling have also emerged [26,27,28]. Mariana et al. [29] used pixel-based and object-based time-weighted dynamic time warping to identify crop planting areas from Sentinel-2 images, and this type of algorithm is becoming increasingly mature.

Deep learning methods have been used extensively for information extraction because they can extract multiple features from remote sensing images and discover subtle differences, offer high training efficiency and classification accuracy, and can mitigate spectral confusion among ground objects (such as the “same object with different spectra” and “different objects with the same spectrum” phenomena) [30]. Research has shown that convolutional neural networks (CNNs) have powerful feature learning and representation capabilities [31]. Zhang Jing et al. [32] used drones to obtain RGB images of cotton fields at six planting densities and expanded the dataset through data augmentation. Different neural network models (VGGNet16, GoogleNet, MobileNetV2) were then used to classify cotton fields by density, with recognition accuracies exceeding 90%. However, under the mechanized high-yield cultivation mode used in Xinjiang, plant and row spacing are uniform, so density classification has little practical significance unless seedlings are missing because of disasters. Existing studies of cotton recognition and area extraction mostly focus on the comprehensive use of medium-resolution multitemporal images [33,34]. There is little research on remote sensing recognition from a single period or critical period of cotton growth, especially of early-season cotton based on high-resolution remote sensing images. Moreover, for large-scale cotton operations, the advantages of object-oriented analysis methods for early recognition in high-resolution images remain unclear, and further research and exploration are needed.

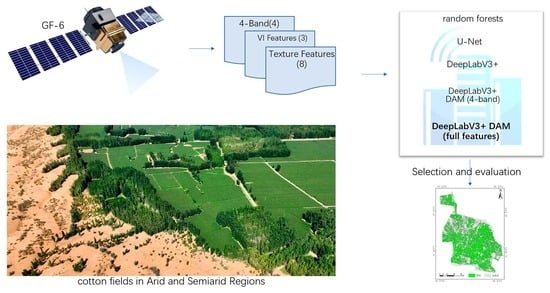

The DeepLabV3+ network is a CNN for pixel-level recognition (semantic segmentation). Because it performs well at locating objects in images with complex backgrounds, this type of method has been widely applied in agriculture [35,36]. In this study, we propose an improved DeepLabV3+ network for cotton recognition in high-resolution remote sensing images that incorporates a double attention mechanism (DAM). Adding the DAM module highlights the important channel and spatial features, thereby improving the precision of the recognition results. Furthermore, the red edge band can effectively monitor the growth status of vegetation. The GF-6 satellite is the first multispectral remote sensing satellite in China to be equipped with dedicated red edge bands, which improves satellite monitoring of resources such as agriculture, forestry, and grasslands. Improvements in the classification accuracy of paddy crops and in crop recognition obtained with its red edge, purple, and yellow bands show that the GF-6 satellite has broad application prospects in the precise identification and area extraction of crops. Given the strong recent performance of the DeepLabV3+ network and GF-6 images in agricultural recognition, we apply the improved DeepLabV3+ network to cotton field identification and compare its results with those of other models. The purpose of this study is to provide a fast and efficient method for the early, large-scale identification and mapping of cotton, better serving the critical irrigation period of cotton and providing a sound decision-making basis for water-saving irrigation policies in arid and semiarid regions.
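
To make the idea of weighting "channels and spaces" concrete, the following is a minimal, parameter-free sketch of sequential channel and spatial attention on a small feature map. It is an illustration only and not the DAM module used in this study: the introduction does not specify the internals of that module, and practical attention modules use learned layers inside a deep learning framework. The function names (`channel_attention`, `spatial_attention`, `double_attention`) and the choice of a sigmoid gate on average-pooled values are assumptions made for this example.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def channel_attention(feat):
    """Scale each channel by a gate computed from its global average.

    feat is a list of C channels, each an H x W list of lists.
    Assumption: the gate is sigmoid(mean); real modules learn this mapping.
    """
    weights = []
    for channel in feat:
        values = [v for row in channel for v in row]
        weights.append(sigmoid(sum(values) / len(values)))
    return [[[v * w for v in row] for row in channel]
            for channel, w in zip(feat, weights)]


def spatial_attention(feat):
    """Scale each pixel by a gate computed from its mean across channels."""
    n_ch = len(feat)
    height, width = len(feat[0]), len(feat[0][0])
    mask = [[sigmoid(sum(feat[c][i][j] for c in range(n_ch)) / n_ch)
             for j in range(width)]
            for i in range(height)]
    return [[[feat[c][i][j] * mask[i][j] for j in range(width)]
             for i in range(height)]
            for c in range(n_ch)]


def double_attention(feat):
    """Apply channel attention first, then spatial attention."""
    return spatial_attention(channel_attention(feat))


# Hypothetical 2-channel, 2 x 2 feature map with one strong response.
feat = [
    [[4.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
out = double_attention(feat)
```

In this toy input, the channel and the pixel that carry the strong response receive larger gates than the others, which is the sense in which an attention module emphasizes informative channels and locations. The output keeps the same shape as the input, so a block of this kind can in principle be placed between existing layers of a segmentation network.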