Underwater Image Restoration via Adaptive Color Correction and Contrast Enhancement Fusion

Abstract

1. Introduction

2. Background

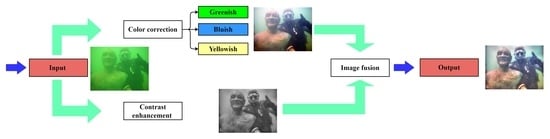

3. Method

3.1. Underwater Image Classification

3.2. Underwater Image Color Correction

3.3. Detail Restoration

3.4. Image Fusion

4. Results and Evaluation

4.1. Validation of Our Method

4.2. Comparison with Other Methods

4.3. Application Tests

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image | MSRCR UCIQE | MSRCR UIQM | GDCP UCIQE | GDCP UIQM | IBLA UCIQE | IBLA UIQM | Two-Step UCIQE | Two-Step UIQM | Ours UCIQE | Ours UIQM |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | 0.534 | 3.548 | 0.360 | −1.993 | 0.374 | −0.136 | 0.467 | 3.472 | 0.596 | 5.323 |
| (b) | 0.583 | 3.012 | 0.537 | 2.983 | 0.518 | 1.432 | 0.552 | 3.299 | 0.648 | 4.693 |
| (c) | 0.500 | 4.062 | 0.373 | 1.460 | 0.427 | 2.088 | 0.458 | 4.408 | 0.585 | 4.972 |
| (d) | 0.510 | 5.414 | 0.368 | −0.367 | 0.411 | −0.221 | 0.420 | 3.472 | 0.582 | 5.021 |
| (e) | 0.521 | 2.329 | 0.371 | −1.124 | 0.505 | 0.643 | 0.608 | 4.274 | 0.383 | 4.448 |
| (f) | 0.488 | 3.165 | 0.628 | 3.993 | 0.542 | 1.316 | 0.566 | 5.192 | 0.547 | 5.412 |
| (g) | 0.458 | 2.749 | 0.517 | 0.565 | 0.555 | 2.172 | 0.584 | 5.318 | 0.542 | 5.236 |
| (h) | 0.506 | 3.236 | 0.672 | 4.635 | 0.522 | 1.651 | 0.598 | 4.936 | 0.565 | 4.966 |
| (i) | 0.556 | 2.432 | 0.558 | 0.749 | 0.599 | 1.880 | 0.612 | 4.939 | 0.569 | 4.968 |
| (j) | 0.560 | 2.862 | 0.538 | 0.987 | 0.503 | 2.198 | 0.581 | 4.198 | 0.618 | 4.350 |
| (k) | 0.477 | 2.607 | 0.546 | 3.362 | 0.492 | 4.218 | 0.479 | 4.018 | 0.526 | 4.451 |
| (l) | 0.534 | 1.649 | 0.491 | 1.718 | 0.632 | 1.082 | 0.514 | 1.674 | 0.519 | 2.084 |
| (m) | 0.502 | 2.793 | 0.659 | 4.862 | 0.691 | 5.021 | 0.587 | 4.078 | 0.570 | 3.854 |
| (n) | 0.523 | 3.385 | 0.516 | 3.586 | 0.615 | 2.628 | 0.521 | 4.031 | 0.577 | 4.340 |

| Image | MSRCR PCQI | MSRCR EME | GDCP PCQI | GDCP EME | IBLA PCQI | IBLA EME | Two-Step PCQI | Two-Step EME | Ours PCQI | Ours EME |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | 1.305 | 21.145 | 1.042 | 12.341 | 1.022 | 4.030 | 1.276 | 14.710 | 1.327 | 21.285 |
| (b) | 1.017 | 14.258 | 0.897 | 7.824 | 1.067 | 7.665 | 1.189 | 10.742 | 1.259 | 10.272 |
| (c) | 1.130 | 3.464 | 0.962 | 2.732 | 1.105 | 4.961 | 1.162 | 4.954 | 1.231 | 9.261 |
| (d) | 1.261 | 3.466 | 1.002 | 7.237 | 1.124 | 11.647 | 1.170 | 6.848 | 1.308 | 14.157 |
| (e) | 0.700 | 1.706 | 0.623 | 3.555 | 1.082 | 8.197 | 0.853 | 4.909 | 0.911 | 7.321 |
| (f) | 0.850 | 5.379 | 1.182 | 15.208 | 1.127 | 11.646 | 1.337 | 16.101 | 1.397 | 16.802 |
| (g) | 0.655 | 8.226 | 0.853 | 16.317 | 1.115 | 18.587 | 1.330 | 23.183 | 1.343 | 22.967 |
| (h) | 0.882 | 5.833 | 1.17 | 19.039 | 1.099 | 15.552 | 1.300 | 16.496 | 1.352 | 18.453 |
| (i) | 0.600 | 10.277 | 0.857 | 19.848 | 1.049 | 10.764 | 1.257 | 24.038 | 1.278 | 26.479 |
| (j) | 0.943 | 5.322 | 0.834 | 5.051 | 1.122 | 11.464 | 1.096 | 7.748 | 1.204 | 17.517 |
| (k) | 0.958 | 2.035 | 0.983 | 4.478 | 0.979 | 1.885 | 1.113 | 4.431 | 1.267 | 6.847 |
| (l) | 0.865 | 1.144 | 0.796 | 1.225 | 1.035 | 2.340 | 0.965 | 1.769 | 1.053 | 3.638 |
| (m) | 0.949 | 1.714 | 1.060 | 2.946 | 1.011 | 4.395 | 1.066 | 4.026 | 1.205 | 6.046 |
| (n) | 0.999 | 2.080 | 0.929 | 2.084 | 0.722 | 2.887 | 1.149 | 3.733 | 1.264 | 7.640 |
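
For readers unfamiliar with the EME column, the following is a minimal sketch of one common block-wise formulation of the measure of enhancement: the image is split into a grid of blocks, and 20·log10(max/min) is averaged over the blocks. The function name `eme`, the grid parameters, the base-10 logarithm, and the small `eps` guard against division by zero are all assumptions made for illustration. The block size and exact variant behind the values in the table are not stated in this text, so this sketch should not be expected to reproduce them.

```python
import math


def eme(image, block_rows, block_cols, eps=1e-6):
    """Block-wise measure of enhancement for a 2-D grayscale image.

    `image` is a list of equal-length rows of non-negative intensities.
    The grid size, log base and `eps` are illustrative assumptions.
    """
    height, width = len(image), len(image[0])
    bh, bw = height // block_rows, width // block_cols
    if bh == 0 or bw == 0:
        raise ValueError("more blocks than pixels along one axis")

    total = 0.0
    for r in range(block_rows):
        for c in range(block_cols):
            # Collect the pixels of block (r, c); trailing pixels that do
            # not fill a whole block are ignored in this sketch.
            block = [
                image[y][x]
                for y in range(r * bh, (r + 1) * bh)
                for x in range(c * bw, (c + 1) * bw)
            ]
            # Local contrast of the block on a decibel-like scale.
            total += 20.0 * math.log10((max(block) + eps) / (min(block) + eps))
    return total / (block_rows * block_cols)


flat = [[50, 50], [50, 50]]          # no contrast in any block
contrasty = [[10, 100], [100, 10]]   # one block with a 10:1 intensity ratio
flat_score = eme(flat, 1, 1)
contrasty_score = eme(contrasty, 1, 1)
```

Under this formulation a higher score indicates stronger local contrast, which is consistent with reading larger EME values in the table as better.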

| Methods | UCIQE | UIQM | PCQI | EME |
|---|---|---|---|---|
| MSRCR | 0.294 | −1.011 | 0.686 | 0.745 |
| GDCP | 0.397 | −2.097 | 0.875 | 2.163 |
| IBLA | 0.449 | −0.991 | 1.030 | 4.194 |
| Two-Step | 0.482 | 1.884 | 1.052 | 2.759 |
| Ours | 0.502 | 1.984 | 1.003 | 3.874 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, W.; Li, X.; Xu, S.; Li, X.; Yang, Y.; Xu, D.; Liu, T.; Hu, H. Underwater Image Restoration via Adaptive Color Correction and Contrast Enhancement Fusion. Remote Sens. 2023, 15, 4699. https://doi.org/10.3390/rs15194699
