1. Introduction

Forests are among the most valuable natural resources. They perform crucial ecological functions, such as preventing wind erosion and conserving water and soil, and they also have enormous economic value for humans. Forest fires often lead to severe consequences such as soil erosion, air pollution, and threats to animal survival, causing significant ecological and economic damage [1]. Therefore, the early detection and control of forest fires are crucial. Smoke is an important precursor to forest fires, so monitoring it effectively supports their early detection and suppression [2].

The detection of forest smoke has gone through several developmental stages: manual inspection, instrument-based detection, and detection based on computer vision. Manual inspection requires a large amount of manpower and material resources, is inefficient, and often produces results that fail to meet expectations. Instrument-based detection mainly depends on the various detectors and sensors developed over the past two decades. However, instruments are prone to interference from small particles in the environment, such as dust [3]. Additionally, they only trigger an alarm when the smoke concentration reaches a threshold. Because of the complexity of outdoor air flow and other environmental factors, a fire may already be difficult to control by the time the alarm goes off. Therefore, this method has gradually been abandoned. In the computer vision stage, pattern recognition is used for feature extraction and classification to identify forest smoke [4]. Gubbi et al. [5] used wavelets to extract smoke features and then classified smoke using a support vector machine (SVM). H. Cruz et al. [6] proposed a new color detection index for flames and smoke. Their method enhances color by normalizing the RGB channels and combines the motion and color features of smoke to obtain flame and smoke regions through thresholding. Prema et al. [7] detected smoke with a comprehensive approach that combined the YUV color space and wavelet energy and took the relationship and contrast of smoke into account. However, because such hand-crafted features are limited by human experience, these methods adapt poorly to varied forest environments. In summary, although traditional image detection methods improve on instrument-based detection, they have difficulty extracting the intrinsic features of smoke, take too long to run, produce a high rate of false alarms, and generalize poorly.

In recent years, with the rapid development of artificial intelligence, drones equipped with deep learning have given strong momentum to detection via computer vision [8]. Because of their high accuracy, real-time performance, strong robustness, and low cost, deep-learning-based smoke detection algorithms are applicable in many complex scenarios and hold great research value. Convolutional neural networks (CNNs) can recognize two-dimensional image data with high precision, and researchers have applied them to smoke detection. Salman Khan et al. [9] comprehensively studied various detection algorithms and showed that CNNs achieve high accuracy in smoke detection tasks. However, smoke detection is prone to errors when the background is complex. In outdoor environments such as forests, interference such as clouds in the sky, reflections in lakes, and changes in lighting can easily cause false alarms [10]. Therefore, many scholars have proposed improved algorithms. Xuehui Wu et al. [11] used background subtraction and achieved good results in the detection of dynamic smoke. The false detection rate for clouds reflecting sunlight was reduced, but the false detection rate for newly formed objects remained high. Yin et al. [12] adjusted the parameters according to changes in the actual environment and could thus accurately detect smoke under different conditions. Zhang, Q. et al. [13] constructed a simulated smoke dataset and trained their proposed deep convolutional generative adversarial network on it. They effectively monitored smoke areas and reduced false alarms, but their method was demanding in terms of hardware and difficult to deploy widely while meeting real-time requirements. Lightweight models are widely used in practical tasks by virtue of their lower energy consumption and faster inference. Guo, Y. et al. [14] used their S-Mobilenet module to build a lightweight YOLO model for the real-time detection of small ship targets and evaluated its effectiveness on hardware devices. However, its applicability to real tasks remains weak. Li, W. et al. [15] developed the lightweight WearNet based on a novel convolutional block, which can be deployed on embedded devices for scratch detection. Although all of the above achieved good results, problems remain in existing research on smoke detection. Sheng, D. et al. [16] used a CNN and simple linear iterative clustering (SLIC) for smoke image segmentation and applied density-based spatial clustering of applications with noise (DBSCAN), which achieves faster detection. However, their method has a high false positive rate (FPR), which reflects high model sensitivity and needs further improvement.

In summary, the deep-learning-based smoke detection algorithms mentioned above have achieved considerable success, but three problems arise when they are actually run on edge equipment. Firstly, large network models have a huge number of parameters and high hardware requirements, making it difficult to deploy them for practical tasks and to meet the real-time requirements of smoke detection. Secondly, existing lightweight models detect smoke more quickly under the same conditions, but their detection accuracy is often far lower than that of large models. For targets with thin features, such as smoke, feature fusion is often incomplete, which lowers detection accuracy. There is therefore an imbalance between detection accuracy and detection speed. Thirdly, so-called small smoke is the smoke produced in the early stages of a forest fire and is characterized by its small volume and thinness. Because thin, small smoke offers few features, a network cannot extract much information from it. It is more difficult to detect than typical smoke that has already taken shape, and it is susceptible to disturbances such as lens impurities. As a result, UAVs may capture noisy images during detection missions, which can cause missed detections [17] as well as false detections caused by interfering objects, such as cloud cover [18]. These factors make the detection of forest smoke a major challenge.

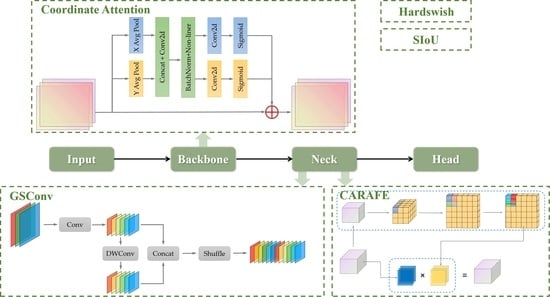

To solve the problems above, this paper proposes a lightweight model for detecting forest fire smoke based on YOLOv7 [19]. (1) To address the problem that the original model is large and difficult to deploy on real edge devices, we use GSConv to replace the standard convolution in the neck layer and construct fast pyramid pooling modules by using GSELAN and GSSPPFCSPC, both based on GSConv. This speeds up model convergence and fuses smoke features faster and with less computation when processing smoke images. (2) Because the feature boundaries between smoke and smoke-like objects are blurred, clouds are easily confused with forest fire smoke in a forest environment. Interclass heterogeneity is low, and the foreground and background of smoke images are difficult to distinguish, which can cause false detections. In response, we embed multilayer coordinate attention in the backbone network. By effectively fusing channel relations and location information, it focuses the network on locations of interest, suppresses useless information, and improves the separation of smoke from the background and from clouds. (3) Thin and fine smoke carries insufficient information because of its inconspicuous features, which weakens the accuracy of smoke detection. We therefore use the CARAFE upsampling operator, which extracts information more fully from the image by expanding the receptive field and thereby improves the detection accuracy of small targets. In addition, the SIoU loss function is used to speed up convergence during training and to improve localization accuracy.
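
To make the size reduction in (1) concrete, the following minimal sketch counts convolution weights for a standard convolution and for a GSConv-style block. It assumes the commonly published GSConv layout (a dense convolution producing half of the output channels, followed by a depthwise convolution on those channels, then concatenation and channel shuffle). The 5 x 5 depthwise kernel, the channel sizes, and all function names are illustrative assumptions, not values taken from this paper.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution (bias and batch norm ignored)."""
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k * k


def standard_conv_params(c_in, c_out, k=3):
    """Weights of an ordinary dense convolution."""
    return conv_params(c_in, c_out, k)


def gsconv_params(c_in, c_out, k=3, dw_k=5):
    """Weights of a GSConv-style block (assumed layout, see lead-in).

    A dense conv maps c_in to c_out // 2 channels, a depthwise conv then
    processes those channels, and the two halves are concatenated and
    shuffled. Concatenation and shuffle add no weights.
    """
    half = c_out // 2
    dense = conv_params(c_in, half, k)
    depthwise = conv_params(half, half, dw_k, groups=half)
    return dense + depthwise


if __name__ == "__main__":
    c_in, c_out = 256, 256  # hypothetical neck-layer channel sizes
    std = standard_conv_params(c_in, c_out)
    gs = gsconv_params(c_in, c_out)
    print(f"standard: {std}, GSConv-style: {gs}, ratio: {gs / std:.3f}")
```

Under these assumptions the block needs roughly half the weights of the dense convolution it replaces, because the depthwise branch is very cheap compared with the dense branch.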

5. Discussion and Conclusions

Predicting and preventing forest fires is crucial to protecting forests. On the one hand, in the history of forest fire smoke detection, manual detection is less effective and too costly, while instrument-based detection is easily disturbed by fine particles in the environment, such as dust. Compared with these two approaches, our method is based on computer vision, uses pattern recognition for feature extraction and classification, detects smoke well, has low deployment costs, and is a good strategy for detecting forest fire smoke. On the other hand, in deep-learning-based smoke detection, many scholars have proposed network structures such as R-CNN or other algorithms [41]. These do improve the accuracy of smoke detection to some extent, but they are more demanding in terms of hardware than the LMDFS proposed in this paper, which makes them difficult to deploy under real-time requirements. Moreover, they do not provide an effective solution for detecting small smoke or smoke accompanied by disturbances. Although FfireNet [42] provides a faster detection method, its accuracy can still be improved. Our model balances high accuracy and low computational cost and improves the detection accuracy of small smoke by aggregating larger receptive fields. Furthermore, our model more effectively distinguishes forest fire smoke from smoke-like objects, which addresses a persistent difficulty in forest fire smoke detection and offers a new idea for preventing and controlling forest fires.

As a recent object detection model, YOLOv7 has a strong capability to extract and aggregate image features and thus achieves high accuracy in target recognition. However, better detection results require a large computational expenditure, which hinders the model's deployment on edge devices. For this reason, we built the GS-ELAN module by using GSConv. By effectively combining DWConv and SConv, GSConv improves the effectiveness of convolution while enhancing computational efficiency, so it is an efficient means of lightening the model. The GS-ELAN module constructed in this paper introduces identity mapping, which eliminates the possible loss of links that replacing standard convolution with GSConv could cause and helps information transfer and flow through the model. In addition, we borrow the structure of the SPPF to improve the SPPCSPC, which gives higher computational and training efficiency with fewer parameters. We then add a multilayer CA mechanism to the feature extraction network, because a forest environment contains a large number of smoke-like disturbances, such as floating clouds and atmospheric fog. Because these resemble forest fire smoke, a traditional feature extraction network cannot accurately extract the features of forest smoke. The addition of CA significantly enhances the model's ability to extract smoke features and more effectively separates forest fire smoke from clouds and other smoke-like objects, thus reducing the rate at which non-smoke objects are falsely detected. In addition, the small smoke produced in the early stages of a forest fire is thin and fine, and it appears even smaller in images of forest smoke taken from long distances.
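
The efficiency gain from borrowing the SPPF structure can be illustrated with a small sketch. It assumes the usual settings from the YOLO family, which are not stated in this section: SPP-style modules pool in parallel with kernel sizes 5, 9, and 13, while SPPF chains three size-5 poolings and reuses each intermediate result. The one-dimensional helper functions below are hypothetical and only demonstrate that the two schemes produce the same pooled outputs. The same reasoning extends to 2D because max pooling is separable.

```python
NEG_INF = float("-inf")


def max_pool_1d(values, k):
    """Stride-1 max pooling with 'same' padding (odd k), padded with -inf."""
    assert k % 2 == 1
    r = k // 2
    padded = [NEG_INF] * r + list(values) + [NEG_INF] * r
    return [max(padded[i:i + k]) for i in range(len(values))]


def spp_branches(values, sizes=(5, 9, 13)):
    """SPP style: one independent pooling pass per kernel size."""
    return [max_pool_1d(values, k) for k in sizes]


def sppf_branches(values, k=5, repeats=3):
    """SPPF style: each pooling pass reuses the previous pooled output."""
    outputs, current = [], list(values)
    for _ in range(repeats):
        current = max_pool_1d(current, k)
        outputs.append(current)
    return outputs


if __name__ == "__main__":
    row = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2]
    print(spp_branches(row) == sppf_branches(row))
```

Each chained pass only scans a window of 5 values, whereas the parallel version scans windows of 5, 9, and 13 values, so the chained form does less work for the same result.
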

Such small smoke is more difficult to capture through feature fusion than typical smoke that has already formed, i.e., it may be filtered out. For this reason, we add CARAFE upsampling, which helps the network perceive a wider range of contextual information by expanding the receptive field of the model and improves feature representation through contextual fusion, so that these fine features can be extracted and fused. Finally, we replace the original localization loss with the SIoU loss function, which takes the angle between the predicted and ground-truth boxes into account. This not only allows fast convergence during training, which improves the model's accuracy, but also allows fast NMS screening during detection so that smoke is located more quickly and accurately, which is essential for the fast detection of forest fire smoke. The final experimental results on the constructed dataset show that the proposed model achieves an mAP@0.5 of 80.2%, an FPS of 63.39, and a total of 7.96 M parameters. Compared to the baseline, the proposed model shows comprehensive improvements. Furthermore, when compared to other detectors of the same class, it achieves the best performance on all indicators. Its lighter weight and better detection performance make it more deployable in the practical task of detecting forest fire smoke. In addition, we note the important role of sensors in fire detection tasks. Abeer D. Algarni et al. [43] compared multiple sensors for wildfire detection. The advantages and limitations they report have inspired us to consider using sensors, such as thermal infrared remote sensors, to improve the detection of forest fire smoke from multiple dimensions in our later studies.
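
As an illustration of how an angle-aware box loss such as SIoU is composed, the sketch below follows the generally published SIoU formulation: one minus the IoU, plus half the sum of a distance cost (modulated by an angle cost) and a shape cost. It is not the training code used for this model. The shape exponent `theta = 4`, the stabilizer `eps`, and the function name are assumptions made for the example.

```python
import math


def siou_loss(pred, target, theta=4.0, eps=1e-9):
    """SIoU-style loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Plain IoU.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Smallest enclosing box and offsets between box centres.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    dx = (tx1 + tx2 - px1 - px2) / 2.0
    dy = (ty1 + ty2 - py1 - py2) / 2.0
    sigma = math.hypot(dx, dy) + eps

    # Angle cost: 0 when the centres are axis-aligned, 1 at 45 degrees.
    sin_alpha = min(abs(dy) / sigma, 1.0)
    angle = 1.0 - 2.0 * math.sin(math.asin(sin_alpha) - math.pi / 4.0) ** 2

    # Distance cost, weighted by the angle cost through gamma.
    gamma = 2.0 - angle
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance = (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))

    # Shape cost: penalizes width and height mismatch.
    omega_w = abs(pw - tw) / (max(pw, tw) + eps)
    omega_h = abs(ph - th) / (max(ph, th) + eps)
    shape = (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (distance + shape) / 2.0


if __name__ == "__main__":
    truth = (0.0, 0.0, 10.0, 10.0)
    for box in [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]:
        print(box, round(siou_loss(box, truth), 4))
```

A perfectly matching box gives a loss close to zero, and the loss grows as the predicted box drifts away from the ground truth, even after the boxes stop overlapping and the IoU term alone is flat.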

6. Future Work

Our experimental results demonstrate that the model proposed in this paper has a wide range of applications. On the one hand, it can be installed on drones and watchtowers equipped with video surveillance, where it can be used for the real-time prediction of incipient fires or fires that have not yet occurred; on the other hand, it can also be installed on fire cameras to observe and describe the development of fires that have already occurred, providing a reference for the rescue work of firefighters. In future research, we will further explore its integration with other monitoring equipment.

In the field of forest fire detection, wildfire detection based on satellite imagery has a deep research foundation [44,45], but it also has some shortcomings. For instance, because satellite images usually cover a large area, large-scale fires are easy to detect, whereas the features of smoke in the early stage of a fire, especially small smoke, are not, and it is crucial for forest fires to be extinguished as early as possible. Our model has good potential for addressing these issues. Firstly, our model performs excellently when detecting small smoke and smoke with smoke-like interference. Secondly, our model is designed to be lightweight and suitable for resource-constrained environments, such as emergency response sites or platforms such as UAVs. This makes our model easy to deploy and to integrate into existing satellite-imagery-based wildfire detection systems.

Certainly, the model proposed in this article also has some limitations. The model mainly focuses on detecting forest smoke during the daytime, and the dataset used consists mostly of daytime images. However, the risk of forest fires at night is also high. Therefore, in our next study, we will incorporate nighttime forest smoke data to improve the model's generalization ability and broaden its applicability.