1. Introduction

Crop above-ground biomass (AGB) refers to the organic matter that crops accumulate during growth, and it is closely related to photosynthesis, nutrient uptake, and climate [1]. Measuring crop AGB plays a crucial role in assessing crop growth status, determining fertilization requirements, detecting pests and diseases promptly, and predicting yield [2]. Traditional manual sampling methods are reliable, but they are expensive, destructive, and limited in the number of sampling points. Consequently, they suit only small agricultural areas and cannot meet the demand for quantitative monitoring of crop AGB over larger regions [3]. More efficient, cost-effective, and dependable methods for acquiring timely crop AGB information are therefore needed.

Remote sensing technology collects crop canopy reflectance information from a distance, without direct contact, and analyzing this information allows crop growth to be monitored non-destructively [4]. Compared with ground surveys, remote sensing offers real-time, non-destructive, and large-scale estimation of crop AGB [5]. Unmanned aerial vehicle (UAV) remote sensing has proven effective for crop growth monitoring because it is affordable, easy to use, and provides high temporal and spatial resolution [6]. The primary sensors carried by UAVs are RGB digital cameras, multispectral sensors, hyperspectral sensors, and LiDAR sensors [7,8]. Although multispectral, hyperspectral, and LiDAR sensors offer greater accuracy and versatility, their high cost and complexity limit their widespread application [9]. RGB digital cameras, in contrast, are preferred for crop growth monitoring because they are inexpensive and lightweight, and they offer high spatial resolution and simple data processing [10,11].

Numerous studies have demonstrated that the AGB of wheat, maize, and rice can be estimated from vegetation indices (VIs) calculated from UAV RGB images [12,13,14]. However, VIs alone capture little information about the vertical structure of crop organs, which limits estimation accuracy [15]. To address this limitation, some studies have combined VIs with crop height (CH) [16]. CH describes the vertical structure of crops, and its variations reflect crop health and nutritional status, which aids AGB estimation [17]. UAV RGB images can be stitched together to generate a digital surface model (DSM), from which a crop height model, and hence CH, can be derived [18]. Crop height models based on UAV remote sensing can provide accurate CH, and combining them with VIs offers a novel approach for in-field estimation of crop AGB.
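The two feature types combined in the paragraph above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure used in this study: it assumes per-pixel red, green, and blue digital numbers for one plot, plus two co-registered elevation rasters, a DSM of the crop canopy and a digital terrain model (DTM) of the bare ground (for example, from an early-season flight). Excess Green (ExG) and the Visible Atmospherically Resistant Index (VARI) stand in for RGB VIs in general; CH is computed as DSM minus DTM, clipped at zero. The function names and the plot-level aggregation (mean and 90th percentile) are hypothetical.

```python
import math
from statistics import mean


def exg(r: float, g: float, b: float) -> float:
    """Excess Green on chromatic coordinates: 2g - r - b, with r, g, b normalized by R+G+B."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no colour information
    return (2 * g - r - b) / total


def vari(r: float, g: float, b: float) -> float:
    """Visible Atmospherically Resistant Index: (G - R) / (G + R - B)."""
    denom = g + r - b
    return 0.0 if denom == 0 else (g - r) / denom


def crop_height(dsm: list[float], dtm: list[float]) -> list[float]:
    """Crop height model: canopy surface (DSM) minus bare ground (DTM), clipped at 0 m."""
    return [max(s - t, 0.0) for s, t in zip(dsm, dtm)]


def percentile(values: list[float], q: float) -> float:
    """Nearest-rank percentile for 0 < q <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def plot_features(pixels, dsm, dtm) -> dict[str, float]:
    """Hypothetical plot-level feature vector from one plot's pixels and elevation rasters."""
    ch = crop_height(dsm, dtm)
    return {
        "ExG_mean": mean(exg(*p) for p in pixels),
        "VARI_mean": mean(vari(*p) for p in pixels),
        "CH_mean": mean(ch),
        "CH_p90": percentile(ch, 90),
    }


# Toy plot: three pixels as (R, G, B) digital numbers, elevations in metres.
features = plot_features(
    pixels=[(60, 120, 40), (70, 110, 50), (80, 100, 60)],
    dsm=[101.20, 101.35, 101.10],
    dtm=[100.50, 100.55, 100.60],
)
print(features)
```

Clipping negative heights at zero is a design choice for bare-soil pixels where DSM noise falls below the terrain model; upper percentiles of CH are often preferred to the mean because they are less affected by gaps in the canopy.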

Although VIs are commonly used to estimate crop AGB, their accuracy can be affected by factors such as soil background, illumination conditions, and weather [19]. Moreover, VIs tend to lose sensitivity during the reproductive growth stage, which limits their effectiveness as standalone estimators of crop AGB. Texture features, by contrast, are less susceptible to these factors and can provide valuable high-frequency information about crop growth status, including leaf morphology, distribution density, and leaf arrangement [20]. Texture features describe how pixel gray levels vary within a local neighborhood, so they reflect the spatial distribution of vegetation in the image and its relationship with the surrounding environment [21]. Combining VIs with texture features therefore shows great potential for improving the accuracy of crop AGB estimation.
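One widely used way to turn this neighborhood variation into numbers is the gray-level co-occurrence matrix (GLCM), which counts how often pairs of gray levels occur at a fixed pixel offset. The introduction does not state which texture measures the study uses, so the following is only a sketch under assumptions: GLCM as the texture method, 8 gray levels, a single horizontal offset, and hypothetical function names. It computes a symmetric, normalized GLCM and several Haralick-style statistics from it.

```python
import math
from collections import Counter


def quantize(band: list[list[int]], levels: int = 8, max_value: int = 255) -> list[list[int]]:
    """Map 0..max_value digital numbers onto gray levels 0..levels-1."""
    return [[min(levels - 1, v * levels // (max_value + 1)) for v in row] for row in band]


def glcm(image: list[list[int]], levels: int, dx: int = 1, dy: int = 0) -> list[list[float]]:
    """Symmetric, normalized gray-level co-occurrence matrix for the pixel offset (dx, dy)."""
    counts: Counter = Counter()
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # count the reverse pair too, making the matrix symmetric
    total = sum(counts.values())
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the patch")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def texture_features(p: list[list[float]]) -> dict[str, float]:
    """Common Haralick-style statistics of a normalized, symmetric GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mu = sum(i * v for i, _, v in cells)               # GLCM mean (same for rows and columns)
    var = sum((i - mu) ** 2 * v for i, _, v in cells)  # GLCM variance
    return {
        "mean": mu,
        "variance": var,
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "second_moment": sum(v * v for _, _, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        # Correlation is undefined for a constant patch; report 1.0 in that case.
        "correlation": (sum((i - mu) * (j - mu) * v for i, j, v in cells) / var) if var > 0 else 1.0,
    }


# Toy 3x4 patch of 8-bit digital numbers (e.g., one band of an RGB orthomosaic).
patch = quantize([[0, 40, 90, 200], [10, 60, 120, 220], [30, 80, 150, 250]], levels=8)
print(texture_features(glcm(patch, levels=8)))
```

A uniform canopy yields zero contrast and maximal homogeneity, whereas an alternating pattern of leaves and gaps raises contrast and entropy, which is the kind of structural signal VIs alone do not carry. In practice, features are usually computed in a moving window and averaged over several offsets and directions.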

In UAV remote sensing, flight height is a crucial factor that determines image resolution and quality [22]. In current studies estimating crop growth parameters, flight heights typically range from 30 to 100 m, corresponding to image resolutions of 1 to 10 cm [5,23]. However, flight heights and the corresponding image resolutions are usually chosen subjectively, without unified standards or guidelines. This lack of standardization hinders the application and wider adoption of crop growth parameter inversion models based on UAV remote sensing. Evaluating how images acquired at different flight heights affect crop AGB estimation is therefore important for formulating standardized guidelines for UAV image acquisition.
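The link between flight height and image resolution is usually expressed through the ground sampling distance (GSD), the ground width covered by one pixel. For a nadir-pointing camera over flat terrain, GSD = sensor width × flight height / (focal length × image width in pixels), so GSD grows linearly with height. The sketch below evaluates this formula; the camera parameters are hypothetical and are not those of the camera used in this study.

```python
def ground_sampling_distance_cm(height_m: float, sensor_width_mm: float,
                                focal_length_mm: float, image_width_px: int) -> float:
    """Nadir GSD in cm/pixel: sensor width x flight height / (focal length x image width)."""
    # sensor_width_mm / focal_length_mm is unitless, so this is the ground swath in metres.
    swath_m = sensor_width_mm * height_m / focal_length_mm
    return swath_m / image_width_px * 100  # metres per pixel -> centimetres per pixel


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472-pixel-wide images.
for height in (30, 50, 100):
    gsd = ground_sampling_distance_cm(height, 13.2, 8.8, 5472)
    print(f"{height:>3} m -> {gsd:.2f} cm/pixel")
```

With these assumed parameters the GSD rises from about 0.82 cm at 30 m to about 2.74 cm at 100 m. The resolution reached at a given height therefore depends on the camera as well as the flight height, which is one reason reported height and resolution ranges vary between studies.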

Continuous progress in artificial intelligence and computer science has driven the development of machine learning algorithms and their widespread application in agricultural remote sensing data analysis [24]. Deep learning, a branch of machine learning, has grown rapidly. Artificial neural networks (ANN) and convolutional neural networks (CNN) are two well-known deep learning approaches, each with distinct advantages, that have been applied successfully in many fields. ANN excels at capturing complex nonlinear relationships in data and, with appropriate training, can generalize well to unseen samples [25]. It handles high-dimensional datasets effectively, making it suitable for tasks such as image recognition and natural language processing. CNN, by contrast, is designed specifically for image processing and extracts spatial features from images efficiently [26]. Its convolutional layers detect patterns and structures automatically, giving it remarkable performance in object detection and image classification. Despite this promise, ANN and CNN also have limitations. Compared with traditional machine learning algorithms, deep learning algorithms typically require large amounts of labeled training data and longer training times, and their complex architectures and hyperparameter tuning pose challenges for interpretability and computational resources [7].

Traditional machine learning algorithms, on the other hand, offer advantages in interpretability, training speed, performance on small samples, and ease of parameter optimization. Algorithms such as Random Forest Regression (RFR), Gradient Boosting Regression Trees (GBRT), and Support Vector Regression (SVR) are currently widely used to estimate crop growth parameters, for example to monitor maize leaf area index, estimate soybean yield, and assess wheat nitrogen nutrition status [7,27,28]. Because each algorithm operates on its own principles, different algorithms applied to the same dataset may yield different results; understanding these principles helps researchers choose algorithms that improve the accuracy and reliability of crop growth parameter estimation.

Beyond algorithm selection, feature importance analysis and hyperparameter tuning are essential steps. Feature importance analysis quantifies the contribution of each input feature to the model's predictions, revealing the structure of the data and enhancing model interpretability [29]. Appropriate hyperparameter selection improves model accuracy and stability, helps avoid overfitting or underfitting, and makes efficient use of computational resources [18]. Both steps are therefore critical for optimizing the performance and interpretability of machine learning models and for their practical application.
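Hyperparameter tuning and feature importance analysis can both be illustrated without a specific library. The sketch below is an assumption-laden illustration, not this study's workflow: it uses a k-nearest-neighbours regressor purely as a lightweight stand-in for RFR, GBRT, or SVR (it is not one of those algorithms), selects the neighbour count k by grid search on 5-fold cross-validated root-mean-square error (RMSE), and measures permutation importance, that is, the rise in RMSE when one feature column is randomly shuffled. All function names and the toy data are hypothetical. In practice, scikit-learn provides equivalent tools (`GridSearchCV` in `sklearn.model_selection` and `permutation_importance` in `sklearn.inspection`).

```python
import math
import random


def knn_predict(train_X, train_y, x, k):
    """k-NN regression: mean target of the k training samples closest to x."""
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def cv_rmse(X, y, k, folds=5, seed=0):
    """Mean RMSE of a k-NN model across shuffled k-fold cross-validation."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for f in range(folds):
        test = idx[f::folds]  # every folds-th shuffled sample forms one held-out fold
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        tX, ty = [X[i] for i in train], [y[i] for i in train]
        preds = [knn_predict(tX, ty, X[t], k) for t in test]
        scores.append(rmse([y[t] for t in test], preds))
    return sum(scores) / folds


def grid_search(X, y, grid):
    """Hyperparameter value from `grid` with the lowest cross-validated RMSE."""
    return min(grid, key=lambda k: cv_rmse(X, y, k))


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean rise in RMSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = rmse(y, [predict(x) for x in X])
    importances = []
    for j in range(len(X[0])):
        rises = []
        for _ in range(n_repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)
            X_perm = [[v if c == j else xc for c, xc in enumerate(x)] for x, v in zip(X, column)]
            rises.append(rmse(y, [predict(x) for x in X_perm]) - baseline)
        importances.append(sum(rises) / n_repeats)
    return importances


# Toy data: columns stand for a VI, CH (m), and an irrelevant feature; the target ignores the third.
X = [[i / 10, (i * 7 % 20) / 10, (i * 3 % 20) / 10] for i in range(20)]
y = [2 * vi + 3 * ch for vi, ch, _ in X]

best_k = grid_search(X, y, grid=[1, 3, 5])


def model(x):
    return knn_predict(X, y, x, best_k)


print("best k:", best_k)
print("importances:", permutation_importance(model, X, y))
```

Because k-NN uses every feature in its distance metric, even the irrelevant third column can receive nonzero importance here; permutation importance reports how much the fitted model relies on a feature, not whether the feature is truly related to AGB. In a real study, importance would normally be computed on held-out data rather than on the training samples.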

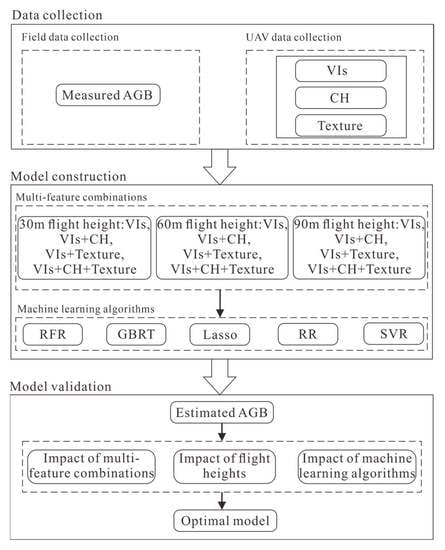

In summary, many previous studies on wheat AGB estimation have relied mainly on simple indicators such as VIs, which cannot accurately capture crop canopy structure and therefore yield low estimation accuracy. In addition, flight height and the choice of machine learning algorithm can influence the estimation results. To address these issues, this study adopted two approaches. First, it proposed a comprehensive feature-based estimation method that integrates traditional VIs with CH and texture features to reflect wheat AGB more accurately. Second, it explored how different flight heights and multiple machine learning algorithms affect estimation accuracy, thereby broadening the choice of UAV flight heights and methods for wheat AGB estimation. Accordingly, the study tests three hypotheses: (1) because integrating VIs, CH, and texture features reflects wheat growth status more completely, combining these features improves estimation accuracy; (2) because different flight heights capture the wheat canopy structure in different detail, estimation accuracy decreases as flight height increases; and (3) because machine learning algorithms rely on different principles, the choice of algorithm significantly affects wheat AGB estimation accuracy. By testing these methods and hypotheses, this study aims to provide new approaches and insights for accurately estimating wheat AGB and to open new avenues for precision agricultural management based on UAV remote sensing.