1. Introduction

Remote sensing has emerged as a powerful tool for gathering data and information about the ground’s surface from a long distance. Recent developments in deep learning have revolutionized the field of remote sensing, providing researchers with new and sophisticated methods for image processing and interpretation. Among these methods, classification [1,2,3,4,5], object detection [6,7,8,9,10,11,12], change detection [13,14,15,16] and semantic segmentation [17,18,19] are some of the most popular uses for deep learning-based remote sensing, which have significantly accelerated the realization of related applications in the fields of environmental monitoring, traffic security and national defense.

As typical remote sensing targets, aircraft are important transportation carriers and military targets. The accurate detection of aircraft targets plays a vital role in diverse applications, such as air transportation, emergency rescue and military surveillance. Compared to other specific targets, such as ships and constructions [20,21], aircraft are more difficult to detect in remote sensing images, mainly because of their small size, dense distribution and complex backgrounds. Therefore, aircraft detection is an important and challenging task within the field of remote sensing.

Over the past few years, researchers have been striving to develop efficient and accurate methods for aircraft detection, which can be broadly categorized into two groups based on the techniques used: bounding box regression and landmark detection. Bounding box regression methods employ general detection models, such as the R-CNN series [22,23,24] and the YOLO series [25,26,27], to extract features by selecting a large number of region proposals for subsequent regression and classification. In contrast, landmark detection-based methods first locate different types of landmarks on objects and then form detection boxes based on these landmarks, which can make better use of the structural characteristics of aircraft.

However, aircraft detection has some characteristics that are different from those of general object detection. Firstly, as shown in Figure 1, aircraft targets in remote sensing images are usually densely arranged in airports and are prone to interference between different targets. Secondly, the head, wings and tail of an aircraft contain strong structural information that is crucial for accurate detection. Finally, the fine-grained classification of aircraft categories [28] relies heavily on the structural information of aircraft. The existing approaches [29,30,31,32,33] do not fully leverage these features, despite some improvements in aircraft detection methods. For example, bounding box regression-based methods suffer from irrelevant background interference within the rectangular anchor boxes, while implicit feature extraction fails to make full use of the strong structural information of aircraft targets. Moreover, in the case of a large number of closely spaced targets, landmark-based methods are prone to errors when grouping keypoints. Therefore, it is necessary to develop new and more effective methods for aircraft detection that can overcome these challenges and take full advantage of the unique characteristics of aircraft targets.

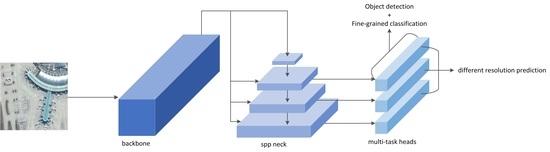

To address the characteristics of aircraft detection and the problems of existing algorithms, we propose an aircraft detection algorithm called Aircraft-LBDet (aircraft landmark and bounding box detection), which is a multi-task algorithm model combining bounding box detection and landmark detection. The bounding box-based approach can effectively compensate for the grouping errors of landmark detection in the case of dense arrangement, thus improving the accuracy of detection. By adding landmark supervision information to the bounding box-based approach, the structural features of aircraft can be fully utilized to provide more detailed position information for bounding box localization, which is helpful for subsequent fine-grained classification tasks. We can achieve more accurate aircraft detection by combining bounding box detection with landmark supervision. In this paper, we present our method and evaluate its performance on relevant remote sensing datasets. The experimental results demonstrated that the proposed method outperformed existing state-of-the-art algorithms and was robust to various environmental conditions.

The main contributions of our method are as follows:

(1) We propose a multi-task joint training method for remote sensing aircraft detection, within which landmark detection provides stronger semantic structural features for bounding box localization in dense areas, which helps to improve the accuracy of aircraft detection and recognition;

(2) We propose a multi-task joint inference algorithm, within which landmarks provide more accurate supervision for the NMS filtering of bounding boxes, thus substantially reducing post-processing complexity and effectively reducing false positives;

(3) We optimize the landmark loss function for more effective multi-task learning, thereby further improving the accuracy of aircraft detection.

In the rest of this paper, we first review the related work in the field of remote sensing and identify the gaps that our proposed method aims to address. Next, we describe our proposed method and explain how it differs from existing approaches. Then, we present the results of our experiments, including comparisons to existing methods and ablation studies. Finally, we summarize our findings and discuss the implications of our work for future research within the remote sensing field.

4. Results

In this section, we describe the experiments related to our proposed approach. We start by describing the dataset used for our experiments, followed by details of the implementation. Then, we discuss the evaluation metrics that were employed to measure the performance of our method. Subsequently, we compare our method to other state-of-the-art techniques on the same dataset. Furthermore, we conduct ablation experiments to analyze the contributions of the individual components of our approach. Finally, we provide visualizations of our results and discuss our findings regarding the fine-grained performance.

4.1. Dataset

During the experimental stage, we chose the UCAS-AOD dataset [58] to validate the performance of our model. The UCAS-AOD is a dataset comprising aerial images for object detection in remote sensing applications, which was developed by the University of the Chinese Academy of Sciences (UCAS) and contains high-resolution images captured by an unmanned aerial vehicle (UAV) over a university campus. The images have a resolution of 0.1 m per pixel and cover an area of approximately 2.4 square kilometers.

The UCAS-AOD dataset is annotated with ground truth object bounding boxes for three object classes: cars, buildings and trees. The annotations were performed manually by experts in remote sensing and computer vision. The dataset has been extensively utilized to demonstrate the effectiveness of object detection algorithms for aerial images, particularly those relying on deep CNNs. There are 648 images for training, including 4720 instances, and 432 images for testing, including 3490 instances. During the experiments, the original image input size was cropped to 832 × 832.

4.2. Implementation Details

We conducted our experiments using the PyTorch 1.8.1 framework on an NVIDIA GeForce GTX 1080 GPU. We adopted YOLOv5-4.0 as our starting point and implemented modifications that were specific to our research. We used a batch size of 16 and trained for 200 epochs with an SGD optimizer. We set the initial learning rate to and the final learning rate to in order to effectively optimize the model performance over time. Furthermore, a momentum value of 0.8 was used for the first five warm-up epochs, subsequently transitioning to 0.9 for later steps to enhance the stability and computational efficiency of the training process. To increase the robustness of our proposed model and improve its generalizability, we also augmented the dataset through a range of techniques, including flipping, rotation and random cropping.
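
The momentum warm-up described above can be illustrated with a minimal sketch. It assumes a linear ramp from 0.8 to 0.9 over the five warm-up epochs, which is a common choice in YOLOv5-style training; the text could equally be read as a step change after the fifth epoch. The function name `warmup_momentum` and its parameters are hypothetical and are not taken from our implementation.

```python
def warmup_momentum(epoch: float,
                    warmup_epochs: float = 5.0,
                    start: float = 0.8,
                    final: float = 0.9) -> float:
    """Return the SGD momentum for a given (possibly fractional) epoch.

    Assumption: momentum rises linearly from `start` to `final` during
    the warm-up period and stays at `final` afterwards.
    """
    if epoch >= warmup_epochs:
        return final
    frac = max(epoch, 0.0) / warmup_epochs  # progress through warm-up, in [0, 1)
    return start + frac * (final - start)


# Momentum at the start, middle and end of the warm-up, and later in training.
for e in (0, 2.5, 5, 100):
    print(e, round(warmup_momentum(e), 3))
```

In a PyTorch training loop, the returned value would typically be written into each parameter group of the optimizer (`param_group["momentum"]`) before the update step.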

4.3. Comparison Experiments

We set up comparative experiments in several areas to demonstrate the validity of our proposed model.

Comparison of our model to other generic detection models.

The proposed Aircraft-LBDet method was compared with several advanced object detection methods on the UCAS-AOD dataset. The results are presented in Table 1. The proposed method achieved an AP of 0.904, outperforming all of the compared methods. Specifically, it outperformed Faster R-CNN, SSD, CornerNet, YOLOv3, RetinaNet+FPN and YOLOv5s by 4.5%, 0.8%, 13.9%, 4%, 0.3% and 4.5%, respectively. In addition to its higher accuracy, the proposed method was also highly efficient. YOLOv5s, which is known for its efficient real-time detection, achieved a frame rate of 80.6 FPS; Aircraft-LBDet achieved a frame rate of 94.3 FPS, which was 17.0% higher than that of YOLOv5s. Overall, these results demonstrated that the proposed Aircraft-LBDet method is both effective and efficient for object detection, particularly for aircraft detection.
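
The 17.0% figure is a relative gain in frame rate, which can be checked with a short calculation. The helper name `relative_gain` is illustrative only.

```python
def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


# Frame rates reported in the text: Aircraft-LBDet vs. YOLOv5s.
fps_gain = relative_gain(94.3, 80.6)
print(f"{fps_gain:.1f}%")  # prints 17.0%
```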

Comprehensive comparison of multiple indicators between our approach and the baseline.

Table 2 presents a comparison between the Aircraft-LBDet method and the baseline YOLOv5s method, evaluated from several aspects. In terms of the average aircraft detection precision, Aircraft-LBDet performed slightly better than the baseline when the threshold was varied from 0.5 to 0.95. Additionally, the number of parameters of Aircraft-LBDet was significantly smaller than that of YOLOv5s. The false alarm rate (FA) is an important evaluation metric, and the table shows that Aircraft-LBDet had a 39.7% lower false alarm rate than the baseline method. The F1 score, which measures the balance between precision and recall, was also significantly higher for Aircraft-LBDet than for the baseline. Therefore, the results indicated that Aircraft-LBDet outperforms the baseline in terms of both accuracy and efficiency.
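
For reference, the metrics discussed here can be computed from detection counts as in the following sketch. Precision, recall and F1 follow their standard definitions. The false alarm rate is taken to be FP / (TP + FP), which is an assumption, since its exact definition is not given in this section. The counts in the example are hypothetical and are not results from our experiments.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Compute precision, recall, F1 and false alarm rate from counts.

    tp: true positives, fp: false positives, fn: missed targets.
    Assumption: false alarm rate = FP / (TP + FP), i.e. 1 - precision.
    """
    detections = tp + fp
    targets = tp + fn
    precision = tp / detections if detections else 0.0
    recall = tp / targets if targets else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    false_alarm = fp / detections if detections else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "false_alarm": false_alarm}


# Hypothetical counts, for illustration only.
metrics = detection_metrics(tp=90, fp=10, fn=10)
print({k: round(v, 3) for k, v in metrics.items()})
```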

Comparison of other aircraft detection methods.

In the field of aircraft detection, numerous studies have explored models for improving detection accuracy [60,61,62,63,64]. We conducted comparative experiments between our model and other aircraft detection models. The results are summarized in Table 3. Our approach outperformed all of the other methods, including FR-O [22], ROI-trans [60], FPN-CSL [61], Det-DCL [62], P-RSDet [63] and DARDet [64], which had AP scores ranging from 0.834 to 0.903. Our method achieved an AP score of 0.904, which was 0.001 higher than the best-performing of these methods. Moreover, our approach used the smallest backbone (CSP-ResBlock) compared to the ResNet-50 and ResNet-101 backbones of the other methods, which indicates an advantage in terms of computational efficiency. We attribute this performance to the re-designed CSP-ResBlock architecture, which balances the accuracy and efficiency of the model. It combines the advantages of a residual block and a cross-stage partial (CSP) structure, which allows the model to capture more useful features from input images. Together, these results indicate the effectiveness of our re-designed CSP-ResBlock architecture and collaborative learning detection head.

4.4. Ablation Experiments

The detection of aircraft in remote sensing images is a challenging task that requires the accurate localization of aircraft landmarks and bounding boxes. Our proposed method addresses this task by integrating several modules and loss functions. To evaluate the contribution of each component, we conducted a series of ablation experiments on the UCAS-AOD dataset.

The ablation experiments comprised a quantitative analysis of the different modules and loss functions to determine their contributions to the overall performance of our proposed method. The results demonstrated that the landmark box loss function enhanced the detection performance of the algorithm by constraining the relationships between landmarks and bounding boxes. Consequently, the algorithm’s detection performance was boosted by 4.9%.

Building on this initial improvement, we specifically added the CSP-NB module to improve the network’s feature extraction ability by deepening the network channel, which improved the detection performance of the algorithm by 4.2%. We then incorporated a P-Stem module to improve the average precision (AP) of the algorithm by 1.6%. Finally, we added a central-constraint NMS operation to further increase the detection precision of the algorithm by 0.2%. The ablation study results are listed in Table 4, where each row corresponds to a different combination of modules and loss functions.
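
As background for the NMS step, the following sketch shows standard greedy IoU-based NMS in plain Python. It is the generic baseline procedure only: the central constraint used in our method is not specified in this section and is therefore not implemented here. The function names and the threshold value are illustrative assumptions.

```python
def iou(a, b) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thresh: float = 0.5):
    """Return indices of kept boxes, highest score first.

    A box is kept only if its IoU with every already-kept box
    does not exceed `iou_thresh`.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box (hypothetical values).
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # prints [0, 2]
```

A landmark-aware variant would add a further test inside the loop, for example on the positions of predicted keypoints, before a candidate is kept or suppressed.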

Overall, the ablation experiments indicated the effectiveness of our proposed method for detecting aircraft. By integrating different modules and loss functions, we were able to achieve a significant improvement in the detection performance of the algorithm. Our proposed method could be used for various applications, including aerial surveillance, border patrol and disaster response. Further research should explore the possibility of our method being used in other domains and applications.

4.5. Visualization

This paper proposes an innovative method for the precise detection of dense objects in large-scale remote sensing imagery. In particular, the proposed method leverages highlight detection and keypoint detection to realize dense object detection and fine-grained classification. In this section, we present some example predictions (Figure 7 and Figure 8) to demonstrate the effectiveness and superiority of our method.

Figure 7 and Figure 8 show two densely arranged scenes from large-size remote sensing imagery at different resolutions. From these figures, we can see that our proposed method accurately detected the aircraft and precisely identified their positions, thereby achieving dense object detection. The results showed that our method is capable of handling complex and cluttered environments and could be a promising approach for real-world applications.

Overall, the proposed method achieved a significant improvement over existing methods for the detection of dense objects in large-scale remote sensing imagery. By leveraging highlight detection and keypoint detection, our approach offers a more accurate and precise solution for a wide range of real-world scenarios.

5. Discussion

In Figure 9, we demonstrate that our proposed approach can identify specific types of aircraft, including the MD-90, A330, Boeing 787, Boeing 777, ARJ21 and Boeing 747, by calculating aircraft dimensions from the detected keypoints. Different points represent different parts of an aircraft. The purple point represents the head, while the green and yellow points represent the left and right wings, respectively. The red and blue points represent the left and right sides of the tail, respectively. This fine-grained classification is achieved by utilizing highlight-detected keypoints, which enables the proposed method to accurately distinguish between different types of aircraft.
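
To illustrate how physical dimensions can be derived from the five keypoints, the following sketch converts pixel coordinates to metres. It rests on several assumptions that are not stated in the text: the wingspan is taken as the distance between the two wing keypoints, the fuselage length as the distance from the head to the midpoint of the two tail keypoints, and the ground sampling distance (`gsd_m_per_px`) is known. The function name, dictionary keys and example coordinates are hypothetical.

```python
import math


def aircraft_dimensions(kps: dict, gsd_m_per_px: float):
    """Estimate (wingspan_m, fuselage_length_m) from five keypoints.

    kps maps 'head', 'left_wing', 'right_wing', 'left_tail' and
    'right_tail' to (x, y) pixel coordinates.
    """
    wingspan_px = math.dist(kps["left_wing"], kps["right_wing"])
    lt, rt = kps["left_tail"], kps["right_tail"]
    tail_mid = ((lt[0] + rt[0]) / 2, (lt[1] + rt[1]) / 2)
    length_px = math.dist(kps["head"], tail_mid)
    return wingspan_px * gsd_m_per_px, length_px * gsd_m_per_px


# Hypothetical keypoints for one aircraft, in pixels.
keypoints = {
    "head": (50, 0),
    "left_wing": (20, 50),
    "right_wing": (80, 50),
    "left_tail": (45, 100),
    "right_tail": (55, 100),
}
wingspan_m, length_m = aircraft_dimensions(keypoints, gsd_m_per_px=0.5)
print(wingspan_m, length_m)  # prints 30.0 50.0
```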

Furthermore, we calculated the wingspan and fuselage length of each aircraft category and compared them to the corresponding reference data on Wiki. The results of this comparison are shown in Table 5. The comparison between the measured and reference data for the aircraft categories was conducted to aid the fine-grained classification. The results suggested that the measured wingspans and fuselage lengths tended to be slightly larger than the reference values, by 5–15%, because of the angle and inclination of the samples during measurement. Within each category, the measured data were consistently close to one another, which showed that length is a reliable parameter for fine-grained classification. Therefore, the focus on wingspan and fuselage length provides a more comprehensive understanding of aircraft categories and better fine-grained classification performance.
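
The comparison with reference dimensions can be sketched as follows. The relative deviation is standard arithmetic. The nearest-reference matching rule is an assumption about how such measurements could be used for classification and is not a description of our procedure. The reference entries `type_a` and `type_b` are placeholders and not real aircraft data.

```python
def relative_deviation(measured: float, reference: float) -> float:
    """Deviation of a measured length from its reference, in percent."""
    return (measured - reference) / reference * 100.0


def nearest_type(wingspan: float, length: float, references: dict) -> str:
    """Return the reference type with the smallest summed relative error.

    references maps a type name to (wingspan_m, fuselage_length_m).
    """
    def cost(name: str) -> float:
        ref_w, ref_l = references[name]
        return abs(wingspan - ref_w) / ref_w + abs(length - ref_l) / ref_l

    return min(references, key=cost)


# Placeholder reference dimensions in metres (not real aircraft data).
references = {"type_a": (30.0, 40.0), "type_b": (60.0, 70.0)}

# A measurement that is somewhat larger than the reference for type_a.
print(round(relative_deviation(33.0, 30.0), 1))  # prints 10.0
print(nearest_type(33.0, 43.0, references))      # prints type_a
```

Because the measured values tend to exceed the reference values by a fairly consistent margin, a systematic correction factor could be applied before matching; this is left out of the sketch.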