An Accurate Forest Fire Recognition Method Based on Improved BPNN and IoT

Abstract

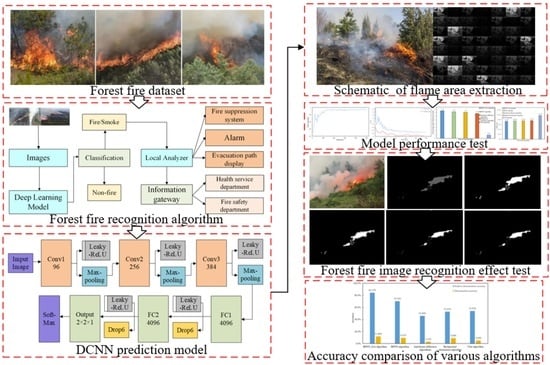

1. Introduction

2. Materials and Methods

2.1. The Dataset Description

2.2. Network Structure

2.3. BPNNFire Algorithm

- The examined window is divided into cells of 16 × 16 pixels;

- Each pixel in a cell is compared with each of its eight neighboring pixels;

- If the neighbor's value is smaller than the center pixel's value, the corresponding bit is set to 0; otherwise, it is set to 1, generating an 8-bit binary number;

- The frequency histogram of the resulting numbers is calculated over the entire cell;

- The histogram of each cell can be normalized as the use case requires, and the normalized histograms of all cells are concatenated to obtain the feature vector.
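The steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the function names `lbp_code` and `lbp_features`, the clockwise neighbor ordering, the choice to skip border pixels, and the normalization by cell area are all assumptions made for the example.

```python
# Neighbor offsets (row, col), clockwise from the top-left.
# The bit ordering is an assumption; any fixed ordering works.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """Return the 8-bit code of the interior pixel img[r][c]."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        # A neighbor smaller than the center contributes 0, otherwise 1.
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_features(img, cell=16):
    """Concatenate normalized 256-bin histograms, one per cell x cell block."""
    height, width = len(img), len(img[0])
    # Border pixels lack a full neighborhood, so codes cover interior pixels only.
    codes = [[lbp_code(img, r, c) for c in range(1, width - 1)]
             for r in range(1, height - 1)]
    features = []
    for top in range(0, len(codes) - cell + 1, cell):
        for left in range(0, len(codes[0]) - cell + 1, cell):
            hist = [0] * 256
            for row in codes[top:top + cell]:
                for code in row[left:left + cell]:
                    hist[code] += 1
            # Normalize so that each cell histogram sums to 1.
            features.extend(count / (cell * cell) for count in hist)
    return features


# A flat 34 x 34 image has a 32 x 32 code map, i.e. four 16 x 16 cells.
flat = [[7] * 34 for _ in range(34)]
print(len(lbp_features(flat)))  # 1024
```

On a flat image every neighbor equals the center, so every code is 255 and each cell histogram puts all of its mass in the last bin.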

2.4. Construction of the DCNN Prediction Model

- (1) Convolutional layer

- (2) Sampling layer

- (3) DCNN model

3. Results

3.1. Performance Comparison Test and Analysis of the BPNN Algorithm

3.1.1. Model Performance Test

3.1.2. Forest Fire Image Recognition Effect Test

3.2. Internet of Things Monitoring System Test and Analysis

3.2.1. System Packet Loss Rate Test

3.2.2. Fire-Monitoring Network Deployment of Longyandong Forest Farm

4. Conclusions

- The system can typically transmit the forest environment data monitored by the ground WSN, and the UAV can generally return fire images above the forest and provide a prompt early warning, meeting the needs of forest fire monitoring;

- Multiple algorithms were compared on the processing speed for a video with a duration of 4 min 16 s. The video ran at 29 frames/s with a frame size of 960 × 540, for a total of 7424 frames. The processing speed and delay rate of the video images were calculated for the BPNNFire algorithm and for the other algorithms. The test results showed that the BPNNFire algorithm's judgment accuracy was 84.37%, indicating that it outperformed the other algorithms compared;

- Real-time online monitoring of forest environmental indicators over three months indicated that the packet loss rate of the forest fire monitoring network was 5.99% for Longshan Forest Farm and 2.22% for Longyandong Forest Farm. The constructed hardware equipment and embedded routing protocol could be applied in unattended settings in the forest fire monitoring field to ensure long-term stable system operation. The maximum relative error between the measured temperature and humidity values and the actual values was 5.75%, indicating that the forest fire monitoring/early warning system could stably receive and transmit forest environmental data, and that the system connectivity was good.
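The frame count quoted for the test video in the second conclusion follows directly from its duration and frame rate. The short check below makes the arithmetic explicit; the helper name `total_frames` is hypothetical and used only for illustration.

```python
def total_frames(minutes, seconds, frames_per_second):
    """Number of frames in a video of the given duration and frame rate."""
    return (minutes * 60 + seconds) * frames_per_second


# 4 min 16 s at 29 frames/s, as stated in the conclusions.
print(total_frames(4, 16, 29))  # 7424
```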

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Category | Number of Dataset Samples | Training Set Samples | Verification Set Samples | Testing Set Samples |
|---|---|---|---|---|
| Fire image | 53,830 | 43,064 | 5237 | 5529 |
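As a quick consistency check on the table above, the three subsets should add up to the full dataset, and their shares show the split that was used. The sketch below is only an arithmetic check on the published counts; the function name `split_fractions` is an assumption for the example.

```python
def split_fractions(training, verification, testing):
    """Return each subset's share of the total number of samples."""
    total = training + verification + testing
    return {
        "total": total,
        "training": training / total,
        "verification": verification / total,
        "testing": testing / total,
    }


shares = split_fractions(43064, 5237, 5529)
print(shares["total"])                  # 53830
print(f"{shares['training']:.1%}")      # 80.0%
print(f"{shares['verification']:.1%}")  # 9.7%
print(f"{shares['testing']:.1%}")       # 10.3%
```

The counts are consistent with the stated total of 53,830 and correspond to a split of roughly 80/10/10.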

| Name | Training Environment |
|---|---|
| CPU | Intel® Xeon® Gold [email protected] GHz |
| GPU | NVIDIA RTX 3090 @ 24 GB |
| RAM | 128 GB |
| PyCharm version | 2020.3.2 |
| Python version | 3.7.10 |
| PyTorch version | 1.6.0 |
| CUDA version | 11.1 |
| cuDNN version | 8.0.5 |

| Forest Farm | Longshan | Longyandong |
|---|---|---|
| Number of local packets sent | 3572 | 3572 |
| Number of packets received by the platform | 3358 | 2725 |
| Packet loss rate | 5.99% | 23.7% |
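The packet loss rates in the table can be reproduced from the sent and received counts, taking the loss rate as the fraction of sent packets that never reached the platform. The sketch below assumes that definition; the function name `packet_loss_rate` is hypothetical.

```python
def packet_loss_rate(sent, received):
    """Fraction of sent packets that were not received by the platform."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return (sent - received) / sent


# Counts taken from the table above.
counts = {"Longshan": (3572, 3358), "Longyandong": (3572, 2725)}
for farm, (sent, received) in counts.items():
    print(f"{farm}: {packet_loss_rate(sent, received):.2%}")
# Longshan: 5.99%
# Longyandong: 23.71%
```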

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zheng, S.; Gao, P.; Zhou, Y.; Wu, Z.; Wan, L.; Hu, F.; Wang, W.; Zou, X.; Chen, S. An Accurate Forest Fire Recognition Method Based on Improved BPNN and IoT. Remote Sens. 2023, 15, 2365. https://doi.org/10.3390/rs15092365
