Use-Specific Considerations for Optimising Data Quality Trade-Offs in Citizen Science: Recommendations from a Targeted Literature Review to Improve the Usability and Utility for the Calibration and Validation of Remotely Sensed Products

Abstract

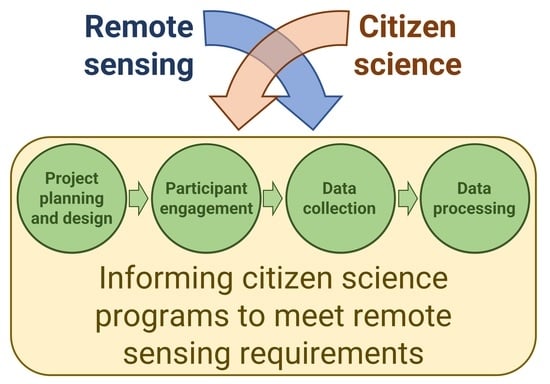

1. Introduction

1.1. Current State of Citizen-Science Use in Remote Sensing

1.2. Suitability of Citizen Science for Remote-Sensing Calibration/Validation

1.3. Data Quality in Citizen Science: Challenges and Trade-Offs

2. Methods

3. Results

3.1. Planning and Design

- (i) End use of data

- (ii) Trust, transparency and privacy

3.2. Participant Engagement

- (i) Participant background

- (ii) Training

- (iii) Incentivisation

- (iv) Feedback

- (v) Participant motivation and retention

3.3. Data-Collection Protocols

- (i) Sampling scale and density

- (ii) Structure and standardisation of sampling protocols

- (iii) Metadata capture and technology

3.4. Data Processing

3.5. Guiding Questions

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Silvertown, J. A new dawn for citizen science. Trends Ecol. Evol. 2009, 24, 467–471.

2. Fraisl, D.; Hager, G.; Bedessem, B.; Gold, M.; Hsing, P.-Y.; Danielsen, F.; Hitchcock, C.B.; Hulbert, J.M.; Piera, J.; Spiers, H.; et al. Citizen science in environmental and ecological sciences. Nat. Rev. Methods Prim. 2022, 2, 64.

3. Adler, F.R.; Green, A.M.; Şekercioğlu, Ç.H. Citizen science in ecology: A place for humans in nature. Ann. N. Y. Acad. Sci. 2020, 1469, 52–64.

4. Chandler, M.; See, L.; Copas, K.; Bonde, A.M.Z.; López, B.C.; Danielsen, F.; Legind, J.K.; Masinde, S.; Miller-Rushing, A.J.; Newman, G.; et al. Contribution of citizen science towards international biodiversity monitoring. Biol. Conserv. 2017, 213, 280–294.

5. Roger, E.; Tegart, P.; Dowsett, R.; Kinsela, M.A.; Harley, M.D.; Ortac, G. Maximising the potential for citizen science in New South Wales. Aust. Zool. 2020, 40, 449–461.

6. Fritz, S.; Fonte, C.C.; See, L. The role of citizen science in earth observation. Remote Sens. 2017, 9, 357.

7. Kosmala, M.; Crall, A.; Cheng, R.; Hufkens, K.; Henderson, S.; Richardson, A.D. Season spotter: Using citizen science to validate and scale plant phenology from near-surface remote sensing. Remote Sens. 2016, 8, 726.

8. Kosmala, M.; Wiggins, A.; Swanson, A.; Simmons, B. Assessing data quality in citizen science. Front. Ecol. Environ. 2016, 14, 551–560.

9. Comber, A.; See, L.; Fritz, S.; Van der Velde, M.; Perger, C.; Foody, G. Using control data to determine the reliability of volunteered geographic information about land cover. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 37–48.

10. Dubovyk, O. The role of remote sensing in land degradation assessments: Opportunities and challenges. Eur. J. Remote Sens. 2017, 50, 601–613.

11. Mayr, S.; Kuenzer, C.; Gessner, U.; Klein, I.; Rutzinger, M. Validation of earth observation time-series: A review for large-area and temporally dense land surface products. Remote Sens. 2019, 11, 2616.

12. White, M.; Sinclair, S.; Hollings, T.; Williams, K.J.; Dickson, F.; Brenton, P.; Liu, C.; Raisbeck-Brown, N.; Warnick, A.; Lyon, P.; et al. Towards a continent-wide ecological site-condition dataset using calibrated subjective evaluations. Ecol. Appl. 2023, 33, e2729.

13. Roelfsema, C.; Phinn, S. Integrating field data with high spatial resolution multispectral satellite imagery for calibration and validation of coral reef benthic community maps. J. Appl. Remote Sens. 2010, 4, 043527.

14. Sparrow, B.D.; Foulkes, J.N.; Wardle, G.M.; Leitch, E.J.; Caddy-Retalic, S.; van Leeuwen, S.J.; Tokmakoff, A.; Thurgate, N.Y.; Guerin, G.R.; Lowe, A.J. A vegetation and soil survey method for surveillance monitoring of rangeland environments. Front. Ecol. Evol. 2020, 8, 157.

15. Sparrow, B.D.; Edwards, W.; Munroe, S.E.M.; Wardle, G.M.; Guerin, G.R.; Bastin, J.-F.; Morris, B.; Christensen, R.; Phinn, S.; Lowe, A.J. Effective ecosystem monitoring requires a multi-scaled approach. Biol. Rev. 2020, 95, 1706–1719.

16. Fischer, H.; Cho, H.; Storksdieck, M. Going beyond hooked participants: The nibble-and-drop framework for classifying citizen science participation. Citiz. Sci. Theory Pract. 2021, 6, 10.

17. Kohl, H.A.; Nelson, P.V.; Pring, J.; Weaver, K.L.; Wiley, D.M.; Danielson, A.B.; Cooper, R.M.; Mortimer, H.; Overoye, D.; Burdick, A.; et al. GLOBE observer and the GO on a trail data challenge: A citizen science approach to generating a global land cover land use reference dataset. Front. Clim. 2021, 3, 620497.

18. Fritz, S.; McCallum, I.; Schill, C.; Perger, C.; Grillmayer, R.; Achard, F.; Kraxner, F.; Obersteiner, M. Geo-wiki.org: The use of crowdsourcing to improve global land cover. Remote Sens. 2009, 1, 345–354.

19. Clark, M.L.; Aide, T.M. Virtual interpretation of earth web-interface tool (VIEW-IT) for collecting land-use/land-cover reference data. Remote Sens. 2011, 3, 601–620.

20. Conrad, C.C.; Hilchey, K.G. A review of citizen science and community-based environmental monitoring: Issues and opportunities. Environ. Monit. Assess. 2011, 176, 273–291.

21. Balázs, B.; Mooney, P.; Nováková, E.; Bastin, L.; Jokar Arsanjani, J. Data quality in citizen science. In The Science of Citizen Science; Vohland, K., Land-Zandstra, A., Ceccaroni, L., Lemmens, R., Perelló, J., Ponti, M., Samson, R., Wagenknecht, K., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 139–157.

22. Hunter, J.; Alabri, A.; van Ingen, C. Assessing the quality and trustworthiness of citizen science data. Concurr. Comput. Pract. Exp. 2013, 25, 454–466.

23. Baker, E.; Drury, J.P.; Judge, J.; Roy, D.B.; Smith, G.C.; Stephens, P.A. The verification of ecological citizen science data: Current approaches and future possibilities. Citiz. Sci. Theory Pract. 2021, 6, 1–14.

24. Corbari, C.; Paciolla, N.; Ben Charfi, I.; Skokovic, D.; Sobrino, J.A.; Woods, M. Citizen science supporting agricultural monitoring with hundreds of low-cost sensors in comparison to remote sensing data. Eur. J. Remote Sens. 2022, 55, 388–408.

25. Held, A.; Phinn, S.; Soto-Berelov, M.; Jones, S. AusCover Good Practice Guidelines: A Technical Handbook Supporting Calibration and Validation Activities of Remotely Sensed Data Products. TERN AusCover 2015. Available online: http://qld.auscover.org.au/public/html/AusCoverGoodPracticeGuidelines_2015_2.pdf (accessed on 27 February 2023).

26. Santos-Fernandez, E.; Mengersen, K. Understanding the reliability of citizen science observational data using item response models. Methods Ecol. Evol. 2021, 12, 1533–1548.

27. Ceccaroni, L.; Bibby, J.; Roger, E.; Flemons, P.; Michael, K.; Fagan, L.; Oliver, J.L. Opportunities and Risks for Citizen Science in the Age of Artificial Intelligence. Citiz. Sci. Theory Pract. 2019, 4, 29.

28. Vasiliades, M.A.; Hadjichambis, A.C.; Paraskeva-Hadjichambi, D.; Adamou, A.; Georgiou, Y. A Systematic Literature Review on the Participation Aspects of Environmental and Nature-Based Citizen Science Initiatives. Sustainability 2021, 13, 7457.

29. Goodchild, M.F. Citizens as sensors: The world of volunteered geography. GeoJournal 2007, 69, 211–221.

30. Gilfedder, M.; Robinson, C.J.; Watson, J.E.M.; Campbell, T.G.; Sullivan, B.L.; Possingham, H.P. Brokering trust in citizen science. Soc. Nat. Resour. 2019, 32, 292–302.

31. Tarko, A.; Tsendbazar, N.-E.; de Bruin, S.; Bregt, A.K. Producing consistent visually interpreted land cover reference data: Learning from feedback. Int. J. Digit. Earth 2021, 14, 52–70.

32. Gengler, S.; Bogaert, P. Integrating crowdsourced data with a land cover product: A bayesian data fusion approach. Remote Sens. 2016, 8, 545.

33. Grainger, A. Citizen observatories and the new earth observation science. Remote Sens. 2017, 9, 153.

34. Cruickshank, S.S.; Bühler, C.; Schmidt, B.R. Quantifying data quality in a citizen science monitoring program: False negatives, false positives and occupancy trends. Conserv. Sci. Pract. 2019, 1, e54.

35. Vermeiren, P.; Munoz, C.; Zimmer, M.; Sheaves, M. Hierarchical toolbox: Ensuring scientific accuracy of citizen science for tropical coastal ecosystems. Ecol. Indic. 2016, 66, 242–250.

36. Anhalt-Depies, C.; Stenglein, J.L.; Zuckerberg, B.; Townsend, P.A.; Rissman, A.R. Tradeoffs and tools for data quality, privacy, transparency, and trust in citizen science. Biol. Conserv. 2019, 238, 108195.

37. Rasmussen, L.M.; Cooper, C. Citizen science ethics. Citiz. Sci. Theory Pract. 2019, 4, 5.

38. Welvaert, M.; Caley, P. Citizen surveillance for environmental monitoring: Combining the efforts of citizen science and crowdsourcing in a quantitative data framework. SpringerPlus 2016, 5, 1890.

39. Lewandowski, E.; Specht, H. Influence of volunteer and project characteristics on data quality of biological surveys. Conserv. Biol. 2015, 29, 713–723.

40. Clare, J.D.J.; Townsend, P.A.; Anhalt-Depies, C.; Locke, C.; Stenglein, J.L.; Frett, S.; Martin, K.J.; Singh, A.; Van Deelen, T.R.; Zuckerberg, B. Making inference with messy (citizen science) data: When are data accurate enough and how can they be improved? Ecol. Appl. 2019, 29, e01849.

41. Foody, G.M. An assessment of citizen contributed ground reference data for land cover map accuracy assessment. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, II-3/W5, 219–225.

42. Lukyanenko, R.; Wiggins, A.; Rosser, H.K. Citizen science: An information quality research frontier. Inf. Syst. Front. 2020, 22, 961–983.

43. Aceves-Bueno, E.; Adeleye, A.S.; Feraud, M.; Huang, Y.; Tao, M.; Yang, Y.; Anderson, S.E. The accuracy of citizen science data: A quantitative review. Bull. Ecol. Soc. Am. 2017, 98, 278–290.

44. Mesaglio, T.; Callaghan, C.T. An overview of the history, current contributions and future outlook of iNaturalist in Australia. Wildl. Res. 2021, 48, 289–303.

45. Tredick, C.A.; Lewison, R.L.; Deutschman, D.H.; Hunt, T.A.; Gordon, K.L.; Von Hendy, P. A rubric to evaluate citizen-science programs for long-term ecological monitoring. BioScience 2017, 67, 834–844.

46. Ogunseye, S.; Parsons, J. Designing for information quality in the era of repurposable crowdsourced user-generated content. In Proceedings of the Lecture Notes in Business Information Processing, Tallinn, Estonia, 11–15 June 2018; pp. 180–185.

47. Rotman, D.; Preece, J.; Hammock, J.; Procita, K.; Hansen, D.; Parr, C.; Lewis, D.; Jacobs, D. Dynamic changes in motivation in collaborative citizen-science projects. In Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work, Seattle, WA, USA, 11–15 February 2012; pp. 217–226.

48. Lukyanenko, R.; Parsons, J.; Wiersma, Y.F. Emerging problems of data quality in citizen science. Conserv. Biol. 2016, 30, 447–449.

49. Lukyanenko, R.; Parsons, J. Beyond micro-tasks: Research opportunities in observational crowdsourcing. In Crowdsourcing: Concepts, Methodologies, Tools, and Applications; Information Resources Management Association, Ed.; IGI Global: Hershey, PA, USA, 2019; pp. 1510–1535.

50. Dickinson, J.L.; Shirk, J.; Bonter, D.; Bonney, R.; Crain, R.L.; Martin, J.; Phillips, T.; Purcell, K. The current state of citizen science as a tool for ecological research and public engagement. Front. Ecol. Environ. 2012, 10, 291–297.

51. Crall, A.W.; Newman, G.J.; Stohlgren, T.J.; Holfelder, K.A.; Graham, J.; Waller, D.M. Assessing citizen science data quality: An invasive species case study. Conserv. Lett. 2011, 4, 433–442.

52. Sun, C.C.; Hurst, J.E.; Fuller, A.K. Citizen science data collection for integrated wildlife population analyses. Front. Ecol. Evol. 2021, 9, 682124.

53. Kennedy, K.A.; Addison, P.A. Some considerations for the use of visual estimates of plant cover in biomonitoring. J. Ecol. 1987, 75, 151–157.

54. Iwao, K.; Nishida, K.; Kinoshita, T.; Yamagata, Y. Validating land cover maps with degree confluence project information. Geophys. Res. Lett. 2006, 33, L23404.

55. Fink, D.; Damoulas, T.; Bruns, N.E.; La Sorte, F.A.; Hochachka, W.M.; Gomes, C.P.; Kelling, S. Crowdsourcing meets ecology: Hemispherewide spatiotemporal species distribution models. AI Mag. 2014, 35, 19–30.

56. Kelling, S.; Fink, D.; La Sorte, F.A.; Johnston, A.; Bruns, N.E.; Hochachka, W.M. Taking a ‘big data’ approach to data quality in a citizen science project. Ambio 2015, 44, 601–611.

57. Geldmann, J.; Heilmann-Clausen, J.; Holm, T.E.; Levinsky, I.; Markussen, B.; Olsen, K.; Rahbek, C.; Tøttrup, A.P. What determines spatial bias in citizen science? Exploring four recording schemes with different proficiency requirements. Divers. Distrib. 2016, 22, 1139–1149.

58. Tokmakoff, A.; Sparrow, B.; Turner, D.; Lowe, A. AusPlots Rangelands field data collection and publication: Infrastructure for ecological monitoring. Future Gener. Comput. Syst. 2016, 56, 537–549.

59. Hochachka, W.M.; Fink, D.; Hutchinson, R.A.; Sheldon, D.; Wong, W.K.; Kelling, S. Data-intensive science applied to broad-scale citizen science. Trends Ecol. Evol. 2012, 27, 130–137.

60. Kelling, S.; Yu, J.; Gerbracht, J.; Wong, W.K. Emergent filters: Automated data verification in a large-scale citizen science project. In Proceedings of the 7th IEEE International Conference on e-Science Workshops, eScienceW, Stockholm, Sweden, 5–8 December 2011; pp. 20–27.

61. Robinson, O.J.; Ruiz-Gutierrez, V.; Reynolds, M.D.; Golet, G.H.; Strimas-Mackey, M.; Fink, D. Integrating citizen science data with expert surveys increases accuracy and spatial extent of species distribution models. Divers. Distrib. 2020, 26, 976–986.

62. Swanson, A.; Kosmala, M.; Lintott, C.; Packer, C. A generalized approach for producing, quantifying, and validating citizen science data from wildlife images. Conserv. Biol. 2016, 30, 520–531.

63. See, L.; Bayas, J.C.L.; Lesiv, M.; Schepaschenko, D.; Danylo, O.; McCallum, I.; Dürauer, M.; Georgieva, I.; Domian, D.; Fraisl, D.; et al. Lessons learned in developing reference data sets with the contribution of citizens: The Geo-Wiki experience. Environ. Res. Lett. 2022, 17, 065003.

64. Vahidi, H.; Klinkenberg, B.; Yan, W. Trust as a proxy indicator for intrinsic quality of volunteered geographic information in biodiversity monitoring programs. GIScience Remote Sens. 2018, 55, 502–538.

65. Fonte, C.C.; Bastin, L.; See, L.; Foody, G.; Lupia, F. Usability of VGI for validation of land cover maps. Int. J. Geogr. Inf. Sci. 2015, 29, 1269–1291.

66. Elmore, A.J.; Stylinski, C.D.; Pradhan, K. Synergistic use of citizen science and remote sensing for continental-scale measurements of forest tree phenology. Remote Sens. 2016, 8, 502.

67. Bayraktarov, E.; Ehmke, G.; O’Connor, J.; Burns, E.L.; Nguyen, H.A.; McRae, L.; Possingham, H.P.; Lindenmayer, D.B. Do big unstructured biodiversity data mean more knowledge? Front. Ecol. Evol. 2019, 6, 239.

| End use of data [4,33,34] | |
| Transparency, trust, and privacy [36] | |
| Participant background [29,38,42,43,44] | |
| Training [20,42,45,47] | |
| Incentivisation [20,47] | |
| Feedback [6,39,45,49] | |
| Participant motivation and retention [6,39,42,49,50] | |
| Sampling scale and density [39,40,44,55,57,60] | |
| Structure and standardisation of sampling protocols [38,51] | |
| Metadata capture and technology [38,59,60,61] | |
| Citizen science data validation and data processing [39,43,45,64] | |

| Program planning and design: Guiding questions and considerations | |
| End use of data [6,30,31,34,35] | |
| Transparency, trust, and privacy [20,36,37] | |
| Participant engagement: Guiding questions and considerations | |
| Participant background [39,42,43] | |
| Training [20,33,38,42,45,46] | |
| Incentivisation [20,47] | |
| Feedback [6,33,39,42,45] | |
| Participant motivation and retention [6,33,39,42,47,49,50,51] | |
| Data collection: Guiding questions and considerations | |
| Sampling scale and density [7,8,55,56,57,66] | |
| Structure and standardisation of sampling protocols [38,39,42,48,51] | |
| Metadata capture and technology [38,42,59,60,61] | |
| Data processing: Guiding questions and considerations | |
| Citizen-science data validation and data processing [22,23,32,33,34,43,62,64,65,67] | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Schacher, A.; Roger, E.; Williams, K.J.; Stenson, M.P.; Sparrow, B.; Lacey, J. Use-Specific Considerations for Optimising Data Quality Trade-Offs in Citizen Science: Recommendations from a Targeted Literature Review to Improve the Usability and Utility for the Calibration and Validation of Remotely Sensed Products. Remote Sens. 2023, 15, 1407. https://doi.org/10.3390/rs15051407