1. Introduction

At present, image semantic segmentation (ISS) is one of the most significant research areas in digital image processing and computer vision. Compared with traditional image segmentation, ISS also attaches semantic information to the targets and foreground of an image. It can recover what the image is meant to convey from its texture, color, and other high-level semantic features, which makes it more practical [1]. Remote sensing image semantic segmentation differs from ordinary image semantic segmentation mainly in the objects it processes. Specifically, the semantic segmentation of remote sensing images involves three steps: analyzing the spectral, color, shape, and spatial information of the various ground objects in a remote sensing image; dividing the feature space into independent subspaces; and finally assigning a predetermined semantic label to each pixel. Remote sensing images contain richer ground object information, and the objects vary in size, color, and orientation, which leads to inter-class similarity and intra-class variability [2,3,4]. For instance, “stadium”, “church”, and “baseballfield” may all appear within a “school”, and different “school” scenes may differ considerably, as shown in Figure 1. Moreover, remote sensing images often have complex backgrounds, irregular object shapes, and similar appearances across different categories, all of which hinder segmentation. In particular, natural images contain only the three RGB channels, whereas high-resolution multispectral remote sensing images contain more channels, with richer ground features and a more complex spatial distribution. Because of these factors, semantic segmentation is far more complicated for remote sensing images than for natural images, and many models designed for natural images do not perform satisfactorily on remote sensing data. Research on the semantic segmentation of remote sensing images has increased recently. However, because of these problems, the task still merits further study [5,6,7,8].

Currently, several new segmentation tasks have emerged, including instance segmentation and panoptic segmentation [9,10]. Unlike basic semantic segmentation, instance segmentation must distinguish the individual instances of the same object class [11,12,13]. Building on instance segmentation, panoptic segmentation must detect and segment all objects in the image, including the background [14,15,16]. Instance segmentation and panoptic segmentation are therefore more complicated tasks, and research on them still faces many open problems, such as object occlusion and image degradation [10,11]. The emergence of these new branches does not mean that basic semantic segmentation research has lost its value. The semantic segmentation of remote sensing images plays an important role in many applications, such as resource surveys, disaster detection, and urban planning [17]. However, because remote sensing images are so complex, many problems in basic semantic segmentation remain unsolved. For example, how to improve the accuracy and execution speed of remote sensing image semantic segmentation is still an open question. Only when basic remote sensing semantic segmentation models perform satisfactorily does it become reasonable to move on to the instance and panoptic segmentation of remote sensing images.

The results of remote sensing image segmentation are mainly determined by three factors: multiscale feature extraction, spatial context information, and boundary information [18]. First, rich features help determine object categories in images with complex environments. In particular, extracting features at different scales can effectively reduce the segmentation errors caused by differences in object size within an image. Second, global spatial context information helps determine the categories of adjacent pixels, because satisfactory segmentation results are difficult to obtain from local information alone. Finally, because the satellite is moving while it captures remote sensing images, object boundaries are often unclear, so more attention should be paid to the edge details of objects during segmentation.

Traditional remote sensing image segmentation models generally use hand-crafted feature extractors and then apply traditional machine learning classifiers for the final pixel classification. Traditional feature extraction methods include oriented FAST and rotated BRIEF (ORB) [19], local binary patterns (LBP) [20], and speeded-up robust features (SURF) [21]. Traditional classification methods include support vector machines [22] and logistic regression [23]. However, because remote sensing images have complex backgrounds, the performance of these traditional models is often unsatisfactory. Additionally, the capability of traditional feature extractors does not meet the needs of practical applications.
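The traditional pipeline above pairs a hand-crafted descriptor with a conventional classifier. As a minimal sketch of the descriptor half, the basic 3 × 3 LBP operator [20] can be written in NumPy as follows. The function names, the clockwise bit ordering, and the plain 256-bin histogram are illustrative assumptions, not the configuration of any model cited here. Common variants, such as rotation-invariant or uniform patterns and larger radii, are not shown.

```python
import numpy as np

# Neighbour offsets (dy, dx), walked clockwise from the top-left corner.
# Neighbour k sets bit k of the code when it is >= the centre pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_8neighbour(image):
    """Basic 3x3 LBP codes (0..255) for the interior pixels of a 2-D grayscale image."""
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        # Shifted view of the image so that each centre pixel lines up with neighbour k.
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes += (neighbour >= center).astype(np.int64) << bit
    return codes.astype(np.uint8)


def lbp_histogram(codes):
    """Normalised 256-bin histogram of LBP codes: a texture descriptor for a patch."""
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

In such a pipeline, the histogram computed over a window around each pixel (or over each patch) would serve as the feature vector passed to a classifier such as a support vector machine [22].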

Deep learning has been widely used in image recognition [24,25], image classification [26,27], video prediction [28,29], and other fields. In particular, deep convolutional neural networks (DCNNs), with their strong feature extraction capabilities, are popular in computer vision [30,31,32,33,34,35,36,37]. For instance, Reference [38] designed a YOLOv3 model with detection layers at four scales for pavement crack detection, using a multiscale fusion structure and the efficient intersection over union (EIoU) loss function. Recently, DCNNs have gradually been applied to image semantic segmentation. For example, Reference [39] built a segmentation model called U-Net, which fuses features of different scales to obtain segmentation results. However, U-Net does not consider the spatial context information in the image. The PSPNet [40] model obtains contextual information through a pyramid pooling module, which improves segmentation performance, but it has high computational complexity and poor efficiency. Reference [41] designed a high-resolution network (HRNet), which fuses structural features and high-level semantic information at the output end. This improves the extraction of spatial information from images but ignores local information. To address this shortcoming of HRNet, Reference [42] used an attention mechanism to improve the model's recognition performance on local regions. However, this model is very resource-intensive and contains a large amount of redundant information. Additionally, Reference [18] proposed a high-resolution context extraction network (HRCNet), based on a high-resolution network, for the semantic segmentation of remote sensing images. Reference [43] built a multi-attention network (MANet) that extracts contextual dependencies in images through multiple efficient attention modules. However, the multiscale context fusion of HRCNet and MANet leaves room for improvement, and both have high computational complexity. In summary, for the semantic segmentation of remote sensing images in complex environments, current CNN-based models do not pay enough attention to global context information, and their performance is not fully convincing [44]. In addition, networks with superior segmentation performance often consume excessive computing resources.
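To make the pyramid pooling idea of PSPNet [40] concrete, the following NumPy sketch works on a (C, H, W) feature map. It pools the map into several coarse grids, upsamples each grid back to full resolution, and concatenates the results with the input, so every pixel sees context summarized at several scales. The bin sizes (1, 2, 3, 6) follow PSPNet. This is a simplified illustration rather than a faithful reimplementation: the 1 × 1 convolutions PSPNet applies to each branch are omitted, and nearest-neighbour upsampling replaces bilinear upsampling. The function names are hypothetical.

```python
import numpy as np


def adaptive_avg_pool(x, bins):
    """Average-pool a (C, H, W) map onto a bins x bins grid (floor/ceil cell edges, as in adaptive pooling)."""
    c, h, w = x.shape
    out = np.empty((c, bins, bins))
    for i in range(bins):
        y0, y1 = (i * h) // bins, -(-(i + 1) * h // bins)  # floor start, ceil end
        for j in range(bins):
            x0, x1 = (j * w) // bins, -(-(j + 1) * w // bins)
            out[:, i, j] = x[:, y0:y1, x0:x1].mean(axis=(1, 2))
    return out


def upsample_nearest(x, h, w):
    """Nearest-neighbour upsampling of a (C, bh, bw) grid back to (C, h, w)."""
    _, bh, bw = x.shape
    rows = (np.arange(h) * bh) // h
    cols = (np.arange(w) * bw) // w
    return x[:, rows][:, :, cols]


def pyramid_pooling(x, bin_sizes=(1, 2, 3, 6)):
    """Concatenate the input with pooled-and-upsampled context at each bin size."""
    _, h, w = x.shape
    branches = [x]
    for b in bin_sizes:
        # PSPNet would apply a 1x1 convolution to each pooled branch here; omitted in this sketch.
        branches.append(upsample_nearest(adaptive_avg_pool(x, b), h, w))
    return np.concatenate(branches, axis=0)
```

The bin-size-1 branch is simply the global average of each channel broadcast to every pixel, which is the most global form of context. With four branches, the output of this sketch has five times as many channels as its input.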

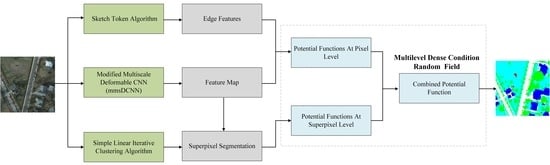

Considering the main factors that affect the semantic segmentation of remote sensing images and the shortcomings of deep learning models in this task, this paper proposes the mmsDCNN-DenseCRF model. In this model, a modified multiscale deformable convolutional neural network (mmsDCNN) performs multiscale feature extraction. DenseCRF captures semantic information and global context dependencies and refines the rough segmentation results, for example, at object edges. First, we propose a lightweight multiscale deformable convolutional neural network based on the modified multiscale convolutional neural network (mmsCNN) designed in our previous work [45]. The mmsDCNN adds an offset to the sampling positions of the mmsCNN convolutions, allowing the convolutional kernel to adaptively choose its receptive field size with only a slight increase in computing resources. In addition, the mmsDCNN balances strong multiscale feature extraction with low computational complexity. We use the mmsDCNN to extract rich multiscale features and obtain preliminary prediction results. Subsequently, we propose a multi-level DenseCRF operating at both the superpixel and pixel levels as a back-end optimization module that makes full use of spatial context information at different granularities. The multi-level DenseCRF considers the relationship between each pixel and every other pixel, establishing dependencies between all pixel pairs in the image. It uses these interdependencies to introduce global image information, which suits the semantic segmentation of remote sensing images in complex environments [46]. Although DenseCRF makes semantic segmentation more robust, it can only determine the position and approximate contour of the object of interest in the labeling results. As a result, the true boundaries of the segmented regions cannot be obtained accurately, and blurred edge categories or segmentation errors remain. To solve these problems, we use the Sketch token edge detection algorithm [47] to extract the edge contour features of the image and fuse them into the potential function of the DenseCRF model. In summary, this paper proposes a new approach, together with a concrete implementation, to the problems of existing CNN-based models in remote sensing image segmentation.
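The offset mechanism described above follows the general idea of deformable convolution. Each kernel tap samples the input at its regular grid position plus an offset, which may be fractional, and bilinear interpolation supplies values between pixels. The NumPy sketch below computes a single output value of one 3 × 3 deformable convolution on a single-channel map. The function names are hypothetical, and the offsets are passed in explicitly. In a real network, an additional convolutional layer would predict them, and they would be learned end to end. The sketch illustrates the generic operator only, not the mmsDCNN architecture itself.

```python
import numpy as np


def bilinear(x, py, px):
    """Bilinearly interpolate a 2-D array at a fractional (row, col); points outside the image count as 0."""
    h, w = x.shape
    y0, x0 = int(np.floor(py)), int(np.floor(px))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Weight shrinks linearly with distance from the integer grid point.
                val += (1 - abs(py - yy)) * (1 - abs(px - xx)) * x[yy, xx]
    return val


def deformable_conv_at(x, weight, p0, offsets):
    """One output value of a 3x3 deformable convolution at integer location p0 = (row, col).

    offsets has shape (9, 2): a (dy, dx) shift for each kernel tap, in row-major tap order.
    With all offsets zero, this reduces to an ordinary 3x3 convolution (cross-correlation).
    """
    taps = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    w_flat = np.asarray(weight).ravel()
    out = 0.0
    for k, (dy, dx) in enumerate(taps):
        py = p0[0] + dy + offsets[k, 0]
        px = p0[1] + dx + offsets[k, 1]
        out += w_flat[k] * bilinear(x, py, px)
    return out
```

Because the offsets can differ for each tap and each output location, the effective receptive field can stretch, shrink, or bend to follow object shapes. The main extra cost is the small layer that predicts the offsets.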

The main contributions are as follows:

A framework of the mmsDCNN-DenseCRF combined model for the semantic segmentation of remote sensing images is proposed.

We designed a lightweight mmsDCNN model, which incorporates deformable convolution into the mmsCNN proposed in our previous work [45]. Notably, the mmsDCNN adds an offset to the sampling positions of the mmsCNN convolutions, which enables the convolutional kernel to adaptively determine its receptive field size. Compared with the mmsCNN, the mmsDCNN achieves a satisfactory performance improvement with only a slight increase in computational complexity.

A multi-level DenseCRF model operating at the superpixel and pixel levels is proposed. We combined the pixel-level potential function with the superpixel-based potential function to obtain the final Gaussian potential function. This enables our model to consider features at various scales and the context information of the image, while preventing poor superpixel segmentation results from degrading the final result.

To address the blurred edge categories and segmentation errors that DenseCRF leaves in semantic segmentation, we utilized a Sketch token edge detection algorithm to extract the edge contour features of the image and integrated them into the Gaussian potential function of the DenseCRF model.
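For reference, the fully connected CRF on which DenseCRF models are based is commonly written, in the formulation of Krähenbühl and Koltun, as an energy over the label assignment x. The energy has a unary term for each pixel and a pairwise Gaussian term for every pair of pixels. The multi-level superpixel/pixel potential and the Sketch token edge term described above are not reproduced here. Because the text describes them as changes to the Gaussian potential function, they presumably modify the pairwise part. The equations below show only the standard pixel-level model.

```latex
\begin{aligned}
E(\mathbf{x}) &= \sum_{i} \psi_u(x_i) + \sum_{i<j} \psi_p(x_i, x_j), \\
\psi_p(x_i, x_j) &= \mu(x_i, x_j)\Big[\, w^{(1)} \exp\!\Big(-\frac{\lVert p_i - p_j \rVert^2}{2\theta_\alpha^2}
  - \frac{\lVert I_i - I_j \rVert^2}{2\theta_\beta^2}\Big)
  + w^{(2)} \exp\!\Big(-\frac{\lVert p_i - p_j \rVert^2}{2\theta_\gamma^2}\Big) \Big]
\end{aligned}
```

Here, the unary term ψ_u(x_i) = −log P(x_i) comes from a classifier's per-pixel class probabilities. In this framework, that classifier would presumably be the mmsDCNN's preliminary prediction. The vectors p_i and I_i are the position and color of pixel i, and μ(x_i, x_j) = [x_i ≠ x_j] is the Potts compatibility. The first (appearance) kernel encourages nearby pixels with similar colors to share a label, and the second (smoothness) kernel removes small isolated regions. Because the pairwise sum runs over all pixel pairs, every pixel can influence every other pixel, which is the source of the global context discussed above.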

The remainder of the article is organized as follows. Section 2 introduces the modified multiscale deformable convolutional neural network (mmsDCNN) and the multi-level DenseCRF model. In Section 3, we conduct comparative experiments on a public dataset from the International Society for Photogrammetry and Remote Sensing (ISPRS) [48]. Finally, Section 5 provides a summary of the article.