High-Resolution Monitoring of the Snow Cover on the Moroccan Atlas through the Spatio-Temporal Fusion of Landsat and Sentinel-2 Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Remotely Sensed Data Acquisition

2.3. Estimation of Snow Surfaces from Satellite Images (Snow Cover Area)

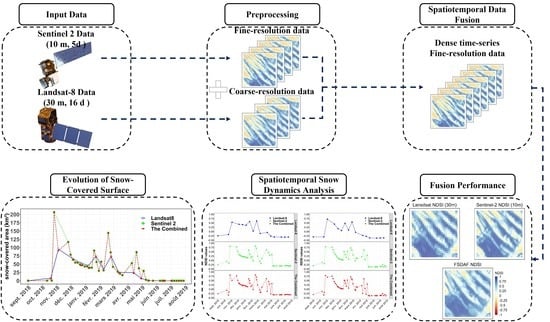

2.4. Methodology

2.5. The Spatio-Temporal Data Fusion Method

2.5.1. The ESTARFM Method

2.5.2. The FSDAF Method

2.5.3. The Pre-Classification FSDAF Method

2.6. Selection of Optimum Input Image Pairs

2.7. Quantitative and Qualitative Evaluation of Fusion Techniques

3. Results

3.1. The Optimal Input Image Pairs

3.2. Fusion Performance

3.3. Assessing the Fusion Performance Using a Snow Binary Classification

3.4. Analysis of NDSI Profiles

3.5. Spatio-Temporal Fusion Effect on Snow Cover Area Extraction

4. Discussion

5. Conclusions

- The strategy used to select the optimal input image pairs improved the accuracy of the final data fusion products.

- FSDAF and pre-classification FSDAF yielded the highest performance in terms of correlation between the synthetic NDSI data and the real NDSI data.

- The combined use of L8 and S2 images provides a well-defined characterization of the snow cover.

- The fusion of S2 and L8 data enabled seamless monitoring of snow cover change, capturing precise temporal variations while preserving high-spatial-resolution detail, which was not previously feasible using S2 or L8 data alone.
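The NDSI comparison behind the second conclusion can be illustrated with a minimal sketch. It assumes the standard NDSI definition, (Green - SWIR) / (Green + SWIR), which uses bands B3 and B11 for Sentinel-2 and bands B3 and B6 for Landsat 8 OLI, and it measures agreement with the Pearson correlation coefficient. The function names and the synthetic arrays are hypothetical stand-ins for real observed and fused images, not the authors' processing chain.

```python
import numpy as np


def ndsi(green: np.ndarray, swir: np.ndarray) -> np.ndarray:
    """Normalized Difference Snow Index, (Green - SWIR) / (Green + SWIR).

    Pixels where Green + SWIR is zero are set to NaN.
    """
    green = green.astype(float)
    swir = swir.astype(float)
    denom = green + swir
    out = np.full(green.shape, np.nan)
    np.divide(green - swir, denom, out=out, where=denom != 0)
    return out


def pearson_r(predicted: np.ndarray, observed: np.ndarray) -> float:
    """Pearson correlation between predicted and observed NDSI, ignoring NaN pixels."""
    valid = ~(np.isnan(predicted) | np.isnan(observed))
    return float(np.corrcoef(predicted[valid], observed[valid])[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical reflectances standing in for an observed S2 scene.
    green = rng.uniform(0.05, 0.9, size=(50, 50))
    swir = rng.uniform(0.01, 0.5, size=(50, 50))
    observed = ndsi(green, swir)
    # Hypothetical "fused" image: the observed NDSI plus small noise.
    predicted = observed + rng.normal(0.0, 0.05, size=observed.shape)
    print(f"r = {pearson_r(predicted, observed):.3f}")
```

Masking NaN pixels before correlating keeps zero-denominator pixels (and, in practice, cloud or no-data pixels) from contaminating the score.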

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Pair Index Number | Entry Date (FSDAF Model) | Entry Date (ESTARFM Model) |
|---|---|---|
| #1 | 4 January 2018 | 8 February 2019 and 11 February 2020 |
| #2 | 25 March 2018 | 11 February 2020 and 18 April 2021 |
| #3 | 12 May 2018 | 12 May 2018 and 11 February 2020 |
| #4 | 8 February 2019 | 12 May 2018 and 18 April 2021 |
| #5 | 25 December 2019 | 25 March 2018 and 27 December 2020 |
| #6 | 11 February 2020 | 25 March 2018 and 28 January 2021 |
| #7 | 27 December 2020 | 25 December 2019 and 27 December 2020 |
| #8 | 28 January 2021 | 28 January 2021 and 4 January 2018 |
| #9 | 18 April 2021 | 28 January 2021 and 11 February 2020 |
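As the table shows, FSDAF takes a single input pair per prediction while ESTARFM takes two. In the original ESTARFM formulation (Zhu et al., 2010), the two fine-resolution predictions made from each base date are blended with temporal weights inversely proportional to how much the coarse image within the moving window changed between that base date and the prediction date. The sketch below implements only that weighting step; treating Landsat 8 as the coarse input and Sentinel-2 as the fine input is an assumption here, and the function names and window values are hypothetical.

```python
import numpy as np


def estarfm_temporal_weights(coarse_base_m, coarse_base_n, coarse_target, eps=1e-12):
    """Temporal weights (T_m, T_n) for the two ESTARFM base dates in one window.

    T_k is proportional to 1 / |sum(C_k) - sum(C_p)|, where C_k is the coarse
    image at base date k and C_p the coarse image at the prediction date,
    both summed over the moving window; the weights are normalised to sum to 1.
    """
    target_sum = float(np.sum(coarse_target))
    inverse_changes = []
    for coarse_base in (coarse_base_m, coarse_base_n):
        change = abs(float(np.sum(coarse_base)) - target_sum)
        inverse_changes.append(1.0 / (change + eps))  # eps guards against a zero change
    total = sum(inverse_changes)
    return inverse_changes[0] / total, inverse_changes[1] / total


def blend_predictions(fine_pred_m, fine_pred_n, t_m, t_n):
    """Weighted sum of the two fine-resolution predictions made from each base date."""
    return t_m * np.asarray(fine_pred_m) + t_n * np.asarray(fine_pred_n)


if __name__ == "__main__":
    window = (3, 3)
    c_m = np.full(window, 0.5)  # hypothetical coarse values, base date m
    c_n = np.full(window, 0.2)  # hypothetical coarse values, base date n
    c_p = np.full(window, 0.4)  # hypothetical coarse values, prediction date
    t_m, t_n = estarfm_temporal_weights(c_m, c_n, c_p)
    print(f"T_m = {t_m:.3f}, T_n = {t_n:.3f}")
```

In this toy window, base date m differs from the prediction date by 0.9 in summed value and base date n by 1.8, so m receives twice the weight of n (about 2/3 versus 1/3).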

Difference between the real S2 NDSI and the predicted images (first sub-area)

| | ESTARFM | FSDAF | Pre-Classification FSDAF |
|---|---|---|---|
| Unaltered area (km²) | 0.7654 | 0.8826 | 0.9079 |
| Unaltered area (%) | 76.54 | 88.26 | 90.79 |

Difference between the real S2 NDSI and the predicted images (second sub-area)

| | ESTARFM | FSDAF | Pre-Classification FSDAF |
|---|---|---|---|
| Unaltered area (km²) | 0.6922 | 0.8875 | 0.9107 |
| Unaltered area (%) | 69.22 | 88.75 | 91.07 |

Difference between the real S2 NDSI and the predicted images (entire area)

| | ESTARFM | FSDAF | Pre-Classification FSDAF |
|---|---|---|---|
| Unaltered area (km²) | 187.62 | 196.59 | 198.32 |
| Unaltered area (%) | 89.02 | 93.28 | 94.10 |
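The km² and % rows of these tables are linked through the area of each evaluated region, so dividing one by the other recovers that area: 1 km² for each sub-area and roughly 210.75 km² for the entire area. The short check below performs only this arithmetic on the published values; the implied entire-area figure is inferred here and is not stated in the source, and the helper name is hypothetical.

```python
# Unaltered area per method as (km², %) pairs, copied from the tables above.
TABLES = {
    "First sub-area": {"ESTARFM": (0.7654, 76.54), "FSDAF": (0.8826, 88.26),
                       "Pre-Classification FSDAF": (0.9079, 90.79)},
    "Second sub-area": {"ESTARFM": (0.6922, 69.22), "FSDAF": (0.8875, 88.75),
                        "Pre-Classification FSDAF": (0.9107, 91.07)},
    "Entire area": {"ESTARFM": (187.62, 89.02), "FSDAF": (196.59, 93.28),
                    "Pre-Classification FSDAF": (198.32, 94.10)},
}


def implied_total_area(area_km2: float, percent: float) -> float:
    """Region area (km²) implied by an area and the percentage of the region it covers."""
    return area_km2 / (percent / 100.0)


if __name__ == "__main__":
    for region, methods in TABLES.items():
        totals = [implied_total_area(km2, pct) for km2, pct in methods.values()]
        print(region, [round(t, 2) for t in totals])
```

The three methods imply the same region area to within rounding, which supports reading the km² and % rows as two views of one quantity.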

| | Sentinel-2 | ESTARFM | FSDAF | Pre-Classification FSDAF |
|---|---|---|---|---|
| SCA (km²) | 52.57 | 54.77 | 51.29 | 52.32 |
| Bias (km²) | | 2.20 | −1.28 | −0.25 |
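The bias row is the difference between each fused product's snow cover area (SCA) and the Sentinel-2 reference SCA, which the sketch below reproduces from the SCA row. It also shows how an SCA in km² follows from a binary snow map as snow-pixel count times pixel area. The 10 m pixel size and the NDSI > 0.4 threshold used to build the example mask are illustrative assumptions, not necessarily the classification used in the study, and the function names are hypothetical.

```python
import numpy as np


def snow_cover_area_km2(snow_mask: np.ndarray, pixel_size_m: float) -> float:
    """SCA (km²) = number of snow pixels x area of one pixel."""
    pixel_area_km2 = pixel_size_m ** 2 / 1e6
    return float(np.count_nonzero(snow_mask) * pixel_area_km2)


def sca_bias(predicted_km2: float, reference_km2: float) -> float:
    """Bias of a fused-product SCA relative to the reference SCA (positive = overestimate)."""
    return predicted_km2 - reference_km2


if __name__ == "__main__":
    # Values from the SCA table; Sentinel-2 is the reference.
    reference = 52.57
    fused = {"ESTARFM": 54.77, "FSDAF": 51.29, "Pre-Classification FSDAF": 52.32}
    for name, sca in fused.items():
        print(f"{name}: bias = {sca_bias(sca, reference):+.2f} km²")

    # Hypothetical NDSI map thresholded at an assumed 0.4 on assumed 10 m pixels.
    ndsi_map = np.array([[0.6, 0.3], [0.45, 0.1]])
    print(snow_cover_area_km2(ndsi_map > 0.4, pixel_size_m=10.0))
```

Only the ESTARFM product has a positive bias, i.e. it is the only one that overestimates the reference SCA, consistent with the table.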

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bousbaa, M.; Htitiou, A.; Boudhar, A.; Eljabiri, Y.; Elyoussfi, H.; Bouamri, H.; Ouatiki, H.; Chehbouni, A. High-Resolution Monitoring of the Snow Cover on the Moroccan Atlas through the Spatio-Temporal Fusion of Landsat and Sentinel-2 Images. Remote Sens. 2022, 14, 5814. https://doi.org/10.3390/rs14225814
