EDTRS: A Superpixel Generation Method for SAR Images Segmentation Based on Edge Detection and Texture Region Selection

Abstract

1. Introduction

- (1)

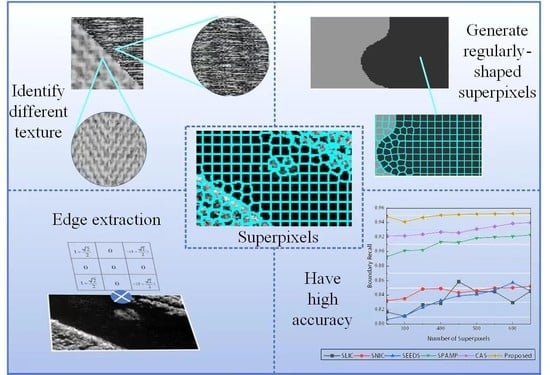

- A new edge detection method is proposed, which based on 2-D entropy to eliminate the effect of noise. Using virtual points fused with region information, the resultant edges of the proposed method form a band-shaped area, which meets the requirements of generating superpixels in the later stage.

- (2)

- A region selection method is proposed, which combines the periodic judgment and edge constraint to select regions for texture feature extraction. The selected region can accurately describe the texture of the target pixel for generating superpixels.

- (3)

- A superpixel generation method is proposed, which combines edge penalty and texture information. The generated superpixels always retain a regular shape and high accuracy.
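Contribution (1) relies on 2-D entropy. The formulation used in EDTRS is not reproduced here, so the following is only a minimal sketch assuming the common definition: each pixel contributes a pair (i, j), where i is its gray level and j is the mean gray level of its neighbourhood; with p_ij the relative frequency of each pair, H = -Σ p_ij log2 p_ij. The function name `entropy_2d` and the 3×3 neighbourhood (`radius=1`) are illustrative assumptions.

```python
import math
from collections import Counter


def entropy_2d(window, radius=1):
    """2-D entropy (in bits) of a gray-level window given as a list of rows.

    Each pixel contributes the pair (i, j): i is its own gray level and j is
    the floored mean gray level of its (2*radius+1)^2 neighbourhood, clipped at
    the window border. With p_ij the relative frequency of each pair,
    H = sum(p_ij * log2(1 / p_ij)).
    """
    h, w = len(window), len(window[0])
    pairs = Counter()
    for r in range(h):
        for c in range(w):
            neigh = [window[rr][cc]
                     for rr in range(max(0, r - radius), min(h, r + radius + 1))
                     for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            j = sum(neigh) // len(neigh)  # neighbourhood mean gray level
            pairs[(window[r][c], j)] += 1
    n = h * w
    return sum((k / n) * math.log2(n / k) for k in pairs.values())


flat = [[100] * 4 for _ in range(4)]  # homogeneous patch: one (i, j) pair
checker = [[0, 255], [255, 0]]        # every neighbourhood mean is 127
print(entropy_2d(flat))     # 0.0 bits
print(entropy_2d(checker))  # 1.0 bit: (0, 127) and (255, 127), equally likely
```

Because the neighbourhood-mean term carries spatial context, 2-D entropy is often preferred over a plain 1-D histogram entropy on noisy images such as speckled SAR data.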

2. Background and Related Works

3. Methods

3.1. Edge Detection Method

3.1.1. Generate Virtual Pixel Values

3.1.2. Using 2-D Entropy to Optimize Edge Values of Pixels

3.2. Texture Feature Extraction Based on Region Selection

3.3. Specification for Generating Superpixels Based on the Edge and Texture Features
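To illustrate how an edge penalty and texture information, as described in contribution (3), can enter a clustering distance, the sketch below shows a hypothetical SLIC-style distance; it is not the EDTRS formula. The helper names `max_edge_on_segment` and `edge_penalized_distance`, the weights `m`, `w_tex` and `lam`, and the choice of penalising the strongest edge on the straight segment between a pixel and a cluster centre are all assumptions.

```python
import math


def max_edge_on_segment(edge_map, p, q):
    """Largest edge strength sampled along the straight segment from p to q.

    edge_map is a list of rows indexed as edge_map[y][x]; p and q are (x, y).
    """
    (x0, y0), (x1, y1) = p, q
    n = max(abs(x1 - x0), abs(y1 - y0))
    if n == 0:
        return edge_map[y0][x0]
    return max(edge_map[round(y0 + (y1 - y0) * t / n)][round(x0 + (x1 - x0) * t / n)]
               for t in range(n + 1))


def edge_penalized_distance(pixel, center, edge_map, S, m=10.0, w_tex=1.0, lam=50.0):
    """Hypothetical SLIC-style distance with a texture term and an edge penalty.

    pixel and center are (x, y, intensity, texture_vector). S is the seed grid
    interval, m the compactness weight, w_tex the texture weight and lam the
    edge-penalty weight; all weights are illustrative assumptions.
    """
    px, py, p_int, p_tex = pixel
    cx, cy, c_int, c_tex = center
    d_int = abs(p_int - c_int)           # intensity difference
    d_tex = math.dist(p_tex, c_tex)      # Euclidean texture-feature distance
    d_xy = math.hypot(px - cx, py - cy)  # spatial distance
    base = math.sqrt(d_int ** 2 + (w_tex * d_tex) ** 2 + (m * d_xy / S) ** 2)
    # Penalise candidates separated from the centre by a strong edge.
    return base + lam * max_edge_on_segment(edge_map, (px, py), (cx, cy))


# 5x5 edge map with a vertical edge of strength 1.0 at x = 2.
edges = [[1.0 if x == 2 else 0.0 for x in range(5)] for _ in range(5)]
center = (0, 2, 120.0, [0.3, 0.1])
same_side = (1, 2, 120.0, [0.3, 0.1])
across = (3, 2, 120.0, [0.3, 0.1])
print(edge_penalized_distance(same_side, center, edges, S=4, m=0.0))  # 0.0
print(edge_penalized_distance(across, center, edges, S=4, m=0.0))     # 50.0
```

Setting `m=0.0` in the usage example isolates the edge term: a pixel on the same side of the edge as the centre stays at distance 0, while a pixel with identical intensity and texture just across the edge is pushed away by `lam`, which discourages clusters from growing across strong edges.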

4. Results

4.1. Edge Detection and Texture Region Selection

4.2. Superpixel Results

4.2.1. Superpixel Results of Simulated SAR Images

4.2.2. Superpixel Results of Real SAR Images

4.3. Computation Cost Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | SLIC | SNIC | SEEDS | SPAMP | CAS | Proposed |
|---|---|---|---|---|---|---|
| ASA | 0.9015 | 0.9187 | 0.8910 | 0.9409 | 0.9638 | 0.9789 |
| UE | 0.2213 | 0.2409 | 0.2501 | 0.2134 | 0.2015 | 0.1204 |
| BR | 0.8126 | 0.8789 | 0.7968 | 0.8902 | 0.8919 | 0.9866 |
| CR | 1.2253 | 0.6042 | 0.4519 | 0.3188 | 0.4043 | 9.9311 |

| Metric | SLIC | SNIC | SEEDS | SPAMP | CAS | Proposed |
|---|---|---|---|---|---|---|
| ASA | 0.9215 | 0.9314 | 0.9421 | 0.9510 | 0.9329 | 0.9844 |
| UE | 0.1911 | 0.1576 | 0.1301 | 0.1209 | 0.1596 | 0.0993 |
| BR | 0.9090 | 0.9564 | 0.9609 | 0.9732 | 0.9530 | 0.9918 |
| CR | 2.6271 | 1.4289 | 0.8809 | 0.4528 | 6.2910 | 10.8972 |

| Metric | SLIC | SNIC | SEEDS | SPAMP | CAS | Proposed |
|---|---|---|---|---|---|---|
| ASA | 0.9016 | 0.9266 | 0.9402 | 0.9281 | 0.9353 | 0.95744 |
| UE | 0.0608 | 0.0603 | 0.0306 | 0.0598 | 0.0457 | 0.0257 |
| BR | 0.6474 | 0.6916 | 0.7268 | 0.7019 | 0.7240 | 0.7987 |
| CR | 2.0627 | 1.0275 | 2.1160 | 2.6723 | 1.2341 | 14.32 |
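The tables report ASA, UE, BR and CR. The first three are standard superpixel benchmark metrics (achievable segmentation accuracy, under-segmentation error and boundary recall); CR is not defined in this section and is not sketched. The following is a minimal sketch assuming common definitions, not the authors' evaluation code: ASA assigns each superpixel to the ground-truth segment it overlaps most; UE uses one widely used variant (other papers normalise differently, so values are not necessarily comparable with the tables); BR uses a tolerance of `tol=2` pixels, which is an assumption. All function names are hypothetical.

```python
from collections import Counter


def _overlaps(sp, gt):
    """Pixel counts for every (superpixel label, ground-truth label) pair."""
    return Counter((s, g) for s_row, g_row in zip(sp, gt) for s, g in zip(s_row, g_row))


def asa(sp, gt):
    """Achievable segmentation accuracy: label each superpixel with the
    ground-truth segment it overlaps most and count correctly labelled pixels."""
    ov = _overlaps(sp, gt)
    best = Counter()
    for (s, _), n in ov.items():
        best[s] = max(best[s], n)
    return sum(best.values()) / sum(ov.values())


def under_segmentation_error(sp, gt):
    """UE variant: for every overlap, count the smaller of the part inside and
    the part outside the ground-truth segment, normalised by the image size."""
    ov = _overlaps(sp, gt)
    size = Counter()
    for (s, _), n in ov.items():
        size[s] += n
    return sum(min(n, size[s] - n) for (s, _), n in ov.items()) / sum(size.values())


def _boundary(labels):
    """Pixels with at least one 4-neighbour carrying a different label."""
    h, w = len(labels), len(labels[0])
    return {(r, c) for r in range(h) for c in range(w)
            if any(0 <= r + dr < h and 0 <= c + dc < w
                   and labels[r + dr][c + dc] != labels[r][c]
                   for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)))}


def boundary_recall(sp, gt, tol=2):
    """Fraction of ground-truth boundary pixels lying within `tol` pixels
    (Chebyshev distance) of a superpixel boundary pixel."""
    gt_b, sp_b = _boundary(gt), _boundary(sp)
    if not gt_b:
        return 1.0
    hits = sum(1 for r, c in gt_b
               if any((r + dr, c + dc) in sp_b
                      for dr in range(-tol, tol + 1) for dc in range(-tol, tol + 1)))
    return hits / len(gt_b)


gt = [[0, 0, 1, 1] for _ in range(4)]  # two ground-truth segments
sp = [[0, 0, 0, 1] for _ in range(4)]  # superpixel boundary shifted by one column
print(asa(sp, gt), under_segmentation_error(sp, gt), boundary_recall(sp, gt))  # 0.75 0.5 1.0
```

Higher ASA and BR and lower UE indicate better adherence to the ground truth, which is the direction in which the Proposed column differs from the baselines above.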

| Image | SLIC | SNIC | SEEDS | SPAMP | CAS | EDTRS |
|---|---|---|---|---|---|---|
| Simulated SAR image | 0.1020 | 0.1014 | 0.0930 | 10.7392 | 0.2489 | 0.5718 |
| Real SAR image | 0.0984 | 0.0956 | 0.0867 | 10.5327 | 0.2403 | 0.5450 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, H.; Jiang, H.; Liu, Z.; Zhou, S.; Yin, X. EDTRS: A Superpixel Generation Method for SAR Images Segmentation Based on Edge Detection and Texture Region Selection. Remote Sens. 2022, 14, 5589. https://doi.org/10.3390/rs14215589
