1. Introduction

In recent years, autonomous-driving approaches and advanced driver assistance systems (ADASs) have developed at an unprecedented pace in both academia and industry [1]. Breakthroughs in deep learning and computer vision, together with the tremendous computational power of graphics processing units (GPUs), have opened the door to research on fully autonomous driving. Fully autonomous driving requires traffic-scene understanding, including traffic-sign recognition, vehicle and pedestrian detection, and road-surface recognition (e.g., road-marking recognition) [2,3]. Lane detection, a road-surface recognition task, plays a vital role in autonomous driving because road lanes delineate the drivable area for vehicles [4]. A variety of lane-detection methods have been proposed, ranging from traditional handcrafted feature-based methods to convolutional neural network (CNN)-based methods [5,6,7,8,9,10,11,12].

However, on urban roads, where autonomous driving faces complex and diverse problems, lane detection is not the only component of road-surface recognition. Beyond the drivable area delimited by road lanes, symbolic road markings on the road surface provide abundant information that can assist drivers. Road markings, which include road lanes and symbolic road markings, are paint applied to road surfaces to communicate information to drivers and pedestrians, as shown in Figure 1. Generally, a standard system of road markings conveys drivable areas, directions, speed limits, stopping, etc. [13]. It is commonly believed that understanding the abundant guidance information provided by symbolic road markings increases the safety of autonomous driving. However, publicly available datasets and approaches for road-marking recognition pay less attention to symbolic road markings than to road lanes [14].

Several commonly used, publicly available datasets have been released to evaluate algorithms in the field of autonomous driving, e.g., the KITTI Vision Benchmark Suite (KITTI) [15], the Cityscapes Dataset (Cityscapes) [16], the Mapillary Vistas Dataset (Mapillary) [17], the Cambridge-driving Labeled Video Database (CamVid) [18], BDD100K [19], the TuSimple Benchmark Dataset (TuSimple) [20], and the CurveLanes Dataset [21]. However, most of these datasets annotate only road lanes, and those that do include road markings cover only a few types. These limitations can make road-marking recognition difficult [13]. For road-marking perception, the Road Marking Detection dataset [22], released in 2013, was the first publicly available dataset; it consists of 1443 images with bounding-box annotations covering 11 symbolic road-marking classes. Most earlier works based on handcrafted features [23,24] were evaluated on this dataset. However, many images in Road Marking Detection show the same scene (e.g., the images from roadmark_1202 to roadmark_1259). Since 2017, VPGNet [25] and TRoM [14] have been the most popular road-marking recognition datasets. VPGNet contains 21,097 images with pixel-level annotations in 17 classes, whereas TRoM contains 712 images with pixel-level annotations in 19 classes. Recent CNN-based works [13,25,26,27] have addressed road-marking segmentation on VPGNet and TRoM. However, it has been reported that in both datasets, instances of symbolic road-marking classes occur far less frequently than road lanes [28]. CeyMo [28], released in 2022, is a road-marking detection dataset consisting of 2887 labeled images covering 11 road-marking classes. Although CeyMo addresses the low instance frequency of symbolic road markings, its number of classes is small relative to the variety of road markings found in the real world.

As described above, road-marking perception remains an open challenge because of the lack of datasets and the limitations of existing ones. Hence, in this study, we created a new dataset, the Road Marking Dataset (RMD), that reflects the real-world conditions of urban road markings. The RMD contains 3221 carefully labeled images in 30 classes, collected in three cities in Japan (Yokohama, Chofu, and Nogata). It covers 29 categories of road markings on urban road surfaces, the largest number of classes among the existing datasets.

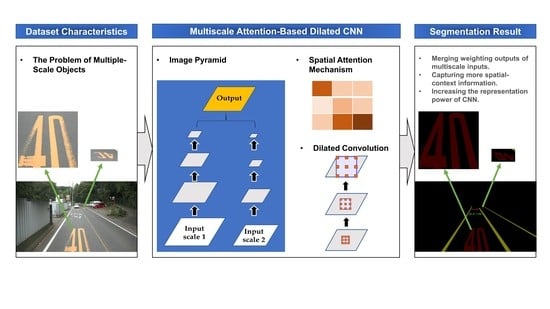

The RMD contains both road lanes and symbolic road markings, and this study focuses on road-marking segmentation. To this end, a multiscale attention-based dilated convolutional neural network (MSA-DCNN) is proposed and applied to the RMD. The MSA-DCNN takes as inputs several images resized from the original image to different scales, learns attention weights for each scale, and then merges the per-scale semantic predictions to obtain the final output. In addition, dilated convolution [29] is adopted in the feature-extraction process to exploit spatial-context information over a larger range. The proposed method is mainly inspired by previous studies [30,31]. Chen et al. [30] resized the input images to several scales, passed them through a shared network, and showed that multiscale inputs improve semantic-segmentation performance compared with a single-scale input. The experiments in [31] show that large objects are segmented better in downscaled images with fewer pixels, because the receptive field of the CNN then covers more global context, whereas fine details, such as thin structures, are predicted better in upscaled images with more pixels.
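The merging step described above can be illustrated with a minimal sketch of per-pixel soft attention over scales. It assumes that each scale's class scores have already been resized back to a common output resolution and flattened, and that an attention head (hypothetical here) produces one logit per scale and pixel; a softmax across scales turns these logits into weights that sum to 1 at every pixel. The names `fuse_multiscale` and `att_logits` are illustrative and are not taken from the MSA-DCNN implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_multiscale(preds, att_logits):
    """Merge per-scale predictions with per-pixel soft attention over scales.

    preds[s][c][i]: score of class c at pixel i for scale s, assumed to be
        already resized back to a common output resolution and flattened.
    att_logits[s][i]: attention logit of scale s at pixel i (hypothetical
        output of an attention head).
    Returns fused[c][i] = sum_s w[s][i] * preds[s][c][i], where w[:, i] is the
    softmax of the attention logits across scales at pixel i.
    """
    num_scales = len(preds)
    num_classes = len(preds[0])
    num_pixels = len(preds[0][0])
    fused = [[0.0] * num_pixels for _ in range(num_classes)]
    for i in range(num_pixels):
        weights = softmax([att_logits[s][i] for s in range(num_scales)])
        for c in range(num_classes):
            fused[c][i] = sum(weights[s] * preds[s][c][i] for s in range(num_scales))
    return fused


# Toy example: two scales, two classes, two pixels.
preds = [
    [[1.0, 0.0], [0.0, 1.0]],  # scale 0 (e.g., a downscaled input)
    [[0.0, 1.0], [1.0, 0.0]],  # scale 1 (e.g., an upscaled input)
]
# Pixel 0 mostly trusts scale 0; pixel 1 mostly trusts scale 1.
att_logits = [[4.0, -4.0], [-4.0, 4.0]]
fused = fuse_multiscale(preds, att_logits)
```

Because the weights are computed per pixel, the network can rely on a coarse scale for large markings and on a fine scale for thin lane lines within the same image, which matches the observation from [31] above.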

The remainder of this paper is organized as follows. Section 2 describes the related work. Section 3 introduces the proposed dataset. Section 4 presents the proposed method. Section 5 presents the results of the experiments. Finally, Section 6 concludes the paper.

3. Proposed Dataset

The proposed RMD is a new dataset for the semantic segmentation of road markings, including both symbolic road markings and road lanes. It comprises 3221 road-surface images with pixel-level annotations of 29 road-marking categories, at a resolution of 1920 × 1080 pixels. The RMD was built to compensate for the lack of datasets and the limitations of existing datasets in road-marking recognition, as discussed in Section 1. The raw data were collected by a camera mounted inside a vehicle in three cities in Japan: Yokohama, Chofu, and Nogata, in November 2015, November 2015, and March 2017, respectively. From the 9779 road-surface frames obtained, 3221 representative scenes were extracted to compose the RMD, ensuring that each image contains at least one symbolic road marking.

As shown in Figure 1, a variety of scenarios, namely (a) ordinary, (b) shadow, (c) dazzle light, (d) occlusion, (e) deteriorated road markings, and (f) narrow road, were carefully selected when designing the RMD. To the best of our knowledge, the RMD covers more categories than any other existing dataset, as shown in Table 1.

Labelme [39], a graphical image-annotation tool, was used to annotate the raw images manually. Both symbolic road markings and road lanes were annotated as polygons following their shapes. After annotation, the generated JSON files were converted into pixel-level segmentation masks. Examples from the RMD are shown in Figure 3. Note that, for road markings consisting of multiple Japanese characters, only the key characters were annotated, as shown in Figure 3d.
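The text does not specify how the JSON files were converted into masks. The following minimal pure-Python sketch shows one way to do it, assuming the standard Labelme JSON layout (top-level `imageHeight`, `imageWidth`, and a `shapes` list whose entries carry `label`, `points`, and `shape_type`) and a hypothetical `label_to_id` mapping, for example the category IDs of Table 2. Each pixel is assigned to a polygon if the pixel center lies inside it according to the even-odd rule. Labelme also ships its own conversion utilities, and this sketch does not reproduce them.

```python
import json
import math


def point_in_polygon(x, y, polygon):
    """Even-odd (ray-casting) test of point (x, y) against polygon vertices."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def labelme_to_mask(labelme_json, label_to_id, background_id=0):
    """Rasterize Labelme polygon shapes into a 2D class-ID mask.

    Pixel (row, col) gets a polygon's class ID if its center lies inside it.
    Shapes are drawn in file order, so later shapes overwrite earlier ones.
    """
    data = json.loads(labelme_json)
    h, w = data["imageHeight"], data["imageWidth"]
    mask = [[background_id] * w for _ in range(h)]
    for shape in data["shapes"]:
        if shape.get("shape_type", "polygon") != "polygon":
            continue  # this sketch only handles polygon annotations
        class_id = label_to_id[shape["label"]]
        poly = [(float(px), float(py)) for px, py in shape["points"]]
        # Only test pixels inside the polygon's bounding box, clipped to the image.
        r0 = max(0, math.floor(min(py for _, py in poly)))
        r1 = min(h, math.ceil(max(py for _, py in poly)) + 1)
        c0 = max(0, math.floor(min(px for px, _ in poly)))
        c1 = min(w, math.ceil(max(px for px, _ in poly)) + 1)
        for row in range(r0, r1):
            for col in range(c0, c1):
                if point_in_polygon(col + 0.5, row + 0.5, poly):
                    mask[row][col] = class_id
    return mask


# Hypothetical 4 x 4 annotation containing one square "crosswalk" polygon.
doc = json.dumps({
    "imageHeight": 4,
    "imageWidth": 4,
    "shapes": [{"label": "crosswalk", "points": [[1, 1], [3, 1], [3, 3], [1, 3]],
                "shape_type": "polygon"}],
})
mask = labelme_to_mask(doc, {"crosswalk": 5})
```

Restricting the test to each polygon's bounding box keeps the cost proportional to the annotated area rather than the full 1920 × 1080 frame; a production pipeline would more likely use a vectorized or library-based polygon fill.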

The RMD is divided into training and test sets at a ratio of approximately 9:1, corresponding to 2900 and 321 images, respectively.
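The text does not say how the images were assigned to the two sets. A minimal sketch, assuming a simple seeded random split that holds out 321 of the 3221 images for testing, is shown below; the file names are hypothetical placeholders.

```python
import random


def split_dataset(image_ids, num_test, seed=0):
    """Randomly hold out num_test images for testing; the rest form the training set."""
    ids = sorted(image_ids)  # fixed starting order so the split is reproducible
    rng = random.Random(seed)
    rng.shuffle(ids)
    return ids[num_test:], ids[:num_test]


# Hypothetical file names standing in for the 3221 RMD images.
all_ids = [f"rmd_{k:05d}.png" for k in range(3221)]
train_ids, test_ids = split_dataset(all_ids, num_test=321)
print(len(train_ids), len(test_ids))  # -> 2900 321
print(f"{len(train_ids) / len(test_ids):.2f} : 1")  # -> 9.03 : 1
```

Because neighbouring frames from the same drive can show nearly the same scene, grouping images by scene before splitting is one way to keep near-duplicates out of both sets; that refinement is omitted here.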

Table 2 lists the ID and RGB value of each category, together with the number of images containing each category in the training set, the test set, and in total. Because a single road-surface image may contain several road markings (Figure 1e), including multiple instances of the same marking, the number of instances of a category exceeds the number of images containing it. Because of the class imbalance shown in Table 2, the RMD should be extended with more road-surface images containing symbolic road markings.
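The distinction between per-category image counts and instance counts can be made concrete with a small sketch. It assumes that each annotated polygon is one instance, so an image's instance labels could be read from its Labelme `shapes` list; the category names and annotations below are hypothetical and are not taken from Table 2.

```python
from collections import Counter


def count_images_and_instances(annotations):
    """Count, per category, the images containing it and its total instances.

    annotations: one list of category labels per image, one entry per instance.
    """
    image_counts = Counter()
    instance_counts = Counter()
    for labels in annotations:
        instance_counts.update(labels)      # every instance counts
        image_counts.update(set(labels))    # each category counts once per image
    return image_counts, instance_counts


# Hypothetical annotations for three images.
annotations = [
    ["crosswalk", "arrow_straight", "arrow_straight"],
    ["arrow_straight", "stop_line"],
    ["crosswalk"],
]
images, instances = count_images_and_instances(annotations)
print(images["arrow_straight"], instances["arrow_straight"])  # -> 2 3
# Ratio of the most to the least frequent category, a simple imbalance measure.
print(max(instances.values()) / min(instances.values()))  # -> 3.0
```

Since an image can hold several instances of a category but is counted only once for it, the instance count is always at least the image count, as noted above.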

6. Conclusions

In this study, we introduced the RMD, a new dataset for the semantic segmentation of road markings, to compensate for the lack of datasets and the limitations of existing datasets in road-marking recognition. The RMD comprises 3221 well-annotated road-surface images with pixel-level labels for 29 road-marking categories, at a resolution of 1920 × 1080. It is divided into training and test sets at a ratio of approximately 9:1, corresponding to 2900 and 321 images, respectively.

We focused on the presence of objects at multiple scales in the RMD and investigated four kinds of network architectures that handle multiscale context. Inspired by previous studies, we proposed a novel MSA-DCNN for the RMD. It adopts an attention module that softly weights the feature maps from different scales, together with dilated convolution, which enlarges the receptive field of the feature maps so that a larger range of spatial-context information can be used. The two models that employ (Model 1) and (Model 2) were trained to evaluate five kinds of multiscale inputs on the RMD. At inference time, Model 2, with , achieved the best mIoU of 74.88%. The ablation study shows that the MSA-DCNN yields the best results when multiscale attention and dilated convolution are combined. Additionally, it achieves better results than the other state-of-the-art models compared.
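How dilated convolution enlarges the receptive field without adding weights can be shown with a one-dimensional sketch: the taps of a k-tap kernel are spaced `dilation` samples apart, so one layer spans (k - 1) · dilation + 1 inputs, and stacked stride-1 layers add (k - 1) · dilation each. The dilation rates below are hypothetical, since the text does not state the rates used in the MSA-DCNN; in 2D the same reasoning applies independently along each axis.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Valid 1D dilated convolution (cross-correlation, as used in CNN layers).

    Kernel taps are spaced `dilation` samples apart, so a k-tap kernel spans
    (k - 1) * dilation + 1 input samples without adding parameters.
    """
    k = len(kernel)
    span = (k - 1) * dilation + 1
    return [
        sum(kernel[j] * signal[start + j * dilation] for j in range(k))
        for start in range(len(signal) - span + 1)
    ]


def receptive_field(kernel_sizes, dilations):
    """Receptive field (in input samples) of stacked stride-1 convolutions."""
    rf = 1
    for k, d in zip(kernel_sizes, dilations):
        rf += (k - 1) * d
    return rf


# Three 3-tap layers: ordinary rates vs. exponentially increasing (hypothetical) rates.
print(receptive_field([3, 3, 3], [1, 1, 1]))  # -> 7
print(receptive_field([3, 3, 3], [1, 2, 4]))  # -> 15
print(dilated_conv1d([0, 1, 2, 3, 4, 5, 6], [1, 0, -1], dilation=2))  # -> [-4, -4, -4]
```

With the same nine weights, the dilated stack sees 15 input samples instead of 7, which is the kind of wider spatial context the MSA-DCNN exploits for large road markings.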

The RMD should be continuously extended with more road-surface images containing symbolic road markings to mitigate class imbalance. The proposed MSA-DCNN focuses on segmentation accuracy rather than real-time performance. In future work, we will design a road-marking-segmentation algorithm that is both accurate and real-time to meet the diverse needs of road-marking recognition.