High-Resolution Rice Mapping Based on SNIC Segmentation and Multi-Source Remote Sensing Images

Abstract

1. Introduction

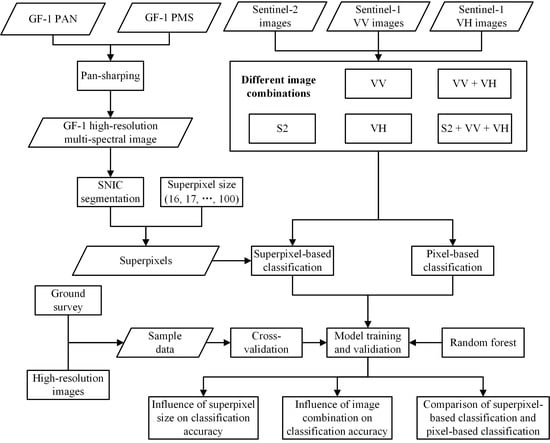

2. Materials

2.1. Study Area

2.2. Remote Sensing Data and Pre-Processing

2.2.1. Remote Sensing Data

2.2.2. Pre-Processing of Remote Sensing Data

2.3. Ground Survey and Sampling

3. Methodology

3.1. Superpixel Segmentation Based on Simple Non-Iterative Clustering (SNIC)

3.2. Classification Based on Random Forest

4. Results

4.1. Rice Mapping Based on SNIC and Multi-Source Remote Sensing Images

4.2. Comparison of SNIC Superpixel-Based Classification and Pixel-Based Classification

5. Discussion

6. Conclusions

- The superpixel size has a significant influence on the accuracy of SNIC-based classification of high-resolution images.

- Combining optical and SAR images can increase the accuracy of superpixel-based rice mapping compared with using optical or SAR images alone.

- SNIC superpixel-based classification significantly outperforms pixel-based classification for all five image combinations, especially when only time-series Sentinel-1 SAR images are used.
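The conclusions contrast SNIC superpixel-based classification with pixel-based classification. One common way to classify at the superpixel level, assumed here rather than taken from the paper, is to average each band's values within a superpixel and classify that mean vector once, so every pixel in the superpixel receives the same class. In this sketch, `classify` is a hypothetical stand-in for the trained random forest, and the function names are illustrative only.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence

LabelGrid = List[List[int]]
FeatureGrid = List[List[Sequence[float]]]


def superpixel_means(labels: LabelGrid, features: FeatureGrid) -> Dict[int, List[float]]:
    """Average the per-pixel feature vectors inside each superpixel."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = defaultdict(int)
    for lab_row, feat_row in zip(labels, features):
        for k, f in zip(lab_row, feat_row):
            if k not in sums:
                sums[k] = [0.0] * len(f)
            for i, v in enumerate(f):
                sums[k][i] += v
            counts[k] += 1
    return {k: [s / counts[k] for s in sums[k]] for k in sums}


def classify_by_superpixel(
    labels: LabelGrid,
    features: FeatureGrid,
    classify: Callable[[Sequence[float]], str],
) -> List[List[str]]:
    """Classify each superpixel once from its mean features, then copy the class to its pixels."""
    means = superpixel_means(labels, features)
    classes = {k: classify(m) for k, m in means.items()}
    return [[classes[k] for k in row] for row in labels]


def classify_by_pixel(features: FeatureGrid, classify: Callable[[Sequence[float]], str]) -> List[List[str]]:
    """Pixel-based baseline: each pixel is classified independently."""
    return [[classify(f) for f in row] for row in features]
```

With a toy rule such as `lambda m: "rice" if m[0] > 0.5 else "other"`, an isolated low-valued pixel inside a high-valued superpixel takes the superpixel's class, whereas `classify_by_pixel` labels it on its own; this suppression of isolated misclassified pixels is one plausible reason superpixel-based maps tend to be less noisy.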

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Band Name | Wavelength (μm) | Resolution (m) |
|---|---|---|---|
| Panchromatic | Panchromatic | 0.45–0.90 | 2 |
| Multi-spectral | Blue | 0.45–0.52 | 8 |
| | Green | 0.52–0.59 | 8 |
| | Red | 0.63–0.69 | 8 |
| | Near infrared | 0.77–0.89 | 8 |
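The GF-1 table lists a 2 m panchromatic band and four 8 m multispectral bands, and the SNIC settings refer to a GF-1 pan-sharpened image, so the multispectral bands are fused with the panchromatic band at a 4:1 resolution ratio. The pan-sharpening method is not stated in this section. The sketch below uses the Brovey transform purely as an assumed, illustrative choice, with nearest-neighbor upsampling (also an assumption) and small nested lists standing in for real rasters.

```python
from typing import List

Grid = List[List[float]]


def upsample_nearest(band: Grid, factor: int) -> Grid:
    """Nearest-neighbor upsampling: each coarse pixel becomes a factor x factor block."""
    out: Grid = []
    for row in band:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def brovey_pansharpen(ms_bands: List[Grid], pan: Grid, factor: int = 4) -> List[Grid]:
    """Brovey-style fusion (assumed method): each upsampled MS band is scaled by
    PAN / intensity, where intensity is the per-pixel mean of the upsampled MS bands."""
    up = [upsample_nearest(b, factor) for b in ms_bands]
    rows, cols = len(pan), len(pan[0])
    if len(up[0]) != rows or len(up[0][0]) != cols:
        raise ValueError("PAN must be `factor` times larger than the MS grid in each dimension")
    fused = [[[0.0] * cols for _ in range(rows)] for _ in up]
    for i in range(rows):
        for j in range(cols):
            intensity = sum(b[i][j] for b in up) / len(up)
            ratio = pan[i][j] / intensity if intensity > 0 else 0.0
            for k, b in enumerate(up):
                fused[k][i][j] = b[i][j] * ratio
    return fused
```

By construction, the per-pixel mean of the fused bands equals the panchromatic value, which is how the 2 m spatial detail is injected; Brovey is known to distort spectra, so other fusion methods may be preferable for spectral classification.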

| Band Name | Description | Central Wavelength (μm) | Resolution (m) |
|---|---|---|---|
| B1 | Aerosols | 0.4439 | 60 |
| B2 | Blue | 0.4966 | 10 |
| B3 | Green | 0.5600 | 10 |
| B4 | Red | 0.6645 | 10 |
| B5 | Red edge 1 | 0.7039 | 20 |
| B6 | Red edge 2 | 0.7402 | 20 |
| B7 | Red edge 3 | 0.7825 | 20 |
| B8 | Near infrared | 0.8351 | 10 |
| B8A | Red edge 4 | 0.8648 | 20 |
| B9 | Water vapor | 0.9450 | 60 |
| B10 | Cirrus | 1.3735 | 60 |
| B11 | Short-wave infrared 1 | 1.6137 | 20 |
| B12 | Short-wave infrared 2 | 2.2024 | 20 |
| QA | Cloud mask | - | 60 |
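The Sentinel-2 table includes a 60 m QA cloud-mask band. Assuming it is the standard Level-1C `QA60` bitmask, in which bit 10 flags opaque clouds and bit 11 flags cirrus clouds, a per-pixel clear-sky test reduces to two bit checks. This section does not say how the authors applied the mask; the sketch only illustrates the bit logic, and the function names are hypothetical.

```python
from typing import List

# Assumed bit layout of the Sentinel-2 Level-1C QA60 band:
# bit 10 = opaque clouds, bit 11 = cirrus clouds.
OPAQUE_CLOUD_BIT = 10
CIRRUS_BIT = 11


def is_clear(qa_value: int) -> bool:
    """True when neither the opaque-cloud bit nor the cirrus bit is set."""
    opaque = (qa_value >> OPAQUE_CLOUD_BIT) & 1
    cirrus = (qa_value >> CIRRUS_BIT) & 1
    return opaque == 0 and cirrus == 0


def clear_mask(qa: List[List[int]]) -> List[List[bool]]:
    """Per-pixel clear-sky mask for a QA grid (True = keep the pixel)."""
    return [[is_clear(v) for v in row] for row in qa]
```

Because the QA band is delivered at 60 m, a mask built this way would still need to be resampled to the 10 m or 20 m grid of the spectral bands before use.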

| SNIC Input Image | Superpixel Size | Image Combinations |
|---|---|---|
| GF-1 pan-sharpened image | 16–100 | Sentinel-2 MSI (S2) |
| | | Sentinel-1 C-SAR VV (VV) |
| | | Sentinel-1 C-SAR VH (VH) |
| | | Sentinel-1 C-SAR VV + VH (VV + VH) |
| | | Sentinel-1 C-SAR VV + VH + Sentinel-2 MSI (S2 + VV + VH) |
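The SNIC settings table indicates that SNIC was applied to the GF-1 pan-sharpened image with superpixel sizes from 16 to 100. The following is a minimal pure-Python sketch of the SNIC idea described by Achanta and Süsstrunk (2017): seeds on a regular grid, a single priority queue, and superpixel centroids updated online as pixels are absorbed, so no iterations are needed. Assumptions: `size` is treated as the seed spacing in pixels; the distance adds the squared spectral difference to the squared spatial distance weighted by (compactness / size)², a SLIC-style normalization that may differ from the original paper and from the implementation the authors used; and 4-connectivity is used. It is far too slow for real scenes and only shows the mechanics.

```python
import heapq
from typing import List, Sequence, Tuple


def snic(image: List[List[Sequence[float]]], size: int, compactness: float = 1.0) -> List[List[int]]:
    """Minimal SNIC-style superpixel segmentation (illustrative sketch).

    image: rows x cols grid of per-pixel feature vectors.
    size: seed spacing in pixels (assumed meaning of 'superpixel size').
    compactness: weight of the spatial term relative to the spectral term.
    Returns a rows x cols grid of superpixel labels.
    """
    rows, cols, nfeat = len(image), len(image[0]), len(image[0][0])
    labels = [[-1] * cols for _ in range(rows)]
    sum_pos: List[List[float]] = []   # running sums of (row, col) per superpixel
    sum_feat: List[List[float]] = []  # running sums of features per superpixel
    counts: List[int] = []
    heap: List[Tuple[float, int, int, int, int]] = []
    counter = 0  # insertion order, used as a tie-breaker between equal distances
    # Seeds on a regular grid, offset by half the spacing.
    for r in range(size // 2, rows, size):
        for c in range(size // 2, cols, size):
            sum_pos.append([0.0, 0.0])
            sum_feat.append([0.0] * nfeat)
            counts.append(0)
            heapq.heappush(heap, (0.0, counter, r, c, len(counts) - 1))
            counter += 1
    if not counts:
        raise ValueError("size is too large for this image: no seeds were placed")
    spatial_w = (compactness / size) ** 2
    while heap:
        _, _, r, c, k = heapq.heappop(heap)
        if labels[r][c] != -1:
            continue  # already claimed by a superpixel that reached it at lower cost
        labels[r][c] = k
        # Online centroid update for superpixel k with the newly labeled pixel.
        counts[k] += 1
        sum_pos[k][0] += r
        sum_pos[k][1] += c
        for f in range(nfeat):
            sum_feat[k][f] += image[r][c][f]
        n = counts[k]
        cr, cc = sum_pos[k][0] / n, sum_pos[k][1] / n
        cf = [s / n for s in sum_feat[k]]
        # Push unlabeled 4-connected neighbors with their distance to the updated centroid.
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] == -1:
                d_feat = sum((image[nr][nc][f] - cf[f]) ** 2 for f in range(nfeat))
                d_pos = (nr - cr) ** 2 + (nc - cc) ** 2
                heapq.heappush(heap, (d_feat + spatial_w * d_pos, counter, nr, nc, k))
                counter += 1
    return labels
```

Larger `size` values place fewer seeds and so produce fewer, larger superpixels; this is the parameter whose influence on accuracy the conclusions highlight.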


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, L.; Wang, L.; Abubakar, G.A.; Huang, J. High-Resolution Rice Mapping Based on SNIC Segmentation and Multi-Source Remote Sensing Images. Remote Sens. 2021, 13, 1148. https://doi.org/10.3390/rs13061148
