DRE-SLAM: Dynamic RGB-D Encoder SLAM for a Differential-Drive Robot

Abstract

1. Introduction

- A robust and accurate optimization-based framework that fuses the information from an RGB-D camera and two wheel encoders in dynamic environments.

- A dynamic pixel detection and culling method that does not require a GPU and can detect dynamic pixels on both predefined and undefined moving objects.

- A submap-based OctoMap construction method that can speed up the map rebuilding process and reduce memory consumption.
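The idea behind the third contribution can be illustrated, under heavy simplification, by the sketch below. It is not the authors' implementation. It assumes that each submap keeps its occupied voxels in the frame of a reference keyframe, so that after loop closure only the submap poses change and the global map can be rebuilt by re-placing submaps instead of re-inserting every keyframe's point cloud. A Python `set` of integer voxel keys stands in for an OctoMap (no occupancy probabilities, no ray casting), the pose is planar, and the names `Submap`, `fuse`, and `anchor_pose` are hypothetical.

```python
import math

# Hypothetical 2D illustration: each map is a set of occupied voxel keys,
# not a real OctoMap octree (no occupancy probabilities, no ray casting).


def voxel_key(x, y, voxel):
    # Integer grid index of a point for the given voxel size.
    return (math.floor(x / voxel), math.floor(y / voxel))


def transform(pose, point):
    # pose = (tx, ty, theta): planar rigid transform, submap frame -> world frame.
    tx, ty, th = pose
    c, s = math.cos(th), math.sin(th)
    x, y = point
    return (tx + c * x - s * y, ty + s * x + c * y)


class Submap:
    def __init__(self, anchor_pose, voxel):
        self.anchor_pose = anchor_pose  # pose of the submap's reference keyframe
        self.voxel = voxel
        self.cells = set()              # occupied cells in the submap's own frame

    def insert_points(self, points):
        # Points are given in the submap frame; duplicates collapse into one cell.
        for x, y in points:
            self.cells.add(voxel_key(x, y, self.voxel))


def fuse(submaps, voxel):
    # Re-place every submap with its current (possibly loop-corrected) anchor
    # pose. The cost grows with the number of occupied cells, not raw points.
    world = set()
    for sm in submaps:
        for i, j in sm.cells:
            center = ((i + 0.5) * sm.voxel, (j + 0.5) * sm.voxel)
            wx, wy = transform(sm.anchor_pose, center)
            world.add(voxel_key(wx, wy, voxel))
    return world


# Usage: two submaps are built once, then fused again after a pose correction.
a = Submap((0.0, 0.0, 0.0), 0.1)
b = Submap((1.0, 0.0, 0.0), 0.1)
for sm in (a, b):
    sm.insert_points([(0.05, 0.05), (0.07, 0.02), (0.23, 0.04)])
before = fuse([a, b], 0.1)
b.anchor_pose = (1.2, 0.0, 0.0)  # e.g., corrected after loop closure
after = fuse([a, b], 0.1)
```

Re-voxelizing cell centers after a rotation can merge or split cells; a real implementation has to account for this discretization effect.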

2. Related Work

2.1. Sensor Fusion

2.2. Dynamic Pixels Detection

2.3. Simultaneous Localization and Dense Mapping

2.4. Simultaneous Localization and Dense Mapping for Dynamic Scenes

3. Preliminaries

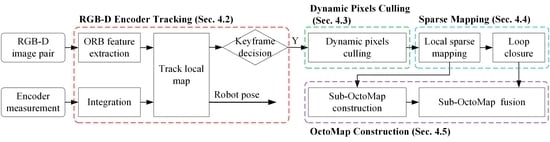

4. Dynamic RGB-D Encoder SLAM (DRE-SLAM)

4.1. System Overview

4.2. RGB-D Encoder Tracking

4.2.1. ORB Feature Extraction

4.2.2. Encoder Integration

4.2.3. Local Map Tracking

4.2.4. Keyframe Decision

- frames have passed since the last keyframe. This condition leaves enough time between two adjacent keyframes for dynamic pixel culling, sparse mapping, and OctoMap construction. We set to be the output frequency of the RGB-D camera, which ensures that at least 1 s elapses between two adjacent keyframes. If the robot moves too fast, the distance between two consecutive keyframes may become too large, which leads to excessive encoder integration error. The two erroneous match detection policies in Section 4.2.3 may then become invalid, the system accuracy decreases, and there may not be enough co-visible area for mapping. Thus, we recommend operating the robot at a low speed, e.g., below 0.4 m/s.

- The distance or angle between the current frame and the last keyframe is larger than threshold or , respectively. On the one hand, this keeps the encoder integration from diverging too much, so that the erroneous match detection remains effective and robot pose tracking stays accurate. On the other hand, it ensures enough viewing overlap between keyframes for OctoMap construction. In our implementation, we set these two parameters to m and rad.
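A minimal sketch of the keyframe test follows. It assumes that a frame becomes a keyframe only when both conditions hold, which is consistent with the warning about fast motion (that warning only makes sense if the frame-count condition cannot be bypassed). The pose is taken as planar (x, y, θ), which suits a differential-drive robot. `min_frames`, `dist_thresh`, and `angle_thresh` are hypothetical names for the frame, distance, and angle thresholds, and the numbers used below are placeholders, not the paper's settings.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float      # m
    y: float      # m
    theta: float  # heading, rad


def angle_diff(a, b):
    # Signed difference a - b wrapped into [-pi, pi).
    return (a - b + math.pi) % (2.0 * math.pi) - math.pi


def is_new_keyframe(frames_since_kf, cur, last_kf,
                    min_frames, dist_thresh, angle_thresh):
    # Condition 1: enough frames (i.e., time) since the last keyframe.
    enough_time = frames_since_kf >= min_frames
    # Condition 2: translation or rotation since the last keyframe is large enough.
    dist = math.hypot(cur.x - last_kf.x, cur.y - last_kf.y)
    angle = abs(angle_diff(cur.theta, last_kf.theta))
    enough_motion = dist > dist_thresh or angle > angle_thresh
    # Assumption: a keyframe is created only when both conditions hold.
    return enough_time and enough_motion


# Placeholder settings: a 30 Hz camera and illustrative thresholds.
CAMERA_HZ = 30
last = Pose2D(0.0, 0.0, 0.0)
print(is_new_keyframe(30, Pose2D(0.5, 0.0, 0.0), last, CAMERA_HZ, 0.25, 0.25))  # True
print(is_new_keyframe(10, Pose2D(0.5, 0.0, 0.0), last, CAMERA_HZ, 0.25, 0.25))  # False
```

With the frame threshold equal to the camera rate, keyframes are at least 1 s apart, so a robot moving at 0.4 m/s travels at least 0.4 m between keyframes; faster motion lengthens this baseline and the encoder drift accumulated over it.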

4.3. Dynamic Pixels Culling

4.3.1. Objects Detection

4.3.2. Multiview Constraint-Based Dynamic Pixels Culling

4.4. Sparse Mapping

4.4.1. Sparse Mapping

4.4.2. Loop Closure

4.5. OctoMap Construction

4.5.1. Sub-OctoMap Construction

4.5.2. Sub-OctoMap Fusion

4.6. System Initialization

5. Experiments

5.1. Comparative Tests

5.1.1. Pose Accuracy

5.1.2. Map Quality

5.1.3. Robustness

5.2. Performance of Dynamic Pixels Culling

5.3. Extension Tests

5.4. Performance of OctoMap Construction

5.5. Timing Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Sequence | DRE-SLAM Trans. | DRE-SLAM Rot. | RTAB-MAP Trans. | RTAB-MAP Rot. | StaticFusion Trans. | StaticFusion Rot. | Co-Fusion Trans. | Co-Fusion Rot. |
|---|---|---|---|---|---|---|---|---|
| O-ST1 | 0.0171 | 0.0252 | 0.0574 | 0.0934 | 0.6896 | 0.2304 | 0.2136 | 0.1091 |
| O-ST2 | 0.0233 | 0.0436 | 0.0429 | 0.0633 | 2.2755 | 2.8240 | 0.2114 | 0.1266 |
| O-ST3 | 0.0218 | 0.0335 | 0.0964 | 0.1406 | 3.4684 | 1.8022 | 0.3499 | 0.2061 |
| L-ST1 | 0.0274 | 0.0461 | 0.0422 | 0.0672 | 0.1575 | 0.2352 | 0.1361 | 0.0950 |
| L-ST2 | 0.0116 | 0.0403 | 0.0216 | 0.0364 | 0.2429 | 0.2784 | 0.1726 | 0.1017 |
| L-ST3 | 0.0212 | 0.0315 | 0.0447 | 0.0648 | 0.1951 | 0.1846 | 0.1641 | 0.1427 |
| C-ST1 | 0.0162 | 0.0209 | 0.0339 | 0.0433 | 0.8425 | 0.6428 | 0.2174 | 0.1427 |
| C-ST2 | 0.0088 | 0.0192 | 0.1342 | 0.0991 | 0.4140 | 0.2310 | 0.1889 | 0.1287 |
| C-ST3 | 0.0129 | 0.0246 | 0.0228 | 0.0401 | 0.3572 | 0.2095 | 0.1980 | 0.1852 |
| O-LD1 | 0.0108 | 0.0185 | 0.0655 | 0.0915 | 1.0082 | 1.1706 | 0.3272 | 0.2191 |
| O-LD2 | 0.0162 | 0.0309 | 0.0683 | 0.0797 | 0.7561 | 0.8079 | 0.7212 | 0.4193 |
| O-LD3 | 0.0175 | 0.0236 | 0.0915 | 0.0818 | 2.0426 | 2.6095 | 1.2238 | 0.3720 |
| L-LD1 | 0.0133 | 0.0270 | 0.0321 | 0.0561 | 0.1912 | 0.2503 | 0.3934 | 0.1662 |
| L-LD2 | 0.0110 | 0.0262 | 0.0325 | 0.0396 | 0.2037 | 0.1894 | 0.5229 | 0.6387 |
| L-LD3 | 0.0206 | 0.0358 | 0.0227 | 0.0451 | 0.2105 | 0.1836 | 0.3281 | 0.2751 |
| C-LD1 | 0.0231 | 0.0317 | 0.0087 | 0.0252 | 1.4949 | 1.2988 | 0.4433 | 0.3749 |
| C-LD2 | 0.0184 | 0.0274 | 0.0374 | 0.0211 | 2.4187 | 1.4065 | 0.6043 | 0.2630 |
| C-LD3 | 0.0164 | 0.0549 | 0.0072 | 0.0246 | 3.2005 | 2.5659 | 0.2238 | 0.3615 |
| O-HD1 | 0.0141 | 0.0229 | 0.1627 | 0.1739 | 4.7680 | 2.4754 | 0.7468 | 0.3635 |
| O-HD2 | 0.0284 | 0.0331 | 0.1396 | 0.1329 | 2.2350 | 2.6707 | 0.7736 | 1.0450 |
| O-HD3 | 0.0127 | 0.0256 | 0.0896 | 0.0846 | 0.8233 | 1.3533 | 0.4835 | 0.3002 |
| L-HD1 | 0.0136 | 0.0317 | 0.0277 | 0.0332 | 2.6896 | 1.2771 | 1.2393 | 1.1438 |
| L-HD2 | 0.0262 | 0.0380 | 0.0211 | 0.0258 | 1.4187 | 2.0547 | 0.8072 | 1.0425 |
| L-HD3 | 0.0190 | 0.0314 | 0.0340 | 0.0471 | 2.1998 | 2.1153 | 0.7263 | 0.6762 |
| C-HD1 | 0.0095 | 0.0292 | 0.0127 | 0.0357 | 0.5868 | 0.2994 | 0.8212 | 0.4649 |
| C-HD2 | 0.0225 | 0.0519 | 0.0111 | 0.0195 | 1.8197 | 2.8022 | 1.3695 | 0.4685 |
| C-HD3 | 0.0222 | 0.0575 | 0.0257 | 0.0416 | 1.3683 | 2.3261 | 0.5095 | 0.2080 |

| Voxel Size (m) | Method | 5-KF Submaps | 10-KF Submaps | 15-KF Submaps | 20-KF Submaps |
|---|---|---|---|---|---|
| 0.05 | Retrace | 78,458.12 | 79,088.49 | 79,063.69 | 78,568.92 |
| | Our approach | 13,963.45 | 11,558.43 | 10,189.10 | 10,308.59 |
| | Improvement | 82.20% | 85.39% | 87.11% | 86.88% |
| 0.10 | Retrace | 12,754.71 | 12,607.22 | 12,712.09 | 12,730.30 |
| | Our approach | 2198.32 | 1682.83 | 1470.14 | 1416.18 |
| | Improvement | 82.76% | 86.65% | 88.44% | 88.88% |
| 0.15 | Retrace | 5476.40 | 5453.38 | 5549.65 | 5472.54 |
| | Our approach | 768.58 | 583.72 | 489.83 | 429.21 |
| | Improvement | 85.97% | 89.30% | 91.17% | 92.16% |
| 0.20 | Retrace | 3576.74 | 3694.08 | 3647.32 | 3728.31 |
| | Our approach | 324.03 | 238.94 | 246.99 | 196.73 |
| | Improvement | 90.94% | 93.53% | 93.23% | 94.72% |

| Voxel Size (m) | Method | 5-KF Submaps | 10-KF Submaps | 15-KF Submaps | 20-KF Submaps |
|---|---|---|---|---|---|
| 0.05 | Retrace | 768.57 | 772.14 | 769.51 | 781.48 |
| | Our approach | 630.22 | 562.91 | 479.58 | 546.55 |
| | Improvement | 18.00% | 27.10% | 37.68% | 30.06% |
| 0.10 | Retrace | 770.69 | 768.68 | 773.55 | 773.81 |
| | Our approach | 95.24 | 77.92 | 67.56 | 66.47 |
| | Improvement | 87.64% | 89.86% | 91.27% | 91.41% |
| 0.15 | Retrace | 776.46 | 771.22 | 771.98 | 782.25 |
| | Our approach | 33.73 | 27.44 | 23.88 | 22.34 |
| | Improvement | 95.66% | 96.44% | 96.91% | 97.14% |
| 0.20 | Retrace | 770.64 | 770.67 | 772.14 | 772.39 |
| | Our approach | 16.52 | 13.04 | 11.55 | 11.01 |
| | Improvement | 97.86% | 98.31% | 98.50% | 98.57% |
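The Improvement rows in both OctoMap tables agree with the relative reduction 100 × (Retrace - Our approach) / Retrace, rounded to two decimals; the short sketch below recomputes a few entries. The 2198.32 entry (0.10 m voxels, 5-KF submaps) is identical to the Sub-OctoMap Fusion time in the timing table, which suggests that the first table reports rebuilding time in milliseconds. The second table presumably reports memory consumption, given the claims in the introduction, but its unit is not given. The function name `improvement` is hypothetical.

```python
def improvement(retrace, ours):
    # Relative reduction with respect to the Retrace baseline, in percent.
    return 100.0 * (retrace - ours) / retrace


# (Retrace, Our approach, reported Improvement) taken from the two tables.
rows = [
    (78458.12, 13963.45, 82.20),  # first table, 0.05 m voxels, 5-KF submaps
    (12754.71, 2198.32, 82.76),   # first table, 0.10 m voxels, 5-KF submaps
    (768.57, 630.22, 18.00),      # second table, 0.05 m voxels, 5-KF submaps
    (770.64, 16.52, 97.86),       # second table, 0.20 m voxels, 5-KF submaps
]
for retrace, ours, reported in rows:
    print(f"{improvement(retrace, ours):.2f}% (reported {reported:.2f}%)")
```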

| Thread | Operation | Time Cost (ms) |
|---|---|---|
| RGB-D Encoder Tracking | Encoder integration | 0.01 ± 0.00 |
| | Feature extraction (1600 per frame) | 17.28 ± 6.91 |
| | 3D–2D match | 8.24 ± 4.48 |
| | Pose estimation | 5.86 ± 3.64 |
| | Total | 33.24 ± 12.12 |
| Dynamic Pixels Culling | Object detection | 162.17 ± 8.88 |
| | K-means | 286.71 ± 48.61 |
| | Multiview constraints | 28.10 ± 14.58 |
| | Total | 480.08 ± 54.27 |
| Local Sparse Mapping | New map points creation | 0.23 ± 0.06 |
| | Local BA (window size: 8 KFs) | 138.54 ± 73.80 |
| | Total | 138.94 ± 73.83 |
| Loop Closure (loop size: 408 KFs) | Loop check | 80.87 |
| | Pose graph optimization | 143.28 |
| | Total | 224.19 |
| Sub-OctoMap Construction (voxel: 0.1 m) | Total | 52.04 ± 27.84 |
| Sub-OctoMap Fusion (408 KFs; voxel: 0.1 m) | Total | 2198.32 |
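The timing table can be read against the keyframe policy of Section 4.2.4: keyframes arrive at most once per second, so each per-keyframe thread should finish its work within 1 s. The check below uses only the mean values from the table and assumes that the listed threads run concurrently, as the Thread column suggests. Loop Closure and Sub-OctoMap Fusion are left out on the assumption that they run only occasionally, e.g., after a loop is detected. All names in the sketch are hypothetical.

```python
# Mean per-keyframe costs (ms) of the background threads, from the timing table.
per_keyframe_ms = {
    "Dynamic Pixels Culling": 480.08,
    "Local Sparse Mapping": 138.94,
    "Sub-OctoMap Construction": 52.04,
}
TRACKING_MS = 33.24         # mean per-frame cost of RGB-D Encoder Tracking
MIN_KEYFRAME_GAP_MS = 1000  # at least 1 s between keyframes (Section 4.2.4)


def fits_budget(costs, gap_ms):
    # Assuming the threads run concurrently, each one only has to finish its
    # work on a keyframe before the next keyframe arrives.
    return all(cost < gap_ms for cost in costs.values())


serial_ms = sum(per_keyframe_ms.values())  # even run back to back: 671.06 ms
max_tracking_hz = 1000.0 / TRACKING_MS     # about 30.1 frames per second

print(fits_budget(per_keyframe_ms, MIN_KEYFRAME_GAP_MS))  # True
print(f"{serial_ms:.2f} ms, {max_tracking_hz:.1f} Hz")
```

Mean values hide the spread (e.g., 480.08 ± 54.27 ms for culling), so this is a plausibility check rather than a real-time guarantee.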

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, D.; Bi, S.; Wang, W.; Yuan, C.; Wang, W.; Qi, X.; Cai, Y. DRE-SLAM: Dynamic RGB-D Encoder SLAM for a Differential-Drive Robot. Remote Sens. 2019, 11, 380. https://doi.org/10.3390/rs11040380
