DyLFG: A Dynamic Network Learning Framework Based on Geometry

Abstract

1. Introduction

2. Background and Related Work

2.1. Basic Conceptions of Hyperbolic Space

2.2. Geometric Operations of Hyperbolic Space

2.3. Ricci Curvature

2.4. Related Work

3. Problem Definition

4. Methods

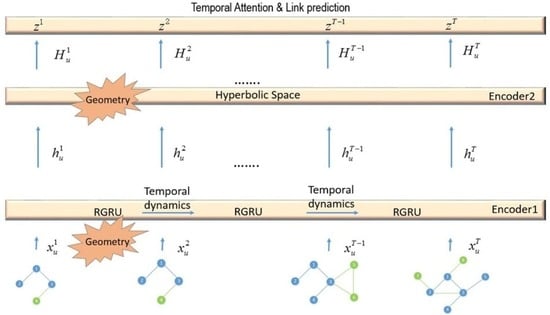

4.1. GAT Stacked Module and RGRU

4.2. Hyperbolic Geometric Transition Layer

4.3. Temporal Attention Layer

4.4. DyLFG Architecture

5. Experiment

5.1. Datasets

5.2. Baseline Methods’ Setup

5.3. Link Prediction

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Enron | UCI | AS733 |
|---|---|---|---|
| Nodes | 143 | 1795 | 2102 |
| Edges | 2852 | 13,399 | 4307 |
| Time steps | 16 | 12 | 16 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, W.; Zhai, X. DyLFG: A Dynamic Network Learning Framework Based on Geometry. Entropy 2023, 25, 1611. https://doi.org/10.3390/e25121611
