Trends and hotspots in research on medical images with deep learning: a bibliometric analysis from 2013 to 2023

- ¹First School of Clinical Medicine, Fujian University of Traditional Chinese Medicine, Fuzhou, China

- ²College of Rehabilitation Medicine, Fujian University of Traditional Chinese Medicine, Fuzhou, China

- ³The School of Health, Fujian Medical University, Fuzhou, China

Background: With the rapid development of the internet, the improvement of computer capabilities, and the continuous advancement of algorithms, deep learning has developed rapidly in recent years and has been widely applied in many fields. Previous studies have shown that deep learning performs well in image processing, and deep learning-based medical image processing may help solve the difficulties faced by traditional medical image processing. This technology has attracted the attention of many scholars in the fields of computer science and medicine. This study summarizes the knowledge structure of deep learning-based medical image processing research through bibliometric analysis and explores the research hotspots and possible development trends in this field.

Methods: The Web of Science Core Collection database was searched using the terms “deep learning,” “medical image processing,” and their synonyms. CiteSpace was used for visual analysis of authors, institutions, countries, keywords, co-cited references, co-cited authors, and co-cited journals.

Results: The analysis was conducted on 562 highly cited papers retrieved from the database. The annual publication volume shows an upward trend. Pheng-Ann Heng, Hao Chen, and Klaus Hermann Maier-Hein are among the active authors in this field. The Chinese Academy of Sciences has the highest number of publications, while the institution with the highest centrality is Stanford University. The United States has the highest number of publications, followed by China. The most frequent keyword is “Deep Learning,” and the keyword with the highest centrality is “Algorithm.” The most cited author is Kaiming He, and the author with the highest centrality is Yoshua Bengio.

Conclusion: The application of deep learning in medical image processing is becoming increasingly common, and there are many active authors, institutions, and countries in this field. Current research in medical image processing mainly focuses on deep learning, convolutional neural networks, classification, diagnosis, segmentation, image, algorithm, and artificial intelligence. The research focus and trends are gradually shifting toward more complex and systematic directions, and deep learning technology will continue to play an important role.

1. Introduction

The origin of radiology can be seen as the beginning of medical image processing. The discovery of X-rays by Röntgen and their successful application in clinical practice ended the era of disease diagnosis relying solely on the clinical experience of doctors (Glasser, 1995). Medical images give doctors more data, enabling them to diagnose and treat diseases more accurately. With the continuous improvement of computer performance, represented by central processing units (CPUs; Dessy, 1976), and of image processing technology, medical image processing has become more efficient and accurate in medical research and clinical applications. Initially, medical image processing was mainly used in imaging diagnosis, such as analyzing X-ray, CT, and MRI images. Nowadays, medical image processing has become an important research tool in fields such as radiology, pathology, and biomedical engineering, providing strong support for medical research and clinical diagnosis (Hosny et al., 2018; Hu et al., 2022; Lin et al., 2022).

Deep learning originated from artificial neural networks, which can be traced back to the 1940s and 1950s, when scientists proposed the neuron model and the perceptron model to simulate the working principles of the human nervous system (Rosenblatt, 1958; McCulloch and Pitts, 1990). However, limited by the weak performance of computers at that time, these models were quickly abandoned. In 2006, Canadian computer scientist Geoffrey Hinton and his team proposed a model called the “deep belief network,” which adopted a deep structure and overcame the shortcomings of traditional neural networks. This is considered the starting point of deep learning (Hinton et al., 2006).

In recent years, with the rapid development of the Internet, massive amounts of data have been generated and accumulated, which favors deep learning networks that require large amounts of training data (Misra et al., 2022). Additionally, the development of computing devices such as graphics processing units (GPUs) and tensor processing units (TPUs) has made the training of deep learning models faster and more efficient (Alzubaidi et al., 2021; Elnaggar et al., 2022). Furthermore, the continuous improvement and optimization of deep learning algorithms have steadily improved the performance of deep learning models (Minaee et al., 2022). Therefore, the application of deep learning is becoming more and more widespread in various fields, including medical image processing.

Deep learning has many advantages in processing medical images. Firstly, it can automatically learn and extract features without human intervention, automating the processing (Yin et al., 2021). Secondly, it can process a large amount of data simultaneously, with processing efficiency far exceeding traditional manual methods (Narin et al., 2021). Thirdly, its accuracy is high: it can learn complex features and discover subtle changes and patterns that are difficult for humans to perceive (Han et al., 2022). Lastly, it is less affected by subjective human factors, leading to relatively more objective results (Kerr et al., 2022).

Bibliometrics is a quantitative method for evaluating the research achievements of researchers, institutions, countries, or subject areas, and can be traced back to the 1960s (Schoenbach and Garfield, 1956). In bibliometric analysis, the citation half-life of an article has two characteristics: first, classical articles are continuously cited; second, some articles are frequently cited within a certain period and quickly reach a peak. The length of time that classical articles are continuously cited is closely related to the speed of development of basic research, while the frequent citation of certain articles within a specific period represents the dynamic changes in the corresponding field. Generally speaking, articles that reflect dynamic changes in the field are more common than classical articles. In Web of Science, papers that rank in the top 1% by citation count for their field and publication year are designated as highly cited papers. Visual analysis of highly cited papers is more effective in identifying popular research areas and trends than visual analysis of all search results. CiteSpace is a visualization software package that employs bibliometric methods, developed by Professor Chaomei Chen at Drexel University (Chen, 2006).

Therefore, to gain a deeper understanding of the research hotspots and possible development trends of deep learning-based medical image processing, this study analyzes highly cited papers published between 2013 and 2023 using bibliometric methods, identifies the authors, institutions, and countries with the most research achievements, and provides an overall review of the knowledge structure among the highly cited papers. The results are expected to be helpful for researchers in this field.

2. Methods

2.1. Search strategy and data source

A search was conducted in the Web of Science Core Collection database using the search terms “deep learning” and “medical imaging,” along with their synonyms and related terms. The complete search string is as follows: (TS = Deep Learning OR “Deep Neural Networks” OR “Deep Machine Learning” OR “Deep Artificial Neural Networks” OR “Deep Models” OR “Hierarchical Learning” OR “Deep architectures” OR “Multi-layer Neural Networks” OR “Large-scale Neural Networks” OR “Deep Belief Networks”) AND (TS = “Medical imaging” OR “Radiology imaging” OR “Diagnostic imaging” OR “Clinical imaging” OR “Biomedical imaging” OR “Radiographic imaging” OR “Tomographic imaging” OR “Imaging modalities” OR “Medical visualization” OR “Medical image analysis”). The search was refined to include only articles published between 2013 and 2023, with a focus on highly cited papers. The search yielded a total of 562 results. The article type was restricted to papers, and the language was limited to English.

2.2. Scientometric analysis methods

Due to the Web of Science export limit, records 1–500 and 501–562 were exported separately, with the record content set to full record and cited references. These plain text files served as the source files for the analysis. Next, a new project was established in CiteSpace 6.1.R6, with the project location and data storage location set up. The input and output functions of CiteSpace were used to convert the plain text files into a format that could be analyzed in CiteSpace. The remaining parameters were set as follows: the time slicing was set from 2013 to 2023, with a yearly time interval; the node types selected were authors, institutions, countries, keywords, co-cited references, co-cited authors, and co-cited journals; the thresholds for “Top N,” “Top N%,” and “g-index” were left at their defaults; network pruning was set to pathfinder with pruning of the merged network; and the visualization was set to static cluster view with the merged network shown, to display the overall network.
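Before loading split exports into a tool such as CiteSpace, it can be useful to check that the files together contain the expected number of records. The following is a minimal sketch of such a check, not part of the study's workflow. It assumes the tagged plain-text export layout (two-letter field tags such as `PT`, `AU`, `PY`, records closed by `ER`, continuation lines indented by three spaces). The function name `parse_wos_plaintext` and the sample records are hypothetical placeholders, not data from this study.

```python
from collections import Counter


def parse_wos_plaintext(text):
    """Parse tagged plain-text records into a list of {tag: [values]} dicts."""
    records, current, tag = [], None, None
    for line in text.splitlines():
        if not line.strip():
            continue  # blank separator between records
        head, value = line[:2], line[3:].strip()
        if head == "PT":
            current, tag = {"PT": [value]}, "PT"  # a new record starts
        elif current is None:
            continue  # file header (FN, VR) or trailer (EF)
        elif head == "ER":
            records.append(current)  # end of the current record
            current, tag = None, None
        elif head == "  ":
            current[tag].append(value)  # continuation of the previous field
        else:
            tag = head
            current[tag] = [value]
    return records


# Invented sample exports standing in for the two real export files.
sample_part_1 = """FN Clarivate Analytics Web of Science
VR 1.0
PT J
AU Author, A
   Author, B
TI Placeholder title one
PY 2016
ER

PT J
AU Author, C
TI Placeholder title two
PY 2020
ER

EF"""

sample_part_2 = """FN Clarivate Analytics Web of Science
VR 1.0
PT J
AU Author, D
TI Placeholder title three
PY 2020
ER

EF"""

records = [r for part in (sample_part_1, sample_part_2)
           for r in parse_wos_plaintext(part)]
per_year = Counter(r["PY"][0] for r in records)

print(len(records), "records in total")
print(sorted(per_year.items()))
```

With real exports, the total should match the number of search results, and the per-year tally gives the counts behind an annual publication chart.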

The map generated by CiteSpace contains multiple elements. The nodes available for analysis are represented as circles, whose size generally indicates quantity: the larger the circle, the greater the quantity. The circles are composed of annual rings, with the color of each ring representing the year and the thickness of the ring determined by the number of corresponding nodes in that year. The more nodes in a year, the thicker the ring. The “Centrality” option in the CiteSpace menu refers to betweenness centrality (Chen, 2005). CiteSpace uses this metric to discover and measure the importance of nodes, and highlights nodes with purple circles when their centrality is greater than or equal to 0.1. In other words, only nodes with centrality greater than or equal to 0.1 are emphasized as important. The calculation method is based on the formulation introduced by Freeman (1977), and the formula is as follows:

$$BC_i = \sum_{s \neq i \neq t} \frac{n_{st}^{i}}{g_{st}}$$

In this formula, $g_{st}$ represents the number of shortest paths from node $s$ to node $t$, and $n_{st}^{i}$ represents the number of those shortest paths from node $s$ to node $t$ that pass through node $i$. From the information transmission perspective, the higher the betweenness centrality, the greater the importance of the node. Removing these nodes will have a larger impact on network transmission.
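To make the metric concrete, the sketch below computes betweenness centrality for a small undirected, unweighted network: for each node, it sums the fraction of shortest paths between every other pair of nodes that pass through that node. It uses Brandes' algorithm, a standard way to compute the measure, and is not CiteSpace's own code. Dividing by the number of node pairs so that scores fall between 0 and 1 is an assumption made here so the 0.1 threshold can be applied. The toy network and its node names are invented.

```python
from collections import deque


def betweenness_centrality(adjacency, normalized=True):
    """Betweenness centrality for an undirected, unweighted graph (Brandes)."""
    nodes = list(adjacency)
    score = {v: 0.0 for v in nodes}
    for s in nodes:
        # Breadth-first search from s, counting shortest paths to each node.
        order = []
        preds = {v: [] for v in nodes}
        sigma = {v: 0 for v in nodes}   # number of shortest paths from s
        dist = {v: -1 for v in nodes}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adjacency[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating path fractions.
        delta = {v: 0.0 for v in nodes}
        while order:
            w = order.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    n = len(nodes)
    for v in score:
        score[v] /= 2  # each unordered pair (s, t) was counted twice
        if normalized and n > 2:
            score[v] /= (n - 1) * (n - 2) / 2  # assumed scaling to [0, 1]
    return score


# Hypothetical collaboration network: two triangles joined through node "H".
edges = [("A", "B"), ("A", "C"), ("B", "C"),
         ("C", "H"), ("H", "D"),
         ("D", "E"), ("D", "F"), ("E", "F")]
adjacency = {}
for u, v in edges:
    adjacency.setdefault(u, set()).add(v)
    adjacency.setdefault(v, set()).add(u)

centrality = betweenness_centrality(adjacency)
highlighted = sorted(node for node, value in centrality.items() if value >= 0.1)
print(highlighted)
```

In this toy network, node H has only two links but lies on every shortest path between the two triangles, so it receives the highest score. This illustrates how a node with few connections can still have high centrality.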

3. Results

3.1. Analysis of annual publication volume

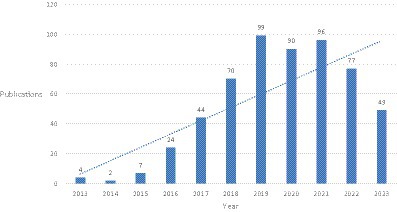

From 2013 to 2023, the annual number of related studies fluctuated slightly but showed an overall upward trend. The period can be divided into three stages: before 2016, the number of papers was relatively small; from 2016 to 2019, the number of papers increased year by year at an accelerating rate, with about 20 more papers each year than in the previous year; after 2019, the growth rate slowed, but the number of publications each year remained high (Figure 1).

3.2. Analysis of authors

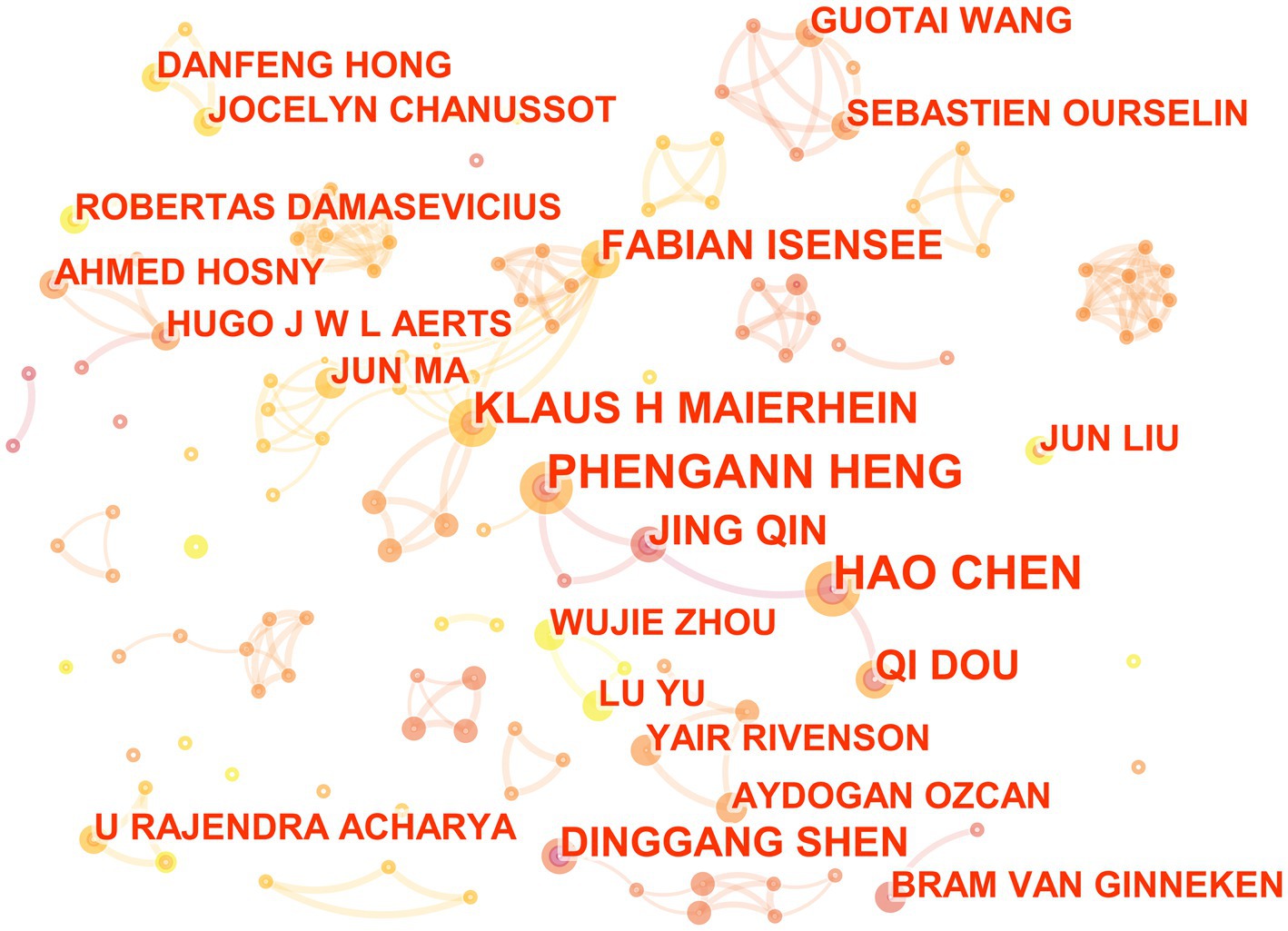

Among the 562 articles included, there are a total of 364 authors (Figure 2). Pheng-Ann Heng and Hao Chen rank first with seven publications each, Klaus Hermann Maier-Hein ranks second with six publications, and Fabian Isensee, Jing Qin, Qi Dou, and Dinggang Shen are tied for third place with five publications each. Figure 2 shows many small groups of authors but no very large research groups, and many authors have no collaborative relationships with each other.

Figure 2. The collaborative relationship map of researchers in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes represents the number of papers published by the author. The links between nodes reflect the strength of collaboration.

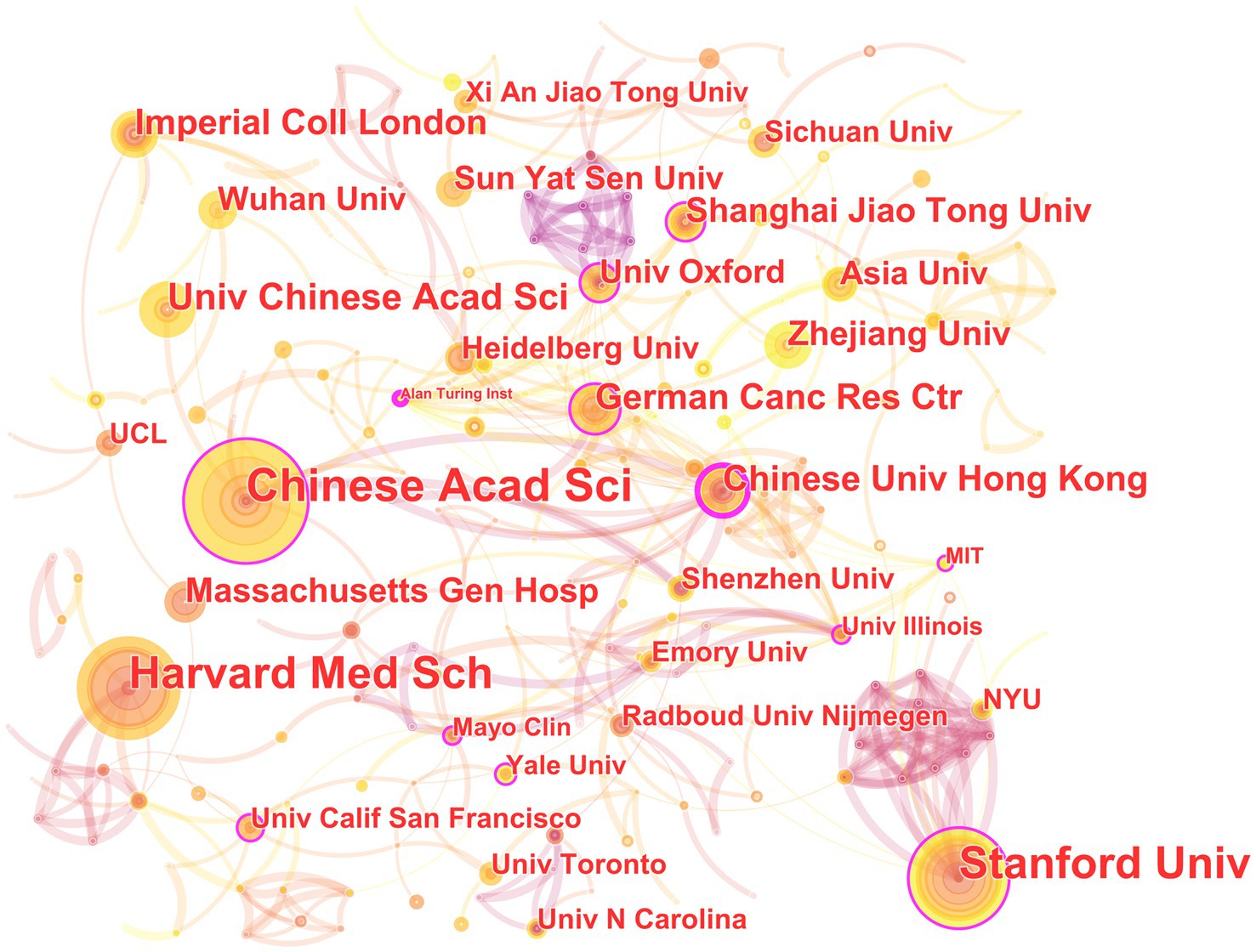

3.3. Analysis of institutions

In the 562 papers included, there are a total of 311 institutions (Figure 3; Table 1). The institution with the highest publication output is Chinese Academy of Sciences, and the institution with the highest centrality is Stanford University. The map shows that there are close collaborative relationships between institutions, but these relationships are based on one or more institutions with high publication output and centrality. There is less collaboration between institutions with low publication output and no centrality. As shown in Table 1, there is no necessary relationship between publication output and centrality, and the institution with the highest publication output does not necessarily have the highest centrality.

Figure 3. The collaborative relationship map of institutions in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes represents the number of papers published by the institution. The links between nodes reflect the strength of collaboration.

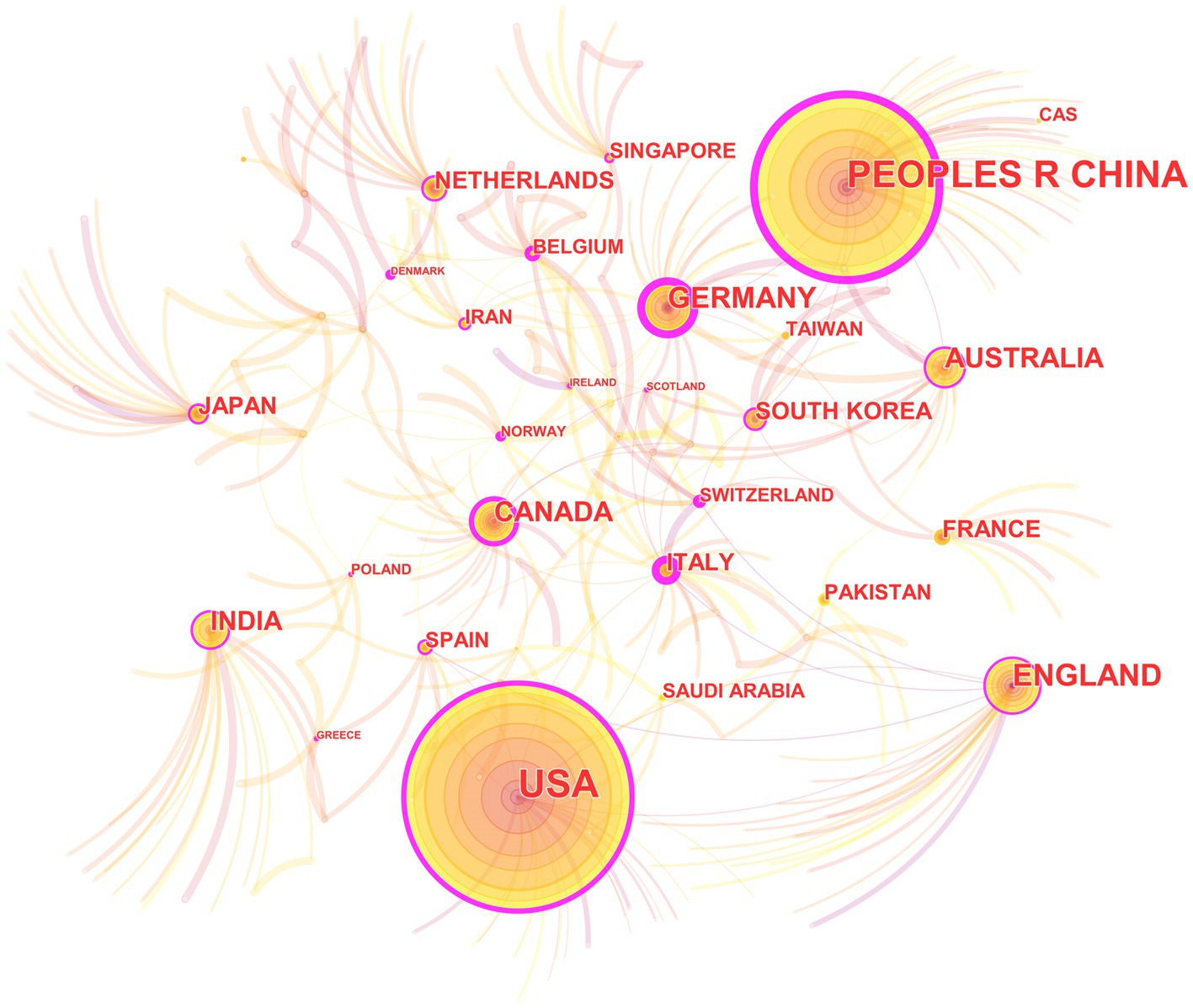

3.4. Analysis of countries

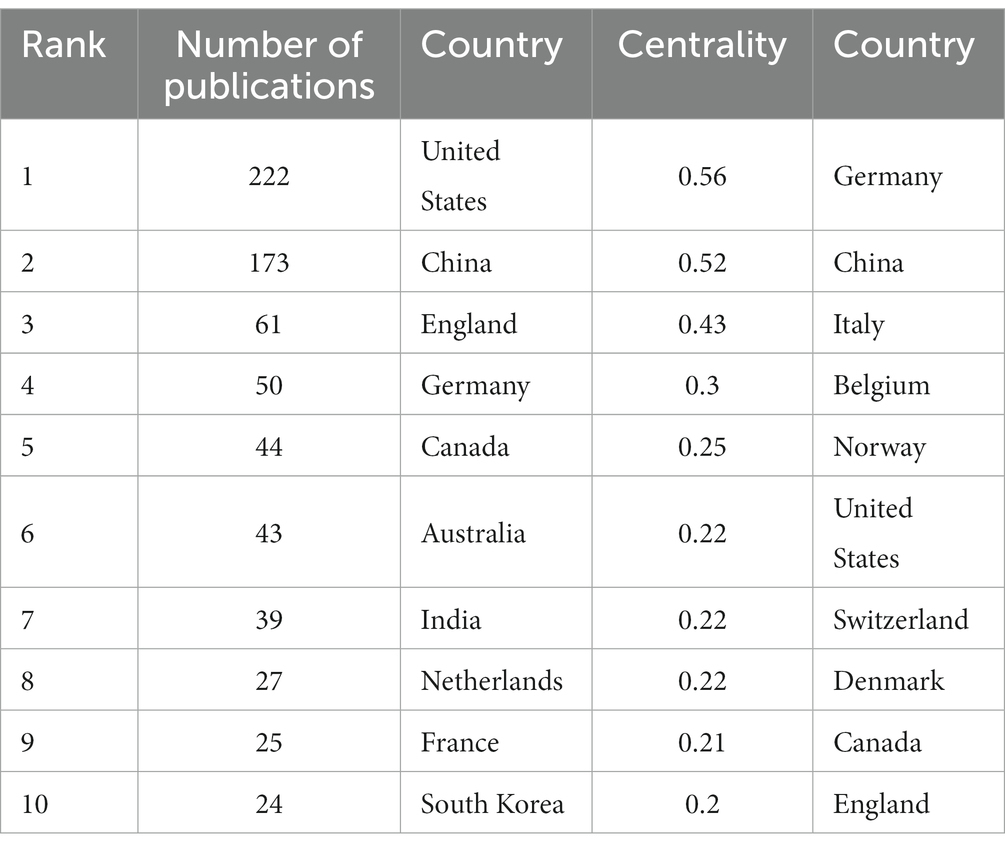

In the 562 included papers, there are a total of 62 countries represented (Figure 4; Table 2). The United States has the highest publication output, while Germany has the highest centrality. The map shows that all countries have at least some collaboration with other countries. In general, there are three situations: some countries have a high publication output and centrality; some have a low publication output but high centrality, and some have a high publication output but low centrality.

Figure 4. The collaborative relationship map of countries in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes represents the number of papers published by the country. The links between nodes reflect the strength of collaboration.

3.5. Analysis of keywords

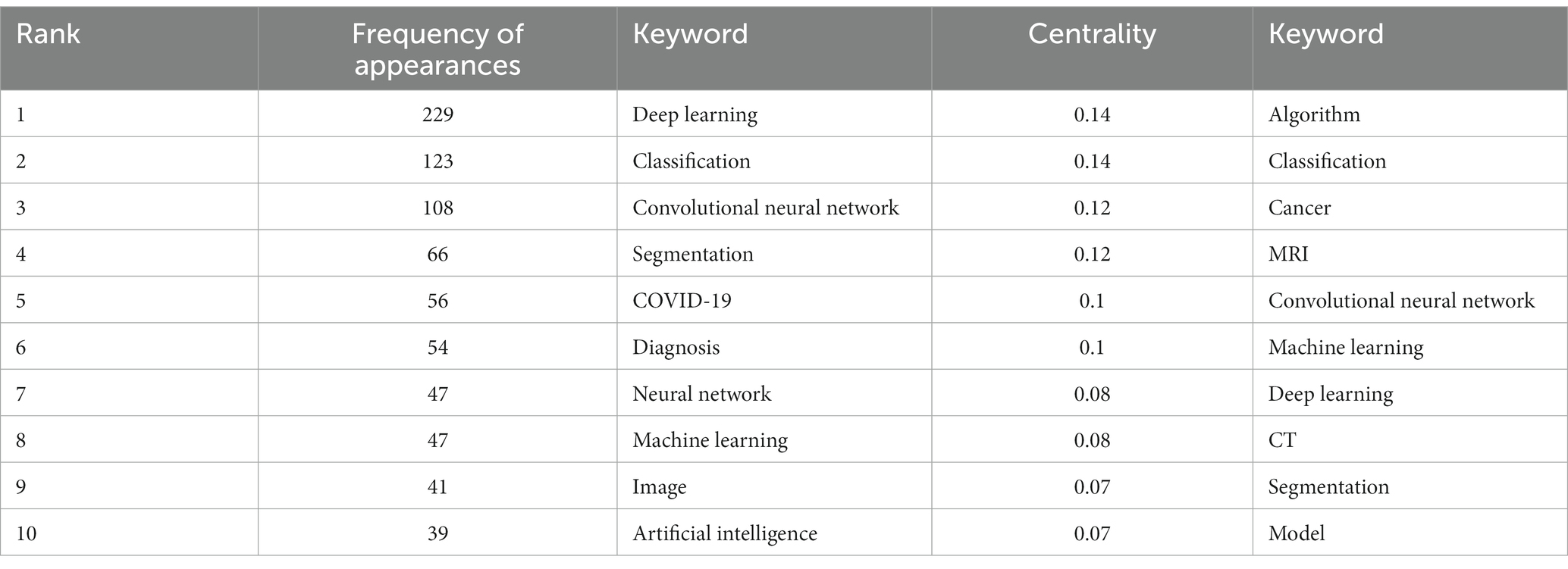

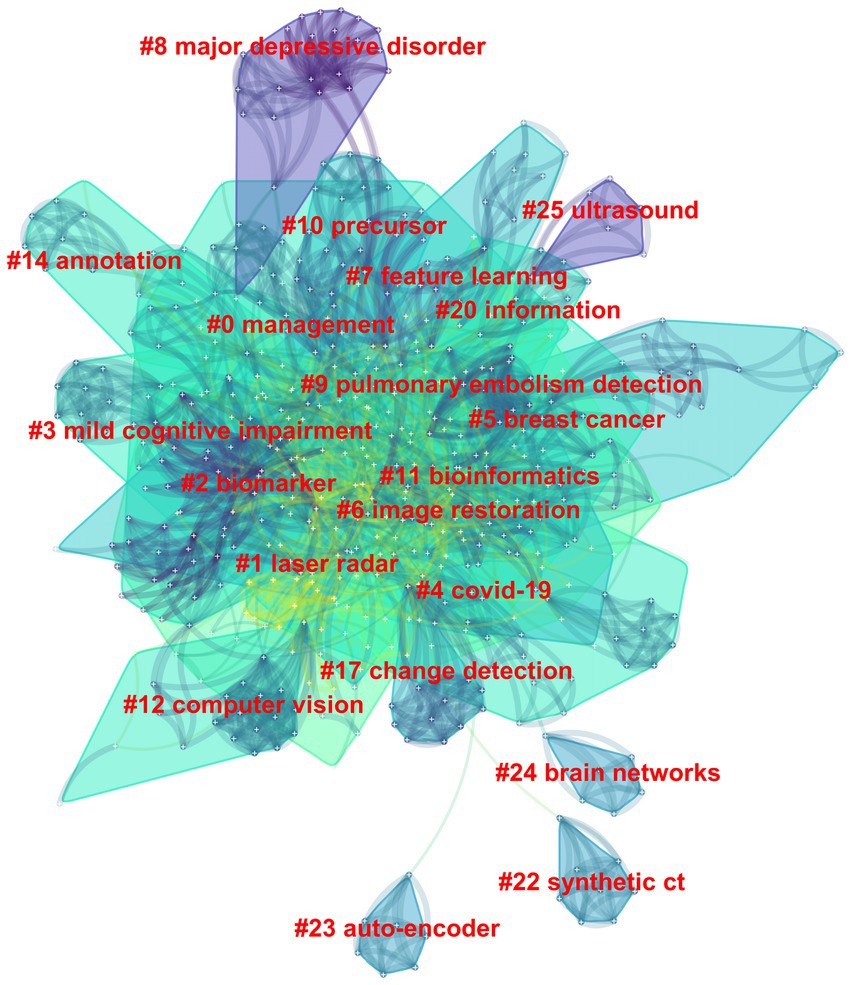

Among the 562 papers included, there were a total of 425 keywords (Figure 5; Table 3). The most frequently occurring keyword is “Deep Learning,” and the one with the highest centrality is “algorithm.” Clustering analysis of the keywords resulted in 20 clusters: management, laser radar, biomarker, mild cognitive impairment, COVID-19, image restoration, breast cancer, feature learning, major depressive disorder, pulmonary embolism detection, precursor, bioinformatics, computer vision, annotation, change detection, information, synthetic CT, auto-encoder, brain networks, and ultrasound.

Figure 5. The clustering map of keywords in the field of medical image processing with deep learning from 2013 to 2023. The smaller the cluster number, the larger its size, and the more keywords it contains.

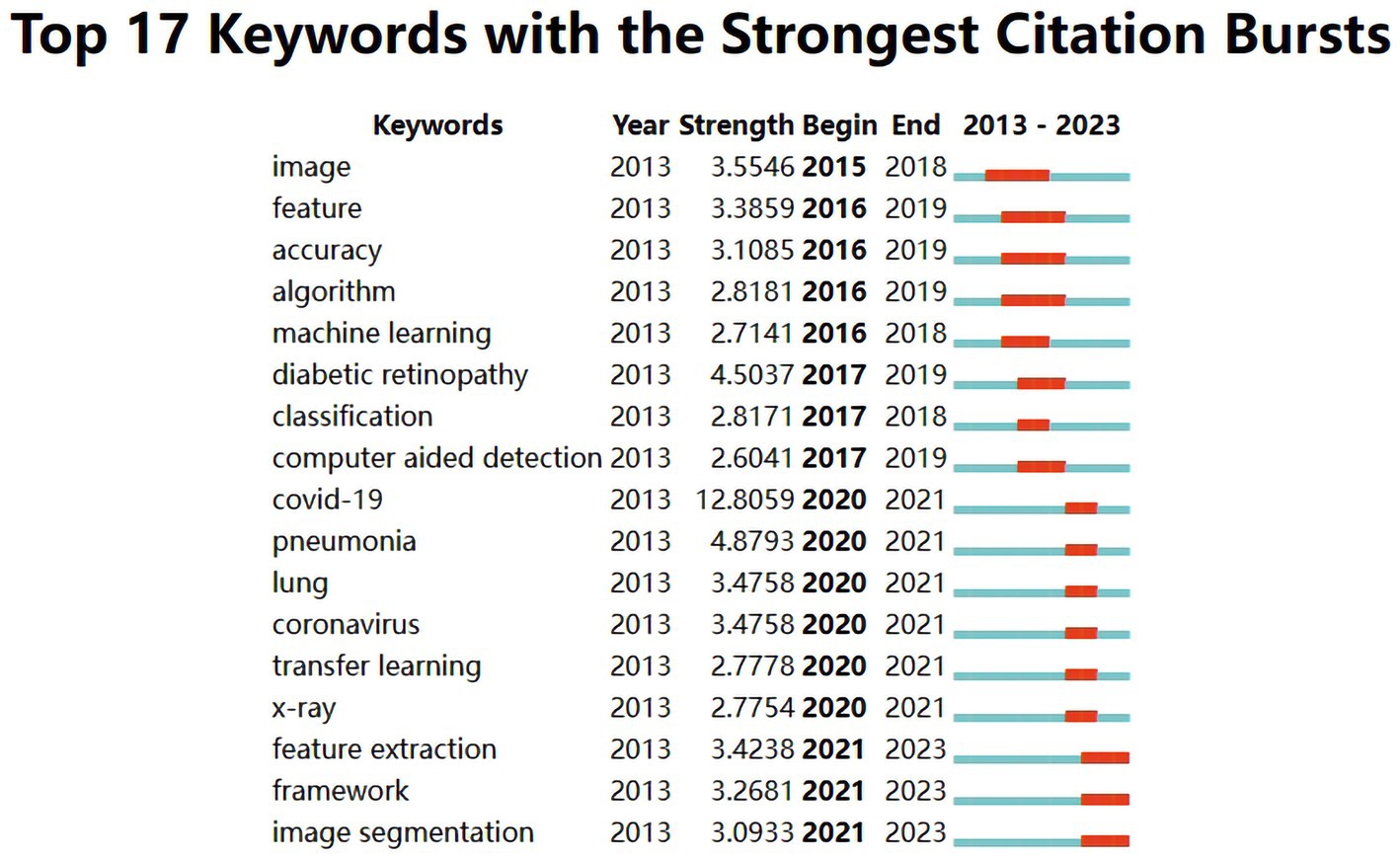

The evolution of burst keywords in recent years can be summarized as follows (Figure 6). It began in 2015 with a focus on “image.” By 2016, “feature, accuracy, algorithm, and machine learning” took center stage. The year 2017 brought prominence to “diabetic retinopathy, classification, and computer-aided detection.” In 2020, attention shifted to “COVID-19, pneumonia, lung, coronavirus, transfer learning, and X-ray.” In 2021, the focus was on “feature extraction, framework, and image segmentation.”

Figure 6. Top 17 keywords with the strongest citation bursts in publications of medical image processing with deep learning from 2013 to 2023. The blue line represents the overall timeline, while the red line represents the appearance year, duration, and end year of the burst keywords.

3.6. Analysis of references

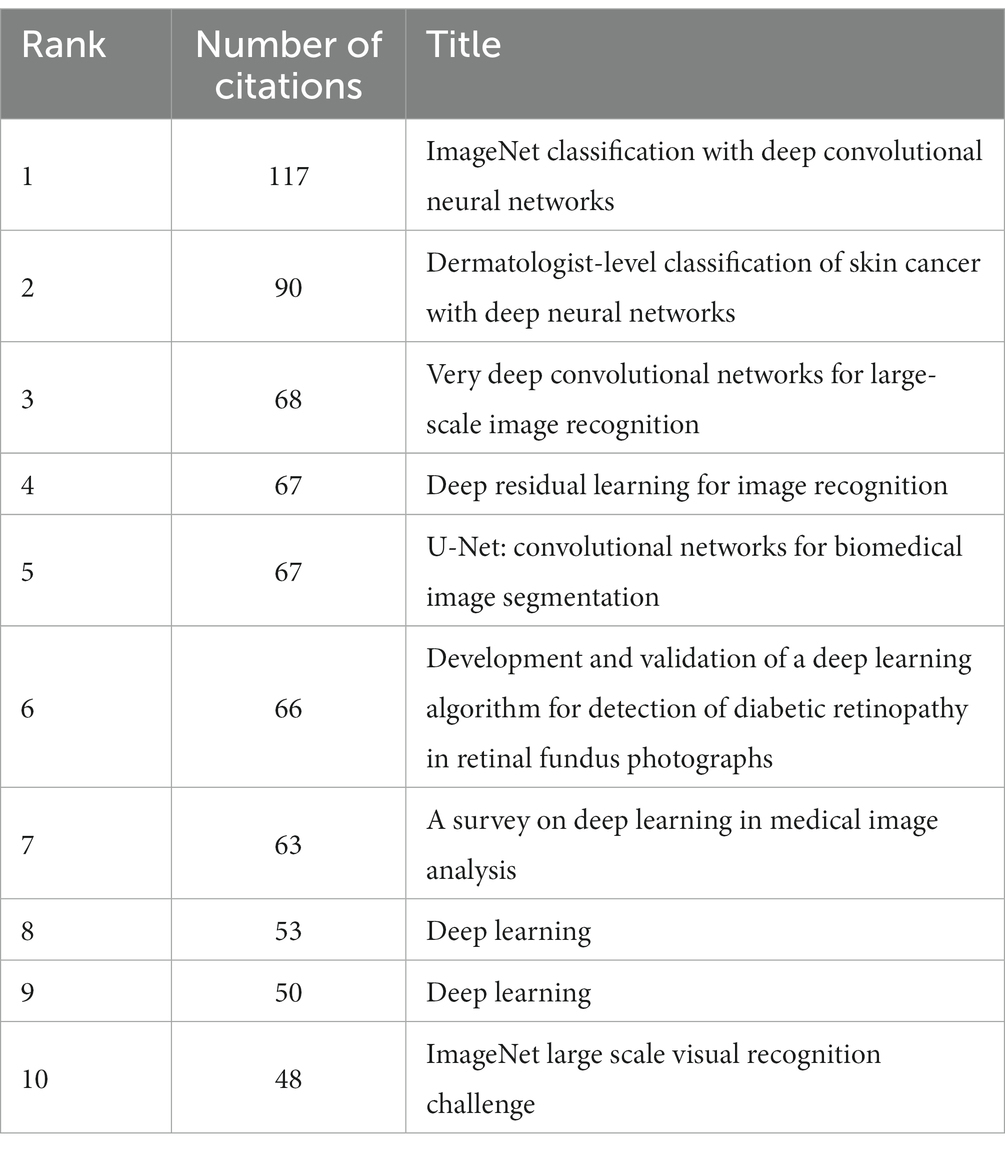

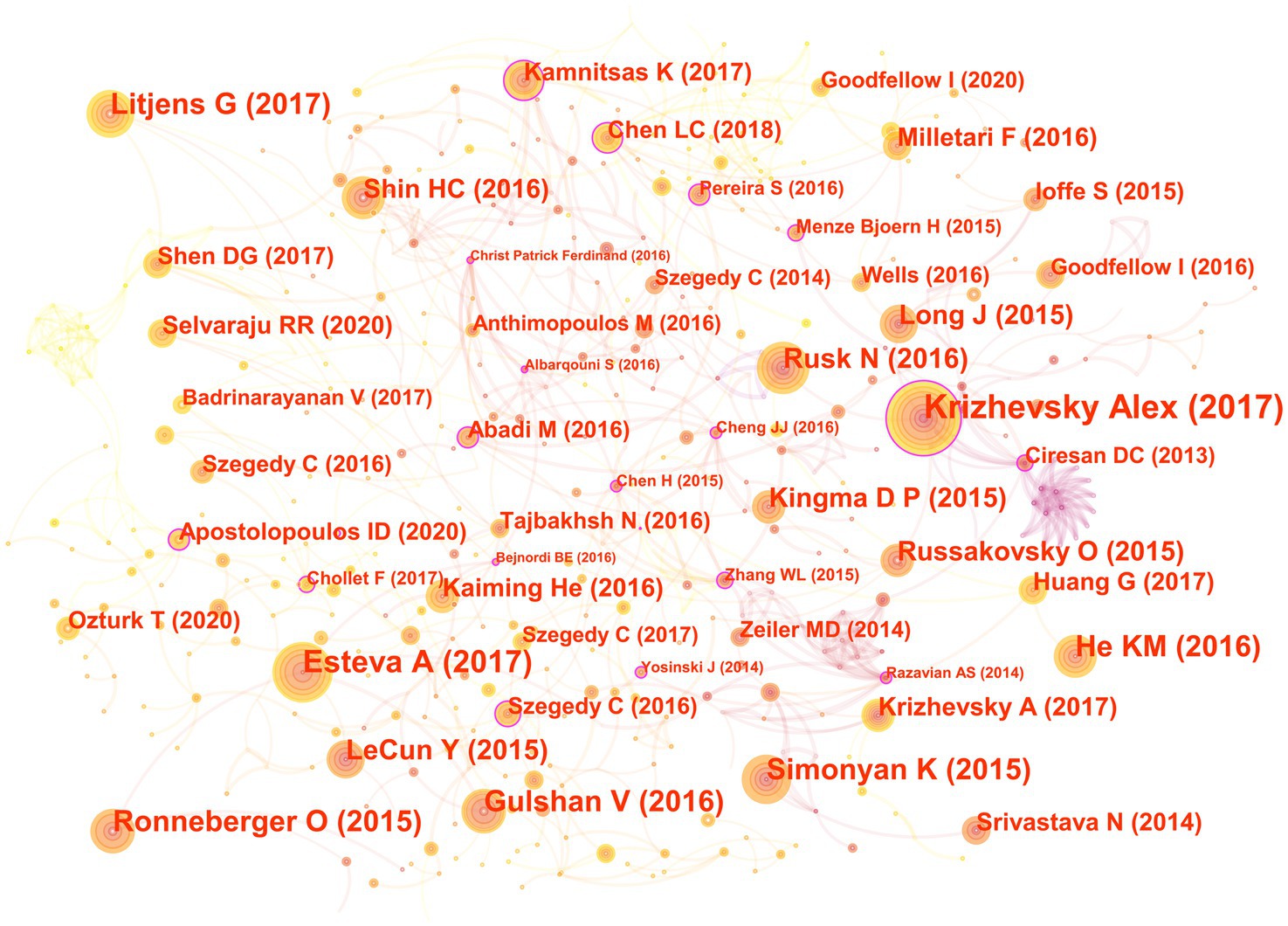

In the 562 articles included, there are a total of 584 references (Figure 7; Table 4). The most cited reference is “ImageNet Classification with Deep Convolutional Neural Networks” by Alex Krizhevsky. Alex Krizhevsky and his team developed a powerful convolutional neural network (CNN) to classify a vast dataset of high-resolution images into 1,000 categories, achieving top-1 and top-5 error rates of 37.5% and 17.0%, respectively, a significant improvement over previous methods (Krizhevsky et al., 2017).

Figure 7. The co-cited reference map in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes reflects the number of citations, while the links between nodes reflect the strength of co-citations.

Three articles have centrality greater than or equal to 0.1; their first authors are Dan Claudiu Ciresan, Liang-Chieh Chen, and Marios Anthimopoulos. Ciresan et al. used deep max-pooling convolutional neural networks to detect mitosis in breast histology images and won the ICPR 2012 mitosis detection competition (Ciresan et al., 2013). Chen et al. addressed the task of semantic image segmentation with deep learning and made three main contributions. First, they highlighted convolution with upsampled filters, known as “atrous convolution.” Second, they introduced atrous spatial pyramid pooling (ASPP). Third, they improved the accuracy of object boundary localization by combining techniques from deep convolutional neural networks and probabilistic graphical models (Chen et al., 2018). Anthimopoulos et al. proposed and evaluated a convolutional neural network (CNN) designed for the classification of interstitial lung disease (ILD) patterns (Anthimopoulos et al., 2016).

The eighth- and ninth-ranked articles share the same title and both come from the Nature family of journals, but they have different authors. The eighth-ranked article is by Nicole Rusk, published in the Comments & Opinion section of Nature Methods. It provides a concise introduction to deep learning (Rusk, 2016). The ninth-ranked article, by Yann LeCun and colleagues, is a comprehensive review. Compared with Rusk’s article, it elaborates extensively on the fundamental principles of deep learning and its applications in domains such as speech recognition, visual object recognition, and object detection, as well as fields like drug discovery and genomics (LeCun et al., 2015).

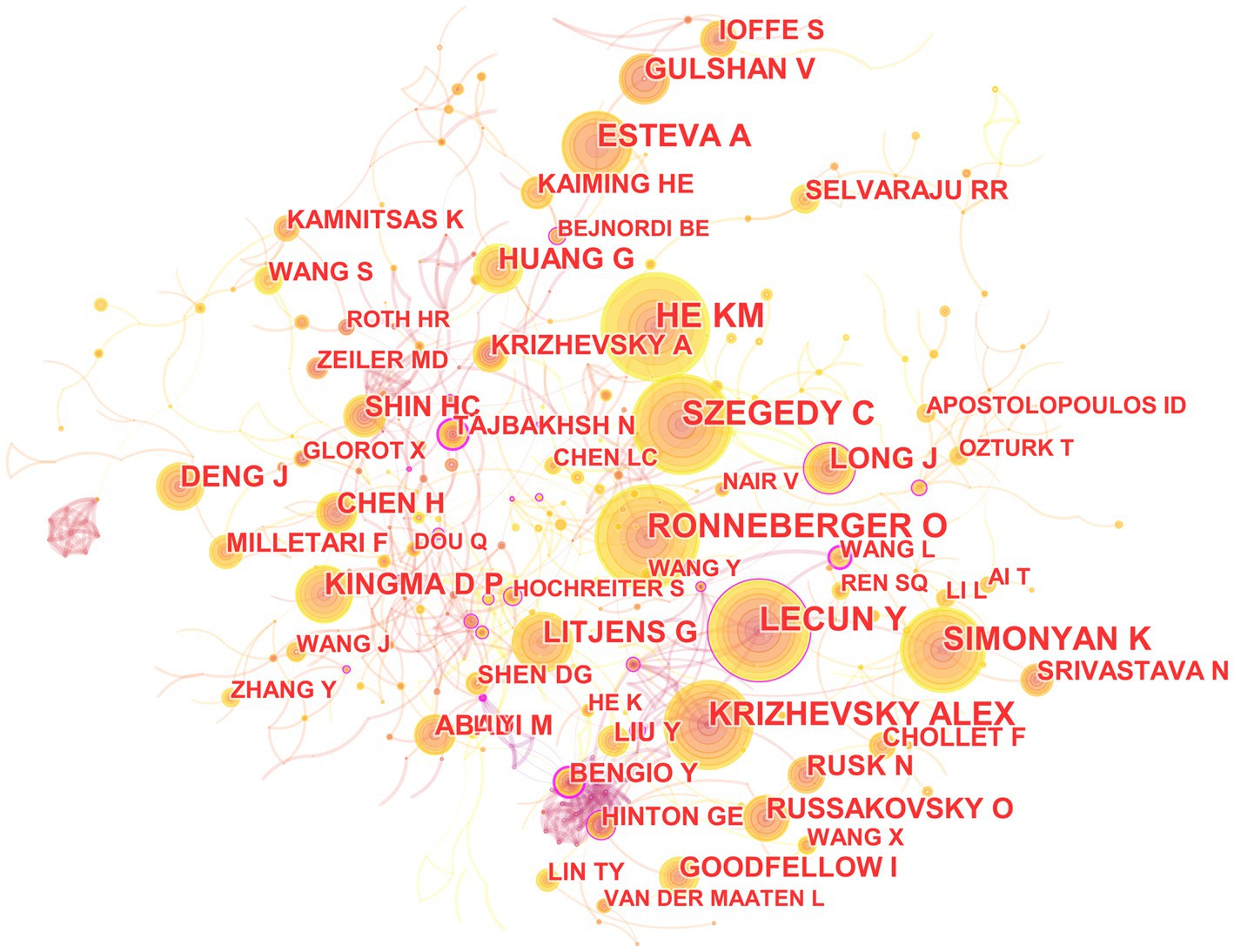

3.7. Analysis of co-cited authors

In the 562 included articles, there are a total of 634 cited authors (Figure 8). The most cited author is Kaiming He, whose papers have been cited 141 times; the author with the highest centrality is Yoshua Bengio, whose papers have been cited 45 times.

Figure 8. The map of co-cited authors in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes reflects the number of citations, while the links between nodes reflect the strength of co-citations.

The most cited paper authored by Kaiming He in Web of Science is “Deep Residual Learning for Image Recognition.” This paper introduces a residual learning framework to simplify the training of networks that are much deeper than those used previously. These residual networks are not only easier to optimize but also achieve higher accuracy with considerably increased depth (He et al., 2016). On the other hand, the most cited paper authored by Yoshua Bengio in Web of Science is “Representation Learning: A Review and New Perspectives.” This paper reviews recent advances in unsupervised feature learning and deep learning, covering progress in probabilistic models, autoencoders, manifold learning, and deep networks (Bengio et al., 2013).

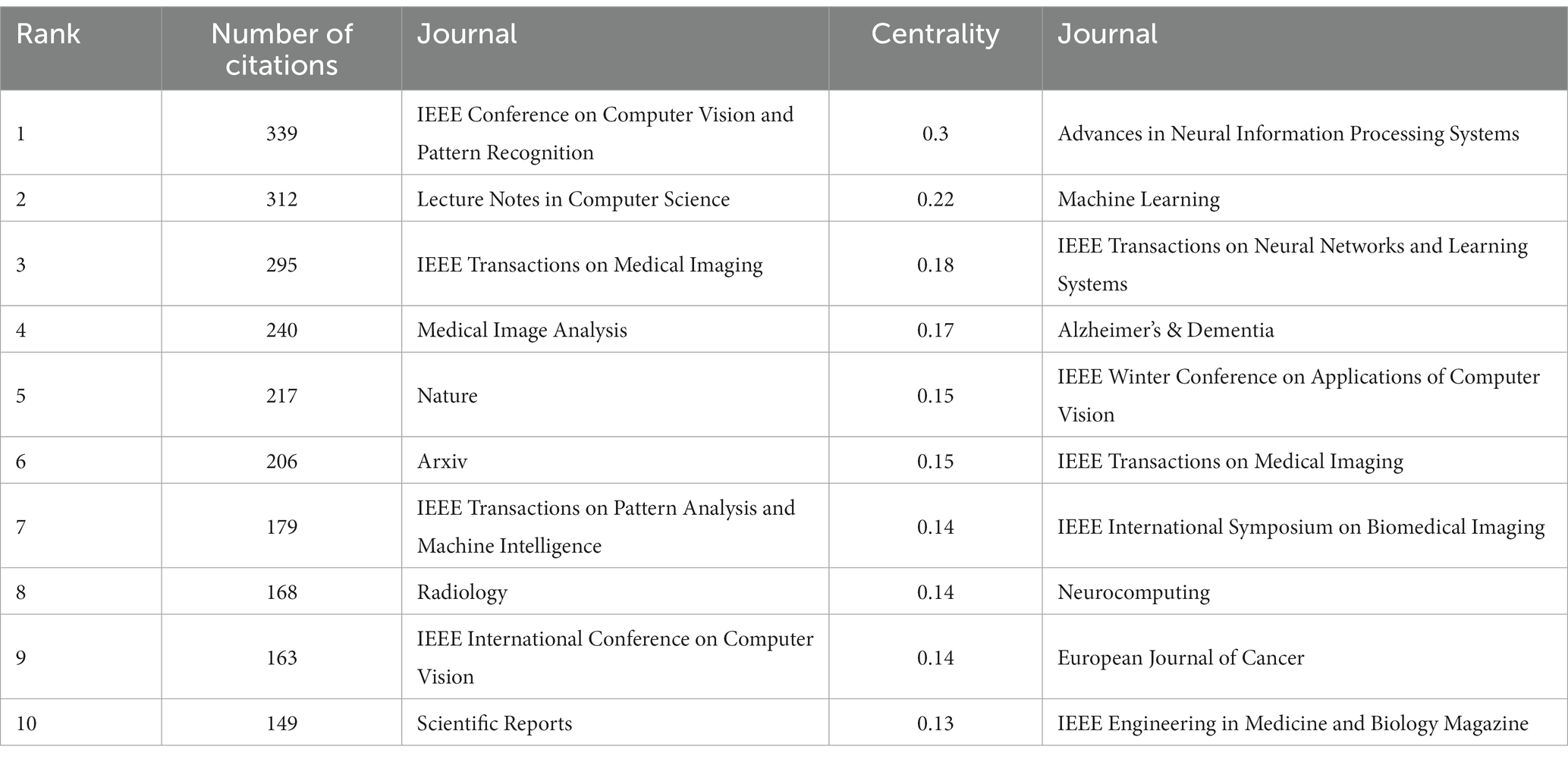

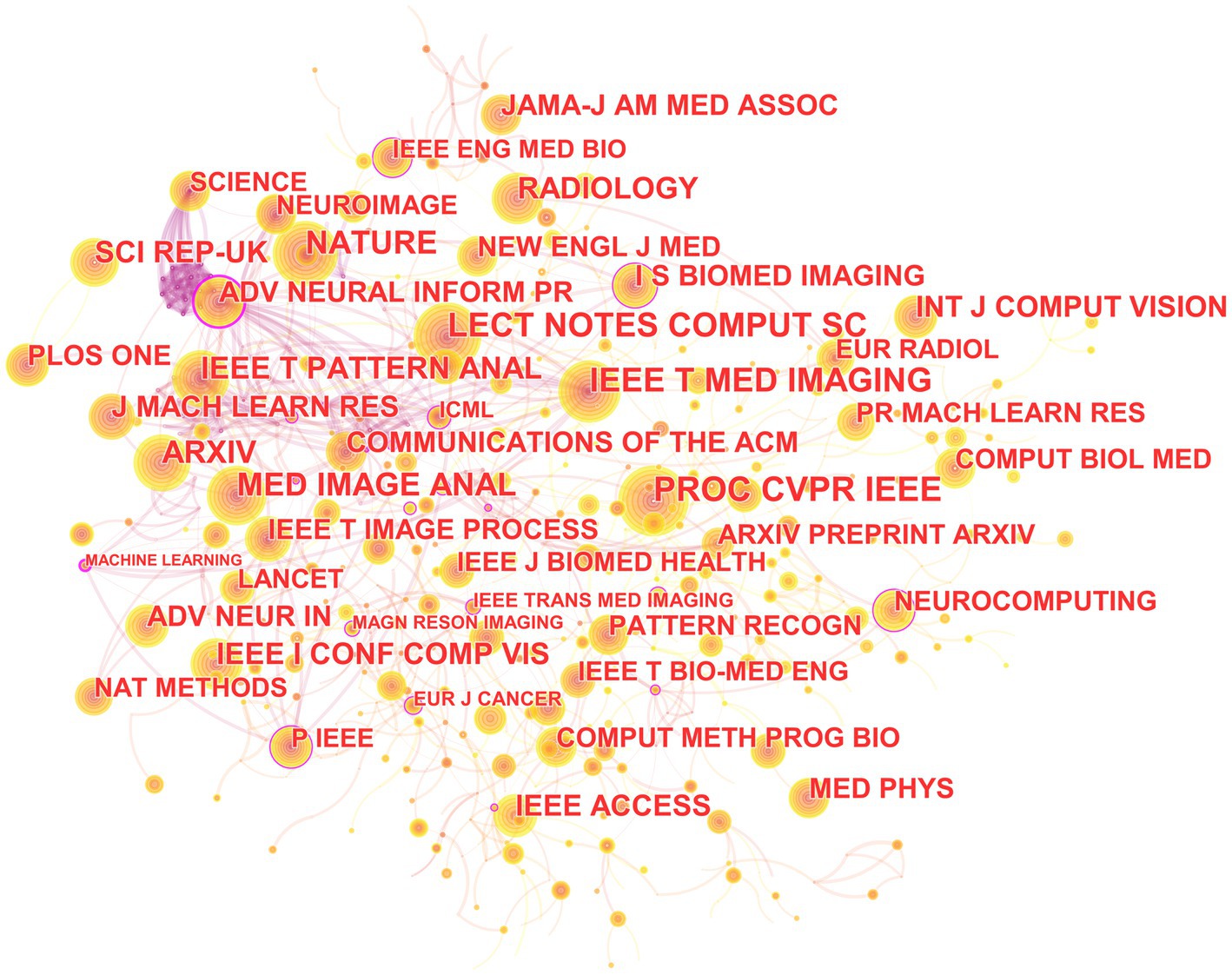

3.8. Analysis of co-cited journals

In the 562 articles included, a total of 345 journals were cited (Figure 9; Table 5). The journal with the most citations is the IEEE Conference on Computer Vision and Pattern Recognition, with 339 articles citing papers from this journal; the journal with the highest centrality is Advances in Neural Information Processing Systems, with 128 articles citing papers from this journal.

Figure 9. The collaborative relationship map of co-cited journals in the field of medical image processing with deep learning from 2013 to 2023. The size of nodes reflects the number of citations, while the links between nodes reflect the strength of co-citations.

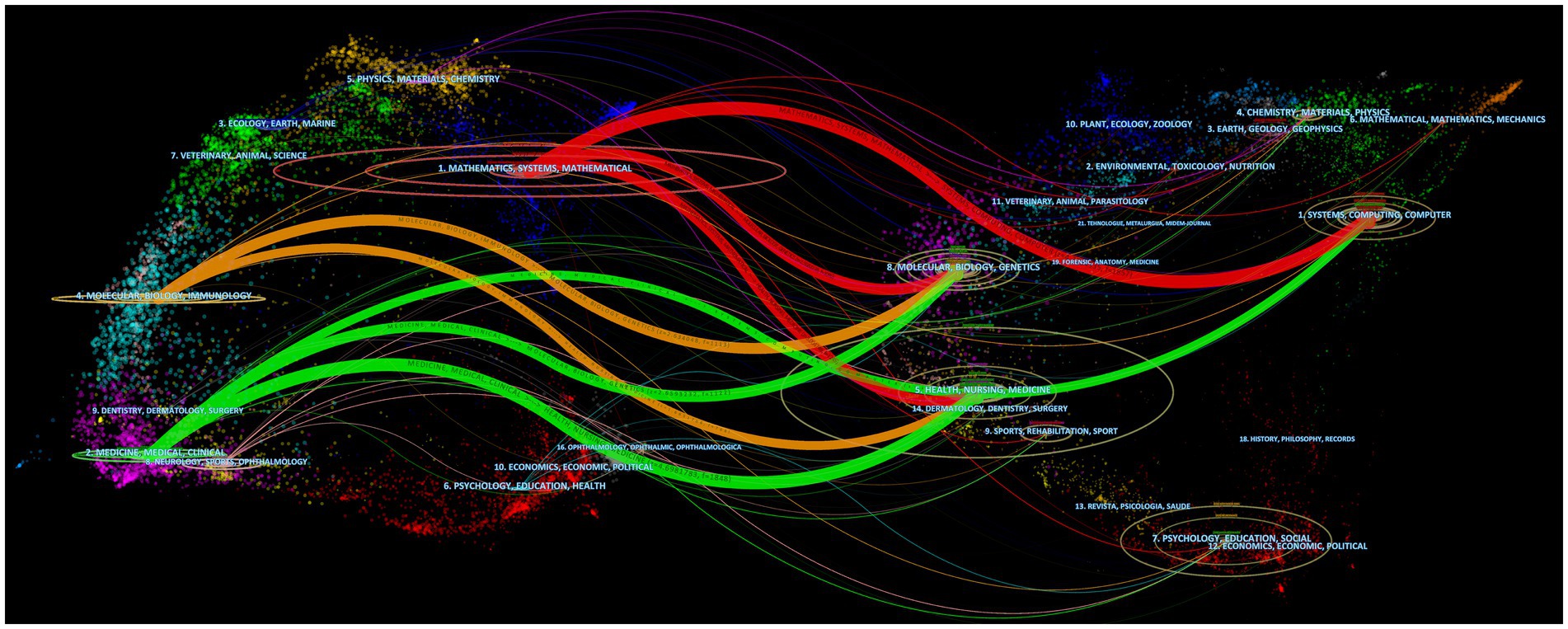

It can be seen that citing literature falls into three major discipline groups. Literature in “mathematics, systems, mathematical” cites journals in “systems, computing, computers,” “molecular, biology, genetics,” and “health, nursing, medicine.” Literature in “molecular, biology, immunology” cites journals in “molecular, biology, genetics” and “health, nursing, medicine.” Literature in “medicine, medical, clinical” cites journals in “molecular, biology, genetics” and “health, nursing, medicine” (Figure 10).

Figure 10. Dual-map overlay of journals. The map consists of two graphs, with the citing graph on the left and the cited graph on the right. The curves represent citation links, displaying the full citation chain. The longer the vertical axis of the ellipse, the more articles are published in the journal. The longer the horizontal axis of the ellipse, the more authors have contributed to the journal.

4. Discussion

From 2013 to 2023, the analysis of publication volume reveals an obvious stage characteristic, before and after 2016, and thus, 2016 is a key year for the field of deep learning-based medical image processing. Although deep learning technology began to be applied as early as 2012, it did not receive widespread attention in the field of medical image processing because traditional machine learning methods, such as support vector machines (SVM) and random forests (Lehmann et al., 2007), were mainly used before then. At the same time, deep learning models require powerful computing power and a large amount of data for training (Ren et al., 2022). Before 2016, high-performance computers were very expensive, which was not conducive to large-scale research in this field. Moreover, large-scale medical image datasets were relatively scarce, so research in this field was constrained by computing capability and dataset limitations. In 2016, however, deep learning technology achieved breakthroughs in computer vision, including image classification, object detection, and segmentation, providing more advanced and efficient solutions for medical image processing (Girshick et al., 2016; Madabhushi and Lee, 2016). These breakthroughs accelerated the progress of research in this field, leading to an increase in publication volume year by year.

From the analysis of authors, it can be seen that research on deep learning in the field of medical image processing is relatively scattered, and large-scale cooperative teams have not formed. This may be because research on deep learning requires a large amount of computing resources and data, as well as a strong background in mathematics and computer science. At the same time, the application of deep learning in the medical field is interdisciplinary, which also requires the participation of people with medical backgrounds. However, individuals with both backgrounds are relatively few, making it difficult to form large-scale research teams. In addition, researchers in this field may be more focused on personal research achievements than on collaborating with others. This does not necessarily mean that researchers lack a spirit of cooperation; rather, it may reflect the research characteristics and preferences of researchers in this field.

The institutional analysis mainly reflects two characteristics. First, the broad cooperation between institutions is mainly built around institutions with high publication volume and high centrality. This indicates that in the field of medical image processing, institutions with high publication volume and centrality often have strong collaborative abilities and influence, which can attract other institutions to cooperate with them, whereas institutions with low publication volume and no centrality may collaborate less due to a lack of resources or opportunities. Second, publication volume does not entirely determine centrality. Sometimes smaller institutions may receive high attention and recognition due to their unique research contributions or research directions (Wuchty et al., 2007; Lariviere and Gingras, 2010). Therefore, institutional centrality is related not only to publication volume but also to the depth and breadth of research and the degree of innovation in research results. Overall, the leading institutions are internationally renowned research institutions with broad disciplinary areas and research capabilities, and their high centrality makes them important research institutions in this field. Collaboration and communication between these institutions are frequent, jointly promoting the development of medical image processing. These institutions are distributed globally, including countries and regions such as China, the United States, Germany, and the United Kingdom, showing an international character. Among them, the United States has the largest number of institutions, occupying two of the top three positions, indicating that the United States has strong influence in the field of medical image processing. In addition, these institutions include universities, hospitals, and research institutes, demonstrating the interdisciplinary nature of the field of medical image processing.

In country analysis, there are mainly three situations: some countries not only have a large number of publications, but also have high centrality; some countries have a small number of publications, but high centrality; and some countries have a large number of publications, but low centrality. This indicates that deep learning in medical image processing is a global research hotspot, and various countries have published high-quality papers in this field and have close collaborative relationships. Some countries have a large number of publications in this field because they have strong research capabilities and play a leading role in this field. The high centrality of these countries also indicates that they play an important role in collaborative relationships. Some countries have a relatively low number of publications, but their centrality is still high. This may be because they have unique contributions in specific research directions or technologies in this field (Lee et al., 2018), or because they have close relationships with other countries in this field. There are also some countries with a large number of publications, but low centrality. This may be because their research and published paper quality is relatively low in this field, or because they have relatively few collaborative relationships with other countries.

The keyword analysis indicates that, in highly cited papers in the field of medical image processing, the core concepts are “deep learning” and “machine learning.” In terms of applications, the keywords emphasize COVID-19 diagnosis, image segmentation, and classification, while highlighting the significance of neural networks and convolutional neural networks. Additionally, the centrality-ranked keywords underscore the relevance of algorithms associated with deep learning and reiterate key themes in medical image processing, such as “cancer” and “MRI.” Overall, these keywords reflect the diverse applications of deep learning in medical image processing and the importance of algorithms.

The keyword clusters can be grouped into four main domains, reflecting diverse applications of deep learning in medical image processing. The first group focuses on medical image processing and diseases, encompassing biomarkers and the detection and diagnosis of specific diseases such as breast cancer and COVID-19 (Chougrad et al., 2018; Altan and Karasu, 2020). The second group concentrates on image processing and computer vision, including image restoration, annotation, and change detection (Zhang et al., 2016; Kumar et al., 2017; Tatsugami et al., 2019) to enhance the quality and analysis of medical images. The third group emphasizes data analysis and information processing, encompassing feature learning, bioinformatics, and information extraction (Min et al., 2017; Chen et al., 2021; Hang et al., 2022), aiding in the extraction of valuable information from medical images. Lastly, the fourth group centers on neuroscience and medical imaging, studying brain networks and ultrasound images (Kawahara et al., 2017; Ragab et al., 2022), highlighting the importance of deep learning in understanding and analyzing biomedical images for studying the nervous system and organs.

From the analysis of burst keywords, the evolution of these keywords reflects the changing trends and focal points in the field of deep learning in medical image processing. In 2015, the keyword “image” dominated, signifying an initial emphasis on basic image processing and analysis to acquire fundamental image information. By 2016, terms like “feature,” “accuracy,” “algorithm,” and “machine learning” (Shin et al., 2016; Zhang et al., 2016; Jin et al., 2017; Lee et al., 2017; Zhang et al., 2018) were introduced, indicating a growing interest in feature extraction, algorithm optimization, accuracy, and machine learning methods, highlighting the shift toward higher-level analysis and precision in medical image processing. In 2017, terms like “diabetic retinopathy,” “classification,” and “computer-aided detection” (Zhang et al., 2016; Lee et al., 2017; Quellec et al., 2017; Setio et al., 2017) were added, underlining an increased interest in disease-specific diagnoses (e.g., diabetic retinopathy) and computer-assisted detection of medical images. The year 2020 saw the emergence of “COVID-19,” “pneumonia,” “lung,” “coronavirus,” “transfer learning,” and “x-ray” (Minaee et al., 2020) due to the urgent demand for analyzing lung diseases and infectious disease detection, prompted by the COVID-19 pandemic. Additionally, “transfer learning” reflected the trend of utilizing pre-existing deep learning models for medical image data. In 2021, keywords such as “feature extraction,” “framework,” and “image segmentation” (Dhiman et al., 2021; Sinha and Dolz, 2021; Chen et al., 2022) became prominent, indicating a deeper exploration of feature extraction, analysis frameworks, and image segmentation to enhance the accuracy and efficiency of medical image processing. 
Overall, these changes illustrate the ongoing development in the field of medical image processing, evolving from basic image processing toward more precise feature extraction, disease diagnosis, lesion segmentation, and addressing the needs arising from disease outbreaks. This underscores the widespread application and continual evolution of deep learning in the medical domain.

Based on the analysis of reference citations, it is evident that these 10 highly cited papers cover significant research in the field of deep learning applied to medical image processing. They share a common emphasis on the outstanding performance of deep Convolutional Neural Networks (CNNs) in tasks such as image classification, skin cancer classification, and medical image segmentation. They explore the effectiveness of applying deep residual learning in large-scale image recognition and medical image analysis (He et al., 2016). The introduction of the U-Net, a convolutional network architecture suitable for biomedical image segmentation, is another key aspect (Ronneberger et al., 2015). Additionally, they develop deep learning algorithms for detecting diabetic retinopathy in retinal fundus photographs (Gulshan et al., 2016). They also provide a review of deep learning in medical image analysis, summarizing the trends in related research (LeCun et al., 2015; Rusk, 2016). However, these papers also exhibit some differences. Some focus on specific tasks like skin cancer classification and diabetic retinopathy detection, some concentrate on proposing new network structures (such as ResNet, U-Net, etc.) to enhance the performance of medical image processing, while others provide overviews and summaries of the overall application of deep learning in medical image processing. Overall, these papers collectively drive the advancement of deep learning in the field of medical image processing, achieving significant research outcomes through the introduction of new network architectures, effective algorithms, and their application to specific medical image tasks.

The analysis of co-cited journals shows that these journals collectively reflect the main features of research in medical image processing. First, they emphasize areas closely related to medical image processing, such as computer vision, image processing, and pattern recognition. Moreover, IEEE journals and conferences, such as IEEE Transactions on Neural Networks and Learning Systems, IEEE Transactions on Medical Imaging, and the IEEE Winter Conference on Applications of Computer Vision, hold significant influence in computer vision and pattern recognition, reflecting IEEE’s leading position in medical image processing. The journals span multiple fields, including computer science, medicine, and the natural sciences, underscoring the interdisciplinary nature of medical image processing research. Open-access platforms such as arXiv and Scientific Reports underscore the importance of open access and information sharing in the field. Specialized journals such as Medical Image Analysis and Radiology play pivotal roles in research on medical image processing, while the multidisciplinary journal Nature covers a wide range of scientific disciplines, potentially including research related to medical image processing. In summary, these journals form a comprehensive research network spanning the academic disciplines involved in medical image processing and emphasize the significance of open access and information sharing. They also highlight the crucial role of deep learning and neural network technologies in medical image processing, as well as the importance of image processing, analysis, and diagnosis.

The dual-map overlay of journals shows a particularly noteworthy citation relationship: computer science, biology, and medicine all cite mathematics. Computer science is strongly connected to mathematics because mathematical methods and algorithms are its foundation, while the development of computers and information technology in turn broadens the range of applications for mathematical research (Domingos, 2012). Molecular biology and genetics are important branches of biological research in which mathematical methods are widely applied, for example to analyze gene sequences and molecular structures and to study interactions between molecules (Jerber et al., 2021). Medicine, the field concerned with human health, also makes extensive use of mathematical methods, for example for the statistical analysis of clinical trial results, the prediction of disease risk, and the optimization of medical resource allocation (Gong and Tang, 2020; Wang et al., 2021).

From our perspective, the future development of deep learning in medical image processing can be summarized in four directions. First, as deep learning models become widely used in medical image processing, more efficient and lightweight network architectures will be needed. Such architectures can improve the speed and portability of models, allowing them to run effectively in resource-limited environments such as mobile devices (Ghimire et al., 2022). Second, traditional deep learning methods usually require a large amount of labeled data for training, whereas labeled data are often difficult to obtain in medical image processing. Weakly supervised learning, which aims to improve model performance using a small amount of labeled data together with a large amount of unlabeled data, will therefore become an important research direction. This includes techniques such as semi-supervised learning, transfer learning, and generative adversarial networks (Ren et al., 2023). Third, medical image processing involves different types of data, such as CT scans, MRI, X-rays, and biomarkers. Multimodal fusion will therefore become an important research direction, combining information from different modalities to provide more comprehensive and accurate medical image analysis. Deep learning methods can be used to learn the correlations between multimodal data and to perform feature extraction and fusion across modalities (Saleh et al., 2023). Finally, deep learning models are typically black boxes whose decision-making process is difficult to explain and understand, yet in medical image processing the interpretability and reliability of that process are crucial. Researchers will therefore focus on developing interpretable deep learning methods that explain the decision-making process and strengthen the trust of physicians and clinical experts in the model’s results (Chaddad et al., 2023).
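To make the second direction more concrete, the sketch below illustrates one common semi-supervised technique, pseudo-labeling, in which a model trained on a few labeled samples assigns labels to unlabeled samples it is confident about and is then refit on the enlarged set. This is a minimal sketch under stated assumptions: a nearest-centroid classifier on two-dimensional toy features stands in for a deep network on images, and the function names and the `threshold` value are hypothetical choices for illustration only.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    """Return one mean feature vector per class (each class must have samples)."""
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_with_confidence(X, centroids):
    """Predict the nearest centroid; confidence is a softmax over negative distances."""
    d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    logits = -d
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    return p.argmax(axis=1), p.max(axis=1)

def pseudo_label_round(X_lab, y_lab, X_unlab, n_classes, threshold=0.9):
    """One round: label confident unlabeled samples, then refit on the union."""
    centroids = fit_centroids(X_lab, y_lab, n_classes)
    pred, conf = predict_with_confidence(X_unlab, centroids)
    keep = conf >= threshold                       # accept only confident pseudo-labels
    X_new = np.concatenate([X_lab, X_unlab[keep]])
    y_new = np.concatenate([y_lab, pred[keep]])
    return fit_centroids(X_new, y_new, n_classes), int(keep.sum())

# Toy data: four labeled samples and 100 unlabeled samples from two clusters.
rng = np.random.default_rng(0)
X_lab = np.array([[-5.0, -5.0], [-4.5, -5.5], [5.0, 5.0], [5.5, 4.5]])
y_lab = np.array([0, 0, 1, 1])
X_unlab = np.concatenate([rng.normal(-5.0, 1.0, size=(50, 2)),
                          rng.normal(5.0, 1.0, size=(50, 2))])

centroids, n_added = pseudo_label_round(X_lab, y_lab, X_unlab, n_classes=2)
```

In practice the confidence threshold trades off the number of pseudo-labels against their reliability, and it would need to be validated carefully on held-out labeled data before any clinical use.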

In conclusion, deep learning is becoming increasingly important in medical image processing, with many active authors, institutions, and countries. Among the highly cited papers in this field in the Web of Science Core Collection, Pheng-Ann Heng, Hao Chen, and Dinggang Shen have published a relatively large number of papers. China has the most research institutions in this field, including the Chinese Academy of Sciences, the University of Chinese Academy of Sciences, The Chinese University of Hong Kong, Zhejiang University, and Shanghai Jiao Tong University. The United States ranks second in the number of institutions, which include Stanford University, Harvard Medical School, and Massachusetts General Hospital. Germany and the United Kingdom have relatively few institutions in this field. The United States has published far more papers than any other country, with China in second place. The United Kingdom, Germany, Canada, Australia, and India have published a relatively high number of papers, while the Netherlands and France have published relatively few. South Korea’s development and publication output in medical image processing are also relatively low. Current research in this field focuses mainly on deep learning, convolutional neural networks, classification, diagnosis, segmentation, algorithms, and artificial intelligence, and the research focus is gradually moving toward more complex and systematic directions. Deep learning technology will continue to play an important role in this field.

This study has certain limitations. First, we selected only highly cited papers from the Web of Science Core Collection as our analysis material, which means that we may have missed highly cited papers indexed in other databases, and our analysis may not be representative of the entire Web of Science. However, given the limitations of bibliometric software, it is difficult to merge and analyze records from multiple databases. The reasons for choosing highly cited papers from the Web of Science Core Collection are explained in the Introduction. Second, we may have overlooked some important non-English papers, which could introduce bias.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

BC: Writing – original draft. JJ: Writing – review & editing. HL: Writing – review & editing. ZY: Writing – review & editing. HZ: Writing – review & editing. YW: Writing – review & editing. JL: Writing – original draft. SW: Writing – original draft. SC: Writing – original draft.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work is supported by the National Natural Science Foundation of China (Grant No. 81973924) and Special Financial Subsidies of Fujian Province, China (Grant No. X2021003—Special financial).

Acknowledgments

We would like to thank Chaomei Chen for developing CiteSpace, the visual analysis software used in this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

CNNs, Convolutional neural networks; CPUs, Central processing units; GPUs, Graphics processing units; TPUs, Tensor processing units; ASPP, Atrous spatial pyramid pooling.

References

Altan, A., and Karasu, S. (2020). Recognition of COVID-19 disease from X-ray images by hybrid model consisting of 2D curvelet transform, chaotic salp swarm algorithm and deep learning technique. Chaos, Solitons Fractals 140:110071. doi: 10.1016/j.chaos.2020.110071

Alzubaidi, L., Zhang, J., Humaidi, A. J., Al-Dujaili, A., Duan, Y., Al-Shamma, O., et al. (2021). Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J. Big Data 8:53. doi: 10.1186/s40537-021-00444-8

Anthimopoulos, M., Christodoulidis, S., Ebner, L., Christe, A., and Mougiakakou, S. (2016). Lung pattern classification for interstitial lung diseases using a deep convolutional neural network. IEEE Trans. Med. Imaging 35, 1207–1216. doi: 10.1109/TMI.2016.2535865

Bengio, Y., Courville, A., and Vincent, P. (2013). Representation learning: a review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1798–1828. doi: 10.1109/TPAMI.2013.50

Chaddad, A., Peng, J. H., Xu, J., and Bouridane, A. (2023). Survey of explainable AI techniques in healthcare. Sensors 23:634. doi: 10.3390/s23020634

Chen, C. (2005). “The centrality of pivotal points in the evolution of scientific networks” in Proceedings of the 10th international conference on Intelligent user interfaces; San Diego, California, USA: Association for Computing Machinery. p. 98–105.

Chen, C. M. (2006). CiteSpace II: detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 57, 359–377. doi: 10.1002/asi.20317

Chen, R. J., Lu, M. Y., Wang, J. W., Williamson, D. F. K., Rodig, S. J., Lindeman, N. I., et al. (2022). Pathomic fusion: an integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis. IEEE Trans. Med. Imaging 41, 757–770. doi: 10.1109/TMI.2020.3021387

Chen, L. C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L. (2018). DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40, 834–848. doi: 10.1109/TPAMI.2017.2699184

Chen, M., Shi, X. B., Zhang, Y., Wu, D., and Guizani, M. (2021). Deep feature learning for medical image analysis with convolutional autoencoder neural network. IEEE Trans. Big Data 7, 750–758. doi: 10.1109/TBDATA.2017.2717439

Chougrad, H., Zouaki, H., and Alheyane, O. (2018). Deep convolutional neural networks for breast cancer screening. Comput. Methods Prog. Biomed. 157, 19–30. doi: 10.1016/j.cmpb.2018.01.011

Ciresan, D. C., Giusti, A., Gambardella, L. M., and Schmidhuber, J. (2013). Mitosis detection in breast cancer histology images with deep neural networks. Med. Image Comput. Comput. Assist. Intervent. 16, 411–418. doi: 10.1007/978-3-642-40763-5_51

Dessy, R. E. (1976). Microprocessors?—an end user's view. Science (New York, N.Y.) 192, 511–518. doi: 10.1126/science.1257787

Dhiman, G., Kumar, V. V., Kaur, A., and Sharma, A. (2021). DON: deep learning and optimization-based framework for detection of novel coronavirus disease using X-ray images. Interdiscip. Sci. 13, 260–272. doi: 10.1007/s12539-021-00418-7

Domingos, P. (2012). A few useful things to know about machine learning. Commun. ACM 55, 78–87. doi: 10.1145/2347736.2347755

Elnaggar, A., Heinzinger, M., Dallago, C., Rehawi, G., Wang, Y., Jones, L., et al. (2022). ProtTrans: toward understanding the language of life through self-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 44, 7112–7127. doi: 10.1109/TPAMI.2021.3095381

Freeman, L. C. (1977). A set of measures of centrality based on betweenness. Sociometry 40, 35–41. doi: 10.2307/3033543

Ghimire, D., Kil, D., and Kim, S. H. (2022). A survey on efficient convolutional neural networks and hardware acceleration. Electronics 11:945. doi: 10.3390/electronics11060945

Girshick, R., Donahue, J., Darrell, T., and Malik, J. (2016). Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 38, 142–158. doi: 10.1109/TPAMI.2015.2437384

Glasser, O. (1995). W. C. Roentgen and the discovery of the Roentgen rays. AJR Am. J. Roentgenol. 165, 1033–1040. doi: 10.2214/ajr.165.5.7572472

Gong, F., and Tang, S. (2020). Internet intervention system for elderly hypertensive patients based on hospital community family edge network and personal medical resources optimization. J. Med. Syst. 44:95. doi: 10.1007/s10916-020-01554-1

Gulshan, V., Peng, L., Coram, M., Stumpe, M. C., Wu, D., Narayanaswamy, A., et al. (2016). Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. J. Am. Med. Assoc. 316, 2402–2410. doi: 10.1001/jama.2016.17216

Han, Z., Yu, S., Lin, S.-B., and Zhou, D.-X. (2022). Depth selection for deep ReLU nets in feature extraction and generalization. IEEE Trans. Pattern Anal. Mach. Intell. 44, 1853–1868. doi: 10.1109/TPAMI.2020.3032422

Hang, R. L., Qian, X. W., and Liu, Q. S. (2022). Cross-modality contrastive learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 60, 1–12. doi: 10.1109/TGRS.2022.3188529

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); June 27-30, 2016.

Hinton, G. E., Osindero, S., and Teh, Y.-W. (2006). A fast learning algorithm for deep belief nets. Neural Comput. 18, 1527–1554. doi: 10.1162/neco.2006.18.7.1527

Hosny, A., Parmar, C., Quackenbush, J., Schwartz, L. H., and Aerts, H. J. W. L. (2018). Artificial intelligence in radiology. Nat. Rev. Cancer 18, 500–510. doi: 10.1038/s41568-018-0016-5

Hu, K., Zhao, L., Feng, S., Zhang, S., Zhou, Q., Gao, X., et al. (2022). Colorectal polyp region extraction using saliency detection network with neutrosophic enhancement. Comput. Biol. Med. 147:105760. doi: 10.1016/j.compbiomed.2022.105760

Jerber, J., Seaton, D. D., Cuomo, A. S. E., Kumasaka, N., Haldane, J., Steer, J., et al. (2021). Population-scale single-cell RNA-Seq profiling across dopaminergic neuron differentiation. Nat. Genet. 53:304. doi: 10.1038/s41588-021-00801-6

Jin, K. H., McCann, M. T., Froustey, E., and Unser, M. (2017). Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 26, 4509–4522. doi: 10.1109/TIP.2017.2713099

Kawahara, J., Brown, C. J., Miller, S. P., Booth, B. G., Chau, V., Grunau, R. E., et al. (2017). BrainNetCNN: convolutional neural networks for brain networks; toward predicting neurodevelopment. NeuroImage 146, 1038–1049. doi: 10.1016/j.neuroimage.2016.09.046

Kerr, M. V., Bryden, P., and Nguyen, E. T. (2022). Diagnostic imaging and mechanical objectivity in medicine. Acad. Radiol. 29, 409–412. doi: 10.1016/j.acra.2020.12.017

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90. doi: 10.1145/3065386

Kumar, N., Verma, R., Sharma, S., Bhargava, S., Vahadane, A., and Sethi, A. (2017). A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans. Med. Imaging 36, 1550–1560. doi: 10.1109/TMI.2017.2677499

Lariviere, V., and Gingras, Y. (2010). The impact factor's Matthew effect: a natural experiment in bibliometrics. J. Am. Soc. Inf. Sci. Technol. 61, 424–427. doi: 10.1002/asi.21232

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Lee, H., Tajmir, S., Lee, J., Zissen, M., Yeshiwas, B. A., Alkasab, T. K., et al. (2017). Fully automated deep learning system for bone age assessment. J. Digit. Imaging 30, 427–441. doi: 10.1007/s10278-017-9955-8

Lee, D., Yoo, J., Tak, S., and Ye, J. C. (2018). Deep residual learning for accelerated MRI using magnitude and phase networks. IEEE Trans. Biomed. Eng. 65, 1985–1995. doi: 10.1109/TBME.2018.2821699

Lehmann, C., Koenig, T., Jelic, V., Prichep, L., John, R. E., Wahlund, L.-O., et al. (2007). Application and comparison of classification algorithms for recognition of Alzheimer's disease in electrical brain activity (EEG). J. Neurosci. Methods 161, 342–350. doi: 10.1016/j.jneumeth.2006.10.023

Lin, H., Wang, C., Cui, L., Sun, Y., Xu, C., and Yu, F. (2022). Brain-like initial-boosted hyperchaos and application in biomedical image encryption. IEEE Trans. Industr. Inform. 18, 8839–8850. doi: 10.1109/TII.2022.3155599

Madabhushi, A., and Lee, G. (2016). Image analysis and machine learning in digital pathology: challenges and opportunities. Med. Image Anal. 33, 170–175. doi: 10.1016/j.media.2016.06.037

McCulloch, W. S., and Pitts, W. (1990). A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biol. 52, 99–115. doi: 10.1016/S0092-8240(05)80006-0

Min, S., Lee, B., and Yoon, S. (2017). Deep learning in bioinformatics. Brief. Bioinform. 18, 851–869. doi: 10.1093/bib/bbw068

Minaee, S., Boykov, Y. Y., Porikli, F., Plaza, A. J., Kehtarnavaz, N., and Terzopoulos, D. (2022). Image segmentation using deep learning: a survey. IEEE Trans. Pattern Anal. Mach. Intell. 44, 3523–3542. doi: 10.1109/TPAMI.2021.3059968

Minaee, S., Kafieh, R., Sonka, M., Yazdani, S., and Soufi, G. J. (2020). Deep-COVID: predicting COVID-19 from chest X-ray images using deep transfer learning. Med. Image Anal. 65:101794. doi: 10.1016/j.media.2020.101794

Misra, N. N., Dixit, Y., Al-Mallahi, A., Bhullar, M. S., Upadhyay, R., and Martynenko, A. (2022). IoT, big data, and artificial intelligence in agriculture and food industry. IEEE Internet Things J. 9, 6305–6324. doi: 10.1109/JIOT.2020.2998584

Narin, A., Kaya, C., and Pamuk, Z. (2021). Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. Pattern. Anal. Applic. 24, 1207–1220. doi: 10.1007/s10044-021-00984-y

Quellec, G., Charriére, K., Boudi, Y., Cochener, B., and Lamard, M. (2017). Deep image mining for diabetic retinopathy screening. Med. Image Anal. 39, 178–193. doi: 10.1016/j.media.2017.04.012

Ragab, M., Albukhari, A., Alyami, J., and Mansour, R. F. (2022). Ensemble deep-learning-enabled clinical decision support system for breast cancer diagnosis and classification on ultrasound images. Biology 11:439. doi: 10.3390/biology11030439

Ren, Z. Y., Wang, S. H., and Zhang, Y. D. (2023). Weakly supervised machine learning. CAAI Transact. Intellig. Technol. 8, 549–580. doi: 10.1049/cit2.12216

Ren, P., Xiao, Y., Chang, X., Huang, P.-Y., Li, Z., Gupta, B. B., et al. (2022). A survey of deep active learning. ACM Comput. Surv. 54, 1–40. doi: 10.1145/3472291

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-Net: convolutional networks for biomedical image segmentation” in International Conference on Medical Image Computing and Computer-Assisted Intervention.

Rosenblatt, F. (1958). The perceptron: a probabilistic model for information storage and organization in the brain. Psychol. Rev. 65, 386–408. doi: 10.1037/h0042519

Saleh, M. A., Ali, A. A., Ahmed, K., and Sarhan, A. M. (2023). A brief analysis of multimodal medical image fusion techniques. Electronics 12:97. doi: 10.3390/electronics12010097

Schoenbach, U. H., and Garfield, E. (1956). Citation indexes for science. Science (New York, N.Y.) 123, 61–62. doi: 10.1126/science.123.3185.61.b

Setio, A. A. A., Traverso, A., de Bel, T., Berens, M. S. N., van den Bogaard, C., Cerello, P., et al. (2017). Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: the LUNA16 challenge. Med. Image Anal. 42, 1–13. doi: 10.1016/j.media.2017.06.015

Shin, H. C., Roth, H. R., Gao, M. C., Lu, L., Xu, Z. Y., Nogues, I., et al. (2016). Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 35, 1285–1298. doi: 10.1109/TMI.2016.2528162

Sinha, A., and Dolz, J. (2021). Multi-scale self-guided attention for medical image segmentation. IEEE J. Biomed. Health Inform. 25, 121–130. doi: 10.1109/JBHI.2020.2986926

Tatsugami, F., Higaki, T., Nakamura, Y., Yu, Z., Zhou, J., Lu, Y. J., et al. (2019). Deep learning-based image restoration algorithm for coronary CT angiography. Eur. Radiol. 29, 5322–5329. doi: 10.1007/s00330-019-06183-y

Wang, S., Zhang, Y., and Yao, X. (2021). Research on spatial unbalance and influencing factors of ecological well-being performance in China. Int. J. Environ. Res. Public Health 18:9299. doi: 10.3390/ijerph18179299

Wuchty, S., Jones, B. F., and Uzzi, B. (2007). The increasing dominance of teams in production of knowledge. Science 316, 1036–1039. doi: 10.1126/science.1136099

Yin, L., Zhang, C., Wang, Y., Gao, F., Yu, J., and Cheng, L. (2021). Emotional deep learning programming controller for automatic voltage control of power systems. IEEE Access 9, 31880–31891. doi: 10.1109/ACCESS.2021.3060620

Zhang, J., Gajjala, S., Agrawal, P., Tison, G. H., Hallock, L. A., Beussink-Nelson, L., et al. (2018). Fully automated echocardiogram interpretation in clinical practice: feasibility and diagnostic accuracy. Circulation 138, 1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338

Keywords: deep learning, medical images, bibliometric analysis, CiteSpace, trends, hotspots

Citation: Chen B, Jin J, Liu H, Yang Z, Zhu H, Wang Y, Lin J, Wang S and Chen S (2023) Trends and hotspots in research on medical images with deep learning: a bibliometric analysis from 2013 to 2023. Front. Artif. Intell. 6:1289669. doi: 10.3389/frai.2023.1289669

Edited by:

Alfredo Vellido, Universitat Politecnica de Catalunya, Spain

Reviewed by:

Massimo Salvi, Polytechnic University of Turin, Italy

Carla Pitarch, Universitat Politecnica de Catalunya, Spain

Copyright © 2023 Chen, Jin, Liu, Yang, Zhu, Wang, Lin, Wang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jianping Lin, ljp1985@fjmu.edu.cn; Shizhong Wang, shzhwang@fjmu.edu.cn; Shaoqing Chen, chensq@fjtcm.edu.cn

†These authors have contributed equally to this work and share first authorship
