- ¹Sungai Ramal Secondary School, Bangi, Selangor, Malaysia

- ²Faculty of Education, Centre of STEM Enculturation, National University of Malaysia, Bangi, Selangor, Malaysia

- ³Faculty of Education, Research Centre of Education Leadership and Policy, National University of Malaysia, Bangi, Selangor, Malaysia

Computational thinking refers to the cognitive processes underpinning the application of computer science concepts and methodologies to approaching a problem methodically and creating a solution to it. This study aims to determine how gender influences students’ cognitive, affective, and conative dispositions in using computational thinking. A survey research design with a quantitative approach was used. Five hundred thirty-five secondary school students, selected through probability sampling, completed the Computational Thinking Disposition Instrument (CTDI). WINSTEPS version 3.71.0 was then used to assess the instrument’s reliability, validity, and gender differential item functioning (GDIF), and descriptive statistics were used to assess students’ disposition toward practicing computational thinking. In addition to their theoretical implications, the data provide empirical evidence that the CT disposition profile consists of three constructs. Moreover, the CTDI shows acceptable GDIF properties, so it may be used to evaluate the effectiveness of CT in the Malaysian curriculum by measuring students’ CT disposition profiles.

Introduction

Computational thinking (CT) is a vital skill in any field. A number of researchers have proposed that CT serves as a stepping stone to more complex computational endeavors such as programming (1). In particular, CT helps elementary school children conceptualize computational reasoning, an ability that develops through repeated use (1). Educators have viewed technology as a means to broaden their pupils’ horizons and give them more agency in their own learning (2). Recent discussions have centered on the importance of introducing computer science to students in lower grades (3–6). The enthusiasm for CT in the classroom is understandable, but many challenges remain. Accordingly, teachers are increasingly expected to illustrate computational thinking by applying it to real-world scenarios that involve computer technology.

An item is the fundamental unit of an instrument, so items must be constructed consistently and fairly for all participants. Differential item functioning (DIF) occurs when an item functions differently for groups of respondents who have comparable ability but different demographic backgrounds. Hambleton and Jones (7) describe a DIF-detected item as one whose functions differ across subgroups.

Consequently, DIF analysis identifies items that do not function in the same way for groups with comparable ability. Osterlind (8) states that item analysis entails examining items critically to reduce measurement error, and DIF analysis therefore contributes to establishing item validity (9). Screening for DIF during instrument construction also supports the instrument’s reliability; Siti Rahayah Ariffin (10) stated that DIF affects the dependability of instruments. For composite measures to be unidimensional and the variable to be linear, item scale values must be consistent across individuals and groups. Three common methods for detecting DIF are the Mantel-Haenszel procedure (11, 12), Item Response Theory (13), and Rasch models (14).

We are currently developing the next iteration of CT disposition instruments, and empirical evidence is essential for creating new measurement tools. One issue that has gained considerable attention in academia, especially in computer science education, is the gender gap. Because CT and computer science share many of the same ideas, a number of recent studies have examined differences between the sexes in CT proficiency.

From a neurological point of view, boys are a few weeks behind girls and remain behind them until late adolescence (15). This developmental difference shapes their early school learning experiences and continues to affect them throughout their education. Boys’ fine motor skills develop more slowly than girls’, and they may have difficulty with handwriting tasks (16). Their language and fine motor skills fully mature about six years later than girls’ (17). However, the brain areas involved in targeting and spatial memory mature about four years earlier in boys than in girls (17). Given the size of these differences, it is important to examine how they relate to gender differences in CT. Recent studies in the field of cognitive neurodynamics have also examined related variables such as decision-making (18) and the link between brain activity patterns and mental states (19). Gender differences have also been reported in spiritual well-being (20) and mental fatigue (21). Together, these findings open the door to relating cognitive neurodynamics to CT in order to support students’ development in the local context.

In short, brain structure, and the resulting differences in sensory perception and physical skills, differ considerably between the sexes. Understanding these differences will help instructors provide a supportive and encouraging environment for their pupils and promote CT through teaching and learning.

Literature review

Computational thinking

In today’s digital age, CT must be grasped quickly. CT is a kind of thinking that aligns with many 21st century abilities, such as problem-solving, creativity, and critical thinking (22). It derives from computer science and involves problem-solving, system design, and understanding human behavior (5). CT has been described as the cognitive process of problem solving (23), as a set of 21st century skills (24, 25), as the thought process involved in formulating problems and expressing their solutions (26), and as a set of problem-solving skills based on computer science (27). These varied conceptions have encouraged researchers to study learning experiences and computational thinking methods in more depth (28). Researchers also could not predict all difficulties before implementation (29).

CT is used from early childhood to university (30–32). Its use in formal education has taken numerous forms, including integration into computer science courses and embedding in mathematics, science, and art (33–35). CT has also reached classrooms through robotics (36) and unplugged activities, such as board games or storybooks (37–39). While much has been said about demystifying CT pedagogy, research on evaluating CT skills and attitudes is ongoing. To investigate systematic issues, it is also necessary to improve attitudes toward CT (40).

A review of the past five years (2016–2020) reveals that little research has been conducted on students’ CT dispositions (41, 42), even though attitudes affect CT as much as skills do (5). The complexity of CT has prompted researchers to investigate further, pointing toward a deeper understanding of CT as a disposition (43, 44). In the digital age, self-directed problem-solving instruction may no longer be adequate, because it does not account for students’ willingness to apply these abilities. Researchers therefore propose that CT dispositions are crucial motivators for identifying complex real-world issues and developing effective solutions (45, 46). According to the National Research Council (NRC), specific thinking skills are associated with an innate desire to think and are constituents of specific thinking dispositions (47). Good thinkers thus possess both the ability and the disposition to think (48). However, a validated evaluation of CT dispositions appears to be lacking.

Across fields, there is a persistent interest in distinguishing between different conceptualizations of CT measurement. Psychometric scales are among the most frequently used instruments for evaluating computational thinking (49–53). However, psychometric instruments assume that respondents provide accurate and comprehensive information (54). Moreover, an instrument developed in a Western setting might not be suitable for Malaysia, given the distinct cultural and geographic environments; more generally, an instrument validated in one period, culture, or setting might not be reliable in another (55–57). Comparing attitude data across cultures and groups can also be difficult (58).

Furthermore, gender inequality in CT is receiving increasing attention. Students’ CT proficiency varies with their location, gender, and academic standing (59). Some evidence suggests that the CT of males and females is essentially the same: earlier studies (60–62) found no differences in CT skills between male and female students aged 15 to 18. Nevertheless, gender inequalities still exist (6, 60), and CT skills are frequently associated with mathematical reasoning, which tends to favor male students (59). Researchers have suggested that task content may explain these contradictory results, since boys or girls may find particular tasks more interesting (63). Because earlier research on gender has produced conflicting findings, additional study is needed.

From the perspective of cognitive neurodynamics, decision-making is one of the central topics of the behavioral, cognitive, and neural sciences (18). Complex real-life decision-making may be linked to a person’s CT abilities: a person who masters CT may also improve their decision-making. CT may likewise relate to brain activity patterns and mental states, a possibility consistent with previous findings of a systematic link between brain activity patterns and spontaneously generated internal mental states (19).

In the context of this study, gender differences in CT may also relate to spiritual well-being. One study (20) found a gender effect on spirituality, with alpha and theta brain signals increasing in male students in the 30–35 age range, while the increase was slower in the 20–29 age range. Factors such as decision-making, brain activity patterns, internal mental states, and spirituality may therefore also be linked to gender differences in CT. In addition, Sadeghian et al. (21) examined mental fatigue by gender. Their findings strengthen previous studies by showing a significant difference between men and women in brain indicators of mental fatigue: the alpha-1 index was higher in men, whereas the average alpha-2 index was higher in women (both indices measure mental fatigue). Such differences in mental fatigue may also be linked to a person’s CT ability according to gender.

However, most CT measurement methods focus on thinking skills rather than dispositions. The CT disposition measurement model proposed in this paper is built on cognitive, affective, and conative components. From this perspective, the study matters in two ways: it informs the development of a measurement tool’s item pool and related questions, and it helps create content for the components most commonly discussed in the current literature. Many studies have measured computational thinking with limited tools such as perception-attitude scales, multiple-choice tests, or coding tasks alone (49, 51, 52, 64–66). This study developed the Computational Thinking Disposition Instrument (CTDI) by considering several aspects of computational thinking.

Computational thinking disposition

The development of CT dispositions requires long-term involvement in computational techniques focused on the CT process (67). The psychological makeup of CT remains poorly understood (52). Disposition is the term for the internal impulse to act toward CT, or to respond in habitual but adaptive ways to people, events, or circumstances (68). While CT is most often regarded as a problem-solving process that emphasizes cognitive processes and thinking skills (69, 70), more attention should be paid to the dispositions that students develop in CT education. CT dispositions refer to people’s psychological states or attitudes when they are engaged in CT development (71). CT dispositions have recently been described as “confidence in dealing with complexity, a persistent working with difficulties, an ability to handle open-ended problems” (33, 72). Social psychologists describe dispositional traits as having an “attitudinal tendency” (73–75), whereas thoughtful dispositions are often described in the context of critical thinking as a “mental frame or habit” (76). Furthermore, theorists argue that thinking is a collection of dispositions rather than knowledge or skill (77, 78).

Disposition comprises three psychological components: cognitive, affective, and conative. Psychology has traditionally identified and studied these three components of the mind (79–81). During cognition, information is encoded, perceived, stored, processed, and retrieved. The cognitive component of disposition is an individual’s propensity to engage in mental activities such as perception, recognition, conception, and judgment. Affect is the emotional interpretation of sensations, data, or knowledge: the positive or negative associations one forms with people, things, and concepts, captured by the question “How do I feel about this information or knowledge?” Self-actualization or self-satisfaction determines whether students feel successful after practicing CT in problem-solving exercises.

Conation, in turn, links knowledge and emotion to behavior, ideally positive behavior rather than reactive or habitual behavior (82, 83). Conative mental functions are “that aspect of mental activity that tends to develop into something else, such as the desire to act or a deliberate effort.” Determination to pursue an endeavor is one such conative capacity. In this investigation, these attitudes and dispositions serve as theoretical entities. According to the study’s findings, different contexts and requirements call for distinct mental dispositions.

Given the paucity of research and development on this topic, this study’s focus on CT dispositions makes a substantial contribution to the body of knowledge. The instrument also provides an alternative perspective for evaluating students’ success in CT courses. To address these challenges, we take a psychometric approach. We describe the creation and development of the Computational Thinking Disposition Instrument, together with its descriptive statistics and reliability, based on its administration to more than 500 Malaysian students. The purpose of this work is therefore to provide a novel instrument for assessing CT and to demonstrate the relationships between CT and other well-established psychological dimensions.

Research question

Following the discussion of computational thinking disposition, this paper addresses the following research question, which focuses on gender differences in dispositions toward CT:

1. Do any items in the Computational Thinking Disposition Instrument exhibit GDIF?

Methodology

Sample

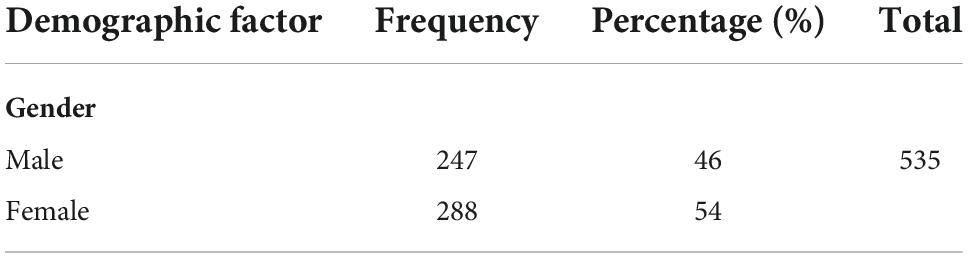

The study employed a quantitative cross-sectional survey to collect and numerically analyze data in order to better understand the phenomena under investigation (84). Data were collected with a self-administered online survey, which saves money, time, and effort and yields data that are almost ready for statistical analysis (85). A questionnaire survey was used because it is suitable for a large sample with broad geographical coverage (86). The online form also required respondents to answer every item before submitting, which minimized missing data. The participants were 535 secondary school students with a background in computer science, selected by probability sampling. Probability sampling uses random selection, which permits estimation of sampling error and reduces selection bias. With a random sample, the relationship between the sample and the underlying population can be described quantitatively through confidence intervals, the range of values in which the true population parameter is likely to lie (87). Respondents were required to have a background in computer science, be willing to fill out questionnaires, and engage in online activities. The research was conducted in October 2020. Regarding ethical considerations, students’ consent to participate was obtained before they completed the questionnaire. Participation was voluntary and strictly anonymous. Table 1 displays the demographic profile of the respondents.

Instrumentation

The Computational Thinking Disposition Instrument (CTDI) measures students’ disposition toward computational thinking. As noted previously, the CTDI questionnaire was designed around three components. Sovey et al. (88) used factor analysis to establish the items and construct validity for the three constructs; the investigation began with exploratory factor analysis (EFA), followed by Rasch analysis. The CTDI includes three demographic questions (gender, location, and prior knowledge) and 55 items in three dimensions of computational thinking disposition: cognitive (19 items), affective (17 items), and conative (19 items). All items use a 4-point Likert scale from strongly disagree (1) to strongly agree (4). An even-numbered scale was chosen because several authors recommend avoiding odd-numbered response scales (89, 90). Dolnicar et al. (91) explain that five-point Likert scales produce biased response styles, lack stability, and take a long time to complete. The midpoint category attracts a disproportionate number of responses because respondents tend to choose it. In the context of this study, respondents’ taking a definite position is considered an important basis for answering the items, and Sumintono and Widhiarso (92) likewise suggest not providing a midpoint option. Wang et al. (93) also recommend that a midpoint not be used when obtaining the views of Asian respondents. For the Rasch analysis in this study, this scale is also more appropriate than conventional scoring. A total of ten pupils were then chosen for face validation. They were asked to identify and list any unclear words or terminology, and they could suggest improvements to the questionnaire’s font size and design so that the research sample could understand it more quickly. These 10 pupils were excluded from the main study.

The Rasch measurement model software WINSTEPS 3.73 was used to determine the instrument’s validity and reliability. Rasch analysis (94) uses explicit assumptions and a functional form to determine whether a single latent trait drives responses to questionnaire items. The Rasch model specifies the expected probability of participants’ response patterns on the scale; responses are summed and tested against this probabilistic model (94, 95). The Rasch rating scale model is used when a set of items shares a fixed response format (e.g., a Likert scale) and the category thresholds do not vary across items. By calibrating item difficulties and person abilities, WINSTEPS transforms raw ordinal (Likert-type) data, expressed as response probabilities, into logits (log-odds units) through the logarithm function, and it assesses the overall fit of the instrument as well as person fit (96, 97). In this study, Rasch models were used to examine DIF by gender. Bond and Fox (98, 99) propose three DIF indicators for comparing groups: (1) a t value within ±2.0 (−2.0 ≤ t ≤ 2.0), (2) a DIF contrast within ±0.5 logits (−0.5 ≤ DIF Contrast ≤ 0.5), and (3) a significance level of p < 0.05. Values within the first two ranges indicate acceptable item functioning.
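As a rough illustration of the rating scale model described above, the sketch below computes the probability of each response category on a four-category item from a person measure, an item difficulty, and a set of thresholds shared by all items, following Andrich’s rating scale formulation. It is not the authors’ code or the WINSTEPS implementation; the function names and the threshold values in the example are hypothetical, and categories are indexed 0 to 3 to stand for the scores 1 to 4.

```python
import math


def logit(p: float) -> float:
    """Convert a probability (0 < p < 1) into log-odds units (logits)."""
    return math.log(p / (1.0 - p))


def rsm_category_probs(theta: float, delta: float, taus: list[float]) -> list[float]:
    """Category probabilities under the Andrich rating scale model.

    theta: person measure in logits
    delta: item difficulty in logits
    taus:  thresholds tau_1..tau_M shared by all items (hypothetical values)
    Returns probabilities for categories 0..M (here 0..3 stand for scores 1..4).
    """
    # The exponent for category k is the running sum of (theta - delta - tau_j)
    # for j = 1..k; category 0 has exponent 0 by convention.
    exponents = [0.0]
    for tau in taus:
        exponents.append(exponents[-1] + (theta - delta - tau))
    numerators = [math.exp(e) for e in exponents]
    total = sum(numerators)
    return [n / total for n in numerators]


def expected_score(theta: float, delta: float, taus: list[float]) -> float:
    """Expected category (0..M) for one person-item pair."""
    probs = rsm_category_probs(theta, delta, taus)
    return sum(k * p for k, p in enumerate(probs))


if __name__ == "__main__":
    taus = [-1.0, 0.0, 1.0]  # hypothetical thresholds for a 4-point scale
    print([round(p, 3) for p in rsm_category_probs(0.0, 0.0, taus)])
    print(round(expected_score(0.0, 0.0, taus), 3))
```

With a person measure equal to the item difficulty and symmetric thresholds, the category probabilities are symmetric and the expected category is the middle of the 0 to 3 range (1.5); raising the person measure shifts probability toward agreement.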

Person reliability and item reliability

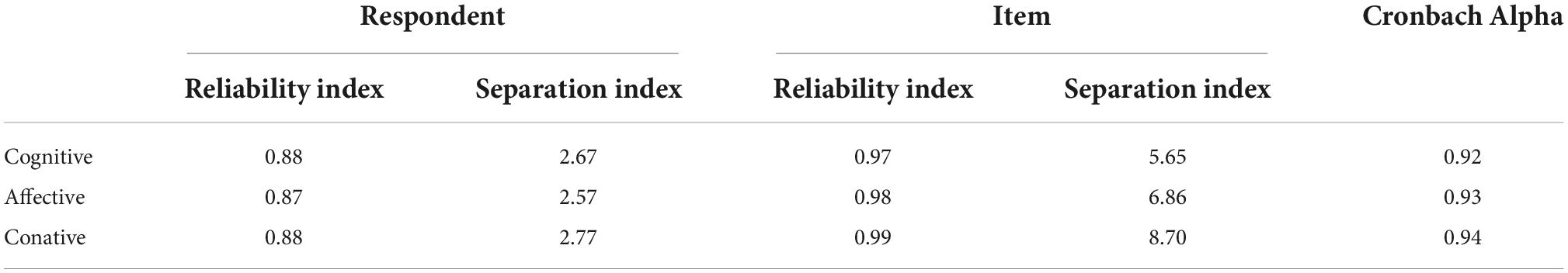

According to Table 2, the “real” person reliability index (above 0.8) shows that the consistency of individual responses was satisfactory (97), which indicates that the scale discriminates well between individuals. It also suggests a high likelihood that individuals responded to the items consistently. The same interpretation applies to item reliability values above 0.90, which are categorized as “very good” (100). High item reliability also indicates that the items define the latent variable very well (97). The CTDI may therefore be considered a reliable instrument for various respondent groups.
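One way to see what a Rasch reliability index captures is the standard ratio of “true” variance to observed variance, where true variance is the observed variance of the measures minus the mean squared standard error. The sketch below assumes that formulation and uses population variance; WINSTEPS may differ in details (for example, in how the “real” error is inflated for misfit), and the measures and standard errors are invented for illustration.

```python
import statistics


def rasch_reliability(measures: list[float], standard_errors: list[float]) -> float:
    """Separation reliability: (observed variance - error variance) / observed variance.

    measures: person (or item) measures in logits
    standard_errors: the matching standard errors in logits
    """
    if len(measures) != len(standard_errors):
        raise ValueError("each measure needs a standard error")
    observed_var = statistics.pvariance(measures)
    # Error variance is taken as the mean of the squared standard errors.
    error_var = statistics.fmean(se ** 2 for se in standard_errors)
    true_var = max(observed_var - error_var, 0.0)  # clamp at zero
    return true_var / observed_var


measures = [-2.0, -1.0, 0.0, 1.0, 2.0]  # hypothetical person measures
ses = [0.5] * 5                          # hypothetical standard errors
print(rasch_reliability(measures, ses))
```

Here the observed variance is 2.0 and the error variance 0.25, giving a reliability of 0.875; larger standard errors relative to the spread of measures push the index down.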

Cronbach Alpha

The Cronbach alpha coefficient, as calculated in the Rasch analysis, describes the interaction between the 535 participants and the 55 items. According to the instrument quality criteria of Sumintono and Widhiarso (2014), a reliability value above 0.90 (Table 3) is considered “very good.” This result indicates a high degree of interaction between persons and items. An instrument with good internal consistency of this kind is considered highly reliable.
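Cronbach’s alpha compares the sum of the item variances with the variance of respondents’ total scores: α = k/(k − 1) × (1 − Σσᵢ²/σ²_total), where k is the number of items. The sketch below is a generic raw-score implementation applied to a small, made-up response matrix on the 1 to 4 scale; it is not the computation performed by the Rasch software, and the data are hypothetical.

```python
import statistics


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of raw scores."""
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(statistics.pvariance(col) for col in items)
    total_var = statistics.pvariance([sum(row) for row in responses])
    # Population variance is used throughout; an n/(n-1) factor would cancel.
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical answers from four respondents to three 4-point items.
data = [
    [1, 2, 1],
    [2, 1, 2],
    [3, 3, 4],
    [4, 4, 3],
]
print(round(cronbach_alpha(data), 3))
```

Because respondents who agree with one item tend to agree with the others, the total-score variance is much larger than the sum of the item variances and alpha is high (about 0.89 here); perfectly parallel answers would give exactly 1.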

Person and item separation index

The person separation index measures how well the CTDI distinguishes between levels of person ability. The item separation index shows how well the items are spread from easy to difficult to endorse (101); larger values are better. The item separation index was between 5.0 and 8.0, exceeding the criterion of 2.0 (97). Statistically, the CTDI items could therefore be divided into 5 to 8 endorsement levels. For respondents, a separation index above 2.0 is acceptable (102). Table 3 shows the internal reliability of each construct. These criteria support the CTDI as a reliable instrument for assessing students’ computational thinking disposition.
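Separation and reliability carry the same information. Under the usual Rasch relationship, G = √(R/(1 − R)) and, conversely, R = G²/(1 + G²), so a separation of 2.0 corresponds to a reliability of 0.80, the threshold used for person reliability above. The sketch assumes this textbook relationship rather than anything taken from the CTDI analysis files, and the input values are only examples.

```python
import math


def separation_from_reliability(r: float) -> float:
    """Separation index G from a Rasch reliability R (0 <= R < 1)."""
    if not 0.0 <= r < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    return math.sqrt(r / (1.0 - r))


def reliability_from_separation(g: float) -> float:
    """Rasch reliability R from a separation index G >= 0."""
    return g * g / (1.0 + g * g)


for g in (2.0, 5.0, 8.0):
    print(g, round(reliability_from_separation(g), 3))
```

Under this relationship, item separation values of 5.0 to 8.0 correspond to item reliabilities of roughly 0.96 to 0.98, consistent with item reliability above 0.90.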

Results

Students’ disposition towards computational thinking

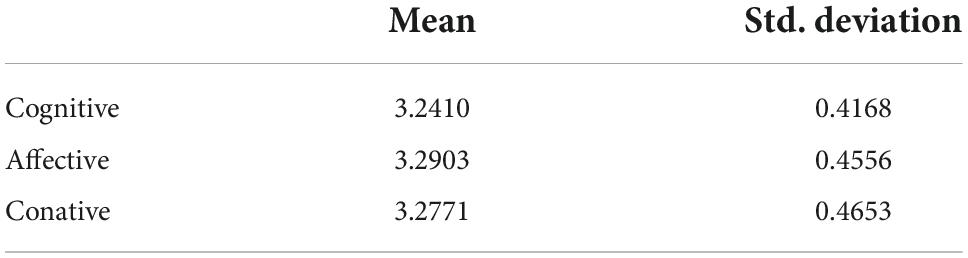

First, students’ disposition toward computational thinking was analyzed. According to Table 4, of the three dimensions of computational thinking disposition, students rated highest on the affective dimension (M = 2.76, SD = 2.08) and lowest on the cognitive dimension (M = 2.48, SD = 1.80). The results are summarized in Table 5.

Differences between students’ demographic factors and computational thinking disposition

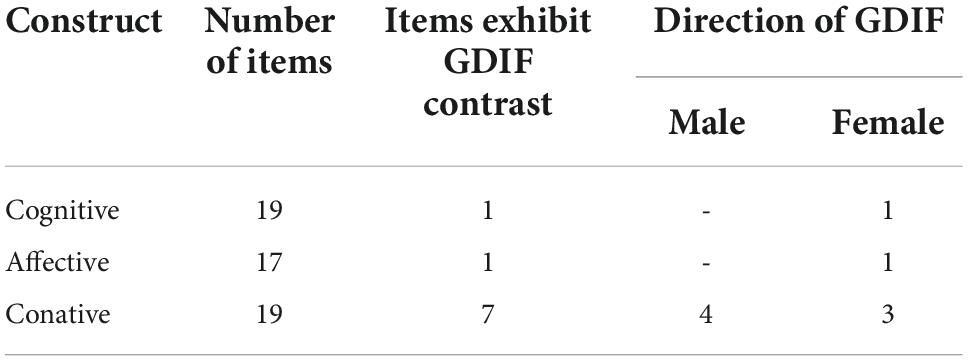

GDIF analysis was performed to identify biased items in the CTDI. Table 3 summarizes the GDIF items in each construct of the CTDI. With the critical t value set at 2.0 and the confidence level at 95%, nine items were identified as showing significant GDIF, and the analysis was extended to determine how extreme the GDIF in these items was. The Rasch model makes it possible to identify items that are likely to be biased and to eliminate those with the most significant DIF to improve test fairness. A negative t value and GDIF size indicate that male students endorsed the item more easily than female students. Four (44.4%) of the nine items showing GDIF were easier for males, while five (55.6%) were easier for females, so the flagged items are divided fairly evenly between the genders. The disparities between the sexes are minimal, and the direction of bias is not systematic across constructs. When the direction of bias is not systematic, moderately biased items are not problematic; previous research has shown that such item bias does not diminish the overall measurement accuracy or predictive validity of a test (103). Because the items with moderate DIF are spread across both groups and are unlikely to affect the instrument’s quality, there is little benefit in removing them.
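The flagging logic described above can be sketched as follows. The sketch assumes that the DIF contrast is the male item measure minus the female item measure (so a negative contrast means the item is easier for males to endorse, matching the sign convention in the text) and that the t statistic is the contrast divided by its joint standard error √(SE₁² + SE₂²), a Welch-style test. The thresholds of 2.0 and 0.5 logits follow the Bond and Fox criteria cited in the Methodology; the item name and measures in the example are hypothetical, not values from Tables 4, 6, or 7.

```python
import math
from dataclasses import dataclass


@dataclass
class DifResult:
    item: str
    contrast: float   # male measure minus female measure, in logits
    t: float          # contrast divided by its joint standard error
    t_flag: bool      # |t| >= t_crit
    size_flag: bool   # |contrast| >= contrast_crit

    @property
    def easier_for(self) -> str:
        # A lower item measure means the item is easier to endorse for that group.
        if self.contrast < 0:
            return "male"
        if self.contrast > 0:
            return "female"
        return "neither"


def gender_dif(item: str, male: float, se_male: float,
               female: float, se_female: float,
               t_crit: float = 2.0, contrast_crit: float = 0.5) -> DifResult:
    contrast = male - female
    t = contrast / math.sqrt(se_male ** 2 + se_female ** 2)
    return DifResult(item, contrast, t, abs(t) >= t_crit, abs(contrast) >= contrast_crit)


# Hypothetical item that is harder for males (0.10) than for females (-0.30).
r = gender_dif("X1", 0.10, 0.12, -0.30, 0.13)
print(r.item, round(r.contrast, 2), round(r.t, 2), r.t_flag, r.size_flag, r.easier_for)

# Share of flagged items favoring each group, as reported in the text (4 and 5 of 9).
print(round(100 * 4 / 9, 1), round(100 * 5 / 9, 1))
```

In this example the t value exceeds 2.0 while the contrast stays below 0.5 logits, the same pattern as a statistically significant but moderate DIF; the two criteria are reported separately so that either rule can be applied.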

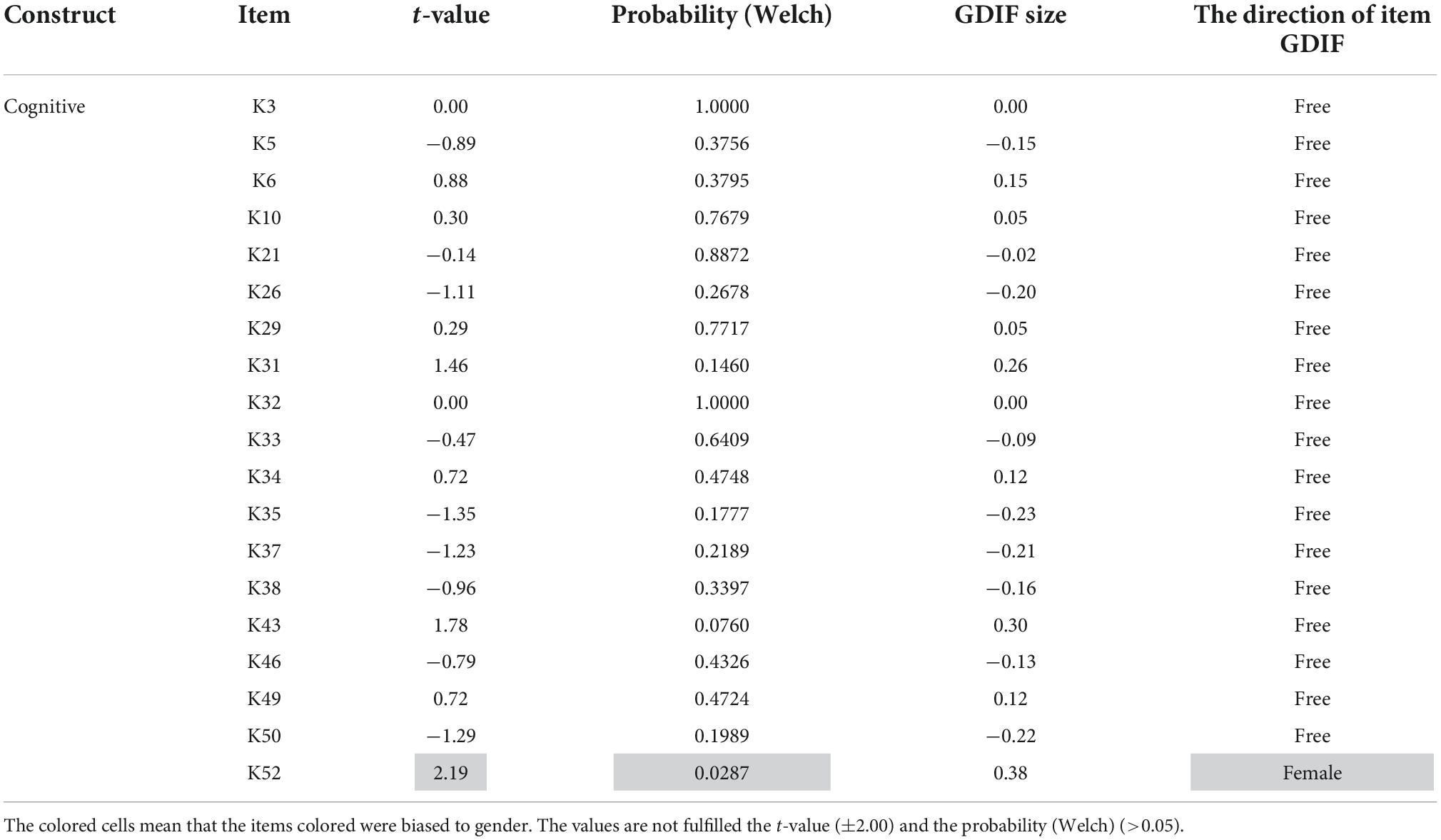

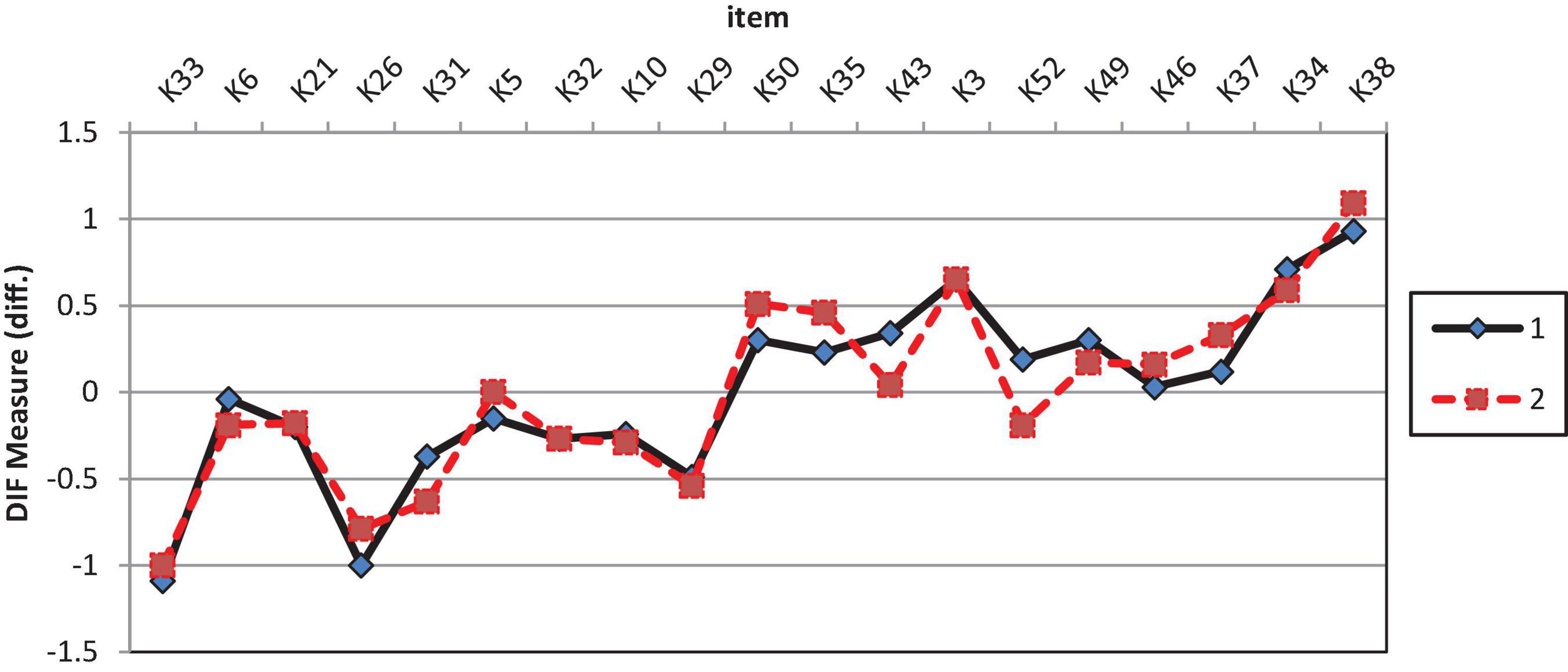

Table 4 shows the results of the GDIF analysis of the cognitive items. Only one of the 19 items, K52, showed significant GDIF. Item K52 (“I know the importance of citing reference sources for assignments undertaken”) is easier for female students than for male students to agree with. This item shows a significant GDIF of 0.38 logits, with a t value of at least 2.0 (t ≥ 2.0). Figure 1 shows the DIF plot of the DIF measures for the cognitive construct by gender (1 = male students; 2 = female students).

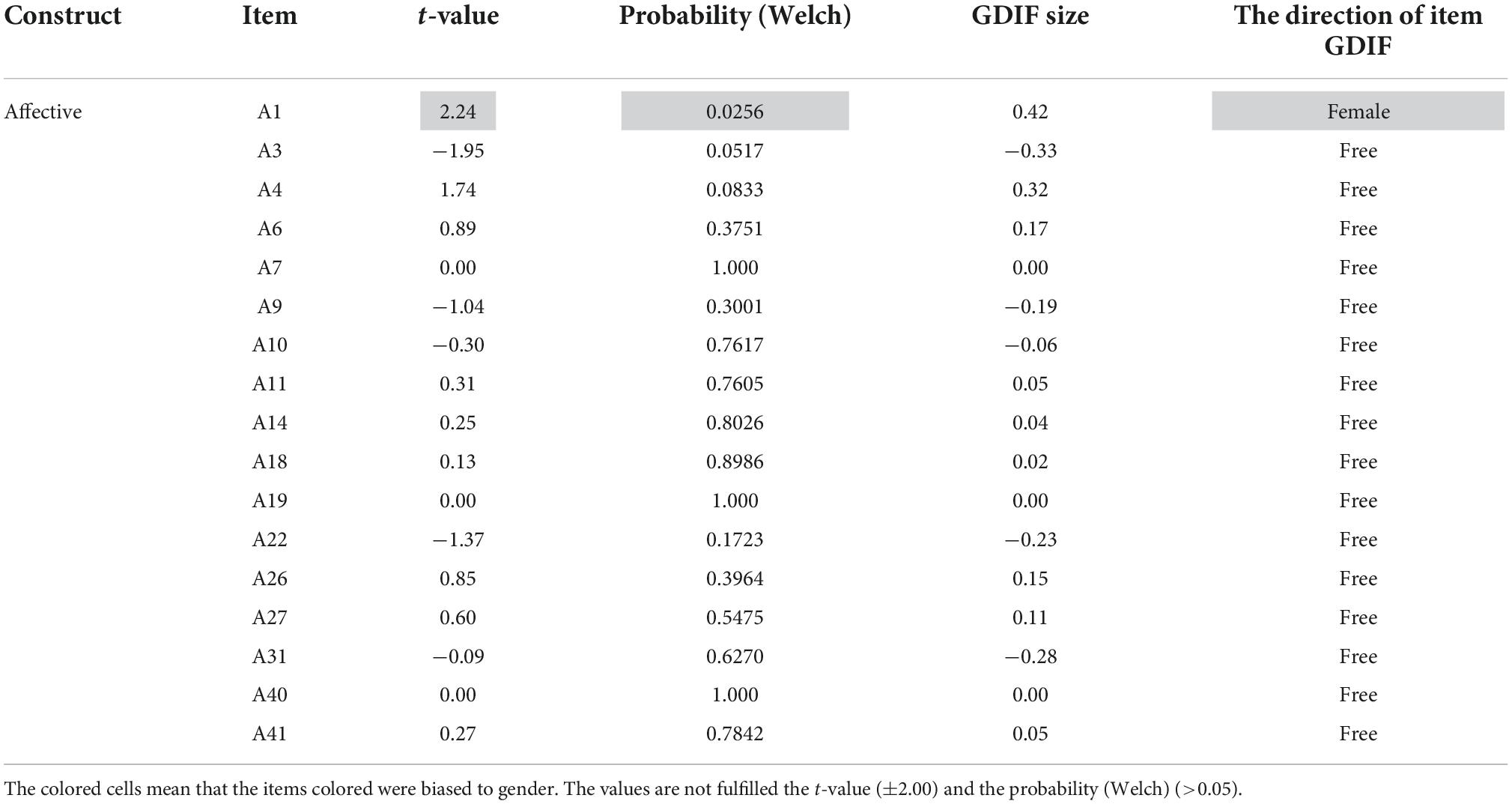

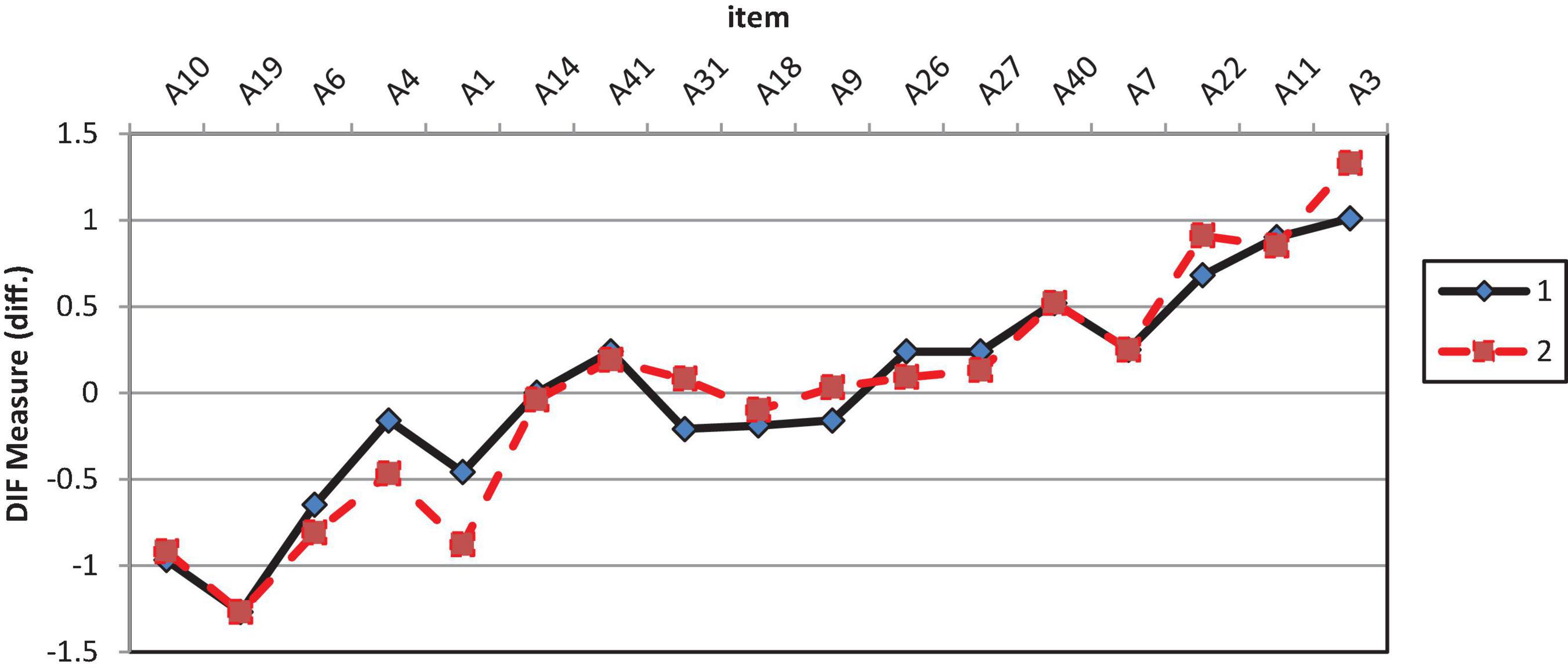

Table 6 shows the results of the GDIF analysis of the affective items. Only one of the 17 items, A1, showed significant GDIF. Item A1 (“I do have a curiosity to explore new knowledge”) is easier for female students than for male students to agree with. This item shows a significant GDIF of 0.42 logits, with a t value of at least 2.0 (t ≥ 2.0). Figure 2 shows the DIF plot for the affective construct by gender (1 = male; 2 = female).

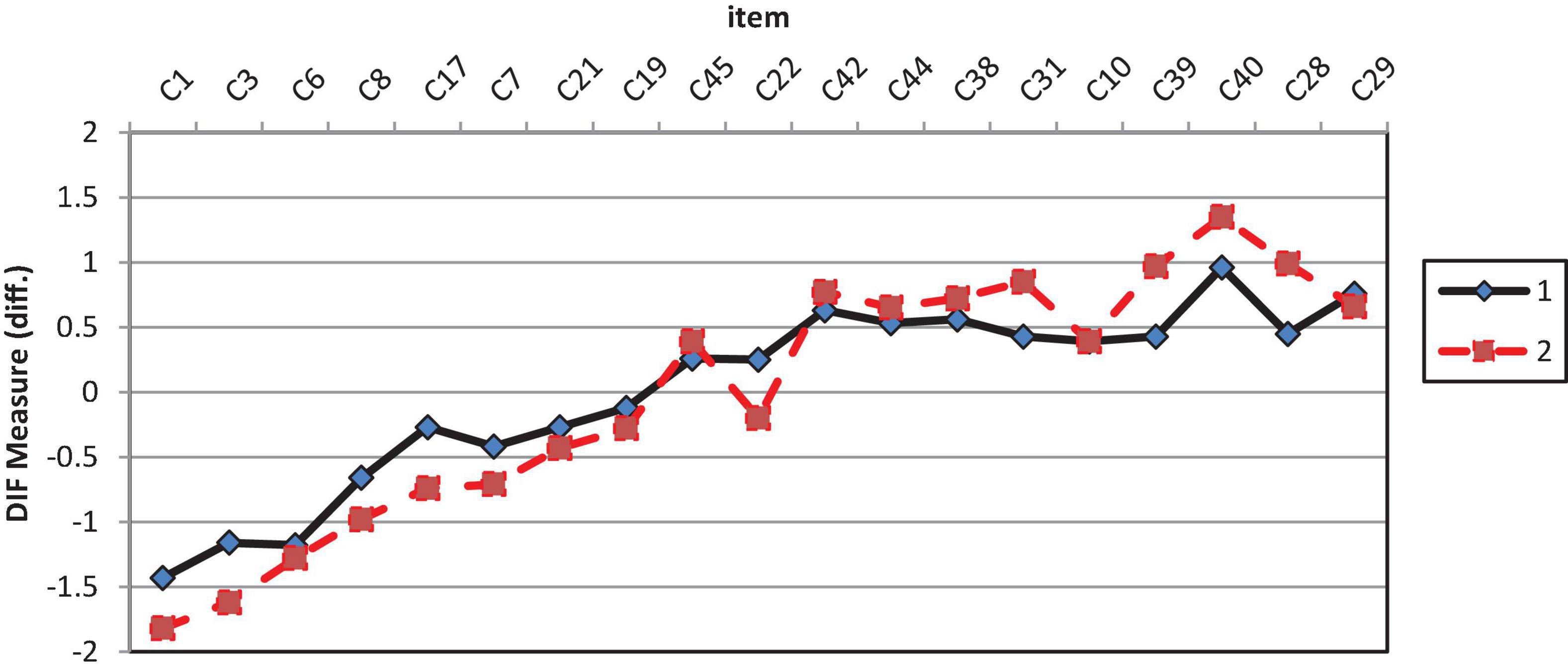

Table 7 shows the results of the GDIF analysis of the conative items. Seven of the 19 items showed significant GDIF: C3, C17, C22, C28, C31, C39, and C40. These items have significant GDIF values ranging from 0.45 to 0.54 logits, with t values of at least 2.0 (t ≥ 2.0). Figure 3 shows the DIF plot of the DIF measures for the conative construct by gender. Items C3 (“I am willing to tolerate current group members during problem solving”), C17 (“I try to find the cause when a solution doesn’t work”), and C22 (“I diligently deal with a problem even beyond the allotted time”) are easier for female students than for male students to agree with. Conversely, items C28 (“I am willing to take risks to solve a problem”), C31 (“I can adapt to the uncertainty of solving a problem”), and C39 (“I have the courage to accept challenges to solve complex problems”) are easier for male students than for female students to agree with. For item C40 (“I am confident that I understand the content of Computational Thinking”), male students were more confident than female students in their understanding of computational thinking content.

Discussion

Advances in science and technology have not affected men and women in the same way. Differential item functioning (DIF) is present when two or more subgroups perform differently on a test item despite being matched on the measured construct. DIF analysis plays a crucial role in ensuring the equity and fairness of educational assessments, since DIF-free instruments are regarded as equitable and fair for all participants. DIF analysis is therefore a crucial procedure for identifying items that do not function in the same way when administered to students with the same ability but different backgrounds.

In total, nine of the 55 items showed gender-related functioning outside the acceptable range, and it is suggested that such items could be removed (99). According to the DIF contrast results in Tables 4, 6, and 7, the values were between 0.42 and 0.46, while the t values were between −1.95 and 1.95. These results agree with the acceptable ranges of −0.5 to +0.5 logits for the DIF contrast on a Likert scale and −2 to +2 for the t value (97, 104). Moreover, because the probability values were higher than 0.05, these items did not show DIF (92). In general, geographic location, gender, and academic achievement affect a student’s skillset (59), and gender differences are a notable topic in CT research (105). However, some studies indicate that males and females have similar CT: despite no difference in CT between male and female 15- to 18-year-olds (61, 62), gender inequalities persist (6, 60).

Regarding the cognitive construct, item K43 (“I can change my mind to try something new while solving a problem”) indicates an advantage for male students over female students. Boys are stronger at deductive and abstract reasoning, whereas girls are better at inductive and concrete reasoning (15). Boys reason from the general to the specific and use concepts to solve problems. According to one study, male brains are 10–15% larger and heavier than female brains. Besides size, the sexes also differ in brain anatomy. Using brain mapping, researchers found that men have six times more gray matter related to intelligence than women, whereas women have ten times more white matter. One study shows that gender-related differences in brain areas correlate with IQ (106). This study and others show that males’ inferior parietal lobes are larger, and this lobe supports boys’ spatial and mathematical reasoning. The left side of the brain, which controls language and verbal and written skills, develops sooner in girls, so they perform better in those areas (107). These results concur with the conclusion of Mouza et al. (108) that male students have a higher cognitive level of CT knowledge than female students. Other studies have found that female students have limited computing knowledge and experience (109), and that males are typically more interested in information or knowledge than females (110). This may reflect the influence of culture and the stereotypical socialization processes people experience from childhood, as there are more men than women in these sectors (61). Lack of early experience and other obstacles contribute to girls’ underrepresentation in this field (111).

Examining the affective construct, the findings indicate that male students are more interested in practicing CT than female students for items A4 (“I want to learn programming to apply Computational Thinking”) and A1 (“I have a curiosity to explore new knowledge”). Similarly, Askar and Davenport (112) and Ozyurt and Ozyurt (113) found that male students have a greater sense of programming self-efficacy than female students. Other studies have shown that the lack of female role models and differences in prior programming experience influence women’s participation in computer science (114, 115). CT aptitude also appears to be frequently linked to mathematical logic, which favors male students (59). Furthermore, a study conducted outside Malaysia revealed that male students were more familiar with technology and favored its use for learning (116). Female students typically require more time than male students to master CT (60): Atmatzidou and Demetriadis (60) reported that girls in a high school robotics STEM curriculum appeared to need more training time than boys to reach the same skill level in certain CT-specific aspects, such as decomposition.

Moreover, in the conative construct, male students were favored on item C17 (“I try to find the cause when a solution does not work”). According to neuroscience research (117–119), the hippocampus develops faster and is larger in girls than in boys, which affects sequencing, vocabulary, reading, and writing. Boys learn better through movement and visual experience because their cerebral cortex is more specialized for spatial relationships. Girls favor collaborative activities in which they exchange ideas with others, while boys prefer rapid, individualistic, kinesthetic, spatially oriented, and manipulative-based activities (120). This fits with the finding of Geary et al. (121) that male and female students differ considerably in spatial and computational skills, with male students performing better at arithmetic reasoning. This has been attributed to male students tending to be more intellectual, abstract, and objective. As a result, male students tend to understand issues through calculation, evaluate the fit between computational tools and techniques and the challenges at hand, and use computational strategies when solving problems. In the cognitive neurodynamics literature, a gender effect on spirituality has also been reported, with alpha and theta brain signals increasing in males depending on age range (20). Computational thinking involves breaking down complicated problems into understandable steps (algorithms) and finding patterns that can be used to solve other problems (22). Female students, on the other hand, worked harder to find initial information and tended to solve problems step by step, which made it hard for them to find patterns or shortcuts. Women tend to be more careful, organized, and thorough than men (122, 123).
Overall, existing results indicate a significant difference between men and women in brain indicators in the cognitive neurodynamics context.

Limitations and future directions

This study, like any other, has limitations. First, the research focused only on the disposition aspect of computational thinking; accordingly, the CTDI was built around the three main elements of disposition. Second, only Malaysian secondary school students were included in the study. Because context shapes cultural differences, and researchers assert that context may explain divergent results, larger-scale research with samples from across Malaysia is recommended. This would increase the demographic diversity of both the respondents and the research.

DIF benchmarking could be incorporated into secondary school assessment. More research is needed to understand differences in DIF item performance between groups, especially since computational thinking instruments are still being developed. Because this work draws on CT literature from various domains, the instrument should also apply to other fields, and replications in other countries would establish its relevance across diverse national contexts. This study's evidence of construct validity came from a homogeneous population, so the scale should be further validated with higher education, elementary, middle, and private school students. Comparing studies across tests can also improve psychometric assessment.

This study also points to several areas for further research. Future research should include different cultural groups to determine whether the phenomenon is universal: according to Nisbett et al. (124), East Asians and Westerners have different thinking patterns, and Westerners strongly prefer positivity while Easterners have more varied preferences (125). The present findings can inform future analyses and help improve item psychometrics. Further research could also examine correlations with personality traits. Finally, DIF analysis of this instrument should be extended beyond gender to ensure it is not biased toward other groups, since ethnicity, socioeconomic status, or age may also contribute to DIF.

Conclusion

This study assessed the effects of gender differences on disposition toward CT using DIF analysis. Implementing a curriculum design that integrates STEM education with computational thinking in an interdisciplinary approach presented a number of obstacles. A well-organized measuring instrument should therefore be designed for long-term use. Information on how respondents of each gender cluster in answering GDIF items can help test developers create fairer achievement test items for students of different genders. An important practical implication is that the items selected in this study can be used as an alternative tool in self-evaluation and peer-evaluation sessions for improvement purposes.

The findings of this study revealed a moderate level of CT disposition, which underscores the importance of making students aware of the evolution and rapid growth of the CT discipline and of the technological resources available to them. The DIF analysis showed a significant gender-based difference in students' disposition toward CT. Educators can use these data to identify students' strengths and weaknesses and to plan more meaningful lessons. Girls and boys alike can flourish in their creative thinking if they are taught to focus on the process of CT and problem solving.
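The gender DIF comparison reported above can be illustrated with a minimal sketch of how a single item is commonly screened in Rasch-based DIF analysis: the item's difficulty is estimated separately for male and female respondents, the difference between the two estimates (the DIF contrast) is computed, and that contrast is divided by the joint standard error to give a t statistic. The function name dif_contrast, the input values, and the flagging thresholds (a contrast of at least 0.5 logits together with |t| of at least 2) are assumptions chosen for illustration; they are widely used rules of thumb, not necessarily the exact criteria or WINSTEPS settings applied in this study.

```python
import math


def dif_contrast(d_male: float, se_male: float,
                 d_female: float, se_female: float,
                 min_contrast: float = 0.5, min_t: float = 2.0):
    """Screen one item for gender DIF from group-specific Rasch estimates.

    Hypothetical helper for illustration only.
    d_male, d_female: item difficulty (logits) estimated within each group;
        assumed convention: a higher value means the item is harder to endorse.
    se_male, se_female: standard errors of those estimates.
    min_contrast, min_t: assumed rule-of-thumb flagging thresholds.
    """
    # DIF contrast: difference in item difficulty between the two groups.
    contrast = d_male - d_female
    # Joint standard error of the difference (estimates treated as independent).
    joint_se = math.sqrt(se_male ** 2 + se_female ** 2)
    t = contrast / joint_se
    # Flag only when the difference is both large and statistically notable.
    flagged = abs(contrast) >= min_contrast and abs(t) >= min_t
    return contrast, t, flagged


# Hypothetical estimates for a single disposition item (not data from this study).
contrast, t, flagged = dif_contrast(-0.40, 0.10, 0.25, 0.11)
print(f"contrast={contrast:.2f} logits, t={t:.2f}, flagged={flagged}")
```

In this hypothetical case the item is 0.65 logits easier for male respondents to endorse, the t statistic is about −4.4, and the item would be flagged. Requiring both a minimum effect size and a minimum t value avoids flagging items whose difference is statistically detectable but too small to matter in practice.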

Boys' and girls' perspectives on CT differ in significant ways, although these differences do not concern ability. To encourage excellence in both sexes, educators must take the differences between males and females into account when planning lessons and activities. These findings highlight the need to devise engaging interventions and to monitor children's attention and motivation during activities, with implications for educational practitioners and researchers. The study makes CT dispositions visible to the education community as path-opening invitations to explore CT and foster meaningful learning experiences.

Data availability statement

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by the Education Policy Planning and Research Division, Ministry of Education, Malaysia (ethics approval number: KPM.600-3/2/3-eras (7906)). Written informed consent to participate in this study was provided by the participants' legal guardians/next of kin.

Author contributions

SS: conceptualization, methodology, formal analysis, investigation, writing—original draft preparation, and project administration. SS and MM: validation and data curation. SS, MM, and KO: resources and writing—review and editing. MM: visualization and funding acquisition. MM and KO: supervision. All authors read and agreed to the published version of the manuscript.

Funding

This study was funded by the Faculty of Education, Universiti Kebangsaan Malaysia (UKM) with a Publication Reward Grant (GP-2021-K021854).

Acknowledgments

Special thanks and gratitude go to my supervisors, MM and KO, to the Faculty of Education, UKM, and to the Ministry of Education (MOE) for giving us the opportunity to conduct this project and for guiding us from beginning to end, and to all participants for their support during data collection.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Denning PJ, Tedre M. Computational Thinking. Cambridge, MA: The MIT Press (2019). doi: 10.7551/mitpress/11740.001.0001

2. Rich KM, Yadav A, Larimore R. Teacher implementation profiles for integrating computational thinking into elementary mathematics and science instruction. Educ Inf Technol. (2020) 25:3161–88. doi: 10.1007/s10639-020-10115-5

3. Barr V, Stephenson C. Bringing computational thinking to K-12: what is involved and what is the role of the computer science education community. ACM Inroads. (2011) 2:48–54. doi: 10.1145/1929887.1929905

4. College Board. Advanced Placement Computer Science Principles: Curriculum Framework. (2014). Available online at: http://cms5.revize.com/revize/williamsvilleschools/Departments/Teaching%20&%20Learning/Mathematics/High%20School,%20Grades%209-12/ap-computer-science-principles-curriculum-framework.pdf (accessed December 1, 2021).

6. Yadav A, Mayfield C, Zhou N, Hambrusch S, Korb JT. Computational thinking in elementary and secondary teacher education. ACM Trans Comput Educ. (2014) 14:1–16. doi: 10.1145/2576872

7. Hambleton RK, Jones RW. Comparing classical test and item response theories and their applications to test development. Educ Meas Issues Pract. (1993) 12:38–47. doi: 10.1111/j.1745-3992.1993.tb00543.x

8. Osterlind SJ. Constructing Test Items. Boston, MA: Kluwer Academic Publishers (1989). doi: 10.1007/978-94-009-1071-3

9. Ackerman TA. A didactic explanation of item bias, item impact, and item validity from a multidimensional perspective. J Educ Meas. (1992) 29:67–91. doi: 10.1111/j.1745-3984.1992.tb00368.x

10. Siti Rahayah Ariffin. Inovasi dalam Pengukuran dan Penilaian Pendidikan [Innovation in Educational Measurement and Evaluation]. Bangi: Fakulti Pendidikan UKM (2008).

11. Dodeen H. Stability of differential item functioning over a single population in survey data. J Exp Educ. (2004) 72:181–93. doi: 10.3200/JEXE.72.3.181-193

12. Stoneberg BD Jr. A study of gender-based and ethnic-based differential item functioning (DIF) in the Spring 2003 Idaho standards achievement tests applying the simultaneous bias test (SIBTEST) and the Mantel-Haenszel chi-square test. Intern Meas Stat. (2004) 1–15. Available online at: https://files.eric.ed.gov/fulltext/ED483777.pdf

13. Maller SJ. Differential item functioning in the WISC-III: item parameters for boys and girls in the national standardization sample. Educ Psychol Meas. (2001) 61:793–817. doi: 10.1177/00131640121971527

14. Cauffman E, MacIntosh RA. A Rasch differential item functioning analysis of the Massachusetts Youth Screening Instrument: identifying race and gender differential item functioning among juvenile offenders. Educ Psychol Meas. (2006) 66:502–321. doi: 10.1177/0013164405282460

15. Gurian M, Henley P, Trueman T. Boys and Girls Learn Differently: A Guide for Teachers and Parents. 1st paperback ed. San Francisco, CA: Jossey-Bass (2002).

16. Pollack WS. Real Boys: Rescuing Our Sons From the Myths of Boyhood. 1st Owl Books ed. New York, NY: Henry Holt & Company (1999).

17. Hanlon HW, Thatcher RW, Cline MJ. Gender differences in the development of EEG coherence in normal children. Dev Neuropsychol. (1999) 16:479. doi: 10.1207/S15326942DN1603_27

18. Vargas DV, Lauwereyns J. Setting the space for deliberation in decision-making. Cogn Neurodyn. (2021) 15:743–55. doi: 10.1007/s11571-021-09681-2

19. Sen S, Daimi SN, Watanabe K, Takahashi K, Bhattacharya J, Saha G. Switch or stay? Automatic classification of internal mental states in bistable perception. Cogn Neurodyn. (2020) 14:95–113. doi: 10.1007/s11571-019-09548-7

20. MahdiNejad JED, Azemati H, Sadeghi habibabad A, Matracchi P. Investigating the effect of age and gender of users on improving spirituality by using EEG. Cogn Neurodyn. (2021) 15:637–47. doi: 10.1007/s11571-020-09654-x

21. Sadeghian M, Mohammadi Z, Mousavi SM. Investigation of electroencephalography variations of mental workload in the exposure of the psychoacoustic in both male and female groups. Cogn Neurodyn. (2022) 16:561–74. doi: 10.1007/s11571-021-09737-3

22. Yadav A, Hong H, Stephenson C. Computational thinking for all: pedagogical approaches to embedding 21st century problem solving in K-12 classrooms. TechTrends. (2016) 60:565–8. doi: 10.1007/s11528-016-0087-7

23. García-Peñalvo FJ, Mendes AJ. Exploring the Computational Thinking Effects in Pre-University Education. Amsterdam: Elsevier (2018). doi: 10.1016/j.chb.2017.12.005

24. Mohaghegh M, McCauley M. Computational thinking: the skill set of the 21st century. Int J Comput Sci Inf Technol. (2016) 7:1524–30.

25. Curzon P, Black J, Meagher LR, McOwan PW. cs4fn.org: enthusing students about computer science. In: C Hermann, T Lauer, T Ottmann, M Welte editors. Proceedings of Informatics Education Europe IV. (2009). p. 73–80. Available online at: http://www.eecs.qmul.ac.uk/~pc/publications/2009/PCJBLRMPWCIEEIV2009preprint.pdf

26. Wing JM. Computational Thinking Benefits Society. 40th Anniversary Blog of Social Issues in Computing. New York, NY: Academic Press (2014).

27. Basso D, Fronza I, Colombi A, Pah C. Improving assessment of computational thinking through a comprehensive framework. In: Proceedings of the 18th Koli Calling International Conference on Computing Education Research. Koli (2018). doi: 10.1145/3279720.3279735

28. Eguchi A. Bringing robotics in classrooms. In: M Khine editor. Robotics in STEM Education. Cham: Springer (2017). p. 3–31. doi: 10.1007/978-3-319-57786-9_1

29. Belanger C, Christenson H, Lopac K. Confidence and Common Challenges: The Effects of Teaching Computational Thinking to Students Ages 10-16. Ph.D. Thesis. St Paul, MN: St. Catherine University Repository (2018).

30. Grover S, Pea R. Computational thinking in K–12: a review of the state of the field. Educ Res. (2013) 42:38–43. doi: 10.3102/0013189X12463051

31. Lyon JA, Magana JA. Computational thinking in higher education: a review of the literature. Comput Appl Eng Educ. (2020) 28:1174–89. doi: 10.1002/cae.22295

32. Fagerlund J, Häkkinen P, Vesisenaho M, Viiri J. Computational thinking in programming with scratch in primary schools: a systematic review. Comput Appl Eng Educ. (2021) 29:12–28. doi: 10.1002/cae.22255

33. Weintrop D, Beheshti E, Horn M, Orton K, Jona K, Trouille L, et al. Defining computational thinking for mathematics and science classrooms. J Sci Educ Technol. (2015) 25:1–21. doi: 10.1007/s10956-015-9581-5

34. Hickmott D, Prieto-Rodriguez E, Holmes K. A scoping review of studies on computational thinking in K–12 mathematics classrooms. Digit Exp Math Educ. (2018) 4:48–69. doi: 10.1007/s40751-017-0038-8

35. Bell J, Bell T. Integrating computational thinking with a music education context. Inform Educ. (2018) 17:151–66.

36. Ioannou A, Makridou E. Exploring the potentials of educational robotics in the development of computational thinking: a summary of current research and practical proposal for future work. Educ Inf Technol. (2018) 23:2531–44. doi: 10.1007/s10639-018-9729-z

37. Zhang L, Nouri J. A systematic review of learning computational thinking through scratch in K-9. Comput Educ. (2019) 141:103607. doi: 10.1016/j.compedu.2019.103607

38. Papadakis S. The impact of coding apps on young children computational thinking and coding skills. A literature review. Front Educ. (2021) 6:657895. doi: 10.3389/feduc.2021.657895

39. Papadakis S. Can preschoolers learn computational thinking and coding skills with ScratchJr? A systematic literature review. Int J Educ Reform. (2022). doi: 10.1177/10567879221076077

40. Qiu RG. Computational thinking of service systems: dynamics and adaptiveness modeling. Serv Sci. (2009) 1:42–55. doi: 10.1287/serv.1.1.42

41. Haseski HI, Ilic U, Tugtekin U. Defining a new 21st-century skill-computational thinking: concepts and trends. Int Educ Stud. (2018) 11:29. doi: 10.5539/ies.v11n4p29

42. Jong MS, Geng J, Chai CS, Lin P. Development and predictive validity of the computational thinking disposition questionnaire. Sustainability. (2020) 12:4459. doi: 10.3390/su12114459

43. Tang K, Chou T, Tsai C. A content analysis of computational thinking research: an international publication trends and research typology. Asia Pac Educ Res. (2019) 29:9–19. doi: 10.1007/s40299-019-00442-8

44. Wing JM. Computational thinking and thinking about computing. Philos Trans R Soc A Math Phys Eng Sci. (2008) 366:3717–25. doi: 10.1098/rsta.2008.0118

45. Abdullah N, Zakaria E, Halim L. The effect of a thinking strategy approach through visual representation on achievement and conceptual understanding in solving mathematical word problems. Asian Soc Sci. (2012) 8:30. doi: 10.5539/ass.v8n16p30

46. Denning PJ. The profession of IT: beyond computational thinking. Commun ACM. (2009) 52:28–30. doi: 10.1145/1516046.1516054

47. NRC. Report of A Workshop on The Pedagogical Aspects of Computational Thinking. Washington, DC: National Academies Press (2011).

48. Barr D, Harrison J, Conery L. Computational thinking: a digital age skill for everyone. Learn Lead Technol. (2011) 38:20–3.

49. Haseski HI, Ilic U. An investigation of the data collection instruments developed to measure computational thinking. Inform Educ. (2019) 18:297. doi: 10.15388/infedu.2019.14

50. Poulakis E, Politis P. Computational thinking assessment: literature review. In: T Tsiatsos, S Demetriadis, A Mikropoulos, V Dagdilelis editors. Research on E-Learning and ICT in Education. Berlin: Springer (2021). p. 111–28. doi: 10.1007/978-3-030-64363-8_7

51. Roman-Gonzalez M, Moreno-Leon J, Robles G. Combining assessment tools for a comprehensive evaluation of computational thinking interventions. In: SC Kong, H Abelson editors. Computational Thinking Education. Singapore: Springer (2019). p. 79–98. doi: 10.1007/978-981-13-6528-7_6

52. Tang X, Yin Y, Lin Q, Hadad R, Zhai X. Assessing computational thinking: a systematic review of empirical studies. Comput Educ. (2020) 148:103798. doi: 10.1016/j.compedu.2019.103798

53. Varghese VV, Renumol VG. Assessment methods and interventions to develop computational thinking—A literature review. In: Proceedings of the 2021 International Conference on Innovative Trends in Information Technology (ICITIIT). Piscataway, NJ: IEEE (2021). p. 1–7.

54. Alan Ü. Likert Tipi Ölçeklerin Çocuklarla Kullanımında Yanıt Kategori Sayısının Psikometrik Özelliklere Etkisi [Effect of Number of Response Options on Psychometric Properties of Likert-Type Scales Used With Children]. Ph.D Thesis. Ankara: Hacettepe University (2019).

55. Beaton DE, Bombardier C, Guillemin F, Ferraz MB. Guidelines for the process of cross-cultural adaptation of self-report measures. Spine. (2000) 25:3186–91. doi: 10.1097/00007632-200012150-00014

56. Boynton PM, Greenhalgh T. Selecting, designing, and developing your questionnaire. BMJ. (2004) 328:1312–5. doi: 10.1136/bmj.328.7451.1312

57. Reichenheim ME, Moraes CL. Operacionalização de adaptação transcultural de instrumentos de aferição usados em epidemiologia [Operationalizing the cross-cultural adaptation of epidemiological measurement instruments]. Rev Saude Publ. (2007) 41:665–73. doi: 10.1590/S0034-89102006005000035

58. Gjersing L, Caplehorn JR, Clausen T. Cross-cultural adaptation of research instruments: language, setting, time, and statistical considerations. BMC Med Res Methodol. (2010) 10:13. doi: 10.1186/1471-2288-10-13

59. Chongo S, Osman K, Nayan NA. Level of computational thinking skills among secondary science students: variation across gender and mathematics achievement. Sci Educ Int. (2020) 31:159–63. doi: 10.33828/sei.v31.i2.4

60. Atmatzidou S, Demetriadis S. Advancing students’ computational thinking skills through educational robotics: a study on age and gender relevant differences. Robot Autonom Syst. (2016) 75:661–70. doi: 10.1016/j.robot.2015.10.008

61. Espino EE, González CS. Influence of gender on computational thinking. In: Proceedings of the XVI International Conference on Human-Computer Interaction. Vilanova i la Geltru (2015). p. 119–28. doi: 10.1145/2829875.2829904

62. Oluk A, Korkmaz Ö. Comparing students’ scratch skills with their computational thinking skills in different variables. Int J Modern Educ Comput Sci. (2016) 8:1–7. doi: 10.5815/ijmecs.2016.11.01

63. Izu C, Mirolo C, Settle A, Mannila L, Stupurienė G. Exploring Bebras tasks content and performance: a multinational study. Inform Educ. (2017) 16:39–59. doi: 10.15388/infedu.2017.03

64. de Araujo ALSO, Andrade WL, Guerrero DDS. A Systematic Mapping Study on Assessing Computational Thinking Abilities. Piscataway, NJ: IEEE (2016). p. 1–9. doi: 10.1109/FIE.2016.7757678

65. Kong SC. Components and methods of evaluating computational thinking for fostering creative problem-solvers in senior primary school education. In: Kong SC, Abelson H editors. Computational Thinking Education. Singapore: Springer (2019). p. 119–41. doi: 10.1007/978-981-13-6528-7_8

66. Martins-Pacheco LH, von Wangenheim CAG, Alves N. Assessment of computational thinking in K-12 context: educational practices, limits and possibilities-a systematic mapping study. Proceedings of the 11th international conference on computer supported education (CSEDU 2019). Heraklion: (2019). p. 292–303. doi: 10.5220/0007738102920303

67. Brennan K, Resnick M. New frameworks for studying and assessing the development of computational thinking. In: Paper Presented at the Annual Meeting of the American Educational Research Association (AERA). Vancouver, BC (2012).

68. CSTA. CSTA K–12 Computer Science Standards (revised 2017). (2017). Available online at: http://www.csteachers.org/standards (accessed December 13, 2021).

69. Lee I, Martin F, Denner J, Coulter B, Allan W, Erickson J, et al. Computational thinking for youth in practice. ACM Inroads. (2011) 2:32–7. doi: 10.1145/1929887.1929902

70. Brennan K, Resnick M. New frameworks for studying and assessing the development of computational thinking. In: Proceedings of the 2012 Annual Meeting of the American Educational Research Association. Vancouver, BC (2012). p. 25.

71. Halpern DF. Teaching critical thinking for transfer across domains: disposition, skills, structure training, and metacognitive monitoring. Am Psychol. (1998) 53:449. doi: 10.1037/0003-066X.53.4.449

73. Facione PA. The disposition toward critical thinking: its character, measurement, and relationship to critical thinking skill. Inform Logic. (2000) 20:61–84. doi: 10.22329/il.v20i1.2254

74. Facione PA, Facione NC, Sanchez CA. Test Manual for the CCTDI. 2nd ed. Berkeley, CA: The California Academic Press (1995).

75. Sands P, Yadav A, Good J. Computational thinking in K-12: in-service teacher perceptions of computational thinking. In: M Khine editor. Computational Thinking in the STEM Disciplines. Cham: Springer (2018). doi: 10.1007/978-3-319-93566-9_8

79. Hilgard ER. The trilogy of mind: cognition, affection, and conation. J Hist Behav Sci. (1980) 16:107–17. doi: 10.1002/1520-6696(198004)16:2<107::AID-JHBS2300160202>3.0.CO;2-Y

80. Huitt W, Cain S. An Overview of the Conative Domain. In Educational Psychology Interactive. Valdosta, GA: Valdosta State University (2005). p. 1–20.

81. Tallon A. Head and Heart: Affection, Cognition, Volition as Triune Consciousness. The Bronx, NY: Fordham University (1997).

82. Baumeister RF, Bratslavsky E, Muraven M, Tice DM. Ego depletion: is the active self a limited resource? J Pers Soc Psychol. (1998) 74:1252–65. doi: 10.1037/0022-3514.74.5.1252

83. Emmons RA. Personal strivings: an approach to personality and subjective well-being. J Pers Soc Psychol. (1986) 51:1058–68. doi: 10.1037/0022-3514.51.5.1058

84. Gay LR, Mills GE. Educational Research: Competencies for Analysis and Applications. 12th ed. Hoboken, NJ: Merrill Prentice-Hall (2018).

85. Hair JF, Celsi MW, Harrison DE. Essentials of Marketing Research. 5th ed. New York, NY: McGraw-Hill Education (2020).

86. Creswell JW, Creswell JD. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 5th ed. Los Angeles, CA: SAGE (2018).

87. Tyrer S, Heyman B. Sampling in epidemiological research: issues, hazards and pitfalls. BJPsych Bull. (2016) 40:57–60. doi: 10.1192/pb.bp.114.050203

88. Sovey S, Osman K, Matore MEE. Exploratory and confirmatory factor analysis for disposition levels of computational thinking instrument among secondary school students. Eur J Educ Res. (2022) 11:639–52. doi: 10.12973/eu-jer.11.2.639

90. Yount R. Research Design & Statistical Analysis in Christian Ministry. 4th ed. Fort Worth, TX: Department of Foundations of Education (2006).

91. Dolnicar S, Grun B, Leisch F, Rossiter J. Three good reasons NOT to use five- and seven-point likert items. In: Proceedings of the 21st CAUTHE National Conference. Adelaide, SA: University of Wollongong (2011). p. 8–11.

92. Sumintono B, Widhiarso W. Aplikasi Model Rasch untuk Penelitian Ilmu-Ilmu Sosial (Edisi revisi) [Application of Rasch Modelling in Social Science Research, Revised Edition]. Cimahi: Trimkom Publishing House (2014).

93. Wang R, Hempton B, Dugan JP, Komives SR. Cultural differences: why do Asians avoid extreme responses? Surv Pract. (2008) 1:1–7. doi: 10.29115/SP-2008-0011

94. Rasch G. Probabilistic Models for Some Intelligence and Attainment Tests. San Diego, CA: MESA Press (1960).

95. Boone WJ. Rasch analysis for instrument development: why, when, and how? CBE Life Sci Educ. (2016) 15:rm4. doi: 10.1187/cbe.16-04-0148

96. Linacre JM. A User’s Guide to WINSTEPS: Rasch Model Computer Programs. San Diego, CA: MESA Press (2012).

97. Bond TG, Fox CM. Applying the Rasch Model: Fundamental Measurement in the Human Sciences. 3rd ed. New York, NY: Routledge (2015).

98. Bond TG, Fox CM. Applying the Rasch Model: Fundamental Measurement in the Human Sciences. New Jersey, NJ: Lawrence Erlbaum Associates Publishers (2001).

99. Bond TG, Fox CM. Applying the Rasch Model: Fundamental Measurement in the Human Sciences. 2nd ed. New Jersey, NJ: Routledge (2007).

101. Boone WJ, Staver JR, Yale MS. Rasch Analysis in the Human Sciences. Dordrecht: Springer (2014). doi: 10.1007/978-94-007-6857-4

102. Fox CM, Jones JA. Uses of Rasch modeling in counselling psychology research. J Couns Psychol. (1998) 45:30–45. doi: 10.1037/0022-0167.45.1.30

103. Sheppard R, Han K, Colarelli S, Dai G, King D. Differential item functioning by sex and race in the Hogan Personality Inventory. Assessment. (2006) 13:442–53. doi: 10.1177/1073191106289031

105. Gunbatar MS, Karalar H. Gender differences in middle school students' attitudes and self-efficacy perceptions towards mBlock programming. Eur J Educ Res. (2018) 7:925–33. doi: 10.12973/eu-jer.7.4.925

106. Kaufmann C, Elbel G. Frequency dependence and gender effects in visual cortical regions involved in temporal frequency dependent pattern processing. Hum Brain Mapp. (2001) 14:28–38. doi: 10.1002/hbm.1039

107. Gabriel P, Schmitz S. Gender differences in occupational distributions among workers. Monthly labor review (June). Washington, DC: U.S. Bureau of Labor Statistics Division of Information and Marketing Services (2007).

108. Mouza C, Marzocchi A, Pan Y, Pollock L. Development, implementation, and outcomes of an equitable computer science after-school program: findings from middle-school students. J Res Technol Educ. (2016) 48:84–104. doi: 10.1080/15391523.2016.1146561

109. Hur JW, Andrzejewski CE, Marghitu D. Girls and computer science: Experiences, perceptions, and career aspirations. Comput Sci Educ. (2017) 27:100–20. doi: 10.1080/08993408.2017.1376385

110. Paderewski P, García M, Gil R, González C, Ortigosa EM, Padilla-Zea N. Acercando las mujeres a la ingeniería: iniciativas y estrategias que favorecen su inclusión [Bringing women closer to engineering: initiatives and strategies that favor their inclusion]. In: XVI Congreso Internacional de Interacción Persona-Ordenador [XVI International Conference on Human-Computer Interaction]. Workshop Engendering Technology (II). Vilanova i la Geltrú: Asociación Interacción Persona Ordenador (AIPO) (2015). p. 319–26.

111. Cheryan S, Ziegler SA, Montoya AK, Jiang L. Why are some STEM fields more gender-balanced than others? Psychol Bull. (2017) 143:1–35. doi: 10.1037/bul0000052

112. Askar P, Davenport D. An investigation of factors related to self-efficacy for Java programming among engineering students. Turk Online J Educ Technol. (2009) 8:26–32.

113. Ozyurt O, Ozyurt H. A study for determining computer programming students’ attitudes towards programming and their programming self-efficacy. J Theor Pract Educ. (2015) 11:51–67. doi: 10.17718/tojde.58767

114. Beyer S. Why are women underrepresented in computer science? Gender differences in stereotypes, self-efficacy, values, and interests and predictors of future CS course-taking and grades. Comput Sci Educ. (2014) 24:153–92. doi: 10.1080/08993408.2014.963363

115. Wilson BC. A study of factors promoting success in computer science, including gender differences. Comput Sci Educ. (2002) 12:141–64. doi: 10.1076/csed.12.1.141.8211

116. Naresh B, Reddy BS, Pricilda U. A study on the relationship between demographic factors and e-learning readiness among students in higher education. Sona Glob Manag Rev. (2016) 10:1–11.

117. Sousa DA, Tomlinson CA. Differentiation and the Brain: How Neuroscience Supports the Learner-Friendly Classroom. Bloomington, IN: Solution Tree Press (2011).

119. Bonomo V. Gender matters in elementary education: research-based strategies to meet the distinctive learning needs of boys and girls. Educ Horiz. (2001) 88:257–64.

120. Gurian M. The Boys and Girls Learn Differently Action Guide for Teachers. San Francisco, CA: Jossey-Bass (2003).

121. Geary DC, Saults SJ, Liu F, Hoard MK. Sex differences in spatial cognition, computational fluency, and arithmetical reasoning. J Exp Child Psychol. (2000) 77:337–53. doi: 10.1006/jecp.2000.2594

122. Davita PWC, Pujiastuti H. Analisis kemampuan pemecahan masalah matematika ditinjau dari gender [Analysis of mathematical problem-solving ability in terms of gender]. Kreano J Mat Kreatif Inov. (2020) 11:110–7. doi: 10.15294/kreano.v11i1.23601

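123. Kalily S, Juhaevah F. Analisis Kemampuan Berpikir Kritis Siswa Kelas X SMA dalam Menyelesaikan Masalah Identitas Trigonometri Ditinjau Dari Gender [Analysis of grade X senior high school students' critical thinking ability in solving trigonometric identity problems in terms of gender]. J Mat Dan Pembelajaran. (2018) 6:111–26.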

124. Nisbett RE, Peng K, Choi I, Norenzayan A. Culture and systems of thought: holistic versus analytic cognition. Psychol Rev. (2001) 108:291–310. doi: 10.1037/0033-295X.108.2.291

Keywords: computational thinking, disposition, Rasch model, gender differential item functioning, secondary school, student

Citation: Sovey S, Osman K and Matore MEEM (2022) Gender differential item functioning analysis in measuring computational thinking disposition among secondary school students. Front. Psychiatry 13:1022304. doi: 10.3389/fpsyt.2022.1022304

Received: 18 August 2022; Accepted: 27 October 2022;

Published: 24 November 2022.

Edited by:

Rubin Wang, East China University of Science and Technology, China

Reviewed by:

Jun Ma, Lanzhou University of Technology, China

Jianwei Shen, North China University of Water Resources and Electric Power, China

Copyright © 2022 Sovey, Osman and Matore. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohd Effendi Ewan Mohd Matore, effendi@ukm.edu.my
