- Department of Psychological Science, Northern Michigan University, Marquette, MI, United States

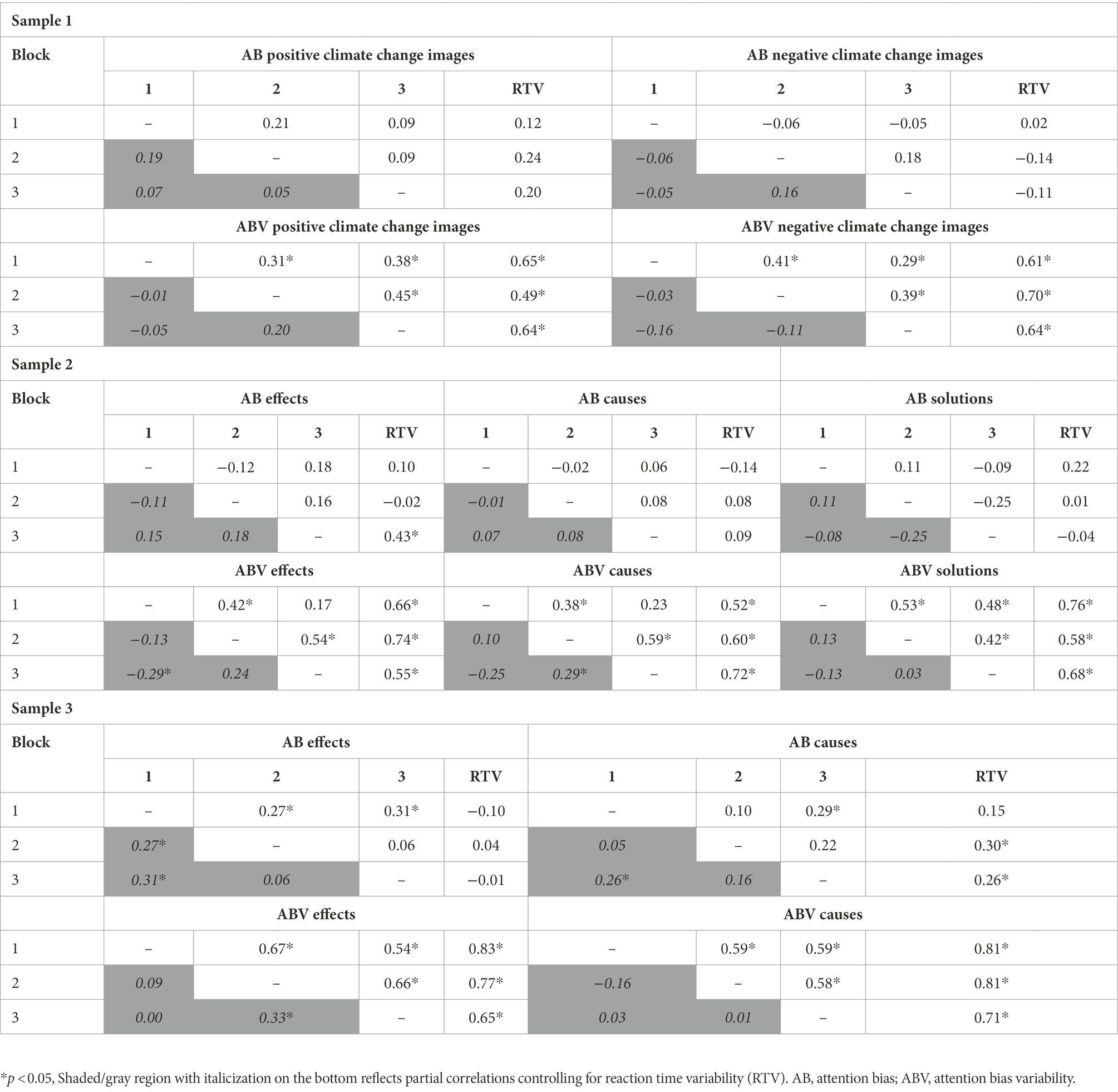

Climate change is one of the most pressing issues of the 21st century, which is perhaps why information about climate change has been found to capture observers’ attention. One of the most common ways of assessing individual differences in attentional processing of climate change information is through reaction time difference scores. However, reaction time-based difference scores have come under scrutiny for their low reliability. Given that a primary goal of the field is to link individual differences in attentional processing to participant variables (e.g., environmental attitudes), we assessed the reliability of reaction time-based measures of attentional processing of climate change information using existing data from three samples, each tested with a variant of the dot-probe task. Across all three samples, difference score-based measures of attentional bias were generally uncorrelated across task blocks (r = −0.25 to 0.31). We also assessed the reliability of newer attention bias variability measures, which are thought to capture dynamic shifts in attention toward and away from salient information. Although these measures were initially found to be correlated across task blocks (r = 0.17–0.67), they also tended to be highly correlated with general reaction time variability (r = 0.49–0.83). When controlling for general reaction time variability, the correlations across task blocks for attention bias variability were much weaker and generally nonsignificant (r = −0.25 to 0.33). Furthermore, these measures were unrelated to pro-environmental disposition, indicating poor predictive validity. In short, reaction time-based measures of attentional processing (including difference score and variability-based approaches) have unacceptably low reliability and are therefore unsuitable for capturing individual differences in attentional bias to climate change information.

1. Introduction

Anthropogenic climate change is one of the most serious problems facing the global community (Pecl et al., 2017; Tollefson, 2019, 2020). Information about climate change should therefore demand individuals’ attention (Luo and Zhao, 2021). Yet only recently has research explored the extent to which climate change-related information captures attention. Initial research using climate change-relevant images of environmental damage found that these images captured the attention of individuals with pro-environmental attitudes (Beattie and McGuire, 2012). Follow-up studies have generally supported the finding that climate change-relevant (or other environmentally harmful) objects capture attention in individuals with pro-environmental dispositions (Sollberger et al., 2017; Carlson et al., 2019b; Meis-Harris et al., 2021). In addition, words related to climate change (Whitman et al., 2018) and graphical information about climate change (Luo and Zhao, 2019) capture attention in politically liberal individuals who are more concerned about climate change. Thus, there is emerging evidence that climate change-relevant information captures observers’ attention, which can be referred to as an attentional bias for climate change or environmentally relevant information. Such findings may offer insight into how best to disseminate attention-grabbing information about climate change in ways that promote large-scale societal change.

Research assessing the attentional capture of environmentally relevant stimuli has primarily used reaction time (RT)-based measures (Carlson et al., 2019b, 2020; Meis-Harris et al., 2021) and eye tracking-based measures (Beattie and McGuire, 2012; Sollberger et al., 2017; Luo and Zhao, 2019). RT measures of attentional bias are typically calculated as a difference score (e.g., the difference in RTs between conditions where attention is facilitated vs. not facilitated). Broadly speaking, RT-based difference scores have come under scrutiny for low internal and/or test–retest reliability (Hedge et al., 2018; Goodhew and Edwards, 2019). Individual differences (i.e., correlational) research requires between-subject variability, and the measure must capture the construct of interest consistently (i.e., reliably). Given that one of the broad goals in the newly developing field of environmental attention bias research is to link variability in attentional processing to individual differences such as pro-environmental disposition (Beattie and McGuire, 2012; Sollberger et al., 2017; Carlson et al., 2019b; Meis-Harris et al., 2021) and political orientation (Whitman et al., 2018; Luo and Zhao, 2019), it is important to assess the reliability of environmental attention bias measures. However, reliability estimates for attention bias measures are rarely reported in the literature.

Given the low reliability of RT difference score-based estimates of attentional bias, researchers in experimental psychopathology (where attentional bias is often linked to affective disorders and traits) sought to improve upon traditional (difference score-based) attention bias measures. As a result, innovative attention bias variability (ABV) measures were developed, which are thought to capture dynamic shifts of attention, with alternating periods of attentional focus toward and away from affective information (Iacoviello et al., 2014; Zvielli et al., 2015). Early research found ABV measures to be more reliable than the traditional approach (Naim et al., 2015; Price et al., 2015; Davis et al., 2016; Rodebaugh et al., 2016; Zvielli et al., 2016; Molloy and Anderson, 2020). However, subsequent work has shown that general RT variability and mean RT speed influence ABV measures, and that controlling for these factors substantially reduces their reliability (Kruijt et al., 2016; Carlson and Fang, 2020; Carlson et al., 2022a). ABV measures have not yet been used in environmental attention bias research. If found to be reliable in this context, however, they could be useful measures of attentional bias to environmental information.

Given that RT measures are commonly used in environmental attention bias research, and that a goal of this research is often to link variability in attentional biases to relevant individual differences, we sought to assess the reliability of both traditional attention bias and innovative ABV measures. To this end, we used three existing datasets from previously published research that employed the dot-probe task to assess attentional bias to emotionally positive and negative climate change-relevant images (Carlson et al., 2020). To assess the reliability of these measures, we correlated attention bias measures for emotionally positive and negative climate change-relevant images across blocks of the dot-probe tasks. Based on previous research using non-environmental stimuli (e.g., threat- or food-related images; Carlson and Fang, 2020; Vervoort et al., 2021), we hypothesized that RT measures of attentional bias to climate change information would not be reliable and would therefore be unsuitable for individual differences research.

2. Materials and methods

2.1. Participants

This report included 177 participants from three separate samples. Sample one contained 58 individuals (female = 47) between the ages of 18 and 34 (M = 21.00, SD = 3.71). Sample two included 59 individuals (female = 45) aged 18–38 (M = 20.41, SD = 3.69). Sample three comprised 60 individuals (female = 52) aged 18–36 (M = 20.68, SD = 3.83). With N ≥ 58 per sample, this study was powered to detect correlations of r ≥ 0.35 within each sample (α = 0.05, power = 0.80) and was therefore able to detect reliability estimates that would still be considered unacceptably low. Across all three samples, participants provided informed written consent and received course credit (in undergraduate psychology courses) for their participation. The study was approved by the Northern Michigan University (NMU) Institutional Review Board (IRB; HS16-768).
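The sensitivity statement above can be approximated with the Fisher z transformation. The sketch below is an illustration only: it assumes a two-tailed test of ρ = 0 and the normal approximation, whereas the power software and test sidedness behind the reported r ≥ 0.35 are not stated, so its output may differ slightly from that value. The function names are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def correlation_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-tailed test of rho = 0 (Fisher z approximation)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative hypothesis
    shift = atanh(r) * sqrt(n - 3)
    # Probability of landing in either rejection region
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


def min_detectable_r(n: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest r detectable with the requested power (ignores the negligible opposite tail)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return tanh((z_crit + z_power) / sqrt(n - 3))


# Sample sizes reported above
for n in (58, 59, 60):
    print(n, round(min_detectable_r(n), 3), round(correlation_power(0.35, n), 3))
```

Under these assumptions the minimum detectable r comes out close to the reported 0.35; a one-tailed test would lower it further.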

2.2. Dot-probe task

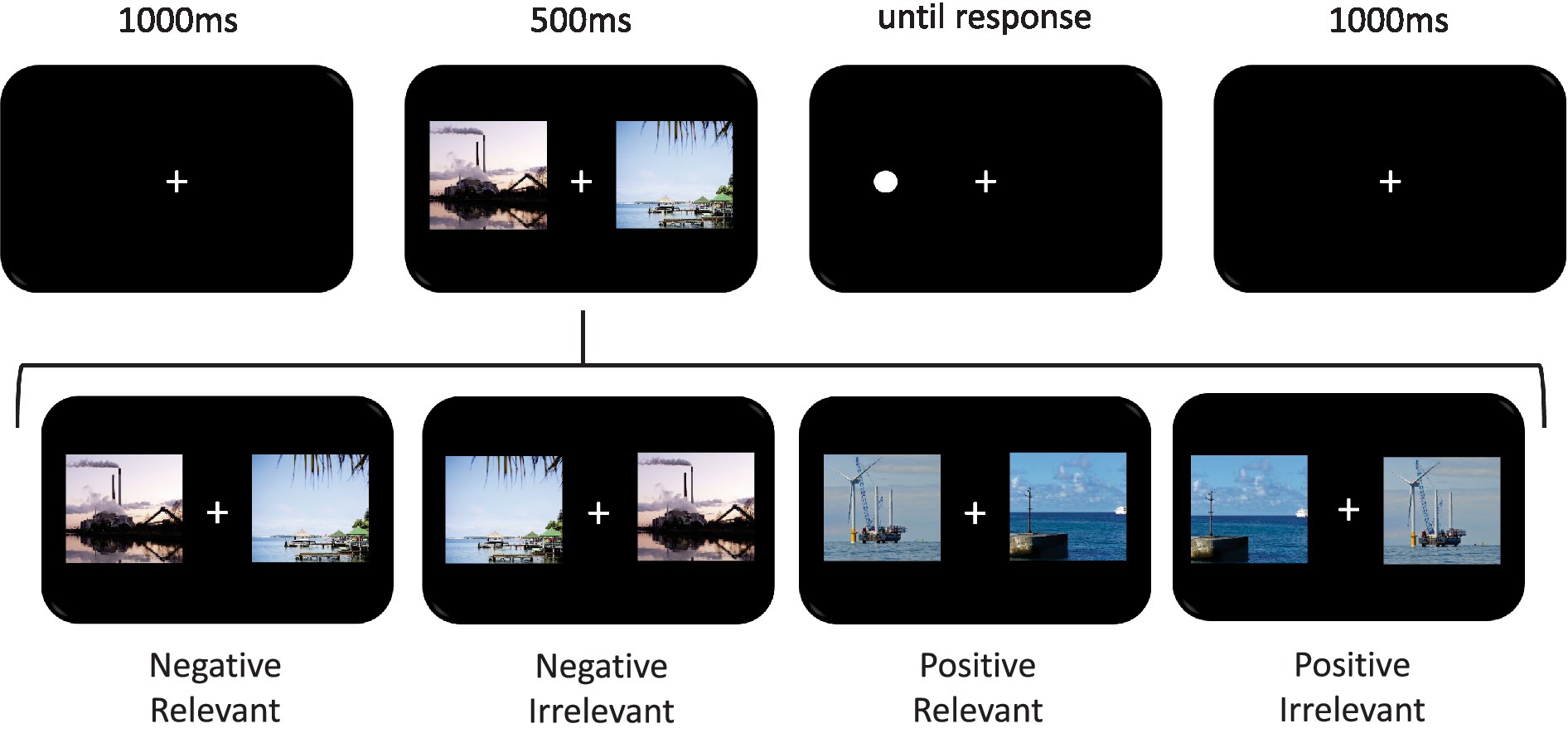

Each sample used a modified dot-probe task (MacLeod et al., 1986; MacLeod and Mathews, 1988) with climate change-relevant images. The details of the specific images and dot-probe tasks used in this report have been previously published (Carlson et al., 2020). Briefly, images used in each experiment were selected from the affective images of climate change database (https://affectiveclimateimages.weebly.com; Lehman et al., 2019).1 The database contains a total of 320 digital images rated on emotional valence (1 = unpleasant to 9 = pleasant), emotional arousal (1 = calm to 9 = exciting), and relevance to climate change (1 = least relevant to 9 = most relevant). All tasks were programmed in E-Prime (Psychology Software Tools, Pittsburgh, PA) and displayed on a 16-inch, 60 Hz LCD monitor. All variants of the task used the same general sequence of events, which is depicted and summarized in Figure 1. It should be noted that for relatively simple and universal emotional stimuli (such as facial expressions), shorter (<300 ms) interstimulus intervals result in more robust bias effects (Torrence et al., 2017), greater reliability (Chapman et al., 2019), and a stronger association with anxiety (Bantin et al., 2016). However, informal pilot testing in our lab led us to conclude that the complex climate change-related scenes used here would need longer display times for their content to be processed; therefore, a stimulus duration of 500 ms was used. As previously reported, this stimulus duration elicits attention bias effects for climate change images in the dot-probe task (Carlson et al., 2019b, 2020).

Figure 1. Trial structure of the dot-probe task of attentional bias. First, each trial started with a 1,000 ms white fixation cue (+) in the center of a black background. Second, two images were simultaneously presented to the left and right of the fixation cue for 500 ms. Each trial contained one climate-relevant and one climate-irrelevant image. Each image subtended 10° × 12° of visual angle, and the two images were separated by 14.5° of visual angle. Third, a target dot appeared immediately after the images were removed and remained on the screen until a response was recorded. Fourth and finally, a 1,000 ms intertrial interval separated trials. Participants were seated 59 cm from the screen and instructed to focus on the central fixation cue throughout each trial while using their peripheral vision to locate the target dot as quickly as possible. Participants indicated left- and right-sided targets on an E-Prime serial response box by pressing the “1” button with their right index finger and the “2” button with their right middle finger, respectively. Faster reaction times to targets at locations previously occupied by a climate change-relevant image indicate attentional bias for the climate change-relevant image over the irrelevant image.
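The stimulus dimensions in Figure 1 are given in degrees of visual angle. As a worked illustration, the standard conversion s = 2d·tan(θ/2) gives the approximate physical size on screen at the stated 59 cm viewing distance. The sketch assumes that 10° refers to image width and 12° to height, which the caption does not specify; the function names are hypothetical.

```python
from math import atan, degrees, radians, tan


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) subtending angle_deg at distance_cm: s = 2 d tan(theta / 2)."""
    return 2 * distance_cm * tan(radians(angle_deg) / 2)


def visual_angle_from_size(size_cm: float, distance_cm: float) -> float:
    """Inverse conversion: theta = 2 atan(s / (2 d)), returned in degrees."""
    return degrees(2 * atan(size_cm / (2 * distance_cm)))


VIEWING_DISTANCE_CM = 59  # viewing distance from the Figure 1 caption
width_cm = size_from_visual_angle(10, VIEWING_DISTANCE_CM)   # assumed: 10 deg is width
height_cm = size_from_visual_angle(12, VIEWING_DISTANCE_CM)  # assumed: 12 deg is height
print(f"{width_cm:.1f} cm x {height_cm:.1f} cm")  # prints "10.3 cm x 12.4 cm"
```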

Climate-relevant and climate-irrelevant images were randomly presented to the left or right visual field for each participant. There was an equal number of trials with the target dot on the same side of the screen as the climate change-relevant image and on the same side as the climate change-irrelevant image (see below for details of each sample). Faster RTs to targets at the climate-relevant location (traditionally referred to as congruent trials in the dot-probe literature) compared with the climate-irrelevant location (i.e., incongruent trials) are considered indicative of attentional bias (MacLeod et al., 1986; MacLeod and Mathews, 1988). At the conclusion of each block, participants received feedback about their overall accuracy and reaction time to encourage accurate, rapid responses. The specific design of each sample is summarized below.

Sample one used a 2 × 2 (emotional valence × relevance of the target location) factorial design and consisted of 3 blocks of 120 trials, with 30 trials per block in each cell: positive relevant, positive irrelevant, negative relevant, and negative irrelevant. This yielded 360 total trials, with 90 trials in each cell. Positive images included windmills and solar panels, whereas negative images included industrial air pollution, melting ice, and natural disasters.

Sample two used a 2 × 3 factorial design with location relevance (relevant vs. irrelevant) × image type (cause vs. effect vs. solution) as the independent variables. The dot-probe task used in Sample 2 contained 3 blocks of 180 trials, with 30 trials per block in each cell, yielding 540 total trials, with 90 trials in each cell. Causes included images of industrial air pollution and deforestation. Effects included images of melting ice and natural disasters. Solutions included images of windmills and solar panels.

Sample three used a 2 × 2 factorial design with location relevance (relevant vs. irrelevant) and image type (cause vs. effect) as the independent variables. The dot-probe task in Sample 3 consisted of 3 blocks of 144 trials, with 36 trials per block in each cell, yielding 432 total trials, with 108 trials in each cell. Cause and effect images included the same types of stimuli used in Sample 2.
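As an illustration of the factorial designs just described, the sketch below builds one shuffled block with an equal number of trials per cell (image type × target location). It assumes, hypothetically, that the side of the climate-relevant image is drawn at random on each trial; the original E-Prime scripts may have balanced sides differently. The function name and trial fields are illustrative, and passing different image types reproduces the cell counts of Samples 2 and 3.

```python
import random
from itertools import product


def build_block(image_types=("positive", "negative"), trials_per_cell=30, seed=None):
    """Return one shuffled dot-probe block: image type x target location (relevant vs. irrelevant)."""
    rng = random.Random(seed)
    trials = []
    for image_type, congruent in product(image_types, (True, False)):
        for _ in range(trials_per_cell):
            # Hypothetical: side of the climate-relevant image drawn at random per trial
            relevant_side = rng.choice(("left", "right"))
            other_side = "right" if relevant_side == "left" else "left"
            trials.append({
                "image_type": image_type,
                "congruent": congruent,  # True: target replaces the climate-relevant image
                "relevant_side": relevant_side,
                "target_side": relevant_side if congruent else other_side,
            })
    rng.shuffle(trials)  # randomize trial order within the block
    return trials


sample1 = [build_block(seed=b) for b in range(3)]                                      # 3 x 120
sample2 = [build_block(("cause", "effect", "solution"), 30, seed=b) for b in range(3)]  # 3 x 180
sample3 = [build_block(("cause", "effect"), 36, seed=b) for b in range(3)]              # 3 x 144
print(sum(map(len, sample1)), sum(map(len, sample2)), sum(map(len, sample3)))  # prints "360 540 432"
```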

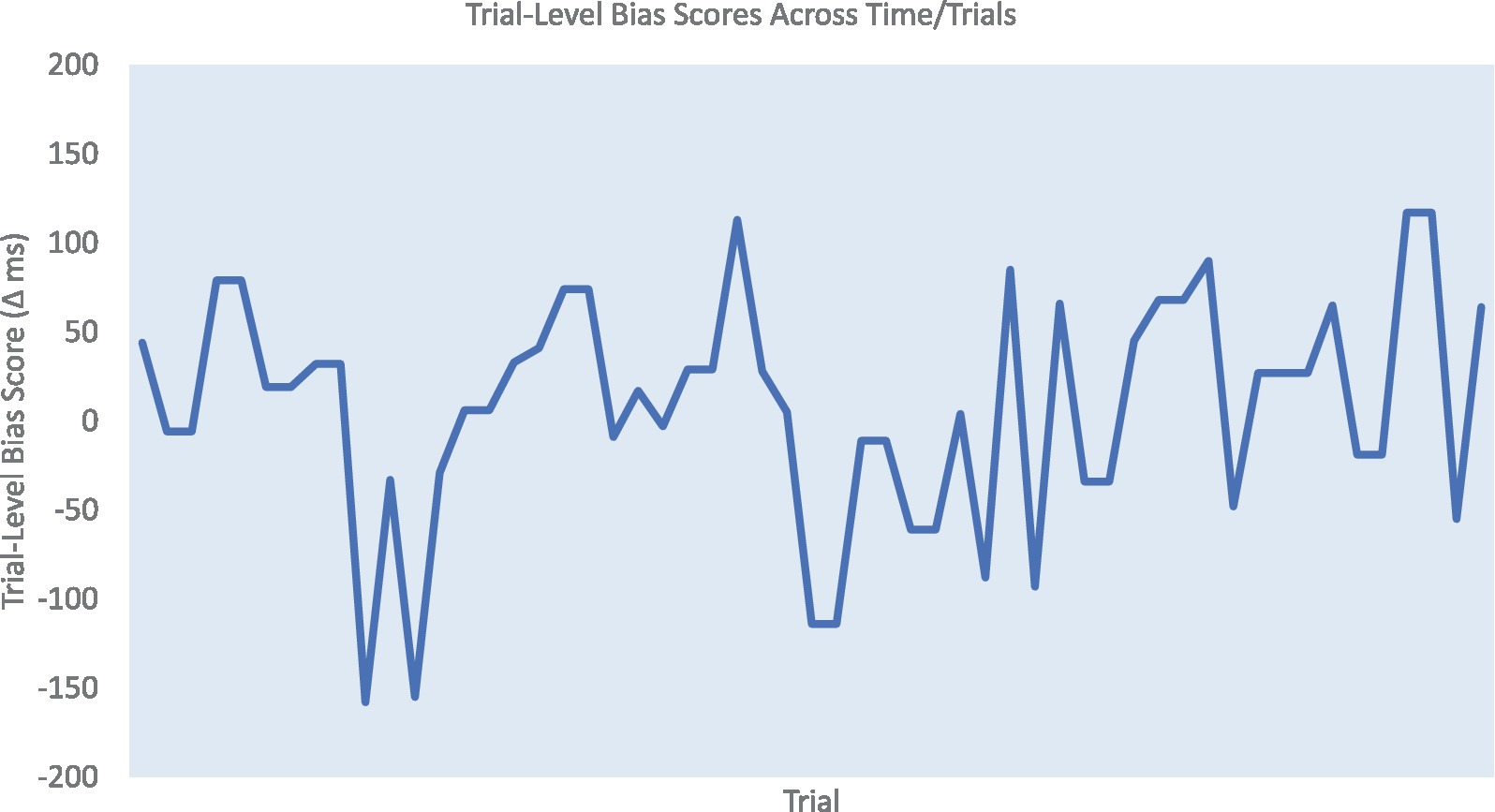

2.3. Data reduction and analysis procedures

Consistent with previous research (Torrence et al., 2017; Carlson et al., 2019a), we included only correct responses occurring between 150 and 750 ms after target onset, to eliminate premature responses and lapses in attention (98.24% of the data were retained for Sample 1, 95.38% for Sample 2, and 95.12% for Sample 3). Traditional attentional bias was defined as the difference between the mean RTs of the incongruent and congruent conditions (i.e., mean incongruent RT − mean congruent RT). ABV was calculated using the trial-level bias score (TLBS) method (Zvielli et al., 2015), which has been shown to be more reliable than other ABV approaches (Molloy and Anderson, 2020). To compute TLBSs, each congruent trial was first paired with the closest incongruent trial, up to a maximum distance of 5 trials backward or forward. Similarly, each incongruent trial was paired with its closest congruent trial. TLBSs were then obtained by subtracting the RT of the congruent trial from that of the incongruent trial in each pair (see Figure 2). ABV was calculated as the summed distance between successive TLBSs divided by the total number of TLBSs. General RT variability (RTV) was obtained by calculating the standard deviation of RTs across all (congruent and incongruent) trials. All measures were computed separately for each block. To examine the reliability of traditional attentional bias and ABV, bivariate Pearson correlations across blocks were computed in SPSS 28. In addition, to control for the influence of general RTV, partial correlations across blocks were also computed for each measure.
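The data-reduction steps above can be sketched as follows for the trials of a single condition (image type) within one block. This is a minimal illustration, not the authors' SPSS or preprocessing code: the `Trial` record and function names are hypothetical, and two details the text does not specify are assumptions, namely that ties in pairing distance go to the earlier trial and that distance is measured in original trial positions (excluded trials are skipped but still count toward the 5-trial window).

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Trial:
    index: int       # position of the trial within the block (0-based)
    congruent: bool  # True: target appeared where the climate-relevant image had been
    rt: float        # reaction time in ms
    correct: bool


def retained(trials, lo=150.0, hi=750.0):
    """Keep correct responses with lo <= RT <= hi, in presentation order."""
    return sorted((t for t in trials if t.correct and lo <= t.rt <= hi), key=lambda t: t.index)


def traditional_bias(trials):
    """Mean incongruent RT minus mean congruent RT."""
    kept = retained(trials)
    return mean(t.rt for t in kept if not t.congruent) - mean(t.rt for t in kept if t.congruent)


def trial_level_bias_scores(trials, max_distance=5):
    """Pair each trial with the nearest opposite-condition trial; return incongruent - congruent RTs."""
    kept = retained(trials)
    scores = []
    for t in kept:
        partners = [u for u in kept
                    if u.congruent != t.congruent and abs(u.index - t.index) <= max_distance]
        if not partners:
            continue  # no eligible partner within the window: no TLBS for this trial
        # Assumption: ties in distance are resolved in favor of the earlier trial
        p = min(partners, key=lambda u: (abs(u.index - t.index), u.index))
        incongruent, congruent = (p, t) if t.congruent else (t, p)
        scores.append(incongruent.rt - congruent.rt)
    return scores


def attention_bias_variability(scores):
    """Summed distance between successive TLBSs divided by the number of TLBSs."""
    return sum(abs(b - a) for a, b in zip(scores, scores[1:])) / len(scores)


def rt_variability(trials):
    """General RTV: sample SD of all retained RTs (congruent and incongruent)."""
    return stdev(t.rt for t in retained(trials))


# Toy block; trial 4 is excluded by the 150 ms cutoff
block = [Trial(0, True, 400, True), Trial(1, False, 450, True), Trial(2, True, 420, True),
         Trial(3, False, 470, True), Trial(4, True, 100, True), Trial(5, False, 440, True)]
scores = trial_level_bias_scores(block)
print(scores, attention_bias_variability(scores))                        # [50, 50, 30, 50, 20] 14.0
print(round(traditional_bias(block), 2), round(rt_variability(block), 2))  # 43.33 27.02
```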

Figure 2. An example of trial-level bias scores (TLBSs) from one block of the dot-probe task. Attention bias variability (ABV) is computed as the summed distance between successive TLBSs divided by the total number of TLBSs.

3. Results

3.1. Traditional attentional bias score reliability

In each of the three independent samples analyzed here, traditional measures of attentional bias were generally uncorrelated across blocks 1–3 for different types of climate change images.2 These measures were also generally uncorrelated with general RTV and partial correlations controlling for RTV did not drastically alter the association between traditional measures of attentional bias across blocks. For all correlations with traditional measures of attentional bias, see Table 1 (samples 1–3, respectively).
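Footnote 2 reports an alternative, odd-even split-half analysis. A minimal sketch is shown below, assuming each participant's trials for one condition are stored in presentation order, after data reduction, as hypothetical (congruent, rt) pairs; the function names are illustrative. The Spearman-Brown step-up is included only as a commonly used optional correction; footnote 2 reports Pearson correlations and does not mention it.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bias(trials):
    """Mean incongruent RT minus mean congruent RT; trials are (congruent, rt) pairs."""
    return mean(rt for c, rt in trials if not c) - mean(rt for c, rt in trials if c)


def odd_even_reliability(participants):
    """Correlate the bias from odd-numbered trials with the bias from even-numbered trials."""
    odd = [bias(trials[0::2]) for trials in participants]   # trials 1, 3, 5, ...
    even = [bias(trials[1::2]) for trials in participants]  # trials 2, 4, 6, ...
    return pearson(odd, even)


def spearman_brown(r_half):
    """Optional step-up of a half-length correlation to full test length."""
    return 2 * r_half / (1 + r_half)


# Toy data: each participant's bias (10, 20, or 40 ms) is identical in both halves
participants = [[(True, 400), (True, 400), (False, 400 + b), (False, 400 + b)] for b in (10, 20, 40)]
print(odd_even_reliability(participants))
```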

3.2. Attention bias variability reliability

In general, across Samples 1–3, ABV-based measures of attentional bias were moderately to highly correlated both across blocks and with general RTV. In partial correlations controlling for RTV, ABV-based measures were only weakly correlated across blocks, and in the majority of cases these correlations were no longer significant. See Table 1 for all ABV correlations in Samples 1–3.
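The partial correlations reported above can be illustrated with the first-order partial correlation formula, r_xy·z = (r_xy − r_xz·r_yz) / √((1 − r_xz²)(1 − r_yz²)). Here x and y would be a participant-level measure (e.g., ABV) in two blocks and z a general RTV score. Which RTV score served as the covariate is not stated here, so the single-covariate form is an assumption; with several covariates, one would instead regress each variable on all covariates and correlate the residuals. The toy data are invented to show the pattern and are not taken from the study.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for a single covariate z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Toy data: "ABV" in two blocks that share nothing except a common RTV component
rtv = [1, -1, -1, 1]
abv_block1 = [r + e for r, e in zip(rtv, [1, 1, -1, -1])]
abv_block2 = [r + e for r, e in zip(rtv, [1, -1, 1, -1])]
print(pearson(abv_block1, abv_block2))          # zero-order correlation across blocks
print(partial_corr(abv_block1, abv_block2, rtv))  # correlation controlling for RTV
```

In the toy data the two blocks correlate at 0.5 only because they share the RTV component; once RTV is partialled out, the correlation is essentially zero, mirroring the pattern described above for ABV.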

3.3. Attention bias variability correlations with pro-environmental disposition

The New Ecological Paradigm questionnaire (Dunlap et al., 2000) was administered to Samples 2 and 3 as a measure of pro-environmental disposition. Although ABV in these samples appears to be driven by RT variability, we nevertheless assessed the degree to which these scores predict pro-environmental disposition.3 Across Samples 2 and 3, ABV scores were unrelated to pro-environmental disposition (Sample 2: cause r = 0.03, effect r = −0.08, solution r = −0.16; Sample 3: cause r = −0.02, effect r = 0.02; all ps ≥ 0.22). Note that in a separate sample, climate change anxiety was also unrelated to these ABV scores.4

4. Discussion

This study aimed to assess the reliability of RT-based measures of attention bias and ABV to climate change images in the dot-probe task. The results obtained across three dot-probe tasks of attentional bias to climate change-relevant images indicate that neither the traditional (RT difference score) approach nor the innovative ABV approach provided a reliable measure of attentional bias. The traditional approach was not consistently influenced by general RT variability, but was nevertheless unreliable. This finding is consistent with a growing body of literature using the dot-probe task in other fields (Schmukle, 2005; Staugaard, 2009; Price et al., 2015; Aday and Carlson, 2019; Chapman et al., 2019; Van Bockstaele et al., 2020). In contrast, ABV scores initially correlated across blocks, demonstrating some degree of reliability, which is consistent with prior ABV research (Naim et al., 2015; Price et al., 2015; Davis et al., 2016; Rodebaugh et al., 2016; Zvielli et al., 2016; Molloy and Anderson, 2020). Yet, when controlling for general RT variability, ABV measures were generally no longer significantly correlated across blocks, indicating that they likely measure general RT variability rather than attention bias. Again, this finding echoes what has been reported in prior studies assessing attentional bias to threat- and food-related stimuli (Kruijt et al., 2016; Carlson and Fang, 2020; Vervoort et al., 2021; Carlson et al., 2022a). Finally, ABV measures were unrelated to individual differences in pro-environmental orientation (and climate change anxiety), suggesting poor predictive validity. Thus, many of the shortcomings of the RT difference score and ABV approaches reported in other fields appear to generalize to the use of environmental stimuli in the dot-probe task.

Based on these findings, we recommend that RT-based measures of attentional bias to environmental information not be used for individual differences (or correlational) research. As much of the field of environmental attentional bias research is interested in linking variability in attentional bias to individual differences related to environmentalism or climate change concern (Beattie and McGuire, 2012; Sollberger et al., 2017; Whitman et al., 2018; Carlson et al., 2019b; Luo and Zhao, 2019; Meis-Harris et al., 2021), new approaches to capturing attentional bias are needed for these research objectives. As previously mentioned, eye tracking is another common approach to measuring attentional bias to climate change-relevant information (Beattie and McGuire, 2012; Sollberger et al., 2017; Luo and Zhao, 2019). Some research suggests that eye-tracking measures of attention are more reliable (Sears et al., 2019; van Ens et al., 2019; Soleymani et al., 2020), whereas other research suggests that they may not be (Skinner et al., 2018). Therefore, future research should assess the reliability of eye tracking-based measures in the paradigms used to measure environmental attentional bias. In addition, electroencephalographic measures of brain activity have been found to measure covert attention more reliably (Kappenman et al., 2014; Reutter et al., 2017) and may be appropriate for measuring environmental attentional biases.

Although the results obtained here indicate that RT-based measures in the dot-probe task are not suitable for capturing individual differences in attentional bias to climate change information, this does not preclude the use of RT-based tasks to assess the effects of experimental manipulations on attentional bias. Reliability is not required for comparisons across experimental groups or conditions, but it is required for individual differences research. The field needs to identify ways to increase the reliability of attention bias estimates. RTs themselves start from a promising point (they are highly correlated across blocks: Sample 1: r = 0.77–0.92, Sample 2: r = 0.85–0.92, and Sample 3: r = 0.82–0.91), but data quality quickly diminishes when difference scores are calculated (Hedge et al., 2018).
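A standard classical test theory result illustrates why stable RTs can still yield unstable difference scores. Assuming the measurement errors of the two component scores are uncorrelated, the reliability of D = X − Y is (σx²ρxx + σy²ρyy − 2ρxyσxσy) / (σx² + σy² − 2ρxyσxσy), so the more strongly congruent and incongruent RTs correlate with each other, the less true-score variance the difference retains. The sketch below implements that formula; the function name is illustrative and the example inputs are hypothetical, not values estimated from these datasets.

```python
def difference_score_reliability(r_xx, r_yy, r_xy, sd_x=1.0, sd_y=1.0):
    """Classical-test-theory reliability of D = X - Y (assumes uncorrelated errors)."""
    shared = 2 * r_xy * sd_x * sd_y
    true_var = sd_x ** 2 * r_xx + sd_y ** 2 * r_yy - shared  # true-score variance of D
    total_var = sd_x ** 2 + sd_y ** 2 - shared               # observed variance of D
    return true_var / total_var


# Hypothetical: both RT components have reliability 0.90 but correlate 0.85 with each other
print(round(difference_score_reliability(0.90, 0.90, 0.85), 3))
# Same components, uncorrelated with each other
print(round(difference_score_reliability(0.90, 0.90, 0.00), 3))
```

With equal SDs and highly correlated components, the difference score keeps only a fraction of the components' reliability (about 0.33 in the first call versus 0.90 in the second).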

Initial research based on RTs in the dot-probe task indicates that emotionally positive images of climate change solutions capture attention to a greater extent than emotionally negative images of climate change causes and effects (Carlson et al., 2020). Future experimental research is needed to determine whether the same pattern is observed with other images, as well as with verbal, auditory, and multimodal information about climate change. Indeed, determining which types of climate change-relevant information are best suited to capture individuals’ attention would be useful for designing effective environmental communication about climate change. Furthermore, identifying interventions, contextual factors, and other manipulations that can modify attention to climate change information has important implications for increasing attentional focus on climate change messaging. For example, research has shown that attention training can increase attention to climate change information (Carlson et al., 2022b). In summary, although much research on environmental attentional biases focuses on individual differences, and our data indicate that RT-based (difference score and ABV) measures of attentional bias are unsuitable for correlational research, more experimental research is needed to better understand the variables that lead to an effective focus of attention on climate change information.

This study is not without limitations. First, the samples used here were composed primarily of college-age females, and although it is unlikely that the reliability of attention bias and ABV measures differs across populations, the homogeneity of our samples limits the generalizability of the results. Second, we used only climate change-relevant images rather than other stimulus types. Although the reliability of attentional bias and ABV measures for other types of information (e.g., threat-related information) does not appear to depend on stimulus type (Staugaard, 2009; Carlson and Fang, 2020), it is possible that attention to climate change-related information differs by stimulus type (e.g., images vs. words). Future research is needed to assess this possibility. Finally, although the dot-probe task is among the most common RT-based methods of assessing attentional bias, the extent to which the reliability estimates observed here generalize to other RT-based tasks and measures is unclear. Yet, given that reliability is generally an issue for RT-based (difference score) measures (Hedge et al., 2018; Goodhew and Edwards, 2019), we expect these findings to apply not only to the dot-probe task but to RT-based measures more broadly.

5. Conclusion

In summary, the present study aimed to assess the reliability of the dot-probe task using climate change-relevant images. Both traditional (reaction time difference score) and innovative ABV measures were examined, and both lacked reliability for measuring individual differences in attentional bias to climate images. These findings strongly suggest that the dot-probe task, and likely other RT difference score-based measures, are unsuitable for individual differences research assessing the correlation between participant factors, such as climate concern, and attention bias. Given the growing body of work focusing on these and other individual differences, we argue that other measures of attention bias should be adopted for these purposes. Whichever measure of attentional bias is used, its reliability estimates should be reported. Finally, RT-based cognitive tasks, such as the dot-probe task, may still be appropriate for measuring differences in attention bias following experimental interventions.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://osf.io/E9S8P/.

Ethics statement

The studies involving human participants were reviewed and approved by Northern Michigan University IRB. The patients/participants provided their written informed consent to participate in this study.

Author contributions

JC designed the study. JC and LF processed and analyzed the data. JC, LF, CC-C, and JF drafted the manuscript. All authors contributed to the article and approved the submitted version.

Acknowledgments

The authors would like to thank the students at Northern Michigan University for participating in this research as well as the students in the Cognitive × Affective Behavior & Integrative Neuroscience (CABIN) Lab for assisting in the collection of this data.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^The following images were used in Sample 1: 12, 13, 29, 39, 46, 49 (positive relevant), 8, 18, 23, 20, 37, 62 (negative relevant), 178, 194, 200, 210, 221, 240, 256, 277, 280, 303, 308, and 315 (irrelevant). The following images were used in Sample 2: 12, 13, 29, 39, 46, 49 (solution relevant), 8, 18, 21, 23, 35, 42 (cause relevant), 7, 16, 19, 20, 37, 62 (effect relevant), 178, 194, 200, 210, 221, 222, 240, 243, 256, 277, 280, 281, 289, 295, 303, 308, 312, and 315 (irrelevant). Sample 3 used the same cause and effect images used in sample 2.

2. ^Note that it could be argued that correlations across blocks might be weaker because of meaningful changes in bias over time. As an alternative approach, we performed odd-even split-half Pearson correlations for each condition. Split-half reliability for attentional bias to climate change solutions in Sample 2 revealed a significant negative correlation (r = −0.30, p = 0.023). All of the remaining split-half correlations were nonsignificant (r = −0.04 to 0.26, ps > 0.05).

3. ^Note that the associations with pro-environmental disposition and traditional measures of attentional bias from this sample have been reported elsewhere (Carlson et al., 2020).

4. ^In a separate sample (N = 120), we found no association between ABV and climate change anxiety for positive (r = 0.08, p = 0.39) and negative (r = −0.001, p = 0.99) images of climate change.

References

Aday, J. S., and Carlson, J. M. (2019). Extended testing with the dot-probe task increases test–retest reliability and validity. Cogn. Process. 20, 65–72. doi: 10.1007/s10339-018-0886-1

Bantin, T., Stevens, S., Gerlach, A. L., and Hermann, C. (2016). What does the facial dot-probe task tell us about attentional processes in social anxiety? A systematic review. J. Behav. Ther. Exp. Psychiatry 50, 40–51. doi: 10.1016/j.jbtep.2015.04.009

Beattie, G., and McGuire, L. (2012). See no evil? Only implicit attitudes predict unconscious eye movements towards images of climate change. Semiotica 2012, 315–339. doi: 10.1515/sem-2012-0066

Carlson, J. M., Aday, J. S., and Rubin, D. (2019a). Temporal dynamics in attention bias: effects of sex differences, task timing parameters, and stimulus valence. Cogn. Emot. 33, 1271–1276. doi: 10.1080/02699931.2018.1536648

Carlson, J. M., and Fang, L. (2020). The stability and reliability of attentional bias measures in the dot-probe task: evidence from both traditional mean bias scores and trial-level bias scores. Motiv. Emot. 44, 657–669. doi: 10.1007/s11031-020-09834-6

Carlson, J. M., Fang, L., and Kassel, D. (2022a). The questionable validity of attention bias variability: evidence from two conceptually unrelated cognitive tasks. J. Affect. Dis. Rep. 10:100411. doi: 10.1016/j.jadr.2022.100411

Carlson, J. M., Kaull, H., Steinhauer, M., Zigarac, A., and Cammarata, J. (2020). Paying attention to climate change: positive images of climate change solutions capture attention. J. Environ. Psychol. 71:101477. doi: 10.1016/j.jenvp.2020.101477

Carlson, J. M., Lehman, B. R., and Thompson, J. L. (2019b). Climate change images produce an attentional bias associated with pro-environmental disposition. Cogn. Process. 20, 385–390. doi: 10.1007/s10339-019-00902-5

Carlson, J. M., Voltz, M., Foley, J., Gentry, L., and Fang, L. (2022b). Changing how you look at climate change: attention bias modification increases attention to climate change. Clim. Chang. 175. doi: 10.1007/s10584-022-03471-3

Chapman, A., Devue, C., and Grimshaw, G. M. (2019). Fleeting reliability in the dot-probe task. Psychol. Res. 83, 308–320. doi: 10.1007/s00426-017-0947-6

Davis, M. L., Rosenfield, D., Bernstein, A., Zvielli, A., Reinecke, A., Beevers, C. G., et al. (2016). Attention bias dynamics and symptom severity during and following CBT for social anxiety disorder. J. Consult. Clin. Psychol. 84, 795–802. doi: 10.1037/ccp0000125

Dunlap, R. E., Van Liere, K. D., Mertig, A. G., and Jones, R. E. (2000). Measuring endorsement of the new ecological paradigm: a revised NEP scale. J. Soc. Issues 56, 425–442. doi: 10.1111/0022-4537.00176

Goodhew, S. C., and Edwards, M. (2019). Translating experimental paradigms into individual-differences research: contributions, challenges, and practical recommendations. Conscious. Cogn. 69, 14–25. doi: 10.1016/j.concog.2019.01.008

Hedge, C., Powell, G., and Sumner, P. (2018). The reliability paradox: why robust cognitive tasks do not produce reliable individual differences. Behav. Res. Methods 50, 1166–1186. doi: 10.3758/s13428-017-0935-1

Iacoviello, B. M., Wu, G., Abend, R., Murrough, J. W., Feder, A., Fruchter, E., et al. (2014). Attention bias variability and symptoms of posttraumatic stress disorder. J. Trauma. Stress. 27, 232–239. doi: 10.1002/jts.21899

Kappenman, E. S., Farrens, J. L., Luck, S. J., and Proudfit, G. H. (2014). Behavioral and ERP measures of attentional bias to threat in the dot-probe task: poor reliability and lack of correlation with anxiety. Front. Psychol. 5:1368. doi: 10.3389/fpsyg.2014.01368

Kruijt, A.-W., Field, A. P., and Fox, E. (2016). Capturing dynamics of biased attention: new attention bias variability measures the way forward? PLoS One 11:e0166600. doi: 10.1371/journal.pone.0166600

Lehman, B., Thompson, J., Davis, S., and Carlson, J. M. (2019). Affective images of climate change. Front. Psychol. 10:960. doi: 10.3389/fpsyg.2019.00960

Luo, Y., and Zhao, J. (2019). Motivated attention in climate change perception and action. Front. Psychol. 10:1541. doi: 10.3389/fpsyg.2019.01541

Luo, Y., and Zhao, J. (2021). Attentional and perceptual biases of climate change. Curr. Opin. Behav. Sci. 42, 22–26. doi: 10.1016/j.cobeha.2021.02.010

MacLeod, C., and Mathews, A. (1988). Anxiety and the allocation of attention to threat. Q. J. Exp. Psychol. A 40, 653–670. doi: 10.1080/14640748808402292

MacLeod, C., Mathews, A., and Tata, P. (1986). Attentional bias in emotional disorders. J. Abnorm. Psychol. 95, 15–20. doi: 10.1037/0021-843X.95.1.15

Meis-Harris, J., Eyssel, F., and Kashima, Y. (2021). Are you paying attention? How pro-environmental tendencies relate to attentional processes. J. Environ. Psychol. 74:101591. doi: 10.1016/j.jenvp.2021.101591

Molloy, A., and Anderson, P. L. (2020). Evaluating the reliability of attention bias and attention bias variability measures in the dot-probe task among people with social anxiety disorder. Psychol. Assess. 32, 883–888. doi: 10.1037/pas0000912

Naim, R., Abend, R., Wald, I., Eldar, S., Levi, O., Fruchter, E., et al. (2015). Threat-related attention bias variability and posttraumatic stress. Am. J. Psychiatry 172, 1242–1250. doi: 10.1176/appi.ajp.2015.14121579

Pecl, G. T., Araújo, M. B., Bell, J. D., Blanchard, J., Bonebrake, T. C., Chen, I.-C., et al. (2017). Biodiversity redistribution under climate change: impacts on ecosystems and human well-being. Science 355:eaai9214. doi: 10.1126/science.aai9214

Price, R. B., Kuckertz, J. M., Siegle, G. J., Ladouceur, C. D., Silk, J. S., Ryan, N. D., et al. (2015). Empirical recommendations for improving the stability of the dot-probe task in clinical research. Psychol. Assess. 27, 365–376. doi: 10.1037/pas0000036

Reutter, M., Hewig, J., Wieser, M. J., and Osinsky, R. (2017). The N2pc component reliably captures attentional bias in social anxiety. Psychophysiology 54, 519–527. doi: 10.1111/psyp.12809

Rodebaugh, T. L., Scullin, R. B., Langer, J. K., Dixon, D. J., Huppert, J. D., Bernstein, A., et al. (2016). Unreliability as a threat to understanding psychopathology: the cautionary tale of attentional bias. J. Abnorm. Psychol. 125, 840–851. doi: 10.1037/abn0000184

Schmukle, S. C. (2005). Unreliability of the dot probe task. Eur. J. Pers. 19, 595–605. doi: 10.1002/per.554

Sears, C., Quigley, L., Fernandez, A., Newman, K., and Dobson, K. (2019). The reliability of attentional biases for emotional images measured using a free-viewing eye-tracking paradigm. Behav. Res. Methods 51, 2748–2760. doi: 10.3758/s13428-018-1147-z

Skinner, I. W., Hübscher, M., Moseley, G. L., Lee, H., Wand, B. M., Traeger, A. C., et al. (2018). The reliability of eyetracking to assess attentional bias to threatening words in healthy individuals. Behav. Res. Methods 50, 1778–1792. doi: 10.3758/s13428-017-0946-y

Soleymani, A., Ivanov, Y., Mathôt, S., and de Jong, P. J. (2020). Free-viewing multi-stimulus eye tracking task to index attention bias for alcohol versus soda cues: satisfactory reliability and criterion validity. Addict. Behav. 100:106117. doi: 10.1016/j.addbeh.2019.106117

Sollberger, S., Bernauer, T., and Ehlert, U. (2017). Predictors of visual attention to climate change images: an eye-tracking study. J. Environ. Psychol. 51, 46–56. doi: 10.1016/j.jenvp.2017.03.001

Staugaard, S. R. (2009). Reliability of two versions of the dot-probe task using photographic faces. Psychol. Sci. Q. 51, 339–350.

Tollefson, J. (2019). Humans are driving one million species to extinction. Nature 569, 171–172. doi: 10.1038/d41586-019-01448-4

Tollefson, J. (2020). How hot will Earth get by 2100? Nature 580, 443–445. doi: 10.1038/d41586-020-01125-x

Torrence, R. D., Wylie, E., and Carlson, J. M. (2017). The time-course for the capture and hold of visuospatial attention by fearful and happy faces. J. Nonverbal Behav. 41, 139–153. doi: 10.1007/s10919-016-0247-7

Van Bockstaele, B., Lamens, L., Salemink, E., Wiers, R. W., Bögels, S. M., and Nikolaou, K. (2020). Reliability and validity of measures of attentional bias towards threat in unselected student samples: seek, but will you find? Cognit. Emot. 34, 217–228. doi: 10.1080/02699931.2019.1609423

van Ens, W., Schmidt, U., Campbell, I. C., Roefs, A., and Werthmann, J. (2019). Test-retest reliability of attention bias for food: robust eye-tracking and reaction time indices. Appetite 136, 86–92. doi: 10.1016/j.appet.2019.01.020

Vervoort, L., Braun, M., De Schryver, M., Naets, T., Koster, E. H., and Braet, C. (2021). A pictorial dot probe task to assess food-related attentional bias in youth with and without obesity: overview of indices and evaluation of their reliability. Front. Psychol. 12:644512. doi: 10.3389/fpsyg.2021.644512

Whitman, J. C., Zhao, J., Roberts, K. H., and Todd, R. M. (2018). Political orientation and climate concern shape visual attention to climate change. Clim. Chang. 147, 383–394. doi: 10.1007/s10584-018-2147-9

Zvielli, A., Bernstein, A., and Koster, E. H. (2015). Temporal dynamics of attentional bias. Clin. Psychol. Sci. 3, 772–788. doi: 10.1177/2167702614551572

Keywords: dot-probe, reliability, climate change, attention bias, attention bias variability

Citation: Carlson JM, Fang L, Coughtry-Carpenter C and Foley J (2023) Reliability of attention bias and attention bias variability to climate change images in the dot-probe task. Front. Psychol. 13:1021858. doi: 10.3389/fpsyg.2022.1021858

Edited by:

Mandy Rossignol, University of Mons, Belgium

Reviewed by:

Mario Reutter, Julius Maximilian University of Würzburg, Germany

Daniela Acquadro Maran, University of Turin, Italy

Copyright © 2023 Carlson, Fang, Coughtry-Carpenter and Foley. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joshua M. Carlson, ✉ joshcarl@nmu.edu
