- Department of Home Economics and Ecology, Harokopio University, Athens, Greece

This paper examined primary school students’ creative potential (CP) through dynamic assessment (DA). The study was carried out through a quasi-experimental design. The sample consisted of 90 Greek primary school students between fourth and sixth grade who were randomly divided into a group that received DA (N = 37) and a control group (N = 53). Both groups were initially tested with the graphic-artistic form of the Evaluation of Potential Creativity (EPoC) test. The DA group received mediation with graduated prompting while no such treatment was applied to the control group, and both groups were post-tested. The results demonstrated that mediation significantly improved the DA group’s CP. It appeared that DA contributes to a clearer portrait of students’ CP, which can be of valuable assistance in nurturing creativity.

Introduction

Classroom assessment has been defined as a “process of collecting, synthesizing, and interpreting information to aid in decision making” (Russell and Airasian, 2011, p. 3). It has been characterized as a core element of teaching and learning (Newton, 2007; Russell and Airasian, 2011) while one of its core aims is to help students to reach their fullest potential (Jones, 2009).

Intellectual potential has traditionally been assessed by psychometric testing that claimed to measure the “g factor,” an unchangeable genetically predetermined ability (Jensen, 1998). Nevertheless, psychometric testing has not been proven to be very helpful in education as it does not provide adequate information about children’s learning processes and their levels of understanding (e.g., Haywood and Lidz, 2007; Grigorenko, 2009) on which differentiated instruction can be grounded (Fisher and Frey, 2015). It has also been claimed that psychometric testing even has a negative impact on some students’ learning (Stiggins, 2005; Black et al., 2010).

In contrast, formative assessment, or assessment for learning, aims at unlocking and enhancing students’ potential by utilizing judgments about the quality of student performances and pieces, or works (Sadler, 1989; Black and Wiliam, 2009; Wiliam, 2011). However, formative assessment has been criticized for the absence of fundamental measurement principles and, above all, for the lack of a solid psycho-educational theoretical background (Yorke, 2003; Bennett, 2011; Coffey et al., 2011).

Dynamic Assessment

Dynamic assessment (DA) aims at revealing student potential systematically and based on a sound and valid theoretical background. It was defined as:

An umbrella term used to describe a heterogeneous range of approaches that are linked by a common element, that is, instruction and feedback are built into the testing process and are differentiated on the basis of an individual’s performance (Elliott, 2003, pp. 16–17).

Although the given definitions of DA may vary, their constant aspect is the active intervention by examiners and the assessment of examinees’ response to intervention (Haywood and Tzuriel, 2002). Theoretically, DA is grounded in Vygotsky’s Sociocultural Theory of Mind (e.g., Vygotsky, 1978) and Feuerstein’s theory of structural cognitive modifiability (e.g., Feuerstein et al., 1979, 1981, 1991; Feuerstein and Jensen, 1980). The main focus of DA is to reveal the potential of the assessee. Most DA theorists endorse the term “potential” as it is described in Vygotsky’s (1978) definition of the zone of proximal development:

The distance between the actual developmental level as determined by independent problem solving and the level of potential development as determined through problem-solving under adult guidance, or in collaboration with more capable peers (p. 86).

According to this definition learning potential is conceptualized by taking into account the expressed ability (level) of a student before and after guidance. Hence, the fundamental difference of DA compared to static normative assessment is its interest in the learning outcomes that occur through a process that involves guidance or mediation and not in a score provided by static measurement that might not be indicative of the assessees’ potential (Gustafson et al., 2014; Navarro and Lara, 2017). DA theorists do not completely deny that static normative tests provide evidence about students’ potential as they appear to have a predictive validity of school performance (Haywood and Lidz, 2007), but argue that such a manifestation may be fractional and biased especially against those from different cultural environments (Grigorenko, 2009) and with learning disabilities (Fletcher et al., 2018).

Since the early studies of DA and in line with the Vygotskian approach, the word mediation has been used to describe a form of intervention that aims to remove barriers to learning and performance through tailor-made individual instruction and interaction between the mediator and the child (Poehner, 2008). The word mediation is conceptually close to educational or training interventions for developing cognitive abilities; however, minor differences have been identified: mediation is directed to the means of promoting logical thinking and systematic learning, while intervention usually refers to academic learning and curriculum (Haywood and Lidz, 2007).

The various models of DA have employed approaches that differ in the sequence in which testing and mediation take place as well as in the type of mediation. The most commonly used DA approach is the “sandwich procedure” with graduated prompting. In the “sandwich” procedure the examinees are assessed by a first test (pre-test), then they receive individual mediation adjusted to their personal needs, strengths and weaknesses, and afterwards they are assessed through a second test (post-test) (Sternberg and Grigorenko, 2002). The assessment’s result is estimated by taking into account both the pre-test and the post-test (Haywood and Lidz, 2007) or only the post-test (Sternberg and Grigorenko, 2002). The result, therefore, is not a static estimation of the child’s cognitive abilities but a process that leads to the manifestation of the child’s cognitive potential through individually tailored mediation (Elliott, 2003). Graduated prompting, rooted in the pioneering work of Campione et al. (1985), is a widely applied mediational approach of DA (e.g., Poehner and Lantolf, 2013; Navarro and Lara, 2017; Resing et al., 2017a,b). In this approach the prompts are designed on the basis of each child’s learning needs and are gradually reduced as the child’s ability to cope sufficiently and independently with the task demands rises (Resing, 2013).

The sandwich approach with graduated prompting has been primarily employed for the DA of inductive or analogical reasoning. Several studies have shown that in post-testing through this procedure children from different cultural environments expressed their potential in analogical thinking by employing more advanced strategies in problem solving (e.g., Resing et al., 2009, 2017a) as well as advanced verbal and behavioral inductive strategies in learning (e.g., Resing et al., 2017b). It has also been successfully applied for the DA of second language listening and reading comprehension (e.g., Poehner and Lantolf, 2013), for the DA of academically disadvantaged students’ reading difficulties (e.g., Navarro and Lara, 2017) and for the DA of mathematical problem solving (e.g., Wang, 2010).

To our knowledge, DA has never been applied to the domain of creativity. Based on its effectiveness in revealing students’ potential in the domains mentioned above, we presumed that the employment of DA in creativity would provide useful insights regarding creative potential (CP) and its development.

Creative Potential and Its Measurement

Despite the lack of consensus among researchers and theorists in defining creativity (Weisberg, 2015), it is commonly described as the ability to produce novel and context-appropriate work (Kaufman and Sternberg, 2007; Beghetto and Kaufman, 2014). Although the development of creativity has been considered one of the major goals of students’ learning (e.g., Rotherham and Willingham, 2010; Binkley et al., 2012; Wyse and Ferrari, 2015), it is generally accepted that much remains to be done, since creativity expression at schools remains to a great extent an unachieved educational goal (Beghetto and Kaufman, 2014).

Creativity testing has followed the psychometric tradition of intelligence testing; divergent creative thinking batteries such as the Torrance (1972, 1988) Tests of Creative Thinking (TTCT) or that of Wallach and Kogan (1965) have been widely used for the assessment of creativity (Kaufman et al., 2008, 2012). Regardless of modern criticism of psychometric normative measures in relation to their construct, content, predictive, discriminant and ecological validity (for a review see Zeng et al., 2011), their creators claimed that they measured CP by assessing general abilities that under certain circumstances would be realized in later life, something which appears to happen to a degree (Kim, 2006; Runco et al., 2010).

Creative potential has been described as an existing, not yet expressed set of creative abilities that every student has, rather than outstanding creative performance (Runco, 2003). It has been defined as a latent ability to produce original, adaptive work, which is part of an individual’s “human capital” (Walberg, 1988). In recent years a systematic attempt to study and measure CP on a substantiated theoretical basis was made by Barbot et al. (2011, 2015, 2016) and Lubart et al. (2012, 2013). After an extensive review of the relevant literature and a significant number of studies they carried out, they proposed the confluence theory of CP, according to which several distinct but interrelated resources, such as biological and genetic factors, aspects of cognition like divergent thinking, aspects of conation (personality, motivational and emotional factors) and task-relevant knowledge, are reflected in CP. They noted that CP has been assessed either by resource-based (or analytic) approaches, where the fit between an individual’s resources and creative task demands has been examined, or by outcome-based (or “holistic”) approaches, which include test tasks that resemble aspects of creative work.

On the basis of the confluence theory of CP, and having taken into account the existing approaches to CP measurement, they devised the “EPoC” test. EPoC explores the human resources described in the confluence theory through abstract and concrete divergent-exploratory and convergent-integrative creative thinking tasks. Divergent thinking tasks stimulate flexibility, divergent thinking, and selective encoding (aspects of cognition), supported by openness to experiences and intrinsic task-oriented motivation (conative factors). Convergent thinking tasks stimulate associative thinking, selective comparison, and combination (aspects of cognition), supported by conative factors such as tolerance for ambiguity, perseverance, risk taking, and achievement motivation. Task-relevant knowledge is also taken into account by including concrete tasks stimulated by objects known to the student as well as abstract tasks, where students are asked to apply existing knowledge to abstract stimulation and make it concrete (Lubart et al., 2012; Barbot et al., 2015, 2016). EPoC results can formulate multifaceted CP profiles which describe students’ strengths and weaknesses in divergent, convergent, abstract and concrete creative thinking processes of each domain (Lubart et al., 2012) and which can be used as the basis for the design of creativity teaching programs (Barbot et al., 2015).

According to the confluence theory, CP is trainable, as are most of the individual resources involved in it. Although Lubart and colleagues have repeatedly demonstrated that education may affect the development of CP (e.g., Besançon and Lubart, 2008; Barbot et al., 2011; Besançon et al., 2013), they have stressed that more should be done in order to comprehend how it can be trained (Barbot et al., 2015). Thus it is implicitly accepted that a zone of proximal development of CP exists.

The main endeavor of the present study was to relate the confluence theory of CP to the Vygotskian perspective of cognitive development. It aimed to examine CP as described by the confluence theory in relation to the Vygotskian conceptualization of potential, i.e., the development from unassisted to assisted expression of CP. It intended to study students’ “potential of CP” through DA; in other words, to investigate the development of CP that mediation evokes. More specifically, it was hypothesized that both divergent-exploratory and convergent-integrative thinking as well as abstract and concrete thinking would develop significantly after mediation, in accordance with the theory of DA and the relevant research findings.

Materials and Methods

Sample

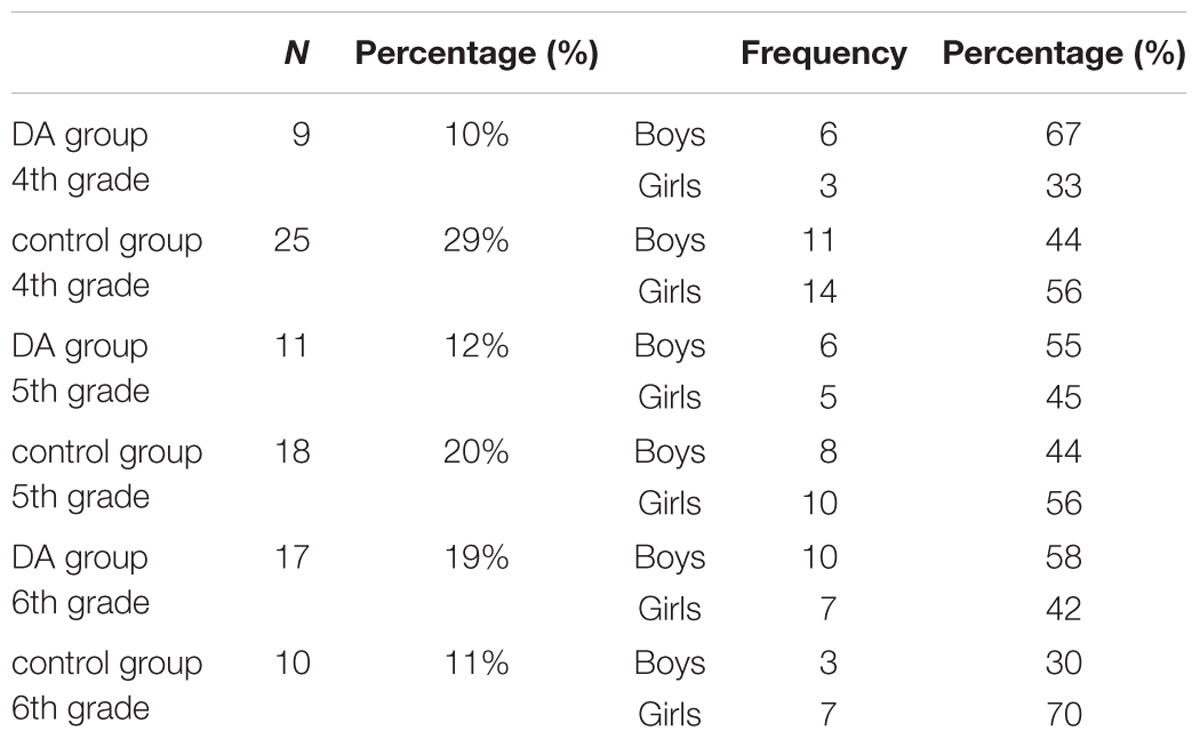

The sample consisted of ninety students from the fourth, fifth and sixth grades studying in two neighboring public elementary schools of a socioeconomically deprived inner city area of Athens in Greece (see Table 1). Students of the first school formed the experimental group (DA group, N = 37) and students of the second school formed the control group (N = 53). Their mean age was 11 years. Both participants and their parents consented to participate. The majority of participants (92%) were of Greek origin. According to their teachers’ reports, participants of non-Greek descent had adequate language understanding and expression compared to the Greek students. In total, boys accounted for 49% and girls for 51% of the sample.

Instrumentation

Creative thinking was assessed through the graphic-artistic version of EPoC (Lubart et al., 2012). EPoC provides two equivalent forms which can be used as pre- and post-tests for measuring the effect of interventions for creativity enhancement (Lubart et al., 2013). Both forms assess divergent-exploratory thinking through a graphic abstract and a concrete stimulus, as well as convergent-integrative thinking through a set of graphic abstract and concrete stimuli. The tasks are scored on a 7-point scale. EPoC is not standardized in Greece and therefore the French standardized scores were used as they appear in the manual.

Design and Procedure

In accordance with the Greek regulations, approval of the study was obtained from the Greek Ministry of Education acting on a proposal by the Institute of Educational Policy, which examined the ethics and the methodology of the study (approval number 62671/Δ1/16/06-04-2017). The study was not approved by an Ethics Committee as this was not required as per applicable institutional and national guidelines. In accordance with the approval terms, the principals and teachers of the participating schools first consented after they were informed about the purpose, the duration, the anonymity and the confidentiality of the study. Afterwards, a letter including a consent form was sent to the parents of the students, with detailed information about the purpose of data collection, the duration of the study, as well as assurance of anonymity and confidentiality. The letter also stressed that children would not run physical or psychological risks during the study and that they could withdraw at any point during the process. Only students whose parents signed the consent form participated in this research.

A quasi-experimental design was implemented as more suitable for examining the effect of a manipulated condition on a specific group compared to the effect of its absence on another group (e.g., Cook et al., 2002; Seel, 2012); it has been used in most of the DA studies mentioned above. The study included three stages: (a) pre-testing of both the DA and the control group in a group setting, (b) individual mediation with the DA group students, and (c) post-testing of both groups in a group setting. Both pre- and post-testing were administered under the supervision of the researchers. Each test completion required approximately 40 min and students were allowed to ask questions in an unstressed and uncompetitive atmosphere.

The mediation was designed in accordance with the general procedural guidelines for conducting DA as they appear in Haywood and Lidz (2007) and was executed in three steps for each task. Each step included graduated prompting adjusted and modified according to the individual responses of each child in the pre-test tasks. It took place individually with every participant of the DA group in two phases, one for the abstract stimulation and another for the concrete. Each phase required approximately 20 min.

The first step concerning the linguistic understanding of the requirements of each task consisted of three prompts used for all four tasks. It included the following prompts:

Prompt 1.1: Are there any words or phrases that you do not understand in the task instruction?

Prompt 1.2: Which words do you believe will help you think about what to do?

Prompt 1.3: Can you repeat in your own words the task instruction?

The second step in the mediation aimed at the acknowledgment of familiar concepts and ways of coping with the task demands. In divergent thinking it aimed at acknowledging students’ responses to the stimuli and ways of producing multiple new ones. The prompts for the divergent thinking tasks of EPoC were:

Prompt 2.1: Give a name for each one of your drawings.

Prompt 2.2: Can you put your drawings into groups? For example toys, fruit, etc.

Prompt 2.3: What other drawings can you make? Can you think of other groups for your drawings? Try to think of as many as you can.

In convergent thinking the second step aimed at acknowledging the stimuli students chose to use, as well as at using more stimuli in original and integrative ways. The prompts for the convergent thinking tasks (second and fourth) of EPoC were:

Prompt 2.1: Name the pictures of the card that you chose and sketch on your drawing. What did you draw?

Prompt 2.2: Do you think that you could use more pictures of the card? How could you use more pictures in your drawing?

Prompt 2.3: Can you think of any other ideas for how those pictures could be used in your drawing? Do you think that your drawing is different from those of your classmates?

The third step aimed at the organization of thinking processes, techniques and methods. It included two prompts that were identical for all tasks, while the third prompt was different for divergent and convergent tasks.

Prompt 3.1: Describe your thoughts for making these drawings before our discussion.

Prompt 3.2: After our discussion, do you think that there is another way to make your drawings? Describe how.

The last prompt for the divergent thinking tasks was:

Prompt 3.3: How do you think that you could draw as many different drawings as possible?

And the final prompt for convergent thinking tasks:

Prompt 3.4: How do you think that you could use and combine as many pictures as you can in your drawing and make it different from your classmates’?

During the mediation process students cooperated and actively interacted with the assessor.
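The three-step sequence described above can be summarized as a small lookup structure. The following Python sketch is illustrative only: the prompt numbers come from the text, whereas the names `PROTOCOL` and `prompts_for` and the labels `shared`, `divergent` and `convergent` are assumptions introduced here. The graduated adjustment of prompts to each child's pre-test responses is not modeled.

```python
# Hypothetical encoding of the three-step mediation. Only the prompt
# numbers come from the text; names and structure are assumptions.
SHARED = "shared"          # same wording for every task
DIVERGENT = "divergent"    # wording specific to divergent tasks
CONVERGENT = "convergent"  # wording specific to convergent tasks

PROTOCOL = {
    1: {SHARED: ["1.1", "1.2", "1.3"]},
    2: {DIVERGENT: ["2.1", "2.2", "2.3"],
        CONVERGENT: ["2.1", "2.2", "2.3"]},
    3: {SHARED: ["3.1", "3.2"],
        DIVERGENT: ["3.3"],
        CONVERGENT: ["3.4"]},
}


def prompts_for(task_type):
    """Return (step, prompt number, wording variant) in delivery order."""
    if task_type not in (DIVERGENT, CONVERGENT):
        raise ValueError(f"unknown task type: {task_type}")
    sequence = []
    for step in sorted(PROTOCOL):
        variants = PROTOCOL[step]
        # Shared prompts come first, then the task-specific ones.
        for variant in (SHARED, task_type):
            for number in variants.get(variant, []):
                sequence.append((step, number, variant))
    return sequence
```

Calling `prompts_for("convergent")` lists nine prompts ending with Prompt 3.4, while `prompts_for("divergent")` ends with Prompt 3.3.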

Results

Initial Statistics

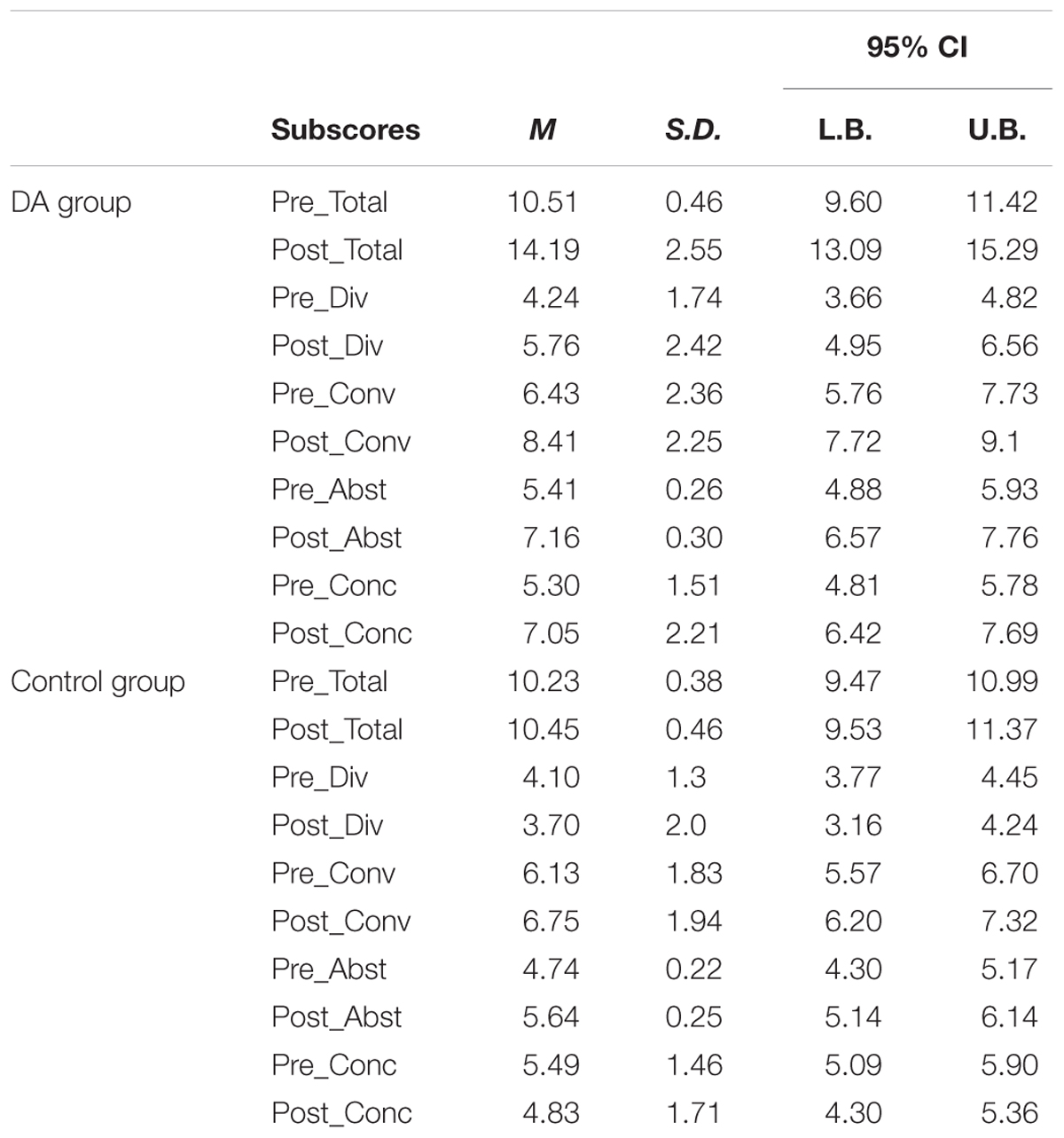

Table 2 shows the descriptive statistics of the DA and control group participants’ scores in the total pre-test and post-test of EPoC (Pre_Total and Post_Total). The subscores include the pre- and post-divergent creative thinking processes (Pre_Div and Post_Div), the pre- and post-convergent creative thinking processes (Pre_Conv and Post_Conv), the pre- and post-subscores of tasks with abstract stimuli (Pre_Abst and Post_Abst) and the pre- and post-subscores of tasks with concrete stimuli (Pre_Conc and Post_Conc), called abstract and concrete creative thinking in the rest of the paper. Total EPoC scores represent the sum of the divergent and convergent thinking subscores. The abstract and the concrete creative thinking scores were calculated by summing the scores on the abstract and concrete stimulation tasks, respectively.
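The composite scores described above can be sketched as follows. The sketch assumes one score per task for the four tasks (divergent-abstract, divergent-concrete, convergent-abstract, convergent-concrete); the function name, argument names and example values are hypothetical and are not taken from the data.

```python
def composite_scores(div_abst, div_conc, conv_abst, conv_conc):
    """Combine four task scores into the subscores named in Table 2.

    Assumes one (standardized) score per task; the argument names
    are illustrative, not EPoC terminology.
    """
    div = div_abst + div_conc      # divergent thinking subscore
    conv = conv_abst + conv_conc   # convergent thinking subscore
    return {
        "Div": div,
        "Conv": conv,
        "Abst": div_abst + conv_abst,  # tasks with abstract stimuli
        "Conc": div_conc + conv_conc,  # tasks with concrete stimuli
        "Total": div + conv,           # sum of divergent and convergent
    }


# Made-up task scores for one student.
scores = composite_scores(3, 4, 5, 2)
```

Under this scheme the total also equals the sum of the abstract and concrete subscores, since both decompositions cover the same four tasks.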

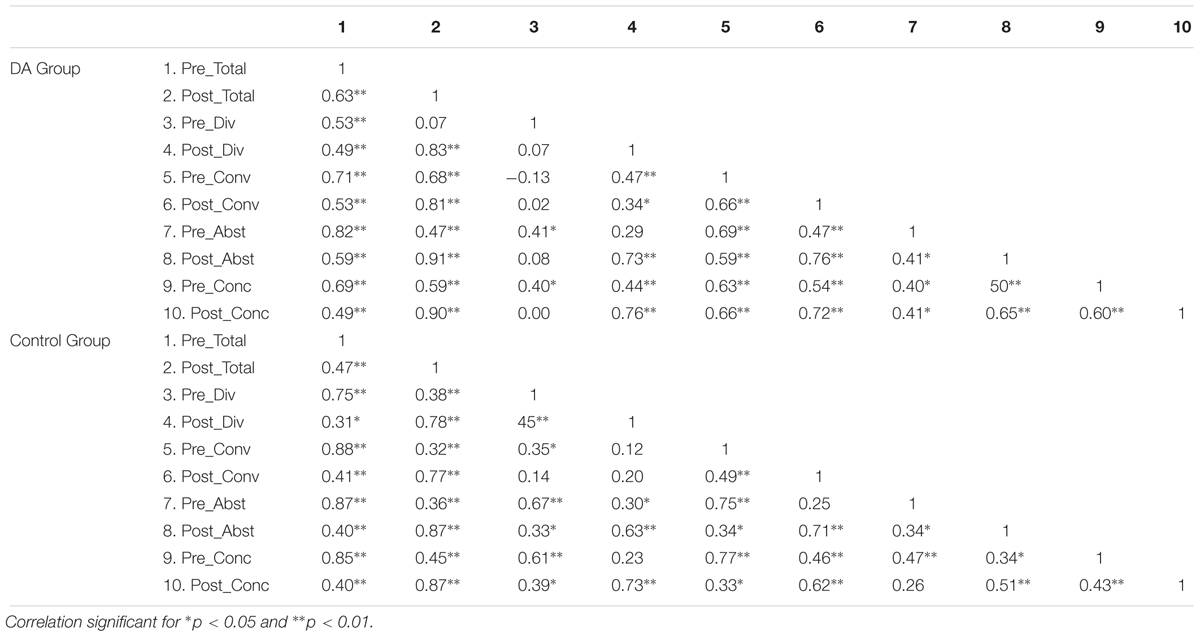

The correlations among all scores in the pre- and post-test of the DA group are presented in Table 3.

It is worth noting that the correlations between the pre- and post-test in most subscores are moderate.

Effect of Mediation

In order to investigate the effect of mediation on the total score and the subscores of EPoC, a series of analyses of variance (ANOVA) was carried out. First, the interaction effect of time × group was investigated by a two-way mixed-design ANOVA with time (pre- and post-testing) as a within-subjects factor and group (DA group and control group) as a between-subjects factor. A series of Levene’s tests showed that the variances of the two groups were equal for both the pre-test [F (1, 68) = 1.48, p > 0.05] and the post-test [F (1, 68) = 2.82, p > 0.05] of total EPoC score, the pre-test [F (1, 88) = 3.16, p > 0.05] and the post-test [F (1, 88) = 1.91, p > 0.05] of divergent thinking, the pre-test [F (1, 88) = 0.08, p > 0.05] and the post-test [F (1, 88) = 0.01, p > 0.05] of abstract thinking and the pre-test [F (1, 88) = 0.01, p > 0.05] and the post-test [F (1, 88) = 3.97, p = 0.05] of concrete thinking, while they were unequal for the pre-test [F (1, 88) = 4.76, p < 0.05] of convergent thinking but equal for the post-test [F (1, 88) = 1.93, p > 0.05] of convergent thinking.
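For readers who wish to reproduce a homogeneity-of-variance check of this kind, a minimal sketch of Levene's statistic follows. It assumes the mean-centred variant (the text does not state which variant was used) and returns only the F value and its degrees of freedom, not the p value. The function name `levene_f` is hypothetical.

```python
from statistics import fmean


def levene_f(*groups):
    """Mean-centred Levene statistic with its degrees of freedom.

    Equivalent to a one-way ANOVA on the absolute deviations of each
    score from its own group mean.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    devs = []
    for g in groups:
        centre = fmean(g)
        devs.append([abs(x - centre) for x in g])
    grand = fmean([d for grp in devs for d in grp])
    # Between-groups and within-groups sums of squares of the deviations.
    between = sum(len(grp) * (fmean(grp) - grand) ** 2 for grp in devs)
    within = sum((d - fmean(grp)) ** 2 for grp in devs for d in grp)
    f_stat = (between / (k - 1)) / (within / (n_total - k))
    return f_stat, (k - 1, n_total - k)
```

With two groups of 37 and 53 participants the function returns degrees of freedom of (1, 88), which matches the form of most of the tests reported above.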

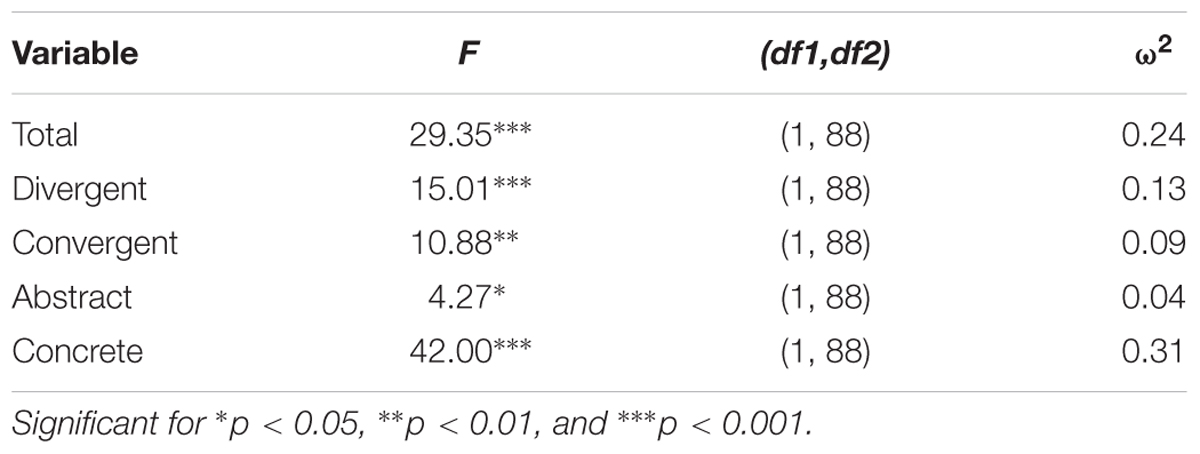

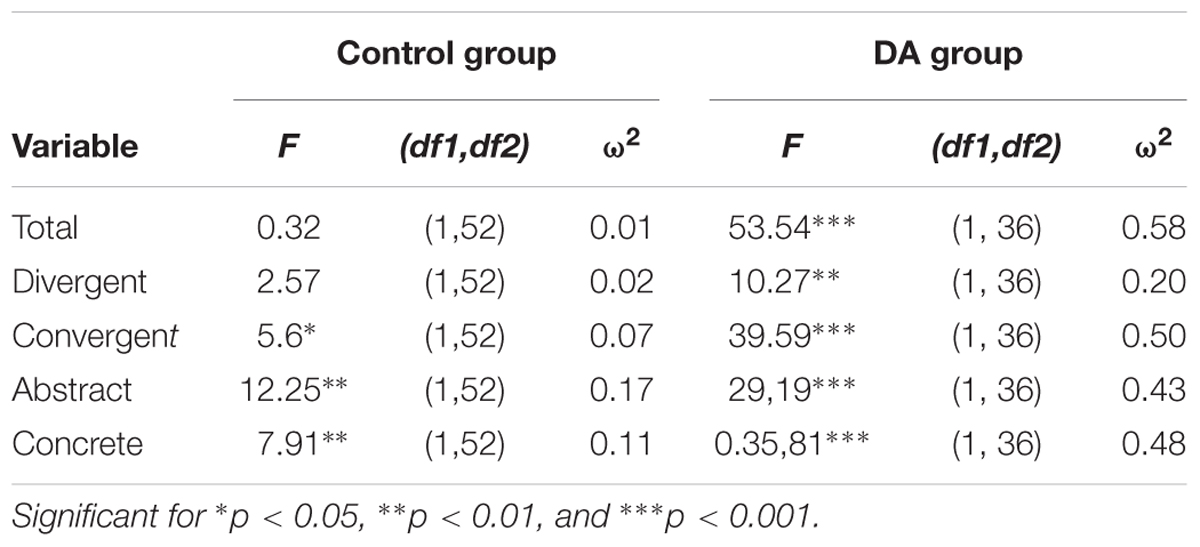

As seen in Table 4, the interaction effect of time by group was found to be statistically significant for the total score and for all EPoC subscores, implying that the mediation implemented on the DA group had a significant effect.
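With only two measurement occasions, the time × group interaction of a mixed-design ANOVA is equivalent to an independent-samples comparison of gain scores (post-test minus pre-test), with F equal to the squared pooled-variance t statistic. The sketch below relies on that equivalence; the function name and the toy data are hypothetical and are not the study's data.

```python
from statistics import fmean, variance


def interaction_f(pre_a, post_a, pre_b, post_b):
    """F for the time x group interaction in a 2 x 2 mixed design.

    Computed as the squared pooled-variance t statistic on gain scores,
    which is equivalent when there are exactly two time points.
    """
    gains_a = [post - pre for pre, post in zip(pre_a, post_a)]
    gains_b = [post - pre for pre, post in zip(pre_b, post_b)]
    n_a, n_b = len(gains_a), len(gains_b)
    df_error = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(gains_a)
              + (n_b - 1) * variance(gains_b)) / df_error
    t = (fmean(gains_a) - fmean(gains_b)) / (pooled * (1 / n_a + 1 / n_b)) ** 0.5
    return t ** 2, (1, df_error)


# Toy data: group A gains more than group B between pre- and post-test.
f_stat, df = interaction_f([1, 2, 3], [3, 5, 4], [1, 2, 3], [1, 3, 3])
```

For samples of 37 and 53 the error degrees of freedom would be 88.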

In order to explore the source of this interaction, we first investigated the main effect of time on each group separately by means of repeated measures analysis of variance (RM ANOVA) with pre- and post-scores as a within-subjects factor.

As seen in Table 5, for the DA group the within-subjects effect of time was significant for all measured variables. From the above analysis it appears that time had a large effect on the total EPoC score as well as on the subscores of convergent, divergent, abstract and concrete thinking. For the control group, the analysis showed that the subscores of post-test abstract and convergent thinking improved significantly over time, yet with a small effect size, while a non-significant improvement over time was observed in total EPoC and divergent thinking. The subscores of divergent and concrete thinking had a drop in post-test compared with the pre-test (see Table 2). In sum, the results of this analysis demonstrated that mediation had a large significant effect on all subscores.
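For a single group measured at two time points, the RM ANOVA F for time equals the squared paired-samples t statistic, and partial eta squared can be recovered as F / (F + df_error). The sketch below illustrates this relationship with made-up scores; the function name is hypothetical, and the choice of partial eta squared as the effect size index is an assumption, since the text does not specify which index appears in Table 5.

```python
from statistics import fmean, stdev


def time_effect(pre, post):
    """Within-group effect of time for two measurement occasions.

    Returns F, its error degrees of freedom and partial eta squared.
    """
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / n ** 0.5)  # paired-samples t
    f_stat = t ** 2
    df_error = n - 1
    eta_p2 = f_stat / (f_stat + df_error)
    return f_stat, df_error, eta_p2


# Made-up pre- and post-test scores for four students.
f_stat, df_error, eta_p2 = time_effect([1, 2, 3, 4], [2, 4, 4, 6])
```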

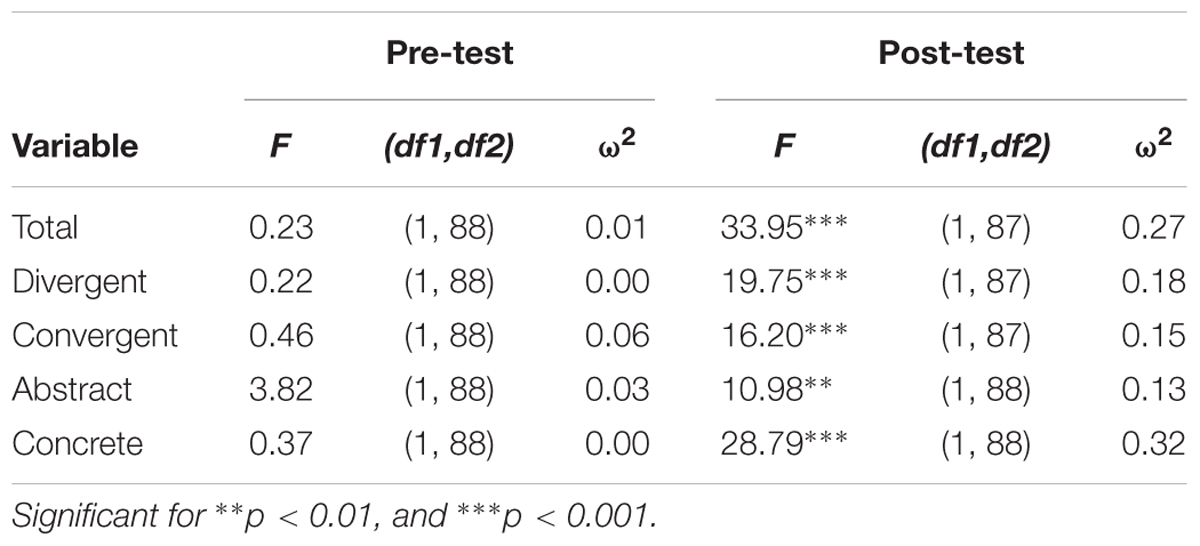

Finally, in order to investigate the main effect of group, univariate ANOVAs were conducted with the pre-test scores as the dependent variables and group as the independent variable. In addition, univariate ANCOVAs were carried out with the post-test scores as the dependent variables, group as a fixed factor and the pre-test score as a covariate.
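The logic of an ANCOVA with one covariate and two groups can be sketched as follows: the post-test difference between groups is adjusted by the pooled within-group slope of post-test on pre-test, and the F for group compares the residual sums of squares of the models with and without the group factor. The sketch assumes equal slopes in both groups; the function names and the data are hypothetical.

```python
from statistics import fmean


def _sums(x, y):
    """Means plus centred sums of squares and cross-products."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return mx, my, sxx, syy, sxy


def ancova_group_effect(pre_a, post_a, pre_b, post_b):
    """Adjusted group difference and F for group, covarying pre-test."""
    mxa, mya, sxx_a, syy_a, sxy_a = _sums(pre_a, post_a)
    mxb, myb, sxx_b, syy_b, sxy_b = _sums(pre_b, post_b)
    sxx_w, syy_w, sxy_w = sxx_a + sxx_b, syy_a + syy_b, sxy_a + sxy_b
    slope = sxy_w / sxx_w  # pooled within-group slope
    adjusted_diff = (mya - myb) - slope * (mxa - mxb)
    ss_full = syy_w - sxy_w ** 2 / sxx_w  # residual SS with group
    _, _, sxx_t, syy_t, sxy_t = _sums(list(pre_a) + list(pre_b),
                                      list(post_a) + list(post_b))
    ss_reduced = syy_t - sxy_t ** 2 / sxx_t  # residual SS without group
    df_error = len(pre_a) + len(pre_b) - 3
    f_stat = (ss_reduced - ss_full) / (ss_full / df_error)
    return adjusted_diff, f_stat, (1, df_error)


# Made-up scores: identical pre-tests, group A about 3 points higher after.
pre = [1, 2, 3, 4]
diff, f_stat, df = ancova_group_effect(pre, [4.1, 4.9, 5.9, 7.1],
                                       pre, [0.9, 2.1, 3.1, 3.9])
```

When the two groups have the same pre-test mean, as in the toy data, the adjusted difference reduces to the raw difference in post-test means.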

As demonstrated in Table 6, a non-significant between-subjects effect of group was observed in the pre-test scores of all measured variables, whereas a significant effect of group was observed in the post-test of all variables. Specifically, it appears that there was a large effect size in total score and concrete thinking, while a moderate effect size was found in divergent, convergent and abstract thinking. In conclusion, the two groups’ static scores as they appear in the pre-test did not differ significantly, while the DA group’s post-test scores were significantly improved, demonstrating the difference DA can make.

Discussion

In general, according to the results, DA appeared to be a better indicator of students’ CP, which can assist subsequent decisions in instruction. In particular, the correlations among subscores reflected the construct validity of EPoC and the correlations between test and retest supported the reliability of EPoC (Barbot et al., 2015). Nevertheless, the finding that the pre- and post-test correlations of the DA group were not very strong, with the variance explained ranging from 4.9 to 43.5%, indicated that the mediation effect varied among students; in other words, not all students gained the same benefit from DA.
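Read as squared correlations, the percentages of explained variance reported above correspond to the following range of pre-post correlation magnitudes. This is a back-calculation from the figures in the text, not a set of values taken from Table 3:

```latex
r^2 = 0.049 \;\Rightarrow\; |r| = \sqrt{0.049} \approx 0.22,
\qquad
r^2 = 0.435 \;\Rightarrow\; |r| = \sqrt{0.435} \approx 0.66
```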

Overall, the ANOVAs demonstrated the effectiveness of mediation. The non-significant differences between the groups in pre-testing showed that the two groups were of equal CP at the starting point of the study. The significant but small effect size of time on the control group’s post-test total score as well as the disparity in the effect of time on the individual subscores implied that the pre-testing experience may have had a limited impact on the control group’s post-test scores. However, the most important finding of the study was the significant effect of mediation on all variables examined. This is in line with past research regarding inductive (analogical) reasoning and problem solving (e.g., Wang, 2010; Resing et al., 2017a,b). The present study provided evidence that personalized instruction and interaction between the assessor and the assessee may enhance not only the development of children’s inductive reasoning but also their creative thinking skills.

The reason for the large effect sizes of improvement needs further consideration. Previous studies in other cognitive domains have demonstrated effect sizes (eta squared) that varied between 0.10 and 0.40 (e.g., Vogelaar and Resing, 2016; Resing et al., 2017b). Given the limited duration of the mediation, such effect sizes are unlikely to reflect any major developments in creative thinking abilities. It is more likely that the effect sizes reflect improvement in children’s understanding of the task demands along with the development of strategies for expressing the required cognitive abilities, as well as an enhancement of motivation-related constructs such as creative self-efficacy. The magnitude of the effect size may also be indicative of Greek students’ lack of experience in creative activities and testing. As mentioned in the sample description, the present sample consisted of primary school children from a socially deprived area of Athens inner city. It is possible that these young students had never been asked to employ divergent thinking or to produce novel ideas, or had never received any feedback on such efforts. In this regard it is likely that they had not fully understood the demands of the tasks and the required cognitive strategies, nor had they been motivated to engage, regardless of the accuracy of the test instructions. It seems therefore that DA of CP could be helpful for revealing the CP of disadvantaged young students, confirming in the area of creativity the finding of numerous previous studies that demonstrated the benefits for disadvantaged students from the application of DA in various cognitive domains (e.g., Resing et al., 2009, 2017b; Navarro and Lara, 2017).

Implications for Practice

Despite its importance, creativity development in schools still remains an unachieved educational goal (e.g., Beghetto and Kaufman, 2014), partially due to the lack of an assessment method that identifies students’ CP and facilitates the setting of the aims and the content of specific educational interventions and teaching decision making towards creativity development (Kaufman et al., 2009; Barbot et al., 2015). For this purpose, the static use of EPoC allows the creation of students’ personal CP profiles, which can be of great assistance for assessing and nurturing creativity. In the light of the findings of the present study, we argue that students’ personal profiles based on the results obtained from DA of CP would be more informative for designing educational programs aiming at creativity nurturing, especially for children from disadvantaged backgrounds. This is because conclusions based on the results of static measurement may be misleading for educators, as they may interpret low student scores in creativity as lack of or low CP and consequently trigger the vicious circle of low expectations, which may lead to low achievements and so on.

Furthermore, students may interpret low scores as lack of creative ability, which could in turn result in low creative self-efficacy, low goal setting and maladaptive motivation in general. A profile of CP created with DA of CP using EPoC would offer teachers much more informed and beneficial facts for the nurturing of creativity. A profile of CP which includes the development in creativity dimensions together with the experience of the interaction between the assessor and the assessee during the process of mediation would provide qualitative information about a series of factors that may have hindered the expression of CP, such as misunderstandings, motivation, etc.

Limitations and Directions for Future Research

The present study can only be characterized as preliminary in the study of DA for the assessment of CP. Due to the limited size of the sample the results cannot be generalized to the student population, and further research is needed with more students from different cultural and educational backgrounds as well as students of different age or cognitive ability. The data collected did not allow conclusions about the characteristics of the students that benefitted more from mediation, which needs further research. Further research should also be conducted in order to investigate the effect of DA in other domains such as linguistic or mathematical creativity. Moreover, the transfer of improvement in creative thinking from one domain of creativity to different domains needs to be studied. In addition, it would be useful to investigate DA using other CP tests to examine the effect of mediation on them. Finally, an “active control group” in addition to the control group could be used in a quasi-experimental design in the future. The “active control group” could receive mediation aiming at developing a different cognitive ability unrelated to creativity, such as memory. Comparisons among the post-scores of all groups would provide more valid information on the effectiveness of mediation in enhancing creativity.

Despite its limitations, we believe that the importance of our study lies in its implications for creativity assessment and real classroom practice. As this study demonstrated, static assessment of CP cannot fully depict children’s CP, while DA provides a clearer portrait which can be utilized especially in education.

Author Contributions

Both authors contributed substantially to the conception or design of the work, to the acquisition, analysis or interpretation of data for the work, and to drafting the work or revising it critically for important intellectual content. In addition, they both provided approval for publication of the content and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Barbot, B., Besançon, M., and Lubart, T. (2015). Creative potential in educational settings: its nature, measure, and nurture. Education 3-13 43, 371–381. doi: 10.1080/03004279.2015.1020643

Barbot, B., Besançon, M., and Lubart, T. (2016). The generality-specificity of creativity: exploring the structure of creative potential with EPoC. Learn. Ind. Diff. 52(Suppl. C), 178–187. doi: 10.1016/j.lindif.2016.06.005

Barbot, B., Besançon, M., and Lubart, T. I. (2011). Assessing creativity in the classroom. Open Educ. J. 4, 58–66. doi: 10.2174/1874920801104010058

Beghetto, R. A., and Kaufman, J. C. (2014). Classroom contexts for creativity. High Abil. Stud. 25, 53–69. doi: 10.1080/13598139.2014.905247

Bennett, R. E. (2011). Formative assessment: a critical review. Assess. Educ. Princ. Policy Pract. 18, 5–25. doi: 10.1080/0969594X.2010.513678

Besançon, M., and Lubart, T. (2008). Differences in the development of creative competencies in children schooled in diverse learning environments. Learn. Ind. Diff. 18, 381–389. doi: 10.1016/j.lindif.2007.11.009

Besançon, M., Lubart, T., and Barbot, B. (2013). Creative giftedness and educational opportunities. Educ. Child Psychol. 30, 79–88. doi: 10.1177/1529100611418056

Binkley, M., Erstad, O., Herman, J., Raizen, S., Ripley, M., Miller-Ricci, M., et al. (2012). “Defining twenty-first century skills,” in Assessment and Teaching of 21st Century Skills, eds P. Griffin, B. McGaw, and E. Care (Dordrecht: Springer), 17–66. doi: 10.1007/978-94-007-2324-5_2

Black, P., Harrison, C., Hodgen, J., Marshall, B., and Serret, N. (2010). Validity in teachers’ summative assessments. Assess. Educ. Princ. Policy Pract. 17, 215–232. doi: 10.1080/09695941003696016

Black, P., and Wiliam, D. (2009). Developing the theory of formative assessment. Educ. Assess. Eval. Account. (formerly: J. Pers. Eval. Educ.) 21:5. doi: 10.1007/s11092-008-9068-5

Campione, J. C., Brown, A. L., Ferrara, R. A., Jones, R. S., and Steinberg, E. (1985). Breakdowns in flexible use of information: intelligence-related differences in transfer following equivalent learning performance. Intelligence 9, 297–315. doi: 10.1016/0160-2896(85)90017-0

Coffey, J. E., Hammer, D., Levin, D. M., and Grant, T. (2011). The missing disciplinary substance of formative assessment. J. Res. Sci. Teach. 48, 1109–1136. doi: 10.1002/tea.20440

Cook, T. D., Campbell, D. T., and Shadish, W. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin.

Elliott, J. (2003). Dynamic assessment in educational settings: realising potential. Educ. Rev. 55, 15–32. doi: 10.1080/00131910303253

Feuerstein, R., and Jensen, M. R. (1980). Instrumental enrichment: theoretical basis, goals, and instruments. Educ. Forum 44, 401–423. doi: 10.1080/00131728009336184

Feuerstein, R., Klein, P. S., and Tannenbaum, A. J. (1991). Mediated Learning Experience (MLE): Theoretical, Psychosocial and Learning Implications. Tel Aviv: Freund Publishing House Ltd.

Feuerstein, R., Rand, Y., and Hoffman, M. B. (1981). The dynamic assessment of retarded performers: the learning potential assessment device, theory, instruments and techniques. Int. J. Rehabil. Res. 4, 465–466. doi: 10.1097/00004356-198109000-00035

Feuerstein, R., Rand, Y. A., Hoffman, M., Hoffman, M., and Miller, R. (1979). Cognitive modifiability in retarded adolescents: effects of instrumental enrichment. Am. J. Ment. Defic. 83, 539–550.

Fisher, D., and Frey, N. (2015). Checking for Understanding: Formative Assessment Techniques for Your Classroom, 2nd Edn. Alexandria: ASCD.

Fletcher, J. M., Lyon, G. R., Fuchs, L. S., and Barnes, M. A. (2018). Learning Disabilities: From Identification to Intervention. New York, NY: Guilford Publications.

Grigorenko, E. L. (2009). Dynamic assessment and response to intervention: two sides of one coin. J. Learn. Disabil. 42, 111–132. doi: 10.1177/0022219408326207

Gustafson, S., Svensson, I., and Fälth, L. (2014). Response to intervention and dynamic assessment: implementing systematic, dynamic and individualised interventions in primary school. Int. J. Disabil. Dev. Educ. 61, 27–43. doi: 10.1080/1034912X.2014.878538

Haywood, H. C., and Lidz, C. S. (2007). Dynamic Assessment in Practice: Clinical and Educational Applications. Cambridge, NY: Cambridge University Press.

Haywood, H. C., and Tzuriel, D. (2002). Applications and challenges in dynamic assessment. Peabody J. Educ. 77, 40–63. doi: 10.1207/S15327930PJE7702_5

Kaufman, J. C., Kaufman, S. B., Beghetto, R. A., Burgess, S. A., and Persson, R. S. (2009). “Creative giftedness: beginnings, developments, and future promises,” in International Handbook on Giftedness, ed. L. V. Shavinina (Dordrecht: Springer), 585–598. doi: 10.1007/978-1-4020-6162-2_28

Kaufman, J. C., Plucker, J. A., and Baer, J. (2008). Essentials of Creativity Assessment. Hoboken, NJ: John Wiley & Sons.

Kaufman, J. C., Plucker, J. A., and Russell, C. M. (2012). Identifying and assessing creativity as a component of giftedness. J. Psychoeducat. Assess. 30, 60–73. doi: 10.1177/0734282911428196

Kaufman, J. C., and Sternberg, R. J. (2007). Resource review: creativity. Change 39, 55–58. doi: 10.3200/CHNG.39.4.55-C4

Kim, K. H. (2006). Can we trust creativity tests? A review of the Torrance tests of creative thinking (TTCT). Creat. Res. J. 18, 3–14. doi: 10.1207/s15326934crj1801_2

Lubart, T., Besançon, M., and Barbot, B. (2012). EPOC Evaluation of Potential Creativity (English Version). Paris: Hogrefe.

Lubart, T., Zenasni, F., and Barbot, B. (2013). Creative potential and its measurement. Int. J. Talent Dev. Creat. 1, 41–51.

Navarro, J.-J., and Lara, L. (2017). Dynamic assessment of reading difficulties: predictive and incremental validity on attitude toward reading and the use of dialogue/participation strategies in classroom activities. Front. Psychol. 8:173. doi: 10.3389/fpsyg.2017.00173

Newton, P. E. (2007). Clarifying the purposes of educational assessment. Assess. Educ. Princ. Policy Pract. 14, 149–170. doi: 10.1080/09695940701478321

Poehner, M. E. (2008). Dynamic Assessment: A Vygotskian Approach to Understanding and Promoting L2 Development. Boston, MA: Springer.

Poehner, M. E., and Lantolf, J. P. (2013). Bringing the ZPD into the equation: capturing L2 development during computerized dynamic assessment (C-DA). Lang. Teach. Res. 17, 323–342. doi: 10.1177/1362168813482935

Resing, W. (2013). Dynamic testing and individualized instruction: helpful in cognitive education? J. Cognit. Educ. Psychol. 12, 81–95. doi: 10.1891/1945-8959.12.1.81

Resing, W., Bakker, M., Pronk, C. M. E., and Elliott, J. G. (2017a). Progression paths in children’s problem solving: the influence of dynamic testing, initial variability, and working memory. J. Exp. Child Psychol. 153, 83–109. doi: 10.1016/j.jecp.2016.09.004

Resing, W., Touw, K. W. J., Veerbeek, J., and Elliott, J. G. (2017b). Progress in the inductive strategy-use of children from different ethnic backgrounds: a study employing dynamic testing. Educ. Psychol. 37, 173–191. doi: 10.1080/01443410.2016.1164300

Resing, W., Tunteler, E., De Jong, F. J., and Bosma, T. (2009). Dynamic testing in indigenous and ethnic minority children. Learn. Ind. Diff. 19, 445–450. doi: 10.1891/1945-8959.14.2.231

Runco, M. A. (2003). Education for creative potential. Scand. J. Educ. Res. 47, 317–324. doi: 10.1080/00313830308598

Runco, M. A., Millar, G., Acar, S., and Cramond, B. (2010). Torrance tests of creative thinking as predictors of personal and public achievement: a fifty-year follow-up. Creat. Res. J. 22, 361–368. doi: 10.1080/10400419.2010.523393

Russell, M. K., and Airasian, P. W. (2011). Classroom Assessment: Concepts and Applications, 7th Edn. New York, NY: McGraw-Hill Education.

Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instruct. Sci. 18, 119–144. doi: 10.1007/bf00117714

Seel, N. M. (2012). “Design experiments,” in Encyclopedia of the Sciences of Learning, ed. N. M. Seel (Boston, MA: Springer), 925–928. doi: 10.1007/978-1-4419-1428-6_913

Sternberg, R. J., and Grigorenko, E. L. (2002). Dynamic Testing: The Nature and Measurement of Learning Potential. Cambridge: Cambridge University Press.

Stiggins, R. (2005). From formative assessment to assessment for learning: a path to success in standards-based schools. Phi Delta Kappan 87, 324–328. doi: 10.1177/003172170508700414

Torrance, E. P. (1972). Predictive validity of the Torrance tests of creative thinking. J. Creat. Behav. 6, 236–262. doi: 10.1002/j.2162-6057.1972.tb00936.x

Torrance, E. P. (1988). “The nature of creativity as manifest in its testing,” in The Nature of Creativity: Contemporary Psychological Perspectives, ed. R. J. Sternberg (New York, NY: Cambridge University Press), 43–75.

Vogelaar, B., and Resing, W. C. (2016). Gifted and average-ability children’s progression in analogical reasoning in a dynamic testing setting. J. Cognit. Educ. Psychol. 15:349. doi: 10.1891/1945-8959.15.3.349

Walberg, H. J. (1988). The Nature of Creativity: Contemporary Psychological Perspectives, ed. R. J. Sternberg. Cambridge: Cambridge University Press.

Wallach, M. A., and Kogan, N. (1965). A new look at the creativity-intelligence distinction. J. Pers. 33, 348–369. doi: 10.1111/j.1467-6494.1965.tb01391.x

Wang, T.-H. (2010). Web-based dynamic assessment: taking assessment as teaching and learning strategy for improving students’ e-Learning effectiveness. Comput. Educ. 54, 1157–1166. doi: 10.1016/j.compedu.2009.11.001

Weisberg, R. W. (2015). On the usefulness of ‘Value’ in the definition of creativity. Creat. Res. J. 27, 111–124. doi: 10.1080/10400419.2015.1030320

Wyse, D., and Ferrari, A. (2015). Creativity and education: comparing the national curricula of the states of the European Union and the United Kingdom. Br. Educ. Res. J. 41, 30–47. doi: 10.1002/berj.3135

Yorke, M. (2003). Formative assessment in higher education: moves towards theory and the enhancement of pedagogic practice. Higher Educ. 45, 477–501. doi: 10.1023/a:1023967026413

Keywords: creative potential, dynamic assessment, mediation, graduated prompting, primary education

Citation: Zbainos D and Tziona A (2019) Investigating Primary School Children’s Creative Potential Through Dynamic Assessment. Front. Psychol. 10:733. doi: 10.3389/fpsyg.2019.00733

Received: 19 November 2018; Accepted: 15 March 2019; Published: 05 April 2019.

Edited by:

Meryem Yilmaz Soylu, University of Nebraska–Lincoln, United States

Reviewed by:

Eva Viola Hoff, Lund University, Sweden

Maciej Karwowski, University of Wrocław, Poland

Copyright © 2019 Zbainos and Tziona. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dimitrios Zbainos, zbainos@hua.gr
