- Anhui Engineering Laboratory of Human-Robot Integration System and Intelligent Equipment, Key Laboratory of Intelligent Computing and Signal Processing of Ministry of Education, School of Electrical Engineering and Automation, Anhui University, Hefei, China

The Group Sparse Representation (GSR) model shows excellent potential in various image restoration tasks. In this study, we propose a novel Multi-Scale Group Sparse Residual Constraint Model (MS-GSRC) which can be applied to various inverse problems, including denoising, inpainting, and compressed sensing (CS). Our method involves three steps: (1) finding similar patches, with an overlapping scheme, for the input degraded image using a multi-scale strategy; (2) performing group sparse coding on these patches with low-rank constraints to obtain an initial representation vector; and (3) under the Bayesian maximum a posteriori (MAP) restoration framework, adopting an alternating minimization scheme to solve the corresponding equation and reconstruct the target image. Simulation experiments demonstrate that our proposed model outperforms several state-of-the-art methods in terms of both objective image quality and subjective visual quality.

1. Introduction

Imperfect equipment and other disturbances unavoidably introduce noise into target images. Image denoising is a crucial area of image processing and has recently attracted much attention from scholars in related fields. Digital image denoising techniques have a wide range of uses in medicine and industry, as well as in spectral images for weather forecasting, remote sensing images, and so on. Taking image denoising as a basis, the method can be extended to more image restoration problems and be useful in more fields (Buades et al., 2005; Osher et al., 2005; Elad and Aharon, 2006; Zoran and Weiss, 2011; Gu et al., 2014; Zhang et al., 2014b; Liu et al., 2017; Keshavarzian et al., 2019; Ou et al., 2020; Zha et al., 2020a, 2022; Jon et al., 2021). This task aims to recover a latent image x from its degraded version y. The degradation process can be modeled as

$$y = Hx + n \qquad (1)$$

where H is a linear degradation operator in matrix form (generally non-invertible) and n is the additive white Gaussian noise vector. By specifying H, Eq.(1) can be converted to diverse image restoration problems. For example, Eq.(1) represents the image denoising problem if H is an identity matrix (Elad and Aharon, 2006; Ou et al., 2020); Eq.(1) denotes the image inpainting problem if H is a mask (Liu et al., 2017; Zha et al., 2020a); and Eq.(1) stands for the image CS problem if H is an undersampled random projection matrix (Keshavarzian et al., 2019; Zha et al., 2022). We concentrate on image denoising, inpainting, and CS challenges in this article.
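The three choices of H can be illustrated with a minimal NumPy sketch. It works on a short vectorized toy signal rather than a real image, and the names `degrade`, `H_denoise`, `H_inpaint`, and `H_cs`, as well as the sizes and noise levels, are illustrative assumptions rather than settings from this study.

```python
import numpy as np

def degrade(x, H, sigma, rng):
    """Return y = H x + n, with n white Gaussian noise of standard deviation sigma."""
    n = rng.normal(0.0, sigma, size=H.shape[0])
    return H @ x + n

rng = np.random.default_rng(0)
N = 64                               # length of the vectorized toy image (assumed)
x = rng.uniform(0.0, 255.0, N)       # stand-in for a clean image

# Denoising: H is the identity matrix.
H_denoise = np.eye(N)

# Inpainting: H is a diagonal 0/1 mask (here about half of the pixels are kept).
keep = rng.random(N) > 0.5
H_inpaint = np.diag(keep.astype(float))

# Compressed sensing: H is an undersampled random projection (N/4 measurements).
H_cs = rng.normal(size=(N // 4, N)) / np.sqrt(N // 4)

y_denoise = degrade(x, H_denoise, 10.0, rng)
y_inpaint = degrade(x, H_inpaint, 0.0, rng)
y_cs = degrade(x, H_cs, 0.0, rng)
```

Only the operator changes between the three tasks, which is why a single restoration model can in principle address all of them.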

Given that the problem is always ill-posed, it is common to use image priors to regularize the model so as to obtain high-quality restored images. Namely, the maximum a posteriori (MAP) approach allows the image restoration problem to be formulated as the following minimization problem:

The first term is the data-fidelity term and the second is the image prior constraint term. The balance between these two terms is regulated by the parameter λ. After establishing the mathematical model, we design an optimization algorithm to address various image restoration problems. The method yields a reconstructed image that approximates the clean image after several iterations.
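In generic notation, a regularized objective of the kind described above, with a data-fidelity term followed by a prior term weighted by λ, is usually written as follows. The symbol Ψ for the prior and the factor 1/2 are assumptions made for illustration; they are not taken from this article.

$$\hat{x} = \arg\min_{x} \frac{1}{2}\|y - Hx\|_2^2 + \lambda\,\Psi(x)$$

A larger λ places more trust in the prior, whereas a smaller λ keeps the estimate closer to the observation y.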

Numerous image prior models have been put forward in earlier studies, mainly classified into local smoothness (Rudin et al., 1992; Osher et al., 2005; Dey et al., 2006), non-local self-similarity (Fazel et al., 2001; Buades et al., 2005; Gu et al., 2014), and sparsity (Zhang et al., 2014b; Ou et al., 2020, 2022a). Yet, the curse of dimensionality makes it difficult to construct a global model for the entire image. Therefore, the approach of building patch priors has become popular in recent years for its efficiency and convenience.

Sparse representation is one of the most representative patch-based priors. Elad and Aharon (2006) proposed K-SVD (K-Singular Value Decomposition), a pioneering work in applying sparse coding to image denoising. Non-local self-similarity (NSS) is another widely used prior. Buades et al. (2005) proposed the first model using NSS for image denoising. In addition, because similar patches are highly correlated, the data matrix formed from a clean image is often low-rank. Related studies mainly fall into two categories: low-rank matrix factorization (LRMF) (Srebro and Jaakkola, 2003; Buades et al., 2005) and Nuclear Norm Minimization (NNM) (Fazel et al., 2001; Hu et al., 2012). NNM is the more popular one in most cases. Gu et al. (2014) presented the Weighted Nuclear Norm Minimization (WNNM) model, which dramatically enhances the flexibility of NNM and remains among the most widespread image denoising methods. Apart from this, RRC (Zha et al., 2019), which makes use of low-rank residuals for modeling, has also achieved good quality in various image restoration problems.

Some studies have combined image sparsity and self-similarity for modeling, and these algorithms have shown great potential in image restoration research. For instance, BM3D (Dabov et al., 2007) applies NSS to cluster patches before collaborative filtering and is a benchmark method in image denoising. Both NCSR (Dong et al., 2012b) and GSR (Zhang et al., 2014b) use the NSS property to aggregate image patches into groups and then perform sparse coding on the self-similar groups. Mairal et al. (2009) devised LSSC, which forces all patches in a self-similar group to share the same dictionary. Zha et al. (2017) designed an efficient GSRC model that converts the task of image denoising into one of minimizing group sparse residuals. Building on this, Zha et al. (2020a) proposed the GSRC-NLP model, which achieves better image restoration results.

Another groundbreaking patch-based image recovery method is Expected Patch Log Likelihood (EPLL) (Zoran and Weiss, 2011), which restores images by learning a Gaussian mixture model (GMM). Later, Papyan and Elad (2015) introduced a multi-scale EPLL model, which further improves image restoration performance. Subsequently, image denoising methods using external GMM priors have been widely used. Most of the relevant studies combine an external GMM with internal NSS for modeling, such as PGPD (Xu et al., 2015), PCLR (Chen et al., 2015), and SNSS (Zha et al., 2020b).

In addition to the above methods, deep convolutional neural networks (CNNs) (Zhang et al., 2017; Zhang and Ghanem, 2018) have emerged in recent years, but they require learning from an external database before restoring damaged images.

Considering only the sparsity or only the low-rankness of an image is not comprehensive. Hence, with the aim of obtaining a higher-quality restored image, our study uses the low-rank property of similar groups as a constraint in combination with sparsity to design the model. Furthermore, based on the NSS property, we can find similar patches for a specified patch not only in the single-scale image but also across multiple scales. Finally, we propose a novel Multi-Scale Group Sparse Residual Constraint (MS-GSRC) model with the following innovations:

1. We propose a novel MS-GSRC model that provides a simple yet effective approach for image restoration: find neighbor patches with an overlapping scheme for the input degraded image using a multi-scale strategy and perform a group sparse coding on these similar patches with a low-rank constraint.

2. An alternating minimization mechanism with an automatically tuned parameter scheme is applied to our proposed model, which guarantees a closed-form solution at each step.

3. Our proposed MS-GSRC model is validated on three tasks: denoising, inpainting, and compressed sensing. The model performs competitively in both objective image quality and subjective visual quality compared to several state-of-the-art image restoration methods.

The remainder of this article is organized as follows. In Section 2, after a brief overview of the GSRC framework and low-rank (LR) methods, we introduce the novel MS-GSRC model. Section 3 adopts an alternating minimization scheme with self-adjusting parameters to solve the proposed model. Section 4 presents extensive experimental results that demonstrate the feasibility of our model. The conclusion is presented in Section 5.

2. Models

In this part, we briefly review some relevant knowledge and present our new model.

2.1. Group-based sparse representation

Principles of the GSR model can be described as follows: divide the image into many overlapping patches, find self-similarity groups for each image patch using the NSS property, perform sparse coding for each self-similarity group, and finally reconstruct the image (Dong et al., 2012b; Zha et al., 2020a; Ou et al., 2022a).

Specifically, the image x ∈ ℝM is divided into m overlapping patches xi, i = 1, …, m. Next, for each overlapping patch xi, we use the K-nearest neighbor (KNN) algorithm (Keller et al., 1985; Xie et al., 2016) to select k neighbor patches from a W × W search window to form the group Ki. Subsequently, all patches in Ki are stacked into a data matrix Xi, which contains each element of Ki as a column, i.e., Xi = {xi,1, xi,2, …, xi,k}, where xi,k denotes the k-th similar patch of the i-th group. Each similarity group Xi is then represented sparsely as Xi = DiAi, where Di denotes the dictionary and Ai the group sparse code.
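The grouping step can be sketched as an exhaustive nearest-neighbor search over a local window. This is a minimal illustration under assumptions: the function name `group_similar_patches`, the squared Euclidean distance, and the default sizes are chosen for the example and are not taken from the authors' implementation.

```python
import numpy as np

def group_similar_patches(img, top, left, patch=6, window=30, k=10):
    """Collect the k patches most similar to the reference patch at (top, left).

    Candidates are all overlapping patches whose top-left corner lies inside a
    window x window search region centred on the reference patch. The result is
    a (patch*patch, k) matrix whose columns are vectorized patches, ordered by
    increasing squared Euclidean distance to the reference.
    """
    h, w = img.shape
    ref = img[top:top + patch, left:left + patch].ravel()
    half = window // 2
    r0, r1 = max(0, top - half), min(h - patch, top + half)
    c0, c1 = max(0, left - half), min(w - patch, left + half)

    cands = []
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            cands.append(img[r:r + patch, c:c + patch].ravel())
    cands = np.stack(cands)

    dist = np.sum((cands - ref) ** 2, axis=1)
    order = np.argsort(dist, kind="stable")[:k]
    return cands[order].T

rng = np.random.default_rng(0)
toy = rng.random((40, 40))
X_i = group_similar_patches(toy, 10, 10)   # one group matrix X_i
```

The reference patch has zero distance to itself, so it always appears as the first column of the group.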

Nevertheless, solving the ℓ0-norm minimization problem is NP-hard, so, to make the problem tractable, the sparse code is obtained from the following equation (Zhang et al., 2014b):

It is well known that the clean image x is unavailable in image restoration problems. Thus, we replace x with the degraded image y ∈ ℝM × M. Eq.(3) is then transformed into the problem of recovering the group sparse code Ai from Yi:

The restored group is obtained as X̂i = DiÂi, and the final complete image is obtained by simple averaging of all restored groups.
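The averaging step can be sketched as follows: each restored patch is added back at its position and every pixel is divided by the number of patches covering it. The function name `aggregate_patches` and its argument layout are assumptions made for this example.

```python
import numpy as np

def aggregate_patches(patches, positions, shape, patch):
    """Average overlapping restored patches back into an image.

    patches   : iterable of vectorized patches (length patch*patch each)
    positions : iterable of (row, col) top-left corners, one per patch
    shape     : shape of the output image
    """
    acc = np.zeros(shape)
    weight = np.zeros(shape)
    for p, (r, c) in zip(patches, positions):
        acc[r:r + patch, c:c + patch] += np.asarray(p).reshape(patch, patch)
        weight[r:r + patch, c:c + patch] += 1.0
    # Pixels that no patch covers keep the value 0 instead of dividing by zero.
    return acc / np.maximum(weight, 1.0)

img = np.arange(64, dtype=float).reshape(8, 8)
positions = [(r, c) for r in range(6) for c in range(6)]
patches = [img[r:r + 3, c:c + 3].ravel() for r, c in positions]
restored = aggregate_patches(patches, positions, img.shape, 3)
```

When the patches are exact copies of the image content, as in this usage example, the aggregation reproduces the image.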

2.2. Group sparsity residual constraint

Inspection of the GSR model shows that the closer the computed sparse code A is to B, the group sparse code of the clean image, the better the quality of the final restored image. Consequently, the group sparsity residual constraint (GSRC) (Zha et al., 2017) is defined as R = A − B. Then, Eq.(4) for solving the group sparse coefficients Ai can be converted into:

This model uses BM3D to restore the degraded observation y to an image z. Because BM3D has excellent denoising performance, z can be viewed as a good approximation of the target x. Thus, the group sparsity coefficients Bi can be obtained from z. In the study by Zha et al. (2020a), GSRC-NLP uses NLP before constraining the input image.
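For orientation, the three objectives discussed in Sections 2.1 and 2.2 are commonly written in the GSR and GSRC literature in the form below. The factor 1/2, the Frobenius-norm fidelity term, and the ℓ1 penalty are assumptions based on that literature, not a transcription of Eqs. (3)-(5) of this article.

$$\hat{A}_i = \arg\min_{A_i} \frac{1}{2}\|X_i - D_iA_i\|_F^2 + \lambda\|A_i\|_1 \quad \text{(sparse coding of a clean group)}$$

$$\hat{A}_i = \arg\min_{A_i} \frac{1}{2}\|Y_i - D_iA_i\|_F^2 + \lambda\|A_i\|_1 \quad \text{(clean group replaced by the degraded group)}$$

$$\hat{A}_i = \arg\min_{A_i} \frac{1}{2}\|Y_i - D_iA_i\|_F^2 + \lambda\|A_i - B_i\|_1 \quad \text{(penalty on the residual } R_i = A_i - B_i\text{)}$$

The only change from the second to the third line is the penalty: instead of pulling Ai toward zero, it pulls Ai toward the reference code Bi.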

2.3. Low-rank approximation

According to Gu et al. (2014), Zha et al. (2019), and Zha et al. (2020b), NNM is a popular low-rank approximation method. For a matrix X, define the i-th singular value as σi(X) and the nuclear norm as ‖X‖* = Σiσi(X). The specific problem for X is:

$$\hat{X} = \arg\min_{X} \frac{1}{2}\|Y - X\|_F^2 + \tau\|X\|_* \qquad (6)$$

Equation (6) yields a simple solution: X̂ = USτ(Σ)VT, where Y = UΣVT is the SVD of Y and Sτ(Σ) is a soft-thresholding function (Cai et al., 2010). Namely, Sτ(Σ)ii = max(Σii − τ, 0), where Σii is the i-th diagonal element of Σ.
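The singular value soft-thresholding described above takes only a few lines of NumPy. The sketch assumes the objective is scaled so that the threshold equals τ, as in Cai et al. (2010); the function name `svt` is chosen for this example.

```python
import numpy as np

def svt(Y, tau):
    """Singular value thresholding: U * max(Sigma - tau, 0) * V^T."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    s_thr = np.maximum(s - tau, 0.0)   # shrink each singular value by tau
    return (U * s_thr) @ Vt            # scale columns of U, then recombine

Y = np.diag([5.0, 3.0, 1.0])
X_hat = svt(Y, 2.0)                    # singular values 5, 3, 1 become 3, 1, 0
```

Singular values below τ are set to zero, so the output has lower rank than the input whenever some singular values are small.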

2.4. Multi-scale GSRC

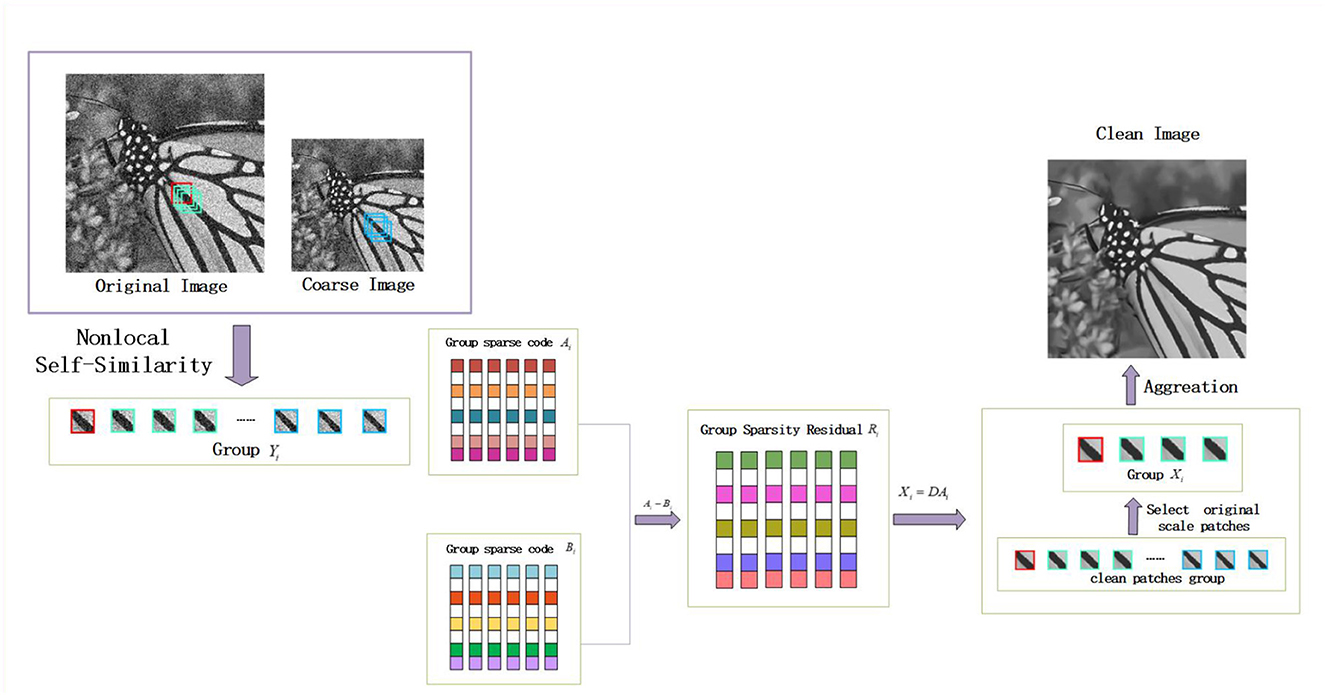

The established GSRC model has performed well in image denoising, but it requires additional pre-processing of degraded images for obtaining the group sparsity coefficients B. Thus, we combine group sparsity and low-rank property to build a model. Furthermore, the GSRC model only focuses on a single scale. However, it is evident that NSS can appear not only on the original scale of an image but also on a coarse scale, so we can find neighbor patches for the original image patch at multi-scales (Yair and Michaeli, 2018; Ou et al., 2022a,b). The specific steps of our proposed new Multi-Scale Group Sparse Residual Constraint (MS-GSRC) model are as follows:

(a) First, we use KNN to find a specified number of similar patches from both the original scale and scaled-down version for the overlapping patches of the input image.

(b) Then, these similar patches are stacked separately into groups.

(c) Next, the low-rank constraint is imposed on each group to obtain good group sparsity coefficients Bi.

(d) After estimating the group sparsity coefficients Ai by using the group sparsity residuals Ri, each group is recovered in sequence.

(e) Finally, we select the patch belonging to the original image from each group, and aggregate the complete image by simple averaging.

We propose the following constraint function:

In this function, the multi-scale similarity group is a matrix containing the k nearest neighbor patches matched to each original image patch. These similar patches are drawn from both the original and coarse scales of the image. The window size is W × W at the original scale and χW × χW at the other scales, where χ denotes the scale factor (0 < χ < 1). χ is set to different values in different experiments.

Taking image denoising as an example, the flowchart of the MS-GSRC model is shown in Figure 1.
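The multi-scale search can be sketched by pooling candidate patches from the original image and from a downscaled copy before selecting the k nearest neighbors. This is a rough illustration under assumptions: nearest-neighbor resampling stands in for a proper image resize, and the helper names `downscale`, `window_patches`, and `multiscale_group` are hypothetical.

```python
import numpy as np

def downscale(img, chi):
    """Nearest-neighbour resampling by a factor chi (crude stand-in for a resize)."""
    h, w = img.shape
    nh, nw = max(1, int(round(h * chi))), max(1, int(round(w * chi)))
    rows = np.minimum((np.arange(nh) / chi).astype(int), h - 1)
    cols = np.minimum((np.arange(nw) / chi).astype(int), w - 1)
    return img[np.ix_(rows, cols)]

def window_patches(img, top, left, patch, window):
    """All vectorized patches whose corner lies in the window around (top, left)."""
    h, w = img.shape
    half = window // 2
    r0, r1 = max(0, top - half), min(h - patch, top + half)
    c0, c1 = max(0, left - half), min(w - patch, left + half)
    return [img[r:r + patch, c:c + patch].ravel()
            for r in range(r0, r1 + 1) for c in range(c0, c1 + 1)]

def multiscale_group(img, top, left, patch=6, window=30, k=10, scales=(1.0, 0.8)):
    """Return a (patch*patch, k) group built from candidates at every scale."""
    ref = img[top:top + patch, left:left + patch].ravel()
    cands = []
    for chi in scales:
        scaled = img if chi == 1.0 else downscale(img, chi)
        # The search window shrinks with the scale: chi*W x chi*W.
        cands += window_patches(scaled, int(chi * top), int(chi * left),
                                patch, max(1, int(chi * window)))
    cands = np.stack(cands)
    dist = np.sum((cands - ref) ** 2, axis=1)
    return cands[np.argsort(dist, kind="stable")[:k]].T

rng = np.random.default_rng(1)
toy = rng.random((40, 40))
G = multiscale_group(toy, 10, 10)
```

Pooling before ranking lets a coarse-scale patch displace a fine-scale one whenever it is the closer match.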

3. Algorithm for image restoration

This section presents a detailed analysis of our proposed MS-GSRC model. The model is solved using an alternating minimization method with self-adjusting parameters.

First, we divide Eq.(7) into three sub-problems:

3.1. Ai sub-problem

Given x and Di, we get a sub-problem of Ai:

where αi, βi, and pi stand for the vector representations of Ai, Bi, and Pi, respectively, and Di is a dictionary. A crucial step in solving the Ai problem is to design an efficient Di. The restored image is prone to visual artifacts (Lu et al., 2013) if an over-complete dictionary is learned. To mitigate this problem, we adopt principal component analysis (PCA) (Abdi and Williams, 2010) to learn the dictionary Di in this study because PCA is more robust and adjustable.
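A PCA dictionary for one group can be sketched as the eigenvectors of the group's sample covariance matrix. The centering step and the function name `pca_dictionary` are assumptions for this illustration; the authors' exact procedure may differ.

```python
import numpy as np

def pca_dictionary(Y):
    """Learn an orthogonal PCA dictionary from a group Y (columns are patches)."""
    centered = Y - Y.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / Y.shape[1]       # sample covariance of the patches
    eigvals, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]              # strongest component first
    return eigvecs[:, order]

rng = np.random.default_rng(0)
Y_i = rng.normal(size=(16, 40))                    # toy group: 40 patches of 4 x 4
D_i = pca_dictionary(Y_i)
A_i = D_i.T @ Y_i                                  # coefficients of the group in D_i
```

Because the dictionary is square and orthogonal, the coefficients follow from a single matrix product and the group is recovered exactly as DiAi.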

Equation (8) admits a closed-form solution:

where Soft(·) represents the soft-thresholding operator.
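The soft-thresholding operator and its use with a residual penalty can be sketched as follows. The sketch assumes an orthogonal dictionary, so that the sub-problem separates per coefficient into min 0.5‖γ − α‖² + τ‖α − β‖₁, where γ denotes the coefficients of the degraded group. The symbols γ and τ and the function names are assumptions introduced for the example, not the notation of Eq. (9).

```python
import numpy as np

def soft(v, tau):
    """Element-wise soft-thresholding: sign(v) * max(|v| - tau, 0)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def sparse_code_with_residual(gamma, beta, tau):
    """Minimizer of 0.5*||gamma - a||^2 + tau*||a - beta||_1 over a."""
    # Shrink the residual gamma - beta toward zero, then shift back by beta.
    return soft(gamma - beta, tau) + beta

gamma = np.array([3.0, 0.5, -2.0])
beta = np.array([1.0, 0.4, 0.0])
alpha = sparse_code_with_residual(gamma, beta, 1.0)   # [2.0, 0.4, -1.0]
```

Coefficients whose residual is smaller than τ collapse onto β, which is how a good estimate of β steers the solution.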

Since x is an unknown target image, it is impossible to gain the group sparse coefficients Bi directly. Consequently, we must utilize methods to gain an approximation value.

The introduction of low-rank constraints into the model is a practical approach. After applying LR constraints to the Yi group, we can obtain a matrix Si. The clean group sparsity coefficients Bi can be computed from Si. It is easy to derive the following equation:

So, we can obtain

where ‖Bi‖* = Σj δi,j and δi,j are the singular values of the matrix Bi. We can therefore obtain a closed-form solution for Bi:

where the decomposition is the SVD of Ai and Δi holds the diagonal elements of the singular value matrix.

3.2. x sub-problem

Given Ai and Di, the sub-problem of x in Eq.(7) becomes:

Clearly, Eq.(13) is a quadratic optimization problem. We adopt the alternating direction method of multipliers (ADMM) (Boyd et al., 2011) to simplify the optimization process.

First, we introduce an auxiliary variable s = xMS, and Eq.(13) can be converted into an equivalent problem:

By observing Eq.(14), it is plain that this equation has three unknown variables requiring solutions. Thus, we decompose Eq.(14) into three iterative processes. In the t-th iteration:

The parameter c indicates the Lagrangian multiplier. To make the derivation process look more concise, we omit t in the following formulation expression.

Update s : Given DiAi, x, and c, s can be represented as a closed-form solution by Eq.(16), namely:

Since I is an identity matrix and the other term is a diagonal matrix, their combination is positive, so the above formula is valid.

Update x : Given s and c, Eq.(15) provides a solution to the variable x:

Notably, since H is an unstructured random projection matrix in the CS problem, solving for x directly using Eq.(19) is too costly. Hence, after setting a step size γ and a gradient direction q, we employ the gradient descent method (Ruder, 2016) to rewrite Eq.(19) as:

In addition, it is recommended to compute HᵀH and Hᵀy in advance to further enhance the efficiency of the algorithm.
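A gradient-descent update with precomputed HᵀH and Hᵀy can be sketched as below. The quadratic objective 0.5‖y − Hx‖² + (μ/2)‖x − s − c‖², including the sign convention for the multiplier c, is an assumption made for the example, as is the function name `x_update_gd`; it is not a transcription of Eqs. (19)-(20).

```python
import numpy as np

def x_update_gd(x, HtH, Hty, s, c, mu, step, iters=1):
    """Gradient descent on 0.5*||y - Hx||^2 + (mu/2)*||x - s - c||^2."""
    for _ in range(iters):
        q = HtH @ x - Hty + mu * (x - s - c)   # gradient direction q
        x = x - step * q                       # step of size gamma = step
    return x

rng = np.random.default_rng(0)
H = rng.normal(size=(8, 16))                   # toy undersampled projection
y = rng.normal(size=8)
s = rng.normal(size=16)
c = rng.normal(size=16)
mu = 1.0

HtH, Hty = H.T @ H, H.T @ y                    # computed once, reused every iteration
step = 1.0 / np.linalg.eigvalsh(HtH + mu * np.eye(16)).max()
x_gd = x_update_gd(np.zeros(16), HtH, Hty, s, c, mu, step, iters=5000)

# Reference: direct solution of the same quadratic problem.
x_direct = np.linalg.solve(HtH + mu * np.eye(16), Hty + mu * (s + c))
```

Each iteration costs one matrix-vector product with the cached HᵀH, which avoids forming or inverting a large system when H is a dense random matrix.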

3.3. Parameter settings

In the model proposed above, four parameters (μ, λ, θ, ζ) need to be set. Here, we adopt a strategy in which the parameters are adjusted automatically in each iteration, which allows us to achieve more robust and accurate experimental results.

The noise standard deviation σn is automatically updated in each iteration (Osher et al., 2005):

where ω represents a scaling factor. As shown by Gu et al. (2014) and Chen et al. (2015), this approach to regularizing σe has been implemented in diverse models and has performed well.

After setting σe, the value of μ is tuned to change in proportion to (Zha et al., 2022):

where ρ denotes a constant.

Moreover, the regularization parameters λ and θ represent the constraint penalties on sparsity and LR, respectively. Inspired by Dong et al. (2012a), they are adjusted in each iteration as follows:

where mi is the estimated standard variance of Ri and ni stands for the estimated standard variance of Δi. The ε and ϵ are two small constants to avoid zero divisors. α and β are set to two constants. Finally, parameter ζ is also set to a fixed constant.
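The self-adjusting rules can be illustrated with a sketch based on forms that are common in the iterative-regularization literature. Both formulas below are assumptions and may differ from Eqs. (21)-(24): the noise estimate uses ω·sqrt(max(σn² − mean((y − x̂)²), 0)), and the weight is taken as a constant times σe² divided by the estimated spread plus a small constant.

```python
import numpy as np

def update_noise_level(y, x_hat, sigma_n, omega):
    """Assumed rule: sigma_e = omega * sqrt(max(sigma_n^2 - mean((y - x_hat)^2), 0))."""
    resid = np.mean((y - x_hat) ** 2)
    return omega * np.sqrt(max(sigma_n ** 2 - resid, 0.0))

def adaptive_weight(const, sigma_e, spread, eps=1e-8):
    """Assumed rule: weight = const * sigma_e^2 / (spread + eps).

    `spread` plays the role of the estimated deviation (m_i or n_i in the text),
    and eps avoids a zero divisor.
    """
    return const * sigma_e ** 2 / (spread + eps)

y = np.full(100, 3.0)                  # toy observation
x_hat = np.zeros(100)                  # toy current estimate
sigma_e = update_noise_level(y, x_hat, sigma_n=5.0, omega=0.5)
lam = adaptive_weight(0.03, sigma_e, spread=1.0)
```

Under these assumed rules, the penalty weakens for groups whose coefficients vary strongly and tightens for groups whose coefficients are nearly constant.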

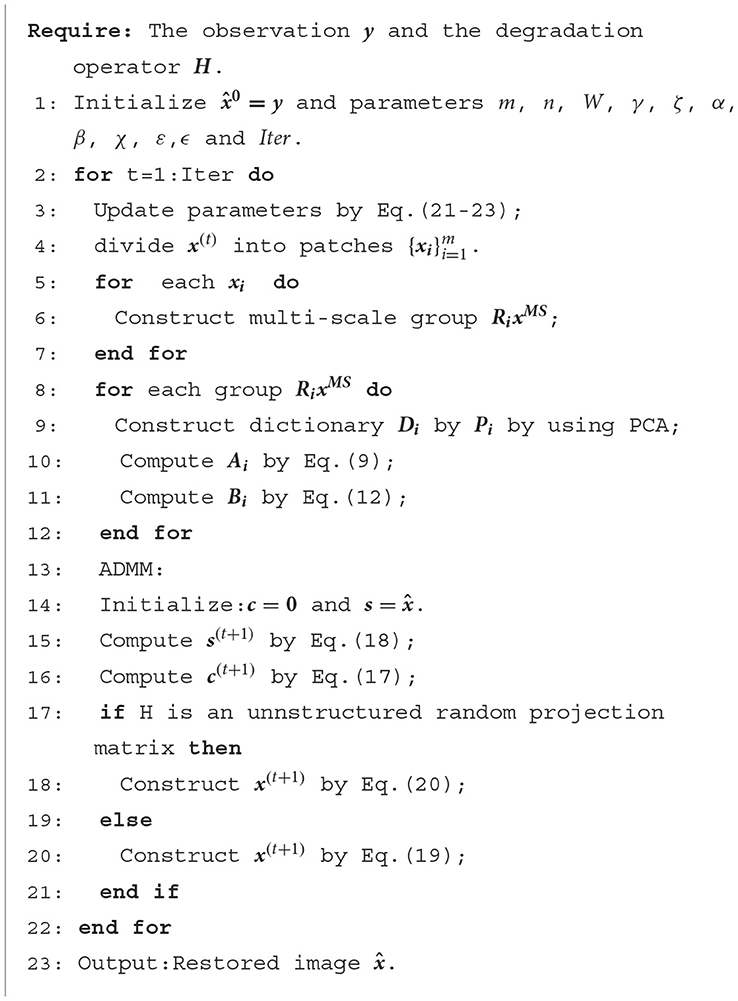

The detailed procedure of the MS-GSRC algorithm is presented in Algorithm 1.

4. Experiments

In this section, extensive experiments are conducted on image denoising, inpainting, and CS to verify that our proposed MS-GSRC model possesses better image restoration capabilities than some classical methods. To obtain intuitive comparison results, we rely on two metrics: peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) (Wang et al., 2004).

PSNR is commonly used to measure signal distortion. This metric is calculated from the gray-scale values of the image pixels. Although the value of PSNR is sometimes not consistent with human perception, it remains an important reference evaluation metric. SSIM is a metric intended for assessing the similarity between two images and agrees more closely with how humans judge image quality.
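PSNR follows directly from the mean squared error between the reference and the restored image. The sketch below assumes 8-bit gray-scale images with a peak value of 255; the function name `psnr` is chosen for this example.

```python
import numpy as np

def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    res = np.asarray(restored, dtype=np.float64)
    mse = np.mean((ref - res) ** 2)
    if mse == 0:
        return float("inf")            # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

clean = np.zeros((8, 8))
noisy = np.full((8, 8), 25.5)          # constant error of 10% of the peak
value = psnr(clean, noisy)             # 20 dB
```

A uniform error of one tenth of the peak gives 20 dB, and every further tenfold reduction of the error adds another 20 dB.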

If the degraded image is in color, we mainly recover the luminance channel due to the fact that variations in the luminance of color images are more easily perceived by the human eye.

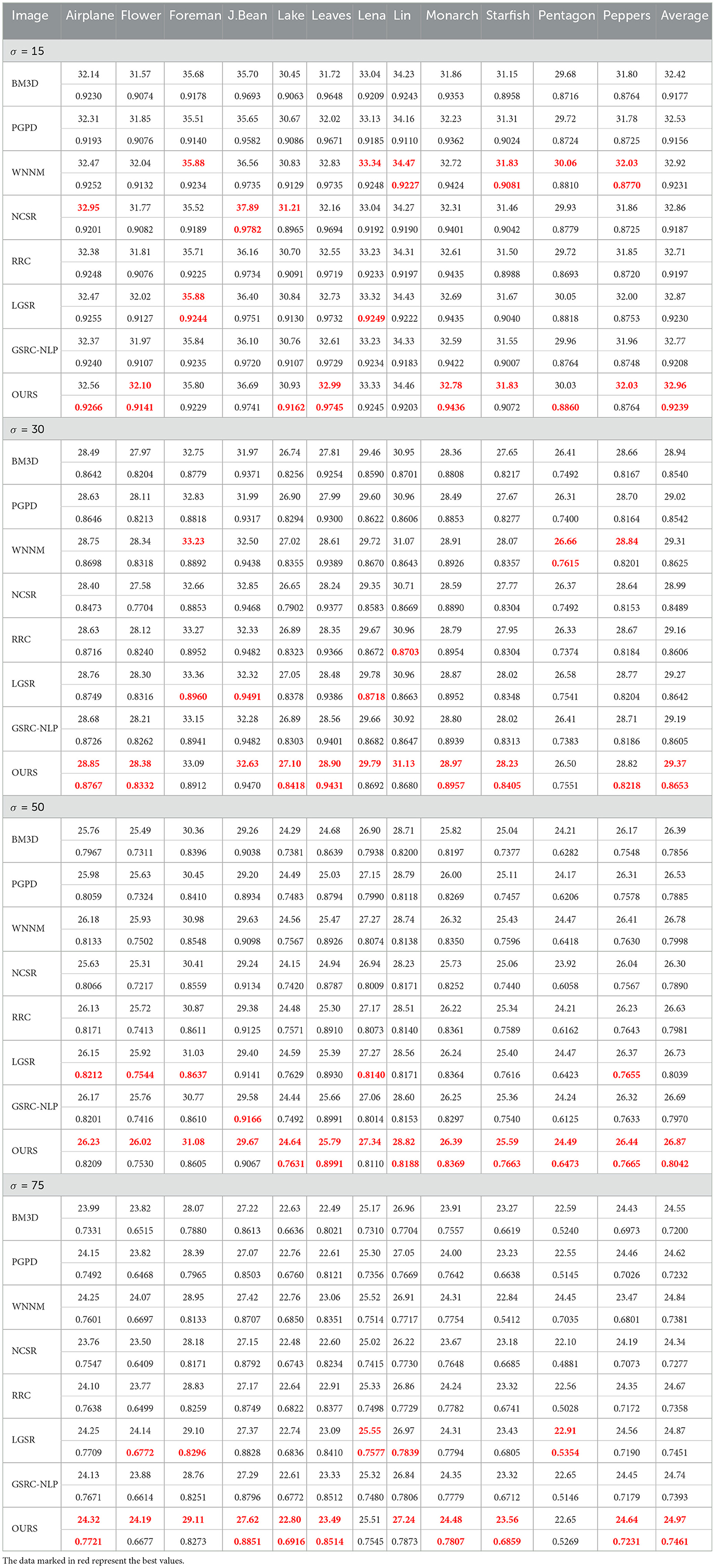

The codes for all comparison algorithms used in this study are obtained from the original authors' homepages and are run with the given default parameters. For reasons of limited space, only a few images frequently used for testing are shown in Figure 2. In all tables, the data marked in red represent the best values.

4.1. Image denoising

First, we verify the performance of our MS-GSRC model on the image denoising task. The corresponding parameters are set as follows. We set the search window W × W to 30 × 30, the patch size to 6 × 6, 7 × 7, 9 × 9 for σ ≤ 15, 15 < σ ≤ 30, and 30 < σ ≤ 75, with the number of neighbor patches k to 70, 110, 120 for σ ≤ 30, 30 < σ ≤ 50, 50 < σ ≤ 75, respectively. The parameters (α, β, ω, ζ) are set to (0.03, 1.75, 0.81, 0.085), (0.015, 1.8, 0.86, 0.07), (0.05, 2.2, 0.81, 0.12), (0.006, 2, 0.86, 0.05) for σ ≤ 15, 15 < σ ≤ 30, 30 < σ ≤ 50, 50 < σ ≤ 75. In addition, we set the multi-scale to [1,0.8], [1,0.85], and [1,0.9] for σ ≤ 15, 15 < σ ≤ 50, and 50 < σ ≤ 75, separately.

Our MS-GSRC method is compared with several recently proposed popular denoising methods and classical traditional denoising methods, including BM3D (Dabov et al., 2007), PGPD (Xu et al., 2015), WNNM (Gu et al., 2014), NCSR (Dong et al., 2012b), RRC (Zha et al., 2019), LGSR (Zha et al., 2022), and GSRC-NLP (Zha et al., 2020a). Of all the comparison methods, BM3D is a frequently adopted benchmark method; NCSR, PGPD, and GSRC-NLP all use GSR as a prior; WNNM and RRC exploit low-rankness; and LGSR combines GSR and LR. Besides, both GSRC-NLP and our proposed model use the GSRC framework. Taking 12 frequently used images as an example, Table 1 lists the PSNR and SSIM results for various denoising methods at different noise levels. It is observed that our proposed MS-GSRC method produces superior performance. Specifically, the average PSNR and SSIM we achieve are improved by (0.47 dB, 0.0149) compared to BM3D, (0.38 dB, 0.0107) compared to PGPD, (0.07 dB, 0.0032) compared to WNNM, (0.42 dB, 0.0149) compared to NCSR, (0.25 dB, 0.0066) compared to RRC, (0.1 dB, 0.0005) compared to LGSR, and (0.19 dB, 0.0054) compared to GSRC-NLP.

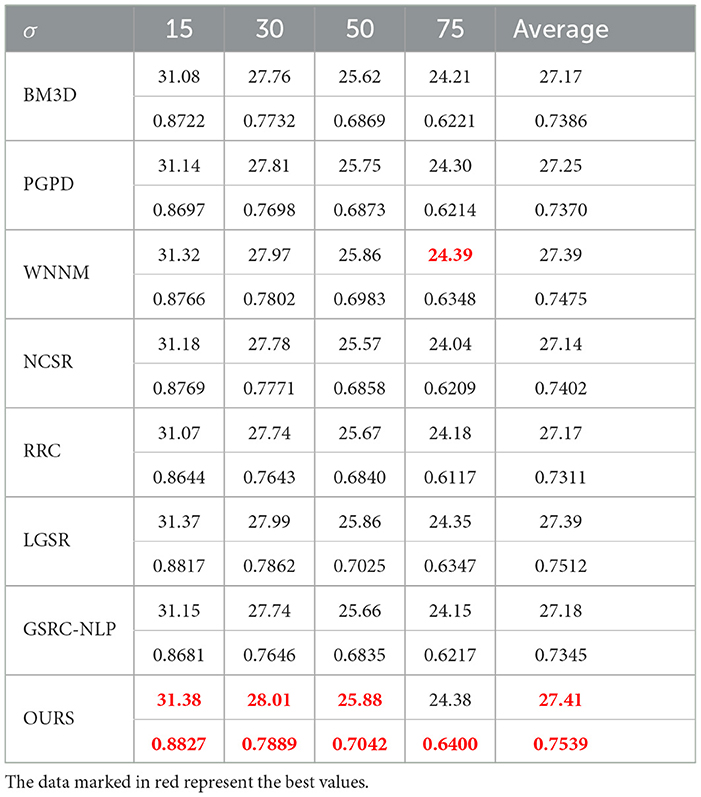

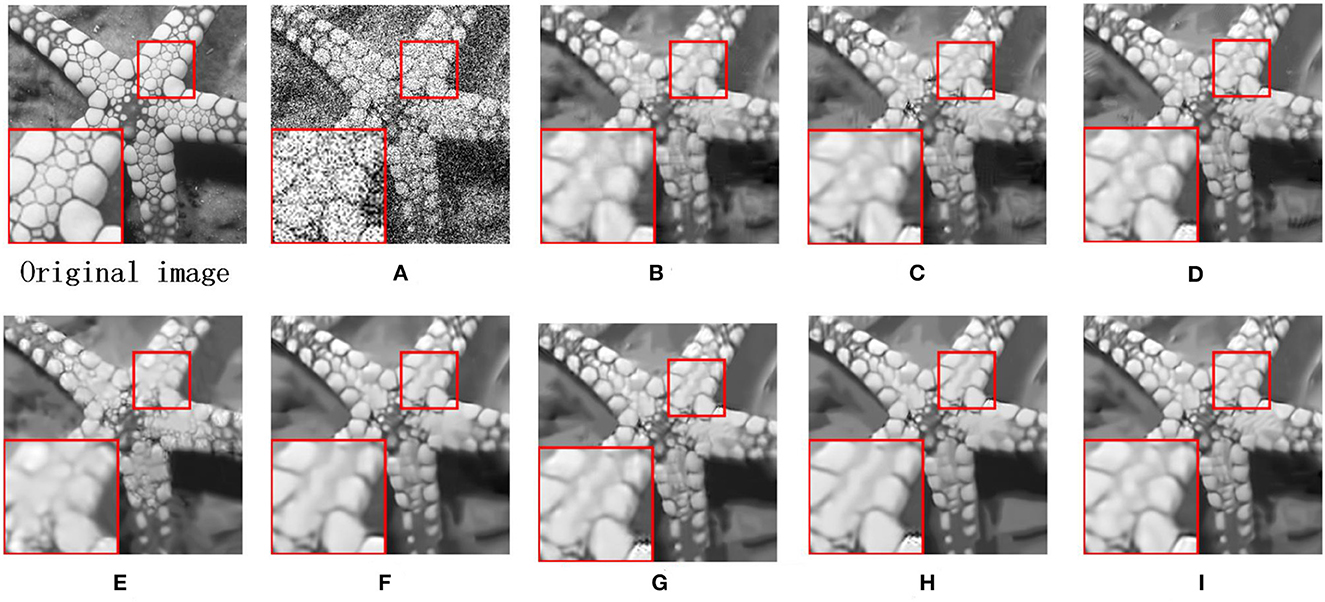

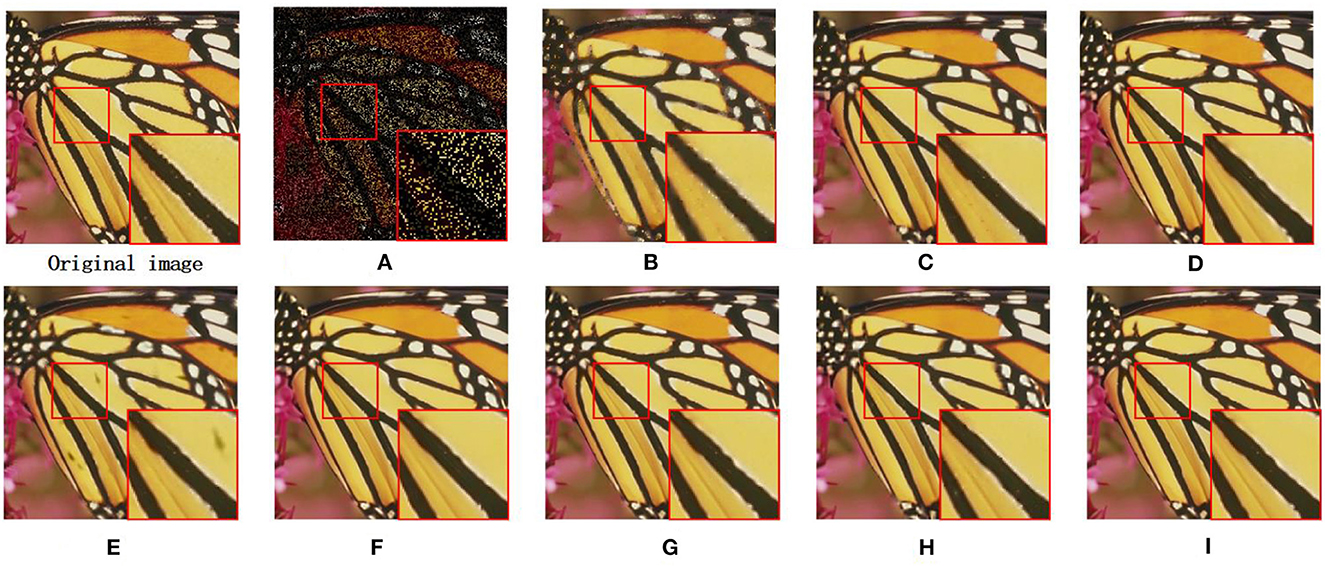

We also use the BSD68 dataset (Wang et al., 2004) to assess the denoising ability of all compared approaches. Table 2 shows that the average PSNR gains of our proposed MS-GSRC method over the BM3D, PGPD, WNNM, NCSR, RRC, GSRC-NLP, and LGSR methods are 0.24 dB, 0.16 dB, 0.02 dB, 0.27 dB, 0.24 dB, 0.23 dB, and 0.03 dB, respectively. Meanwhile, on average, the proposed MS-GSRC achieves an SSIM improvement of 0.0153 over BM3D, 0.0169 over PGPD, 0.0064 over WNNM, 0.0137 over NCSR, 0.0228 over RRC, 0.0027 over LGSR, and 0.0194 over GSRC-NLP. Evidently, our proposed MS-GSRC method yields better PSNR and SSIM in almost all noise cases. At σ = 75, our method is only 0.01 dB lower than WNNM in PSNR but 0.0052 higher in SSIM.

Beyond objective metrics, subjective human visual perception is also a crucial criterion for assessing image quality. Consequently, we present visual comparisons of the images starfish and 223061 restored by different methods in Figures 3 and 4, respectively. Figure 3 indicates that BM3D, PGPD, WNNM, and RRC are likely to over-smooth the restored image, whereas NCSR, GSRC-NLP, and LGSR can lead to some undesired visual artifacts. As can be seen in Figure 4, although the image restored by WNNM has a higher PSNR, the image restored by our MS-GSRC method has a higher SSIM value and presents a better visual effect. PGPD, NCSR, RRC, and GSRC-NLP are susceptible to loss of detail in the restored images, while BM3D, WNNM, and LGSR may result in undesirable artifacts.

Figure 3. Denoising results on image starfish (σ = 75). (A) Noisy image. (B) BM3D (PSNR = 23.27 dB and SSIM = 0.6619). (C) PGPD (PSNR = 23.23 dB and SSIM = 0.6638). (D) WNNM (PSNR = 22.84 dB and SSIM = 0.5412). (E) NCSR (PSNR = 23.18 dB and SSIM = 0.6685). (F) RRC (PSNR = 23.32 dB and SSIM = 0.6741). (G) LGSR (PSNR = 23.43 dB and SSIM = 0.6805). (H) GSRC-NLP (PSNR = 23.32 dB and SSIM = 0.6712). (I) OURS (PSNR = 23.56 dB and SSIM = 0.6859).

Figure 4. Denoising results on image 223061 (σ = 75). (A) Noisy image. (B) BM3D (PSNR = 22.27 dB and SSIM = 0.5470). (C) PGPD (PSNR = 22.30 dB and SSIM = 0.5420). (D) WNNM (PSNR = 22.51 dB and SSIM = 0.5690). (E) NCSR (PSNR = 22.15 dB and SSIM = 0.5383). (F) RRC (PSNR = 22.22 dB and SSIM = 0.5351). (G) LGSR (PSNR = 22.32 dB and SSIM = 0.5545). (H) GSRC-NLP (PSNR = 22.13 dB and SSIM = 0.5313). (I) OURS (PSNR = 22.42 dB and SSIM = 0.5761).

4.2. Image inpainting

Next, we verify the superiority of the MS-GSRC model on inpainting. We likewise compare the proposed MS-GSRC method with many classical or recently popular methods, such as SAIST (Afonso et al., 2010), TSLRA (Guo et al., 2017), GSR (Zhang et al., 2014b), JSM (Zhang et al., 2014c), JPG-SR (Zha et al., 2018b), LGSR (Zha et al., 2022), and IDBP (Tirer and Giryes, 2018). Among these, SAIST is one of the earliest proposed methods for image restoration; GSR, JPG-SR, LGSR, TSLRA, and JSM use the NSS prior; and IDBP is a deep learning-based method. In the simulation experiments, we test images with randomly generated masks in which 80%, 70%, 60%, and 50% of the pixels are missing. The parameters of the MS-GSRC model are set as follows. We set the patch size to 7 × 7, the search window size to 25, and the number of non-local similar patches to 60. In addition, for all cases, we set the multi-scales to [1,0.85]. Moreover, we set the parameters (ω, ζ, α, β) to (0.0002, 0.0001, 1.5, 15) when 80% of the pixels are missing and to (0.0001, 0.0001, 1.5, 15) in the other cases.

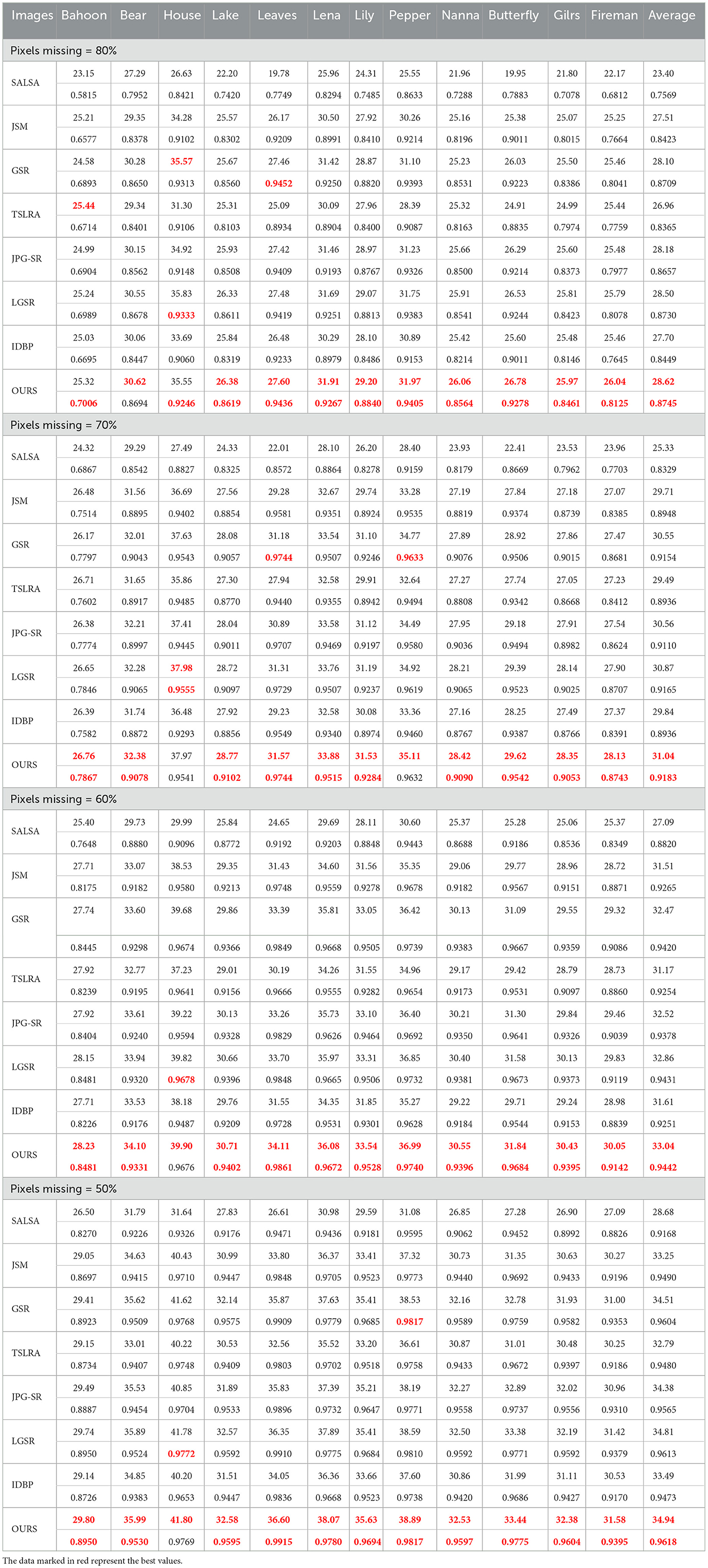

Table 3 lists the PSNR and SSIM results for each method on the 12 frequently used test images. As observed in Table 3, our proposed method outperforms the compared algorithms in almost all cases of image inpainting. The proposed MS-GSRC outperforms the SAIST, JSM, GSR, TSLRA, JPG-SR, LGSR, and IDBP approaches in average PSNR, with gains of 5.8 dB, 1.43 dB, 0.51 dB, 1.82 dB, 0.51 dB, 0.13 dB, and 1.26 dB, respectively. Additionally, in average SSIM, the proposed MS-GSRC surpasses SAIST by 0.0776, JSM by 0.0216, GSR by 0.0025, TSLRA by 0.0238, JPG-SR by 0.007, LGSR by 0.0012, and IDBP by 0.022.

Table 3. PSNR (dB) and SSIM comparison of different methods SAIST, TSLRA, GSR, JSM, JPG-SR, LGSR, IDBP, and OURS for image inpainting.

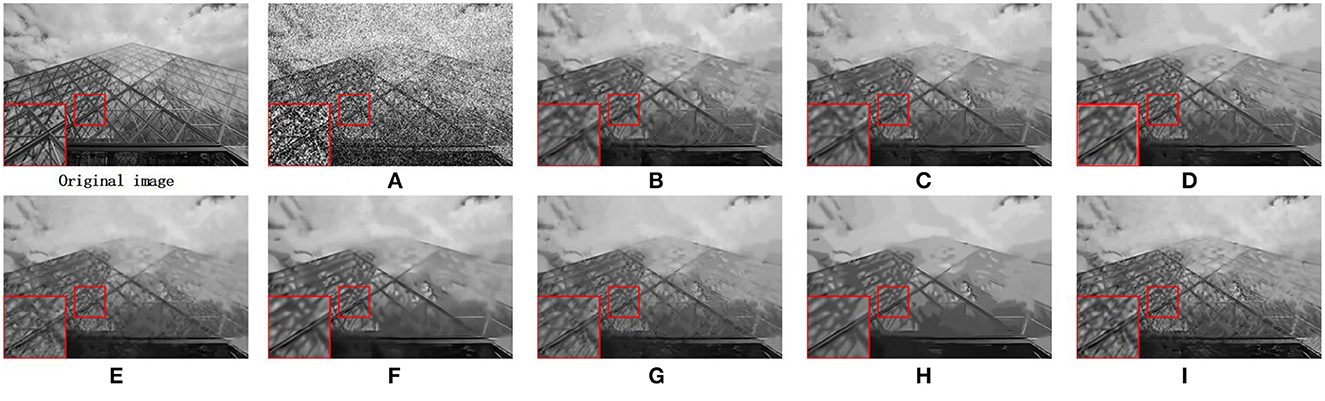

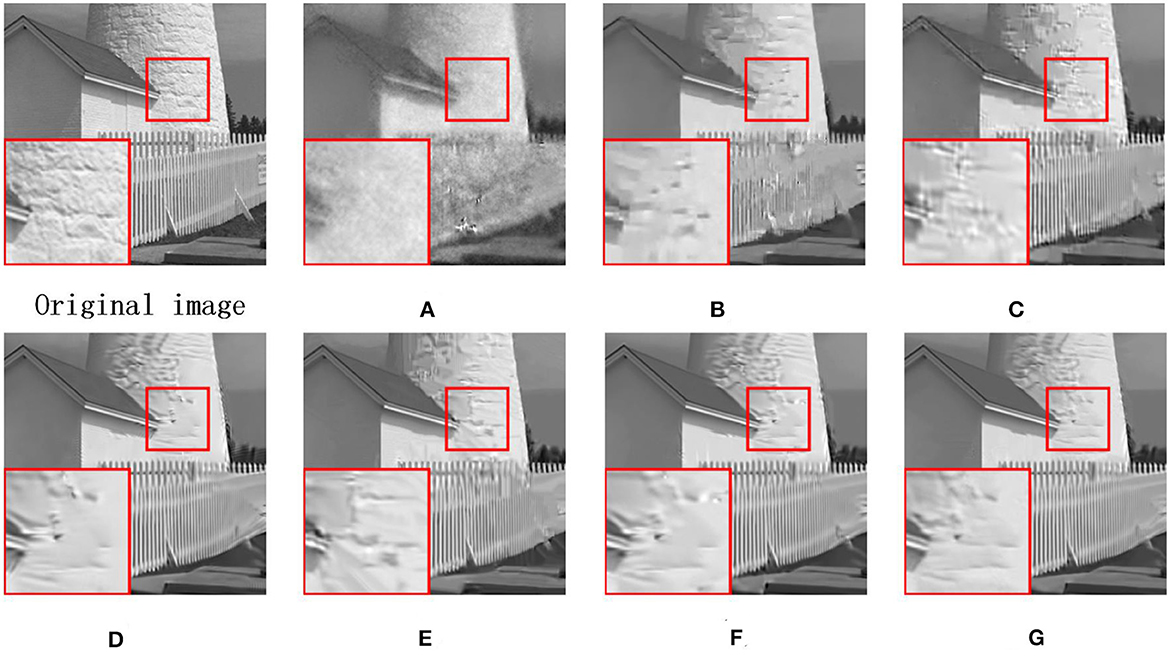

Similarly, two images are selected for detailed visual analysis. The image butterfly with an 80% loss of pixels, restored by different methods, is presented in Figure 5. Moreover, Figure 6 displays a visual comparison of the image flowers with a 70% loss of pixels restored by different algorithms. The visual comparisons show that images restored using SAIST, JSM, TSLRA, IDBP, GSR, and JPG-SR are susceptible to excessive smoothing, and images restored using LGSR tend to show excessive visual artifacts. The images restored using our proposed MS-GSRC model preserve image details and edges significantly better.

Figure 5. Inpainting results on image butterfly (missing ratio = 80%). (A) Missing pixels image. (B) SAIST (PSNR = 19.95 dB and SSIM = 0.7883). (C) JSM (PSNR = 25.38 dB and SSIM = 0.9011). (D) GSR (PSNR = 26.03 dB and SSIM = 0.9223). (E) TSLRA (PSNR = 24.91 dB and SSIM = 0.8835). (F) JPG-SR (PSNR = 26.29 dB and SSIM = 0.9214). (G) LGSR (PSNR = 26.53 dB and SSIM = 0.9244). (H) IDBP (PSNR = 25.60 dB and SSIM = 0.9011). (I) OURS (PSNR = 26.78 dB and SSIM = 0.9278).

Figure 6. Inpainting results on image flowers (missing ratio = 70%). (A) Missing pixels image. (B) SAIST (PSNR = 27.69 dB and SSIM = 0.8422). (C) JSM (PSNR = 29.74 dB and SSIM = 0.8924). (D) GSR (PSNR = 31.10 dB and SSIM = 0.9246). (E) TSLRA (PSNR = 29.91 dB and SSIM = 0.8942). (F) JPG-SR (PSNR = 31.12 dB and SSIM = 0.9197). (G) LGSR (PSNR = 31.19 dB and SSIM = 0.9237). (H) IDBP (PSNR = 30.08 dB and SSIM = 0.8974). (I) OURS (PSNR = 31.53 dB and SSIM = 0.9284).

4.3. Image compressed sensing

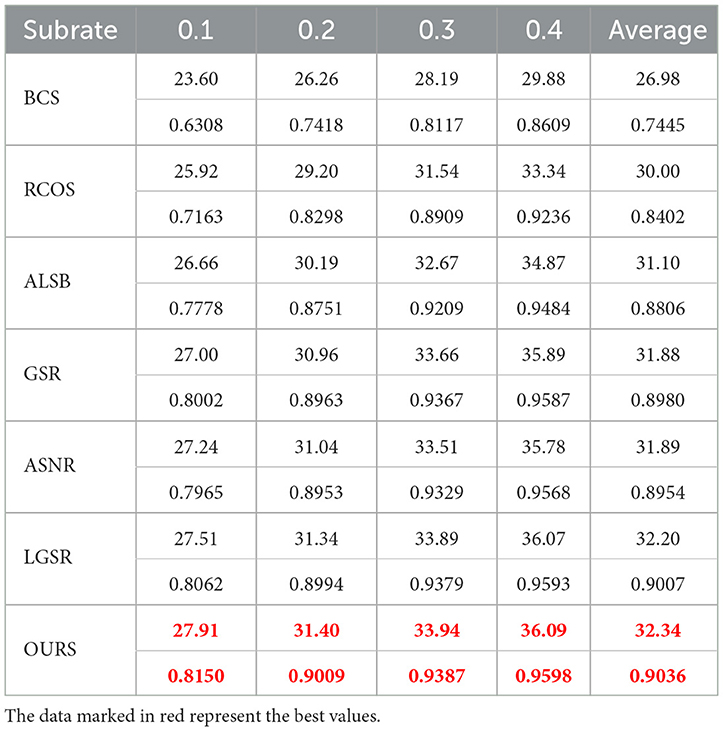

Finally, we validate the restoration capability of our proposed MS-GSRC model on the image compressed sensing problem. In this part of the experiments, we use a Gaussian random projection matrix (Zhang et al., 2014b) applied to blocks of size 32 × 32 to test the CS restoration performance. The parameters of the MS-GSRC model are set as follows. For all cases, the patch size is set to 8 × 8, the number of patches to 80, the search window size to 25, and the multi-scales to [1,0.75]. In addition, (ζ, ω, α, β) are set to (0.004, 0.00002, 0.6, 25), (0.0014, 0.00005, 0.9, 15), (0.0015, 0.00001, 0.5, 10), and (0.0015, 0.00001, 1.4, 6) when the subrate is 0.1N, 0.2N, 0.3N, and 0.4N, respectively.
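Block-based CS measurement with a Gaussian random projection can be sketched as follows. The normalization of the matrix, the use of one shared matrix for all blocks, and the function name `block_cs_measure` are assumptions for this illustration, and the image sides must be multiples of the block size.

```python
import numpy as np

def block_cs_measure(img, subrate, block=32, seed=0):
    """Measure each block x block tile with one Gaussian random matrix.

    Returns (Phi, measurements): Phi has shape (m, block*block) with
    m = round(subrate * block*block), and measurements has one row per tile.
    """
    rng = np.random.default_rng(seed)
    n = block * block
    m = int(round(subrate * n))
    Phi = rng.normal(size=(m, n)) / np.sqrt(m)
    h, w = img.shape
    # Split the image into non-overlapping tiles and vectorize each tile.
    tiles = (img.reshape(h // block, block, w // block, block)
                .swapaxes(1, 2)
                .reshape(-1, n))
    return Phi, tiles @ Phi.T

rng = np.random.default_rng(1)
toy = rng.random((64, 64))
Phi, meas = block_cs_measure(toy, subrate=0.1)   # 4 tiles, 102 measurements each
```

At a subrate of 0.1, each 1024-pixel block is represented by 102 measurements, which illustrates how underdetermined the recovery problem is.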

BCS (Mun and Fowler, 2009), RCOS (Zhang et al., 2012), ALSB (Zhang et al., 2014a), GSR (Zhang et al., 2014b), ASNR (Zha et al., 2018a), and LGSR (Zha et al., 2022) are chosen as competing methods. Among them, GSR performs a sparse representation on similar groups of image patches, ASNR is an image CS method that extends NCSR, and LGSR combines sparsity and LR. Similarly, we selected 12 images frequently used in image restoration experiments as test images. Table 4 presents the average PSNR and SSIM of the images restored by the different methods. Specifically, the average gains of the proposed MS-GSRC model over the BCS, RCOS, ALSB, GSR, ASNR, and LGSR methods are 5.36 dB, 2.34 dB, 1.24 dB, 0.46 dB, 0.45 dB, and 0.14 dB in PSNR and 0.1591, 0.0634, 0.0023, 0.0056, 0.0082, and 0.0029 in SSIM, respectively.

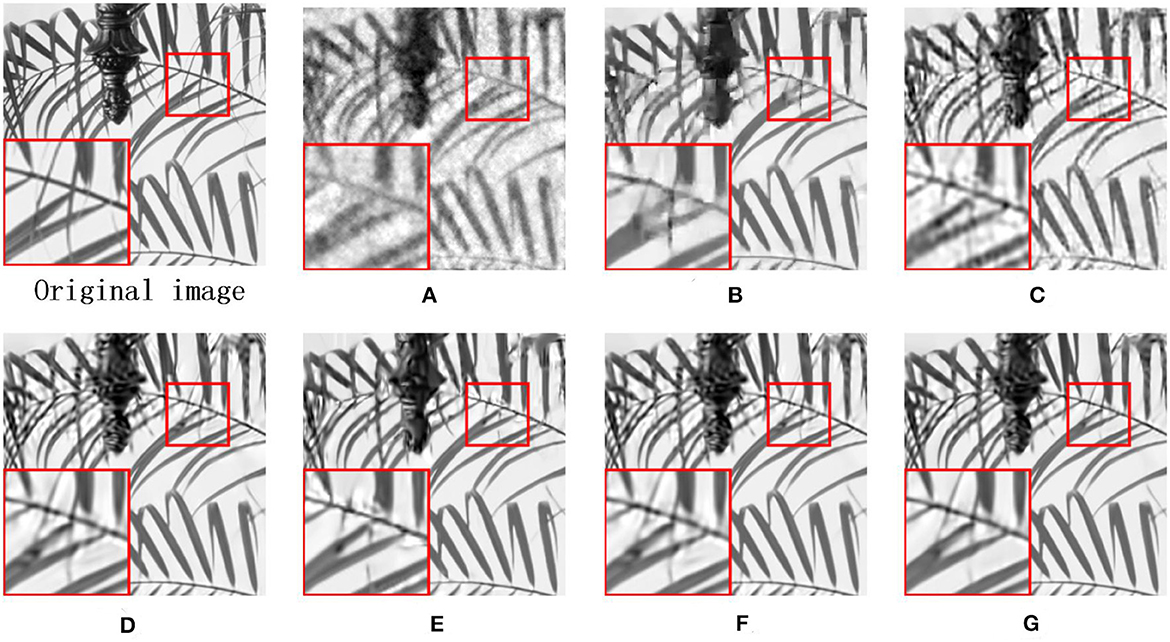

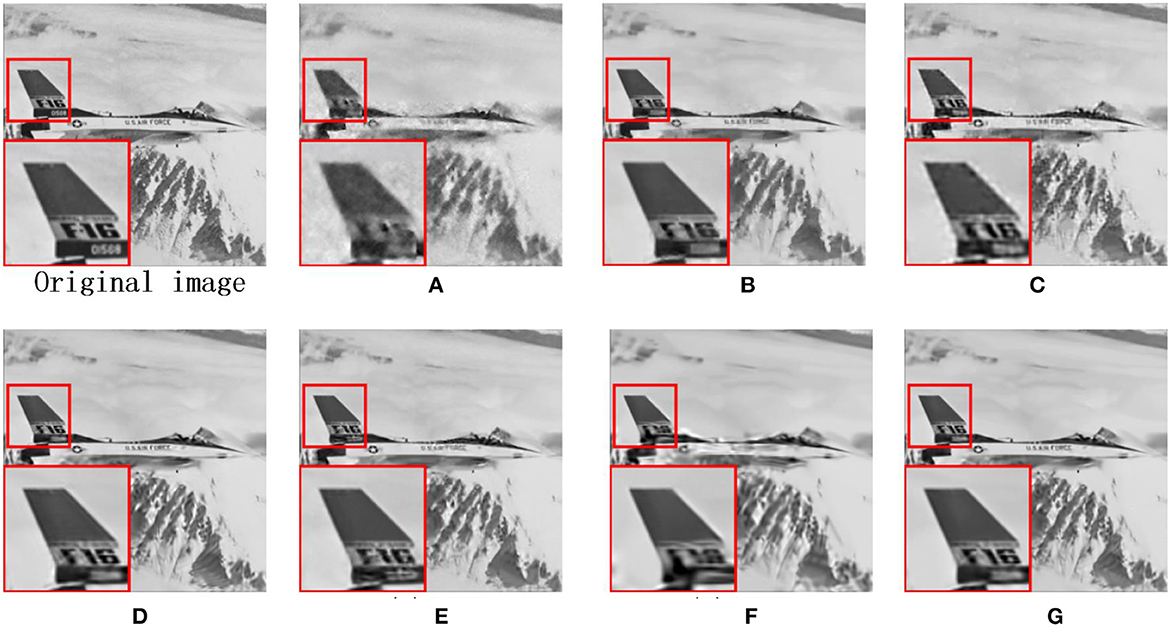

Because all the other competing algorithms used in this study use BCS to pre-process CS images, we also use the BCS-processed images as corrupted images. Figure 7 shows the visual comparison for the image fence with 0.1N CS measurements: RCOS and ALSB are less capable of restoring details, GSR and LGSR lead to over-smoothing, and ASNR generates some redundant artifacts. Figure 8 illustrates the visual comparison for the image leaves with 0.1N CS measurements. All images restored by the compared methods show strong ringing and severe artifacts. In Figure 9, we have selected the image airplane with 0.2N CS measurements for detailed analysis. The images restored by ALSB and LGSR lose a considerable amount of detail, while the images restored by RCOS, GSR, and ASNR contain more artifacts. In the above three cases, our proposed MS-GSRC algorithm significantly outperforms the other competing algorithms in recovering both the overall image and texture details.

Figure 7. CS results on image fence (subrate = 0.1 N). (A) BCS (PSNR = 19.54 dB, SSIM = 0.5034). (B) RCOS (PSNR = 23.29 dB, SSIM = 0.6932). (C) ALSB (PSNR = 25.05 dB and SSIM = 0.7736). (D) GSR (PSNR = 26.06 dB and SSIM = 0.8047). (E) ASNR (PSNR = 26.01 dB and SSIM = 0.8006). (F) LGSR (PSNR = 26.58 dB and SSIM = 0.8107). (G) OURS (PSNR = 27.26 dB and SSIM = 0.8216).

Figure 8. CS results on image leaves (subrate = 0.1 N). (A) BCS (PSNR = 18.37 dB, SSIM = 0.5767). (B) RCOS (PSNR = 22.17 dB, SSIM = 0.8323). (C) ALSB (PSNR = 21.52 dB and SSIM = 0.7939). (D) GSR (PSNR = 23.22 dB and SSIM = 0.8731). (E) ASNR (PSNR = 23.48 dB and SSIM = 0.8805). (F) LGSR (PSNR = 23.75 dB and SSIM = 0.8824). (G) OURS (PSNR = 24.57 dB and SSIM = 0.8992).

Figure 9. CS results on image airplane (subrate = 0.2 N). (A) BCS (PSNR = 25.87 dB, SSIM = 0.8111). (B) RCOS (PSNR = 28.22 dB, SSIM = 0.8854). (C) ALSB (PSNR = 28.39 dB and SSIM = 0.8942). (D) GSR (PSNR = 28.87 dB and SSIM = 0.9082). (E) ASNR (PSNR = 29.17 dB and SSIM = 0.9075). (F) LGSR (PSNR = 29.43 dB and SSIM = 0.9110). (G) OURS (PSNR = 29.59 dB and SSIM = 0.9120).

5. Conclusion

In this study, we propose a novel Multi-Scale Group Sparse Residual Constraint Model (MS-GSRC) for image restoration. This model introduces the low-rank property into the group sparse residual framework and finds similar patches for overlapping patches of the input image using a multi-scale strategy. Furthermore, under the MAP restoration framework, an alternating minimization method with adaptively tuned parameters is used to deliver a robust optimization solution for our MS-GSRC method. We apply the MS-GSRC model to three image restoration problems, namely, denoising, inpainting, and compressed sensing. Extensive simulation experiments show that our model outperforms many classical methods in terms of both objective image quality and subjective visual quality.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

WN: Writing — original draft. DS: Writing — review & editing. QG: Writing — review & editing. YL: Writing — review & editing. DZ: Writing — review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the National Natural Science Foundation of China (NSFC) (62071001), the Nature Science Foundation of Anhui (2008085MF192, 2008085MF183, 2208085QF206, and 2308085QF224), the Key Science Program of Anhui Education Department (KJ2021A0013), and was also supported by the China Postdoctoral Science Foundation (2023M730009).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdi, H., and Williams, L. J. (2010). Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2, 433–459. doi: 10.1002/wics.101

Afonso, M. V., Bioucas-Dias, J. M., and Figueiredo, M. A. (2010). An augmented lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Proc. 20, 681–695. doi: 10.1109/TIP.2010.2076294

Boyd, S., Parikh, N., Chu, E., Peleato, B., and Eckstein, J. (2011). Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3, 1–122. doi: 10.1561/2200000016

Buades, A., Coll, B., and Morel, J.-M. (2005). “A non-local algorithm for image denoising,” in 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05). San Diego, CA: IEEE, 60–65.

Cai, J.-F., Candès, E. J., and Shen, Z. (2010). A singular value thresholding algorithm for matrix completion. SIAM J. Optimizat. 20, 1956–1982. doi: 10.1137/080738970

Chen, F., Zhang, L., and Yu, H. (2015). “External patch prior guided internal clustering for image denoising,” in Proceedings of the IEEE International Conference on Computer Vision (Santiago: IEEE), 603–611.

Dabov, K., Foi, A., Katkovnik, V., and Egiazarian, K. (2007). Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Trans. Image Process. 16, 2080–2095. doi: 10.1109/TIP.2007.901238

Dey, N., Blanc-Feraud, L., Zimmer, C., Roux, P., Kam, Z., Olivo-Marin, J.-C., et al. (2006). Richardson-lucy algorithm with total variation regularization for 3d confocal microscope deconvolution. Microsc. Res. Tech. 69, 260–266. doi: 10.1002/jemt.20294

Dong, W., Shi, G., and Li, X. (2012a). Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans. Image Process. 22, 700–711. doi: 10.1109/TIP.2012.2221729

Dong, W., Zhang, L., Shi, G., and Li, X. (2012b). Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 22, 1620–1630. doi: 10.1109/TIP.2012.2235847

Elad, M., and Aharon, M. (2006). Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 15, 3736–3745. doi: 10.1109/TIP.2006.881969

Fazel, M., Hindi, H., and Boyd, S. P. (2001). “A rank minimization heuristic with application to minimum order system approximation,” in Proceedings of the 2001 American Control Conference (Cat. No. 01CH37148). Arlington, VA: IEEE, 4734–4739.

Gu, S., Zhang, L., Zuo, W., and Feng, X. (2014). “Weighted nuclear norm minimization with application to image denoising,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2862–2869.

Guo, Q., Gao, S., Zhang, X., Yin, Y., and Zhang, C. (2017). Patch-based image inpainting via two-stage low rank approximation. IEEE Trans. Vis. Comput. Graph. 24, 2023–2036. doi: 10.1109/TVCG.2017.2702738

Hu, Y., Zhang, D., Ye, J., Li, X., and He, X. (2012). Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans. Pattern Anal. Mach. Intell. 35, 2117–2130. doi: 10.1109/TPAMI.2012.271

Jon, K., Sun, Y., Li, Q., Liu, J., Wang, X., and Zhu, W. (2021). Image restoration using overlapping group sparsity on hyper-laplacian prior of image gradient. Neurocomputing 420, 57–69. doi: 10.1016/j.neucom.2020.08.053

Keller, J. M., Gray, M. R., and Givens, J. A. (1985). A fuzzy k-nearest neighbor algorithm. IEEE Trans. Syst. Man. Cybern. 4, 580–585. doi: 10.1109/TSMC.1985.6313426

Keshavarzian, R., Aghagolzadeh, A., and Rezaii, T. Y. (2019). Llp norm regularization based group sparse representation for image compressed sensing recovery. Signal Proc.: Image Commun. 78, 477–493. doi: 10.1016/j.image.2019.07.021

Liu, S., Wu, G., Liu, H., and Zhang, X. (2017). Image restoration approach using a joint sparse representation in 3d-transform domain. Digital Signal Proc. 60, 307–323. doi: 10.1016/j.dsp.2016.10.008

Lu, C., Shi, J., and Jia, J. (2013). “Online robust dictionary learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 415–422.

Mairal, J., Bach, F., Ponce, J., Sapiro, G., and Zisserman, A. (2009). “Non-local sparse models for image restoration,” in 2009 IEEE 12th International Conference on Computer Vision. Kyoto: IEEE, 2272–2279.

Mun, S., and Fowler, J. E. (2009). “Block compressed sensing of images using directional transforms,” in 2009 16th IEEE International Conference on Image Processing (ICIP). Cairo: IEEE, 3021–3024.

Osher, S., Burger, M., Goldfarb, D., Xu, J., and Yin, W. (2005). An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 4, 460–489. doi: 10.1137/040605412

Ou, Y., Luo, J., Li, B., and Swamy, M. S. (2020). Gray-level image denoising with an improved weighted sparse coding. J. Vis. Commun. Image Represent. 72, 102895. doi: 10.1016/j.jvcir.2020.102895

Ou, Y., Swamy, M., Luo, J., and Li, B. (2022a). Single image denoising via multi-scale weighted group sparse coding. Signal Proc. 200, 108650. doi: 10.1016/j.sigpro.2022.108650

Ou, Y., Zhang, B., and Li, B. (2022b). Multi-scale low-rank approximation method for image denoising. Multimedia Tools Applicat. 81, 20357–20371. doi: 10.1007/s11042-022-12083-z

Papyan, V., and Elad, M. (2015). Multi-scale patch-based image restoration. IEEE Trans. Image Process. 25, 249–261. doi: 10.1109/TIP.2015.2499698

Ruder, S. (2016). An overview of gradient descent optimization algorithms. arXiv. abs/1609.04747. doi: 10.48550/arXiv.1609.04747

Rudin, L. I., Osher, S., and Fatemi, E. (1992). Nonlinear total variation based noise removal algorithms. Physica D: Nonlinear Phenom. 60, 259–268. doi: 10.1016/0167-2789(92)90242-F

Srebro, N., and Jaakkola, T. (2003). “Weighted low-rank approximations,” in Proceedings of the 20th International Conference on Machine Learning (ICML-03) (Washington, DC: AAAI Press), 720–727.

Tirer, T., and Giryes, R. (2018). Image restoration by iterative denoising and backward projections. IEEE Trans. Image Process. 28, 1220–1234. doi: 10.1109/TIP.2018.2875569

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. doi: 10.1109/TIP.2003.819861

Xie, Y., Gu, S., Liu, Y., Zuo, W., Zhang, W., and Zhang, L. (2016). Weighted schatten p-norm minimization for image denoising and background subtraction. IEEE Trans. Image Process. 25, 4842–4857. doi: 10.1109/TIP.2016.2599290

Xu, J., Zhang, L., Zuo, W., Zhang, D., and Feng, X. (2015). “Patch group based nonlocal self-similarity prior learning for image denoising,” in Proceedings of the IEEE International Conference on Computer Vision (Santiago: IEEE), 244–252.

Yair, N., and Michaeli, T. (2018). “Multi-scale weighted nuclear norm image restoration,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3165–3174.

Zha, Z., Liu, X., Zhang, X., Chen, Y., Tang, L., Bai, Y., et al. (2018a). Compressed sensing image reconstruction via adaptive sparse nonlocal regularization. Visual Comp. 34, 117–137. doi: 10.1007/s00371-016-1318-9

Zha, Z., Liu, X., Zhou, Z., Huang, X., Shi, J., Shang, Z., et al. (2017). “Image denoising via group sparsity residual constraint,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). New Orleans, LA: IEEE, 1787–1791.

Zha, Z., Wen, B., Yuan, X., Zhou, J., Zhu, C., and Kot, A. C. (2022). Low-rankness guided group sparse representation for image restoration. IEEE Trans. Neural. Netw. Learn Syst. 34, 7593–7607. doi: 10.1109/TNNLS.2022.3144630

Zha, Z., Yuan, X., Wen, B., Zhou, J., Zhang, J., and Zhu, C. (2019). From rank estimation to rank approximation: Rank residual constraint for image restoration. IEEE Trans. Image Process. 29, 3254–3269. doi: 10.1109/TIP.2019.2958309

Zha, Z., Yuan, X., Wen, B., Zhou, J., and Zhu, C. (2018b). “Joint patch-group based sparse representation for image inpainting,” in Asian Conference on Machine Learning, 145–160.

Zha, Z., Yuan, X., Wen, B., Zhou, J., and Zhu, C. (2020a). Group sparsity residual constraint with non-local priors for image restoration. IEEE Trans. Image Process. 29, 8960–8975. doi: 10.1109/TIP.2020.3021291

Zha, Z., Yuan, X., Zhou, J., Zhu, C., and Wen, B. (2020b). Image restoration via simultaneous nonlocal self-similarity priors. IEEE Trans. Image Process. 29, 8561–8576. doi: 10.1109/TIP.2020.3015545

Zhang, J., and Ghanem, B. (2018). “Ista-net: Interpretable optimization-inspired deep network for image compressive sensing,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Salt Lake City, UT: IEEE), 1828–1837.

Zhang, J., Zhao, C., Zhao, D., and Gao, W. (2014a). Image compressive sensing recovery using adaptively learned sparsifying basis via l0 minimization. Signal Proc. 103, 114–126. doi: 10.1016/j.sigpro.2013.09.025

Zhang, J., Zhao, D., and Gao, W. (2014b). Group-based sparse representation for image restoration. IEEE Trans. Image Process. 23, 3336–3351. doi: 10.1109/TIP.2014.2323127

Zhang, J., Zhao, D., Xiong, R., Ma, S., and Gao, W. (2014c). Image restoration using joint statistical modeling in a space-transform domain. IEEE Trans. Circuits Syst. Video Technol. 24, 915–928. doi: 10.1109/TCSVT.2014.2302380

Zhang, J., Zhao, D., Zhao, C., Xiong, R., Ma, S., and Gao, W. (2012). Image compressive sensing recovery via collaborative sparsity. IEEE J. Emerg. Sel. Top. Circuits Syst. 2, 380–391. doi: 10.1109/JETCAS.2012.2220391

Zhang, K., Zuo, W., Gu, S., and Zhang, L. (2017). “Learning deep cnn denoiser prior for image restoration,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Honolulu, HI: IEEE), 3929–3938.

Keywords: image restoration, group sparsity residual, low-rank regularization, multi-scale, non-local self-similarity (NSS)

Citation: Ning W, Sun D, Gao Q, Lu Y and Zhu D (2023) Natural image restoration based on multi-scale group sparsity residual constraints. Front. Neurosci. 17:1293161. doi: 10.3389/fnins.2023.1293161

Received: 12 September 2023; Accepted: 09 October 2023; Published: 06 November 2023.

Edited by: Fudong Nian, Hefei University, China

Copyright © 2023 Ning, Sun, Gao, Lu and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dong Sun, sundong@ahu.edu.cn
