Teachers’ perceptions of school assessment climate and realities of assessment practices in two educational contexts

- Sultan Hassanal Bolkiah Institute of Education, Universiti Brunei Darussalam, Bandar Seri Begawan, Brunei

School and national assessment climates are critical contextual factors that shape teachers’ assessment preferences. In this study, 431 secondary school teachers from Ghana (n = 308) and Brunei (n = 123) were surveyed to examine how their perceptions of school assessment climates influenced their assessment practices. Semi-structured interviews were also conducted with six Bruneian and eight Ghanaian teachers to understand how they practiced assessment amid their school assessment climates. Teachers were found to practice assessment and perceive their school assessment climates differently. They were either identified as moderately fair but less precise, standard-focused and more precise, formative-oriented but moderately precise assessors, or preferred contextual and need-based assessment. Teachers reported positive perceptions of the nature of school assessment climates that existed in their schools. However, the examination-oriented climates that prioritised summative assessment compelled teachers to practice assessment against their beliefs. This resulted in academic dishonesty, excessive pressure, and mental health problems among teachers and students. The findings provide implications for assessment policy and practice in terms of how result-driven educational climates compel school leaders, teachers, and students to be gatekeepers and implementers of highly marketised and politicised education and assessment systems, which hinder lifelong learning and teacher-preferred assessment practices.

1 Introduction

Classroom assessment (henceforth, assessment) is positively associated with effective teaching and learning. Assessment practices can motivate or demotivate students and engage or disengage them in lifelong and reflective learning (Cauley and McMillan, 2010; Gilboy et al., 2015). How students evaluate what to learn, the approach and time to learn, motivation, and self-perception of competence depend on assessment practices (Harlen and Crick, 2003; Brookhart, 2013). Assessment practices also influence students’ learning approaches, whether deep or surface, and their academic success (Scouller, 1998; Deneen and Brown, 2016). Therefore, assessment and measurement specialists and educational researchers alike continue to advocate that teachers must have and apply a basic understanding of, and skills in, assessment to improve instructional effectiveness (Stiggins, 1991; Mertler and Campbell, 2004; Popham, 2014). Teachers are among the most important agents driving classroom assessment. Their competency in implementing fair, valid, and reliable assessment, and in providing feedback that highlights where students are in their learning, where they need to go, and how to be successful learners, is important for making holistic decisions about students (Hattie and Timperley, 2007; Black and Wiliam, 2018).

Even though teachers should have adequate cognitive understanding and skills to implement assessment, contemporary assessment practices require teachers to integrate their knowledge and skills with other unique contextual factors to ensure effective assessment practices. These factors, among others, are teaching and learning contexts, pedagogy, content, intrinsic characteristics, and multinational factors that may influence teachers’ assessment competencies (Willis et al., 2013; Xu and Brown, 2016; Looney et al., 2017; Herppich et al., 2018; DeLuca et al., 2019; Asamoah et al., 2023). For example, Herppich et al. (2018) argued that assessment literacy is beyond teachers’ assessment knowledge and skills but includes a diversity of experiences unique to their context and background experiences. This implies that assessment knowledge and technical skills are necessary but not sufficient for assessment literacy development. It is important to examine the interconnected factors of assessment to avoid oversimplification and to appreciate the complexity that shapes assessment practices in different educational contexts. This study examines how teachers’ perceptions of school assessment climates shape their assessment practices within their teaching and learning contexts. Quantitatively, the overriding research questions that guided the study are as follows:

1. What influence do teachers’ perceptions of school assessment climates have on their assessment practices?

a. Are there any distinct patterns in teachers’ assessment practices?

b. Are there any distinct patterns in teachers’ perceptions of school assessment climates?

c. What significant differences exist in teachers’ assessment practices depending on their perceptions of school assessment climates?

Further to the quantitative research questions, the key qualitative research questions that were addressed are:

1. How do teachers practice assessment?

2. How do teachers’ perceptions of school assessment climates influence their assessment practices?

2 Literature review

This literature review has four parts. It first examines assessment practices and the strategies and methodologies that educators employ in diverse settings. It then reviews assessment policies in the educational systems of Brunei and Ghana to identify the institutional frameworks that shape those practices. Next, it explores the link between school assessment climate and assessment practices, showing how the broader educational environment influences the implementation and efficacy of assessment strategies. Finally, it presents Kozma’s model of contextual factors, which serves as the guiding framework for understanding the interplay among the elements that influence assessment practices.

2.1 Assessment practices

Assessment practices encompass all processes undertaken by students and teachers to gather information about (a) what students know, (b) how much they know and understand, and (c) what they can do with the acquired knowledge and understanding (Black and Wiliam, 1998, 2018; Brookhart and McMillan, 2019). These practices involve providing feedback to modify and improve teaching and learning (assessment for learning), students’ self and peer assessment (assessment as learning), and judging students’ mastery at the end of the instructional period (assessment of learning) (Glazer, 2014; Yan and Brown, 2021; Baidoo-Anu et al., 2023a). Key assessment practices include effective questioning, sharing learning intentions and success criteria with students, and using summative assessment for formative purposes (Moss and Brookhart, 2009; Sadler, 2013; Lam, 2016; Othman et al., 2022; Emran et al., 2023). While teachers tend to favour assessment of learning, a limited number of studies have reported formative-oriented beliefs and practices (Price et al., 2011; Coombs et al., 2020; DeLuca et al., 2021).

Teachers’ assessment practices also involve developing and aligning assessment tasks with learning targets, including understanding the purpose, tools, and content of assessment, as well as the stage at which assessment is conducted (Koloi-Keaikitse, 2017; Cano, 2020; Grob et al., 2021; Asamoah et al., 2022). Additionally, teachers need to develop and use scoring rubrics to evaluate the quality of student work, including the criteria used to evaluate student work and how their responses are graded (Andrade, 2005). Communicating assessment results to stakeholders is also essential, requiring teachers to provide adequate information on time, format, nature, and directions for the assessment before it is conducted, as well as feedback to students and other stakeholders such as parents and educational administrators about students’ strengths and weaknesses after assessment is conducted (Cano, 2020). However, Figa et al. (2020) reported that teachers were unable to frequently provide assessment feedback to students and use feedback for instructional improvement due to low literacy in communicating assessment results.

Effective assessment practices should also include fair, reliable, and valid assessment. The assessment process should be flexible and reflect students’ capabilities, with decisions promoting equity, equality, transparency, consistency, and addressing the unique needs of students (Rasooli et al., 2019; Murillo and Hidalgo, 2020; Azizi, 2022). Fairness can also be standard, equitable, and differentiated, with assessment protocols being the same for all students to ensure standard fairness, and different assessment tasks implemented for some identified students, where necessary, to ensure equitable fairness (DeLuca et al., 2019). To promote valid and reliable assessment, teachers are expected to implement assessment tasks based on learning content and ensure that assessment results and decisions are consistent regardless of the rater and time (DeLuca et al., 2019). However, teachers’ assessment may have reliability issues due to low knowledge, values, and beliefs in assessment, leading to a preference for traditional tests over performance-based and criterion-referenced assessment options, which are associated with reliability and validity issues, coupled with heavy workload concerns (Falchikov, 2004; Xu and Liu, 2009). Teachers are also reported to overlook quality assurance in their assessment practices, resulting in poor test item construction and unfair assessment processes (Bloxham and Boyd, 2007; Klenowski and Adie, 2009).

2.2 Assessment policies in Bruneian and Ghanaian education systems

The education system in Brunei has undergone significant changes, particularly in assessment methods, with the introduction of the Bruneian National Education System for the 21st Century (SPN21). Traditionally, there has been a heavy emphasis on standardised examinations like the Brunei Cambridge General Certificate of Education Advanced and Ordinary Levels, and International General Certificate Secondary Examination. However, recent reforms advocate for a shift towards more formative assessment methods alongside summative ones. This includes encouraging self and peer-assessment among students and employing techniques like group work, portfolios, observations, project work, class exercises, and tests to cater for diverse learning needs (Ministry of Education, 2013). The School-Based Assessment (SBA) is a key component, focusing on diagnosing and intervening to support students facing difficulties. This approach prioritises the learning process over examination-oriented instruction. Additionally, initiatives like the Brunei Teachers’ Standards and Teacher Performance Appraisal highlight the importance of effective teaching, learning, and assessment practices in evaluating teachers’ competency (Ministry of Education, 2020).

Similarly, assessment in Ghana’s educational system is primarily examination-oriented, with a focus on both summative and formative practices as outlined in the teaching and learning syllabus. Assessment follows Bloom’s taxonomy, with 30% allocated to lower cognitive abilities and 70% to higher cognitive skills. SBA contributes 30–40% of every external examination, covering various forms of assessment to monitor learner achievement over time (Curriculum Research and Development Division, 2010).

The recent assessment policy, namely the New Standard-Based Ghanaian Curriculum, was introduced and implemented by the National Council for Curriculum and Assessment (2019, 2020). This reform emphasises formative assessment procedures over summative ones, urging teachers to provide timely feedback, effective questioning, and assessment tasks that promote student learning (National Council for Curriculum and Assessment, 2020). Teachers conduct summative assessments at the end of courses or semesters, grading students based on proficiency levels ranging from highly proficient (80%+), proficient (68–79%), approaching proficient (52–67%), developing (40–51%), to emerging (39% and below). These levels gauge students’ mastery of skills, understanding of concepts, and their ability to apply them to real-world tasks (National Council for Curriculum and Assessment, 2020). Assessment should also consider students’ core competencies across affective, psychomotor, and cognitive domains, evaluating their knowledge and skills in reading, writing, arithmetic, and creativity. Various assessment methods such as class exercises, quizzes, tests, portfolios, projects, and journal entries are utilised for assessment for learning and assessment as learning (National Council for Curriculum and Assessment, 2020).
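The proficiency bands described above amount to a threshold lookup. The following is a minimal Python sketch, not part of the curriculum document: the function name `proficiency_level` is hypothetical, and treating each band's lower bound as inclusive (so that a fractional score such as 39.5% falls in the emerging band) is an assumption.

```python
# Lower bounds (in percent) of the proficiency bands reported in the text,
# ordered from highest to lowest. Treating each lower bound as inclusive is
# an assumption; boundary and fractional scores may be handled differently
# in practice.
BANDS = [
    (80, "highly proficient"),
    (68, "proficient"),
    (52, "approaching proficient"),
    (40, "developing"),
    (0, "emerging"),
]


def proficiency_level(score: float) -> str:
    """Return the proficiency band for a percentage score between 0 and 100."""
    if not 0 <= score <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    for lower_bound, label in BANDS:
        if score >= lower_bound:
            return label
    raise AssertionError("unreachable: the last band starts at 0")
```

For example, `proficiency_level(68)` returns `"proficient"` and `proficiency_level(39)` returns `"emerging"`.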

The educational systems of Brunei and Ghana, like others globally, are undergoing a significant transition towards more comprehensive assessment practices. Initiatives such as the Bruneian National Education System for the 21st Century and the New Standard-Based Ghanaian Curriculum exemplify this shift. While both systems maintain a focus on summative assessments, there is a growing acknowledgment of the value of integrating formative assessment strategies to accommodate diverse student needs and enrich the learning experience. As Harun et al. (2023) note, the evolving assessment landscape in these contexts makes assessment literacy among educators critically important and calls for supportive teaching and learning environments that facilitate effective assessment practices and enhance teacher professionalism.

2.3 School assessment climate and assessment practices

School climate refers to the unique characteristics that distinguish one school from another, shaping the perceptions of both the school community and those outside it (Cohen, 2009). In this study, we utilize the concept of school climate or culture to denote how teaching and learning are conducted within a school. Specifically, school assessment climate or culture encompasses the conception and practice of assessment, as well as the available support within the school to facilitate high-quality assessment (Inbar-Lourie, 2008). According to Inbar-Lourie (2008), the assessment climate allows school members to adopt certain beliefs and assumptions regarding the nature and role of assessment in the learning process.

Research, predominantly conducted in Western contexts, has highlighted the association between assessment cultures or climates and assessment practices. For instance, teachers who perceive their school climates as supportive of assessment of learning principles are more likely to prioritise summative assessment to meet student accountability (Carless, 2011; Jiang, 2020). Similarly, Vogt and Tsagari (2014) found that Greek and German teachers were more inclined to use paper and pencil tests in language assessment than their counterparts in Cyprus, a difference attributed to assessment climates favouring assessment of learning. The dominance of an examination culture in Singapore compels teachers to develop competencies in assessment of learning (Sellan, 2017). Moreover, school assessment policies, such as those in China directing pre-service teachers on assessment approaches, have implications for the implementation of formative assessment (Xu and He, 2019).

Studies by Brown et al. (2009, 2015) have emphasised the impact of assessment policies on teachers’ assessment practices. In Hong Kong, teachers believed that learning outcomes improved due to the use of assessment to prepare students for examinations, reflecting cultural norms on examinations influencing school culture and assessment reforms (Brown et al., 2009). Similarly, in Indian private schools, a greater emphasis on school-based and internal assessment was associated with increased assessment practices aimed at educational improvement (Brown et al., 2015). These studies underscore the significant influence of assessment policies on teachers’ practices, with findings from both Hong Kong and Indian private schools highlighting the pivotal role of assessments in shaping educational outcomes and fostering improvements in teaching methodologies.

Additionally, management support for assessment has been shown to influence assessment practices. For example, Pedder and MacBeath (2008) argued that management support for teacher development and collaboration predicted the level of school practices. Shared visions and a sense of belonging among employees create a climate conducive to teaching and learning. Conversely, teachers may feel constrained in their assessment practices if they perceive limited support or voice in assessment decisions made by school management (Coombe et al., 2012). Feedback practices and teacher implementation of assessment methods are also influenced by school policies and conditions, highlighting the importance of school leaders and policymakers in providing an environment conducive to effective assessment practices.

2.4 Kozma’s model of contextual factors

This study utilises Kozma’s (2003) model of contextual factors to comprehend teachers’ assessment practices within the framework of their school assessment climates. Teaching and learning processes, including assessment, can be significantly impacted by external factors beyond the classroom. These factors encompass attributes of students, teachers, schools, and the broader community. Teachers who design assessments to gauge student learning must grasp the settings and contexts in which students learn and are evaluated. This understanding is pivotal in fostering a formative-driven environment that can enhance teaching and learning outcomes. Kozma underscores how certain contextual factors have the potential to influence teaching and learning, offering crucial insights into how assessment practices can also be shaped by these factors.

Contextual factors are specific to individuals, groups, institutions, or societies. Kozma (2003) identifies three distinct yet interrelated levels of contextual factors that can impact teaching strategies: micro, meso, and macro levels. At the micro-level, Kozma highlights immediate classroom factors, including the characteristics of teachers and students that can influence teaching practices. This encompasses students’ experiences in assessment and how teachers collaborate with students to make assessment decisions (Fulmer et al., 2015).

Factors outside the classroom but within the school environment are categorised as meso-level factors (Kozma, 2003). These factors directly influence how teaching and learning activities are conducted in the classroom. In the context of assessment, meso-level factors specific to the school may include school policies, leadership support for assessment practices, school climate, and the provision of materials and technology to support assessment.

The macro-level encompasses national factors that influence both micro (classroom) and meso (school) factors. These factors include the broader educational systems and goals of a country that influence school practices (Kozma, 2003). Additionally, they encompass how the activities of other organisations in the community, cultural norms, and educational decisions impact teaching, learning, and assessment.

A substantial and growing body of knowledge, as evidenced by systematic reviews, has demonstrated how contextual factors influence assessment practices (Brown and Harris, 2009; Willis et al., 2013; Fulmer et al., 2015; Xu and Brown, 2016; Looney et al., 2017). For instance, Brown and Harris (2009) proposed a relationship between contextual factors, assessment practices, and student learning outcomes. Building on Kozma’s model, Fulmer et al. (2015) suggested that teachers’ knowledge, values, and conceptions of assessment are linked to their assessment practices. They recommended further research to explore how meso-level factors, such as school assessment climates, influence assessment practices. According to Xu and Brown (2016), assessment literacy is contingent upon assessment knowledge, skills in integrating assessment with teaching and learning, and teachers’ identity as assessors.

Assessment practices can be influenced by a multitude of factors unique to teachers, including the prevailing assessment climates in their schools. Kozma’s model offers a valuable framework for understanding how teachers’ assessment practices are shaped by the assessment climates within their schools. The theory is crucial for understanding the assessment practices of teachers in Brunei and Ghana within the context of their respective school assessment climates.

In sum, the education and assessment policies in both Ghana and Brunei aim to shift teaching and learning away from exam-centric and rote learning environments towards instructional settings that emphasise formative assessment to enhance teaching and learning quality. These reforms also aim to equip students with 21st-century skills such as critical thinking, creativity, and communication (Ministry of Education, 2013; National Council for Curriculum and Assessment, 2020). While these reforms prioritise school-based formative assessment over summative evaluation, the implementation may face challenges, particularly in environments accustomed to examination-focused teaching. Teachers’ perceptions of these reforms may influence their adoption, with negative attitudes potentially hindering effective implementation (Brown, 2004; Brown et al., 2011).

To meet evolving accountability standards, teachers in both contexts must employ valid and reliable assessment methods. However, research exploring teachers’ assessment practices and perceptions in response to these changes remains limited, particularly in African and Southeast Asian countries such as Ghana and Brunei. Given our comprehensive understanding of the education and assessment systems in both countries compared to other contexts, this study strategically selects samples from these two educational environments to address this research gap. The study examines teachers’ perspectives on their school assessment climates and how these climates influence their assessment practices and beliefs. While both countries share similar assessment priorities, this initial investigation aims not to compare them but to study them concurrently to understand teachers’ assessment practices within their respective school contexts.

3 Methods

3.1 Design and approach

The potential impact of teachers’ perceptions of school assessment climates on their assessment practices was investigated using a mixed-method research design employing a sequential explanatory approach (Creswell, 2014). Rooted in the pragmatist paradigm, this approach allows for the collection and analysis of quantitative data preceding the collection of qualitative data, which serves to elucidate the quantitative findings. Recognising that neither the quantitative nor qualitative approach alone could fully elucidate the intricate relationship between school assessment climates and teachers’ assessment practices, both methodologies were employed to address this limitation (Teddlie and Tashakkori, 2009). This study gave precedence to the quantitative phase, conducting online surveys wherein teachers self-reported their perceptions of school assessment climates and assessment practices. The subsequent qualitative phase involved semi-structured and in-depth interviews with a subset of participants from the quantitative phase, aimed at providing insights into the quantitative findings.

3.2 Participant and sampling

A total of 431 secondary school teachers, comprising 123 from Brunei and 308 from Ghana, participated in the study. Participants were selected using snowball and convenience sampling techniques (Naderifar et al., 2017; Stratton, 2021). In snowball sampling, participants helped identify additional potential participants, facilitated by sharing the survey link with established contacts. Given the constraints of the COVID-19 pandemic, data collection relied on digital dissemination methods such as WhatsApp, Facebook, and emails. Convenience sampling involved selecting participants based on their availability and accessibility to the survey links. Participation was contingent upon factors including geographical location, availability, willingness, and ease of access to the survey links.

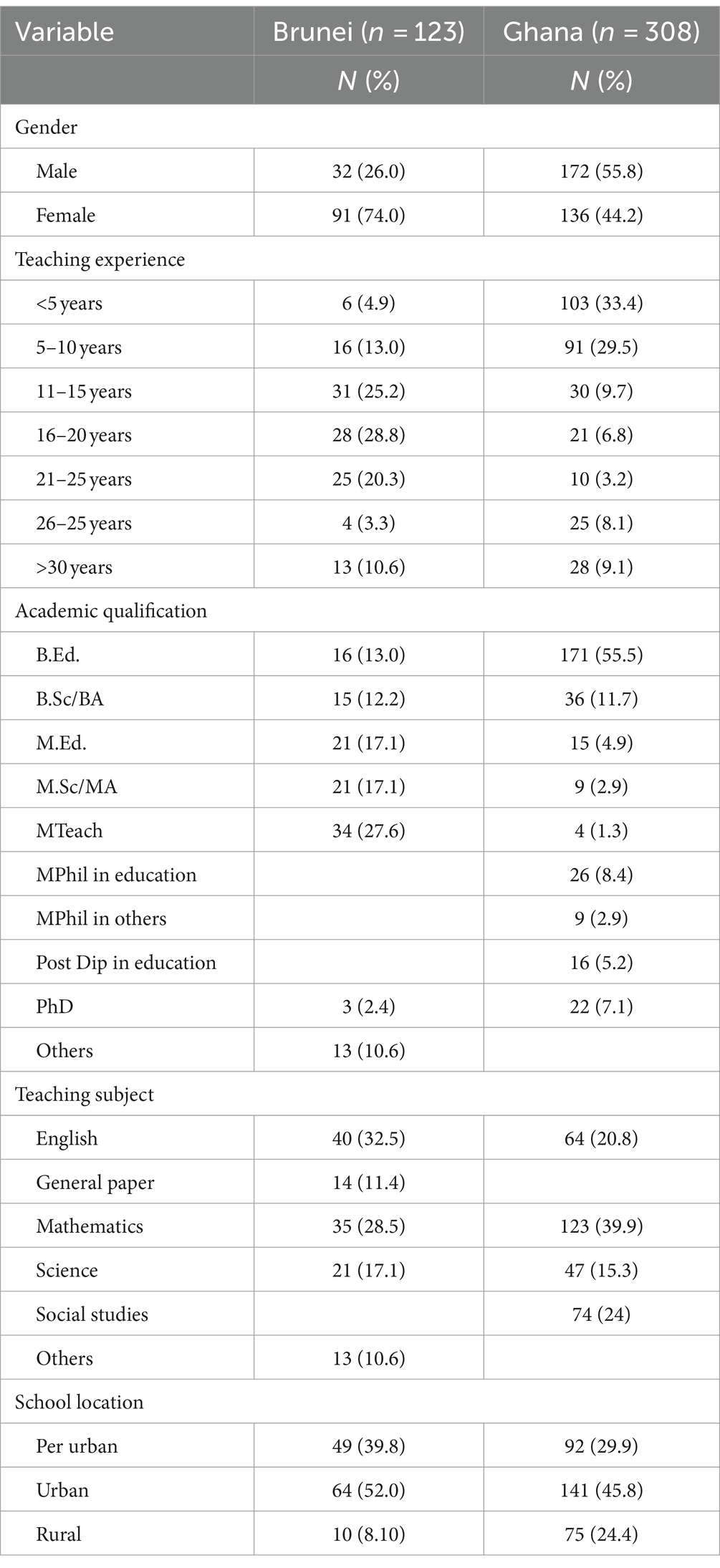

Regarding the Bruneian participants detailed in Table 1, the majority were female (74%), and the largest experience group (28.8%) had 16 to 20 years of teaching experience. Over 60% held master’s degrees, the largest percentage (32.5%) identified as English teachers, and the majority of schools (more than 60%) were located in urban areas. Among the Ghanaian participants, on the other hand, over 55% were male, and the largest experience group (33.4%) had less than 5 years of teaching experience. More than half of the Ghanaian participants held bachelor’s degrees in education, and the largest subject group (39.9%) taught mathematics. Approximately 46% of the schools represented by Ghanaian participants were situated in urban areas.

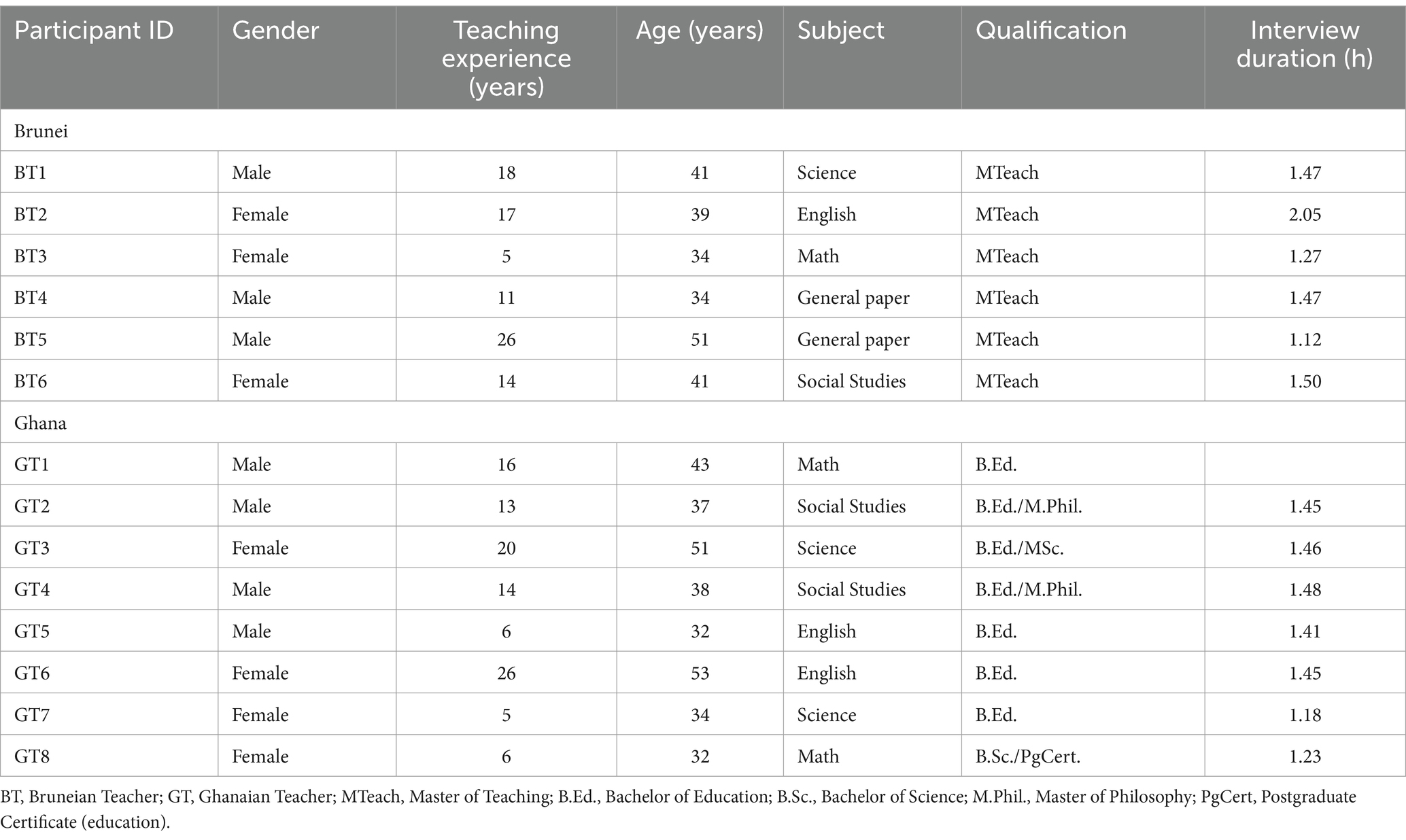

Semi-structured interviews were conducted with a total of 14 participants, comprising six from Brunei and eight from Ghana. The participants were selected using multistage sampling techniques, including random, purposive, and stratified sampling, aimed at gaining insights into teachers’ assessment practices and how their school assessment climates influenced these practices. A total of 8 Bruneian and 12 Ghanaian teachers agreed to participate in the interviews. Simple and stratified random sampling methods were employed to select the participants, with variables such as gender, years of teaching experience, and teaching subjects used for stratification. For instance, the proportion of female and male participants among Bruneian teachers was determined, and the interview proportion was calculated accordingly based on the total female population. The same approach was applied to determine the proportion of participants based on years of teaching experience and teaching subjects. After stratification, simple random sampling was used to select participants meeting the criteria. Subsequently, participants’ IDs were randomly selected from pieces of paper placed in boxes. The same procedures were followed to select the 8 Ghanaian participants.
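The stratify-then-draw procedure described above can be sketched in Python. This is an illustration under stated assumptions, not the authors' procedure (which drew IDs on paper from boxes): the function names are hypothetical, only one stratification variable is used, allocations are rounded with a largest-remainder rule, and the volunteer records in the usage note are invented for demonstration.

```python
import random
from collections import defaultdict


def proportional_allocation(stratum_sizes: dict[str, int], n_total: int) -> dict[str, int]:
    """Split n_total across strata in proportion to stratum size.

    Largest-remainder rounding (an assumption) makes the allocations
    sum exactly to n_total.
    """
    population = sum(stratum_sizes.values())
    exact = {s: n_total * size / population for s, size in stratum_sizes.items()}
    alloc = {s: int(share) for s, share in exact.items()}
    leftover = n_total - sum(alloc.values())
    # Give the remaining places to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda s: exact[s] - alloc[s], reverse=True)
    for s in by_remainder[:leftover]:
        alloc[s] += 1
    return alloc


def stratified_sample(volunteers: list[dict], key: str, n_total: int, seed=None) -> list[dict]:
    """Draw a simple random sample within each stratum defined by `key`."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in volunteers:
        strata[person[key]].append(person)
    alloc = proportional_allocation({s: len(m) for s, m in strata.items()}, n_total)
    selected = []
    for s, members in strata.items():
        selected.extend(rng.sample(members, alloc[s]))
    return selected
```

With eight illustrative volunteers split evenly by gender, `stratified_sample(volunteers, "gender", 6, seed=1)` selects three from each stratum. Stratifying on several variables at once would require crossing them into combined strata, which this sketch does not attempt.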

The six Bruneian and eight Ghanaian participants were deemed sufficient to understand how their school assessment climates influenced their assessment practices. Redundancy in the interview data was observed by the sixth participant in Brunei and the eighth participant in Ghana. According to Galvin (2015), there is over a 99% chance of identifying a theme among six participants if that theme is shared by 55% of the population. The demographic characteristics of the interviewed teachers are summarised in Table 2. Among the six Bruneian participants, teaching experience ranged from 5 to 26 years, and ages ranged from 34 to 51 years, with all possessing a Master of Teaching degree. Similarly, among the eight Ghanaian teachers, teaching experience ranged from 5 to 26 years, and ages ranged from 32 to 53 years, with the majority holding a Bachelor of Science and a Postgraduate Certificate in Education. In both contexts, participants were evenly distributed by gender. The diverse educational and professional backgrounds of the participants in both phases of the study facilitated accurate insights into their assessment practices and school assessment climates.
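The figure attributed to Galvin (2015) is consistent with a simple binomial argument: if a theme is held by a proportion p of the population and n participants are drawn independently, the chance that at least one of them holds it is 1 − (1 − p)^n. The sketch below assumes this is the reasoning behind the cited figure; the function name is hypothetical.

```python
def prob_theme_observed(prevalence: float, n_interviews: int) -> float:
    """Probability that at least one of n independent interviewees holds a theme.

    Assumes interviewees are drawn independently and at random, which
    purposive or snowball recruitment only approximates.
    """
    if not 0 <= prevalence <= 1:
        raise ValueError("prevalence must be between 0 and 1")
    return 1 - (1 - prevalence) ** n_interviews


# Six interviews, theme shared by 55% of the population.
p_six = prob_theme_observed(0.55, 6)
print(f"{p_six:.4f}")  # prints 0.9917
```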

3.3 Instruments

Quantitative data was collected using online surveys comprising three sections. The first section gathered the demographic data of the participants. The second section consisted of 27 items that measured the assessment practices of teachers. The items were adapted from Part C of the Approaches to Classroom Assessment Inventory (DeLuca et al., 2016b) and were measured on a six-point scale, from 1 (strongly disagree) to 6 (strongly agree). The third section consisted of 15 items adapted from Part 6 of the School Climate Assessment Instrument (Alliance for the Study of School Climate, 2016) and measured on a six-point scale, from 1 (extremely low) to 6 (extremely high). The two instruments were suitable for achieving the objectives of this study. For example, the items that measured assessment practices were designed based on contemporary assessment literacy standards, including assessment purpose, process, fairness, validity, and reliability. The school assessment climate instrument is a widely used and validated instrument for measuring school assessment climate across contexts.

In addition to the online survey, semi-structured and in-depth interviews were conducted based on the survey’s results to understand how school assessment climates influenced assessment practices. The interview questions asked teachers about their experiences with their assessment practices and the prevailing school assessment policies that influence them. Sample questions included: What is the purpose of your assessment? What processes do you undertake when assessing your students? How do you ensure that your assessment is fair to all students? How do you ensure consistency and validity in your assessment? What is the nature of assessment practices in your school? How does the environment or condition in your school hinder or improve your assessment practices? The quantitative and qualitative phases were administered separately, but both phases were integrated in the interpretation and discussion of the findings.

3.3.1 Validity and reliability of instruments

In DeLuca et al. (2016a), the psychometric properties of the instrument that measured assessment practices were determined by an Exploratory Factor Analysis (EFA) using 400 Canadian teachers. This resulted in internal consistencies of 0.90 and 0.89 for the two subscales that measured assessment practices. The SCAI instrument had an initial internal consistency of 0.88 among teachers (ASSC, 2022). The measurement quality of both instruments was also assessed through EFA and a Confirmatory Factor Analysis (CFA). The purpose was not to validate the instruments but to assess their psychometric properties among the Bruneian and Ghanaian samples. Summaries of the EFA and CFA results are detailed in the results section.

The interview guide consisted of open-ended questions, which were validated by two experts in qualitative research. A teacher from each context was invited to participate in an informal pilot interview to help revise and clarify the questions and to address any unforeseen issues before the main interviews. After the pilot interviews, the two participants provided feedback, which helped revise the semi-structured interview guide. Comments from both the expert review and the informal interviews concerned the pace of questioning and helped clarify wording and language. These corrections were made before the final interviews were conducted, which improved the accuracy of the interview data. Throughout the interview process and analysis, trustworthiness was ensured through credibility, dependability, transferability, and confirmability (Guba and Lincoln, 2005). To ensure dependability and confirmability, all processes and procedures involved in the data collection, the analysis and interpretation of the data, and the preparation of the final interview report used in this study have been described. The study contexts have been justified, and all interview findings have been supported by theory-based and relevant excerpts that resulted from the data analysis. This improves transferability and the reader’s understanding of the study findings, and allows inferences to be made in similar contexts. Finally, credibility was ensured by collecting data from two sequential sources, surveys and interviews. The quantitative phase was dominant, while the qualitative phase supported and explained the quantitative phase.

3.4 Ethical issues and data collection

This study received ethical clearance from the Ethics Committee of the Sultan Hassanal Bolkiah Institute of Education, Universiti Brunei Darussalam. Participants were informed of their rights and responsibilities through invitation letters, consent forms, and information sheets. They had the opportunity to withdraw from the investigation whenever they wished. The information they provided was treated as confidential and their identities were kept anonymous. From August to December 2022, quantitative data was collected through online surveys set up in Qualtrics. Two separate Qualtrics links were generated and distributed to all participants through emails, Facebook and WhatsApp group chats; each survey took about 25 min to complete.

After the quantitative data collection and analysis, all participants who had indicated in the survey that they were willing to be interviewed were contacted through the details they provided. Online (via WhatsApp voice calls) and in-person interviews were conducted in both study contexts based on the availability and convenience of the participants. All interviews were conducted in English between December 2022 and February 2023 and lasted an average of 1.20 h. An informal conversation style was used to allow participants to share their experiences in a relaxed manner. Probing questions were asked to follow up on the responses the participants provided, which promoted in-depth understanding of their experiences of the issues discussed. Field notes (i.e., short phrases and sentences) on some key responses were taken to complement the interview data. All interviews were recorded on a voice recorder for transcription and further analysis.

3.5 Data analysis

Before the main analysis, EFA and CFA were performed to examine the measurement quality of the instruments. The EFA was performed in SPSS. Principal Component Analysis (PCA) was used as the extraction method considering the sample sizes (Bandalos and Finney, 2018). The Kaiser-Meyer-Olkin measure of sampling adequacy (KMO) and Bartlett’s test of sphericity were used to assess sampling adequacy (Hutcheson and Sofroniou, 1999). A cut-off point of 0.40 was used to suppress the factor loadings (Stevens, 1992). Eigenvalues greater than 1 and scree plots were used to determine factor solutions. Monte Carlo PCA for Parallel Analysis was performed to confirm the factor solutions suggested by the eigenvalues and scree plots (Watkins, 2000). All items that did not meet the cut-off or did not load significantly on a factor were deleted. Cronbach’s alpha reliabilities (α) were used to evaluate internal consistency. Alpha values of 0.70 and above suggest acceptable internal consistency (Pallant, 2010), and this was the evaluation criterion for the emerged factors.
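As an illustration of the reliability criterion described above, the following minimal sketch computes Cronbach’s alpha from raw item scores and applies the 0.70 benchmark. The analysis in this study was run in SPSS; the function names and the small response matrix below are hypothetical and serve only to show the calculation.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of columns, one per item, each holding the scores
    of the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of respondents")

    # Total score per respondent across the k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    sum_item_var = sum(variance(col) for col in items)  # sample variances
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


def is_acceptable(alpha, threshold=0.70):
    """Apply the 0.70 benchmark cited in the text (Pallant, 2010)."""
    return alpha >= threshold


if __name__ == "__main__":
    # Hypothetical responses: 3 items answered by 6 teachers (1-5 scale).
    responses = [
        [4, 5, 3, 2, 4, 5],
        [4, 4, 3, 2, 5, 5],
        [5, 4, 2, 3, 4, 4],
    ]
    alpha = cronbach_alpha(responses)
    print(f"alpha = {alpha:.3f}, acceptable = {is_acceptable(alpha)}")
```

The sketch uses sample variances (denominator n − 1) throughout; because alpha depends only on the ratio of the summed item variances to the total-score variance, using population variances consistently would give the same value.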

The CFA was performed in AMOS to confirm the explored factors of the EFA. Indices such as chi-square divided by the degrees of freedom (i.e., cmin/df), Comparative Fit Index (CFI), Tucker-Lewis Index (TLI), Root Mean Square Error of Approximation (RMSEA) and Standardised Root Mean Square Residual (SRMR) were used as the evaluation criteria. Cmin/df, RMSEA, and SRMR were used to determine the absolute fit of the theoretical model. The CFI and TLI judged incremental fit (Alavi et al., 2020). Also, low values of Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) judged parsimonious model fit (Schumacker and Lomax, 2004). For a model to approximate good fit, the literature suggests that cmin/df should be less than 3.0 or 5.0, the p-value should be greater than 0.05, CFI should be greater than 0.95 or 0.90 (or is sometimes permissible if greater than 0.80), SRMR should be less than 0.09, and RMSEA should be less than 0.05 to be good, or from 0.05 to 0.10 to be moderate (e.g., Hu and Bentler, 1999). Composite reliability (CR), discriminant validity, and convergent validity were also used to judge the composite internal consistency and validity of the confirmed items. According to Hair et al. (2010), an acceptable CR value should be greater than 0.70, Average Variance Extracted (AVE) should be greater than 0.50, and Maximum Shared Variance (MSV) should be less than AVE or the square root of AVE should be greater than the inter-construct correlations. These evaluation criteria were used to examine the psychometric properties of the emerged scales.
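The reliability and validity criteria above can be made concrete with a short sketch. It assumes the commonly used formulas for CR and AVE based on standardised factor loadings, which the text does not spell out, and the loadings and correlation shown are hypothetical values chosen for illustration, not results from this study.

```python
from math import sqrt


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardised loadings, so each error variance is 1 - loading^2.
    """
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardised loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def discriminant_validity_ok(ave, inter_construct_correlations):
    """Check that sqrt(AVE) exceeds every inter-construct correlation."""
    return all(sqrt(ave) > abs(r) for r in inter_construct_correlations)


if __name__ == "__main__":
    loadings = [0.70, 0.80, 0.60]   # hypothetical standardised loadings
    correlations = [0.65]           # hypothetical correlation with another factor

    cr = composite_reliability(loadings)
    ave = average_variance_extracted(loadings)
    print(f"CR = {cr:.3f} (criterion: > 0.70)")
    print(f"AVE = {ave:.3f} (criterion: > 0.50)")
    print("discriminant validity:", discriminant_validity_ok(ave, correlations))
```

With these hypothetical loadings, CR clears the 0.70 criterion while AVE falls just short of 0.50, which is the kind of pattern that would be described as satisfactory composite reliability but weak convergent validity.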

In the main analysis, frequency counts were performed in SPSS to clean the data and address all missing values. For the first research question and related sub-questions that focused on the distinct patterns of assessment practices and school assessment climates, a latent profile analysis (LPA) was performed in Mplus to examine such patterns (Magidson and Vermunt, 2004). Composite scores on each scale were estimated in SPSS and exported to Mplus for the LPA. The LPA involved testing one- to six-class models before determining suitable class models based on specific assumptions (Nylund et al., 2007). Our study was centred on collecting data from distinct populations in two different countries to understand their assessment practices within the context of school assessment climates, rather than comparing groups or responses. Utilising LPA aligned with our research objectives, allowing for a person-centred analysis considering the population differences. Consequently, we did not conduct measurement invariance testing, an approach consistent with prior studies such as DeLuca et al. (2021) and Coombs et al. (2018), who also employed LPA without testing for invariance. To examine whether assessment practices differed depending on school assessment climates, a chi-square test of association was performed in SPSS. The class probabilities that emerged from the LPA or the factors that emerged from the CFA of the two scales were used. Statistical significance was determined at the 5% alpha level.
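To show how the information criteria used for class enumeration relate to one another, the sketch below computes AIC, BIC, and the sample-size adjusted BIC (reported here as SSA BIC) from a model log-likelihood. It assumes the standard definitions, with the adjusted BIC replacing n by (n + 2)/24. The log-likelihood and the number of free parameters in the usage example are not reported in this paper; they are back-calculated from the fit values reported for the Bruneian three-class model of assessment practices and should be read as an inference.

```python
from math import log


def aic(loglik, k):
    """Akaike Information Criterion for log-likelihood `loglik`, `k` free parameters."""
    return -2 * loglik + 2 * k


def bic(loglik, k, n):
    """Bayesian Information Criterion for sample size `n`."""
    return -2 * loglik + k * log(n)


def sabic(loglik, k, n):
    """Sample-size adjusted BIC, assuming the usual n* = (n + 2) / 24 adjustment."""
    return -2 * loglik + k * log((n + 2) / 24)


def implied_free_parameters(aic_value, bic_value, n):
    """Back out k from reported AIC and BIC, since BIC - AIC = k * (ln n - 2)."""
    return (bic_value - aic_value) / (log(n) - 2)


if __name__ == "__main__":
    n = 123                      # Bruneian sample size
    k = 14                       # inferred, not reported in the paper
    loglik = -591.8795           # back-calculated from AIC = 1211.759 and k = 14
    print(f"AIC   = {aic(loglik, k):.3f}")
    print(f"BIC   = {bic(loglik, k, n):.3f}")
    print(f"SABIC = {sabic(loglik, k, n):.3f}")
    print(f"implied k = {implied_free_parameters(1211.759, 1251.129, n):.2f}")
```

Lower values on all three criteria favour a model, but BIC penalises additional classes more heavily than AIC once n exceeds about 7, which is why the criteria can disagree and are typically read alongside entropy and the likelihood ratio tests.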

Regarding the second and third research questions that focused on how teachers practiced assessment and how their school assessment climates influenced their assessment practices, thematic analysis was used to analyse interview transcripts and field notes (Braun and Clarke, 2006). The data was read thoroughly for in-depth understanding and manually analysed using deductive and inductive coding. The inductive process helped observe patterns and label recurring themes, while deductive coding helped identify and label themes based on the research questions and theoretical framework (Fereday and Muir-Cochrane, 2006). To improve the consistency of the interview analysis, two independent qualitative data analysts were invited to code and analyse the transcripts using inductive and deductive processes. Their negotiated consensus resulted in a coding agreement of 88%, which is sufficient (Miles and Huberman, 1994). Member checking was done by inviting interview participants to judge the accuracy of their responses after transcription. Combining deductive coding, inductive coding, and field notes ensured triangulation, which improved the accuracy of the interview data. Direct excerpts from the raw data have been used to confirm the general interview findings. In the analysis, participants in Brunei and Ghana have been referred to as BT and GT, respectively.
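A coding agreement figure of this kind can be computed as the share of segments to which two coders assigned the same code. The sketch below shows that calculation and, as an addition not used in this study, Cohen’s kappa, which corrects the raw agreement for chance. The coded segments and code labels are hypothetical.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Percentage of segments given the same code by both coders."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same non-empty set of segments")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Chance-corrected agreement (not reported in the paper; shown for comparison)."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b) / 100.0
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Expected agreement if each coder assigned codes independently
    # at their own observed rates.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical codes for eight transcript segments.
    coder_1 = ["summative", "formative", "fairness", "summative",
               "pressure", "summative", "fairness", "formative"]
    coder_2 = ["summative", "formative", "fairness", "summative",
               "pressure", "formative", "fairness", "formative"]
    print(f"agreement = {percent_agreement(coder_1, coder_2):.1f}%")
    print(f"kappa     = {cohens_kappa(coder_1, coder_2):.3f}")
```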

4 Results

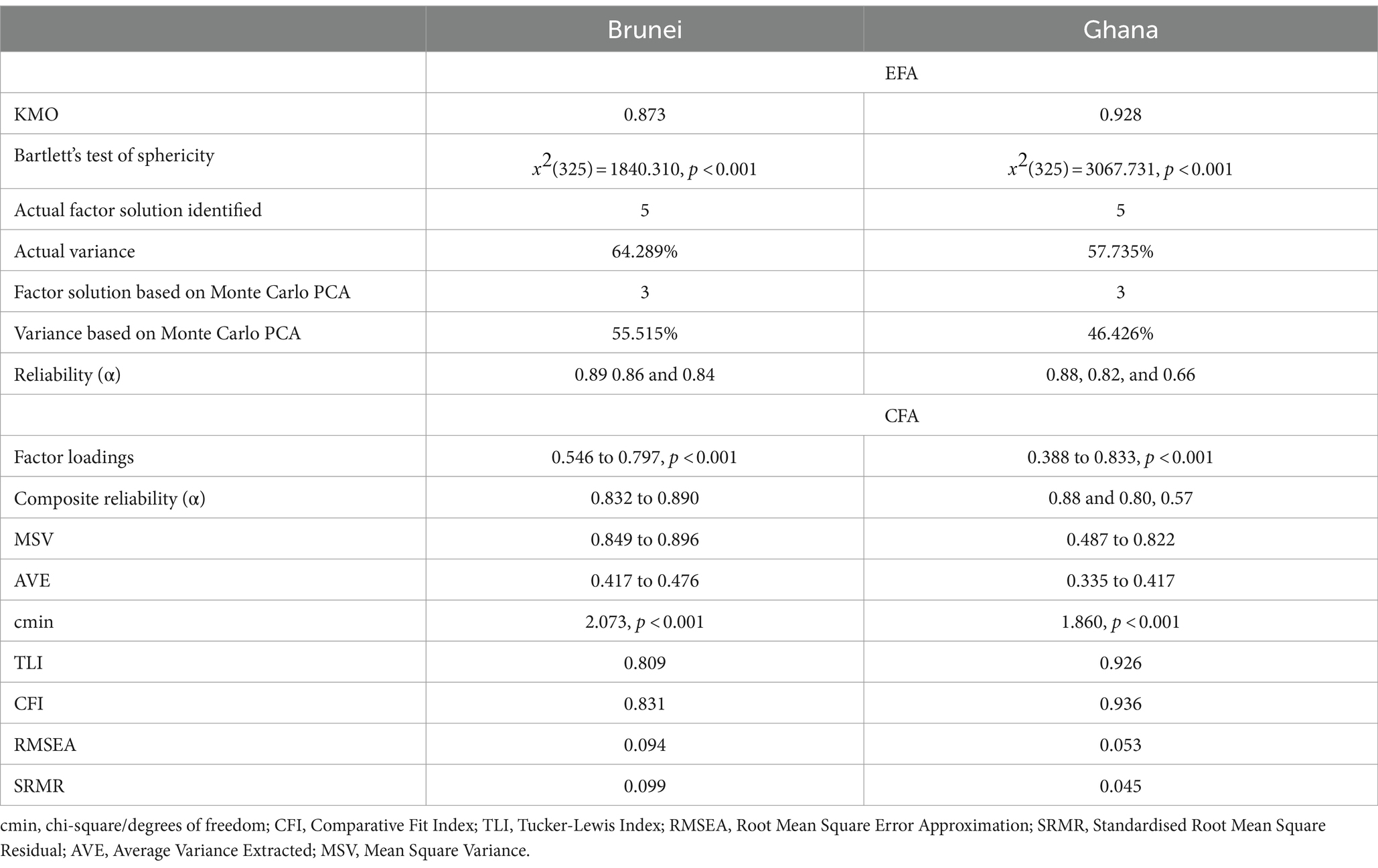

Sampling adequacy for the EFA was fulfilled among the Bruneian sample, KMO = 0.873, Bartlett’s test of sphericity χ²(325) = 1840.310, p < 0.001. The analysis resulted in a three-factor solution that described teachers’ assessment practices, which accounted for 55.515% of the cumulative variance. The factors were formative-focused assessment (9 items), equitable and differentiated assessment (7 items), and standard-focused and precise assessment (7 items), with acceptable internal consistencies (see Table 3). The CFA confirmed the three factors that emerged from the EFA, with satisfactory composite reliability, but weak discriminant and convergent validity (Hair et al., 2010); however, the model exhibited a fair approximation to good fit, as shown in Table 3 (Hu and Bentler, 1999).

Similarly, sampling adequacy for the EFA was fulfilled among the Ghanaian sample, KMO = 0.928 and Bartlett’s test of sphericity χ²(325) = 3067.731, p < 0.001. A three-factor solution, which accounted for 46.426% of the cumulative variance, was supported (see Table 3). The factors that described teachers’ assessment practices were purpose and process of assessment (11 items), need-based and accurate assessment (8 items), and contextual and standard fairness (3 items), with acceptable internal consistencies (see Table 3). The CFA confirmed the three-factor model from the EFA, with moderate to strong composite reliabilities, but weak discriminant and convergent validity. A satisfactory approximation to good fit was achieved (see Table 3).

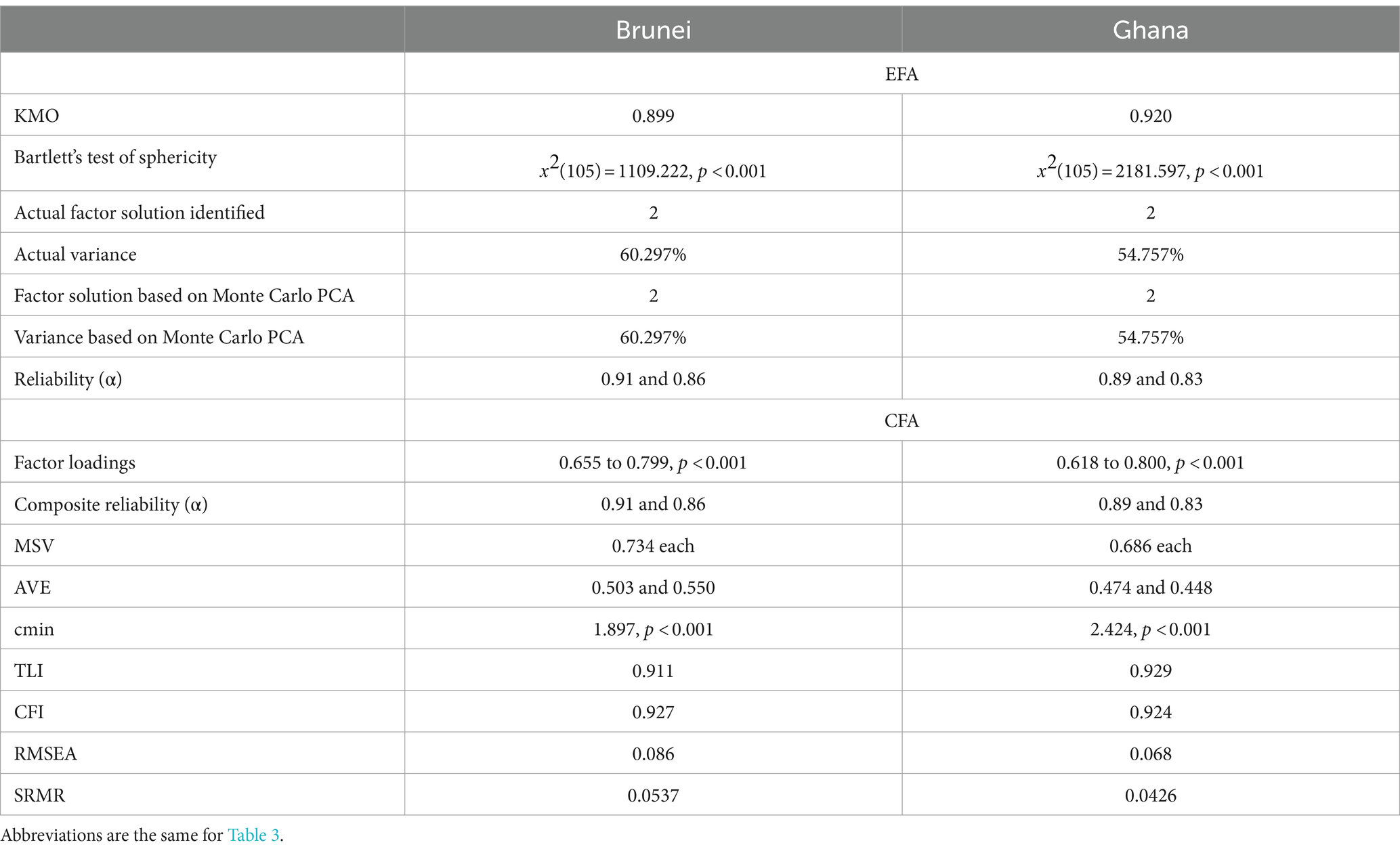

Regarding the scale that measured school assessment climate, sampling adequacy was fulfilled among the Bruneian sample, KMO = 0.899 and Bartlett’s test of sphericity χ²(105) = 1109.222, p < 0.001. A two-factor solution was identified to describe the nature of the school assessment climates that existed in schools. The two factors explained 60.297% of the cumulative variance (see Table 4). The factors were assessment-focused (10 items) and teaching and learning-focused climates (5 items), with excellent internal consistencies. The CFA confirmed the two factors that emerged from the EFA, with excellent composite reliabilities, good convergent validity, but weak discriminant validity, as well as a moderate approximation to good fit (see Table 4).

Sampling adequacy was also met for the EFA among the Ghanaian sample, KMO = 0.920 and Bartlett’s test of sphericity χ²(105) = 2181.597, p < 0.001. A two-factor solution, which accounted for 54.757% of the cumulative variance, was supported to describe the nature of school assessment climates that existed in schools (see Table 4). The factors were student-centred assessment climate (9 items) and school support to instruction and assessment climate (6 items), with acceptable internal consistencies. The CFA confirmed the two-factor solution from the EFA, with acceptable composite reliabilities, weak discriminant and convergent validity, but an adequate approximation to good model fit (see Table 4).
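The degrees of freedom reported for Bartlett’s test follow directly from the number of items analysed, since the test has p(p − 1)/2 degrees of freedom for p items. The short sketch below shows this relationship and its inverse. The item counts it recovers (26 for the assessment practices scale and 15 for the climate scale) are inferred from the reported degrees of freedom rather than stated in this section.

```python
from math import isqrt


def bartlett_df(n_items):
    """Degrees of freedom of Bartlett's test of sphericity for `n_items` variables."""
    return n_items * (n_items - 1) // 2


def items_from_df(df):
    """Invert df = p(p - 1) / 2 to recover the number of items p."""
    root = isqrt(1 + 8 * df)
    if root * root != 1 + 8 * df:
        raise ValueError("df does not correspond to a whole number of items")
    return (1 + root) // 2


if __name__ == "__main__":
    for df in (325, 105):
        print(f"df = {df} corresponds to {items_from_df(df)} items")
```

For the climate scale, 15 items matches the number of items retained across the two factors in both samples (10 + 5 and 9 + 6).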

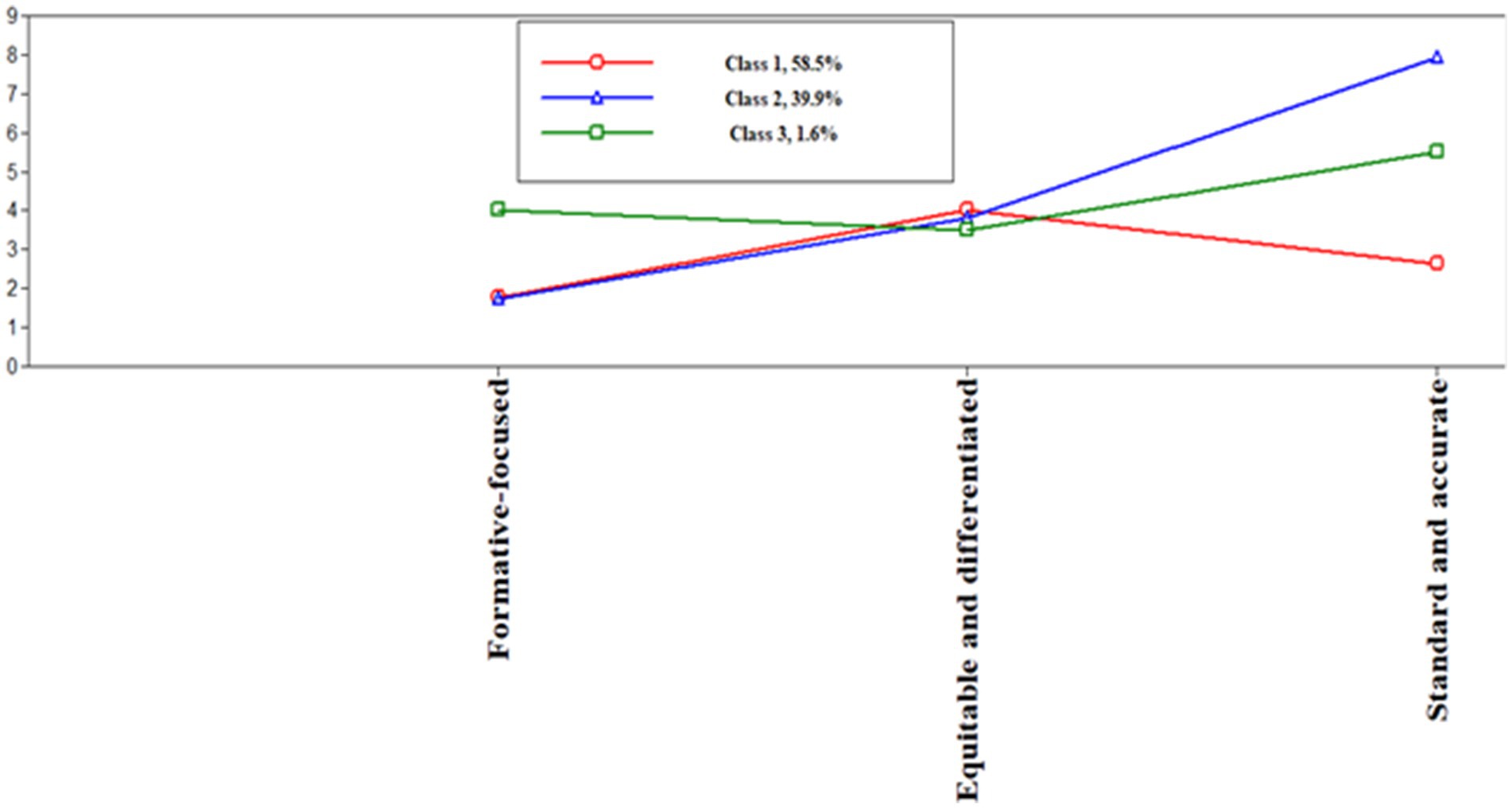

4.1 Patterns in teachers’ assessment practices

Among the Bruneian sample, the LPA supported a three-class model. The class model was determined after examining key assumptions: Entropy = 0.986; low AIC, BIC and SSA BIC values of 1211.759, 1251.129 and 1206.863, respectively; and VLMR-LRT (p) and LMR-LRT (p) >0.05. The probabilities of class membership ranged from 0.997 to 1.00. The first, second and third classes had a membership of 72 (58.5%), 49 (39.9%), and 2 (1.6%), respectively. The results of the LPA revealed distinct patterns in Bruneian teachers’ assessment practices. Most teachers were identified as moderately fair, but less precise assessors (see Figure 1).

Figure 1. Patterns in Brunei teachers’ assessment practices. Class 1 = Moderately fair but less precise assessors, Class 2 = Standard-focused and more precise assessors, Class 3 = Formative-oriented but moderately precise assessors.

4.1.1 Class 1: moderately fair, but less precise assessors

Most (58.5%) of the teachers belonged to this class. Their assessment practices did not prioritise formative assessment. They were somewhat fair since they preferred an equitable and differentiated assessment. These teachers did not prefer to practice precise and standard-focused assessment. This shows that their assessment practices lacked validity, reliability and use of the same assessment protocols for all students.

4.1.2 Class 2: standard-focused and more precise assessors

This class had the second highest membership of 39.9%. Like their counterparts in the first class, teachers in this class practiced less formative assessment. They preferred standard fairness and reliable and valid assessment. They preferred assessment that is more consistent, content-based, and fair to all students.

4.1.3 Class 3: formative-oriented, but moderately precise assessors

This class had the fewest teachers, representing 1.6%. Teachers’ assessment practices focused on formative assessment. They moderately practiced valid, reliable, and standard assessment.

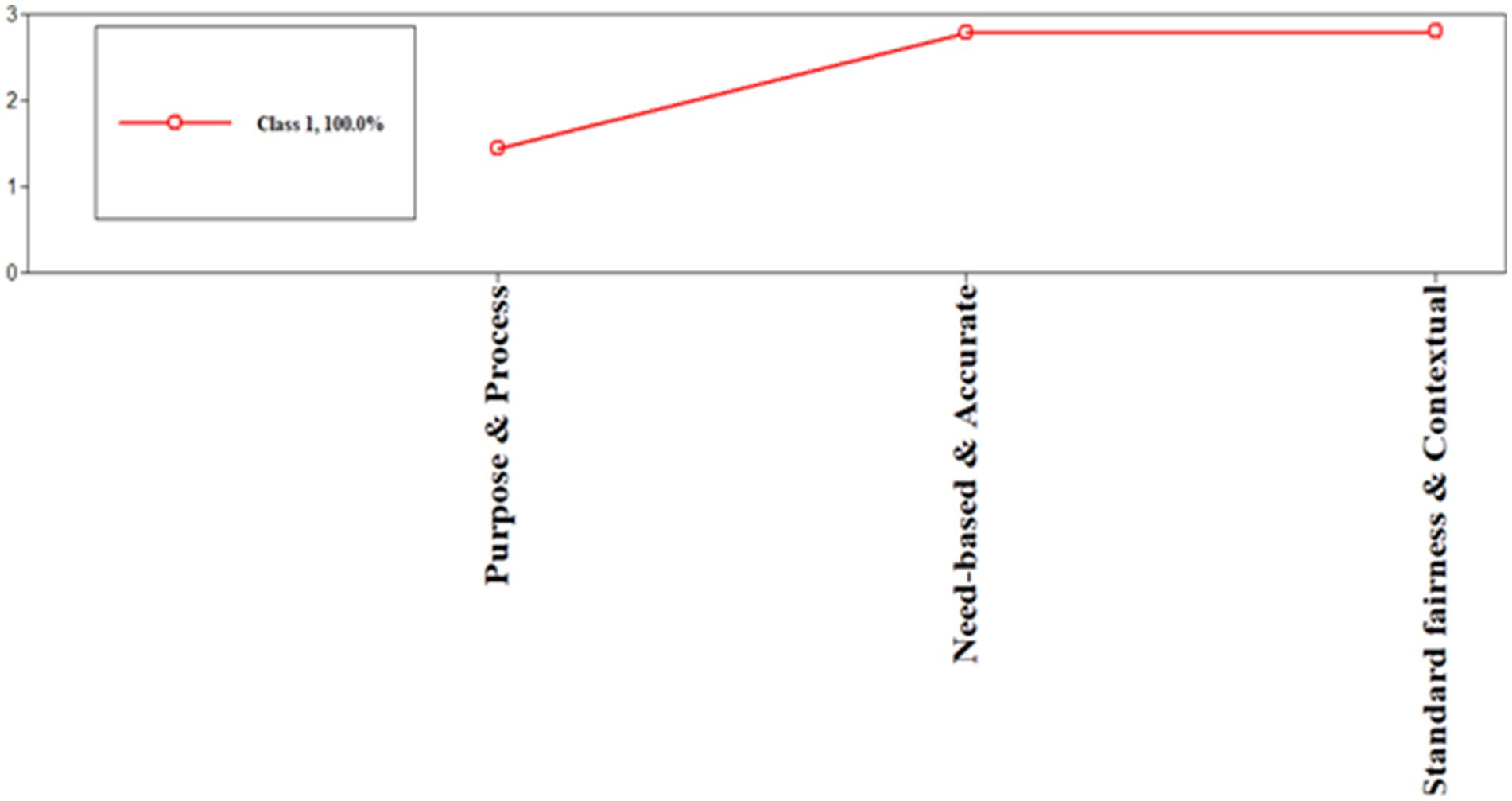

Among the Ghanaian participants, the results of the LPA revealed no distinct patterns in teachers’ assessment practices (see Figure 2), as the LPA supported a one-class model with Entropy = 1.00, VLMR-LRT and LMR-LRT (p) >0.05, low AIC = 3226.893, BIC = 3249.274, SSA BIC = 3230.244 and a class probability of 1. The pattern of teachers’ assessment practices was further investigated to determine the type of assessors (see Figure 2). As shown in Figure 2, the Ghanaian participants belonged to the same group of assessors (i.e., fair and accuracy-concerned assessors). They prioritised need-based and accurate assessment, as well as standard fairness and contextual assessment. For example, they preferred equitable and differentiated assessment and a balance between the reliability and validity of assessment. Conversely, they did not prefer assessment practices that focused on the purpose and process of assessment. They were less likely to prioritise assessment of, as and for learning, test design, use, scoring, and communication of assessment results.

4.2 Patterns in teachers’ perceptions of school assessment climate

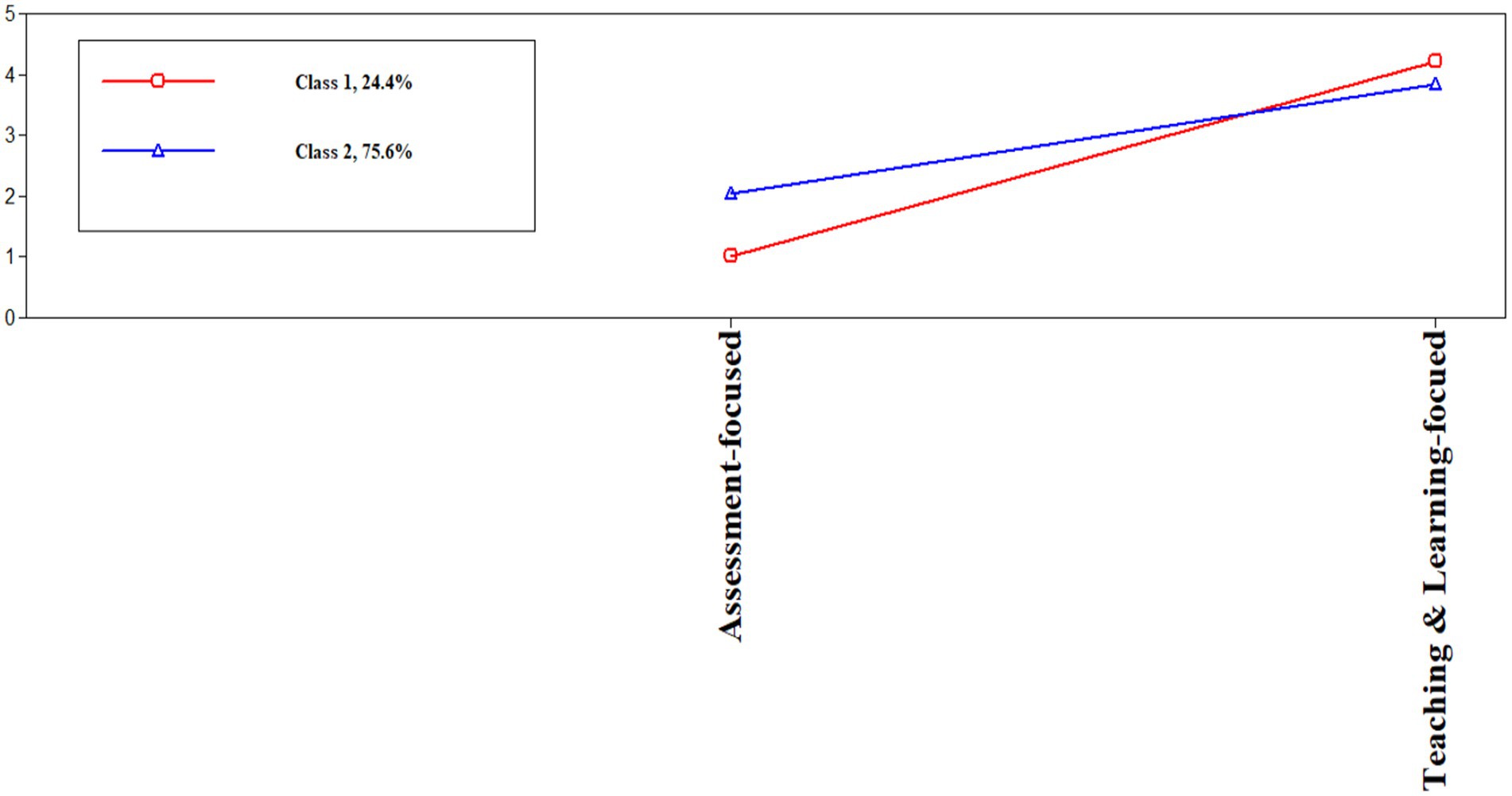

Among the Bruneian participants, the LPA supported a two-class model with Entropy = 0.997, low AIC = 620.963, BIC = 640.648, SSA BIC = 618.515, VLMR-LRT (p) and LMR-LRT (p) >0.05. The first and second classes had membership probabilities of 0.999 and 1.00, respectively. The LPA revealed distinct patterns in teachers’ perceptions of the assessment climate in their schools. Most teachers believed that there was a mixed climate, involving teaching and learning, and assessment-focused climates. The results also showed an increasing perception from assessment-focused to teaching and learning-focused climates (see Figure 3).

Figure 3. Patterns in Bruneian teachers’ perceptions of school assessment climate. Class 1 = High teaching and learning-focused climate, Class 2 = Mixed climate perceivers.

4.2.1 Class 1: high teaching and learning-focused climate perceivers

This class had the smaller membership of 24.4%. Teachers in this class believed that the climate in their schools was more teaching and learning-focused than assessment-focused.

4.2.2 Class 2: mixed climate perceivers

This class had the larger membership of 75.6%. Teachers perceived their school assessment climate as both assessment-focused and teaching and learning-focused. Unlike the teachers in Class 1, those in this class believed that the climate in their schools was more assessment-focused than teaching and learning-focused.

Among Ghanaian participants, the LPA did not reveal a distinct pattern in how teachers perceived their school assessment climates. The LPA supported a one-class model with Entropy = 1.00, VLMR-LRT and LMR-LRT (p) >0.000, low AIC = 1749.270, BIC = 1764.190, and SSA BIC = 1751.504, and a class probability of 1 (see Figure 4). As shown in Figure 4, the participants reported similar perceptions about their school assessment climates. They perceived the assessment climate in their schools more as school support to instruction and assessment than as a student-centred assessment climate. There was an increasing perception from student-centred assessment to school support to instruction and assessment climate (see Figure 4).

4.3 Influence of teachers’ perceptions of school assessment climates on assessment practices

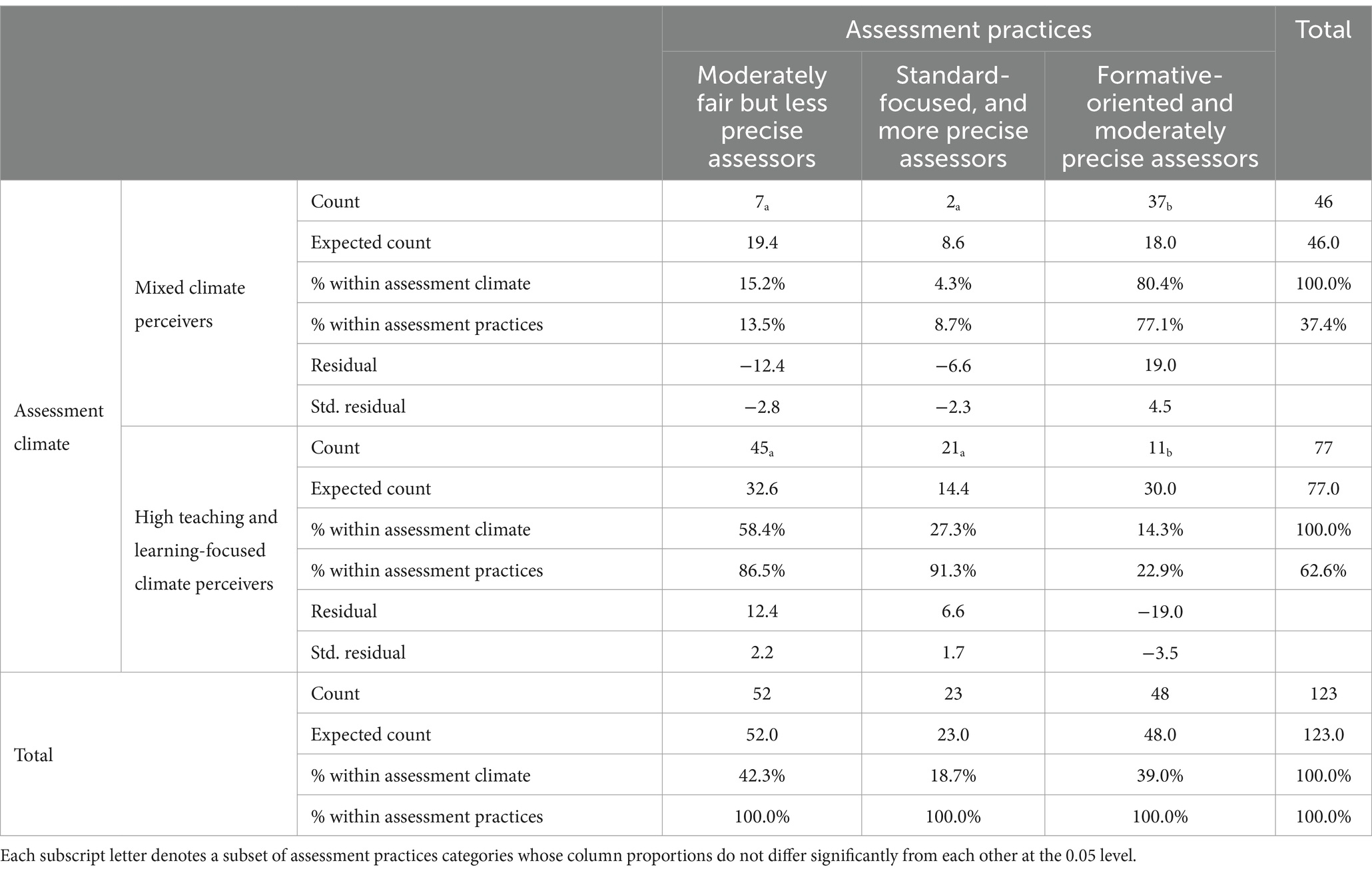

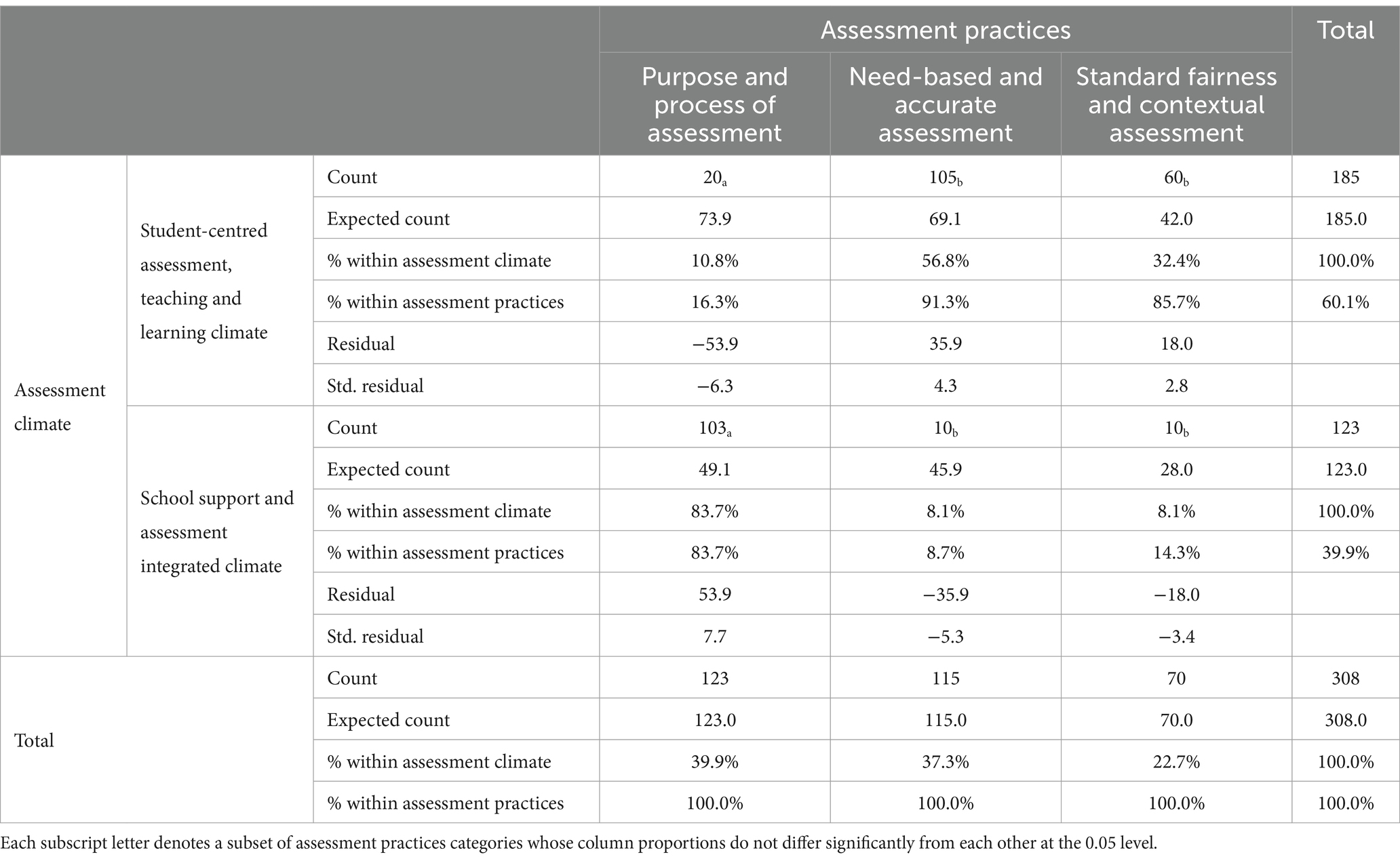

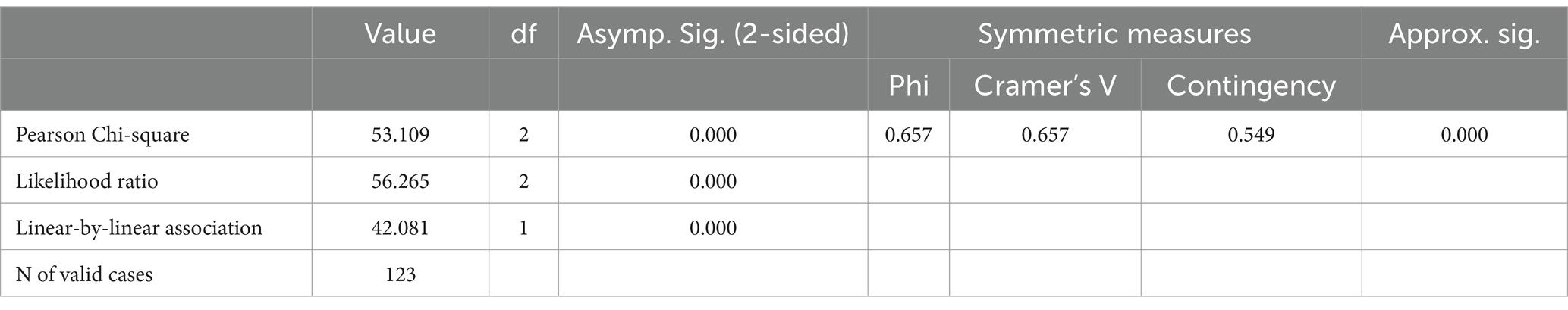

As shown in Table 5, the association between Bruneian teachers’ perceptions of their school assessment climates and their assessment practices is statistically significant, χ²(2, N = 123) = 53.109, p < 0.001. The results indicate that teachers’ assessment practices differed significantly depending on the kind of assessment climates that existed in their schools. Approximately 66% of teachers’ assessment practices was influenced by their school assessment climates (see Table 5). A cross-tabulation analysis (see Table 6) showed that teachers who perceived that a mixed climate of teaching and learning, and assessment existed in their schools were 80.4% more likely to be formative-oriented and precise assessors. Those who perceived that there was a high teaching and learning-focused climate in their schools were 58.4% more likely to be moderately fair, but less precise assessors.

Table 5. Chi-square test of association between Bruneian teachers’ perceptions of school assessment climate and assessment practices.

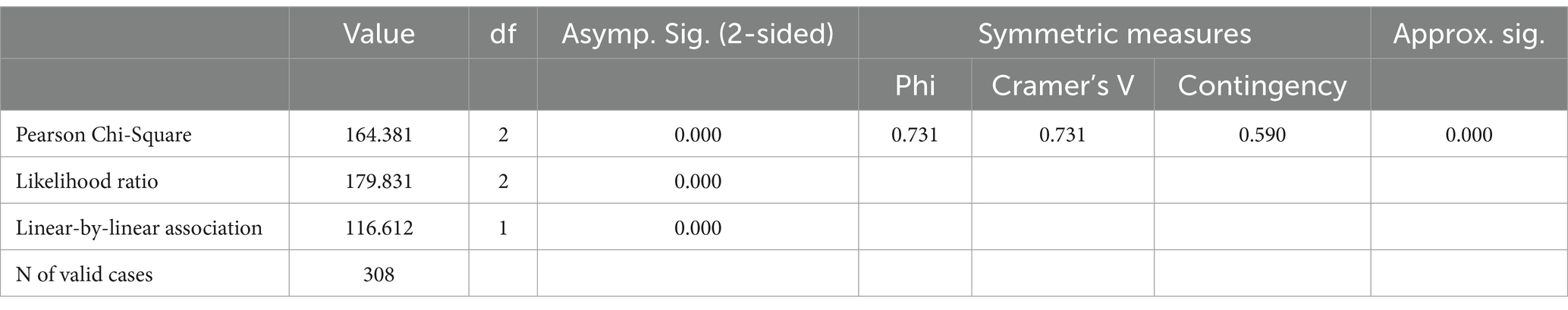

The results also revealed a statistically significant association between Ghanaian teachers’ perceptions of school assessment climates and their assessment practices, χ²(2, N = 308) = 164.381, p < 0.001 (see Table 7). Approximately 73% of teachers’ assessment practices was influenced by their perceptions of school assessment climates. A cross-tabulation analysis (see Table 8) showed that teachers who perceived that there was a student-centred assessment climate in their schools were 56.8% and 32.4% more likely to practice need-based and accurate assessment, and standard fairness and contextual assessment, respectively. Teachers who believed that their schools supported instruction and assessment were 83.7% more likely to focus their assessment practices on the purpose and process of assessment.

Table 7. Chi-square test of association between Ghanaian teachers’ perceptions of school assessment climate and assessment practices.
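The size of the two associations can be reproduced from the reported chi-square statistics and sample sizes. The sketch below computes Cramér’s V, a standard effect size for a chi-square test of association, together with the p-value for a test with two degrees of freedom. It assumes 3 × 2 contingency tables (three assessment practice groups by two climate groups), which is consistent with the reported degrees of freedom but is not stated explicitly in the text. Under that assumption the resulting values, about 0.66 for Brunei and 0.73 for Ghana, coincide with the percentages reported above, although the tables referred to in the text remain the authoritative source for how those percentages were obtained.

```python
from math import exp, sqrt


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramér's V for a chi-square test on an n_rows x n_cols table of n cases."""
    return sqrt(chi2 / (n * min(n_rows - 1, n_cols - 1)))


def chi2_p_value_df2(chi2):
    """Upper-tail p-value for a chi-square statistic with exactly 2 df.

    With 2 df the chi-square distribution is exponential with mean 2,
    so the survival function has the closed form exp(-chi2 / 2).
    """
    return exp(-chi2 / 2)


if __name__ == "__main__":
    # (label, chi-square, N) as reported; the 3 x 2 table shape is an assumption.
    reported = [("Brunei", 53.109, 123), ("Ghana", 164.381, 308)]
    for label, chi2, n in reported:
        v = cramers_v(chi2, n, n_rows=3, n_cols=2)
        p = chi2_p_value_df2(chi2)
        print(f"{label}: V = {v:.3f}, p < 0.001 is {p < 0.001}")
```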

4.4 Interview findings on teachers’ assessment practices

To understand the results of the survey, participants were asked to describe (a) their assessment practices and (b) how their school assessment climates influenced their assessment practices. Their views on assessment practices were based on key themes such as formative and summative assessment practices, and how they designed valid, fair, and reliable assessment tasks. Teachers’ perceptions of how their school assessment climates influenced their assessment practices were based on two key themes: politicisation and marketisation of education and assessment systems, and the need for culturally responsive assessment.

4.4.1 Teachers’ views on their assessment practices

The six Bruneian teachers practiced questioning, peer assessment, and feedback; however, they prevalently practiced summative assessment that drilled students toward factual knowledge. They adapted previous questions from existing materials that challenged their test development skills. Their assessment practices did not adequately encourage fairness, validity, and reliability, as they argued that they used previous questions set by their examination board. All teachers were unaware of the table of test specification, an ideal way to improve test validity and reliability. They adapted scoring rubrics from their examination board, but mostly drifted from the rubrics when scoring assessment tasks. They also awarded non-achieving grades to motivate students.

For example, BT1 practiced less formative and more summative assessment. He prevalently drilled his students towards final examinations. His practices of fairness, reliability and validity were based on using questions of different difficulty adapted from previous examination questions. His class exercises did not count toward the final assessment. He ensured that the content of class exercises was based on learning objectives. He asserted:

I would often go for both formative and summative assessment, but I tend to focus on summative assessment to prepare the student for exams… [I] will take [my] questions from Cambridge past papers [since] I believe there are no biases…. MCQs are difficult to set on my own…[especially] on writing good distractors… I’m not aware of test specification table…. exercises and assignment do not count for marks… [they are] just for concept building. (BT1)

BT2 normally used tests to determine factual knowledge. She practiced instructional dialogues and scaffolding. She mostly used formative assessment to prepare students for summative assessment. She gave the same assessment tasks to all students but was unable to ensure fairness effectively due to a lack of skills. She adapted scoring rubrics from her examination board, but drifted away from them when scoring assessment tasks since she did not understand the demands of the adapted scoring rubrics. As she reported:

I like dialogic teaching… [I] ask for students’ opinions… The important thing is to prepare them well to pass the exams…. I explain what we want to do and then test it…if they’re successful we move… [at times] I give them a sample essay. I tell them, have these things in the introduction… Just follow the template… [I] focus on their level of ability and not on their needs because the needy students can be smart to memorise… [But] the tests I give are equal… I scaffold equally… I don’t trust specific needs because I really cannot tell. (BT2)

BT3 said that she used less formative assessment. She mostly tested her students since they had to pass examinations. She argued that summative assessment helps drill students. She adapted different questions from existing materials for all students. She assumed that the previous questions were reliable, valid, and fair since they were from her examination board. She awarded non-achievement grades to motivate students. She justified:

[Students] need to get used to answering exam questions…I'm more toward summative assessment [because] it is useful for further education and work. Formative is just group work, discussion, or feedback. When I see students struggling, [I] tend to change back to summative. Mine is usually from past year questions, but I would mix them up. If more students can answer a question, I just grab…. I ask my colleague… Is this a good question to ask my students?… I standardise their marks for failures to pass…. [so] some get 50%. Then they say, I’ve a chance to pass my exams. They become motivated. [My] questions target lower ability students if they can answer then everyone can…. I haven’t heard of the test specification table. (BT3)

BT4, BT5, and BT6 shared similar assessment practices focusing on summative assessment; however, they preferred to use questioning (closed- and open-ended) and feedback to identify the difficulties of students. BT5 and BT6 shared learning intentions, success criteria and rubrics with students, but doubted whether students had the skills to assess their own learning. They used more summative assessment since their school encouraged summative assessment practices. Like their colleagues, they also adapted previous questions from existing materials to improve fairness. BT4 copied questions from existing materials without any modifications. BT4 and BT5 awarded non-achieving scores but BT6 did not. The three teachers determined reliability and validity by aligning questions with learning objectives and drilling students repeatedly using the same previous questions to prepare them for external examinations. They also lacked the skills to practice differentiated assessment. One of the teachers reported:

Discussion with students is important… Give [some] feedback like ‘that’s a good answer but it’s not correct’… [I] feel that students are not good enough to assess themselves…. I give different questions [easy, medium, hard] from past Cambridge questions since that’s the little I can do…I can’t assist special students… [I] reward [some] students with [some] marks… that would be fair enough to assess them…[students] need summative assessment to prepare for their final Cambridge exams…Overall, [I] use summative assessment to assess students…[The] school requires that…[I] always refer back to the learning objectives for consistency…for table of test specification, I’m not aware of it. (BT5)

Relatedly, the eight Ghanaian teachers described their assessment practices as summative-oriented. They argued that teaching and learning focused on ‘teaching to test.’ Their prevalent formative assessment practices were limited to questioning, feedback, and peer assessment. GT1 practiced questioning, feedback, and peer assessment, but rarely shared learning intentions and success criteria with students. He looked at the grade level and understanding of students to determine what to assess. He then set possible questions based on instructional content and administered to students at the end of his instruction.

GT2 limited his formative assessment practices to questioning and feedback. He aligned test items with learning objectives and reviewed test items to address wording and ambiguity problems. He provided instructions and weightings for each question, and developed and used scoring rubrics, but used analytical scoring. These practices promote fair, valid, and reliable assessment. GT3, GT4 and GT6 also practiced similar summative-driven assessment. GT6 shared that summative assessment took more weight compared to formative assessment. Her prevalent continuous assessment practices were project work, quizzes, and tests. Two of the teachers explicitly stated:

We have continuous assessment and final exams. [But] continuous assessment takes 30% and final exams take 70%. For [the] continuous assessment, we normally have project work and quizzes or class tests. (GT6)

I use both [formative and summative assessment] …. In class, I use oral and written questions [and] feedback. [But] the high focus on exams makes me to conduct more tests. [Because] students are always nervous… I believe in continuous assessment and student portfolios, which is a better assessment option. [But] we don’t normally practice [so] normally, I’ll look at my objectives and expected learning outcomes and set test items…. I address [any] ambiguous words, provide directions and the time for students to respond to the test…. I develop marking scheme when writing test items [and] indicate the score on each test item on the test paper. (GT2)

GT5, GT7 and GT8 solved previous examination questions with students to prepare them for internal and external examinations. GT7 only practiced questioning, feedback, and group work, and normally exposed students to the basics of passing external examinations. One of the teachers asserted:

I ask questions to know whether students are following what I’m teaching. I don’t normally share lesson objectives with students…. [I] don’t need to share because students need to discover knowledge on their own…. I use questions that reflect [my] objectives… I put students into groups [and] ask them to present what they learn… [and] give [my] feedback. [But] students aren’t interested in these practices compared to solving past questions [and] giving them possible exam areas…. [So] I’m compelled to go through several of those questions with them [and] give them more tests after every teaching. (GT8)

The assessment practices of Ghanaian teachers lacked adequate fairness, validity, and reliability. Like the Bruneian teachers, most of them (n = 7) were unaware of the table of test specification. They somewhat ensured construct validity by aligning assessment to learning objectives. They could not describe how they aligned assessment tasks with learning objectives. However, they could develop and use scoring rubrics. Their departmental heads moderated their assessment tasks. GT1 practiced fair assessment by ensuring that assessment tasks covered learning domains. He moderated his assessment tasks (i.e., tests), and developed and used scoring rubrics.

GT3 practiced fair, reliable, and valid assessment through the moderation and setting of test questions by different teachers who taught a specific subject. For her, test questions were based on learning objectives and a standard scoring rubric was developed to ensure consistent scoring for all students. These practices were limited to summative assessment (final school examinations) and did not extend to her formative assessment practices. The rest of the teachers (i.e., GT2, GT4, GT5, GT6, GT7, GT8) shared similar practices. For example, GT5 set his test questions based on instructional objectives, which contributed to construct validity. He informed his students about assessment tasks and testing conditions. This included the time and instructions for the test. He also ensured adequate supervision to prevent students from cheating. These were important practices that could improve standard and equitable fairness. He used scoring rubrics; however, he argued that using scoring rubrics strictly affected fairness, especially in situations where the rubrics did not consider the ‘genius answers’ of students. GT4 considered the health and psychological conditions of the students to encourage fair assessment. He also argued that scoring rubrics should be used as a guide and not followed rigidly. The only teacher who had heard of the table of test specification was GT2, but he could not explain how to develop or use it. Two of the teachers explicitly reported:

In the case of end of semester exam, we set the questions to cover for all the classes… [so] once we set the questions, [the] head of department will validate them…. Questions are set based on the scheme of work by the teachers who teach the subject…… Yes, for end of semester exam, we all use the same marking scheme for a particular subject. In my case, in terms of formative assessment, I don’t really consider those things [but] I might consider that in the future…Hmm, none I can think of in using table of test specification. (GT3)

I ensure that students are aware of [my] tests. I give equal time and ensure that no one cheats…. I use a standard marking scheme [but] if student uses a genius way of expressing their ideas, the scheme couldn’t capture it should be considered. That’s fair… Validity? [If] I teach nouns, I should test students on nouns…. We align the questions with the scheme of work. [The] questions are moderated by all teachers and [the] head of department…. I think it will make the test valid… Table of test specification? Hmm, no! (GT5)

In both contexts, the participants shared varied assessment practices that favoured summative assessment (i.e., tests). Validity and reliability practices in assessment were limited to construct validity evidence, where most of them aligned assessment tasks with instructional objectives. They lacked the knowledge of developing and using the table of test specification, an important approach to improve valid and reliable assessment. They used some strategies that promoted fair and accurate assessment: assessing what they taught, providing clear instructions and timing of assessment tasks, developing and using scoring rubrics, moderating test items, and considering students’ health and psychological conditions during assessment. These practices were limited to summative assessment. Their formative assessment practices also lacked assessment reporting and communication.

4.4.2 How teachers’ perceptions of school assessment climates influence their assessment practices

The six Bruneian teachers described the assessment climates in their schools as highly examination- and results-driven, prioritising drilling students toward good grades in high-stakes examinations. This forced them to teach in a way that went against their beliefs. They shared that the accountability pressures in their schools required them to protect the image of their schools and to ensure that assessment practices were consistent with school expectations. Students had to pass examinations to protect this image, as this was the way to make students, schools, and education accountable. Consequently, they changed their pedagogical approaches and assessment to conform to the accountability standards in their schools. This resulted in excessive pressure and stress on both teachers and students, which affected their mental health and well-being. For most of them, these practices did not encourage lifelong learning since the competencies of students were based solely on high-stakes tests.

BT1 and BT2 asserted that their schools were interested in summative grading. They believed that summative grading could give a misleading perception of students’ performance and school accountability; however, they adapted their teaching approaches to meet the demands of this accountability system, which affected student learning and progress. BT1 shared that over-concentration on grades failed to develop assessment that could train students toward character building. BT2 corroborated that educational stakeholders were interested in summative scores and teachers were manipulated to act based on the demands of stakeholders. She believed that the examination-oriented climate did not improve lifelong learning since some students have other skills that cannot be judged mainly by high-stakes tests. The two teachers mentioned that teaching, learning, and assessment were limited to textbooks. The serious examination pressures also forced parents to engage students in excessive extra tuition, which exacerbated the existing pressure on students. The tuition focused on practicing previous examination papers after school hours to prepare students for high-stakes examinations. For them, the lack of students’ retention in their schools also affected their assessment practices and beliefs. For example, they did not see the use of their limited formative and summative assessment practices since these contributed little to final assessment decisions. They explicitly stated:

[We] are asked to do 100% summative assessment because we’re following Cambridge…. [But] the marks don't reflect what students learn…. More of a problem in the system…. Even if we do our [own] assessment, Cambridge won’t accept it as SAG marks. They’re [very] strict about using their papers only…. [We] know the changes to kids over time…. But higher ups, they only see statistics…. If nobody fails, it looks good on paper… I think that's not the right way…. There are different qualities more important than just purely academic… character building is very important… some intelligent kids are very rude….no respect for elders… [our] education system is stressful…. I pity the kids because parents force them…after school tuition, tuition, tuition…[and] that’s tiring…. a very big mistake, we promote kids every year. (BT1)

We rely [too] much on exams…[and] it's detrimental because the kids have to do a lot of work…Our students [now], they're not high-stake exam material… Brunei believes in more standardisation…the government is very obsessed with collecting data on [kids’] performance to justify that the nation is improving intellectually … [And] teachers are just subjected to these whims of the MoE…. [Which] to me, it’s not important. Many students without good grades are succeeding in life…. [But] the government says this is what we must do and that is what we do…[We] submit data monthly, weekly, and don’t know what it is used for. (BT2)

BT3 justified how her school used assessment for school accountability, compelling many teachers like her to practice more summative assessment. She argued that the lack of students’ retention hindered how teachers’ assessment could be useful in decision making and expressed her disbelief in using grading alone to judge performance. She shared:

[Most] teachers don’t want to drill students… [but] they must do to maintain the school's image…. They want to feel comfortable and assess how they want to… [But] it depends…. [Some] school management would pressure tutors to maintain the image. So, make sure you follow this and this… Like Japan and China… the same thing is happening here…. Students are promoted every year…. [and] I don’t just want students to say I got a C in math, [But] I have learned something from teacher’s lesson, [and] I can apply this elsewhere, not just in my exam. (BT3)

BT3 also cited an example of how a high-stakes assessment climate affects the holistic development of students. For her, summative assessment should not be the only way to judge students’ ability; formative assessment could be used to develop other soft skills that are important for students to excel in the future. She observed that most higher-ability students lacked soft skills and that formative assessment could be a way to solve this problem. She narrated:

[We] know that high-ability students are good [and] only want to get high scores… [So] for them it’s mostly summative. But is that summative good for them to prepare? They can do well academically, but in other things they are not doing particularly well. I have heard [that] they were interviewing some graduates who want to work at Polytechnic. They found that the second lower class can present and talk well…. can explain what they want to do in interviews compared to the first-class students… [When] teaching the higher ability students, perhaps, summative alone isn’t a good way to assess them. They need more formative assessment to prepare them for other things. (BT3)

The rest of the teachers (i.e., BT4, BT5 and BT6) argued that the results-driven climates in their schools made students tired, which affected student learning and progress. BT4 asserted that he could not complete his syllabus due to the many examinations conducted in his school, although he acknowledged that formative assessment is also time-consuming. He also agreed that the holistic abilities of students should be nurtured rather than depending on grades alone. The teachers shared that they were tired of excessive marking and reporting of assessment data.

BT6 shared that her school and educational context compelled students to pass examinations although formative assessment could be the preferred supportive tool that could contribute to the holistic development of students. She also asserted that the results-driven focus in her school not only exerted too much pressure on teachers and students but also made education ignore other important things such as extracurricular activities (sports, drama, music, and painting). She believed that this results-driven notion has become increasingly prevalent in the competitive world; however, it has contributed to unhealthiness and mental health issues among students and teachers. She described her school’s assessment climate as rigid, which was detrimental to rounded and holistic education, and assessment practices. BT5 also described his school as data-driven, without which there is nothing to do about students’ learning and progress. He reported:

I certainly feel Brunei is very result driven…[And] there's a danger if that’s the sole focus. Education is so much more than that…It's about creating rounded citizens. We [must] mark everything…we [sometimes] lose sight of [other more] important things and forget the bigger picture…. A structure is good, but the danger of a rigorous structure is too much of it’s at the expense of other things… [I] think that's increasingly the case globally, isn't it?… We live in such a competitive world…[but] it's not all about marks… if students do presentations, they'd have a more fun marking criteria…giving feedback to their peers. That's all assessment! [We] forget [the importance] of sports, drama, music, and painting… increasing the levels of unhealthiness and mental health issues. (BT5)

Relatedly, the eight Ghanaian teachers reported that the accountability pressures in their schools, which prioritised summative assessment, affected their assessment practices. GT1 described his school as a high-stakes environment that prioritised students’ passes in external examinations. GT2 argued that teaching and learning did not focus on students’ understanding, which hindered lifelong learning and the quality of learners who can meet the expectations of this changing world. GT4 also shared that there was a mismatch between the demands of the curriculum and the test questions of the examination board. Therefore, teachers trained students to pass examinations and were compelled to act according to the demands of the examination board through their schools. He also argued that most teachers did not follow the curriculum but used their experiences to decide on the learning areas that appeared most frequently in external examinations.

GT6 added that the content of external examinations was limited to a few areas in the curriculum. Most of her students questioned the relevance of learning content that did not normally appear in external examinations. This affected student learning of those concepts since most teachers did not teach them. The rest of the teachers (i.e., GT3, GT5, GT7, and GT8) confirmed that most schools tailored teaching and learning toward passing examinations. For example, GT3 shared that more attention was paid to summative assessment and that the purpose and practices of formative assessment were disregarded. According to her, this affected the relevance of formative assessment to teachers and students. Two of the teachers explicitly shared:

We have reached the peak of education in Ghana where the focus is just to let the student pass the final exam, rather than to encourage students’ understanding of what we are teaching them. It’s a high-stake learning environment, that’s what is happening. (GT1)

How WAEC [a high-stakes examination body] asks the question compels teachers to deviate from the syllabus. What the syllabus demands is different from what the exam body asks [so] teachers are forced to go by what the exams board says. Teachers drill students to go through past questions [so that] the students become familiar with the process [for them] to pass. At the end, the purpose of the syllabus is deviated. The syllabus encourages analytical reasoning, concept building, etc., [but] exams encourage memorisation [for] students to pass. (GT4)

For most teachers (n = 6), the politicisation of education was the key factor that drove the existing high-stakes examination climate, which affected formative, fair, valid, and reliable assessment practices in their schools. The teachers reported that a performance contract existed in their schools. School heads sign a contract to declare their commitment to achieve higher pass rates in external examinations. According to them, the performance contract exacerbated the already existing ‘teaching to the test’ conception among teachers and students.

Four of the teachers (i.e., GT1, GT5, GT6, and GT8) admitted that there was an overemphasis on performance contracts. School leaders and teachers are compelled to do all that it takes for students to pass external examinations. They expressed that education has become a public good in Ghana. Politicians use it to score political points, especially when students perform well on external examinations. This puts pressure on school heads, teachers, and educational administrators to obtain the needed results for political gains. GT2 and GT3 also described performance contracts as highly political and as something over which school heads and teachers had no control. They argued that the performance contracts exacerbated the overemphasis on summative testing in their schools, which affected teaching and learning, as well as the educational needs of students such as problem-solving skills. For example, two teachers narrated:

Every teacher, including me, focuses on student passing an exam. Even the government itself has this policy of performance contract. Before writing WASSCE, headmasters must sign a performance contract with the government. For instance, if your student probably got 85% in English language, in the following year, the performance contract should see improvement in that 85%…. The headteacher comes [and] discusses with teachers… The ultimate focus is to do everything for the students to pass, neglecting other development…the effective, psychomotor, and all those things…. This affects their problem-solving skills. (GT5)

Politicians have taken over the educational sector ensuring that students perform well to use it for politics [and] to support that… during my regime, the students performed in the annual exam. Because of that, pressure is mounted on various headmasters to ensure that students get the needed results so that politicians can use it for politics. That’s why there is a performance contract now [laughs]. (GT2)

The eight teachers described the existing assessment culture that limits students’ competencies to a pass in a one-shot examination as dangerous. They argued that this climate limits their formative, fair, reliable, and valid assessment beliefs. It also has a catastrophic effect on teaching, learning, and the kind of students the climate produces. Four of the teachers (i.e., GT1, GT2, GT3, and GT4) asserted that performance contracts and accountability pressures affected the quality of students that are produced for the future. For them, assessment climates in their schools hindered the production of lifelong, responsible, and independent learners who could function meaningfully in society.

In particular, GT2 shared that accountability pressures through performance contracts provided an avenue for schools to be accountable; however, he agreed with GT3 and GT4, who commented that the existing assessment climate has led to a ‘politics of education’. The government of the day provides previous questions to students to boost their performance for political gains. According to them, the new system is associated with examination malpractices such as leaking examination questions in schools, affecting the validity and reliability of assessment results that are used to make certification and placement decisions. They also argued that the existing climate encouraged objectivist-based teaching and learning. A teacher shared:

…. Once you sign a contract, it becomes a dead-end, no matter what happens, you must meet your contract. This is when some heads and teachers leak questions and teach the students during WASSCE. [But] this contract is skewed to objective compared to performance-based learning. [But] there are the two ways; it’s good because it puts headmasters on their toes to perform [and] make sure [that] teachers are punctual in school. Heads have a direct responsibility to ensure [that] teachers are teaching. [Conversely], it leads to malpractices such as cheating…The government will be giving students past questions [because] it wants them to perform. This is the politics in our education. So, these are what we are facing. (GT2)