Utility analysis of an adapted Mini-CEX WebApp for clinical practice assessment in physiotherapy undergraduate students

- ¹Department of Health Sciences, Faculty of Medicine, Pontificia Universidad Católica de Chile, Santiago, Chile

- ²School of Health Professions Education (SHE), Maastricht University, Maastricht, Netherlands

- ³School of Dentistry, Pontificia Universidad Católica de Chile, Santiago, Chile

- ⁴Department of Gastroenterology, School of Medicine, Pontificia Universidad Católica de Chile, Santiago, Chile

- ⁵Centre for Medical Education and Health Sciences, Faculty of Medicine, Pontificia Universidad Católica de Chile, Santiago, Chile

- ⁶Emergency Medicine Section, Department of Internal Medicine, School of Medicine, Pontificia Universidad Católica de Chile, Santiago, Chile

Clinical workplace-based learning is essential for undergraduate health professions, requiring adequate training and timely feedback. While the Mini-CEX is a well-known tool for workplace-based learning, its written paper assessment can be cumbersome in a clinical setting. We conducted a utility analysis to assess the effectiveness of an adapted Mini-CEX implemented as a mobile device WebApp for clinical practice assessment. We included 24 clinical teachers from 11 different clinical placements and 95 undergraduate physical therapy students. The adapted Mini-CEX was tailored to align with the learning outcomes of clinical practice requirements and made accessible through a WebApp for mobile devices. To ensure the validity of the content, we conducted a Delphi panel. Throughout the semester, the students were assessed four times while interacting with patients. We evaluated the utility of the adapted Mini-CEX based on validity, reliability, acceptability, cost, and educational impact. We performed factor analysis and assessed the psychometric properties of the adapted tool. Additionally, we conducted two focus groups and analyzed the themes from the discussions to explore acceptability and educational impact. The adapted Mini-CEX consisted of eight validated items. Our analysis revealed that the tool was unidimensional and exhibited acceptable reliability (0.78). The focus groups highlighted two main themes: improving learning assessment and the perceived impact on learning. Overall, the eight-item Mini-CEX WebApp proved to be a valid, acceptable, and reliable instrument for clinical practice assessment in workplace-based learning settings for undergraduate physiotherapy students. We anticipate that our adapted Mini-CEX WebApp can be easily implemented across various clinical courses and disciplines.

1. Introduction

In undergraduate health professions, such as physical therapy, clinical workplace-based learning is crucial (WCPT, 2011). This learning process involves observation and supervision, which provide valuable performance information (Kogan et al., 2009; Hauer et al., 2011). However, challenges arise due to the high clinical workload and restrictions on clinical practice, which often hinder the ability to provide adequate observation and supervision (Schopper et al., 2016). Furthermore, there is often a lack of observation and appropriate feedback (Haffling et al., 2011; Boud, 2015; O'Connor et al., 2018; Fuentes-Cimma et al., 2020; Noble et al., 2020). Consequently, students struggle with knowledge integration, clinical reasoning, practical skills, and learning new clinical topics (Milanese et al., 2013).

Well-conducted feedback has the potential to significantly impact student learning (Norcini and Burch, 2007; Boud and Molloy, 2013). The utilization of observation and feedback as strategies in workplace-based assessments has shown satisfactory results among medical residents (Hicks et al., 2018; Singhal et al., 2020; Pinilla et al., 2021). One important assessment instrument in this context is the Mini Clinical Evaluation Exercise (Mini-CEX), which was originally developed for medical residents and has been adapted for undergraduate health students (Norcini et al., 1995, 2003; Kim and Hwang, 2016; Fuentes-Cimma et al., 2020; Mortaz Hejri et al., 2020). The Mini-CEX has demonstrated robust psychometric properties (De Lima et al., 2007; Cook et al., 2010; Pelgrim et al., 2011; Al Ansari et al., 2013) and has shown educational impact (Montagne et al., 2014). Multiple preceptors can utilize the Mini-CEX on several occasions during a clinical rotation (De Lima et al., 2007; Cook et al., 2010; Pelgrim et al., 2011; Al Ansari et al., 2013). The assessment process begins with direct observation of a student while interacting with a patient (Norcini et al., 1995, 2003; De Lima et al., 2007). Then, the student presents clinical findings, a diagnosis, and an intervention plan. Finally, the observer provides timely and individualized feedback to the student (Norcini et al., 1995; De Lima et al., 2007).

In the context of a culture of assessment for learning, assessment instruments like the Mini-CEX have gained increased importance. Notably, within the field of physical therapy, there have been initiatives to incorporate assessment into learning practices (Fuentes-Cimma et al., 2020; Walker and Roberts, 2020). However, there remains a need to share experiences regarding the development and implementation of clinical assessment systems. O'Connor et al. (2018) conducted a systematic review that highlighted the necessity for further research in workplace-based assessments within physical therapy.

Unfortunately, despite the recognition of the Mini-CEX as an excellent assessment tool, the high clinical workload often poses challenges to its implementation. Therefore, technological advancements such as the development of a WebApp can offer potential solutions for workplace-based assessments. A WebApp refers to a software program accessible through a web browser that provides interactive functionalities to users via the Internet. Hence, we conducted a utility analysis of an adapted Mini-CEX implemented in the form of a WebApp designed for mobile devices. Our aim was to assess the validity, reliability, and acceptability of this adapted Mini-CEX WebApp as a tool for assessing physical therapy undergraduate students during their clinical practice. We hypothesized that the implementation of this adapted Mini-CEX WebApp would result in a valid, reliable, and acceptable tool for both students and preceptors.

2. Methodology

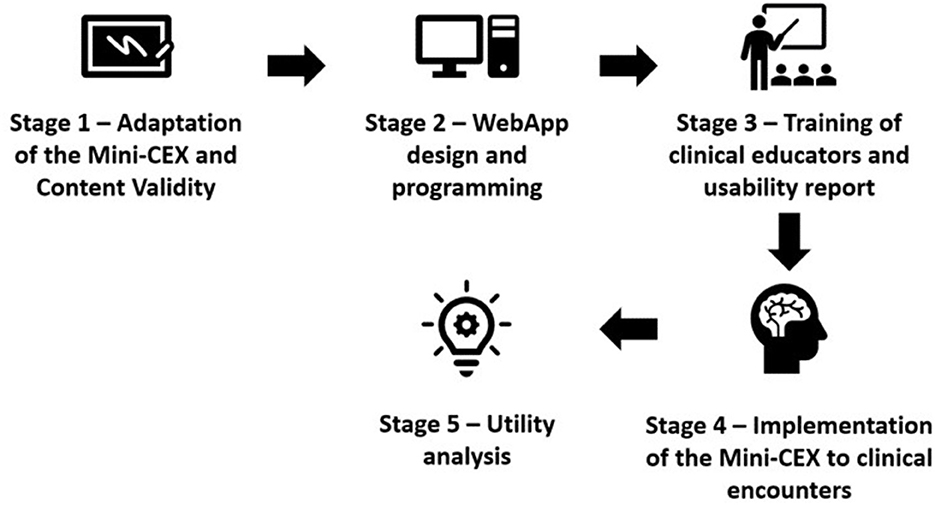

The fourth-year undergraduate physical therapy students were assessed using an adapted Mini-CEX WebApp on four occasions during their final semester (6 months of clinical practice, Figure 1). The assessments were conducted by clinical educators in outpatient musculoskeletal clinical placements. To implement the Mini-CEX WebApp, we conducted a utility analysis that encompassed various aspects, including validity, reliability, acceptability, costs, and educational impact (Van Der Vleuten, 1996). Both quantitative data from the Mini-CEX WebApp and qualitative data from focus groups were collected to provide a comprehensive evaluation. The study was approved by the Ethics Committee of the Faculty of Medicine of the Pontificia Universidad Católica de Chile (ID 170707003).

2.1. Participants

A total of 24 clinical educators from 11 different clinical placements and 95 undergraduate physical therapy students were invited to participate in the study. The students came from an undergraduate 4-year physical therapy curriculum at the Pontificia Universidad Católica de Chile (Santiago, Chile).

To make the utility analysis easier to follow, our methodology and results are each described in six sections: content validity, construct validity, reliability, acceptability, costs, and educational impact.

2.2. Content validity

In line with the learning outcomes of the course, the first step was a discussion with faculty members about the use of the original eight Mini-CEX items. This discussion produced the first version of the adapted Mini-CEX tool. A Delphi panel was then conducted remotely to evaluate the level of consensus on the items in terms of their relevance, pertinence, and comprehensibility. Consensus was defined as an average score of 4.5 points on a 5-point Likert scale (1: strongly disagree; 5: strongly agree). A total of 23 experts in health science education or musculoskeletal physiotherapy agreed to participate in the Delphi panel through a Google Forms© survey.
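The consensus rule described above can be illustrated with a minimal sketch. It assumes one pooled rating per expert and item (the study rated relevance, pertinence, and comprehensibility, which are not separated here) and treats the 4.5 threshold as inclusive; the function name, item labels, and ratings are hypothetical and are not data from the study.

```python
from statistics import mean

CONSENSUS_THRESHOLD = 4.5  # mean score on the 5-point Likert scale


def consensus_by_item(ratings, threshold=CONSENSUS_THRESHOLD):
    """Return {item: (mean_score, reached_consensus)} for each candidate item.

    `ratings` maps an item label to the list of 1-5 Likert scores from the experts.
    Treating the threshold as inclusive (>=) is an assumption of this sketch.
    """
    summary = {}
    for item, scores in ratings.items():
        if any(score < 1 or score > 5 for score in scores):
            raise ValueError(f"Scores for {item!r} must lie between 1 and 5")
        item_mean = mean(scores)
        summary[item] = (item_mean, item_mean >= threshold)
    return summary


# Hypothetical ratings from five experts; not data from the study.
example_ratings = {
    "item_a": [5, 5, 4, 5, 5],  # mean 4.8, reaches consensus
    "item_b": [4, 4, 5, 4, 4],  # mean 4.2, would go to another round
}
delphi_summary = consensus_by_item(example_ratings)
```

Items that fall below the threshold would be revised and re-rated in a further round.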

2.3. Construct validity

The construct validity of the Mini-CEX was assessed through exploratory and confirmatory factor analysis. The exploratory factor analysis aimed to detect the constructs (Byrne, 2012) underlying the instrument items' scores (Flora and Curran, 2004), while the confirmatory factor analysis confirmed the number of factors proposed in the exploratory analysis (Byrne, 2012). We used Kaiser's rule to determine the number of factors to retain in the exploratory factor analysis, keeping factors with an eigenvalue >1. A factor that meets this criterion explains more variance than a single variable (Goretzko and Measurement, 2019).
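As an illustration of Kaiser's rule, the sketch below takes the eigenvalues of a correlation matrix as given and keeps those above 1. Each standardized item contributes a variance of 1, so the eigenvalues of an 8-item correlation matrix sum to 8 and an eigenvalue above 1 means the factor carries more variance than any single item. The function name and the eigenvalues are hypothetical, not results from the study.

```python
def kaiser_rule(eigenvalues):
    """Return (eigenvalue, share_of_total_variance) for every eigenvalue > 1."""
    total = sum(eigenvalues)
    return [(value, value / total) for value in eigenvalues if value > 1.0]


# Hypothetical eigenvalues for an 8-item correlation matrix (they sum to 8).
example_eigenvalues = [4.6, 0.9, 0.7, 0.6, 0.5, 0.3, 0.25, 0.15]
retained = kaiser_rule(example_eigenvalues)  # a single factor is retained here
```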

Since the items were ordinal variables, the robust Weighted Least Squares Mean-Variance (WLSMV) adjusted method was used (Byrne, 2012). The WLSMV method has high accuracy in estimating statistical tests, model parameters, and their respective standard errors (Flora and Curran, 2004; Byrne, 2012). Additionally, the Kaiser-Meyer-Olkin (KMO) index was used to determine whether the sample was suitable for factor analysis. The KMO takes values between 0 and 1, where small values indicate that the correlations among the variables are too weak to support factor analysis. A KMO index above 0.80 was considered “excellent” (Kaiser, 1974).

Standardized factor weights were obtained from exploratory and confirmatory analyses, representing the relationship between the latent factor (measured construct) and the item scores. Furthermore, the coefficient of determination (R²) was obtained to quantify the percentage of each item's variance explained by the factor identified in the exploratory analysis (Kaiser, 1974). Several goodness-of-fit indexes were calculated for the confirmatory factor model: (a) comparative fit index (CFI), which relates to the degree of correlation between survey items; (b) Tucker-Lewis index (TLI), which penalizes complex models (Wang and Wang, 2012); and (c) root mean square error of approximation (RMSEA), which quantifies the model's lack of fit (Wang and Wang, 2012). The RMSEA incorporates a hypothesis test called the close-fit test, which enables the statistical evaluation of the confirmatory model's goodness of fit. The CFI and the TLI indexes have a cutoff point of 0.9 (Wang and Wang, 2012). The RMSEA index has a cutoff point of ≤ 0.06, and the range of acceptable fit was between 0.05 and 0.08 (Hu and Bentler, 1999; Wang and Wang, 2012). An RMSEA equal to or less than 0.05 was considered “excellent” (Wang and Wang, 2012).
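The cutoffs above can be read as a simple decision rule. The sketch below is only an illustration: it uses the 0.9 cutoff for CFI and TLI, the 0.06 cutoff for RMSEA, and 0.08 as the upper bound of acceptable fit. The function name, the labels "good", "acceptable", and "poor", and the example values are assumptions of this sketch rather than terms or results from the study.

```python
def assess_fit(cfi, tli, rmsea):
    """Compare confirmatory-model fit indexes against the stated cutoffs."""
    if rmsea <= 0.06:        # cutoff point for RMSEA
        rmsea_label = "good"
    elif rmsea <= 0.08:      # upper bound of the acceptable range
        rmsea_label = "acceptable"
    else:
        rmsea_label = "poor"
    return {
        "cfi_ok": cfi >= 0.9,
        "tli_ok": tli >= 0.9,
        "rmsea": rmsea_label,
    }


# Hypothetical index values, chosen to show a borderline RMSEA.
fit_summary = assess_fit(cfi=0.95, tli=0.93, rmsea=0.07)
```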

2.4. Reliability

Cronbach's alpha reliability coefficient was calculated to determine the internal consistency of the items retained after the factor analysis. A Cronbach's alpha coefficient ≥0.70 was considered “adequate” (Kline, 2015). The interpretation of the coefficient magnitude should consider the number of items on the scale and the sample size (Ponterotto and Ruckdeschel, 2007). For instruments consisting of 7–11 items and a sample size below 100 participants, an alpha coefficient of 0.75 is considered “good” (Ponterotto and Ruckdeschel, 2007).
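For readers less familiar with the coefficient, the following sketch computes Cronbach's alpha with the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name and the scores are hypothetical; the study itself used Stata, not this code.

```python
from statistics import variance


def cronbach_alpha(score_rows):
    """Cronbach's alpha for a table of scores (one row per assessment, one column per item)."""
    n_items = len(score_rows[0])
    if n_items < 2 or len(score_rows) < 2:
        raise ValueError("Need at least two items and two rows")
    if any(len(row) != n_items for row in score_rows):
        raise ValueError("Every row must have the same number of items")
    # Sample variance of each item (column) and of the per-row total score.
    item_variances = [variance([row[i] for row in score_rows]) for i in range(n_items)]
    total_variance = variance([sum(row) for row in score_rows])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 7-point scores for three items; not data from the study.
example_scores = [
    [5, 6, 5],
    [6, 6, 7],
    [4, 5, 4],
    [7, 7, 6],
    [5, 5, 6],
]
alpha = cronbach_alpha(example_scores)  # 6/7, about 0.86, for this toy data
```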

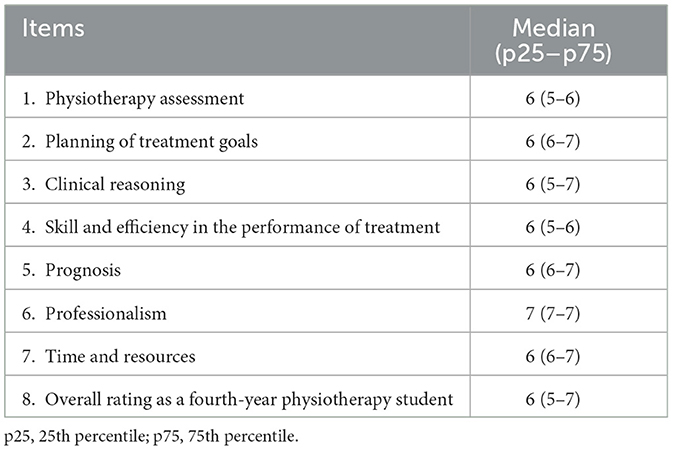

After content validity, construct validity, and reliability were established, the 8-item Mini-CEX was implemented in musculoskeletal clinical placements. The 8-item Mini-CEX was built into a WebApp for mobile use (cellphones or tablets, Figure 2). Two independent researchers continuously monitored network accessibility, the availability of mobile equipment, and the completeness of the assessments. The WebApp collected score data from each clinical practice assessment and accepted comments for feedback items. When the assessment ended, the WebApp instantly emailed a report to the student, the clinical educator, and the faculty. All data were stored on a server (database) set up for this project.

Figure 2. WebApp implementation flow. (A) Mini-CEX Web-App welcome view. (B) Student and clinical placement selection view. (C) Mini-CEX items and scoring.
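To make the data flow concrete, the sketch below shows one way an assessment record and its emailed summary could be represented. It is not the WebApp's actual implementation: the class name, field names, report layout, and the use of a mean score are all hypothetical, and only the eight items, the 7-point scale, and the per-item comments come from the description in this article.

```python
from dataclasses import dataclass, field
from statistics import mean

N_ITEMS = 8          # the adapted instrument has eight items
SCALE = range(1, 8)  # 7-point scale, scores 1 to 7


@dataclass
class MiniCexAssessment:
    student: str
    educator: str
    placement: str
    scores: list                                  # one score per item, in item order
    comments: dict = field(default_factory=dict)  # item number -> feedback text

    def __post_init__(self):
        if len(self.scores) != N_ITEMS:
            raise ValueError(f"Expected {N_ITEMS} scores, got {len(self.scores)}")
        if any(score not in SCALE for score in self.scores):
            raise ValueError("Each score must be an integer from 1 to 7")

    def report(self):
        """Plain-text summary of the kind that could be emailed after an assessment."""
        lines = [
            f"Student: {self.student}",
            f"Clinical educator: {self.educator}",
            f"Placement: {self.placement}",
        ]
        for number, score in enumerate(self.scores, start=1):
            comment = self.comments.get(number, "")
            lines.append(f"Item {number}: {score}/7 {comment}".rstrip())
        lines.append(f"Mean score: {mean(self.scores):.1f}")
        return "\n".join(lines)


# Hypothetical record; names and scores are placeholders.
example = MiniCexAssessment(
    student="Student A",
    educator="Educator B",
    placement="Placement C",
    scores=[6, 5, 6, 7, 5, 7, 6, 6],
    comments={2: "Explain the findings to the patient in plain language."},
)
summary_text = example.report()
```

Validating the number of items and the score range when the record is created is one way to support the completeness checks that the researchers otherwise performed by hand.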

Prior to student assessments, the clinical educators underwent training on how to use the Mini-CEX WebApp in simulated assessment sessions. Furthermore, a workshop was conducted at the university during the same semester, aiming to enhance feedback practices using the assessment tool.

2.5. Acceptability

A pragmatic qualitative approach was used to assess the acceptability of the adapted Mini-CEX WebApp by conducting two focus groups of students and clinical teachers. To ensure impartiality and prevent biased assumptions, an expert in qualitative research facilitated the focus groups (see the Supplementary material). The focus was to investigate the participants' perspectives on aspects related to the acceptability of the new Mini-CEX WebApp. Additionally, the study aimed to gain insight into the participants' discussions and interactions regarding their opinions. The information obtained from the focus groups was recorded and transcribed verbatim. Subsequently, a thematic analysis was conducted employing open coding techniques (Nowell et al., 2017).

2.6. Costs

In evaluating the costs associated with the implementation, several factors were considered, including usability, preceptor training, time spent per encounter, and the resources allocated to measure stakeholder perceptions and experiences. Usability factors included aspects such as Internet access, availability of mobile equipment, operating system, and recording of forms.

2.7. Educational impact

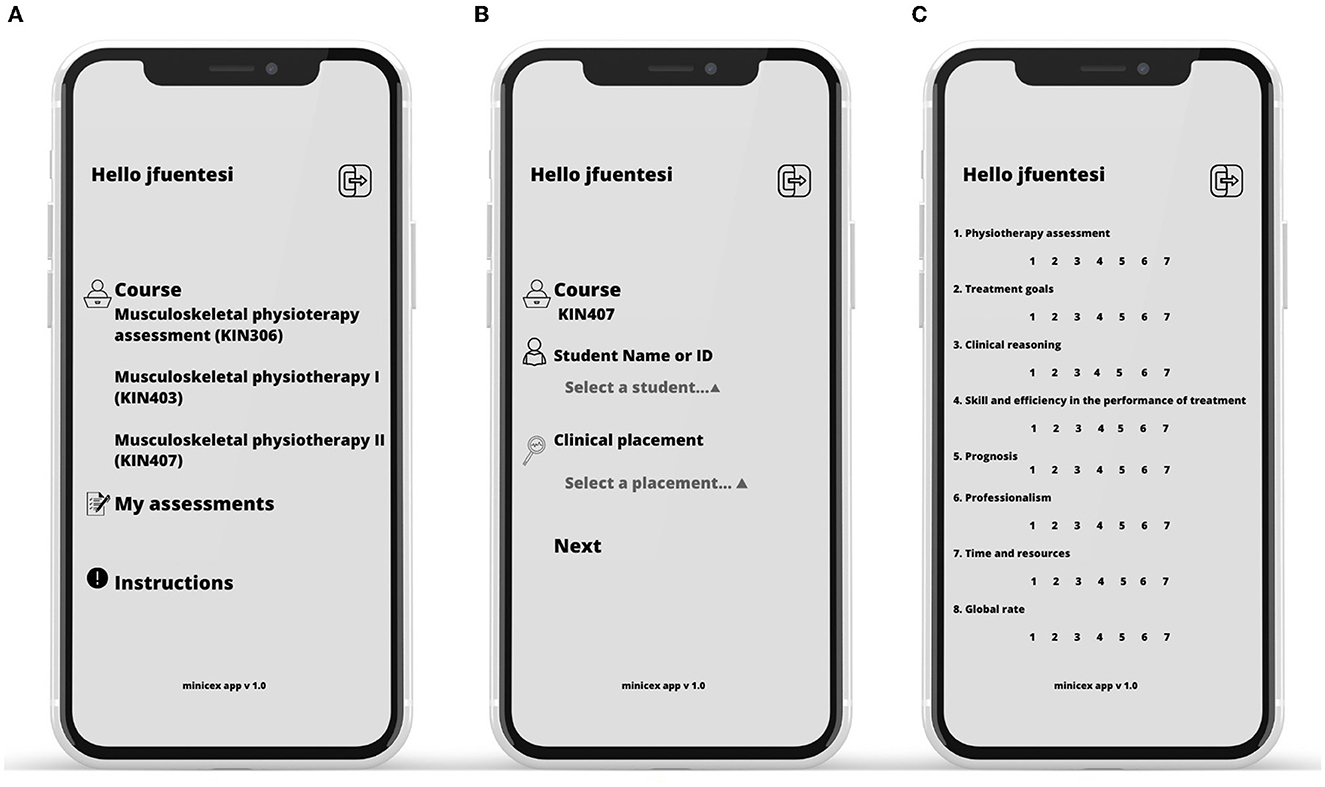

To determine the educational impact, the first two levels of the Kirkpatrick model for program evaluation were utilized (Kirkpatrick, 1994). For level 1, the reaction was assessed by conducting a descriptive analysis of two satisfaction items—one for the student and one for the teacher. These items had a 7-point scale, ranging from 1 indicating “low satisfaction” to 7 indicating “high satisfaction.” Both items were incorporated into the same WebApp, enabling immediate evaluation of the Mini-CEX. Additionally, the time spent by the teacher on observing the student-patient interactions and providing feedback was measured.

For level 2, learning outcomes were evaluated by analyzing the Mini-CEX scores of the students. A comparison was made between the scores obtained at four different assessments throughout the semester, distinguishing between improved scores and those that did not show improvement. To further determine the effectiveness of the intervention, the difference between the fourth measurement and the baseline measurement was calculated, enabling the identification of performance improvement or failure to improve. This analysis helped determine if non-significant results were due to a significant number of students showing a decreasing trend while others exhibited the opposite trend. Furthermore, the overall high-stakes assessment score, representing a final examination conducted at the end of the semester, was described and compared.
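The grouping described above, based on the difference between the fourth and the baseline assessment, can be sketched as follows. The function name and the example scores are hypothetical, and treating an unchanged score (difference of zero) as "not improved" is an assumption of this sketch.

```python
def classify_trajectory(scores):
    """Label a student by the change from the first (baseline) to the last assessment."""
    if len(scores) < 2:
        raise ValueError("Need at least a baseline and a final score")
    difference = scores[-1] - scores[0]
    # Assumption: a difference of exactly zero counts as "not improved".
    label = "improved" if difference > 0 else "not improved"
    return difference, label


# Hypothetical score sequences for two students across four assessments.
rising = classify_trajectory([5.5, 6.0, 6.0, 6.5])   # improved
falling = classify_trajectory([6.5, 6.0, 6.5, 6.0])  # not improved
```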

The Shapiro-Wilk test showed a non-normal distribution for the scores obtained by students who improved (p < 0.01) and who did not improve (p < 0.001). Therefore, we assessed a linear trend of scores increasing or decreasing over time using a non-parametric trend test based on the method proposed by Cuzick (1985). Furthermore, the median of the four measurements was estimated and compared over time for both groups. We then built quantile regression models with robust and clustered standard errors (Machado et al., 2011) to assess score differences over time in both groups. Quantile regression with robust and clustered standard errors expresses the estimates as medians while accounting for the intra-cluster correlation. Hence, similar consecutive measurements of the Mini-CEX scores should have a high positive correlation.
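A minimal sketch of the trend statistic proposed by Cuzick (1985) is shown below, assuming group scores of 1, 2, ..., k for the successive assessments and a normal approximation for the p-value. It applies no tie correction to the variance and treats all observations as independent, so its output may differ from the Stata routine used in the study; function names and data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def midranks(values):
    """Rank values from 1 to N, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = average_rank
        start = end + 1
    return ranks


def cuzick_trend(groups):
    """Cuzick's trend test for ordered groups scored 1, 2, ..., k.

    Returns (z, two_sided_p). No tie correction is applied to the variance.
    """
    values = [v for group in groups for v in group]
    labels = [score for score, group in enumerate(groups, start=1) for _ in group]
    ranks = midranks(values)
    n = len(values)
    t_stat = sum(label * rank for label, rank in zip(labels, ranks))
    weighted_n = sum(labels)                           # L = sum of l_i * n_i
    weighted_sq = sum(label ** 2 for label in labels)  # sum of l_i^2 * n_i
    expected = (n + 1) * weighted_n / 2
    var = (n + 1) / 12 * (n * weighted_sq - weighted_n ** 2)
    z = (t_stat - expected) / sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Hypothetical scores at three successive assessments; not data from the study.
z_value, p_value = cuzick_trend([[1, 2], [3, 4], [5, 6]])
```

A positive z indicates scores rising across assessments and a negative z indicates a decline.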

2.8. General statistical methods

The data description, exploratory factor analysis, and quantile regression models were performed in Stata version 17 (StataCorp, 2021), while the confirmatory factor analysis was conducted using Mplus software version 7 (Muthén and Muthén, 1998). A significance level of 5% was applied to all statistical analyses performed.

3. Results

A total of 24 available clinical educators (~6 years of experience) at 11 different clinical placements and 95 undergraduate physical therapy students (64 women and 31 men, aged 22.4 ± 1.4 years) were enrolled. A total of 378 clinical encounters were assessed rather than the 380 planned (95 students × 4 assessments), since one student attended only two of his four visits.

3.1. Content validity

The initial version of Mini-CEX consisted of seven items. Two rounds of the Delphi panel were needed to reach an agreement on the items and ensure the validity of the content. In the first and second rounds, 18 and 12 experts, respectively, answered the online survey within the requested time. After two rounds of the Delphi panel, an 8-item Mini-CEX was obtained, with all items above 4.5 on the five-point Likert scale.

3.2. Construct validity

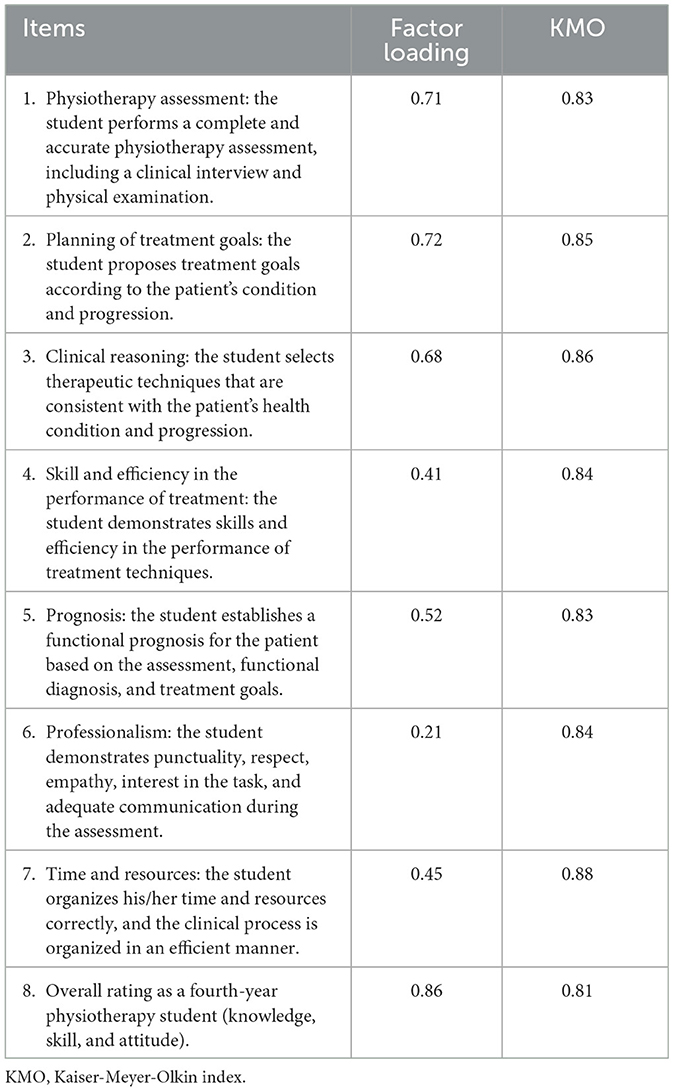

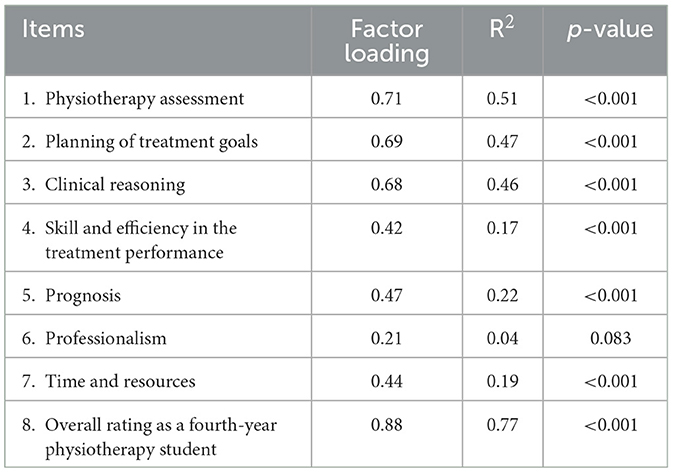

The polychoric correlations among the eight items demonstrated moderate to strong relationships, with values ranging mostly between 0.4 and 0.9 (Supplementary Table 1). The exploratory factor analysis showed one factor with an eigenvalue greater than one (Kaiser's rule). This factor explained 73% of the total variance in Mini-CEX scores. All standardized factor loadings for the questionnaire items were high, with values >0.4, except for question 6. Additionally, the Kaiser-Meyer-Olkin (KMO) index exceeded 0.8 for all questions, indicating adequate to excellent sampling adequacy (Table 1). The highest fit was obtained for one factor [χ²(7) = 11.22; p = 0.129], and the CFI and the TLI indices (0.94 and 0.92, respectively) were the highest.

Table 1. Standardized factor loadings and the Kaiser-Meyer-Olkin (KMO) index of the Mini-CEX items obtained through an exploratory factor analysis.

The confirmatory factor analysis obtained standardized factor loadings >0.4 for most items, with a maximum of 0.88 for item 8. Thus, each item was strongly correlated with the previously identified latent factor (Table 2). In addition, the R² was over 0.4 for most items, fluctuating between 0.04 (item 6) and 0.77 (item 8). In the latter case, 77% of the variability in scores from item 8 was explained by the single factor identified in the confirmatory factor analysis.

Table 2. Standardized factor loadings and R² of the items included in the Mini-CEX obtained through a confirmatory factor analysis.
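The link between the loadings and the explained variance reported above can be checked with a short calculation. In a standardized one-factor model with uncorrelated residuals, an item's explained variance equals its squared standardized loading, so a loading of 0.88 corresponds to about 77% and an explained variance of 0.04 to a loading of about 0.2 in absolute value. The function name is hypothetical.

```python
from math import sqrt


def explained_variance(standardized_loading):
    """Share of an item's variance explained by a single factor (squared loading)."""
    if not -1.0 <= standardized_loading <= 1.0:
        raise ValueError("A standardized loading must lie between -1 and 1")
    return standardized_loading ** 2


item_8_r2 = explained_variance(0.88)  # 0.7744, i.e. about 77%
item_6_loading = sqrt(0.04)           # absolute loading implied by an R-squared of 0.04
```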

The goodness-of-fit results for the improved confirmatory model showed CFI and TLI indices of 0.98 and 0.97, respectively. The RMSEA of 0.04 was within the good-fit range (< 0.05), and the upper limit of its 95% confidence interval (0.06) was within the acceptable fit range. Because the close-fit test was not statistically significant (p = 0.78), the hypothesis of close fit (RMSEA < 0.05) could not be rejected. Item 6 obtained the highest scores (Table 3).

3.3. Reliability

The obtained Cronbach's alpha was 0.78.

3.4. Acceptability

Two focus groups were conducted to explore participants' acceptance of the WebApp. The first focus group consisted of five clinical teachers, while the second involved nine students. Through the focus groups, two themes were identified: improvement in the assessment of learning and perception of the impact on learning.

3.4.1. Improvement in the assessment of learning

One of the strengths identified by participants was the perceived efficiency of the adapted Mini-CEX due to the reduced time required for the assessment process and the user-friendly nature of the Mini-CEX WebApp. Therefore, it allows for more time for student feedback. The following excerpt reflects this idea:

… in this tool, it is much easier, much simpler, and that makes you spend less time with the student in the evaluation and have more time to give feedback afterward… (Student 1, paragraph 10).

The simplicity of the Mini-CEX was perceived as a significant advantage, particularly in a busy workplace environment with a heavy patient care workload. The following excerpt from one of the clinical teachers explains this impression:

... I had a lot of patients at the same time when 3 or 4 students arrived, the feedback time was much more effective... I tell them “look, the summary will be sent to you by mail so that you can look over it well”, I give them the necessary feedback, and you move forward much faster with each student... (Teacher 4, paragraph 20).

However, students expressed a somewhat different perspective regarding the efficiency of the Mini-CEX WebApp, mentioning that it has led to a sense of impersonality in the assessment process. Their viewpoint is captured in the following statement:

... it becomes a little bit more impersonal, the assessment becomes a little bit distant in the sense that you are quiet and sitting down and the tutor is like [gestures typing on the phone] and doesn't say anything, then he is like 5, 3, 7, and says “okay I sent your grade”... (Student 8, paragraph 38).

Additionally, students highlighted the significance of implementing actions that contribute to sustainability by reducing the use of printed paper. Students also perceived that the 7-point scale utilized in the assessment was more subjective compared to a rubric that provides clear and specific criteria for evaluation.

3.4.2. Perception of the impact on learning

The most critical aspect in terms of its impact on learning is timely feedback. Both groups perceive that there is a greater amount of time for student feedback, highlighting strengths and weaknesses based on clinical activity.

..., since the app is individualized, the possibility of assessing them with a patient is great for learning as well as more personalized for each student... (Teacher 3, paragraph 106).

3.5. Costs

The design of the WebApp incurred a one-time cost financed by an internal fund (US$1,400). Additionally, there was a monthly cost of US$50 for server hosting. The resources needed to use the WebApp (Internet access and mobile equipment) were provided by the clinical placements or by each clinical tutor. Two research assistants helped monitor these aspects. To ensure the successful implementation of the Mini-CEX WebApp, the research team organized a training session for the participating clinical teachers. Furthermore, a feedback workshop was conducted at the university during the same semester to promote feedback processes using the adapted instrument. Together, these faculty training activities took 20 h.

3.6. Educational impact

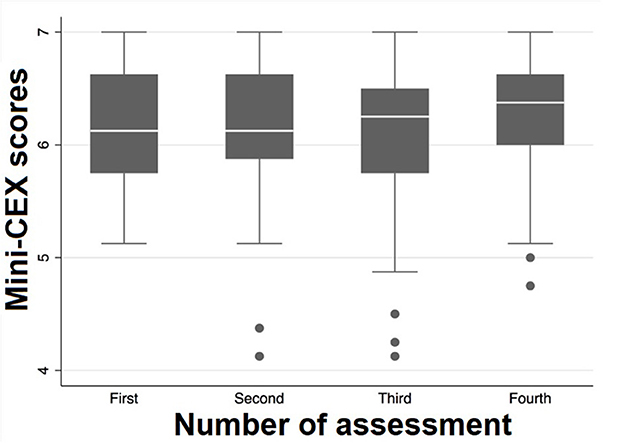

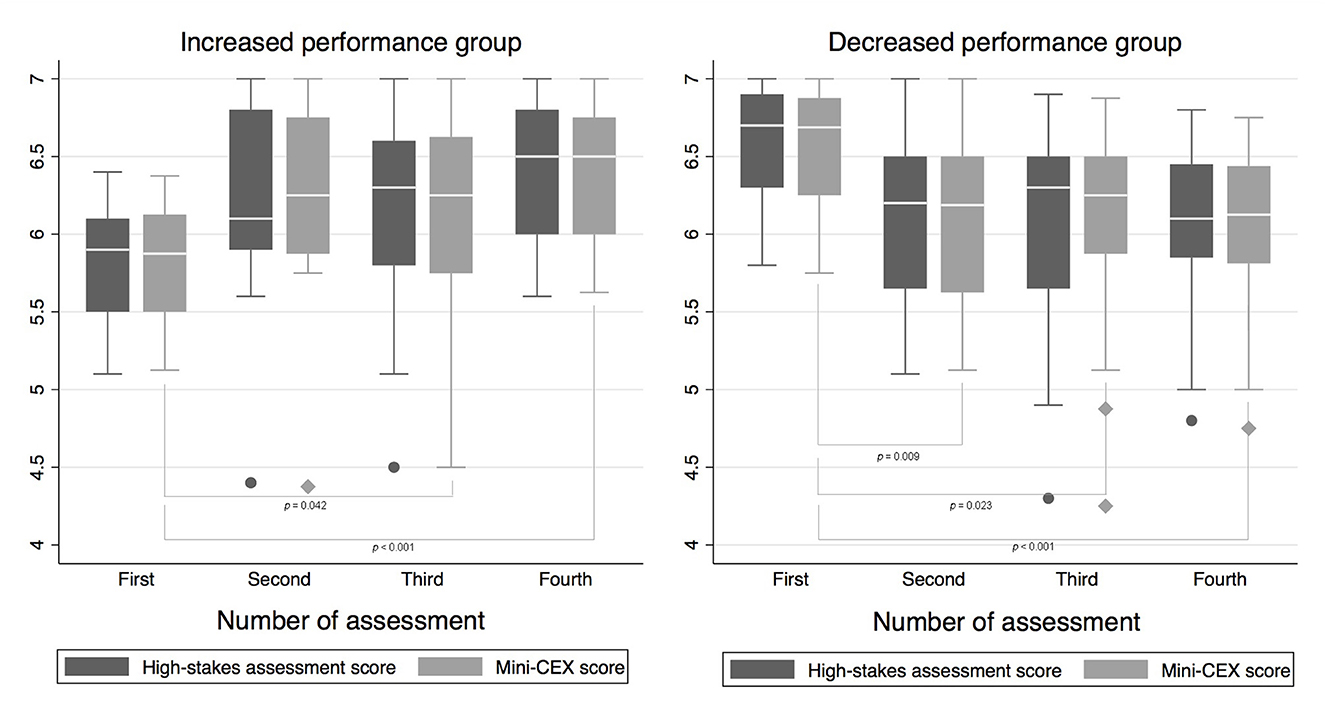

For level 1, the reaction was assessed by conducting a descriptive analysis of two satisfaction items, namely, teacher satisfaction, which had a median (IQR) of 6, and student satisfaction, which had a median (IQR) of 6, on a 7-point scale. Furthermore, the time spent observing the student-patient interaction was 22.5 ± 6.5 min, and the feedback time was 9.4 ± 2.9 min. Regarding learning, the assessment of the entire student cohort using Cuzick's test with rank scores did not reveal a significant trend in scores over time (p = 0.230; Figure 3). However, 49.9% of the students improved their scores compared with the baseline assessment (Figure 4). For the group that improved their scores, Cuzick's test showed a significant and positive trend over time (p < 0.001). The median scores across the four assessments were 5.9 (95% CI 5.7–6.1), 6.3 (95% CI 5.9–6.6), 6.3 (95% CI 6.0–6.5), and 6.5 (95% CI 6.3–6.7). The third (p = 0.042) and fourth (p < 0.001) assessments were significantly higher than the baseline. The largest difference in medians was 6.3 tenths of a point (95% CI 3.4–9.1), corresponding to the difference between the first and fourth assessments.

Figure 3. Box plots for the Mini-CEX scores of the entire student cohort according to consecutive assessments.

Figure 4. Box plots for the Mini-CEX and overall high-stakes assessment scores according to consecutive assessments and performance trends (increased and decreased).

In contrast, for the group that did not improve their scores, Cuzick's test showed a significant and negative trend over time (p = 0.0005) (Figure 4). The median scores across the four assessments were 6.6 (95% CI 6.4–6.8), 6.1 (95% CI 5.9–6.4), 6.3 (95% CI 6.0–6.5), and 6.1 (95% CI 5.9–6.3). The second (p = 0.009), third (p = 0.023), and fourth (p < 0.001) assessments were significantly lower than the baseline. The largest difference in medians was −5.0 tenths of a point (95% CI −6.8 to −3.2), corresponding to the difference between the first and fourth assessments. A similar pattern was observed for both groups of students in the overall composite high-stakes assessment, which closely follows the performance of the Mini-CEX (Figure 4).

4. Discussion

The main findings about the 8-item Mini-CEX WebApp were as follows: (1) the tool is a valid, acceptable, and reliable instrument for students, faculty, and clinical educators for clinical practice assessment in workplace-based learning settings for undergraduate health professions; (2) the tool captured progress or change in student performance over a small number of encounters (n = 4), particularly among students who started with the lowest scores; (3) there was high satisfaction among students and clinical educators, and both agreed that there was more time for feedback; (4) the tool showed the same patterns of change as the overall high-stakes assessment score; and (5) some students perceived the Mini-CEX WebApp implementation as more impersonal and subjective than a rubric that clearly describes the assessment criteria.

Our findings support the idea that the Mini-CEX WebApp helped provide timely, individualized, and specific feedback to students while also aiding faculty in managing assessment data. These findings align with previous research on the benefits of observation and feedback in facilitating workplace-based assessments (Lefroy et al., 2017; Levinson et al., 2019). Furthermore, our findings are consistent with the growing integration of technology in higher education, such as simulation, blended methodologies, and apps, which have significantly advanced and become more common among faculties and students in the last decade (Masters et al., 2016). Although our instrument was adapted for the purpose of assessing the learning outcomes of undergraduate physiotherapy students, given that it has been modified from the original instrument, all items could be readily adapted to other contexts.

The development of the 8-item Mini-CEX WebApp involved a rigorous construction and content validation process. The high level of reproducibility achieved indicates its positive impact on participants' satisfaction. Factor analysis helped us understand the unidimensional nature of the Mini-CEX and select the right items for our clinical educators (Cook et al., 2010; Véliz et al., 2020). The confirmatory factor analysis showed Mini-CEX characteristics similar to those reported by Cook et al. (2010) and Berendonk et al. (2018). Moreover, the 7-point scale we applied was easier to answer than the original 9-point scale. We chose the 7-point scale because it was better accepted by and more familiar to our clinical educators and students. For the same reason, the 7-point scale did not interfere with student grading (Fuentes-Cimma et al., 2020; Véliz et al., 2020). The low and non-significant R² of item 6 can be explained by the data: the item shows a ceiling effect (median 7, with the 25th and 75th percentiles also at 7). The effect of this bias (extreme response bias) has been studied in factor analyses (Liu et al., 2017). Furthermore, this item refers to professionalism, which has been intentionally developed in curriculum reforms in our faculty (Cisternas et al., 2016), and it is also the item with the highest score in previous applications (Fuentes-Cimma et al., 2020).

The Mini-CEX WebApp proved to be a sensitive tool for measuring progress and changes in student performance, particularly for students who initially had lower scores. This finding aligns with the study of Holmboe et al. (2003), who suggested that the Mini-CEX can distinguish between poor or marginal performance and satisfactory or superior performance. Thus, the Mini-CEX we implemented is a valuable tool for distinguishing student performance over time. Students with the lowest initial scores are usually those who require more support. In contrast, we identified a second group whose performance declined over time. Notably, both groups showed statistically significant median differences over time, reflecting the change in performance compared to the baseline measurement. The scores from the first application of the Mini-CEX served as the baseline measure, at which students received their first feedback using the WebApp. Prior to this assessment, no interventions or feedback had been provided. Following the first assessment, students underwent three additional assessments, which allowed for tracking and analyzing their performance over time.

Several factors could potentially explain the decline in performance observed in half of the sample over time. First, the literature has previously established a higher number of encounters, 6 or 8, needed to improve student performance using the Mini-CEX (Mortaz Hejri et al., 2020). Thus, more encounters would be necessary to show improvements for students with higher scores (Véliz et al., 2020). Second, the variability in clinical cases and the students' own knowledge levels may have contributed to the perception that some clinical cases were less challenging for students who achieved high scores early on. Third, it is worth considering that some students may have perceived the Mini-CEX WebApp as more impersonal and subjective compared to a rubric-based assessment approach. This subjective perception may have influenced their performance and responses, potentially impacting the observed results. All these hypotheses must be tested with new studies.

Interestingly, clinical educators appreciated having a brief tool set in a WebApp for mobile devices, optimizing the time spent on multiple assessments. However, students perceived it as a more impersonal process because it limited the possibility of discussing the assessment criteria. These results are consistent with Lefroy et al. (2017), who pointed out that mobile applications in workplace-based assessments can disrupt the necessary social interaction required for feedback conversations. This underscores the importance of instructing clinical educators that feedback sent by email cannot replace feedback conversations. Nevertheless, students and clinical educators perceived that the 8-item Mini-CEX WebApp is an excellent tool for observing student improvement and strengths, consistent with previous studies (Schopper et al., 2016; O'Connor et al., 2017, 2018; Fuentes-Cimma et al., 2020). The students particularly appreciated this paperless strategy as an excellent faculty policy because of environmental concerns.

The present study is not without limitations. First, the literature recommends a minimum of 6–8 encounters per student, and there was no comparison with a non-reduced Mini-CEX tool because the organization of the course did not allow it. Second, the focus groups had a limited number of participants, which could limit the study's value. Third, we could not randomize the participants due to feasibility issues. Finally, our confirmatory analysis should have used different samples, according to Tavakol and Dennick (2012).

5. Conclusion

The 8-item Mini-CEX WebApp is a valid, acceptable, and reliable instrument for assessing clinical practice in workplace-based learning settings for undergraduate physical therapy students, as perceived by students, teachers, and clinical educators. The enhanced direct observation allows for better feedback for the lowest-performing students.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Pontificia Universidad Católica de Chile Ethics Committee (ID170908016). The patients/participants provided their written informed consent to participate in this study.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This study was funded by the INNOVADOC grant from the Teaching Development Center of the Pontificia Universidad Católica de Chile (Santiago, Chile).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.943709/full#supplementary-material

References

Al Ansari, A., Ali, S. K., and Donnon, T. (2013). The construct and criterion validity of the mini-CEX: a meta-analysis of the published research. Acad. Med. 88, 413–420. doi: 10.1097/ACM.0b013e318280a953

Berendonk, C., Rogausch, A., Gemperli, A., and Himmel, W. (2018). Variability and dimensionality of students' and supervisors' mini-CEX scores in undergraduate medical clerkships - a multilevel factor analysis. BMC Med. Educ. 18, 1–18. doi: 10.1186/s12909-018-1207-1

Boud, D. (2015). Feedback: ensuring that it leads to enhanced learning. Clin. Teach. doi: 10.1111/tct.12345

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess Eval High Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Byrne, B. (2012). Structural Equation Modeling with Mplus: Basic Concepts, Applications, and Programming. New York: Routledge, Taylor and Francis Group. doi: 10.4324/9780203807644

Cisternas, M., Rivera, S., Sirhan, M., Thone, N., Valdés, C., Pertuzé, J., et al. (2016). Curriculum reform at the Pontificia Universidad Católica de Chile School of Medicine. Rev. Med. Chil. 144, 102–107. doi: 10.4067/S0034-98872016000100013

Cook, D. A., Beckman, T. J., Mandrekar, J. N., and Pankratz, V. S. (2010). Internal structure of mini-CEX scores for internal medicine residents: factor analysis and generalizability. Adv. Health Sci. Educ. 15, 633–645. doi: 10.1007/s10459-010-9224-9

De Lima, A. A., Barrero, C., Baratta, S., Costa, Y. C., Bortman, G., Carabajales, J., et al. (2007). Validity, reliability, feasibility and satisfaction of the Mini-Clinical Evaluation Exercise (Mini-CEX) for cardiology residency training. Med. Teach. 29, 785–790. doi: 10.1080/01421590701352261

Flora, D., and Curran, P. (2004). An empirical evaluation of alternative methods of estimation for confirmatory factor analysis with ordinal data. Psychol. Methods 9, 466–491. doi: 10.1037/1082-989X.9.4.466

Fuentes-Cimma, J., Villagran, I., Torres, G., Riquelme, A., Isbej, L., Chamorro, C., et al. (2020). Evaluación para el aprendizaje: diseño e implementación de un mini-CEX en el internado profesional de la carrera de kinesiología. ARS MEDICA Revista de Ciencias Médicas 45, 22–28. doi: 10.11565/arsmed.v45i3.1683

Goretzko, D., and Bühner, M. (2022). Factor retention using machine learning with ordinal data. Appl. Psychol. Meas. 46, 406–421. doi: 10.1177/01466216221089345

Haffling, A. C., Beckman, A., and Edgren, G. (2011). Structured feedback to undergraduate medical students: 3 years' experience of an assessment tool. Med. Teach. 33, E349–E357. doi: 10.3109/0142159X.2011.577466

Hauer, K. E., Holmboe, E. S., and Kogan, J. R. (2011). Twelve tips for implementing tools for direct observation of medical trainees' clinical skills during patient encounters. Med. Teach. 33, 27–33. doi: 10.3109/0142159X.2010.507710

Hicks, P. J., Margolis, M. J., Carraccio, C. L., Clauser, B. E., Donnelly, K., Fromme, H. B., et al. (2018). A novel workplace-based assessment for competency-based decisions and learner feedback. Med. Teach. 40, 1143–1150. doi: 10.1080/0142159X.2018.1461204

Holmboe, E. S., Huot, S., Chung, J., Norcini, J., and Hawkins, R. E. (2003). Construct validity of the miniclinical evaluation exercise (miniCEX). Acad. Med. 78, 826–830. doi: 10.1097/00001888-200308000-00018

Hu, L., and Bentler, P. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Kaiser, H. F. (1974). An index of factor simplicity. Psychometrika 39, 31–36. doi: 10.1007/BF02291575

Kim, K. J., and Hwang, J. Y. (2016). Ubiquitous testing using tablets: its impact on medical student perceptions of and engagement in learning. Korean J Med Educ. 28, 57–66. doi: 10.3946/kjme.2016.10

Kirkpatrick, D. L. (1994). Evaluating Training Programs: The Four Levels. Oakland: Berrett-Koehler, 229.

Kline, R. B. (2015). Principles and Practice of Structural Equation Modeling. New York: Guilford Publications.

Kogan, J. R., Holmboe, E. S., and Hauer, K. E. (2009). Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 302, 1316–1326. doi: 10.1001/jama.2009.1365

Lefroy, J., Roberts, N., Molyneux, A., Bartlett, M., Gay, S., McKinley, R., et al. (2017). Utility of an app-based system to improve feedback following workplace-based assessment. Int J Med Educ. 8, 207–216. doi: 10.5116/ijme.5910.dc69

Levinson, A. J., Rudkowski, J., Menezes, N., Baird, J., and Whyte, R. (2019). “Use of mobile apps for logging patient encounters and facilitating and tracking direct observation and feedback of medical student skills in the clinical setting,” in Systems Thinking and Moral Imagination, 93–102. doi: 10.1007/978-3-030-11434-3_14

Liu, M., Harbaugh, A. G., Harring, J. R., and Hancock, G. R. (2017). The effect of extreme response and non-extreme response styles on testing measurement invariance. Front. Psychol. 8, 726. doi: 10.3389/fpsyg.2017.00726

Machado, J. A., Parente, P., and Santos Silva, J. (2011). QREG2: Stata Module to Perform Quantile Regression With Robust and Clustered Standard Errors. Statistical Software Components S457369, Department of Economics, Boston College.

Masters, K., Ellaway, R. H., Topps, D., Archibald, D., and Hogue, R. J. (2016). Mobile technologies in medical education: AMEE Guide No. 105. Med. Teach. 38, 537–549. doi: 10.3109/0142159X.2016.1141190

Milanese, S., Gordon, S., and Pellatt, A. (2013). Profiling physiotherapy student preferred learning styles within a clinical education context. Physiotherapy 99, 146–152. doi: 10.1016/j.physio.2012.05.004

Montagne, S., Rogausch, A., Gemperli, A., Berendonk, C., Jucker-Kupper, P., Beyeler, C., et al. (2014). The mini-clinical evaluation exercise during medical clerkships: are learning needs and learning goals aligned? Med. Educ. 48, 1008–1019. doi: 10.1111/medu.12513

Mortaz Hejri, S., Jalili, M., Masoomi, R., Shirazi, M., Nedjat, S., Norcini, J., et al. (2020). The utility of mini-Clinical Evaluation Exercise in undergraduate and postgraduate medical education: a BEME review: BEME Guide No. 59. Med. Teach. 42, 125–142. doi: 10.1080/0142159X.2019.1652732

Muthén, L. K., and Muthén, B. O. (1998). Mplus: Statistical analysis with latent variables. User's guide, 6, 1998–2010. Los Angeles, CA.

Noble, C., Billett, S., Armit, L., Collier, L., Hilder, J., Sly, C., et al. (2020). “It's yours to take”: generating learner feedback literacy in the workplace. Adv. Health Sci. Educ. 25, 55–74. doi: 10.1007/s10459-019-09905-5

Norcini, J., and Burch, V. (2007). Workplace-based assessment as an educational tool: AMEE Guide No. 31. Med. Teach. 29, 855–871. doi: 10.1080/01421590701775453

Norcini, J. J., Blank, L. L., Arnold, G. K., and Kimball, H. R. (1995). The mini-CEX (clinical evaluation exercise): a preliminary investigation. Ann. Intern. Med. 123, 795–799. doi: 10.7326/0003-4819-123-10-199511150-00008

Norcini, J. J., Blank, L. L., Duffy, F. D., and Fortna, G. S. (2003). The mini-CEX: a method for assessing clinical skills. Ann. Intern. Med. 138, 476–481. doi: 10.7326/0003-4819-138-6-200303180-00012

Nowell, L. S., Norris, J. M., White, D. E., and Moules, N. J. (2017). Thematic analysis: striving to meet the trustworthiness criteria. Int. J. Qual. Method. 16, 1. doi: 10.1177/1609406917733847

O'Connor, A., Cantillon, P., McGarr, O., and McCurtin, A. (2018). Navigating the system: Physiotherapy student perceptions of performance-based assessment. Med. Teach. 40, 928–933. doi: 10.1080/0142159X.2017.1416071

O'Connor, A., McGarr, O., Cantillon, P., McCurtin, A., and Clifford, A. (2017). Clinical performance assessment tools in physiotherapy practice education: a systematic review. Physiotherapy. 104, 46–53. doi: 10.1016/j.physio.2017.01.005

Pelgrim, E. A. M., Kramer, A. W. M., Mokkink, H. G. A., van den Elsen, L., Grol, R. P. T. M., van der Vleuten, C. P. M., et al. (2011). In-training assessment using direct observation of single-patient encounters: a literature review. Adv. Health Sci. Educ. 16, 131–142. doi: 10.1007/s10459-010-9235-6

Pinilla, S., Kyrou, A., Klöppel, S., Strik, W., Nissen, C., Huwendiek, S., et al. (2021). Workplace-based assessments of entrustable professional activities in a psychiatry core clerkship: an observational study. BMC Med. Educ. 21, 223. doi: 10.1186/s12909-021-02637-4

Ponterotto, J. G., and Ruckdeschel, D. E. (2007). An overview of coefficient alpha and a reliability matrix for estimating adequacy of internal consistency coefficients with psychological research measures. Percept. Mot. Skills. 105, 997–1014. doi: 10.2466/pms.105.3.997-1014

Schopper, H., Rosenbaum, M., and Axelson, R. (2016). ‘I wish someone watched me interview:’ Medical student insight into observation and feedback as a method for teaching communication skills during the clinical years. BMC Med. Educ. 16, 1–8. doi: 10.1186/s12909-016-0813-z

Singhal, A., Subramanian, S., Singh, S., Yadav, A., Hallapanavar, A., Anjali, B., et al. (2020). Introduction of mini-Clinical Evaluation Exercise as a mode of assessment for postgraduate students in medicine for examination of sacroiliac joints. Indian J. Rheumatol. 15, 23–26. doi: 10.4103/injr.injr_106_19

Tavakol, M., and Dennick, R. (2012). Post-examination interpretation of objective test data: monitoring and improving the quality of high-stakes examinations. Med. Teach. 34, e161–e175. doi: 10.3109/0142159X.2012.651178

Van Der Vleuten, C. P. M. (1996). The assessment of professional competence: developments, research and practical implications. Adv. Health Sci. Educ. 1, 41. doi: 10.1007/BF00596229

Véliz, C., Fuentes-Cimma, J., Fuentes-López, E., and Riquelme, A. (2020). Adaptation, psychometric properties, and implementation of the Mini-CEX in dental clerkship. J. Dent. Educ. 85, 300–310. doi: 10.1002/jdd.12462

Walker, C. A., and Roberts, F. E. (2020). Impact of simulated patients on physiotherapy students' skill performance in cardiorespiratory practice classes: a pilot study. Physiotherapy Canada 72, 314–322. doi: 10.3138/ptc-2018-0113

Wang, J., and Wang, X. (2012). Structural Equation Modeling: Applications Using Mplus. Hoboken: John Wiley and Sons, Inc. doi: 10.1002/9781118356258

Keywords: assessment for learning, Mini-CEX, workplace-based assessment, utility analysis, education

Citation: Fuentes-Cimma J, Fuentes-López E, Isbej Espósito L, De la Fuente C, Riquelme Pérez A, Clausdorff H, Torres-Riveros G and Villagrán-Gutiérrez I (2023) Utility analysis of an adapted Mini-CEX WebApp for clinical practice assessment in physiotherapy undergraduate students. Front. Educ. 8:943709. doi: 10.3389/feduc.2023.943709

Received: 14 May 2022; Accepted: 03 July 2023; Published: 27 July 2023.

Edited by:

Ana Grilo, Escola Superior de Tecnologia da Saúde de Lisboa (ESTeSL), Portugal

Reviewed by:

Anand S. Pandit, National Hospital for Neurology and Neurosurgery (NHNN), United Kingdom

Yijun Lv, Wenzhou Medical University, China

Carina Silva, Universidade de Lisboa, Portugal

Copyright © 2023 Fuentes-Cimma, Fuentes-López, Isbej Espósito, De la Fuente, Riquelme Pérez, Clausdorff, Torres-Riveros and Villagrán-Gutiérrez. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ignacio Villagrán-Gutiérrez, invillagran@uc.cl
