OER interoperability educational design: enabling research-informed improvement of public repositories

- 1 Department of Didactics of Science, University of Jaen, Jaen, Spain

- 2 Department of Specific Didactics, University of Cordoba, Cordoba, Spain

Introduction: According to UNESCO, open educational resources (OERs) could be tools for meeting Sustainable Development Objective 4, provided they have the appropriate characteristics and sufficient quality to promote citizen education.

Methods: This work presents a quality analysis of OERs in a public repository using mixed-methods techniques and a participatory approach.

Results & Discussion: Although the quantitative results show high mean values in all the dimensions, the qualitative analysis provides a better understanding of how key stakeholders perceive particular aspects and of how we can take a step forward to enhance usability and improve the psycho-pedagogical and didactic design of OERs. The triangulation of information from different sources strengthens consistency and reliability and provides a richer perspective to inform future work.

1. Introduction

Open educational resources (OERs) have been envisioned by the United Nations Educational, Scientific and Cultural Organization (UNESCO, 2019) as promising tools for meeting the Objectives for Sustainable Development (OSD) set out in the 2030 Agenda, specifically the objective of promoting high-quality, equitable and inclusive education for all (i.e., OSD4). UNESCO has stated that, owing to their free nature and their capacity to transform education, OERs may play a key role in ensuring inclusive, equitable and quality education and in promoting lifelong learning opportunities for all; therefore, they should be exploited. However, their effective pedagogical application (and the resulting learning outcomes) depends on their quality in terms of content, design, adaptability, usage, etc. Moreover, in an attempt to stay competitive in global education, universities are focusing on digital transformation strategies that involve, among other things, the design and implementation of OERs (Mohamed Hashim et al., 2022). UNESCO has therefore also stated the need to develop specific research strategies focused on evaluating the quality of existing OER repositories. This study responds to experts' calls to systematically evaluate the quality of OERs (UNESCO, 2019) and, as a prior step, to address the validity and reliability of the evaluation instruments previously adapted and applied (Yuan and Recker, 2015).

Within a wider study about OERs and their impact on teaching and learning, this paper presents the results of the quality analysis of the OER repository offered by the National Centre for Curriculum Development through Non-Proprietary Systems (CEDEC), which depends on the Spanish Ministry of Education. The educational resources analyzed were developed within the national project EDIA (from the Spanish name "Educativo, Digital, Innovador y Abierto", i.e., Educational, Digital, Innovative and Open), which fosters the creation of innovative open digital resources that support the intended educational transformation.

In particular, the present work intends to respond to the following research questions (RQ):

RQ1: What is the quality of EDIA OERs in terms of interoperability, psycho-pedagogical and didactic design, and opportunities for enhanced learning and formative assessment?

RQ2: Which aspects might be improved?

To answer these questions, we use a participatory approach involving teachers and OER experts and draw on quantitative and qualitative data gathered with different instruments. The result is a rich picture that goes beyond the evaluation of technical features related to OER interoperability and usability and opens a discussion of key psycho-pedagogical and didactic aspects, with implications for improving OER design.

OERs might be defined as learning, teaching and research materials in any format and medium that reside in the public domain, or are under copyright and have been released under an open license, and that permit no-cost access, re-use, re-purposing, adaptation, and redistribution by others (UNESCO, 2019). According to this definition, OERs give teachers legal permission to revise and change educational materials to suit their needs and engage them in continuous quality-improvement processes. Therefore, OERs empower teachers to "take ownership and control over their courses and textbooks in a manner not previously possible" (Wiley and Green, 2012, p. 83). The unique nature of OERs allows educators and designers to improve curricula in ways that might not be possible with a commercial, traditionally copyrighted learning resource (Bodily et al., 2017). Moreover, although many institutions are promoting the adoption and creation of OERs, they still lack policies and development guidelines for their creation (Mncube and Mthethwa, 2022). To make the most of the opportunities offered by OERs, it is necessary to ensure a quality standard for the resources by implementing proper quality assurance mechanisms. In response to these concerns, UNESCO recommends encouraging and supporting research on OERs through relevant research initiatives that allow for the development of evidence-based standards and criteria for quality assurance and for the evaluation of educational resources and programs. Along these lines, some studies have proposed specific frameworks for assessing the quality of OERs (Almendro and Silveira, 2018).

Literature reviews (Zancanaro et al., 2015) and recent research on OER-based teaching (Baas et al., 2022) show that the main topics covered in the OER literature are technological issues, business models, sustainability and policy issues, pedagogical models, and quality issues, as well as barriers, difficulties, or challenges for teachers' adoption and use of OERs (Baas et al., 2019). More broadly, some authors discuss the current impact of technology on key cognitive aspects such as attention, memory, flexibility and autonomy, and call for an effective pedagogical use of technological resources (Pattier and Reyero, 2022).

The analysis of students' online activities, along with their learning outcomes, might be used to understand how to optimize online learning environments (Bodily et al., 2017). Learning analytics offers considerable opportunities for the continuous improvement of OERs embedded in online courses. However, Bodily et al. (2017) state that, despite this claim, it is very hard to find publications reporting results from this process, and that a framework is needed to support continuous improvement. In response, they propose the RISE (Resource Inspection, Selection, and Enhancement) framework for evaluating OERs, which uses learning analytics to identify features that should be revised or further improved. Pardo et al. (2015) argued that learning analytics data by themselves do not provide enough context to inform learning design. In agreement with this idea, Bodily et al. (2017) clarify that RISE does not provide specific design recommendations to enhance learning but offers a means of identifying resources that should be evaluated and improved. The framework is intended to provide an automated process for identifying learning resources that should be evaluated and either eliminated or improved. It relies on a scatterplot with resource usage on the x-axis and the grade on the assessments associated with that resource on the y-axis. The scatterplot is divided into four quadrants that help to find resources that are candidates for improvement; resources lying deep within their respective quadrant should be analyzed further for continuous course improvement. The authors conclude that, although the framework is very useful for identifying and selecting resources that are strong candidates for revision, it does not cover the last phase of the framework, which is intended for deciding how to improve them. This last phase requires in-depth studies that combine quantitative and qualitative data and involve experts in learning design.
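The quadrant logic of RISE can be illustrated with a minimal sketch. For illustration only, it assumes that usage and grades are standardized as z-scores, that the quadrant borders lie at the means (z = 0), and that a resource's "depth" is its distance to the nearest border; these choices, the hypothetical `Resource` type and the sample data are ours and are not taken from Bodily et al. (2017).

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Resource:
    name: str     # hypothetical resource identifier
    usage: float  # x-axis: how much students used the resource
    grade: float  # y-axis: mean grade on the assessments linked to the resource


def standardize(values):
    """Convert raw values to z-scores; z = 0 is taken as the quadrant border."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s if s else 0.0 for v in values]


def rise_quadrants(resources):
    """Assign each resource to a usage/grade quadrant, deepest resources first."""
    zu = standardize([r.usage for r in resources])
    zg = standardize([r.grade for r in resources])
    ranked = []
    for r, u, g in zip(resources, zu, zg):
        quadrant = ("high usage" if u >= 0 else "low usage",
                    "high grade" if g >= 0 else "low grade")
        # Assumed depth measure: distance to the nearest quadrant border.
        depth = min(abs(u), abs(g))
        ranked.append((r.name, quadrant, depth))
    return sorted(ranked, key=lambda item: item[2], reverse=True)


# Hypothetical data: (name, usage, grade).
resources = [Resource("R1", 120, 85), Resource("R2", 15, 55),
             Resource("R3", 100, 50), Resource("R4", 20, 90)]
for name, quadrant, depth in rise_quadrants(resources):
    print(name, quadrant, round(depth, 2))
```

Resources listed first lie farthest inside their quadrant; in the spirit of the framework, they would be the first candidates for the qualitative, expert-driven review, which the sketch deliberately leaves out.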
Cechinel et al. (2011) also highlighted the complexity of assuring a quality standard within a given repository, since quality involves different aspects and dimensions: not only the content, but also its effectiveness as a teaching tool and its usability, among others.

Recently, Stein et al. (2023) analyzed, in the context of evaluating the general quality of the Merlot repository, to what extent different raters tended to agree about the quality of the resources it contains. In addition, Cechinel et al. (2011), who analyzed the characteristics of highly rated OERs in Learning Object Repositories (LORs) as a priori indicators of quality, provided meaningful insights into the assessment process for determining the quality of OERs. They found that some of the metrics presented significant differences between what they classified as highly rated and poorly rated resources, and that those differences depended on the discipline to which the resource belongs and on the type of resource. Besides, they found different ratings depending on the type of rater (i.e., peer review or user evaluation). These aspects are important considerations when designing any quality evaluation process for an OER repository.

Moreover, pedagogical issues make no sense without considering teachers. Teachers are often key targets in the OER literature, and some authors argue that they should not only accompany but also drive the change toward openness in education, as crucial players in the adoption of the OER paradigm. Along this line, Nascimbeni and Burgos (2016) draw attention to the need for teachers who embrace the OER philosophy and make open education their hallmark. To this end, they provide a self-development framework to foster openness among educators. The framework focuses on four areas of activity of an open educator (design, content, teaching, and assessment) and integrates objects, tools, teaching content and teaching practices. In line with Nascimbeni and Burgos (2016), we consider open educators to be crucial players and give them a voice in the evaluation of OERs as key stakeholders. For this reason, we adopt a participatory approach in which we actively engage open educators in piloting the instruments and evaluating the OERs. We advocate the potential of OERs to promote teacher professional development and the importance of developing mechanisms to create communities of practice and networks of OER experts, as well as to properly recognize OER creation as a professional or academic merit. As teacher educators, we are especially interested in the pedagogies underlying the design and use of OERs and envision open education as a powerful field for teacher professional development.

Our research study focuses on the EDIA project, which originated around 2010 as a national initiative of the Spanish Ministry of Education to promote and support digital and methodological transformation in schools, in order to improve student learning and encourage new models of education across the country. It offers a repository of educational content for early childhood, primary and secondary education, as well as vocational studies. The OERs are designed by expert creators and active teachers and are therefore based on current curricular references. The main feature of the EDIA OERs is the methodological approach that characterizes their proposals: they are linked to active methodologies, such as problem-based learning and flipped learning, among others, and to the promotion of digital competences in the classroom. Another important feature of the EDIA project is its continuous evolution, both through the incorporation of new professionals and through improvements to the digital tools used to create resources. This is reflected in the free repository accessible through its website, which keeps incorporating new resources and associated materials (rubrics, templates, etc.). Additionally, within the EDIA community, networks of teachers who discuss the classroom application of the resources and the use of technology have emerged. This virtual staffroom provides a framework for experimentation to propose new models of educational content that address aspects such as accessibility and issues such as gender equality and digital citizenship, among others.

The EDIA project fits well with what is expected of an OER repository that offers suitable informational ecosystems and appropriate social and technological infrastructures (Kerres and Heinen, 2015). Some of the key elements for promoting OER-enabled pedagogies are the EDIA network, the annual EDIA meetings, and the shared use of the eXeLearning software.

2. Materials and methods

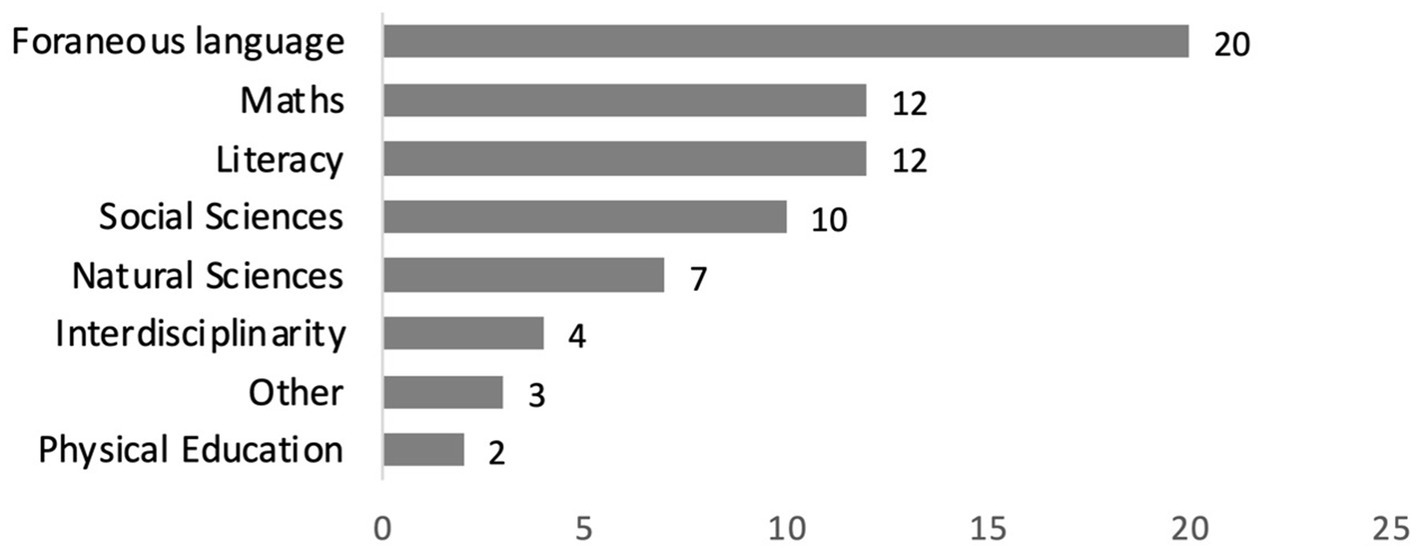

The OERs from the EDIA repository were analyzed using a participatory approach involving 48 evaluators, who applied two previously adapted, refined, and validated instruments for OER quality assurance. The quantitative data obtained were triangulated and enriched with a content analysis of the suggestions for improvement provided by the evaluators. The sample comprises the OERs included in the EDIA repository: 70 OERs covering all subjects of the Spanish curricula from K-6 and K-12 levels (Figure 1).

2.1. Profile of OER evaluators

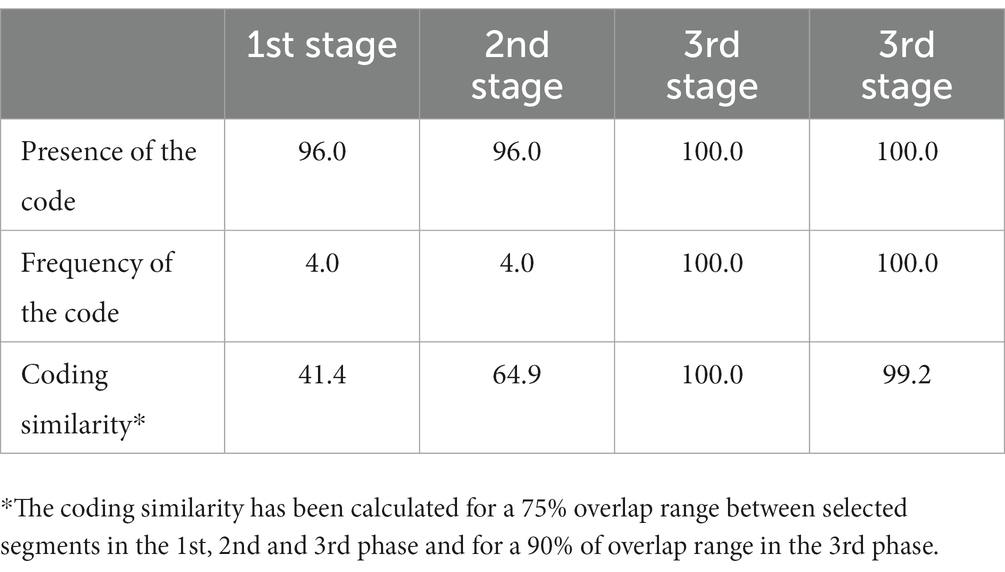

Evaluators were purposefully selected according to their background and teaching experience; potential authors of the OERs under evaluation were excluded. A total of 48 teachers (54% male, 46% female), with teaching experience ranging between 4 and 35 years, took part in the EDIA OER review process. Overall, more than 87% had more than 10 years of teaching experience, and 32.6% had more than 20 years; the average experience was 18.5 years. To check reliability, some OERs were evaluated simultaneously by two or three independent evaluators (see Table 1).

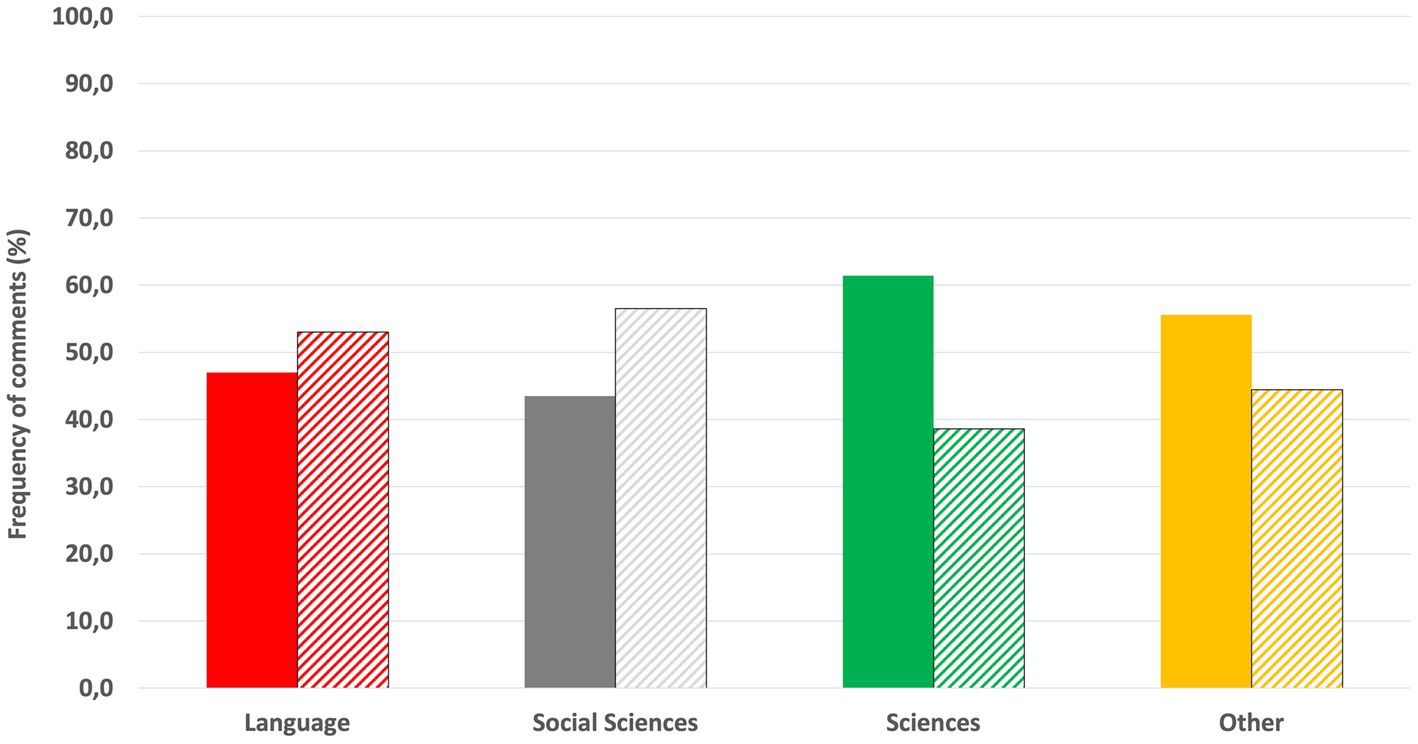

Table 1. Specifications related to the sample characteristics: (1) number of OER evaluated per subject and number of evaluations per subject and (2) distribution of the OER evaluated as a function of educational stage.

2.2. Quantitative research

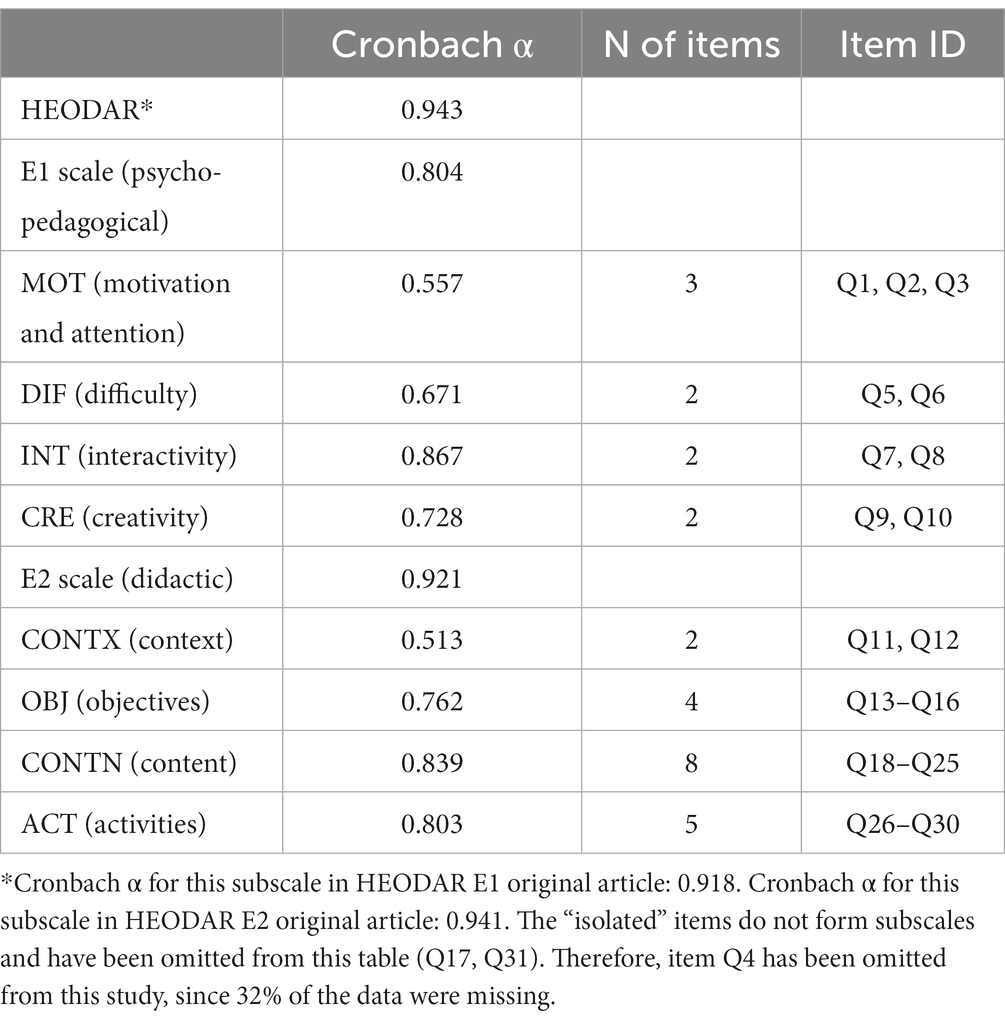

OERs were evaluated with two instruments developed and validated by educational researchers for the evaluation of learning objects: the HEODAR instrument (Orozco and Morales, 2016) and the rubrics of the Achieve OER Evaluation tool (Birch, 2011), using versions translated and adapted for this research.

In the case of HEODAR, we used a short version with two scales. The original instrument (Orozco and Morales, 2016) was developed and validated with four scales: a psycho-pedagogical scale (E1), a didactic scale (E2), an interface design scale and a navigation scale. The E1 and E2 dimensions focus mainly on aspects related to teaching and learning processes, which are the focus of this research. The instrument developed for this study applies a 5-point Likert-type scale ranging from 1 (very poor quality of the resource under evaluation) to 5 (very high quality). The questionnaire also includes a "not applicable" answer option.

In addition, in this adapted version, we included an item under the heading "Global assessment of the resource," phrased as "Score the global quality of the OER (from 1-very poor to 5-very high) and write explicitly the indicators used to assign that score." This item was included to deepen the analysis and allow triangulation.

Table 2 shows the Cronbach's alpha values obtained in this study for the subscales described in the original article; compared with the values reported there (see footnote in Table 2), they could be considered more than acceptable given our sample. Results from a preliminary exploratory factor analysis (EFA) also agree with the original study regarding the explained variance and the number of theoretical factors in the dimensions studied.
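For readers who want to reproduce this kind of reliability check, the standard Cronbach's alpha formula, alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores), can be sketched as follows. The response matrix is hypothetical, and the sketch assumes that evaluations with "not applicable" answers in the subscale have already been removed; it is not the software used in the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of k item scores (one row per evaluation).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical 5-point Likert answers: 5 evaluations x 3 items of one subscale.
data = [[4, 5, 4], [3, 4, 3], [5, 5, 4], [4, 4, 4], [2, 3, 3]]
print(round(cronbach_alpha(data), 2))  # 0.91
```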

The scales defined within the HEODAR instrument are as follows:

• HEODAR global scale, which assigns an overall mark (from 1 to 5) to the OER

• E1 (psycho-pedagogical scale), which establishes the mean value for the marks assigned to items Q1–Q3 and Q5–Q10

• E2 (didactic scale), which establishes the mean value for the marks assigned to items Q11–Q16, Q18–Q30.

Additionally, item Q32 (an ad hoc item giving the mean value for the OER based on the values assigned to the first 30 items of the HEODAR instrument) was created to measure internal consistency per evaluation, by comparing it with the value assigned to Q31 ("Global assessment of the resource").
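The scoring rules listed above can be sketched as follows. The sketch assumes that each evaluation is stored as a mapping from item number to score, with `None` standing for "not applicable" and excluded from the means; the `tolerance` used to judge agreement between Q32 and Q31 is a hypothetical value, since the text does not state how the comparison was operationalized.

```python
from statistics import mean

# Item ranges as described in the text.
E1_ITEMS = [1, 2, 3] + list(range(5, 11))             # Q1-Q3, Q5-Q10
E2_ITEMS = list(range(11, 17)) + list(range(18, 31))  # Q11-Q16, Q18-Q30
ALL_ITEMS = range(1, 31)                              # Q1-Q30, used for Q32


def scale_mean(answers, items):
    """Mean of the answered items in a scale, skipping N/A (None) answers."""
    scores = [answers[q] for q in items if answers.get(q) is not None]
    return mean(scores) if scores else None


def score_evaluation(answers, tolerance=0.5):
    """Return E1, E2, Q32 and whether Q32 agrees with the global mark Q31.

    `tolerance` is a hypothetical threshold chosen for this sketch.
    """
    q32 = scale_mean(answers, ALL_ITEMS)
    return {
        "E1": scale_mean(answers, E1_ITEMS),
        "E2": scale_mean(answers, E2_ITEMS),
        "Q32": q32,
        "consistent": q32 is not None and abs(q32 - answers[31]) <= tolerance,
    }


answers = {q: 4 for q in range(1, 32)}  # hypothetical: Q1-Q31 all rated 4
answers[1] = 5                          # one item rated higher
answers[21] = None                      # one "not applicable" answer
print(score_evaluation(answers))
```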

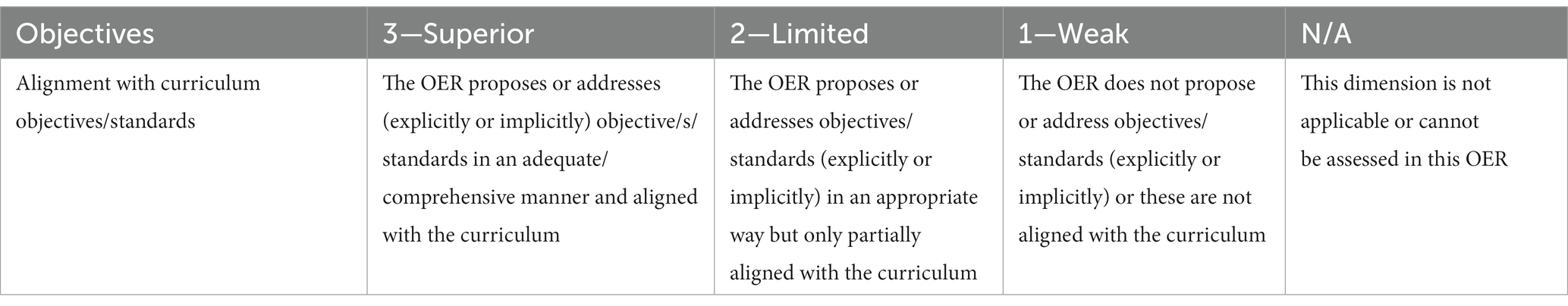

Moreover, the Achieve instrument (Birch, 2011) consists of a set of eight rubrics developed to carry out online OER assessments. We used a translated version of the simplified instrument (Birch, 2011). The rubrics refer to eight dimensions: OER objectives (OBJ), quality of the contents (QCO), usefulness of the resources/materials (UTI), quality of the evaluation (QEV), quality of technological interactivity (QTI), quality of exercises/practices/tasks/activities (QEX), deep and meaningful learning (DML) and accessibility (ACC). Rubric ACC should be applied only by evaluators with expertise in this area; consequently, this dimension showed the most missing data, probably because evaluators lacked expertise in the technical issues related to accessibility.

According to the authors, these rubrics are intended to rate the potential effectiveness of a particular OER in a given learning environment. Each rubric can be used independently of the others, with scores that describe levels of potential quality, usefulness, or alignment with the standards. The original version uses scores from 3 (superior) to 0 (null). In the version developed for this study, the scale was simplified to 3 (superior), 2 (limited) and 1 (weak), plus a neutral option (N/A: not applicable, or "I cannot evaluate this dimension").
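A minimal sketch of how ratings on this simplified scale might be aggregated per dimension, skipping N/A answers and keeping the number of valid ratings (which is how a dimension such as ACC ends up with fewer evaluations than the others). The data layout, the function name and the sample evaluations are hypothetical.

```python
from statistics import mean

# Simplified scale used in this study; "N/A" = not applicable / cannot evaluate.
LEVELS = {"superior": 3, "limited": 2, "weak": 1, "N/A": None}
DIMENSIONS = ["OBJ", "QCO", "UTI", "QEV", "QTI", "QEX", "DML", "ACC"]


def summarize(evaluations):
    """Per-dimension (mean, number of valid ratings), ignoring N/A answers.

    `evaluations` is a list of dicts mapping dimension code -> level label;
    a dimension left blank is treated like N/A.
    """
    summary = {}
    for dim in DIMENSIONS:
        scores = [LEVELS[e.get(dim, "N/A")] for e in evaluations]
        valid = [s for s in scores if s is not None]
        summary[dim] = (mean(valid) if valid else None, len(valid))
    return summary


# Hypothetical evaluations of one OER by three evaluators.
evals = [{"OBJ": "superior", "ACC": "limited"},
         {"OBJ": "superior", "ACC": "N/A"},
         {"OBJ": "limited"}]
print(summarize(evals))
```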

Table 3 shows the first dimension of this instrument, the evaluation of the OER objectives (OBJ), including the explicit criteria for each quality level. The complete rubric is shown in Supplementary Table S1.

2.3. Qualitative research

Two independent researchers/coders carried out the qualitative analysis. Of the 105 compiled documents, 90 included qualitative data with explicit information on a wide range of aspects related to the quality of the resources. Following the quantitative instruments, 14 initial categories were defined, each of which could be positive or negative, giving 28 subcategories. See Supplementary Table S2 for the list of categories and their definitions. Each coder worked independently with MAXQDA 2020 (VERBI Software, 2020). The intercoder agreement analysis involved checking (i) the presence of the code in the document, (ii) the frequency of the code in the document, and (iii) the coding similarity. The qualitative analysis was thus carried out in three phases to reach consensus; the results are shown in Table 4.
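As an illustration of criterion (i), agreement on code presence can be computed over all document-code cells, together with Cohen's kappa to correct for chance agreement. This is a generic sketch under our own assumptions (hypothetical document and code names, a binary present/absent judgment per cell); it does not reproduce MAXQDA's implementation, and criteria (ii) and (iii) are not covered.

```python
from itertools import product


def presence_agreement(coder_a, coder_b, documents, codes):
    """Agreement on whether each code is present in each document.

    coder_a, coder_b: sets of (document, code) pairs assigned by each coder.
    Returns (percent agreement, Cohen's kappa) over all document x code cells.
    """
    cells = list(product(documents, codes))
    n = len(cells)
    p_o = sum((c in coder_a) == (c in coder_b) for c in cells) / n  # observed
    p_a = sum(c in coder_a for c in cells) / n  # share of cells coded by A
    p_b = sum(c in coder_b for c in cells) / n  # share of cells coded by B
    p_e = p_a * p_b + (1 - p_a) * (1 - p_b)     # agreement expected by chance
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else 1.0
    return 100 * p_o, kappa


# Hypothetical coding of two documents with three positive/negative subcategories.
docs = ["D1", "D2"]
codes = ["Content (+)", "Content (-)", "Timing (-)"]
a = {("D1", "Content (+)"), ("D2", "Timing (-)"), ("D2", "Content (-)")}
b = {("D1", "Content (+)"), ("D2", "Timing (-)")}
print(presence_agreement(a, b, docs, codes))
```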

3. Results

3.1. Characteristics of the sample

The instruments described above were applied to the analysis of 100 reports derived from the evaluation of 71 different OER belonging to the EDIA project, which correspond to different educational levels: 74% applied to secondary education, 20% to primary education, 2.8% to pre-university stage and the remaining 2.8% to vocational studies. Table 1 provides data in relation to the subject domain and educational stage of the OER considered in the sample.

3.2. Quantitative study

It should be noted that the data obtained through the instruments retained good internal consistency for a randomly selected subset of evaluations. Next, we summarize the most remarkable results of the quantitative analysis.

3.2.1. HEODAR instrument

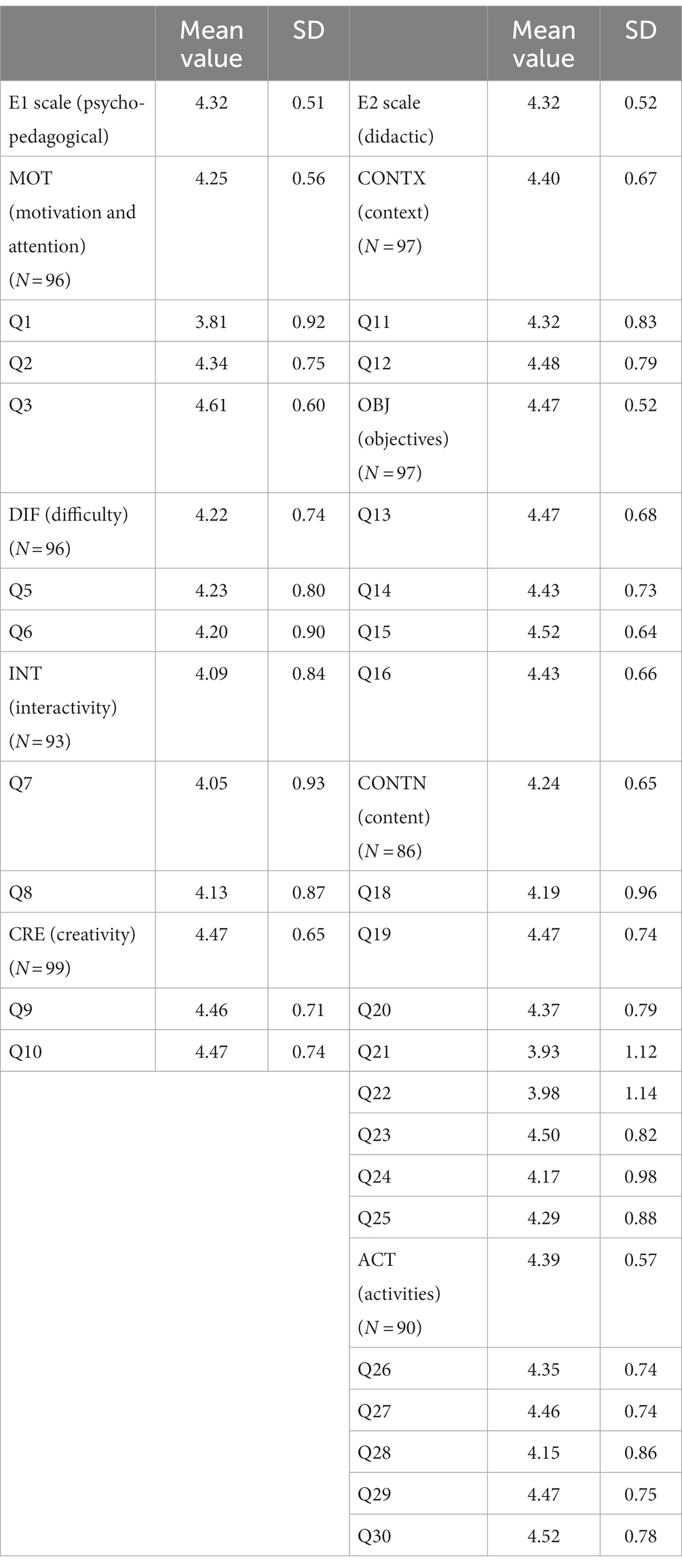

Considering the complete set of data (i.e., evaluations with no missing values in any of the items within each scale), the HEODAR data showed the following results (Table 5).

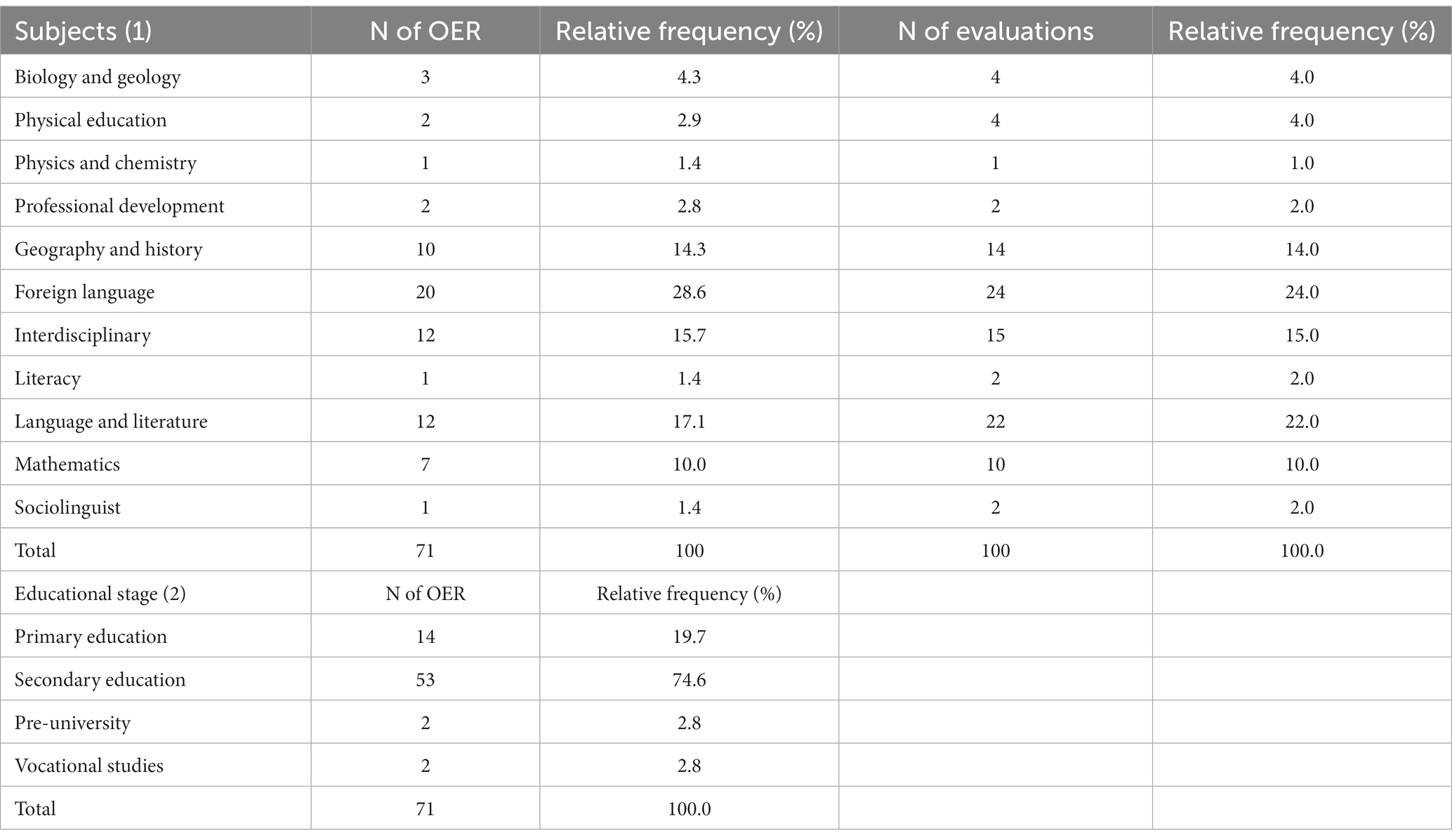

Table 5. Mean values and corresponding standard deviations (SD) obtained for the global scales included in HEODAR and Q32.

The mean values of the global HEODAR scale (i.e., overall evaluation) and of item Q32 reveal that the EDIA OERs are considered of good or very good quality. Approximately 84% of the evaluations scored 4 or higher: of the 88 evaluations considered, only 14 received values below 4 (3 being the lowest mark, assigned to one specific OER). The mean/mode values given to the four global scales reveal good internal consistency of the evaluation performed by each evaluator. That is, the mean values assigned to item Q32 show no significant difference from the corresponding means of the psycho-pedagogical (E1) and didactic (E2) scales. This reveals robust coherence among the evaluators applying the HEODAR questionnaire, indicating high reliability of the data obtained with this instrument and by this group of evaluators. Table 6 shows the values for the psycho-pedagogical and curricular aspects and their subscales.

Table 6. Mean values and corresponding standard deviations (SD) obtained for the E1 and E2 global scales and sub-scales included in the HEODAR instrument.

In agreement with its global value, all the subscales of the E1 scale are rated above 4.0, with the interactivity subscale (INT) being the lowest rated within this group. However, item Q1 within the MOT subscale (which refers to the general appearance and presentation of the OER) receives the lowest mark (3.81), which is even below the mean value of the scale.

Within the MOT subscale, item Q3 obtained higher values than the rest of the items in the subscale. This item refers to students' engagement with the resource, the student's role and what students must do according to the information provided by a particular OER. This result is consistent with the qualitative analysis of the category "Motivation and Attention," which has a frequency of 5.2% (Table 7), with 75% of the comments related to positive aspects. Within this category, we included remarks such as "the theme draws the students' attention" or "It is a sensational OER, very motivating and with a great deal of support."

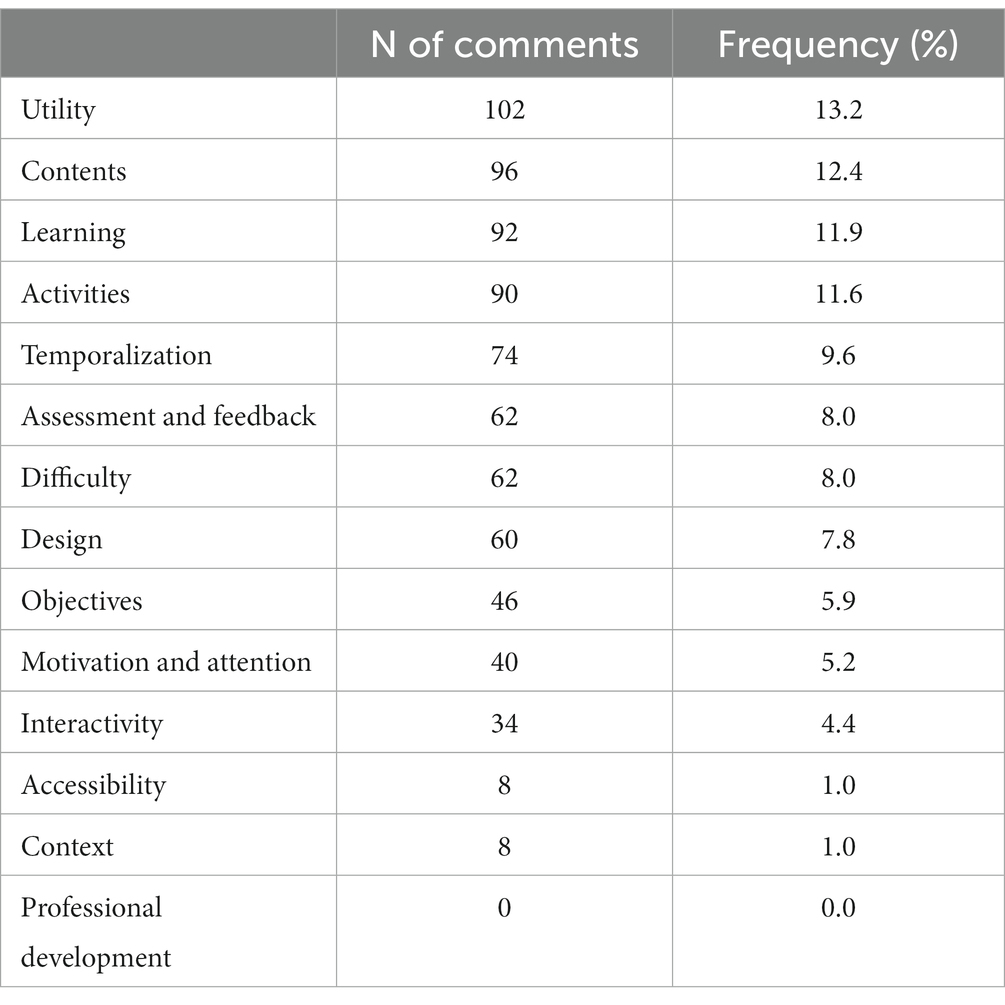

Table 7. Categories and frequencies in the qualitative analysis of the feedback received from evaluators.

Similarly, none of the subscales within the didactic scale receives a significantly lower mark than the mean value for the scale (4.32). The CONTENT subscale obtains a slightly lower value, with items Q21 and Q22 standing out (3.93 and 3.98, respectively):

• Q21: Allowing the students to interact with the content using the resource.

• Q22: Providing complementary information for those students interested in widening and deepening their knowledge.

This specific result, associated with the type of learning, will be discussed later. Nevertheless, the subscale as a whole was well rated (4.24). In the qualitative analysis as well, the content category exhibits one of the highest frequencies (12.40%, Table 7), with 61.3% of the comments coded as positive aspects.

3.2.2. ACHIEVE instrument

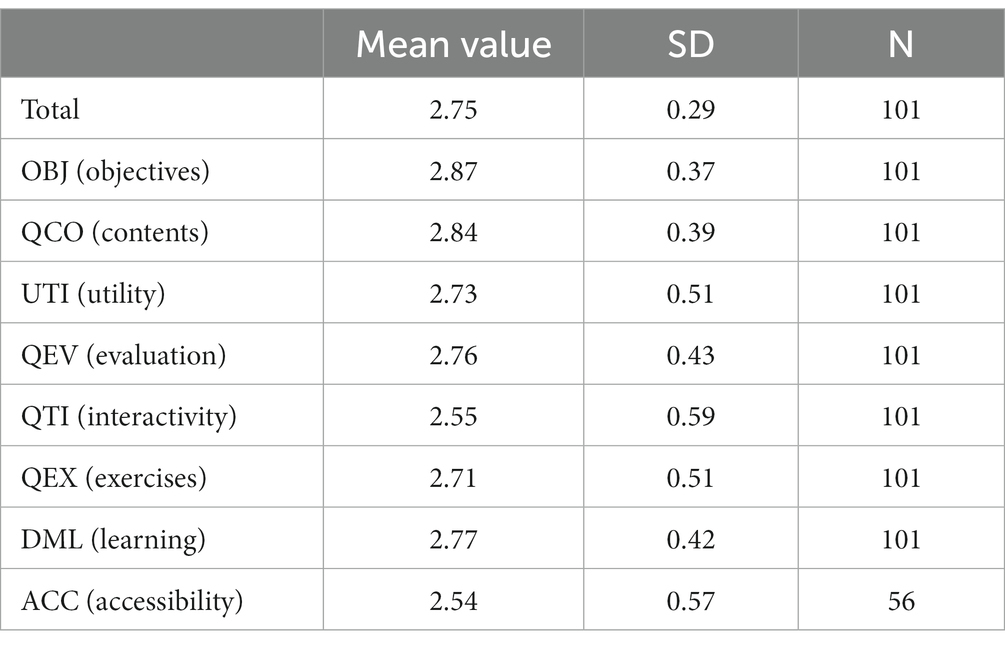

Table 8 shows the mean values and the corresponding standard deviations of the nine dimensions included in the Achieve instrument. Each dimension was evaluated using a rubric describing three different levels of achievement.

Table 8. Mean values and corresponding standard deviations (SD) obtained for each category in the Achieve instrument.

First, it must be noted that the accessibility dimension (ACC) was left blank by almost half of the evaluators, yielding only 56 evaluations out of the total sample (N = 101). This is consistent with the low frequency of the corresponding category in the qualitative analysis (1.03%, Table 7), suggesting that evaluators somewhat neglected this dimension, owing either to a lack of information or to a lack of expertise in the area.

Most of the remaining items received a mean value above 2.0 on the 3-point scale, which denotes the good quality perceived for the EDIA OERs. This is in line with the results obtained from the HEODAR instrument described above. Although the overall score is good (2.75 out of 3), several aspects should be noted. On the one hand, objectives, contents, and learning are among the best rated (2.87, 2.84, and 2.77, respectively), which is supported by the qualitative analysis, where the homonymous categories received a markedly larger share of positive than negative feedback (81.8, 61.3, and 86.0% positive, respectively). On the other hand, interactivity and accessibility are among the lowest rated (2.55 and 2.54, respectively), which is consistent with the qualitative data, where the homonymous categories received a noticeably larger share of negative than positive comments (62.5 and 75.0% negative, respectively).

3.3. Qualitative analysis

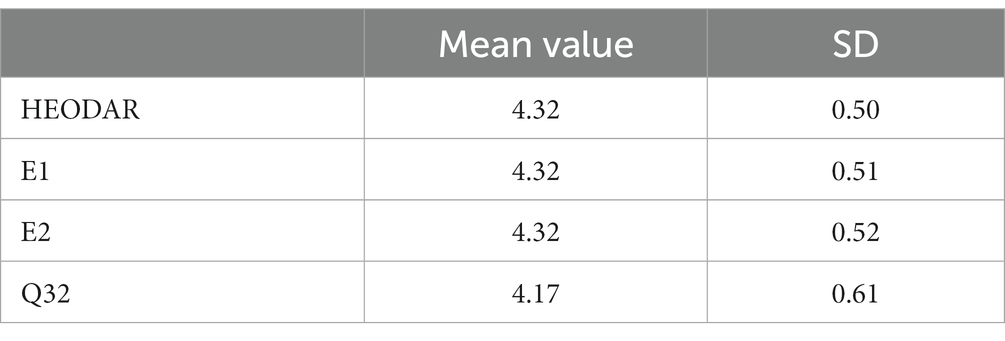

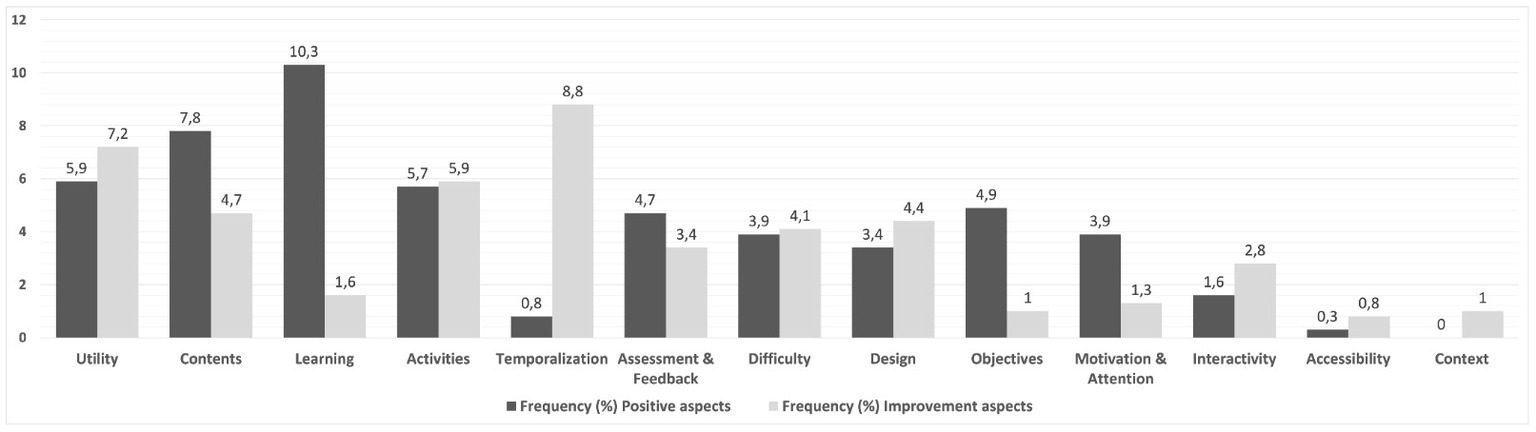

The categories used for the content analysis of the open feedback provided by the evaluators are aligned with themes included in the items of both quantitative instruments, affording an in-depth view of these issues. Table 7 shows that most comments were coded under the categories related to the utility of the resources, the quality of the contents, how learning was orchestrated, and the learning activities suggested. Next, we provide a brief overview by disciplinary area. For simplicity, the subjects are grouped into four main areas: (1) Language (language and literature, foreign language, literacy and sociolinguistics), (2) Social Sciences (geography and history), (3) Sciences (biology and geology, physics and chemistry, and mathematics), and (4) Other (physical education, professional development and interdisciplinary). Since the number of OERs varies widely across areas (Table 1), we interpret these data with caution. However, the asymmetrical OER distribution could be a result in itself. In this sense, the most remarkable aspect is that scientific subjects are underrepresented, with Natural Sciences OERs accounting for just about 6% of the total sample. Figure 2 shows the frequency of comments on positive aspects and aspects to be improved in each of these four areas.

As shown, Sciences is the area with the largest number of positive comments; however, if we break down this subset, we observe that the Natural Science subjects received the poorest evaluations (71.7% and 100% negative feedback for biology and geology and for physics and chemistry, respectively), while only 23.3% of the feedback on the mathematics OERs was negative. Considering the whole set of OERs, the best ranked were those for physical education (included in "Other") and literature (included in "Language"), which had the highest positive/negative ratios among all evaluated OERs. To identify strengths and aspects for improvement in the repository, Figure 3 shows the frequency of comments received in each category.

Figure 3. Frequency (%) of comments on positive aspects and aspects to be improved in the EDIA repository.

The most valued aspects of the repository relate to the categories learning, contents, and objectives, whereas the utility of the OERs and their timing and scheduling (i.e., temporalization) appear to need improvement. See Supplementary Table S2 for the descriptors included in each category.
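The two figures reported for each category in this section (its share of all coded comments, and the share of its comments that are positive) can be computed as in the following sketch; the coded segments and category names shown are hypothetical, not the study's data.

```python
from collections import Counter


def category_profile(segments):
    """Frequency (%) of each category and share (%) of its comments that are positive.

    segments: list of (category, polarity) pairs, polarity being "+" or "-".
    """
    total = len(segments)
    per_category = Counter(cat for cat, _ in segments)
    positives = Counter(cat for cat, pol in segments if pol == "+")
    return {
        cat: (100 * n / total, 100 * positives[cat] / n)
        for cat, n in per_category.items()
    }


# Hypothetical coded comments.
segments = ([("Content", "+")] * 3 + [("Content", "-")]
            + [("Timing", "-")] * 4 + [("Learning", "+")] * 2)
for cat, (freq, pos) in category_profile(segments).items():
    print(f"{cat}: {freq:.1f}% of comments, {pos:.1f}% positive")
```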

Moreover, the analysis per subject shows that the Language OERs received more than 60% of comments for improvement on psycho-pedagogical aspects, such as context, design, difficulty level and assessment/feedback. Similarly, the Social Sciences resources could also be improved in psycho-pedagogical aspects (more than 40% of comments for improvement in motivation or learning). Scientific OERs, in contrast, were mostly evaluated negatively with regard to didactic aspects, such as objectives or activities (more than 30% in both cases), and psycho-pedagogical ones (utility, 39.1%). Note that "Foreign Language" concentrates all the negative feedback in the accessibility category simply because this aspect was explicitly evaluated only for those OERs.

In what follows, we draw on these results to discuss and answer the research questions posed above.

4. Discussion and conclusion

In the context of OERs, interoperability refers to the capability of a resource to facilitate the exchange and use of information, allowing reuse and adaptation by others. On the one hand, accessibility shows a mean value of 2.55 out of 3 in the Achieve instrument (lower than the other items) and the highest amount of missing data (only 54 out of 101 evaluations), with few comments from the evaluators (frequency 1%). The missing data and low frequency of comments suggest that accessibility is somewhat neglected by evaluators. On the other hand, 54.9% of the comments on perceived utility were negative, referring to technical aspects and to the lack of appropriate educational metadata describing the educational level and age group. According to some authors (Santos-Hermosa et al., 2017), this is a key aspect to consider when discussing quality issues, since OER metadata are used to assess the educational relevance of the OER and of the repository to which it belongs, as they determine its likelihood of being used.
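A repository could screen for the metadata gap described above with a simple completeness check. The sketch below assumes a flat record layout and uses field names loosely modeled on the "Educational" category of the IEEE Learning Object Metadata (LOM) standard (context, typical age range); both the layout and the choice of required fields are hypothetical and are not a LOM binding or the EDIA metadata schema.

```python
# Hypothetical required fields, loosely named after the IEEE LOM "Educational"
# category (context ~ educational level, typicalAgeRange ~ age group).
REQUIRED_EDUCATIONAL_FIELDS = ("context", "typicalAgeRange")


def missing_educational_metadata(record):
    """List the required educational fields that are absent or empty in a record."""
    educational = record.get("educational", {})
    return [field for field in REQUIRED_EDUCATIONAL_FIELDS
            if not educational.get(field)]


def audit(records):
    """Map each record title to its missing fields, omitting complete records."""
    report = {}
    for record in records:
        missing = missing_educational_metadata(record)
        if missing:
            report[record.get("title", "<untitled>")] = missing
    return report


# Hypothetical records, not actual EDIA metadata.
records = [
    {"title": "OER A", "educational": {"context": "school",
                                       "typicalAgeRange": "12-14"}},
    {"title": "OER B", "educational": {"context": "school"}},
    {"title": "OER C"},
]
print(audit(records))
```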

Another issue affecting the utility of OERs is temporalization. Item Q17 in HEODAR refers to the estimated time for the use/implementation of the OER. This item received a mean value of 3.69, noticeably lower than the remaining items. This is in line with the qualitative data, where temporalization was frequently commented on (9.56%) and most of the comments were negative (91.9%); for example, "the number of sessions should be considerably increased" or "it dedicates too much time to some particular activities." Since experts highlight time and temporalization as critical issues that might significantly affect the usability of resources, we suggest that attention to granularity would enhance OER flexibility. Articulating digital resources into minimal meaningful pieces that can be used independently and combined with others may optimize time and increase OER versatility and, thus, usability (Ariza and Quesada, 2011).

Regarding the pedagogical and didactic design of OER, Van Assche (2007) defined interoperability as the ability of two systems to interoperate. Experts in didactics claim that this term should be interpreted in a wider sense, also including semantic, pragmatic, and social interoperability related to the educational systems where OER operate. Semantic interoperability refers to the way information is given and interpreted, while pragmatic and social interoperability address, among others, the appropriateness and relevance of the pedagogical goals, their content quality, and the perceived utility of the resources (Ariza and Quesada, 2011). From this perspective, we can conclude that there are aspects related to the pedagogical and didactic dimensions that somehow influence the semantic, pragmatic, and social interoperability of the OER under evaluation. We will further discuss them based on the results obtained.

The quantitative results reveal that the best-rated aspect was the learning objectives (4.47 out of 5 in HEODAR and 2.87 out of 3 in the Achieve instrument). This rating considered whether the objectives were aligned with the OER content and the learning activities. The learning activities were also evaluated explicitly (2.71 out of 3) and were among the most frequently commented aspects, with a balance between positive and negative feedback. Regarding aspects to be improved, evaluators referred to the nature of the activities: too repetitive, too complex or too diverse, or not aligned with the objectives. Conversely, some of the positive comments referred to an adequate number and sequence of activities or to their capacity to address different learning styles.

Regarding the quality of OER contents and its impact on the social interoperability of the resources, content quality is the second most frequently commented category (12.4% of the comments), and 61.3% of these comments are positive. Evaluators referred to several features of the information provided: its clarity, appropriateness, comprehensibility, and level of detail. They also commented on the presence or absence of complementary information for a complete and deep understanding of the topic, the reliability of the references and sources, and the adequacy of the OER for the target group. The quantitative data also show a very positive evaluation of content quality, with high scores in both instruments (4.24 out of 5 in HEODAR and 2.84 out of 3 in Achieve).

Under the interactivity dimension, the evaluation instruments include items that determine the students’ roles in the learning process according to the kind of activities, learning scenarios, and methodological approaches used. This dimension shows mean subscale values of 4.09 out of 5 in HEODAR and 2.55 out of 3 in the Achieve instrument. In the qualitative analysis, comments suggesting improvements prevail.

Interactivity is sometimes related to opportunities for formative assessment. Evaluation is explicitly considered in the Achieve instrument, where it obtained a mean value of 2.76. This indicates that its quality is closer to level 3 (superior) than to level 2 (limited). The same applies to Item Q31 in HEODAR, which received a mean value of 4.01 out of 5; this item refers to the feedback given to students as formative assessment. Assessment was mentioned in 8.01% of the experts' comments, and 41.9% of these referred to aspects that could be improved: “there are no explicit indications for proper feedback after doing each activity/task, as well as the final project” or “self-evaluation resources should be included.” These results are useful for guiding the improvement of evaluation in the EDIA resources analyzed so far.

As described in the previous section, the psycho-pedagogical dimension refers to the type of learning taking place, the level of difficulty, students’ motivation and attention, and the learning context. In the qualitative analysis, 11.9% of the experts’ comments were coded under the category “learning,” most of them positive (86.9%). These comments highly appreciated the opportunities to conduct collaborative work, promote autonomous learning, and develop key competences while achieving transversal learning outcomes in a meaningful way. Items Q21 and Q22 in the HEODAR instrument explicitly evaluated enhanced learning, with mean values of 3.93 and 3.98 out of 5, respectively. Although these values indicate good quality, they are among the lowest mean values.

The overall evaluation of the psycho-pedagogical aspects in the HEODAR and Achieve instruments indicates very high quality, leaving little room for improvement. The open feedback from experts contains more positive comments than negative ones. Most of the comments pointing out aspects that could be improved fall within the category “difficulty.” They concern specific OERs in which some features are not considered appropriate for the target students. These features include the mechanism for eliciting and using students’ prior knowledge, the language employed, the cognitive demand, and the progression of the learning sequence.

Finally, had we limited this study to the quantitative data, we could have concluded nothing other than the high quality of the EDIA repository. The lowest mean values were 3.93 out of 5 in HEODAR for item Q21 about interactivity and 2.54 out of 3 in the Achieve instrument for OER accessibility. The qualitative data, however, provide a rich, detailed picture. This picture allows us to better describe the characteristics of the EDIA OER, to identify which aspects might still be improved, and to see how to take a step forward toward excellence.

4.1. Final remarks

This work responds to one of the challenges associated with the adoption of OER-based education pointed out by UNESCO: the lack of any clear quality assurance mechanisms for repositories, which has resulted in unclear standards and poor-quality distance education (UNESCO, 2010).

A mixed-methods approach involving both quantitative and qualitative methods combines the affordances of different techniques and compensates for their limitations. It allows us to triangulate the information obtained from different sources, which strengthens the consistency and reliability of the results and provides a richer perspective. In this sense, the qualitative analysis of the constructive feedback from evaluators offered an in-depth view of the general quality of the resources. This view widened the perspective that two previously validated quantitative instruments alone could offer. Although the quantitative results show high mean values in all the quality dimensions evaluated, the qualitative approach allowed us to (1) triangulate and verify the results and (2) obtain comprehensive information about which aspects should be improved, why, and how. Together, these results give us a better understanding of how a broad group of key stakeholders perceives OERs. They also show how OERs can be improved, moving a step closer to realizing their full potential to provide inclusive, high-quality education.

Considering what has been previously presented and discussed, we can draw several conclusions in relation to the characteristics of the EDIA repository:

The interoperability of EDIA OERs, though quite good, may be improved by enhancing the accessibility and usability of resources. The dimensions related to interactivity and accessibility receive the lowest scores on the quantitative scales. They also attract a significant number of comments for improvement in the evaluators' open feedback. Usability is a general term that depends on a wide range of aspects. These include accessibility and technical interoperability, as well as the associated metadata and OER granularity. Time seems to be a controversial issue in the lesson planning of several OERs, since evaluators often point to either an overestimation or an underestimation of the time needed to implement a particular OER in the classroom. We consider that one way to address these time issues would be to increase the granularity of EDIA OERs.

On the other hand, usability depends on the perceived quality of educational resources. This study shows that the best-evaluated dimensions are the quality of the objectives, the content, and the type of learning fostered by the evaluated OER. This is reflected in the mean values in the quantitative instruments and in the frequency of positive comments in the categories identified through the qualitative analysis.

In relation to psycho-pedagogical aspects, quantitative and qualitative data show that EDIA OERs promote students’ engagement and motivation, the acquisition of key abilities, and deep learning. The feedback from evaluators differs depending on the school subject. In general, and with some exceptions, comments for improvement on social sciences OERs mainly concern psycho-pedagogical aspects (motivation, attention, interactivity, and creativity). For science-related OERs, improvements are suggested mainly in the didactic domain (objectives, content, and activities). In line with this observation, science-related OERs are scarce in the study sample. Given the small number of these resources and their poorer evaluations, there is a clear need to increase design efforts in this field. Such efforts should stimulate the development and exchange of open materials under the philosophy of OER. This would enhance the co-creation and continuous improvement of science teaching and learning at the national and international levels.

Finally, this work adds to the research evidence needed to inform future steps. It also helps optimize public efforts to make the most of the OER paradigm, enhance learning, and promote inclusive and equitable quality education. Nevertheless, the limitations of our work must be taken into account. These mainly concern the conclusions drawn for some disciplines, for which the sample size was small owing to the constraints of the EDIA repository itself. This aspect has been identified as an area for improvement in the EDIA project, which is now being improved in this respect as well.

In relation to future lines of work, we are extending the collaboration between the Spanish Ministry of Education and our research group. The aim is to undertake the study of the impact of EDIA OERs on students’ motivation and learning. We also intend to develop a better understanding of the key issues related to teachers’ engagement in the design, adaptation, and implementation of OER.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MR was responsible for the conceptualization of the manuscript, the project administration and supervision, the investigation, writing the original draft, and reviewing and editing the final version. AA was responsible for the conceptualization of the manuscript, the visualization, the research methodology, the investigation, software use, the formal analysis, and reviewing and editing the final version. AQ was responsible for the visualization, the research methodology, the investigation, software use, the data curation, the validation of the instruments, the formal analysis, writing part of the original draft, and reviewing and editing the final version. PR was responsible for the visualization, the research methodology, the investigation, software use, the formal analysis, writing the original draft, and reviewing and editing the final version. All authors contributed to the article and approved the submitted version.

Acknowledgments

The authors acknowledge funding from the Spanish National Centre for Curriculum Development through Non-Proprietary Systems (CEDEC), which depends on the Spanish Ministry of Education, through the transfer contract project with the University of Jaen with reference 3858.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1082577/full#supplementary-material


References

Almendro, D., and Silveira, I. F. (2018). Quality assurance for open educational resources: the OER trust framework. Int. J. Learn. Teach. Educ. Res. 17, 1–14. doi: 10.26803/ijlter.17.3.1

Ariza, M. R., and Quesada, A. (2011). “Interoperability: standards for learning objects in science education” in Handbook of research on E-learning standards and interoperability: frameworks and issues. eds. F. Lazarinis, S. Green, and E. Pearson (Hershey, PA: IGI Global), 300–320.

Baas, M., Admiraal, W., and van den Berg, E. (2019). Teachers’ adoption of open educational resources in higher education. J. Interact. Media Educ. 2019:9. doi: 10.5334/jime.510

Baas, M., van der Rijst, R., Huizinga, T., van den Berg, E., and Admiraal, W. (2022). Would you use them? A qualitative study on teachers' assessments of open educational resources in higher education. Internet High. Educ. 54:100857. doi: 10.1016/j.iheduc.2022.100857

Birch, R. (2011). Synthesized from eight rubrics developed by ACHIEVE. Available at: www.achieve.org/oer-rubrics.

Bodily, R., Nyland, R., and Wiley, D. (2017). The RISE framework: using learning analytics to automatically identify open educational resources for continuous improvement. Int. Rev. Res. Open Distrib. Learn. 18, 103–122. doi: 10.19173/irrodl.v18i2.2952

Cechinel, C., Sánchez-Alonso, S., and García-Barriocanal, E. (2011). Statistical profiles of highly-rated learning objects. Comput. Educ. 57, 1255–1269. doi: 10.1016/j.compedu.2011.01.012

Kerres, M., and Heinen, R. (2015). Open informational ecosystems: the missing link for sharing educational resources. Int. Rev. Res. Open Dist. Learn. 16, 24–39. doi: 10.19173/irrodl.v16i1.2008

Mncube, L. S., and Mthethwa, L. C. (2022). Potential ethical problems in the creation of open educational resources through virtual spaces in academia. Heliyon 8:e09623. doi: 10.1016/j.heliyon.2022.e09623

Mohamed Hashim, M. A., Tlemsani, I., and Duncan, M. R. (2022). A sustainable university: digital transformation and beyond. Educ. Inf. Technol. 27, 8961–8996. doi: 10.1007/s10639-022-10968-y

Nascimbeni, F., and Burgos, D. (2016). In search for the open educator: proposal of a definition and a framework to increase openness adoption among university educators. Int. Rev. Res. Open Distrib. Learn. 17, 1–17. doi: 10.19173/irrodl.v17i6.2736

Open Educational Resources Evaluation Tool Handbook (2012). Available at: https://www.achieve.org/files/AchieveOEREvaluationToolHandbookFINAL.pdf.

Orozco, C., and Morales, E. M. (2016). Psychometric testing for HEODAR tool. TEEM '16: Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, pp. 163–170.

Pardo, A., Ellis, R., and Calvo, R. A. (2015). Combining observational and experiential data to inform the redesign of learning activities. LAK’15 Proceedings of the Fifth International Conference on Learning Analytics and Knowledge, pp. 305–309.

Pattier, D., and Reyero, D. (2022). Contributions from the theory of education to the investigation of the relationships between cognition and digital technology. Educ. XX1 25, 223–241. doi: 10.5944/educxx1.31950

Santos-Hermosa, G., Ferran-Ferrer, N., and Abadal, E. (2017). Repositories of open educational resources: an assessment of reuse and educational aspects. Int. Rev. Res. Open Dist. Learn. 18, 84–120. doi: 10.19173/irrodl.v18i5.3063

Stein, M. S., Cechinel, C., and Ramos, V. F. C. (2023). Quantitative analysis of users’ agreement on open educational resources quality inside repositories. Rev. Iberoam. Tecnol. Aprend. 18, 2–9. doi: 10.1109/RITA.2023.3250446

UNESCO. (2010). Global trends in the development and use of open educational resources to reform educational practices.

Van Assche, F. (2007). Roadmap to interoperability for education in Europe. Available at: http://insight.eun.org/shared/data/pdf/life_book.pdf

VERBI Software. (2020). MAXQDA 2020 [computer software]. Berlin, Germany: VERBI Software. Available from maxqda.com.

Wiley, D., and Green, C. (2012). “Why openness in education” in Game changers: education and information technologies, 81–89. Available at: https://library.educause.edu/resources/2012/5/chapter-6-why-openness-in-education

Yuan, M., and Recker, M. (2015). Not all rubrics are equal: a review of rubrics for evaluating the quality of open educational resources. Int. Rev. Res. Open Distrib. Learn. 16, 16–38. doi: 10.19173/irrodl.v16i5.2389

Keywords: open educational resources, interoperability, educational design, quality analysis, research-based improvement

Citation: Romero-Ariza M, Abril Gallego AM, Quesada Armenteros A and Rodríguez Ortega PG (2023) OER interoperability educational design: enabling research-informed improvement of public repositories. Front. Educ. 8:1082577. doi: 10.3389/feduc.2023.1082577

Edited by:

Tom Crick, Swansea University, United Kingdom

Reviewed by:

Lawrence Tomei, Robert Morris University, United States
Hai Wang, Saint Mary’s University, Canada

Copyright © 2023 Romero-Ariza, Abril Gallego, Quesada Armenteros and Rodríguez Ortega. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pilar Gema Rodríguez Ortega, mrodriguez1@uco.es

Marta Romero-Ariza

Ana M. Abril Gallego

Antonio Quesada Armenteros

Pilar Gema Rodríguez Ortega