- 1Department of Clinical Psychology and Psychotherapy, Institute of Psychology and Education, University Ulm, Ulm, Germany

- 2Department of Rehabilitation Psychology and Psychotherapy, Institute of Psychology, Albert-Ludwigs University Freiburg, Freiburg, Germany

- 3Medical Psychology and Medical Sociology, Faculty of Medicine, Albert-Ludwigs University Freiburg, Freiburg, Germany

Background: Accurate and timely diagnostics are essential for effective mental healthcare. Given a resource- and time-limited mental healthcare system, novel digital and scalable diagnostic approaches such as smart sensing, which utilizes digital markers collected via sensors from digital devices, are explored. While the predictive accuracy of smart sensing is promising, its acceptance remains unclear. Based on the unified theory of acceptance and use of technology, the present study investigated (1) the effectiveness of an acceptance facilitating intervention (AFI), (2) the determinants of acceptance, and (3) the acceptance of adults toward smart sensing.

Methods: The participants (N = 202) were randomly assigned to a control group (CG) or intervention group (IG). The IG received a video AFI on smart sensing, and the CG a video on mindfulness. A reliable online questionnaire was used to assess acceptance, performance expectancy, effort expectancy, facilitating conditions, social influence, and trust. The self-reported interest in using and the installation of a smart sensing app were assessed as behavioral outcomes. The intervention effects on acceptance were investigated using t-tests for observed data and latent structural equation modeling (SEM) with full information maximum likelihood to handle missing data. The behavioral outcomes were analyzed with logistic regression. The determinants of acceptance were analyzed with SEM. The root mean square error of approximation (RMSEA) and standardized root mean square residual (SRMR) were used to evaluate the model fit.

Results: The intervention did not affect the acceptance (p = 0.357), interest (OR = 0.75, 95% CI: 0.42–1.32, p = 0.314), or installation rate (OR = 0.29, 95% CI: 0.01–2.35, p = 0.294). The performance expectancy (γ = 0.45, p < 0.001), trust (γ = 0.24, p = 0.002), and social influence (γ = 0.32, p = 0.008) were identified as the core determinants of acceptance explaining 68% of its variance. The SEM model fit was excellent (RMSEA = 0.06, SRMR = 0.05). The overall acceptance was M = 10.9 (SD = 3.73), with 35.41% of the participants showing a low, 47.92% a moderate, and 10.41% a high acceptance.

Discussion: The present AFI was not effective. The low to moderate acceptance of smart sensing poses a major barrier to its implementation. The performance expectancy, social influence, and trust should be targeted as the core factors of acceptance. Further studies are needed to identify effective ways to foster the acceptance of smart sensing and to develop successful implementation strategies.

Clinical Trial Registration: identifier 10.17605/OSF.IO/GJTPH.

1. Introduction

Mental disorders are rising in prevalence worldwide (1–3) and constitute a leading cause of years lived with disability (4) and economic costs (5, 6). Effective treatment options exist ranging from face-to-face treatment (e.g., cognitive behavioral therapy) (7, 8) and pharmacological treatment (9, 10) to digital mental health interventions (11, 12). However, a fundamental prerequisite to treatment is an accurate diagnosis and the identification of clinically relevant symptoms (13–15). Facing economic pressure and limited resources in many healthcare systems (16–18), researchers develop novel digital diagnostic procedures such as smart sensing aiming for a scalable, accurate, and time-efficient diagnosis (19–22).

In the context of mental health diagnoses, smart sensing is used to predict mental disorders and mental symptoms from features generated based on digital markers collected via the smartphone or other wearables (e.g., time stayed at home derived from the GPS sensor) (23, 24). Recent studies show the high potential of smart sensing (20, 21, 25–31). For instance, depression status could be detected with over 90% accuracy solely based on smartphone data (21). Smart sensing also achieves promising results in other mental disorders (e.g., psychosis spectrum or bipolar disorder) (30–35).

However, before novel diagnostic approaches such as smart sensing are introduced into clinical routine care, it is important to assess their acceptance and to identify the factors associated with using them. The unified theory of acceptance and use of technology (UTAUT) (36) is a widely applied framework for the use and acceptance of technology (37, 38). Building on an analysis of several behavior change models, UTAUT identifies four core determinants of acceptance: performance expectancy (the perceived personal benefit derived from using the technology), effort expectancy (the anticipated ease of use), social influence (the perception that others consider the technology worthwhile), and facilitating conditions (the expected support and availability of practical resources) (36, 37). Given the successful validation of the UTAUT in various contexts [e.g., Internet finance (39), electronic health records (40), or digital health interventions (38)], it may also provide a strong framework to investigate the acceptance of smart sensing and its determinants. In addition, trust has been identified as an important factor influencing the acceptance in application areas of technology and AI-augmented systems [e.g., automatic driving (41)]. Based on the trust concept in automated technology (42), we define trust in smart sensing as the attitude that a smart sensing system can help achieve an individual's goal in an uncertain or vulnerable situation. For instance, a smart sensing system for mental health could notify users that they show a high risk for depression and recommend action (e.g., changing routines or visiting a therapist). The users could either trust the system (e.g., by following the recommended actions) or distrust it (e.g., by rejecting the recommendations). Similar to the findings in automatic driving [e.g., (43)], trust might also affect the acceptance of smart sensing. However, the role of trust in the acceptance of smart sensing has not been examined.

Besides understanding the determinants of acceptance, opportunities to foster the acceptance of smart sensing need to be explored for its successful implementation. In the past, acceptance facilitating interventions (AFIs) have been shown to be effective in influencing the acceptance of novel approaches (e.g., Internet-based or blended psychotherapy) (44–48). AFIs are usually grounded on an acceptance model such as UTAUT (36, 37) or other models [e.g., health action process approach (49)]. Based on the underlying theoretical background (e.g., UTAUT), assumed determinants of acceptance are directly targeted to increase the acceptance: for instance, in an AFI constructed based on UTAUT, the performance expectancy could be targeted by highlighting the personal benefit, effort expectancy by showing the novel approach in action, social influence by providing reports of other users, and facilitating conditions by addressing concerns about practical resources or the availability of support. In addition to their theoretical background, AFIs can be characterized by their presentation formats (e.g., written informative text, expert talks, videos, or one-on-one conversations). However, while AFIs were explored in various settings (44–48), it is unknown whether they are effective in the context of smart sensing.

Given the success of the UTAUT model in the context of technology and its application in AFIs in other contexts [e.g., Internet- and mobile-based interventions (38)], the present study examines the effectiveness of a UTAUT-based AFI on the acceptance of smart sensing compared with an attention control group.

1. We hypothesize that (a) the self-reported acceptance, (b) interest in using a smart sensing app, and (c) installation of a smart sensing app will be higher in the intervention group compared with the control group.

In addition, the present study aims to apply the UTAUT framework extended by a trust factor in the context of smart sensing to investigate the core determinants of the acceptance of smart sensing.

2. We hypothesize that the UTAUT factors are determinants of acceptance of smart sensing.

Lastly, the present study investigates the general level of acceptance of smart sensing (i.e., unmanipulated acceptance in the control group) and answers the following question:

3. What is the acceptance of smart sensing (i.e., self-reported acceptance, interest in using a smart sensing app, installation of a smart sensing app) in the context of mental health?

2. Methods and materials

2.1. Study design and sample

We report on a randomized controlled trial with a single post-assessment to investigate the effect of the AFI between an intervention group (IG) and a control group (CG). The participants were allocated to IG or CG using a 1:1 randomization. The randomization was conducted automatically by the online survey platform LimeSurvey. The allocation sequence was concealed for the participants and trial personnel until the participants were enrolled and assigned to the groups. All procedures were approved by the ethics committee of Ulm University (462/20—FSt/Sta) and registered at OSF (10.17605/OSF.IO/GJTPH). The registration took place after all the assessment procedures were finalized and recruitment had started. The data were not accessed or analyzed before registration.

The sample size planning assumed that the AFI affects the acceptance, and the increase in acceptance carries over to an increased usage of a smart sensing app (installation of a smart sensing application: yes/no; see measures and outcomes). A usage rate of 20% in the CG and 33% in the IG was expected. To detect this effect using logistic regression with a power of 80% and an α = 5%, an effective sample of N = 124 was needed. However, due to a technical limitation, the smart sensing app was only functional on Android devices. To avoid potential bias in the investigation of acceptance and determinants of acceptance by excluding users of other systems (e.g., iOS), the possession of an Android smartphone was not defined as an inclusion criterion. Instead, the recruitment was continued until a maximum of N = 206 participants to adjust for the forced dropout of Apple iPhone users (or other operating systems).

2.2. Inclusion criteria and data collection procedures

All procedures and data collection were conducted online. Aiming to recruit participants from the general population, the participants were recruited and forwarded to the online survey via digital (e.g., email lists, social media posts) and analog (e.g., flyers, on-site recruitment) ways from April 2021 to June 2021. The flyers and on-site recruitment included public (e.g., libraries, fitness centers) and university-related places at Ulm and Freiburg in Germany.

Inclusion criteria were as follows: (1) being of legal age (≥18 years), (2) having Internet access, (3) providing informed consent, and (4) agreement to data processing procedures according to the European General Data Protection Regulation. The online survey was aborted if the criteria were not fulfilled. The eligible participants first answered socio-demographic and mental health questionnaires followed by their automatic randomization to one of two videos (IG or CG; see description below). The participants were not explicitly informed about their group allocation. However, they were aware of the presence of two different conditions due to the informed consent process. After the video, the acceptance of smart sensing and the assumed determinants were assessed (see outcomes below). In addition, the participants could sign up for a study, in which they could use a smart sensing app. The sign-up process did not include any intervention content. The participants received only the information that the university is conducting a smart sensing study without any further explanation of smart sensing or, e.g., how they could benefit from using the smart sensing app. The participants were prompted to indicate whether they are interested in participating in that smart sensing study. The participants reporting interest were forwarded to a survey page, where they could provide their email to be invited to the smart sensing study. The information about expense allowance in the smart sensing study was included on the forwarded survey page. This process was the same in IG and CG.

After completion of this acceptance study, the participants who were psychology students at Ulm University and the University of Freiburg could receive credits for their course of studies, and all the participants could participate in a lottery for one 20 Euro voucher.

2.3. Intervention and control condition

The experimental intervention was a video with a total duration of 3:04 min. The structure and content of the video were based on the UTAUT model and focused on the assumed determinants of acceptance: performance expectancy (e.g., presenting application areas such as early recognition of mental health symptoms), effort expectancy (information on effort: e.g., data are mainly collected passively without additional effort for the user), facilitating conditions (information on needed resources: e.g., the broad availability of smartphones), and social influence (e.g., the inclusion of user reports and why others think smart sensing is use-worthy). First, an expert (YT) explained the concept of smart sensing and application areas in healthcare. The expert talk was structured in the following parts: (1) “What is smart sensing?” (2) “Which data is collected?” and (3) “Aims and application areas.” Afterward, three examples were presented of how smart sensing applications could be used in daily life and which benefit it provides for the users. The examples focused on (a) sleep monitoring, (b) physical activity, and (c) general wellbeing. A summary of the key concepts and examples presented in the AFI can be found in Supplementary Material 1.

In the control condition, the participants received a video with an expert (EM) explaining the concept of mindfulness, its influence on health, and suggestions on how mindfulness can be integrated into daily life (e.g., meditation exercises). The total duration of the control video was 3:00 min.

2.4. Measures and outcomes

2.4.1. Participant characteristics

We assessed the age, gender, nationality, and personality to describe the participant characteristics. Personality assessment was conducted using the 10-item version of the Big Five Inventory [BFI-10; (50)]. The BFI-10 assesses openness, conscientiousness, extraversion, agreeableness, and neuroticism with a 5-point Likert scale from “fully disagree” to “fully agree.” The BFI-10 shows good reliability and validity (50). In addition, the eight-item version of the patient health questionnaire (PHQ-8) and the seven-item version of the generalized anxiety disorder questionnaire (GAD-7) were used for a reliable assessment of depression (PHQ-8) and anxiety (GAD-7) symptoms in the last 2 weeks (51, 52). The items (e.g., feeling nervous, anxious, or on edge) were answered on a scale from 0 (“not at all”) to 3 (“nearly every day”). According to their scoring procedures, the sum scores for PHQ-8 and GAD-7 were calculated.

2.4.2. Acceptance

Acceptance was operationalized in three ways. First, it was assessed as a continuous dimension with the UTAUT questionnaire (36–38) consisting of four items rating the intention to use smart sensing on a 5-point Likert scale ranging from “fully disagree” to “fully agree” (=self-reported acceptance). The items are presented in Supplementary Material 2. Second, it was determined by the number and percentage of the participants registering for the study (=interest), in which they could use a smart sensing app, and third the actual number and percentage of installation of the smart sensing app were assessed as a direct behavioral outcome.

2.4.3. Potential determinants of acceptance

The performance expectancy (three items), effort expectancy (three items), social influence (two items), and facilitating conditions (two items) were assessed as potential determinants of acceptance with the UTAUT questionnaire (36–38). All items were rated on a 5-point Likert scale from “fully disagree” to “fully agree.” The items are presented in Supplementary Material 2.

In addition, trust (e.g., trust in smart sensing-based treatment recommendations) was assessed with the short version of the German automation trust scale (41, 53). The scale was originally developed in the context of automated driving and adapted to the context of digital health. It consists of seven items (e.g., “I trust the system”) rated on a 7-point Likert scale from “fully disagree” to “fully agree.”

2.5. Analysis

2.5.1. Intervention effects

Intervention effects were operationalized on three levels: (1) the self-reported acceptance in the UTAUT questionnaire, (2) the reported interest rate to use a smart sensing app, and (3) the installation of a smart sensing app.

The self-reported acceptance of smart sensing was analyzed by investigating the mean difference between IG and CG in the observed data using an unpaired t-test. In addition, we investigated the intervention effect on acceptance following the intention-to-treat principle using structural equation modeling (SEM). First, a measurement model was defined consisting of the latent factors for all items of acceptance, performance expectancy, effort expectancy, facilitating conditions, social influence, and trust. In all SEM analyses, the root mean square error of approximation (RMSEA) as a non-centrality parameter and the standardized root mean square residual (SRMR) as a residual index were used to assess the goodness of fit due to the tendency of the χ2-test to reject misspecified models too harshly (54–56). Following the established guidelines, cut-off values of RMSEA ≤ 0.06 and SRMR ≤ 0.08 were chosen to determine a good model fit (57). Full information maximum likelihood was used to handle missing data (58). Robust (Huber–White) standard errors were obtained. In the second step of the SEM analysis, a regression of the latent acceptance factor on the group variable (dummy coded: CG = 0, IG = 1) was introduced. The path coefficient of the dummy-coded group variable on the latent acceptance factor was the parameter of interest to determine the effect of the intervention on the latent level.

The intervention effects on the interest rates (dummy coded outcome: 0: not interested, 1: interested) were investigated with a logistic regression model. Odds ratios were reported as effect sizes. Potential differences in the installation rate of a smart sensing app were analyzed analogously.
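As an illustration of the effect size used here: in a logistic regression with a single dummy-coded predictor, the exponentiated slope equals the cross-product odds ratio of the 2×2 table. The following minimal Python sketch computes that odds ratio with a Wald confidence interval. The function name `odds_ratio_2x2`, the use of a Wald interval, and the coding of non-responders as "not interested" are assumptions of this sketch and are not taken from the study's analysis code, which was written in R.

```python
import math


def odds_ratio_2x2(events_ig, n_ig, events_cg, n_cg, z=1.96):
    """Odds ratio (IG vs. CG) with a Wald confidence interval.

    Hypothetical helper: for one binary predictor, this matches the
    exponentiated logistic regression slope.
    """
    non_ig = n_ig - events_ig
    non_cg = n_cg - events_cg
    odds_ratio = (events_ig / non_ig) / (events_cg / non_cg)
    # Standard error of the log odds ratio (Wald approximation)
    se_log_or = math.sqrt(
        1 / events_ig + 1 / non_ig + 1 / events_cg + 1 / non_cg
    )
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Counts as reported in the Results section; treating non-responders
# as "not interested" is an assumption of this sketch.
interest = odds_ratio_2x2(39, 106, 42, 96)
install = odds_ratio_2x2(1, 106, 3, 96)
print(f"interest OR = {interest[0]:.3f}")  # interest OR = 0.748
print(f"install OR = {install[0]:.3f}")    # install OR = 0.295
```

Under these assumptions, the point estimates are close to the odds ratios reported in the Results. The Wald intervals need not coincide with the published confidence intervals, which may have been computed differently, and they are known to be unreliable for sparse cells such as the installation counts.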

2.5.2. Latent structural equation modeling: determinants of acceptance

To investigate the influence of potential determinants of acceptance, SEM was applied. Building on the measurement model consisting of the latent factors for all items of acceptance, performance expectancy, effort expectancy, facilitating conditions, social influence, and trust, we introduced paths between acceptance and all other latent factors. These path estimates were used to determine the presence of significant effects of the postulated UTAUT factors on acceptance (see Section 2.5.1 for SEM criteria and process).

2.5.3. Acceptance of smart sensing for health

Following the previous studies on the acceptance of digital interventions (44–48), the acceptance (i.e., self-reported, interest rates, and installation rates) in the CG that did not receive any AFI is assumed to be the general acceptance of smart sensing. Acceptance is quantified by the mean and standard deviation of the sum score of the UTAUT questionnaire (numerical mean of the scale: 12.5, range: 4–20). In addition, we categorized the sum score following the previous studies (44–48): low acceptance (sum score: 4–9), moderate acceptance (sum score: 10–15), and high acceptance (sum score: 16–20). The percentages for each category were summarized.
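The categorization rule described above can be sketched in a few lines of Python. The function names and the example scores are hypothetical and serve only as an illustration. How missing responses enter the denominator is left to the caller.

```python
def acceptance_category(sum_score):
    """Map a UTAUT acceptance sum score (4-20) to low/moderate/high."""
    if not 4 <= sum_score <= 20:
        raise ValueError("sum score must lie between 4 and 20")
    if sum_score <= 9:
        return "low"
    if sum_score <= 15:
        return "moderate"
    return "high"


def category_percentages(sum_scores):
    """Percentage of scores falling into each acceptance category."""
    counts = {"low": 0, "moderate": 0, "high": 0}
    for score in sum_scores:
        counts[acceptance_category(score)] += 1
    total = len(sum_scores)
    return {name: 100 * count / total for name, count in counts.items()}


# Hypothetical sum scores for illustration only (not study data)
example_scores = [4, 9, 10, 15, 16, 20, 12, 8]
print(category_percentages(example_scores))
# {'low': 37.5, 'moderate': 37.5, 'high': 25.0}
```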

2.6. Software

The statistical software R was used for all analyses (59). The R package “lavaan” was used as the core package for all the structural equation models (60). See Supplementary Material 3 for an overview of all packages and versions used in the present analysis.

3. Results

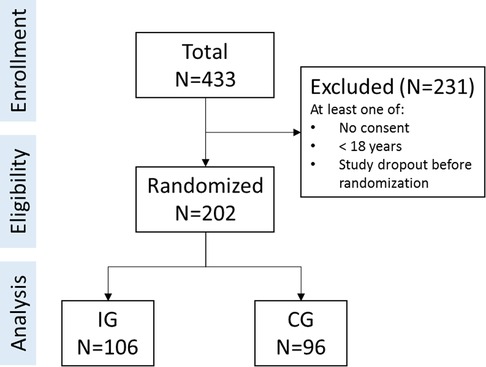

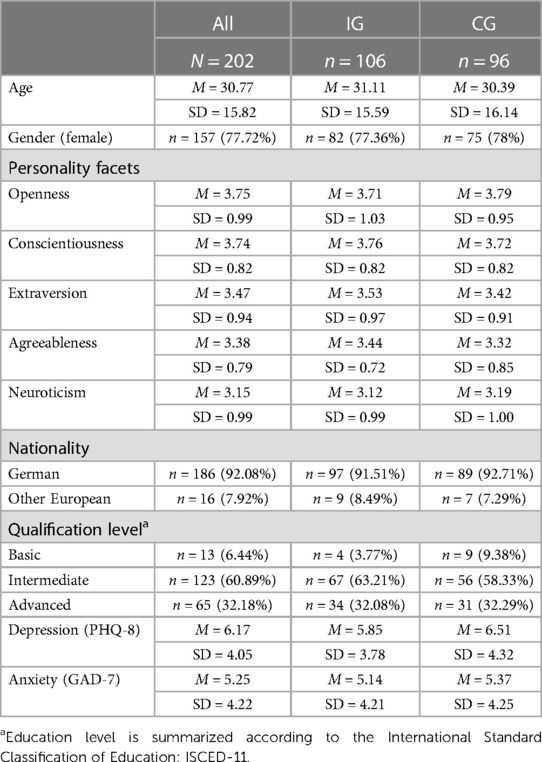

Of N = 433 interested individuals, a total of N = 202 were eligible and included in the study (CG: n = 96; IG: n = 106). The study flow is summarized in Figure 1. The included participants covered a broad age range from 18 years to 79 years (M = 30.77, SD = 15.82). Gender was unequally represented in the study (female: n = 157, 77.72%). All participants had a European background with the majority being German (n = 186, 92.08%). Education level was distributed as follows: advanced education level n = 65 [32.18%; e.g., bachelor degree and higher or other International Standard Classification of Education (ISCED-11) level >4 qualifications], intermediate education level n = 123 (60.89%; e.g., A levels, completed an apprenticeship or other ISCED-11 level <5 qualifications), and basic education level n = 13 (6.44%; i.e., no qualification or other ISCED-11 level <3 qualifications; one participant did not report on qualification level). Mental health symptoms were below clinical relevance on average (PHQ-8: M = 6.17, SD = 4.05; GAD-7: M = 5.25, SD = 4.22). Baseline differences did not suggest a problem with the randomization process. For group-specific details, see Table 1.

3.1. Intervention effects

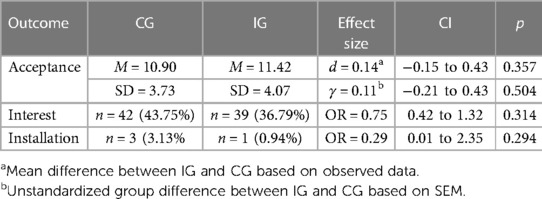

With an average self-reported acceptance of M = 11.42 (SD = 4.07, Min = 4, Max = 19) in the IG, there was descriptively higher acceptance compared with the control group, but with no significant difference (d = 0.14, 95% CI: −0.15–0.43; t = 0.92, df = 187, p = 0.357). This held true on the latent level using the SEM and accounting for missingness (γ = 0.11, 95% CI: −0.21–0.43, p = 0.503; γstandardized = 0.05). The model fit for the underlying questionnaire was excellent (RMSEA = 0.06, SRMR = 0.05). The full parameter list of the measurement model is reported in Supplementary Material 4.
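The observed-data comparison can be approximately retraced from summary statistics. The Python sketch below computes Cohen's d with a pooled standard deviation and the corresponding Student t statistic. The group sizes of 99 (IG) and 90 (CG) are an assumption inferred from df = 187 and the number of categorized CG participants. The CG mean and standard deviation are those reported for the control group (M = 10.90, SD = 3.73). Because the inputs are rounded, the output only approximates the reported values.

```python
import math


def cohens_d_and_t(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d (pooled SD) and Student's t for two independent groups."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / pooled_sd
    t = d / math.sqrt(1 / n1 + 1 / n2)
    return d, t, df


# IG vs. CG; the group sizes (99, 90) are assumed, not reported directly.
d, t, df = cohens_d_and_t(11.42, 4.07, 99, 10.90, 3.73, 90)
print(f"d = {d:.2f}, t = {t:.2f}, df = {df}")  # d = 0.13, t = 0.91, df = 187
```

The small deviation from the reported d = 0.14 and t = 0.92 is consistent with rounding of the published means and standard deviations.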

With n = 39 (36.79%) participants in the IG reporting interest and n = 57 (53.77%) reporting no interest, the odds of being interested in using a smart sensing app did not differ significantly from the CG (OR = 0.75, 95% CI: 0.42–1.32, p = 0.314). Only one participant (0.94%) in the IG installed the smart sensing app, amounting to a non-significant intervention effect compared with the CG (OR = 0.29, 95% CI: 0.01–2.35, p = 0.294). For a summary of all intervention effects and group-specific results, see Table 2.

3.2. Determinants of acceptance

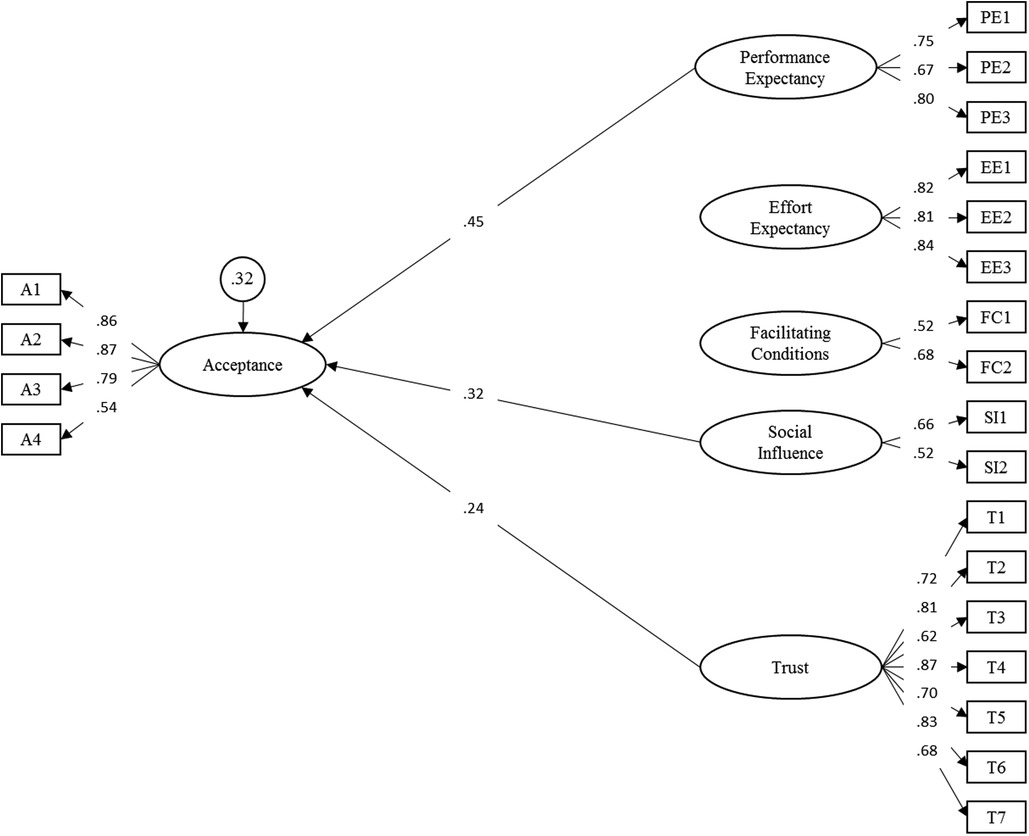

The following analysis of the latent effects on acceptance across groups identified the performance expectancy (γ = 0.45, p < 0.001), trust (γ = 0.24, p = 0.002), and social influence (γ = 0.32, p = 0.008) as determinants of acceptance (overall model fit: RMSEA = 0.06, SRMR = 0.05). All other factors were non-significant. Together, the three determinants explained 68% of the variance of the latent acceptance factor. The final path model is displayed in Figure 2. A list of all parameters is included in Supplementary Material 5.

Figure 2. Structural equation model of the adapted model for the acceptance toward smart sensing. Latent variables are represented in ellipses: A, acceptance; PE, performance expectancy; EE, effort expectancy; FC, facilitating conditions; SI, social influence; T, trust. Observed items are indicated as rectangles. Path loadings are represented as single-headed arrows. Residual variances of endogenous latent variables are presented in circles. All exogenous latent variables were allowed to correlate. For improved readability, all latent correlations and residual variances of manifest items were omitted. Please see Supplementary Material 5 for a full list of all parameters.

3.3. General acceptance of smart sensing

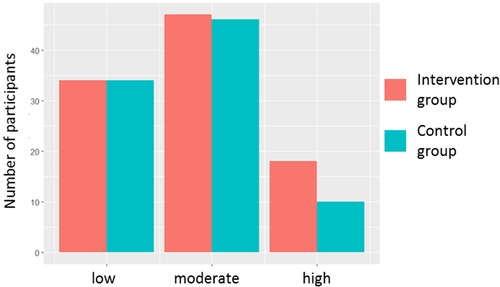

The unmanipulated self-reported acceptance of smart sensing in the control group was below average (M = 10.90, SD = 3.73, Min = 4, Max = 20). A total of n = 34 (35.41%) showed low, n = 46 (47.92%) moderate, and n = 10 (10.41%) high acceptance (see Figure 3). For a descriptive summary of the acceptance and subscales, please see Supplementary Material 6.

Figure 3. Acceptance of smart sensing. Acceptance was measured by the UTAUT questionnaire. The sum score of all four acceptance items was categorized as low (sum score: 4–9), moderate (sum score: 10–15), and high (sum score: 16–20).

A total of n = 42 (43.75%) participants stated interest in trying smart sensing in another study (no interest: n = 45, 46.88%; no response: n = 9, 9.38%). Of all 42 participants with interest, only n = 3 (7.14%; 3.13% of all participants in the CG) installed the smart sensing app.

4. Discussion

Acceptance is a fundamental precondition for the dissemination, uptake, and clinical impact of smart sensing. The present UTAUT-based acceptance facilitating intervention was unable to significantly affect the acceptance of smart sensing. We identified three core determinants for the acceptance of smart sensing measured with the UTAUT questionnaire: combined, performance expectancy, social influence, and trust explained 68% of the variance in the self-reported acceptance. Most participants reported a below-average acceptance toward smart sensing in the UTAUT questionnaire, and as many as one-third showed low acceptance, highlighting the sensitivity of smart sensing. Interestingly, despite the low acceptance in the questionnaire, 44% stated general interest in using smart sensing. However, the actual installation rate of a smart sensing app as a behavioral outcome was below 5%.

The lack of acceptance of smart sensing by potential end users clearly highlights a major barrier to the implementation of smart sensing applications. Developing successful AFIs and implementation strategies is of utmost importance to fully exploit the potential of smart sensing. As shown in the structural equation modeling, the performance expectancy had the strongest influence on self-reported acceptance with an effect of γ = 0.45. Accordingly, the personal benefit for individuals should especially be highlighted when the intention of the individual to use a smart sensing application is targeted.

However, besides the content of AFIs, future studies should explore which formats (e.g., with extended case examples in daily life, a showcase of specific example apps and functions, or an in-person AFI with direct participant interaction) are best suited to achieve positive effects. For instance, the present AFI format consisting of a combination of an expert talk with short examples was unable to achieve this goal despite targeting the determinants postulated in UTAUT. Looking to the field of instructional design and online learning research, whiteboard videos in particular may offer an opportunity to develop scalable and effective AFIs (61). Following the cognitive theory of multimedia learning, information (e.g., on the personal benefit) could be divided into verbal and visual components to reduce cognitive load during the intervention (62). Furthermore, a dynamic visualization of content and a narrative style could potentially increase the effectiveness of whiteboard-based AFIs (61, 63, 64). However, the effectiveness of such AFIs in the context of smart sensing is currently unclear and needs to be explored.

Extending the findings of below-average acceptance in the questionnaire data, the transfer from behavioral intention to use smart sensing to the usage of smart sensing was identified as another issue in the present study: even in the subset of the participants stating interest in using a smart sensing app, only 7% installed the smart sensing app (3% if participants stating no interest are also included). Due to the low usage rate of the smart sensing app, the present study allowed no robust analysis of the factors influencing the relationship between intention to use and actual usage. Future studies on the use of smart sensing and how the transfer from intention to action can be maximized are needed. Based on another study in the context of mobile health, factors such as existing habits and personal empowerment might be promising variables to investigate (65). In addition, it must be highlighted that installing a smart sensing app marks only the starting point of actual usage. Future studies should expand on this by observing not only the start of usage but also the duration, frequency of use, and retention time (i.e., days passed until an app is no longer opened) of a smart sensing app over time (66). The long-term use of digital applications has been identified as a major issue in the previous studies (11, 48, 66–69). Particularly in smart sensing, which is usually implemented as a longitudinal process requiring assessment over a longer period, early dropout could have a major impact on its potential benefit in healthcare. Hence, approaches fostering the maintenance of adherence and the prevention of disengagement over time need to be explored. For instance, therapeutic persuasiveness, user engagement, and usability may be important factors based on the findings in eHealth interventions (68, 70). Overall, the promising findings of smart sensing (30–35) will only translate into healthcare improvements if the requirement of acceptance is met and the underlying processes for the initial and long-term usage are understood.

While speaking of implications for future studies as well as when interpreting the present results, some limitations of the present study should be considered: the present sample showed an imbalance in education level, national backgrounds (i.e., >90% German), and gender (78% female). In addition, although a broad age range (18–79 years) was included, children and adolescents were excluded from this study. Given the higher usage of smartphones and affinity to digital platforms and technologies in younger individuals (71), the acceptance of smart sensing might be different in that population. Furthermore, the recruitment was not conducted in a clinical setting. As a result, depression (PHQ-8) and anxiety (GAD-7) symptoms were in a sub-clinical range. Since the perceived personal benefit was identified as the most important predictor, the general acceptance might be higher in a clinical population in which the benefits of smart sensing and tracking of health symptoms or diagnosis are more apparent (e.g., symptom tracking, early warning of relapse risk). Hence, generalizations of the below-average acceptance to the mental healthcare sector should be made carefully, and additional studies on the acceptance in patients and other stakeholders (e.g., psychotherapists) are required.

Furthermore, the present study assessed the acceptance of smart sensing with no further differentiation between data types (e.g., smartphone usage time or GPS data) or the recipients of data (e.g., physicians). Previous research has shown that the data type and recipients can be influential regarding acceptance (72). Hence, the acceptance of smart sensing in different settings such as tracking physical mobility after surgery or tracking mental health after inpatient psychotherapy might differ. Moreover, the degree of autonomous agency of a smart sensing system can be varied from (1) a full user-controlled self-monitoring system over (2) a system integrated into expert systems to support clinicians in their decisions to (3) a fully automated diagnosis and treatment system (72, 73). The influence of the varying degree of autonomous agency on the acceptance was not in the scope of the present study and should be examined in future studies.

5. Conclusions

The present AFI did not significantly affect the acceptance of smart sensing. However, we identified performance expectancy, social influence, and trust toward smart sensing applications as the key predictors of acceptance. Future studies should target these factors and investigate different AFI formats (e.g., whiteboard-based AFI) to improve the acceptance of smart sensing. Moreover, exploring the acceptance of smart sensing in patients and other stakeholders and agents in the mental health sector would be a valuable addition to this study. Given the low to moderate acceptance found in the present study, acceptance seems to pose a major barrier to the implementation of smart sensing and its impact. Successful implementation strategies, including the facilitation of acceptance, are needed to fully exploit the potential of smart sensing.

Data availability statement

Data requests should be directed to the corresponding author (YT). Data can be shared with researchers who provide a methodologically sound proposal, which is not already covered by other researchers. Data can only be shared for projects if the General Data Protection Regulation is met. Requestors may need to sign additional data access agreements. Support depends on available resources.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the Ulm University (462/20—FSt/Sta). The patients/participants provided their written informed consent to participate in this study.

Author contributions

YT initiated this study. YT, LS, EM, and HB contributed to the study design and concept. NW and CS were responsible for the recruitment and data collection, supervised by YT and LS. YT drafted the manuscript and conducted the analysis. All authors contributed to the article and approved the submitted version.

Funding

This study was self-funded by Ulm University and the University of Freiburg.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdgth.2023.1075266/full#supplementary-material

References

1. Walker ER, McGee RE, Druss BG. Mortality in mental disorders and global disease burden implications. JAMA Psychiatry. (2015) 72:334. doi: 10.1001/jamapsychiatry.2014.2502

2. Samji H, Wu J, Ladak A, Vossen C, Stewart E, Dove N, et al. Review: mental health impacts of the COVID-19 pandemic on children and youth—a systematic review. Child Adolesc Ment Health. (2022) 27:173–89. doi: 10.1111/camh.12501

3. Santomauro DF, Mantilla Herrera AM, Shadid J, Zheng P, Ashbaugh C, Pigott DM, et al. Global prevalence and burden of depressive and anxiety disorders in 204 countries and territories in 2020 due to the COVID-19 pandemic. Lancet. (2021) 398:1700–12. doi: 10.1016/S0140-6736(21)02143-7

4. James SL, Abate D, Abate KH, Abay SM, Abbafati C, Abbasi N, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: a systematic analysis for the global burden of disease study 2017. Lancet. (2018) 392:1789–858. doi: 10.1016/S0140-6736(18)32279-7

5. Greenberg PE, Fournier A-A, Sisitsky T, Pike CT, Kessler RC. The economic burden of adults with major depressive disorder in the United States (2005 and 2010). J Clin Psychiatry. (2015) 76:155–62. doi: 10.4088/JCP.14m09298

6. Greenberg PE, Fournier A-A, Sisitsky T, Simes M, Berman R, Koenigsberg SH, et al. The economic burden of adults with major depressive disorder in the United States (2010 and 2018). Pharmacoeconomics. (2021) 39:653–65. doi: 10.1007/s40273-021-01019-4

7. Cuijpers P, Karyotaki E, Reijnders M, Ebert DD. Was Eysenck right after all? A reassessment of the effects of psychotherapy for adult depression. Epidemiol Psychiatr Sci. (2019) 28:21–30. doi: 10.1017/S2045796018000057

8. Cuijpers P, Andersson G, Donker T, van Straten A. Psychological treatment of depression: results of a series of meta-analyses. Nord J Psychiatry. (2011) 65:354–64. doi: 10.3109/08039488.2011.596570

9. Huhn M, Tardy M, Spineli LM, Kissling W, Förstl H, Pitschel-Walz G, et al. Efficacy of pharmacotherapy and psychotherapy for adult psychiatric disorders. JAMA Psychiatry. (2014) 71:706–15. doi: 10.1001/jamapsychiatry.2014.112

10. Cuijpers P, Noma H, Karyotaki E, Vinkers CH, Cipriani A, Furukawa TA. A network meta-analysis of the effects of psychotherapies, pharmacotherapies and their combination in the treatment of adult depression. World Psychiatry. (2020) 19:92–107. doi: 10.1002/wps.20701

11. Moshe I, Terhorst Y, Philippi P, Domhardt M, Cuijpers P, Cristea I, et al. Digital interventions for the treatment of depression: a meta-analytic review. Psychol Bull. (2021) 147:749–86. doi: 10.1037/bul0000334

12. Ebert DD, Van Daele T, Nordgreen T, Karekla M, Compare A, Zarbo C, et al. Internet- and mobile-based psychological interventions: applications, efficacy, and potential for improving mental health. Eur Psychol. (2018) 23:167–87. doi: 10.1027/1016-9040/a000318

13. Kramer T, Als L, Garralda ME. Challenges to primary care in diagnosing and managing depression in children and young people. Br Med J. (2015) 350:h2512. doi: 10.1136/BMJ.H2512

14. Wurcel V, Cicchetti A, Garrison L, Kip MMA, Koffijberg H, Kolbe A, et al. The value of diagnostic information in personalised healthcare: a comprehensive concept to facilitate bringing this technology into healthcare systems. Public Health Genom. (2019) 22:8–15. doi: 10.1159/000501832

15. Kroenke K. Depression screening and management in primary care. Fam Pract. (2018) 35:1–3. doi: 10.1093/FAMPRA/CMX129

16. Trautman S, Beesdo-Baum K. The treatment of depression in primary care—a cross-sectional epidemiological study. Dtsch Arztebl Int. (2017) 114:721–8. doi: 10.3238/ARZTEBL.2017.0721

17. Kroenke K, Unutzer J. Closing the false divide: sustainable approaches to integrating mental health services into primary care. J Gen Intern Med. (2017) 32:404–10. doi: 10.1007/S11606-016-3967-9

18. Irving G, Neves AL, Dambha-Miller H, Oishi A, Tagashira H, Verho A, et al. International variations in primary care physician consultation time: a systematic review of 67 countries. BMJ Open. (2017) 7:e017902. doi: 10.1136/BMJOPEN-2017-017902

19. Hennemann S, Kuhn S, Witthöft M, Jungmann SM. Diagnostic performance of an app-based symptom checker in mental disorders: comparative study in psychotherapy outpatients. JMIR Ment Heal. (2022) 9(1):E32832. doi: 10.2196/32832

20. Moshe I, Terhorst Y, Opoku Asare K, Sander LB, Ferreira D, Baumeister H, et al. Predicting symptoms of depression and anxiety using smartphone and wearable data. Front Psychiatry. (2021) 12:625247. doi: 10.3389/fpsyt.2021.625247

21. Opoku Asare K, Terhorst Y, Vega J, Peltonen E, Lagerspetz E, Ferreira D. Predicting depression from smartphone behavioral markers using machine learning methods, hyperparameter optimization, and feature importance analysis: exploratory study. JMIR MHealth UHealth. (2021) 9:e26540. doi: 10.2196/26540

22. Kathan A, Triantafyllopoulos A, He X, Milling M, Yan T, Rajamani ST, et al. Journaling data for daily PHQ-2 depression prediction and forecasting. In 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE (2022). p. 2627–30.

23. Garatva P, Terhorst Y, Messner E-M, Karlen W, Pryss R, Baumeister H. Smart sensors for health research and improvement. In: Montag C, Baumeister H, editors. Digital phenotyping and mobile sensing. Berlin: Springer (2023). Vol. 2. p. 395–411. doi: 10.1007/978-3-030-98546-2_23

24. Onnela J-P, Rauch SL. Harnessing smartphone-based digital phenotyping to enhance behavioral and mental health. Neuropsychopharmacology. (2016) 41:1691–6. doi: 10.1038/npp.2016.7

25. Saeb S, Lattie EG, Schueller SM, Kording KP, Mohr DC. The relationship between mobile phone location sensor data and depressive symptom severity. PeerJ. (2016) 4:e2537. doi: 10.7717/peerj.2537

26. Baumeister H, Montag C. Digital phenotyping and mobile sensing. In: Montag C, Baumeister H, editors. New developments in psychoinformatics. Cham: Springer International Publishing (2023). doi: 10.1007/978-3-030-98546-2

27. He X, Triantafyllopoulos A, Kathan A, Milling M, Yan T, Rajamani ST, et al. Depression diagnosis and forecast based on mobile phone sensor data. In 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE (2022). p. 4679–82.

28. Pratap A, Atkins DC, Renn BN, Tanana MJ, Mooney SD, Anguera JA, et al. The accuracy of passive phone sensors in predicting daily mood. Depress Anxiety. (2019) 36:72–81. doi: 10.1002/da.22822

29. Adler DA, Wang F, Mohr DC, Choudhury T. Machine learning for passive mental health symptom prediction: generalization across different longitudinal mobile sensing studies. PLoS One. (2022) 17:e0266516. doi: 10.1371/JOURNAL.PONE.0266516

30. Cornet VP, Holden RJ. Systematic review of smartphone-based passive sensing for health and wellbeing. J Biomed Inform. (2018) 77:120–32. doi: 10.1016/j.jbi.2017.12.008

31. Rohani DA, Faurholt-Jepsen M, Kessing LV, Bardram JE. Correlations between objective behavioral features collected from mobile and wearable devices and depressive mood symptoms in patients with affective disorders: systematic review. JMIR MHealth UHealth. (2018) 6:e165. doi: 10.2196/mhealth.9691

32. Benoit J, Onyeaka H, Keshavan M, Torous J. Systematic review of digital phenotyping and machine learning in psychosis spectrum illnesses. Harv Rev Psychiatry. (2020) 28:296–304. doi: 10.1097/HRP.0000000000000268

33. Faurholt-Jepsen M, Frost M, Vinberg M, Christensen EM, Bardram JE, Kessing LV. Smartphone data as objective measures of bipolar disorder symptoms. Psychiatry Res. (2014) 217:124–7. doi: 10.1016/J.PSYCHRES.2014.03.009

34. Gruenerbl A, Osmani V, Bahle G, Carrasco JC, Oehler S, Mayora O, et al. Using smart phone mobility traces for the diagnosis of depressive and manic episodes in bipolar patients. Proceedings of the 5th Augmented Human International Conference. New York, NY: ACM Press (2014). p. 1–8. doi: 10.1145/2582051.2582089

35. Wang R, Aung MSH, Abdullah S, Brian R, Campbell AT, Choudhury T, et al. CrossCheck. Proceeding of the 2016 ACM International Joint Conference Pervasive and Ubiquitous Computing. New York, NY, USA: ACM (2016). p. 886–97. doi: 10.1145/2971648.2971740

36. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. (2003) 27:425–78. doi: 10.2307/30036540

37. Blut M, Chong A, Tsiga Z, Venkatesh V. Meta-analysis of the unified theory of acceptance and use of technology (UTAUT): challenging its validity and charting a research agenda in the red ocean (March 16, 2021). J Assoc Inf Syst. (Forthcoming). Available at SSRN: https://ssrn.com/abstract=383487234321961

38. Philippi P, Baumeister H, Apolinário-Hagen J, Ebert DD, Hennemann S, Kott L, et al. Acceptance towards digital health interventions—model validation and further development of the unified theory of acceptance and use of technology. Internet Interv. (2021) 26:100459. doi: 10.1016/j.invent.2021.100459

39. Foon YS, Chan Yin Fah B. Internet banking adoption in Kuala Lumpur: an application of UTAUT model. Int J Bus Manag. (2011) 6(4):161. doi: 10.5539/ijbm.v6n4p161

40. Wills MJ, El-Gayar OF, Bennett D. Examining healthcare professionals’ acceptance of electronic medical records using UTAUT. (2008) 9:396–401.

41. Kraus J, Scholz D, Stiegemeier D, Baumann M. The more you know: trust dynamics and calibration in highly automated driving and the effects of take-overs, system malfunction, and system transparency. Hum Factors. (2020) 62:718–36. doi: 10.1177/0018720819853686

42. Lee JD, See KA. Trust in automation: designing for appropriate reliance. Hum Factors. (2004) 46:50–80. doi: 10.1518/HFES.46.1.50_30392

43. Molnar LJ, Ryan LH, Pradhan AK, Eby DW, St. Louis RM, Zakrajsek JS. Understanding trust and acceptance of automated vehicles: an exploratory simulator study of transfer of control between automated and manual driving. Transp Res Part F Traffic Psychol Behav. (2018) 58:319–28. doi: 10.1016/J.TRF.2018.06.004

44. Baumeister H, Terhorst Y, Grässle C, Freudenstein M, Nübling R, Ebert DD. Impact of an acceptance facilitating intervention on psychotherapists’ acceptance of blended therapy. PLoS One. (2020) 15:e0236995. doi: 10.1371/journal.pone.0236995

45. Baumeister H, Seifferth H, Lin J, Nowoczin L, Lüking M, Ebert DD. Impact of an acceptance facilitating intervention on patients’ acceptance of internet-based pain interventions—a randomised controlled trial. Clin J Pain. (2015) 31:528–35. doi: 10.1097/AJP.0000000000000118

46. Baumeister H, Nowoczin L, Lin J, Seifferth H, Seufert J, Laubner K, et al. Impact of an acceptance facilitating intervention on diabetes patients’ acceptance of internet-based interventions for depression: a randomized controlled trial. Diabetes Res Clin Pract. (2014) 105:30–9. doi: 10.1016/j.diabres.2014.04.031

47. Ebert DD, Berking M, Cuijpers P, Lehr D, Pörtner M, Baumeister H. Increasing the acceptance of internet-based mental health interventions in primary care patients with depressive symptoms. A randomized controlled trial. J Affect Disord. (2015) 176:9–17. doi: 10.1016/j.jad.2015.01.056

48. Lin J, Faust B, Ebert DD, Krämer L, Baumeister H. A web-based acceptance-facilitating intervention for identifying patients’ acceptance, uptake, and adherence of internet- and mobile-based pain interventions: randomized controlled trial. J Med Internet Res. (2018) 20:e244. doi: 10.2196/jmir.9925

49. Schwarzer R. Modeling health behavior change: how to predict and modify the adoption and maintenance of health behaviors. Appl Psychol. (2008) 57:1–29. doi: 10.1111/j.1464-0597.2007.00325.x

50. Rammstedt B, John OP. Measuring personality in one minute or less: a 10-item short version of the big five inventory in English and German. J Res Pers. (2007) 41:203–12. doi: 10.1016/j.jrp.2006.02.001

51. Kroenke K, Strine TW, Spitzer RL, Williams JBW, Berry JT, Mokdad AH. The PHQ-8 as a measure of current depression in the general population. J Affect Disord. (2009) 114:163–73. doi: 10.1016/j.jad.2008.06.026

52. Spitzer RL, Kroenke K, Williams JBW, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. (2006) 166:1092–7. doi: 10.1001/archinte.166.10.1092

53. Jian J-Y, Bisantz AM, Drury CG. Foundations for an empirically determined scale of trust in automated systems. Int J Cogn Ergon. (2010) 4:53–71. doi: 10.1207/S15327566IJCE0401_04

54. Browne MW, Cudeck R. Alternative ways of assessing model fit. Sociol Methods Res. (1992) 21:230–58. doi: 10.1177/0049124192021002005

55. Moshagen M. The model size effect in SEM: inflated goodness-of-fit statistics are due to the size of the covariance matrix. Struct Equ Modeling. (2012) 19:86–98. doi: 10.1080/10705511.2012.634724

56. Moshagen M, Erdfelder E. A new strategy for testing structural equation models. Struct Equ Modeling. (2016) 23:54–60. doi: 10.1080/10705511.2014.950896

57. Hu LT, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct Equ Modeling. (1999) 6:1–55. doi: 10.1080/10705519909540118

58. Enders CK. Applied missing data analysis. New York: The Guilford Press (2010). doi: 10.1017/CBO9781107415324.004

59. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing (2022). https://www.R-project.org/

60. Rosseel Y. lavaan: an R package for structural equation modeling. J Stat Softw. (2012) 48(2):1–36. doi: 10.18637/jss.v048.i02

61. Schneider S, Krieglstein F, Beege M, Rey GD. Successful learning with whiteboard animations—a question of their procedural character or narrative embedding? Heliyon. (2023) 9:e13229. doi: 10.1016/j.heliyon.2023.e13229

62. Mayer RE. Multimedia learning. Multimed Learn. (2002) 41:85–139. doi: 10.1016/S0079-7421(02)80005-6

63. Klepsch M, Seufert T. Making an effort versus experiencing load. Front Educ. (2021) 6:645284. doi: 10.3389/feduc.2021.645284

64. Castro-Alonso JC, Wong M, Adesope OO, Ayres P, Paas F. Gender imbalance in instructional dynamic versus static visualizations: a meta-analysis. Educ Psychol Rev. (2019) 31:361–87. doi: 10.1007/s10648-019-09469-1

65. Salgado T, Tavares J, Oliveira T. Drivers of mobile health acceptance and use from the patient perspective: survey study and quantitative model development. JMIR MHealth UHealth. (2020) 8:e17588. doi: 10.2196/17588

66. Baumel A, Muench F, Edan S, Kane JM. Objective user engagement with mental health apps: systematic search and panel-based usage analysis. J Med Internet Res. (2019) 21:e14567. doi: 10.2196/14567

67. Baumel A, Kane JM. Examining predictors of real-world user engagement with self-guided eHealth interventions: analysis of mobile apps and websites using a novel dataset. J Med Internet Res. (2018) 20:e11491. doi: 10.2196/11491

68. Baumel A, Yom-Tov E. Predicting user adherence to behavioral eHealth interventions in the real world: examining which aspects of intervention design matter most. Transl Behav Med. (2018) 8:793–8. doi: 10.1093/tbm/ibx037

69. Baumel A, Edan S, Kane JM. Is there a trial bias impacting user engagement with unguided e-mental health interventions? A systematic comparison of published reports and real-world usage of the same programs. Transl Behav Med. (2019) 9:1020–33. doi: 10.1093/tbm/ibz147

70. Baumeister H, Kraft R, Baumel A, Pryss R, Messner E-M. Persuasive e-health design for behavior change. In: Baumeister H, Montag C, editors. Digital phenotyping and mobile sensing. Cham: Springer International Publishing (2023). p. 347–64. doi: 10.1007/978-3-030-98546-2_20

71. Brodersen K, Hammami N, Katapally TR. Smartphone use and mental health among youth: it is time to develop smartphone-specific screen time guidelines. Youth. (2022) 2:23–38. doi: 10.3390/youth2010003

Keywords: smart sensing, digital health, acceptance, implementation, unified theory of acceptance and use of technology

Citation: Terhorst Y, Weilbacher N, Suda C, Simon L, Messner E-M, Sander LB and Baumeister H (2023) Acceptance of smart sensing: a barrier to implementation—results from a randomized controlled trial. Front. Digit. Health 5:1075266. doi: 10.3389/fdgth.2023.1075266

Received: 20 October 2022; Accepted: 26 June 2023;

Published: 13 July 2023.

Edited by: Heleen Riper, VU Amsterdam, Netherlands

Reviewed by: Nele A. J. De Witte, Thomas More University of Applied Sciences, Belgium; Markus Wolf, University of Zurich, Switzerland

© 2023 Terhorst, Weilbacher, Suda, Simon, Messner, Sander and Baumeister. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yannik Terhorst, yannik.terhorst@uni-ulm.de
