Level-set based adaptive-active contour segmentation technique with long short-term memory for diabetic retinopathy classification

- 1Department of Computer Engineering and Applications, GLA University, Mathura, India

- 2Department of Computer Science and Engineering, CMR Technical Campus, Hyderabad, India

- 3Department of Computational Mathematics, Science and Engineering (CMSE), College of Engineering, Michigan State University, East Lansing, MI, United States

- 4Department of Mathematics, Faculty of Science, Mansoura University, Mansoura, Egypt

- 5Department of Statistics and Operations Research, College of Science, King Saud University, Riyadh, Saudi Arabia

- 6Department of Engineering and Technology, Bharati Vidyapeeth Deemed to be University, Navi Mumbai, India

- 7Department of Computer Science and Engineering, Koneru Lakshmaiah Education Foundation, Guntur, India

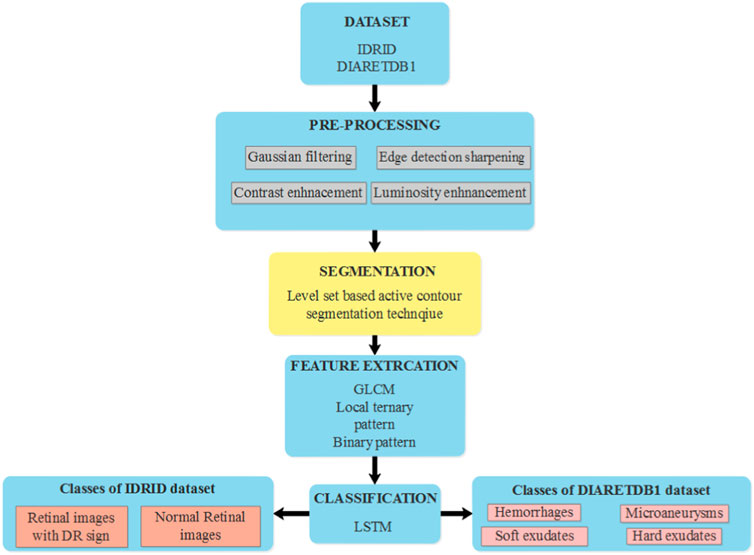

Diabetic Retinopathy (DR) is a major eye disorder caused by abnormalities in the blood vessels of the retinal tissue. Early automated detection using modern methodologies helps prevent consequences such as vision loss. This research therefore develops a segmentation approach known as Level-set Based Adaptive-active Contour Segmentation (LBACS), which segments the images by improving the boundary conditions with the Level Set Method with Improved Boundary Indicator Function (LSMIBIF) and detecting the edges with the Adaptive-Active Contour Model (AACM). To evaluate the DR system, images are collected from two publicly available datasets, the Indian Diabetic Retinopathy Image Dataset (IDRiD) and Diabetic Retinopathy Database 1 (DIARETDB 1). The collected images are pre-processed using a Gaussian filter, edge detection sharpening, contrast enhancement, and luminosity enhancement to eliminate noise and interference and to address the data imbalance that exists in the available datasets. The noise-free data are then segmented using the level set-based active contour segmentation technique. The segmented images are passed to the feature extraction stage, where Gray Level Co-occurrence Matrix (GLCM), local ternary, and binary patterns are employed to extract features from the segmented image. Finally, the extracted features are given as input to the classification stage, where Long Short-Term Memory (LSTM) is used to categorize the various classes of DR. The result analysis shows that the proposed LBACS-LSTM achieved better results across the overall metrics. The accuracy of the proposed LBACS-LSTM on the IDRiD and DIARETDB 1 datasets is 99.43% and 97.39%, respectively, which is higher than that of existing approaches such as the Three-dimensional semantic model, Delimiting Segmentation Approach Using Knowledge Learning (DSA-KL), K-Nearest Neighbor (KNN), Computer aided method, and Chronological Tunicate Swarm Algorithm with Stacked Auto Encoder (CTSA-SAE).

1 Introduction

Diabetic Retinopathy (DR) is one of the most common retinal vascular complications of diabetes mellitus. It is driven by elevated blood sugar, which severely affects the blood vessels of the retinal tissue (Garifullin et al., 2021; Huang et al., 2022). DR is a complex condition that can result in vision loss, and projections indicate that by 2040 approximately 600 million individuals will be affected by diabetes, with one-third of them experiencing diabetic retinopathy. Diabetic microvascular disease leads to DR, whose manifestations fall into three classes: blood vessel rupture, hemorrhage, and obstruction of blood vessels. Moreover, DR is categorized into five stages based on the severity of the disease: normal, mild, moderate, severe, and proliferative (Hasan et al., 2021; Xu et al., 2021; Pundikal and Holi, 2022; Maaliw et al., 2023). Regular screening is required for early DR identification, and early discovery allows thorough monitoring of the DR progression rate, which can be remarkably quick from early to high risk. By detecting and treating DR abnormalities early on, it is possible to prevent 95% of premature, irreversible visual damage as well as subsequent recurrences. At the initial stage, DR shows no symptoms or only minor vision impairment. Later symptoms include blurred vision, impaired color vision, and dark strings that float in the patient's field of vision (Sule, 2022; Ullah et al., 2023). DR can be treated with laser therapy or through a surgical procedure known as vitrectomy, which inhibits further changes and helps to retain vision. Non-Proliferative Diabetic Retinopathy (NPDR) leads to retinal swelling and minute blood vessel leaks (Kadan and Subbian, 2021; Nikoloulopoulou et al., 2023). The most advanced phase of DR is Proliferative Diabetic Retinopathy (PDR), which damages the inner tissues of the retina and blocks the flow of blood (Guo and Peng, 2022; Li et al., 2022; Yan et al., 2022; Sundaram et al., 2023).

The formation of scar tissue is highly likely to affect both central and peripheral vision. Manual diagnosis of DR is time-consuming, so computer-aided diagnosis has gained attention among ophthalmologists (Nallasivan et al., 2021; Ali et al., 2023). Hard exudates, fatty deposits associated with ruptured blood vessels, worsen the condition of diabetic retinopathy. They are yellow and vary in size and shape. Since diabetic retinopathy is a major health concern, precise segmentation must be employed to detect and classify the type of diabetic retinopathy (Atli and Gedik, 2021; Udayaraju et al., 2023). Earlier research focused on traditional image processing approaches such as morphological operations and threshold segmentation. These studies are limited by their heavy dependence on design-level choices and on traditional lesion segmentation approaches. Moreover, existing research on DR classification has not attained sufficient granularity in distinguishing PDR (Alam et al., 2023). Deep learning techniques are also used in various applications related to segmentation and classification. Although they hold enormous promise for clinical imaging, present techniques call for a large volume of labelled training data. Training datasets frequently include thousands of high-quality labelled images, which are necessary to achieve better outcomes but are expensive to acquire and unavailable for rare conditions. In addition to making more data available, enhancing current techniques to reach the same results with less data offers another potential remedy for these restrictions. Precise segmentation is a significant stage in classifying DR with better categorization accuracy (Jebaseeli et al., 2019; Atwany et al., 2022; Chen et al., 2023). The existing approaches suffer from poor segmentation accuracy because they cannot reliably detect the boundaries in the image. Moreover, the edges of the image are not treated as a major factor during segmentation, which affects the classification accuracy of the model. So, this research proposes an effective segmentation approach that considers the boundary, which makes the classification process easier and aids in better classification accuracy.

The significant contributions of this research are specified as follows:

1) The raw data obtained from IDRiD and DIARETDB 1 is pre-processed by removing noise with a Gaussian filter and enhancing the color and luminosity of the image. The pre-processed image, with its improved quality, is then used for segmentation.

2) Segmentation is performed before feature extraction and classification. Because segmentation plays an important role in analyzing the retinal fundus, this research introduces the LBACS technique to segment the DR images.

3) LBACS comprises LSMIBIF and AACM, which overcome the limitations of existing approaches in segmenting boundaries and edges. The proposed approach segments the image into partitions and detects DR in each individual segment using LSMIBIF and AACM, respectively.

4) Features are extracted using GLCM, LTP, and HOG, which capture the gradient and intensity of the image. Finally, an LSTM classifies the type of diabetic retinopathy.

The rest of the paper is structured as follows. Section 2 presents the related work and Section 3 presents the proposed method. The results are presented in Section 4, and Section 5 presents the overall conclusion of this research.

2 Related works

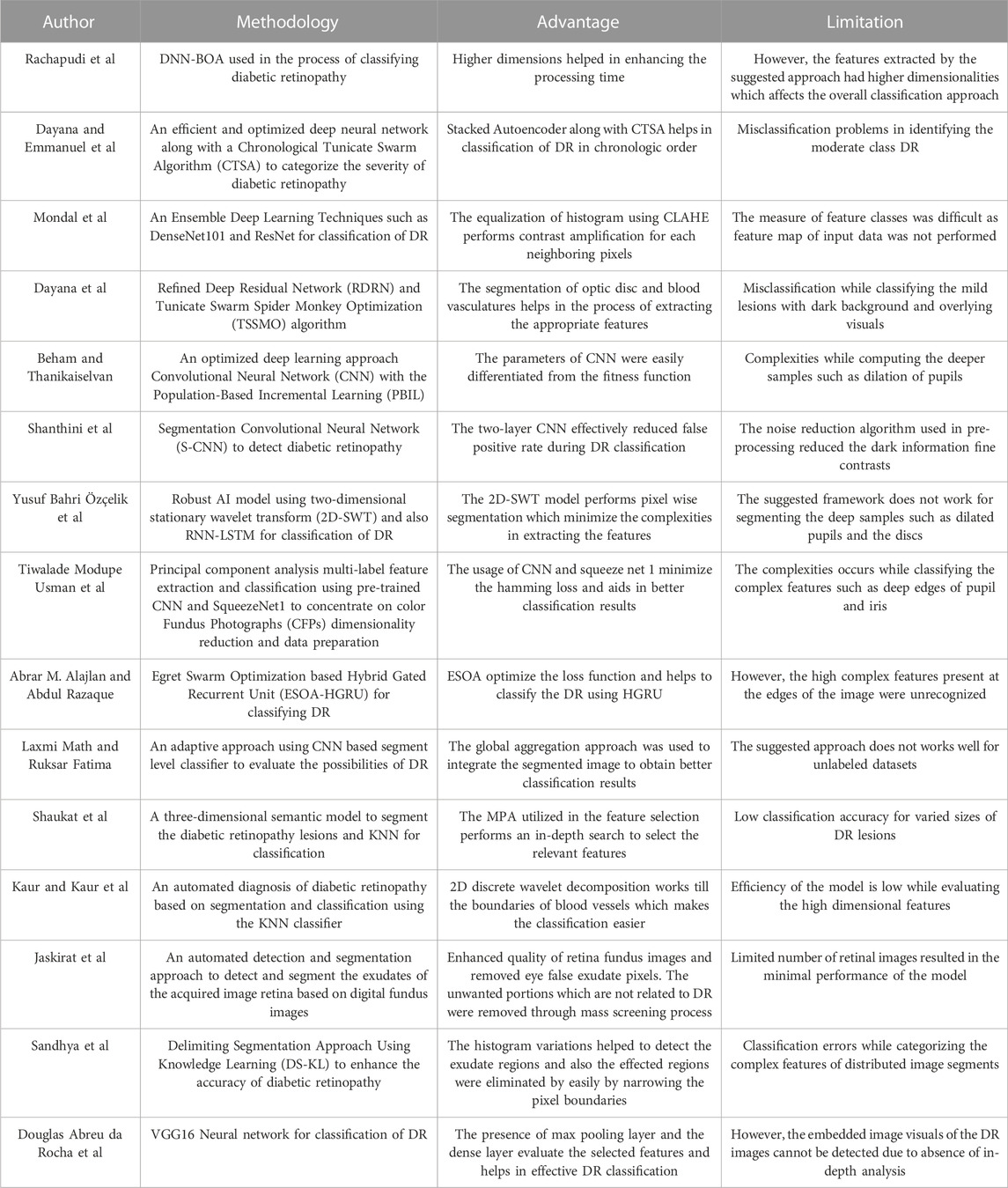

In this section, recent research on diabetic retinopathy segmentation and classification techniques is discussed. The section is organized according to whether deep learning or machine learning approaches are used to classify diabetic retinopathy.

2.1 DR classification using deep learning methods

Rachapudi et al. (2023) developed an optimized classification method using the Deep Neural Network and Butterfly Optimization Algorithm (DNN-BOA). After the data acquisition and pre-processing, the blood vessels were removed with the help of Gray Level thresholding, and segmentation was performed with the help of Modified Expectation Maximization (MEM). The feature extraction was performed using the GLCM and finally, categorization was performed using DNN-BOA. However, the features extracted by the suggested approach had higher dimensionalities which enhanced the processing time. Dayana and Emmanuel (2022b) introduced an efficient and optimized deep neural network along with a Chronological Tunicate Swarm Algorithm (CTSA) to categorize the severity of diabetic retinopathy. The U-Net and the fuzzy C-means hybrid entropy model were utilized to segment the optic disc and blood vasculatures. After this, the Gabor filter was used to identify the region of lesions and finally, the classification was performed with the help of a Stacked Autoencoder with CTSA on chronologic concept. However, the suggested approach faced misclassification issues while discerning the mild class DR.

Mondal et al. (2023) introduced an Ensemble Deep Learning Technique to Detect and categorize diabetic retinopathy. In this research, two deep learning approaches such as DenseNet101 and ResNeXt models were used for classification stage. The histogram equalization was performed using CLAHE and data augmentation was performed with the help of GAN based augmentation technique. However, the suggested approach did not perform a feature map for the whole input data. Dayana et al. (2023) introduced an improved classification method based on an optimization approach to grade the severity of the image. The dilated convolution-based spatial attention U-Net was used in optic disc segmentation and the entropy-based hybrid approach was used in the process of segmenting blood vessels. The extraction of features took place using a layered fusion network and the classification was performed with the help of a Refined Deep Residual Network (RDRN) and Tunicate Swarm Spider Monkey Optimization (TSSMO) algorithm. However, misclassification occurred while classifying the mild lesions with a dark background and overlying visuals.

Beham and Thanikaiselvan (2023) introduced an optimized deep-learning approach for automated retinopathy detection. The approach combined an Inception V3 model and a customized Convolutional Neural Network (CNN) with the Population-Based Incremental Learning (PBIL) algorithm, referred to as PBIL-CNN. The PBIL-CNN effectively detected DR from color fundus images and supported the classification of diabetic retinopathy. It used a feasible fitness function to determine the parameters of the CNN. However, the PBIL-CNN exhibited complexities while processing deeper samples such as those with dilated pupils. Shanthini et al. (2021) introduced a Segmentation Convolutional Neural Network (S-CNN) to detect diabetic retinopathy. The S-CNN performed threshold-based, pixel-level segmentation to separate the foreground and the background of the retinal image. A two-layer CNN was then applied, which reduced the rate of false positives during DR classification. However, the noise reduction algorithm used in pre-processing suppressed fine contrasts in dark regions. Özçelik and Altan (2023) developed a robust AI model that uses the two-dimensional stationary wavelet transform (2D-SWT) and fractal analysis to handle the crucial problem of early identification of diabetic retinopathy. Validated by 10-fold cross-validation, the model used a recurrent neural network-long short-term memory (RNN-LSTM) architecture for classification and showed remarkable performance in diagnosing all stages of DR with low computational cost. However, the framework faced complexities while reducing the dimensionality of the features.

Usman et al. (2023) used pre-trained CNNs such as ResNet50, ResNet152, and SqueezeNet1 to address dimensionality reduction and data preparation for color fundus photographs (CFPs). The study aimed to address the shortcomings of existing screening techniques, which are frequently underutilized and lead to delayed diagnosis and impaired vision. It also introduced a deep learning Multi-Label Feature Extraction and Classification (ML-FEC) model. The results showed that ResNet152 achieved a low Hamming loss, indicating that it could be useful in large-scale DR screening applications. Alajlan and Razaque (2023) introduced an Egret Swarm Optimization based Hybrid Gated Recurrent Unit (ESOA-HGRU) for classifying DR. Initially, the input samples were pre-processed using data augmentation and partitioned into training and testing data. The hybrid mask region was optimized with Egret Swarm Optimization to diminish the classifier loss. Finally, classification was performed with ESOA-HGRU. However, the approach exhibited poor performance on asymmetric datasets. Math and Fatima (2021) introduced an adaptive approach to segment and classify DR images. Initially, the data was pre-processed using normalization, and scaling was performed to equalize contrast and illumination. A CNN-based segment-level classifier was then used to evaluate the likelihood of DR. After segmentation, global aggregation was performed to integrate the segment-level results, and classification was performed with a CNN. However, the use of a CNN requires a large amount of labelled data.

2.2 DR classification using machine learning methods

Shaukat et al. (2022) introduced a three-dimensional semantic model to segment diabetic retinopathy lesions. The pre-processed input data was fed into a pre-trained Xception model, and the extracted features were segmented with DeepLabv3. Feature selection was then performed with the Marine Predators Algorithm (MPA). Finally, DR was classified with a neural network and a K-Nearest Neighbor classifier. However, the classification accuracy of the approach diminished for DR lesions of varying sizes. Kaur and Kaur (2022) developed an automated diagnosis of diabetic retinopathy based on segmentation and classification using the K-Nearest Neighbor (KNN) classifier. In the pre-processing stage, unwanted pixels were removed and 2D discrete wavelet decomposition was applied to extract the boundaries of blood vessels. Moreover, an adaptive thresholding approach was used to detect the statistical and geometrical location of the lesions. Finally, the KNN classifier was used to classify the DR lesions. The approach lacked efficiency when evaluating high-dimensional features. Jaskirat et al. (2023) developed an automated approach to detect and segment retinal exudates in digital fundus images. The approach enhanced the quality of the retinal fundus image and removed false exudate pixels. Additionally, the mass screening process filtered out portions of the image that were not related to diabetic retinopathy. However, the evaluation of the approach was limited to a small number of retinal images.

Sandhya et al. (2022) introduced a Delimiting Segmentation Approach Using Knowledge Learning (DSA-KL) to enhance the accuracy of diabetic retinopathy detection. The approach was based on histogram variation classification: exudate regions were detected from the variations in the histograms of the input images. The segmentation approach discriminated the affected regions by delimiting the pixel boundaries. However, the approach faced classification errors while categorizing the complex features of distributed image segments. Da Rocha et al. (2022) developed a novel approach using the VGG16 neural network to address critical issues in diabetic retinopathy. The objective of this study was to create a fifth class (class 5) for low-quality digital retinal images from the DDR, EyePACS/Kaggle, and IDRiD databases in addition to classifying diabetic retinopathy into five categories. The methodology included image size modification, data cleaning, augmentation, class balancing, and hyperparameter tuning. This method improved the standard of diagnosis and treatment for diabetic retinopathy. Table 1 summarizes the key characteristics of the existing research, highlighting its constraints and proposed solutions.

2.2.1 Scientific contribution

The aforementioned existing segmentation approaches did not consider the edges and the boundary conditions while segmenting the retinal images. When the segmentation approach does not consider the edges and the boundaries, the segmentation accuracy is less with improper boundaries which will affect the overall performance of the model. So, this research introduces an effective segmentation approach using LBACS and classification using LSTM to categorize various classes of DR with better accuracy. Moreover, LSMIBIF and AACM in LBACS allocate an improved boundary condition and detect the edges of the pre-processed image respectively. Thus, the proposed LBACS-LSTM provides better results in both segmentation and classification of diabetic retinopathy.

3 LBACS-LSTM method

In this research, segmentation is performed with LBACS and classification is performed with an LSTM classifier. Diabetic retinopathy is categorized through the following stages. Initially, the data is acquired from the publicly available IDRiD and DIARETDB 1 datasets, and pre-processing is performed with Gaussian filtering, edge detection sharpening, and enhancement of contrast and luminosity. The pre-processed output is then fed into the segmentation stage, where segmentation is performed using the proposed LBACS. The segmented output is fed into the feature extraction stage and, finally, the categorization is performed using the LSTM classifier. Figure 1 presents the block diagram of the overall process involved in classifying diabetic retinopathy.

FIGURE 1. The overall process involved in the classification of diabetic retinopathy using LBACS-LSTM.

3.1 Data acquisition

In this research, the data is obtained from two publicly available datasets as Indian Diabetic Retinopathy Image Dataset (IDRiD) (Porwal et al., 2018) and Diabetic Retinopathy Database 1 (DIARETDB 1) (Kaggle, 2023). This section presents a brief description of those two datasets.

3.1.1 IDRiD

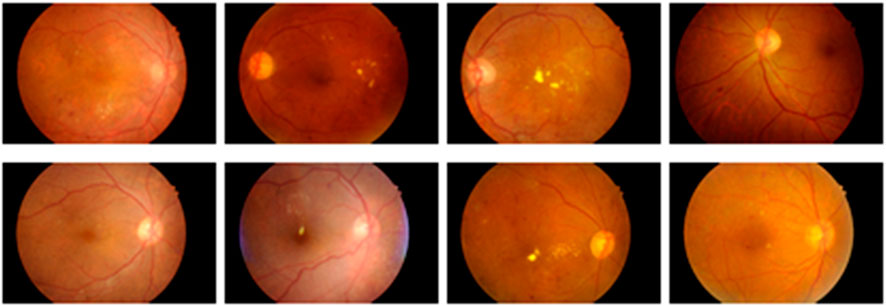

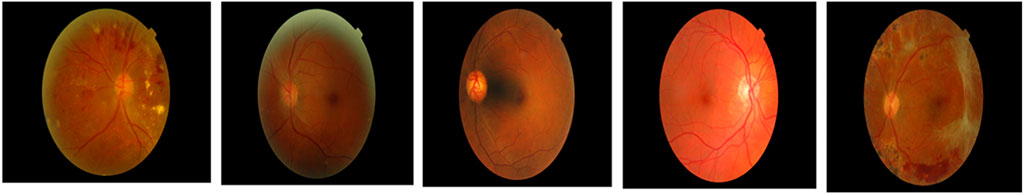

It is a publicly accessible dataset that can be downloaded from the IEEE DataPort repository. The dataset comprises fundus images obtained from Indian eye clinics using the Kowa VX fundus camera. The captured IDRiD images have a 50-degree field of view with a resolution of 4288

3.1.2 DIARETDB 1

This is a publicly available dataset that consists of 89 color fundus images, of which 84 show mild NPDR signs and the remaining 5 are normal. Specifically, 41 images are bright lesions and 45 images are darker ones. The resolution of the images in this dataset is 1500

3.2 Data pre-processing

After data acquisition, the raw data is pre-processed to obtain a noise-free output. In this research, pre-processing is performed with Gaussian filtering, edge detection and sharpening, and enhancement of color and luminosity. These pre-processing techniques are described as follows:

3.2.1 Gaussian filtering

The fundus image comprises three color bands: red, green, and blue. The exudates appear brighter in the green channel than in the red and blue channels. The Gaussian filter (González-Ruiz et al., 2023) smooths the image by taking a weighted average of neighboring pixels, which removes noise and high-frequency components. It is calculated based on Eq. 1 as follows:

Where the Gaussian function is represented as
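As a minimal illustration of Gaussian smoothing, the sketch below builds a normalized two-dimensional Gaussian kernel and convolves it with a grayscale image stored as a list of rows. The function names, the kernel size of 5, the value of sigma, and the edge-replication border handling are assumptions made for this example and are not taken from the paper.

```python
import math


def gaussian_kernel(size=5, sigma=1.0):
    """Return a normalized size x size Gaussian kernel (size must be odd)."""
    half = size // 2
    kernel = [
        [math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
         for x in range(-half, half + 1)]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def gaussian_filter(image, size=5, sigma=1.0):
    """Smooth a 2-D grayscale image (list of rows) using edge replication."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            acc = 0.0
            for kr in range(size):
                for kc in range(size):
                    # Clamp coordinates so border pixels reuse the nearest valid pixel.
                    rr = min(max(r + kr - half, 0), height - 1)
                    cc = min(max(c + kc - half, 0), width - 1)
                    acc += kernel[kr][kc] * image[rr][cc]
            out[r][c] = acc
    return out
```

Because the kernel is normalized to sum to one, a uniform region keeps its intensity while isolated noisy pixels are averaged with their neighbors.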

3.2.2 Edge enhancement

Edge enhancement is an image processing technique that increases the edge contrast of the image to improve its sharpness. This process creates subtle bright and dark highlights along the edges in the image and makes them look more defined.

3.2.3 Enhancement of color and luminosity

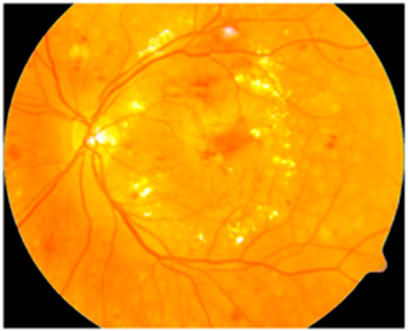

After edge enhancement, the color and luminosity of the image are enhanced with Contrast Limited Adaptive Histogram Equalization (CLAHE). CLAHE (Chandni et al., 2022) creates a realistic form of the image by improving the color pattern and luminosity through histogram equalization. The sample image obtained after enhancing the color and luminosity is shown in Figure 4.
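The core idea of contrast-limited equalization can be sketched for a single tile as follows. This is a simplified illustration rather than the full CLAHE procedure: a complete implementation also splits the image into tiles and interpolates between the tile mappings, which is what library routines such as OpenCV's `cv2.createCLAHE` handle. The function name and the default clip limit are assumptions for this example.

```python
def clipped_equalize(tile, clip_limit=4, levels=256):
    """Equalize one tile (rows of ints in [0, levels)) with histogram clipping."""
    pixels = [p for row in tile for p in row]
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip each bin and spread the excess evenly over all bins,
    # which limits how strongly contrast can be amplified.
    excess = sum(max(h - clip_limit, 0) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]
    # Build the cumulative distribution and map intensities through it.
    total = float(len(pixels))
    cdf, running = [], 0.0
    for h in hist:
        running += h
        cdf.append(running / total)
    return [[round(cdf[p] * (levels - 1)) for p in row] for row in tile]
```

Lowering `clip_limit` flattens the mapping, so a nearly uniform tile is not stretched to the full intensity range and background noise is amplified less.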

3.3 Segmentation

After pre-processing, the output is fed into the segmentation stage, which is performed with the Level-set Based Adaptive-active Contour Segmentation (LBACS) technique. The following section describes segmentation using the proposed LBACS, which improves the boundary indicator function in the level set method and introduces an adaptive scheme in the active contour method. The segmentation efficiency is enhanced with improved boundary conditions and an adaptive active contour that determines the edges of the pre-processed image. The Level Set Method (LSM), the Level Set Method with Improved Boundary Indicator Function (LSMIBIF), and the Adaptive-Active Contour Model are described in the sub-sections below.

3.3.1 Level set method (LSM)

The contour is represented as the zero level set of a time-dependent Level Set Function (LSF), which is assumed to take negative values inside the zero-level contour and positive values outside it. The Euler equation for Distance Regularized Level Set Evolution (DRLSE) is represented in Eq. 2 as follows:

The edge indicator function of DRLSE is denoted as

The weighted coefficient values are represented as
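For orientation, the DRLSE evolution equation and its edge indicator function are commonly written in the level-set literature in the form below. This is the standard textbook formulation, offered as an assumption about the notation intended here: $\phi$ is the LSF, $\mu$, $\lambda$, and $\alpha$ are the weighting coefficients, $d_p$ is the distance-regularization function, $\delta_\varepsilon$ is the smoothed Dirac delta, $G_\sigma$ is a Gaussian kernel, and $I$ is the image. The paper's own Eq. 2 may differ in detail.

```latex
\frac{\partial \phi}{\partial t}
  = \mu \,\operatorname{div}\!\big(d_p(|\nabla\phi|)\,\nabla\phi\big)
  + \lambda\,\delta_\varepsilon(\phi)\,
    \operatorname{div}\!\Big(g\,\frac{\nabla\phi}{|\nabla\phi|}\Big)
  + \alpha\, g\,\delta_\varepsilon(\phi),
\qquad
g = \frac{1}{1 + \left|\nabla (G_\sigma * I)\right|^{2}}
```

The indicator $g$ is close to one in flat regions and close to zero where the smoothed image gradient is large, so the contour slows down near object boundaries.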

3.3.2 Level set method with Improved Boundary Indicator Function (LSMIBIF)

The limitations of the existing LSM are overcome using the proposed LSMIBIF. The improvement is made in the boundary indicator function, which is used to segment diabetic retinopathy regions in patient images. The consideration of boundary function A contour is combined with a zero level set of level set factor

Where the external energy which is determined with the help of image attribute is denoted as

Where the image smoothening performed with the Gaussian filter using standard deviation

The improved boundary indicator is used to exhibit the expression related to gradient descent value which is presented in Eq. 7 with three different parts. The first part is based on the regularization term that excludes the process of re-initialization. The second part is based on zero level set approach which offers long term driving for the boundaries of the target. The third part of the equation is utilized to enhance the region among the neighboring targets and the evolution rate.

Where the weighed co-efficient which evaluates each parameter is represented as

Where the obtained Heaviside function is represented as
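A smoothed Heaviside function and its derivative, the smoothed Dirac delta, are standard ingredients of level set evolution. The sketch below uses one common choice from the DRLSE literature; the exact form used in this work is not recoverable from the text, so the formula and the width `eps=1.5` should be read as assumptions.

```python
import math


def heaviside(x, eps=1.5):
    """Smoothed Heaviside step: 0 below -eps, 1 above +eps, smooth in between."""
    if x > eps:
        return 1.0
    if x < -eps:
        return 0.0
    return 0.5 * (1.0 + x / eps + math.sin(math.pi * x / eps) / math.pi)


def dirac(x, eps=1.5):
    """Derivative of the smoothed Heaviside; nonzero only inside [-eps, eps]."""
    if abs(x) > eps:
        return 0.0
    return (1.0 + math.cos(math.pi * x / eps)) / (2.0 * eps)
```

Evaluated on the level set function, `heaviside` softly marks which side of the contour a pixel lies on, and `dirac` restricts the update to a narrow band around the zero level set.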

3.3.3 Adaptive-Active Contour Model (AACM)

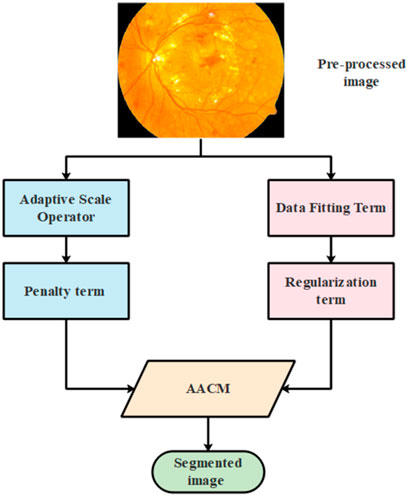

AACM is used in the second stage of segmentation, where the edges are detected to minimize the size of the visual data and aid in better classification. The general architecture of image segmentation using AACM is represented in Figure 5.

The energy function of the suggested AACM comprises two terms, a data fitting term and a regularization term, as represented in Eq. 9:

Where the term related to data fitting and regularization is represented as

3.3.3.1 Data fitting term

The entropy value of the Shannon information to the image domain and the proposed image entropy value is represented in Eq. 10 as follows:

Where the intensity of distributing small circular neighborhoods is represented as

Where the Euclidean distance from point

Where the mean operator and the factor related to normalization are represented as

Where the value of fitting intensity is represented as

Where the respective region area is represented as
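To make the notion of local image entropy concrete, the sketch below computes the Shannon entropy of the intensities inside a small circular neighborhood around one pixel. The function name, the radius, and the use of a plain intensity histogram are assumptions for illustration; the weighting and normalization of the paper's data fitting term are not reproduced.

```python
import math
from collections import Counter


def local_entropy(image, row, col, radius=2):
    """Shannon entropy (in bits) of intensities in a circular neighborhood."""
    height, width = len(image), len(image[0])
    values = []
    for r in range(max(row - radius, 0), min(row + radius + 1, height)):
        for c in range(max(col - radius, 0), min(col + radius + 1, width)):
            # Keep pixels whose Euclidean distance to the centre is within the radius.
            if (r - row) ** 2 + (c - col) ** 2 <= radius ** 2:
                values.append(image[r][c])
    counts = Counter(values)
    n = len(values)
    return -sum((k / n) * math.log2(k / n) for k in counts.values())
```

Homogeneous neighborhoods give an entropy of zero, while neighborhoods that straddle an edge or a lesion boundary mix several intensities and give higher values.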

3.3.3.2 Regularization term

The stabilized evolution and the smoothened level set function is represented as

Where the constant values which contribute to two terms are represented as

Where the Hamilton operator is represented as

Where the operator which is related to divergence is represented as

3.4 Feature extraction

After segmentation, feature extraction is performed to obtain relevant features that reduce the complexity of categorizing diabetic retinopathy in patients. In this research, the features from the segmented output are extracted with the Local Ternary Pattern (LTP), Gray Level Co-occurrence Matrix (GLCM), and Histogram of Oriented Gradients (HOG). The steps involved in the extraction of features are presented as follows:

3.4.1 Local Ternary Pattern

LTP (Dayana and Emmanuel, 2022b) is an improved version of Local Binary Pattern (LBP) which utilizes fixed threshold value to perform an effective extraction of binary pattern. LTP is computed using the Eq. 20 as follows:

Where the central pixel value is represented as
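The ternary coding can be illustrated on a single 3x3 patch. In the sketch below, each neighbor is assigned +1, 0, or -1 depending on whether it lies above, within, or below a band of width `threshold` around the central pixel, and the result is split into the usual upper and lower binary patterns. The function name, the clockwise neighbor order, and the threshold of 5 are assumptions made for this example.

```python
def ltp_codes(patch, threshold=5):
    """Return (upper, lower) LTP codes for a 3x3 patch given as a list of rows."""
    center = patch[1][1]
    # Neighbours visited clockwise, starting from the top-left corner.
    neighbours = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
                  patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    upper = lower = 0
    for bit, value in enumerate(neighbours):
        if value >= center + threshold:      # ternary value +1
            upper |= 1 << bit
        elif value <= center - threshold:    # ternary value -1
            lower |= 1 << bit
        # Otherwise the ternary value is 0 and neither pattern gets a bit.
    return upper, lower
```

Histograms of the upper and lower codes over the segmented region can then serve as the texture feature vector.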

3.4.2 Gray level Co-occurrence matrix

The Gray Level Co-occurrence Matrix (GLCM) (Patel and Kashyap, 2023) is a second-order statistical approach used to analyze image textures. Second-order statistics consider pairs of pixels in the original image, and the GLCM records how often each combination of gray levels co-occurs. In this research, contrast, energy, correlation, homogeneity, and entropy are the features extracted from the segmented images. Moreover, GLCM evaluates interconnected pixels from the grayscale image of varying angles of
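A small sketch of how a co-occurrence matrix and some of its texture features can be computed is given below. The pixel offset `(dr, dc)` encodes the angle and distance; for example, `(0, 1)` is the horizontal neighbor and `(-1, 1)` the upper-right diagonal. The function names are hypothetical, the image is assumed to be quantized to integers below `levels`, and correlation is omitted for brevity. Energy is computed here as the sum of squared entries; some libraries report the square root of that sum instead.

```python
import math


def glcm(image, dr, dc, levels):
    """Normalized, symmetric co-occurrence matrix for pixel offset (dr, dc)."""
    matrix = [[0.0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for r in range(height):
        for c in range(width):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < height and 0 <= c2 < width:
                i, j = image[r][c], image[r2][c2]
                matrix[i][j] += 1.0
                matrix[j][i] += 1.0  # count the pair in both directions
    total = sum(map(sum, matrix))
    return [[v / total for v in row] for row in matrix]


def glcm_features(p):
    """Contrast, energy, homogeneity, and entropy of a normalized GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1.0 + (i - j) ** 2) for i, j, v in cells),
        "entropy": -sum(v * math.log2(v) for _, _, v in cells if v > 0),
    }
```

Computing the features for several offsets and concatenating or averaging them gives a descriptor that is less sensitive to texture orientation.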

3.4.3 Histogram of Oriented Gradients

Histogram of Oriented Gradients (HOG) (Shaukat et al., 2023) characterizes an image by the distribution of its local intensity gradients and edge directions. HOG effectively captures deformations, and the gradient orientation is represented in Eq. 21 as follows:

Where the gradient values of
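The gradient magnitude and orientation at a pixel, and their accumulation into an orientation histogram for one cell, can be sketched as follows. Central differences, unsigned orientations in the range 0 to 180 degrees, and 9 bins are conventional choices assumed for this example. The soft bin interpolation and block normalization of a full HOG descriptor are left out.

```python
import math


def gradient_orientation(image, row, col):
    """Magnitude and unsigned orientation (degrees) at an interior pixel."""
    gx = image[row][col + 1] - image[row][col - 1]   # horizontal central difference
    gy = image[row + 1][col] - image[row - 1][col]   # vertical central difference
    magnitude = math.hypot(gx, gy)
    angle = math.degrees(math.atan2(gy, gx)) % 180.0
    return magnitude, angle


def cell_histogram(image, bins=9):
    """Magnitude-weighted orientation histogram over a cell's interior pixels."""
    hist = [0.0] * bins
    width = 180.0 / bins
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            mag, ang = gradient_orientation(image, r, c)
            # The modulo guards against an angle that rounds up to 180 degrees.
            hist[int(ang // width) % bins] += mag
    return hist
```

A horizontal intensity ramp puts all of its weight in the first bin, whereas a vertical ramp contributes to the bin containing 90 degrees.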

The features extracted using GLCM, LTP, and HOG are fed as input to the diabetic retinopathy classification stage, which is performed with the Long Short-Term Memory (LSTM) classifier.

3.5 Classification

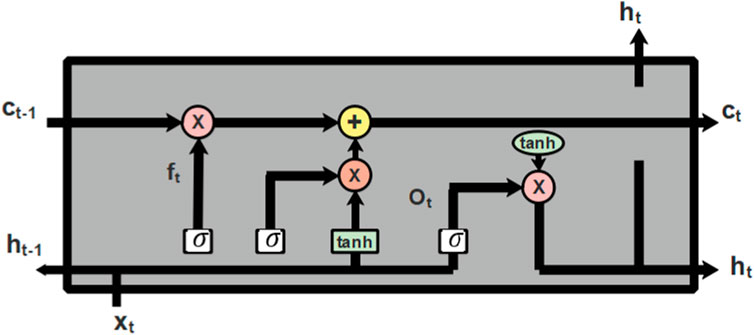

The features extracted from LTP, GLCM, and HOG are fed into the classification phase, which is performed using Long Short-Term Memory (LSTM) (Ewees et al., 2022), a deep learning classifier. Classification is the final stage, where the extracted features are used to categorize the classes of diabetic retinopathy. LSTM is a kind of RNN that learns long-term dependencies. The architectural diagram of LSTM is represented in Figure 6.

The cell state is the central component of LSTM. Information can be added to or removed from the cell state, and a gate mechanism selectively permits information to pass through. The forget gate, input gate, and output gate make up an LSTM. The forget gate decides which information to remove from the cell state, and the input gate then chooses what information to add to it. The cell state can be updated once these two decisions have been made. The output gate regulates the network's output. The operation of an LSTM node is described in Eqs. 22–27 as follows:

Where, the hidden state of the prior layer is denoted as

Computing the output of the input and output gate individually does not provide better performance so, the output from the input and output gate can be distinguished using the factor
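The gate mechanism described above corresponds to the standard LSTM update, which can be sketched for a single time step in plain Python. The function names and the `params` layout (a mapping from gate name to a weight matrix and bias vector) are hypothetical, and the sketch follows the textbook formulation, not any modification specific to this work.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(weights, bias, vector):
    """Compute weights @ vector + bias for a list-of-rows matrix."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps gate name -> (weights, bias)."""
    z = h_prev + x  # concatenate previous hidden state and current input
    f = [sigmoid(a) for a in affine(*params["forget"], z)]
    i = [sigmoid(a) for a in affine(*params["input"], z)]
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]
    o = [sigmoid(a) for a in affine(*params["output"], z)]
    # Forget part of the old cell state, then add the gated candidate values.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

In a classifier, the feature vector (or a sequence of feature vectors) is passed through such steps and the final hidden state is mapped to class scores by a dense layer.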

Classification with an LSTM classifier yields better results because of its capability to retain image patterns over a longer duration. The LSTM effectively classifies the various classes of diabetic retinopathy and helps the ophthalmologist to categorize the retinal images of disease-affected patients.

4 Results and analysis

In this section, the information related to the experimental setup, performance metrics used to estimate the efficacy of the suggested approach, and results obtained through simulation analysis and comparative analysis are considered to evaluate the efficiency of the proposed approach.

4.1 Experimental setup

The proposed approach is implemented in Python on a system configured with the Windows 11 operating system, 16 GB of RAM, and an Intel i7 processor. The following Python libraries are used in this research to evaluate the efficiency of the proposed approach. Data pre-processing is performed using NumPy, Pandas, and OpenCV. TensorFlow and Scikit-Learn are used to develop the deep learning classification model and to analyze the results, respectively.

4.2 Evaluation metrics

The results obtained while evaluating the proposed approach are assessed using performance metrics such as accuracy, sensitivity, specificity, precision, and Dice coefficient. These metrics are evaluated using the mathematical equations listed in Eqs 29–33.

Accuracy: the fraction of samples that are predicted correctly out of the total number of samples.

Sensitivity: the ratio of true positives to the sum of true positives and false negatives.

Specificity: the ratio of true negatives to the sum of true negatives and false positives.

Precision: the ratio of true positives to the sum of true positives and false positives.

Where,
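The verbal definitions of these metrics translate directly into code. The sketch below computes them from the four confusion-matrix counts (true positives, true negatives, false positives, false negatives). The function name is hypothetical, and the Dice coefficient is written in its standard count-based form.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return the evaluation metrics computed from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "dice": 2 * tp / (2 * tp + fp + fn),
    }
```

For a multi-class problem such as DR grading, the same counts can be obtained per class in a one-versus-rest fashion and the resulting metrics averaged.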

4.3 Simulation results

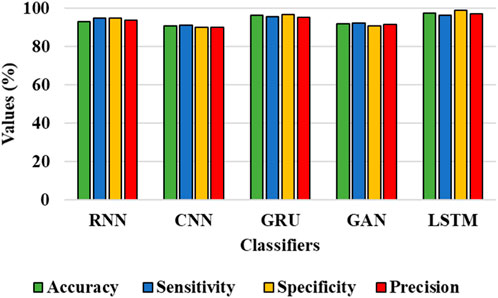

In this section, the effectiveness of the suggested method is assessed in terms of segmentation and classification. The IDRiD and DIARETDB 1 datasets are used to evaluate the suggested method. The efficacy of the LBACS segmentation approach is compared with that of state-of-the-art segmentation methods, and the efficacy of the LSTM classifier is compared with some of the existing deep learning classifiers used for classifying diabetic retinopathy.

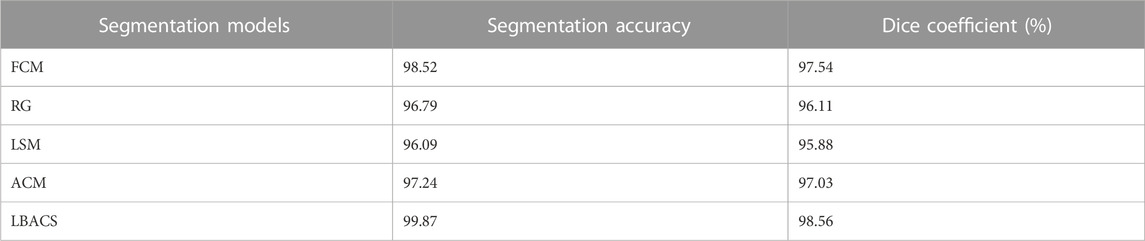

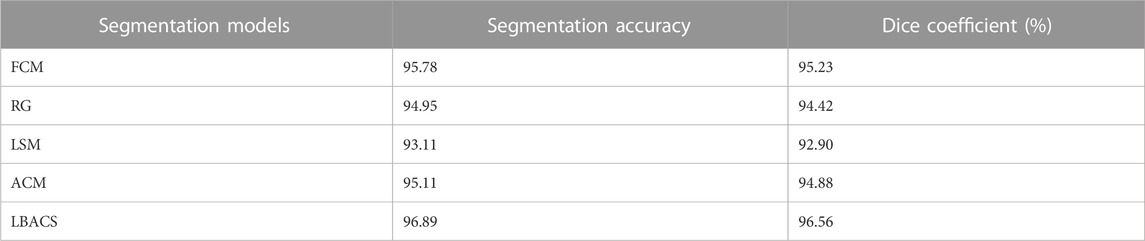

4.3.1 Segmentation analysis

In this sub-section, the performance of LBACS is compared with existing segmentation approaches such as the Level Set Method (LSM), Active Contour Model (ACM), Fuzzy C-Means (FCM) algorithm, and Region Growing (RG). The segmentation accuracy measures how well the proposed approach segments the pre-processed image. In other words, it is the ratio of correctly segmented pixels to the total number of pixels in the pre-processed image. The segmentation accuracy is expressed mathematically as follows:

$$\text{Segmentation accuracy} = \frac{\text{Number of correctly segmented pixels}}{\text{Total number of pixels}} \tag{34}$$

The proposed segmentation approach is compared with the existing segmentation approaches based on segmentation accuracy and Dice coefficient. Table 2 shows the results obtained for the proposed LBACS on the IDRiD dataset, while Table 3 shows the results obtained on the DIARETDB 1 dataset.

The results in Table 2 and Table 3 present the analysis of the suggested segmentation approach on the IDRiD and DIARETDB 1 datasets, respectively. The suggested methodology achieves a segmentation accuracy of 99.87% for the IDRiD dataset and 96.89% for the DIARETDB 1 dataset. These results are higher than those of the existing methods. The improvement is attributed to the LSMIBIF, which improves the boundary condition of the pre-processed image, and to the AACM, which detects the edges of the image obtained from the LSMIBIF. Together they also reduce the size of the visual data and aid classification.
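As a small worked illustration of the two segmentation measures used above, the following sketch computes pixel-wise segmentation accuracy and the Dice coefficient for a pair of binary masks. It assumes masks are given as rows of 0/1 labels; the function names and the toy masks are hypothetical, and in practice NumPy arrays would usually replace the nested lists.

```python
from itertools import chain
from typing import List, Sequence

Mask = Sequence[Sequence[int]]  # rows of 0/1 pixel labels


def _flatten(mask: Mask) -> List[int]:
    return list(chain.from_iterable(mask))


def pixel_accuracy(pred: Mask, truth: Mask) -> float:
    """Fraction of pixels whose predicted label matches the ground truth."""
    p, t = _flatten(pred), _flatten(truth)
    if len(p) != len(t) or not p:
        raise ValueError("masks must be non-empty and have the same size")
    correct = sum(1 for a, b in zip(p, t) if a == b)
    return correct / len(p)


def dice_coefficient(pred: Mask, truth: Mask) -> float:
    """Dice overlap 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    p, t = _flatten(pred), _flatten(truth)
    if len(p) != len(t):
        raise ValueError("masks must have the same size")
    overlap = sum(1 for a, b in zip(p, t) if a == 1 and b == 1)
    total = sum(p) + sum(t)
    # Two empty masks agree perfectly by convention.
    return 2 * overlap / total if total else 1.0


# Toy 2x3 masks for illustration only.
predicted = [[1, 1, 0], [0, 1, 0]]
reference = [[1, 0, 0], [0, 1, 1]]
accuracy = pixel_accuracy(predicted, reference)  # 4 of 6 pixels match
dice = dice_coefficient(predicted, reference)    # 2 * 2 / (3 + 3)
```

Pixel accuracy counts background agreement as well, so it can be high even when a small lesion is missed, which is one reason the Dice coefficient is reported alongside it.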

4.3.2 Classification analysis

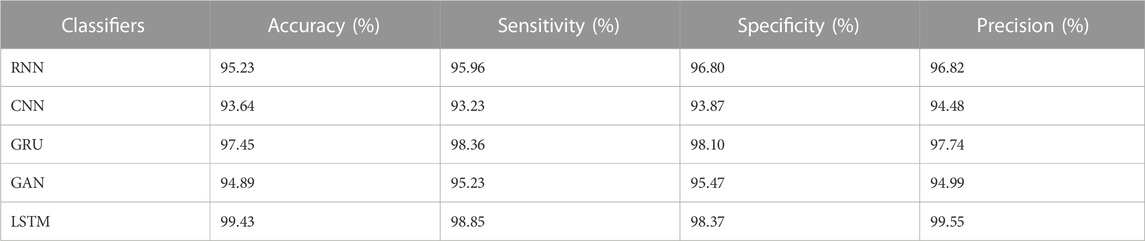

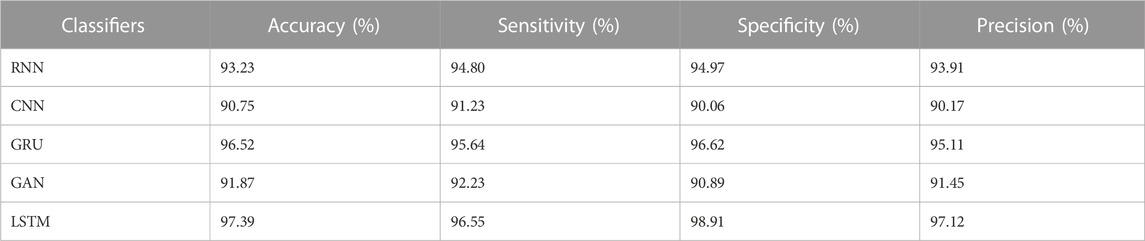

In this sub-section, the efficiency of the classifiers is assessed for the IDRiD and DIARETDB 1 datasets. Table 4 and Table 5 present the classification performance for the IDRiD and DIARETDB 1 datasets, respectively. The classification accuracy is the overall accuracy of the model when classifying the types of DR from images segmented by the proposed approach. It is the proportion of correctly classified samples out of the total number of samples, and it is expressed mathematically in Eq. 35 as follows:

$$\text{Classification accuracy} = \frac{\text{Number of correctly classified samples}}{\text{Total number of samples}} \tag{35}$$

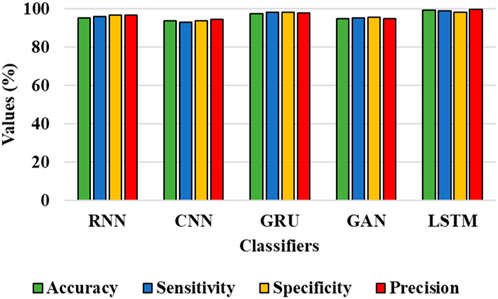

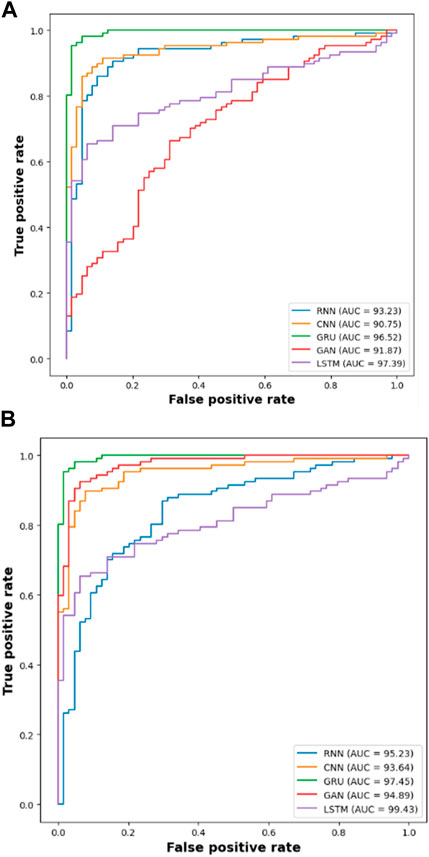

The results in Table 4 and Table 5 show that the LSTM classifier used in this research obtains better classification results across all metrics than the existing classification approaches. The LSTM obtains a classification accuracy of 99.43% on the IDRiD dataset and 97.39% on the DIARETDB 1 dataset. Thus, the LSTM classifier with LBACS segmentation achieves better results owing to the effective segmentation performed by the LSMIBIF and AACM. Figures 7, 8 show the graphical representation of the classifier performance for the IDRiD and DIARETDB 1 datasets. The LSTM classifier used in this research has the ability to capture long-term dependencies and the complex patterns of the image. The LSTM generates visual captions for the image without suffering from the vanishing gradient problem, which supports effective classification and yields better classification results.
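The classification accuracy defined above, together with a multi-class confusion matrix, can be sketched as follows. This is an illustrative, dependency-free version; the function names are hypothetical and the labels are made-up DR grades, not data from either dataset.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def classification_accuracy(y_true: Sequence[Hashable],
                            y_pred: Sequence[Hashable]) -> float:
    """Proportion of samples whose predicted class equals the true class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and equally long")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_matrix(y_true: Sequence[Hashable],
                     y_pred: Sequence[Hashable],
                     labels: Sequence[Hashable]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


# Made-up labels for three hypothetical DR grades (0, 1, 2).
true_grades = [0, 0, 1, 1, 2, 2, 2, 1]
predicted_grades = [0, 1, 1, 1, 2, 2, 0, 1]
accuracy = classification_accuracy(true_grades, predicted_grades)
matrix = confusion_matrix(true_grades, predicted_grades, labels=[0, 1, 2])
```

The diagonal of the matrix holds the correctly classified samples, so the accuracy equals the sum of the diagonal divided by the total count.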

FIGURE 8. Graphical representation for the performance of the classifier for the DIARETDB 1 dataset.

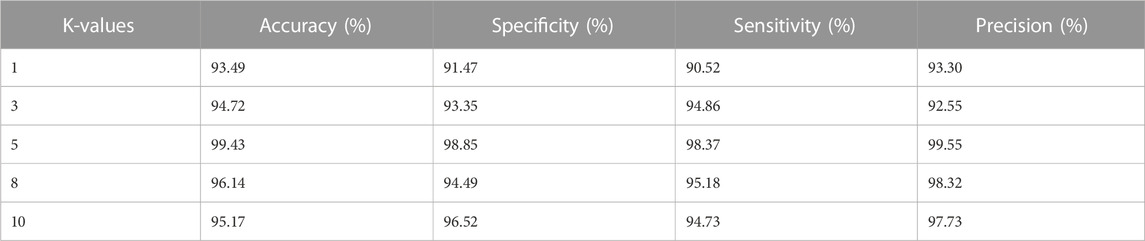

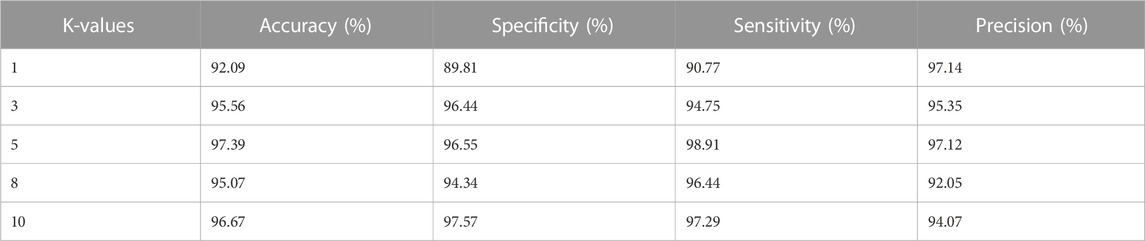

Moreover, the LBACS-LSTM is evaluated with K-fold values of 1, 3, 5, 8, and 10. Table 6 and Table 7 present the analysis of the proposed approach for the different K-fold values.

The results in Table 6 and Table 7 report the accuracy, specificity, sensitivity, and precision of the proposed approach for the IDRiD and DIARETDB 1 datasets. The LBACS-LSTM achieves the best metrics when K is set to 5. With K = 5, the data are split in a ratio of 80% for training and 20% for testing.
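The relation between K = 5 and the 80%/20% split can be checked with a short sketch of K-fold index generation. This is an assumption-laden illustration, not the authors' pipeline: `k_fold_indices` is a hypothetical helper, the shuffling seed is arbitrary, and the sketch requires K of at least 2 (how the paper handles K = 1 is not stated).

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


# With 100 samples and k = 5, every fold trains on 80 and tests on 20.
folds = list(k_fold_indices(100, 5))
split_sizes = [(len(train), len(test)) for train, test in folds]
```

Each sample appears in exactly one test fold, so averaging the per-fold metrics uses every image once for testing.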

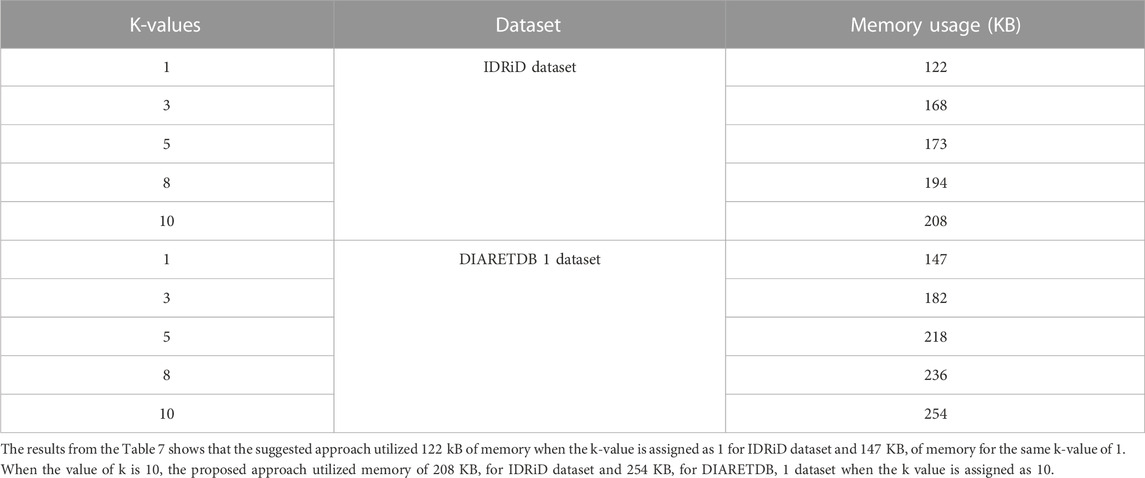

After the evaluation with different K-fold values, the memory usage of the proposed approach during training is evaluated. Table 8 shows the memory usage of the proposed approach for the two datasets, IDRiD and DIARETDB 1.
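The text does not state how memory usage was measured. One possible way to obtain a peak-memory figure for a training routine in Python is the standard-library `tracemalloc` module, sketched below under that assumption. The `peak_memory_mib` helper and the `dummy_training_step` workload are hypothetical stand-ins, and `tracemalloc` only tracks allocations made through Python's allocator, so memory held natively by a deep learning framework would not be counted.

```python
import tracemalloc
from typing import Any, Callable, Tuple


def peak_memory_mib(fn: Callable[..., Any], *args: Any,
                    **kwargs: Any) -> Tuple[Any, float]:
    """Run fn and return (result, peak traced memory in MiB)."""
    tracemalloc.start()
    try:
        result = fn(*args, **kwargs)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak_bytes / (1024 * 1024)


def dummy_training_step(n: int) -> float:
    # Stand-in workload; a real run would call the model's training loop.
    data = [float(i) for i in range(n)]
    return sum(data)


result, peak = peak_memory_mib(dummy_training_step, 100_000)
```

Reporting the peak rather than the final value reflects the largest footprint reached during the call.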

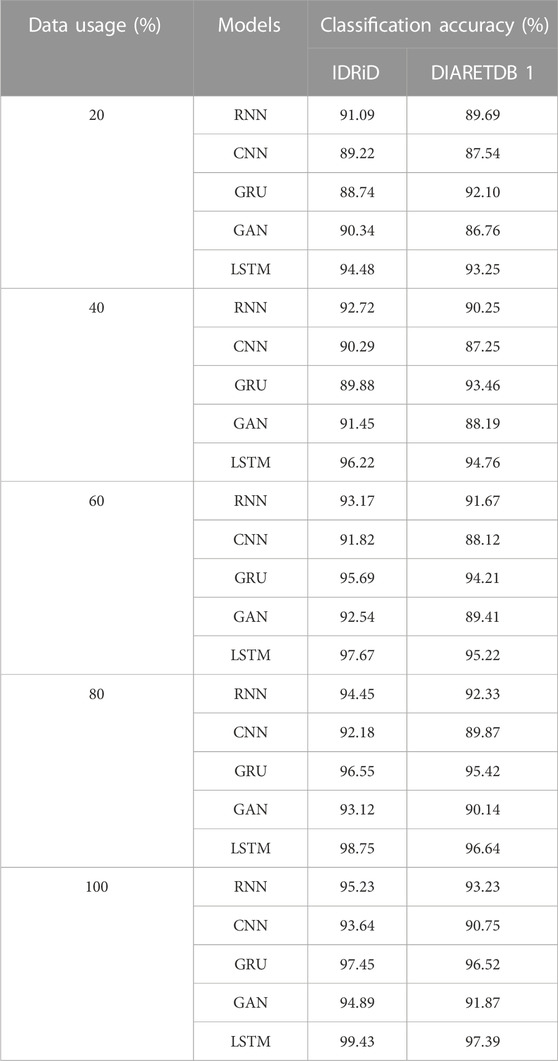

Following the evaluation of memory usage, scalability is assessed by evaluating the proposed method with 20%, 40%, 60%, 80%, and 100% of the data from the IDRiD and DIARETDB 1 datasets. The LSTM classifier used in this research is compared with the existing classification approaches RNN, CNN, GRU, and GAN, using classification accuracy as the evaluation metric. Table 9 presents the results obtained.

The results in Table 9 show that the LSTM classifier used in this research obtains better classification accuracy than the other existing classification approaches. For instance, when 20% of the data is used, the classification accuracy of the proposed approach is 94.48%, whereas the existing RNN, CNN, GRU, and GAN obtain classification accuracies of 91.09%, 89.22%, 88.74%, and 90.34%, respectively. The better result of the LSTM classifier is attributed to the proposed LBACS, which segments the DR images precisely by considering the boundary levels.
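The scalability experiment above relies on drawing 20% to 100% of each dataset. How the subsets were drawn is not described, so the sketch below assumes class-stratified random sampling, which keeps the class proportions of the full dataset in every subset. The `stratified_subset` helper and the toy labels are hypothetical.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence


def stratified_subset(labels: Sequence[Hashable], fraction: float,
                      seed: int = 0) -> List[int]:
    """Return sorted sample indices covering `fraction` of every class."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    chosen: List[int] = []
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        # Keep at least one sample so that no class disappears.
        keep = max(1, round(len(members) * fraction))
        chosen.extend(members[:keep])
    return sorted(chosen)


# Toy label vector with three classes (50, 30, and 20 samples).
toy_labels = [0] * 50 + [1] * 30 + [2] * 20
subset_sizes = [len(stratified_subset(toy_labels, f))
                for f in (0.2, 0.4, 0.6, 0.8, 1.0)]
```

Each classifier would then be trained and evaluated on the same subsets so that the accuracy values at a given percentage are comparable.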

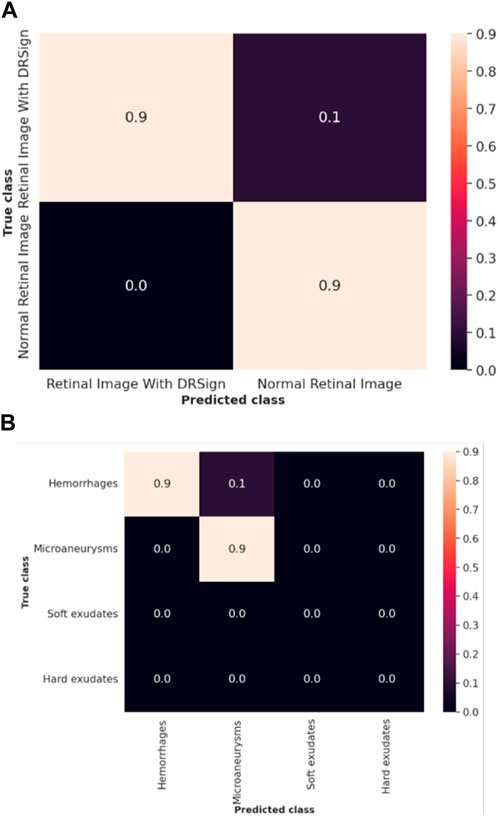

The efficacy of the LSTM classifier used in this research is assessed using the confusion matrix and the Receiver Operating Characteristic (ROC) curve, which are shown in Figure 9 and Figure 10, respectively. The ROC curve summarizes classification performance by plotting the True Positive Rate (TPR) against the False Positive Rate (FPR) as the decision threshold varies.
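To make the construction of an ROC curve concrete, the following sketch sweeps a decision threshold over classifier scores and records the resulting (FPR, TPR) points, then integrates them with the trapezoidal rule to obtain the area under the curve. It covers the binary case only; for multi-class DR grading a one-vs-rest curve per class is a common choice, although the text does not say which variant was used. The function names and the scores are illustrative.

```python
from typing import List, Sequence, Tuple


def roc_points(y_true: Sequence[int],
               scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points from (0, 0) to (1, 1) for binary labels."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both classes must be present")
    # Highest scores first: lowering the threshold admits them in this order.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    previous = None
    for score, label in ranked:
        # Emit a point only when the score changes, so ties move together.
        if previous is not None and score != previous:
            points.append((fp / negatives, tp / positives))
        if label == 1:
            tp += 1
        else:
            fp += 1
        previous = score
    points.append((fp / negatives, tp / positives))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Made-up scores for six samples (1 = positive class).
curve = roc_points([1, 1, 0, 1, 0, 0], [0.9, 0.8, 0.7, 0.6, 0.4, 0.2])
area = auc(curve)
```

A curve that hugs the top-left corner gives an area close to 1, while a classifier that ranks samples at random gives an area near 0.5.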

4.4 Comparative analysis

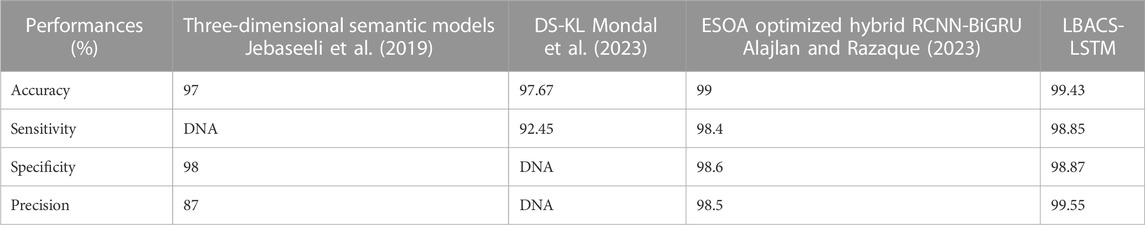

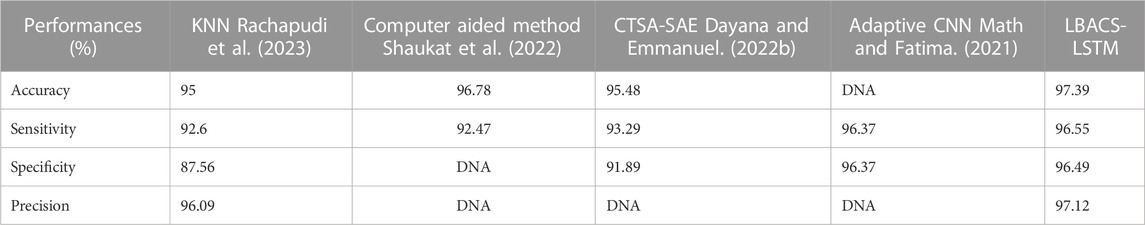

This section compares the proposed LBACS-LSTM with existing approaches: the three-dimensional semantic model (Jebaseeli et al., 2019), KNN (Rachapudi et al., 2023), the computer-aided method (Shaukat et al., 2022), CTSA-SAE (Dayana and Emmanuel, 2022a; Dayana and Emmanuel, 2022b), DS-KL (Mondal et al., 2023), the ESOA optimized hybrid RCNN-BiGRU (Alajlan and Razaque, 2023), and Adaptive CNN (Math and Fatima, 2021). Table 10 presents the comparative analysis for the IDRiD dataset and Table 11 presents the comparative analysis for the DIARETDB 1 dataset.

The results in Table 10 and Table 11 show that the proposed LBACS-LSTM achieves better performance across all metrics than the existing three-dimensional semantic model (Jebaseeli et al., 2019), KNN (Rachapudi et al., 2023), the computer-aided method (Shaukat et al., 2022), CTSA-SAE (Dayana and Emmanuel, 2022b), DS-KL (Mondal et al., 2023), the ESOA optimized hybrid RCNN-BiGRU (Alajlan and Razaque, 2023), and Adaptive CNN (Math and Fatima, 2021). The accuracy of the proposed method is 99.43% for the IDRiD dataset and 97.39% for the DIARETDB 1 dataset. Values that are not available are marked as DNA (i.e., Data Not Available). The better result of the proposed approach is attributed to the LSMIBIF, which improves the boundary condition of the pre-processed image, and to the AACM, which detects the edges of the image obtained from the LSMIBIF, reduces the size of the visual data, and aids classification.

4.5 Discussion

This research addresses the precise segmentation and classification of diabetic retinopathy. The proposed LBACS-LSTM is evaluated with two datasets, namely, IDRiD and DIARETDB 1. The proposed segmentation model is evaluated based on segmentation accuracy and Dice coefficient and is compared with the existing segmentation models FCM, RG, LSM, and ACM. For instance, for the IDRiD dataset, the segmentation accuracy of the proposed approach is 98.87%, whereas the existing FCM, RG, LSM, and ACM obtain segmentation accuracies of 98.52%, 96.79%, 96.09%, and 97.24%, respectively. The LSTM classifier used in this research is compared with the existing classification approaches RNN, CNN, GRU, and GAN. For the DIARETDB 1 dataset, the LSTM classifier obtains a classification accuracy of 97.39%, whereas the existing RNN, CNN, GRU, and GAN obtain classification accuracies of 93.23%, 90.75%, 96.52%, and 91.87%, respectively.

In the comparative analysis, the proposed approach is compared with the three-dimensional semantic model and DS-KL for the IDRiD dataset, and with KNN, the computer-aided method, and CTSA-SAE for the DIARETDB 1 dataset. The LBACS-LSTM obtains an overall accuracy of 99.43% for the IDRiD dataset, whereas the existing three-dimensional semantic model and DS-KL obtain overall accuracies of 97% and 97.67%, respectively. For the DIARETDB 1 dataset, the proposed method obtains an overall accuracy of 97.39%, which is higher than KNN, the computer-aided method, and CTSA-SAE, with accuracies of 95%, 96.78%, and 95.48%, respectively. The better result is attributed to the improved boundary condition of the pre-processed image obtained using the LSMIBIF and the AACM.

The existing approaches were unable to detect the edges of the pre-processed image, which resulted in poor segmentation accuracy. In contrast, the proposed approach partitions the image into segments and detects DR in each individual segment. This process improves segmentation performance, including at the edges of the images. Moreover, the suggested approach detects the edges of the image obtained from the LSMIBIF and reduces the size of the visual data, which further reduces the complexity of classifying the DR images.

5 Conclusion

In this research, diabetic retinopathy images are segmented and classified into the various classes of diabetic retinopathy. The LBACS is proposed to perform an effective segmentation, which helps to reduce the complexity of segmenting the images. After data acquisition, pre-processing is performed to remove noise and to enhance the color and luminosity of the image. Then, the proposed LBACS segments the pre-processed image using the LSMIBIF with the improved boundary condition, and the AACM detects the edges of the image obtained from the LSMIBIF, while reducing the size of the visual data and aiding classification. The features are extracted using GLCM, HOG, and LTP. Finally, the various classes of diabetic retinopathy are categorized with the help of LSTM. The accuracy of the proposed LBACS-LSTM for the IDRiD and DIARETDB 1 datasets is 99.43% and 97.39%, respectively. Similarly, the sensitivity of the proposed approach is 98.85% for the IDRiD dataset and 96.55% for the DIARETDB 1 dataset. However, the segmentation accuracy of the proposed approach decreases when images with extreme noise are given as input.

5.1 Future scope

The experimental results show that the proposed approach obtains better results in terms of segmentation and classification. However, the absence of feature selection probably diminishes the overall performance of the model in classifying DR images. Therefore, optimization-based feature selection will be performed in future work to obtain better classification results.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

AB: Conceptualization, Formal Analysis, Methodology, Resources, Software, Visualization, Writing–original draft, Writing–review and editing. RP: Formal Analysis, Investigation, Methodology, Writing–review and editing. MAb: Conceptualization, Methodology, Project administration, Software, Visualization, Writing–original draft. SA: Funding acquisition, Investigation, Validation, Writing–review and editing. MAw: Investigation, Methodology, Project administration, Visualization, Writing–original draft. KR: Investigation, Methodology, Resources, Validation, Writing–review and editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This project is funded by King Saud University, Riyadh, Saudi Arabia.

Acknowledgments

Researchers Supporting Project number (RSP2024R167), King Saud University, Riyadh, Saudi Arabia.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alajlan, A. M., and Razaque, A. (2023). ESOA-HGRU: egret swarm optimization algorithm-based hybrid gated recurrent unit for classification of diabetic retinopathy. Artif. Intell. Rev., 1–30. doi:10.1007/s10462-023-10532-1

Alam, M., Zhao, E. J., Lam, C. K., and Rubin, D. L. (2023). Segmentation-assisted fully convolutional neural network enhances deep learning performance to identify proliferative diabetic retinopathy. J. Clin. Med. Res. 12 (1), 385. doi:10.3390/jcm12010385

Ali, G., Dastgir, A., Iqbal, M. W., Anwar, M., and Faheem, M. (2023). A hybrid convolutional neural network model for automatic diabetic retinopathy classification from fundus images. IEEE J. Transl. Eng. Health Med. 11, 341–350. doi:10.1109/JTEHM.2023.3282104

Atli, I., and Gedik, O. S. (2021). Sine-Net: a fully convolutional deep learning architecture for retinal blood vessel segmentation. Eng. Sci. Technol. Int. J. 24 (2), 271–283. doi:10.1016/j.jestch.2020.07.008

Atwany, M. Z., Sahyoun, A. H., and Yaqub, M. (2022). Deep learning techniques for diabetic retinopathy classification: a survey. IEEE Access 10, 28642–28655. doi:10.1109/ACCESS.2022.3157632

Beham, A. R., and Thanikaiselvan, V. (2023). An optimized deep-learning algorithm for the automated detection of diabetic retinopathy. Soft Comput. doi:10.1007/s00500-023-08930-2

Chandni, P. R. R., Justin, J., and Vanithamani, R. (2022). “Fundus image enhancement using EAL-CLAHE technique,” in Advances in data and information sciences: proceedings of ICDIS 2021 (Singapore: Springer), 613–624. doi:10.1007/978-981-16-5689-7_54

Chen, Y., Xu, S., Long, J., and Xie, Y. (2023). DR-Net: diabetic Retinopathy detection with fusion multi-lesion segmentation and classification. Multimed. Tools Appl. 82 (17), 26919–26935. doi:10.1007/s11042-023-14785-4

Da Rocha, D. A., Ferreira, F. M. F., and Peixoto, Z. M. A. (2022). Diabetic retinopathy classification using VGG16 neural network. Res. Biomed. Eng. 38 (2), 761–772. doi:10.1007/s42600-022-00200-8

Dayana, A. M., and Emmanuel, W. R. S. (2022a). An enhanced swarm optimization-based deep neural network for diabetic retinopathy classification in fundus images. Multimed. Tools Appl. 81 (15), 20611–20642. doi:10.1007/s11042-022-12492-0

Dayana, A. M., and Emmanuel, W. R. S. (2022b). Deep learning enabled optimized feature selection and classification for grading diabetic retinopathy severity in the fundus image. Neural Comput. Appl. 34 (21), 18663–18683. doi:10.1007/s00521-022-07471-3

Dayana, A. M., Emmanuel, W. R. S., and Linda, C. H. (2023). Feature fusion and optimization integrated refined deep residual network for diabetic retinopathy severity classification using fundus image. Multimed. Syst. 29 (3), 1629–1650. doi:10.1007/s00530-023-01078-x

Ewees, A. A., Al-qaness, M. A. A., Abualigah, L., and Elaziz, M. A. (2022). HBO-LSTM: optimized long short term memory with heap-based optimizer for wind power forecasting. Energy Convers. Manage. 268, 116022. doi:10.1016/j.enconman.2022.116022

Garifullin, A., Lensu, L., and Uusitalo, H. (2021). Deep Bayesian baseline for segmenting diabetic retinopathy lesions: advances and challenges. Comput. Biol. Med. 136, 104725. doi:10.1016/j.compbiomed.2021.104725

González-Ruiz, V., Fernández-Fernández, M. R., and Fernández, J. J. (2023). Structure-preserving Gaussian denoising of FIB-SEM volumes. Ultramicroscopy 246, 113674. doi:10.1016/j.ultramic.2022.113674

Guo, Y., and Peng, Y. (2022). CARNet: cascade attentive RefineNet for multi-lesion segmentation of diabetic retinopathy images. Complex Intell. Syst. 8 (2), 1681–1701. doi:10.1007/s40747-021-00630-4

Hasan, M. K., Alam, M. A., Elahi, M. T. E., Roy, S., and Martí, R. (2021). DRNet: segmentation and localization of optic disc and Fovea from diabetic retinopathy image. Artif. Intell. Med. 111, 102001. doi:10.1016/j.artmed.2020.102001

Huang, S., Li, J., Xiao, Y., Shen, N., and Xu, T. (2022). RTNet: relation transformer network for diabetic retinopathy multi-lesion segmentation. IEEE Trans. Med. Imaging 41 (6), 1596–1607. doi:10.1109/TMI.2022.3143833

Jaskirat, K., Mittal, D., Malebary, S., Nayak, S. R., Kumar, D., Kumar, M., et al. (2023). Automated detection and segmentation of exudates for the screening of background retinopathy. J. Healthc. Eng. 2023, 4537253. doi:10.1155/2023/4537253

Jebaseeli, T. J., Durai, C. A. D., and Peter, J. D. (2019). Retinal blood vessel segmentation from diabetic retinopathy images using tandem PCNN model and deep learning based SVM. Optik 199, 163328. doi:10.1016/j.ijleo.2019.163328

Kadan, A. B., and Subbian, P. S. (2021). Optimized hybrid classifier for diagnosing diabetic retinopathy: iterative blood vessel segmentation process. Int. J. Imaging Syst. Technol. 31 (2), 1009–1033. doi:10.1002/ima.22482

Kaggle (2023). Link for DIARETDB 1 dataset. Available at: https://www.kaggle.com/datasets/nguyenhung1903/diaretdb1-standard-diabetic-retinopathy-database.

Kaur, J., and Kaur, P. (2022). Automated computer-aided diagnosis of diabetic retinopathy based on segmentation and classification using K-nearest neighbor algorithm in retinal images. Comput. J. 66 (8), 2011–2032. doi:10.1093/comjnl/bxac059

Li, Y., Zeghlache, R., Brahim, I., Xu, H., Tan, Y., Conze, P.-H., et al. (2022). “Segmentation, classification, and quality assessment of UW-octa images for the diagnosis of diabetic retinopathy,” in MIDOG 2022, DRAC 2022: Mitosis Domain Generalization and Diabetic Retinopathy Analysis, Singapore, September 18-22 2022 (Cham: Springer), 146–160. doi:10.1007/978-3-031-33658-4

Maaliw, R. R., Mabunga, Z. P., Veluz, M. R. D. D., Alon, A. S., Lagman, A. C., Garcia, M. B., et al. (2023). “An enhanced segmentation and deep learning architecture for early diabetic retinopathy detection,” in 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 08-11 March 2023 (IEEE). doi:10.1109/CCWC57344.2023.10099069

Math, L., and Fatima, R. (2021). Adaptive machine learning classification for diabetic retinopathy. Multimed. Tools Appl. 80 (4), 5173–5186. doi:10.1007/s11042-020-09793-7

Mondal, S. S., Mandal, N., Singh, K. K., Singh, A., and Izonin, I. (2023). EDLDR: an ensemble deep learning technique for detection and classification of diabetic retinopathy. Diagnostics 13 (1), 124. doi:10.3390/diagnostics13010124

Nallasivan, G., Vargheese, M., Revathi, S., and Arun, R. (2021). Diabetic retinopathy segmentation and classification using deep learning approach. Ann. Rom. Soc. Cell Biol. 25, 13594–13605. Available from: https://www.annalsofrscb.ro/index.php/journal/article/view/4392.

Nikoloulopoulou, N., Perikos, I., Daramouskas, I., Makris, C., Treigys, P., and Hatzilygeroudis, I. (2023). A convolutional autoencoder approach for boosting the specificity of retinal blood vessels segmentation. Appl. Sci. 13 (5), 3255. doi:10.3390/app13053255

Özçelik, Y. B., and Altan, A. (2023). Overcoming nonlinear dynamics in diabetic retinopathy classification: a robust AI-based model with chaotic swarm intelligence optimization and recurrent long short-term memory. Fractal Fract. 7 (8), 598. doi:10.3390/fractalfract7080598

Patel, R. K., and Kashyap, M. (2023). Automated screening of glaucoma stages from retinal fundus images using BPS and LBP based GLCM features. Int. J. Imaging Syst. Technol. 33 (1), 246–261. doi:10.1002/ima.22797

Porwal, P., Pachade, S., Kamble, R., Kokare, M., Deshmukh, G., Sahasrabuddhe, V., et al. (2018). Indian diabetic retinopathy image dataset (IDRiD): a database for diabetic retinopathy screening research. Data 3 (3), 25. doi:10.3390/data3030025

Pundikal, M., and Holi, M. S. (2022). Microaneurysms detection using grey wolf optimizer and modified K-nearest neighbor for early diagnosis of diabetic retinopathy. Int. J. Intell. Eng. Syst. 15 (1), 130–140. doi:10.22266/ijies2022.0228.13

Rachapudi, V., Rao, K. S., Rao, T. S. M., Dileep, P., and Roy, T. L. D. (2023). Diabetic retinopathy detection by optimized deep learning model. Multimed. Tools Appl. 82 (18), 27949–27971. doi:10.1007/s11042-023-14606-8

Sandhya, S. G., Suhasini, A., and Hu, Y.-C. (2022). Pixel-boundary-dependent segmentation method for early detection of diabetic retinopathy. Math. Probl. Eng. 2022, 1–12. doi:10.1155/2022/1133575

Shanthini, A., Manogaran, G., Vadivu, G., Kottilingam, K., Nithyakani, P., and Fancy, C. (2021). Threshold segmentation based multi-layer analysis for detecting diabetic retinopathy using convolution neural network. J. Ambient. Intell. Hum. Comput. doi:10.1007/s12652-021-02923-5

Shaukat, N., Amin, J., Sharif, M., Azam, F., Kadry, S., and Krishnamoorthy, S. (2022). Three-dimensional semantic segmentation of diabetic retinopathy lesions and grading using transfer learning. J. Personalized Med. 12 (9), 1454. doi:10.3390/jpm12091454

Shaukat, N., Amin, J., Sharif, M. I., Sharif, M. I., Kadry, S., and Sevcik, L. (2023). Classification and segmentation of diabetic retinopathy: a systemic review. Appl. Sci. 13 (5), 3108. doi:10.3390/app13053108

Sule, O. O. (2022). A survey of deep learning for retinal blood vessel segmentation methods: taxonomy, trends, challenges and future directions. IEEE Access 10, 38202–38236. doi:10.1109/ACCESS.2022.3163247

Sundaram, S., Selvamani, M., Raju, S. K., Ramaswamy, S., Islam, S., Cha, J.-H., et al. (2023). Diabetic retinopathy and diabetic macular edema detection using ensemble based convolutional neural networks. Diagnostics 13 (5), 1001. doi:10.3390/diagnostics13051001

Udayaraju, P., Murthy, K. S., Jeyanthi, P., Raju, B. V. S., Rajasri, T., and Ramadevi, N. (2023). A combined U-Net and multi-class support vector machine learning models for diabetic retinopathy macula edema segmentation and classification DME. Soft Comput. doi:10.1007/s00500-023-08690-z

Ullah, Z., Usman, M., Latif, S., Khan, A., and Gwak, J. (2023). SSMD-UNet: semi-supervised multi-task decoders network for diabetic retinopathy segmentation. Sci. Rep. 13, 9087. doi:10.1038/s41598-023-36311-0

Usman, T. M., Saheed, Y. K., Ignace, D., and Nsang, A. (2023). Diabetic retinopathy detection using principal component analysis multi-label feature extraction and classification. Int. J. Cognitive Comput. Eng. 4, 78–88. doi:10.1016/j.ijcce.2023.02.002

Xu, Y., Zhou, Z., Li, X., Zhang, N., Zhang, M., and Wei, P. (2021). Ffu-net: feature fusion u-net for lesion segmentation of diabetic retinopathy. Biomed. Res. Int. 2021, 6644071. doi:10.1155/2021/6644071

Yan, H., Xie, J., Zhu, D., Jia, L., and Guo, S. (2022). MSLF-Net: a multi-scale and multi-level feature fusion net for diabetic retinopathy segmentation. Diagnostics 12 (12), 2918. doi:10.3390/diagnostics12122918

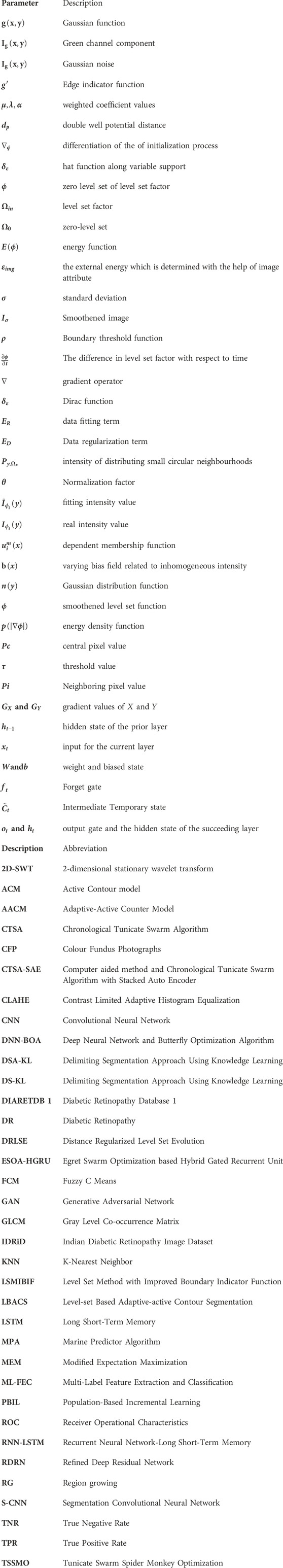

Glossary

Keywords: adaptive-active counter model, diabetic retinopathy, gray level co-occurrence matrix, level set method with improved boundary indicator function, long short term memory

Citation: Bhansali A, Patra R, Abouhawwash M, Askar SS, Awasthy M and Rao KBVB (2023) Level-set based adaptive-active contour segmentation technique with long short-term memory for diabetic retinopathy classification. Front. Bioeng. Biotechnol. 11:1286966. doi: 10.3389/fbioe.2023.1286966

Received: 31 August 2023; Accepted: 06 November 2023;

Published: 19 December 2023.

Edited by:

Parameshachari B. D, Nitte Meenakshi Institute of Technology, India

Reviewed by:

Mohammed Rashad Baker, University of Kirkuk, Iraq

Luca Di Nunzio, Policlinico Tor Vergata, Italy

Sathyanarayana N, Vemana Institute of Technology, India

Copyright © 2023 Bhansali, Patra, Abouhawwash, Askar, Awasthy and Rao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed Abouhawwash, abouhaww@msu.edu
