Background

Child maltreatment, encompassing physical, sexual, and emotional abuse, and neglect, is an urgent public health problem in the USA. Annually, almost 8 million children are reported to child protective services (CPS) for potential child maltreatment, and nearly three quarters of a million children are determined to be victims of abuse or neglect [1]. Child maltreatment can have a devastating impact on individual health, significantly increasing the risk of cognitive impairment [Reference Spratt, Friedenberg and LaRosa2,Reference Cowell, Cicchetti, Rogosch and Toth3] and mental health disorders [Reference Cowell, Cicchetti, Rogosch and Toth3], including behavioral problems [Reference Spratt, Friedenberg and LaRosa2,Reference Muniz, Fox, Miley, Delisi, Cigarran and Birnbaum4]. The adverse consequences of child maltreatment extend into adulthood by increasing individual risk for mental health issues and chronic health problems over one’s life course [Reference Soares, Howe and Heron5,Reference Ho, Celis-Morales and Gray6].

Over 12 million children in the USA, or approximately 60% of children under 5 years, are cared for by a nonparent on a regular basis (e.g., in a childcare center, or home-based care by a nonrelative) [7]. Young children are at highest risk for maltreatment and abuse-related fatalities [1]. Early childcare professionals (ECPs) spend substantial time with young children, often more than any other adult outside of their family. Therefore, this group is well positioned to identify signs of potential child maltreatment in young children. Further, ECPs are specifically identified as mandated reporters in all but three states (Indiana, New Jersey, and Wyoming), and in those three states all competent adults are designated as mandated reporters [8]. ECPs have a legal obligation to report suspected maltreatment. Yet, ECPs constitute less than one percent of referrals to CPS [1], suggesting that ECPs underreport child maltreatment [1,Reference Webster, O’Toole, O’Toole and Lucal9,Reference Greco, Guilera and Pereda10]. Underreporting represents a potentially critical gap to ensure child safety, given that proper CPS intervention following a report remains a key deterrent for child maltreatment recurrence and failure to report often results in continued or worsening abuse [Reference Vieth11,Reference McKenna12].

iLookOut for Child Abuse (iLookOut) was designed to provide online education to improve ECP’s knowledge and awareness of signs and symptoms of child maltreatment, reporting requirements, and practical approaches to understanding how to use reasonable suspicion as a threshold for reporting child maltreatment. Multiple studies have shown that iLookOut is effective at changing knowledge and attitudes related to child maltreatment, both in randomized controlled trials and real-world settings [Reference Mathews, Yang, Lehman, Mincemoyer, Verdiglione and Levi13–Reference Yang, Panlilio and Verdiglione16].

However, these same studies indicate that not all participants demonstrate the same knowledge gains. Differential knowledge gain may be due to variation in how specific learners experienced the training. For example, how acceptable or appropriate they found the training. Implementation outcomes (e.g., acceptability, appropriateness, and feasibility) are indicators of a program’s success. Studies have documented these as essential features of a program’s ability to affect training outcomes (e.g., to improve knowledge) [Reference Durlak and DuPre17]. Importantly, implementation outcomes indicate how well a program has been deployed and received by its targeted end user, reflecting end users’ experience of the program’s relevance, suitability, delivery methods, and appropriateness of content [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18].

Implementation science is still a relatively nascent field, concerned with understanding the translation of evidence-based programs and interventions into routine practice and identifying and addressing barriers that slow the uptake or impact the efficacy of evidence-based programs in real-world settings [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18]. Proctor et al. [Reference Proctor, Silmere and Raghavan19] established a conceptual framework to define and operationalize an approach to evaluate a program’s implementation success. Specifically, they outlined a list of “implementation outcomes” distinct from clinical or training outcomes (e.g., knowledge gain), including measurement of a program’s acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration, and sustainability. This framework provides a useful approach to assess translation gaps of evidence-based programs and, ultimately, to provide guidance about how a program may be improved to better reach its goals. Additionally, implementation outcomes have been shown to differ based on setting as well as user characteristics [Reference Dzewaltowski, Estabrooks, Klesges, Bull and Glasgow20,Reference Fell, Glasgow, Boles and McKay21].

iLookOut’s Core Training was developed to more effectively engage ECPs, compared to alternative mandated reporter trainings, through the use of a multimedia learning environment, video-based storylines, and game-based techniques [Reference Levi, Belser and Kapp22,Reference Kapp, Dore and Fiene23]. It is important to understand how these training approaches have been received by the end user to identify whether implementation outcomes for iLookOut help to explain the program’s efficacy for increasing knowledge. Important information about the efficacy of these approaches can be used to improve similar programs and aspects of iLookOut for specific users.

Previous work investigating the efficacy of iLookOut found that training outcomes differ by learner characteristics. Specifically, that learner age, race, education, and employment status impact pre- to post-training changes in knowledge and attitudes [Reference Yang, Panlilio and Verdiglione16]. Here, we aim to better understand whether specific program components and implementation outcomes differ for users based on professional (e.g., years of experience as a childcare worker, previous mandated reporter training) or demographic characteristics (e.g., age, gender, and educational attainment). Specifically, we will examine how the learner experience differs by user, whether these different experiences relate to efficacy of iLookOut, and ultimately, how iLookOut’s approach or content can be improved to increase learning for specific user groups. The present analyses include examining if prior training is related to acceptability of program components, how user profiles impact acceptability and knowledge gain, and whether targeted content based on pretest knowledge could yield shorter and more relevant training. Improved understanding of how user characteristics impact implementation and training outcomes could potentially enable individualized and responsive content, given that web-based trainings can be tailored (using branching logic) more easily than in-person training [Reference Bartley and Golek24,Reference Wild, Griggs and Downing25]. The present analysis also will examine the impact of iLookOut’s interactive approach to user engagement on perceived acceptability and appropriateness. Though implementation outcomes are commonplace for ensuring that evidence-based interventions are effective [Reference Matthews and Hudson26,Reference Sun, Tsai, Finger, Chen and Yeh27], few programs rigorously test implementation processes with the goal of understanding which program components are most effective for which users [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18,Reference Kilbourne, Glasgow and Chambers28]. In particular, mandated reporter education programs are seldom evaluated and often lack an evidence-based approach [Reference Carter, Bannon, Limbert, Docherty and Barlow29].

The current study sought to evaluate the implementation outcomes for more than 12,000 ECPs in Pennsylvania who completed the iLookOut Core Training. We tested whether learner demographic and professional characteristics were differentially associated with acceptability and appropriateness, and whether these explain (mediate) subsequent indicators of training effectiveness (e.g., gains in knowledge). Our overarching goal is to examine “what works for whom” with iLookOut in order to identify strategies for 1) optimizing outcomes for learners of iLookOut and 2) providing generalizable insights (for related web-based interventions) regarding what does (and does not) influence outcomes for various learners.

Methods

Participants

Participants were ECPs in Pennsylvania who completed the iLookOut Core Training as part of an open-enrollment trial. Participants were not actively recruited to the study; however, iLookOut is one of more than a dozen approved mandated reporter trainings which are required for all ECPs in Pennsylvania. iLookOut is listed on the Pennsylvania Department of Human Services website and is available free of charge. Those who completed the training from November 2014 to December 2018 were prospectively enrolled into the current study (n = 12,705). Of the 12,705 who were enrolled, all participants contributed demographic and professional characteristic data (Table 1); 12,409 (98%) completed the knowledge measure both pre- and post-training; 12,693 (99%) contributed evaluation data following the training (item-level missingness for evaluation data is indicated in Table 2). No remuneration was provided for completion; however, ECPs were able to earn three hours of professional credit for completing the program. ECPs provided informed consent online prior to participating.

Table 1. Learner characteristics and knowledge change (n = 12,705)

Note: CDA = Child Development Associate credential; GED = General Educational Development test.

Other race/ethnicities are: American Indian/Alaska Native, Native Hawaiian/Pacific Islander, and self-reported “other.”

Post-test knowledge score: n = 12,424; pretest knowledge score: n = 12,690; no missing demographic data.

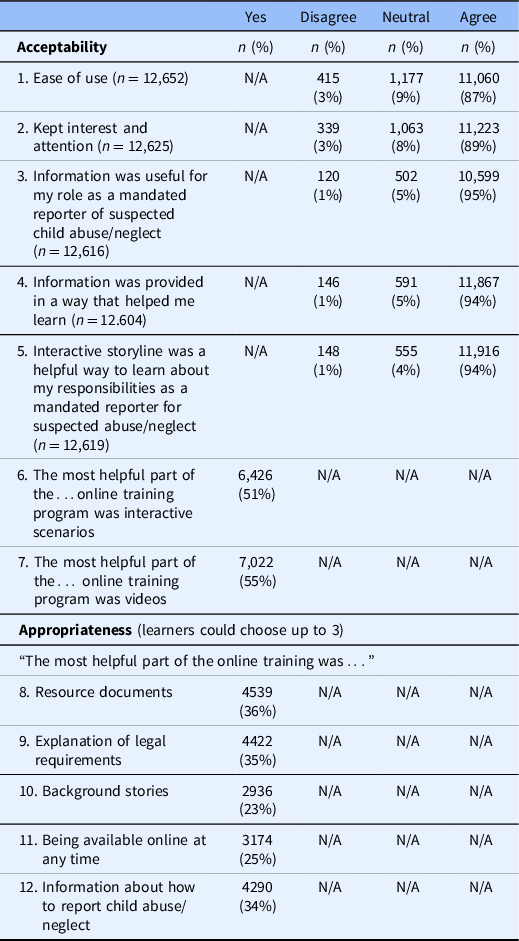

Table 2. Item-level indicators of acceptability and appropriateness of iLookOut

Notes: Program evaluation questions were given following the knowledge test after the training. Responses for each item were included for n = 12,705 unless otherwise noted.

iLookOut training

The iLookOut Core Training is a free, online, evidence-based mandated reporter training. It was created in 2013–2014 by a multidisciplinary team of experts (in child abuse, instructional design, pediatrics, early childhood education, ethics, and online learning) under the aegis of the Center for the Protection of Children at the Penn State Children’s Hospital. iLookOut uses an interactive, video-based storyline involving five children to engage learners with the goal of improving knowledge related to child maltreatment and changing ECP behaviors for recognizing, responding to, and reporting suspected child maltreatment. As events unfold, the learner receives didactic information and engages in various interactive exercises related to the different forms of child maltreatment. Training content includes red flags that should raise concern, risk factors, protective factors, strategies for supporting children and their families, as well as the process and legal requirements for reporting suspected child abuse. The goal is to engage learners in real-life stories which empower them to practice and implement what they have learned to help protect and support children who may be experiencing child maltreatment [Reference Levi, Belser and Kapp22].

Measures

Knowledge. Knowledge was measured using a 23-item, true/false, expert-validated instrument previously described [Reference Mathews, Yang, Lehman, Mincemoyer, Verdiglione and Levi13]. It measures individuals’ knowledge about what constitutes child maltreatment, risk factors for maltreatment, and legal requirements for reporting suspected maltreatment. Correct responses are scored as 1 and incorrect as 0 to provide a sum score for the 23-item knowledge measure, with a range of 0–23; higher scores indicate more knowledge about child maltreatment. Pre- and post-training question items were identical, but to reduce recall bias, their sequencing orders were changed between the pre- and post-tests. Knowledge change was the primary outcome used in models, calculated by subtracting pretraining score from post-training score. Change in knowledge score was included as a continuous variable in all models.

Demographics. Demographic and professional characteristics were collected pretraining using learner self-report, including gender, age, education, race/ethnicity, years of experience working as a childcare professional, learner’s work setting (e.g., religious facility, commercial center), and prior mandated reporter training. Each of these variables was dichotomized (dummy coded) prior to inclusion in analytic models.

Implementation outcomes. A post-training evaluation elicited feedback from learners about implementation outcomes. Constructs of acceptability and appropriateness were chosen based on Proctor’s conceptual framework 19, which aimed to define and operationalize a taxonomy for evaluating program implementation and success. Proctor defines Appropriateness as “the perceived fit, relevance, or compatibility” of a program for the end user and acceptability as the perception that it is “agreeable, palatable, or satisfactory” [Reference Proctor, Silmere and Raghavan19]. Learners reported on acceptability of the program, responding on a Likert scale (0 = strongly disagree to 6 = strongly agree) regarding whether the program was easy to use, if it kept their interest and attention, if information provided was useful to their role as a mandated reporter, whether information was provided in a way to help them learn, if the interactive storyline was helpful to learn about their responsibilities as a mandated reporter; and they indicated a yes or no to whether interactive scenarios or videos in the program were helpful. Appropriateness was reported as yes (1) or no (0) for whether each of the following were helpful: resource documents, explanation of legal requirements, background stories, the 24/7 online availability, or information on how to report child maltreatment. Likert response items were collapsed into three categories for inclusion in the final model: agree, neutral, and disagree based on responses.

Analytic approach

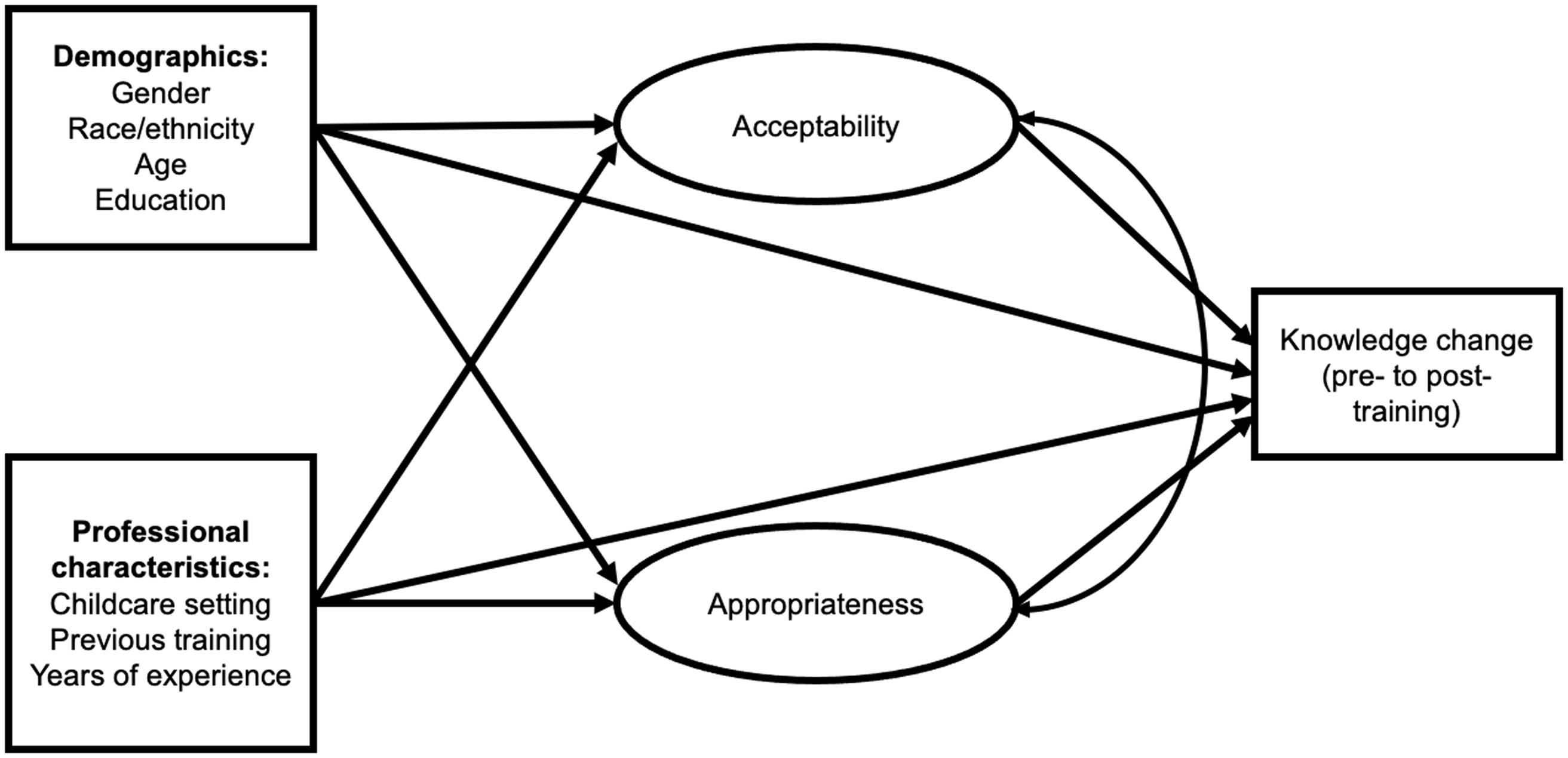

Guided by Proctor’s model on implementation outcomes 19, the current study employed a multiple indicator multiple cause (MIMIC) structural equation modeling (SEM) to examine the mediating effects of implementation outcomes on ECP learner demographic/professional characteristics and knowledge change. A measurement model was specified using confirmatory factor analysis (CFA) to estimate the factor structure of acceptability and appropriateness, based on observed variables (described in Table 2) and to verify the underlying latent construct. Once confirmed by the CFA, latent factors for both acceptability and appropriateness were included in a MIMIC structural model, which estimates the effect exogenous variables have on latent variables that are not directly observable [Reference Krishnakumar and Nagar30]. Acceptability and appropriateness were included concurrently in the MIMIC SEM to allow estimation of interrelationships between both latent variables (implementation outcomes) and observed variables (demographic/professional characteristics and knowledge change; Fig. 1). Once an appropriate measurement model was selected, the full latent variable path model (i.e., MIMIC SEM) was specified and included all hypothesized direct and indirect relationships, adjusting for baseline knowledge scores (see Fig. 1 for a conceptual diagram of the full MIMIC SEM model). MIMIC model was used in the main analysis of the study in order to examine the effects of demographic and professional characteristics as covariates in the CFA and SEM models [Reference Muthen. Muthén and Muthén31]. The MIMIC CFA and SEM analyses were conducted using Mplus v.8.5 using diagonally weighted least squares estimation with theta parameterization to account for inclusion of categorical variables in the model [Reference Muthen. Muthén and Muthén31].

Figure 1. Conceptual diagram of the full structural equation model estimating direct and indirect effects.

Model fit was evaluated using root-mean-square error of approximation with its 95% confidence interval (RMSEA ≤0.06), along with comparative fit index (CFI ≥0.90) and model χ2 [Reference tze and Bentler32]. In lieu of a Sobel test, which is inappropriate with violations of normality assumptions, we used bias-corrected bootstrapped confidence intervals to examine the significance of the indirect effects within the model [Reference Preacher and Hayes33,Reference Hayes. Hayes, Preacher, Myers, Bucy and Lance Holbert34]. Additionally, prior to running mediation models, we evaluated missingness in our dataset to test whether data employed in the covariance matrix were missing completely at random (MCAR) using Little’s MCAR test [Reference Little35].

Mediation. A mediation effect, or indirect effect, represents when a third variable operates as a pathway through which an exogenous variable affects an endogenous variable [Reference Preacher, Rucker and Hayes36]. For the present study, we estimated indirect effects separately for both acceptability and appropriateness for each category of learner (demographic and professional characteristics), as well as the total indirect effect through both constructs (acceptability plus appropriateness), and lastly total effect (direct and indirect effects) on knowledge change (Fig. 1). The aim was to investigate whether implementation outcomes differentially mediate relationships for specific learner groups and pre- to post-training knowledge change to better understand how the training program works (and/or may be modified) to improve knowledge outcomes.

Results

Baseline analyses

Demographic and professional characteristics of learners completing the training are presented in Table 1. A majority of the participants identified as female (89%), White (69%), had completed prior mandated reporter training (66%), and had worked less than 5 years as an early childcare professional (64%). Median baseline knowledge score was 14/23 (IQR 12, 16), and median post-training knowledge score was 17/23 (IQR 15, 19; Table 1). Learner responses to item-level indicators of appropriateness and acceptability are reported in Table 2. A large majority of learners reported that the program was easy to use (87%), that it kept their interest and attention (89%), that information was useful for their role as a mandated reporter (95%), that information was provided in a way that helped them to learn (94%), and that the interactive storyline was helpful for learning (94%). When asked to identify up to three components of the training that were most helpful, a slight majority identified iLookOut’s interactive scenarios (51%) or videos (55%), and a minority identified resource documents (36%), explanation of legal requirements (35%), background stories (23%), being available online at any time (25%), or how to report child maltreatment (34%) (Table 2).

Lower baseline knowledge scores were seen for learners who identified as Asian, Hispanic, Black, and other racial or ethnic minorities (compared to White learners), those whose highest level of education was high school or lower, as well as the youngest learners (18-29 years old), had<6 years’ experience as an ECP, had no prior mandated reporter training, or who worked in a religious early childhood program setting (Appendix Table 1). In general, consistent with previous research [Reference Humphreys, Piersiak and Panlilio15], individuals with a lower baseline knowledge score showed greater knowledge gains (β = −.57, 95%CI: −.59, −.56, p < .001; Appendix Table 2). Greater knowledge gains were seen with learners who rated the training more highly for acceptability (β = .08, 95%CI: .06, .11, p < .001) or appropriateness (β = .14, 95%CI: .11, .16, p < .001; Appendix Table 2).

Prior to running the full mediation models, we examined the factor structure of acceptability and appropriateness using CFA models. A single factor model was used for both implementation outcomes. See Fig. 2 for the visual depiction of item-level indicators of acceptability and appropriateness with standardized loadings. All were statistically significant, and model fit was high (CFI = .98; RMSEA = .05).

Figure 2. Confirmatory factor analysis of acceptability and appropriateness of iLookOut. Note: Figure depicts factor loadings (correlation) between the item-level indicator of either acceptability or appropriateness. All loadings are standardized; all paths are significant.

Results of Little’s test indicated that missing data mechanism did not meet MCAR assumptions: χ2 (2, 12,705) = 235.09, p < 0.001; Little, 1988). Missingness for one item-level indicator of acceptability (interactive storylines were most helpful) was associated with learner’s age and was therefore included in the final measurement model to control for possible effects of age on this item. The resulting estimates were nonsignificant, providing minimal evidence for missing at random (MAR) to proceed with the main analyses.

Results of path model by learner characteristic

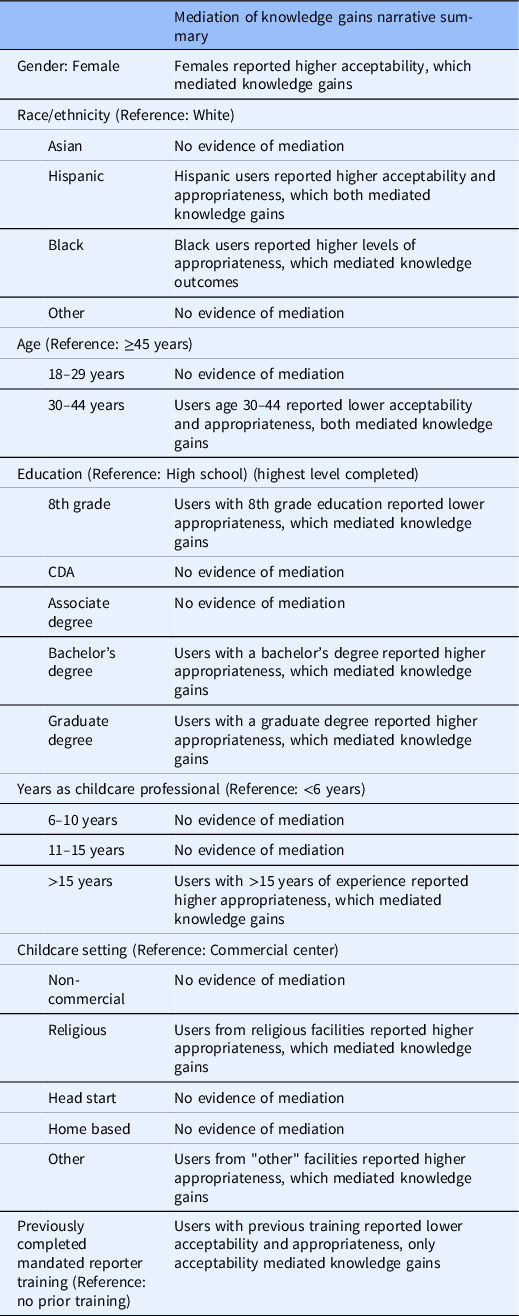

Results of the MIMIC SEM showed good data-model fit (CFI = .97; RMSEA = .03). See Figure 1 for a visual depiction of the SEM model and Table 3 for standardized path coefficients. All direct, indirect, and total effects for each path estimated are presented in Appendix Tables 2–7. Table 3 provides a summary of estimated direct effects, indirect effects via appropriateness and acceptability, and total indirect effects for learner characteristics and knowledge change (summarizing Appendix Tables 2, 5, and 6). Table 4 also summarizes these results and provides a narrative summary of mediation findings by learner characteristic. Paths to test for mediation were estimated concurrently in a single model and adjusted for learner demographic and professional characteristics, as well as baseline knowledge scores. SEM results are reported separately below for each demographic or professional characteristic investigated.

Table 3. Standardized path coefficients: direct and indirect effects

Table 4. Narrative summary by user characteristic of mediators of knowledge gains

Race/ethnicity

Adjusting for baseline knowledge and other demographic variables, results of the SEM showed that, relative to White learners, Asian (β = −.06; 95%CI: −.08, −.05, p < .001), Hispanic (β = −.08; 95%CI: −.09, −.06, p < .001), and Black (β = −.16; 95%CI: −.17, −.14, p < .001) learners had less knowledge gains following the training (Appendix Table 2). Hispanic learners reported higher acceptability (Appendix Table 3); Asian, Hispanic, and Black learners reported higher appropriateness (compared to White learners; Appendix Table 4). For most, these ratings of the program’s appropriateness and acceptability translated into mediation via implementation outcomes, indicating that their rating of the iLookOut training’s acceptability and appropriateness did impact their knowledge change. Specifically, acceptability mediated knowledge gains for Hispanic learners (indirect effect: β = .01; 95%CI: .003, .01, p = 0.001), whereas appropriateness mediated knowledge gains for both Hispanic (indirect effect: β = .01; 95%CI: .003, .01, p = .001) and Black learners (indirect effect: β = .01; 95%CI: .003, .01, p = .002; Appendix Table 5). However, for both Hispanic and Black learners, a statistically significant direct effect remained. For Asian learners, neither acceptability nor appropriateness mediated the relationship with knowledge gains, after adjusting for implementation outcomes, a significant direct effect remained (Table 3).

Gender

Gender was not associated with knowledge gains (Table 3). However, female learners reported higher acceptability of the training (β = .08; 95%CI: .05, .10, p < .001; Appendix Table 3), and the indirect effect via acceptability was statistically significant (Appendix Table 5). Although gender, per se, was not associated with knowledge gains, female learners gave (compared to males) higher acceptability ratings, which were associated with greater gains in knowledge.

Age

Younger learners had lower knowledge gains (age 18–29: β = −.07, 95%CI: −.09, −.04, p < .001; age 30–44 years: β = −.02, 95%CI: −.04, −.001, p = .036) compared to learners ≥45 years old (Appendix Table 2). Acceptability (β = −.004, 95%CI: −.01, −.001, p = .014) and appropriateness (β = −.02, 95%CI: −.02, −.01, p < .001) emerged as mediators for knowledge change only for learners age 30–44 years (Appendix Table 5), where lower acceptability and appropriateness were associated with less knowledge gains. For the youngest learners (age 18–29 years), implementation outcomes did not explain associations with less knowledge gains, compared to older learners (≥45 years; Table 3).

Education

Learners with higher levels of education had statistically significantly greater knowledge gains compared to those with a high school education (associate degree: β = .02, 95%CI: .01, .04, p = .002; bachelor’s degree: β = .13, 95%CI: .12, .15, p < .001; or graduate degree: β = .10, 95%CI: .08, .11, p < .001; Appendix Table 2). Acceptability did not mediate relationships between a learner’s education level and knowledge change (Table 3). Further, no mediation was found for learners with an associate degree, though a significant direct effect remained. Appropriateness mediated relationships between having a bachelor’s degree (β = .01, 95%CI: .01, .02, p = .001), a graduate degree (β = .01, 95%CI: .003, .02, p = .003), or an 8th grade education (β = −.01, 95%CI: −.01, −.002, p = .004) and knowledge gains (Appendix Table 5). For these groups, finding the program to be more appropriate was associated with greater knowledge gains. A statistically significant direct relationship remained for learners with a bachelor’s or graduate degree and knowledge gains, indicating that program appropriateness did not fully explain post-training knowledge gain (Table 3).

Years as practitioner

Number of years working as a childcare professional was not related to knowledge gains or acceptability (Table 3). However, those with more than 15 years of experience (compared to those with <6 years) found the training to be more appropriate (β = .05, 95%CI: .01, .08, p = .004; Appendix Table 4), and that appropriateness mediated the relationship with knowledge gains (Appendix Table 5). No mediation was found for learners with less than 15 years of childcare experience (Table 3).

Childcare setting

Compared to those working in a commercial center, learners working in a non-commercial center (β = .06, 95%CI: .04, .08, p < 0.001), a religious center (β = .08, 95%CI: .06, .09, p < .001), or other type of childcare setting (β = .07, 95%CI: .05, .08, p < .001) had greater knowledge gains (Appendix Table 2). No association was found between knowledge gains and working in either a Head Start or home-based program (Table 3). Type of childcare setting was not associated with acceptability (Table 3); however, learners who worked in a religious facility (β = .06, 95%CI: .03, .09, p < .001) or “other setting” (β = .04, 95%CI: .004, .07, p = .040) reported higher appropriateness of the training (Appendix Table 4). Appropriateness partially mediated the associations between knowledge gains and religious facility or other setting. For learners working in a non-commercial setting, there was no statistically significant indirect effect via either implementation outcome (Table 3).

Prior training

Prior mandated reporter training was associated with smaller gains in knowledge (compared to those without prior training; β = −.02, 95%CI: −.03, −.003, p = .015; Appendix Table 2). Those with prior training found the program to be less acceptable and less appropriate than those without (Table 3). Acceptability partially mediated the relationship between prior training and knowledge gain (direct effect: β = −.10, 95%CI: −.13, −.07, p < .001; indirect effect via acceptability: β = −.01, 95%CI: −.01, −.01, p < .001) such that among learners with prior mandated reporter training lower acceptability was associated with less knowledge gains (Appendix Tables 3 & 5).

Discussion

This study found that learner demographic and professional characteristics were differentially associated with knowledge change, and that program components explained these differences for most groups. Certain implementation outcomes were strongly associated with knowledge gains, notably acceptability for female learners and appropriateness for learners who had not completed high school or had >15 years of experience in a childcare setting. So too, group membership maintained a statistically significant direct relationship with knowledge change pre- to post-training (specifically, for Asian, Hispanic, and Black learners, those 30–44 years of age, with a bachelor’s or graduate degree, those working in a religious facility, and those with prior mandated reporter training). Where mediation was found, for the majority of groups, appropriateness emerged as the driving mediator.

Previous studies examining efficacy of the iLookOut Core Training have found strong evidence that iLookOut outperformed a standard mandated reporter training in a randomized controlled trial [Reference Mathews, Yang, Lehman, Mincemoyer, Verdiglione and Levi13,Reference Humphreys, Piersiak and Panlilio15] and resulted in knowledge gains in a real-world setting [Reference Yang, Panlilio and Verdiglione16]. Additionally, the real-world setting study found that learner age, race, and education were statistically significant predictors of knowledge change following the training. The present study aimed to build upon these results by examining whether (and how) knowledge change was associated with implementation outcomes. Building on Proctor’s conceptual framework [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18] for evaluating program implementation, we investigated whether implementation outcomes, specifically acceptability and appropriateness differentially mediated knowledge gains for specific groups – that is, was program acceptability or appropriateness responsible for differences in knowledge gain by learner characteristic.

Our finding that implementation outcomes are related to efficacy of a training on the sensitive topic of child maltreatment is consistent with other studies examining the relationship between acceptability and effectiveness [Reference Kim, Nickerson and Livingston37–Reference Villarreal, Ponce and Gutierrez39], as well as higher satisfaction with increases in knowledge 40. However, few studies have considered the impact of end-user demographics or professional characteristics on implementation outcomes and efficacy [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18,Reference Kilbourne, Glasgow and Chambers28].

Amidst the many associations identified, we believe the most salient findings involve the relationship between specific program components and characteristics of learners for whom the training was most (or least) effective. For example, learners who had not graduated high school demonstrated among the lowest knowledge gains despite having low baseline knowledge scores. As with prior research on the iLookOut training [Reference Humphreys, Piersiak and Panlilio15] and other interventions [Reference Villarreal, Ponce and Gutierrez39], lower baseline knowledge scores emerged as the strongest overall predictor of greater knowledge gains. This is important since it indicates that ECPs who are most in need of training benefit the most from the iLookOut training. Although, overall, learners without a high school education had lower knowledge gains compared to those with a high school education, those without a high school education did, as a group, endorse program components as appropriate at higher rates than those who graduated high school. Further, appropriateness emerged as a mediator for knowledge gains for those without a high school education, indicating that for those who did find the program appropriate, this was related to knowledge gain. Black learners had the lowest mean knowledge gain; however, there was not a clear pattern based on endorsement of item-level indicators of appropriateness, e.g., they endorsed program aspects such as reporting procedures or online availability at similar rates as other racial or ethnic groups. Although similar to learners without a high school education, appropriateness of the program emerged as a mediator for knowledge gains. More work is needed to understand what may be driving lower overall knowledge gains among Black learners. Lastly, this study did not investigate why certain program components were rated more or less appropriate by specific user groups, additional investigation is needed to understand how the training can be altered to increase knowledge gains for these groups.

Consistent with other studies of web-based learning [Reference Yu41], learners with a bachelor’s or graduate degree experienced the largest knowledge gains. These groups were also significantly more likely to endorse the value of resource documents and explanation of legal requirements, with item-level indicators of appropriateness mediating their higher knowledge gains.

Additionally important is understanding how group membership may overlap to impact training outcomes. Importantly, several user characteristics evaluated may represent causal relationships with knowledge gains (e.g., education level likely impacts a user’s ability to understand more complex content or number of years teaching may drive relevance or engagement with the iLookOut training). In the present study, race or ethnicity was included as a covariate based on previous findings that knowledge gain differed significantly by racial group.

However, race or ethnicity was not hypothesized here to be causal for knowledge outcomes; we acknowledge that differences seen are likely related to unmeasured racialized structures. Findings from this study show that Asian, Hispanic, and Black learners had statistically significantly lower knowledge gains than White learners. Implementation outcomes may help explain part of this, further, for Asian, Hispanic, and Black learners, some unmeasured learner characteristics may have also contributed to these lesser gains. For example, other studies have found that learner age [Reference Fell, Glasgow, Boles and McKay21] and education level [Reference Yu41] impact training outcomes. In the present study, we adjusted for these factors in analyses; however, there are likely important unmeasured characteristics of learners or factors associated with racialized structures that were not accounted for and may be contributing to learner discrepancies by racial/ethnic group. Further, Asian and Hispanic learners were less likely to have had previous mandated reporter training compared to White learners. We would expect not having previous training to lead to greater knowledge gains given that there is likely more to learn about child maltreatment signs and reporting procedures. Given that appropriateness partially mediated knowledge change in these groups, appropriateness of the program was important for learner outcomes, but other factors related to underrepresented racial/ethnic groups also appear to be driving knowledge change. Subsequent analyses should aim to better understand drivers of this relationship to identify how these lesser knowledge gains may be related to other structural factors, demographic, or professional characteristics. This may provide guidance on tailoring program components to increase knowledge gains for these groups e.g., to understand how multiple learner characteristics may interact to impact perceived usefulness of the program, and ultimately, knowledge change.

Strengths and limitations

Strengths of the present study include its large sample size, formal evaluation of acceptability and appropriateness and their impact on knowledge change, and use of a real-world setting. However, the study was not designed to assess various other implementation outcomes, including feasibility, adoption, fidelity, and penetration [Reference Proctor, Landsverk, Aarons, Chambers, Glisson and Mittman18]. Moreover, while our study provides information about which implementation outcomes impact knowledge change for specific groups, and which program components are most impactful, the present findings do not explain why specific components are less effective for certain groups, nor what changes are needed to increase knowledge gains for these groups. For such an understanding, in-depth qualitative work is needed. That said, the present findings reinforce the critical role that implementation outcomes have for ensuring that interventions are effective [Reference Matthews and Hudson26,Reference Sun, Tsai, Finger, Chen and Yeh27].

Conclusion

Implementation outcomes emerged as important drivers of knowledge change for multiple groups. Appropriateness emerged as a key mediator, providing support that iLookOut’s content and approach are important for knowledge change for a majority of learners. Moreover, the iLookOut Core Training’s use of a multimedia learning environment, video-based storylines, and game-based techniques [Reference Levi, Belser and Kapp22,Reference Kapp, Dore and Fiene23] were both endorsed by learners and correlated with increases in knowledge about child maltreatment and its reporting. Future work could build upon these findings by exploring first, why various aspects of the iLookOut training are rated by some groups as less acceptable or appropriate, and second, what changes would improve efficacy for low performing learners. Learner differences in acceptability and appropriateness of the iLookOut training may relate to how learners’ experiences or background impacts their engagement with the training, e.g., their age, cultural background, professional duties, or experience with technology may impact their training experience. Several studies have found that younger learners experience fewer technical difficulties with online trainings, whereas low-income learners experience greater technical difficulties [Reference Jaggars42]. Others have found that feelings of competency and a sense of affiliation with the training material are important drivers of learner outcomes [Reference Chen and Jang43]. For example, some users may identify more with vignettes presented based on the specific story or race or ethnicity of characters, impacting their engagement and learning. Users who perceive the training to be more relevant to their role as mandated reporters, possibly based on having made prior reports, or based on their professional designation (e.g., full-time versus volunteer) may be more invested in the training [Reference Baker, LeBlanc, Adebayo and Mathews44]. Future research should aim to better understand the reasons why specific user groups rated program aspects differently. Because web-based trainings are more easily tailored than many other training modalities, there are significant advantages to understanding what aspects of an online training optimize outcomes, and for whom. As such, the present findings can help inform the development of other web-based trainings with respect to content, approach, and which program components may help improve outcomes for specific learners.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cts.2023.628.

Funding statement

This work was supported by the National Institutes of Health (grant numbers 5R01HD088448-04 and P50HD089922).

Competing interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.