1. Introduction

Typhoon-associated strong winds and heavy rains frequently cause considerable crop damage, which negatively impacts farmers’ incomes and the balance of crop prices on the agricultural market. Taiwan is located in one of the most typhoon-prone regions in the world. According to the agricultural statistics of the Taiwan Council of Agriculture (COA) [1,2,3,4,5], the average annual crop damage cost was US$352,482 over the past five years (2014–2018). Additionally, the average crop loss accounts for approximately 28% of the total crop production in Taiwan, affecting 31,009 hectares on average. Accordingly, the Taiwan government established the Implementation Rules of Agricultural Natural Disaster Relief more than 30 years ago and has recently been trying to implement agricultural insurance. Ideally, farmers’ incomes can be partially compensated by emergency allowances under the relief rules. However, limitations of the current relief rules, such as a shortage of disaster relief funds, high administrative costs, and disputes over crop damage assessment, urgently call for improvement.

Among these limitations, crop damage assessment disputes and the associated high administrative costs are critical to relief implementation. The current crop damage assessment relies heavily on in-situ manual visual field observations to quantify lodging percentage and lodging severity. However, manual visual field observations are time-consuming and subjective, which frequently leads to conflicts between observers and farmers. Additionally, observers from township and county governments need to complete a required process, including a preliminary disaster assessment, a comprehensive disaster investigation, and a review sampling assessment. Generally, this process takes approximately 1 to 2 months, depending on the size of the damaged area, making the overall procedure slow and labor-intensive. Consequently, farmers’ livelihoods are heavily impacted, because they cannot resume cultivation and must keep their damaged crops in the field until the whole process is complete. Therefore, there is an urgent need for an efficient, objective, and science-based quantitative method to reduce crop damage assessment disputes and to accelerate the disaster compensation process.

Recently, thanks to the development of advanced remote sensing (RS) techniques, unmanned aerial vehicles (UAVs) have been applied to many real-world problems, such as disaster-related land cover change [6,7,8] and crop lodging assessment. Compared to traditional RS techniques [9,10,11,12,13], UAVs exhibit many advantages, such as reasonable cost, fine spatial resolution, and real-time monitoring ability. Hence, UAVs have been successfully applied to crop lodging assessment by combining multispectral data, textural features, plant trait information (such as plant height), and thermal data. Yang et al. [14] combined spectral, plant height, and textural features obtained from UAV images and proposed a mixed classification method to determine the rice lodging rate, achieving an accuracy of 96.17%. Liu et al. [15] combined UAV-acquired RGB images with thermal infrared images to identify rice lodging, reporting false positive and false negative rates of less than 10%. Wilke et al. [16] applied a UAV-based canopy height model combined with an objective threshold approach to quantify lodging percentage and severity.
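As a rough illustration of how a canopy height model can be turned into a lodging percentage, in the spirit of the threshold approach of Wilke et al. [16], the sketch below counts valid pixels whose canopy height falls below a cut-off. The fixed `height_threshold`, the NaN no-data convention, and the function name are assumptions for illustration; the sketch does not reproduce the objective threshold selection described in [16].

```python
import numpy as np


def lodging_percentage(chm: np.ndarray, height_threshold: float) -> float:
    """Share (%) of valid canopy-height pixels lower than `height_threshold`.

    chm: canopy height model in metres; NaN marks no-data pixels (assumption).
    height_threshold: hypothetical fixed cut-off; [16] derives its threshold
    objectively, which this sketch does not attempt.
    """
    heights = chm[~np.isnan(chm)]  # keep only valid pixels
    if heights.size == 0:
        raise ValueError("canopy height model contains no valid pixels")
    lodged = heights < height_threshold  # pixels treated as lodged
    return 100.0 * float(lodged.mean())


chm = np.array([[0.90, 0.30], [0.25, np.nan]])
print(lodging_percentage(chm, height_threshold=0.5))  # 2 of 3 valid pixels are lodged
```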

On the other hand, with cutting-edge computing power, machine learning and deep learning (DL) technologies have been revitalized and recently applied successfully in many agricultural applications. Zhao et al. [17] proposed a DL architecture based on UNet (U-shaped Network) to assess rice lodging using UAV imagery and reported a Dice coefficient of 0.96 using RGB images. Mardanisamani et al. [18] used a deep convolutional neural network (CNN) augmented with handcrafted texture features to predict crop lodging, achieving comparable results with a substantially smaller number of parameters. Kwak and Park [19] used two machine learning classifiers, random forest and support vector machine, to improve crop classification. By linking gray-level co-occurrence matrix (GLCM)-based texture information with time-series UAV images, the authors achieved an overall accuracy of 98.72%. Yang et al. [20] used a deep CNN to estimate rice grain yield from UAV images at the ripening stage and stated that the deep CNN model provided a steadier yield forecast than the traditional vegetation index-based method.
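GLCM texture, as used by Kwak and Park [19], counts how often pairs of quantized gray levels occur at a fixed pixel offset. The minimal NumPy sketch below builds a symmetric, normalized GLCM for one offset and derives two common texture features, contrast and homogeneity. The number of gray levels, the offset, and the function names are illustrative assumptions, not the settings used in [19].

```python
import numpy as np


def glcm(image: np.ndarray, dx: int = 1, dy: int = 0, levels: int = 8) -> np.ndarray:
    """Symmetric, normalized GLCM of a 2-D image for one non-negative offset (dy, dx)."""
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape
    if dx < 0 or dy < 0 or dy >= rows or dx >= cols:
        raise ValueError("offset must be non-negative and smaller than the image")
    lo, hi = img.min(), img.max()
    if hi == lo:
        q = np.zeros(img.shape, dtype=np.intp)  # constant image: single gray level
    else:
        # Quantize intensities into `levels` bins (0 .. levels-1)
        q = np.clip(np.floor((img - lo) / (hi - lo) * levels), 0, levels - 1).astype(np.intp)
    ref = q[: rows - dy, : cols - dx]  # reference pixels
    nbr = q[dy:, dx:]                  # neighbours at the chosen offset
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)  # accumulate pair counts
    counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Contrast and homogeneity of a normalized GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }


stripes = np.array([[0, 1, 0, 1]] * 4)  # every horizontal neighbour differs
print(glcm_features(glcm(stripes, dx=1, levels=2)))
```

Strongly alternating textures push contrast up and homogeneity down, which is the kind of signal that lets texture features separate disturbed canopy from uniform canopy.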

Besides, DL plays a vital role in the development of precision agriculture (PA), which emerged in the mid-1980s. Because PA is an information-based approach, DL, especially deep CNNs (often referred to as deep neural networks, DNNs), has advanced many image-based analyses, such as weed detection, disease detection, and species recognition. Huang et al. [21] proposed a fully convolutional network (FCN) to generate accurate weed cover maps, achieving 93.5% overall accuracy and 88.3% weed recognition accuracy. Sa et al. [22] overcame the input image size limitation of DNNs by introducing a sliding window technique for a large-scale semantic weed mapping framework. Ma et al. [23] compared the performance of SegNet, FCN, and UNet in classifying rice seedlings, background, and weeds. The authors reported that SegNet achieved 92.7% accuracy, outperforming the other two models, and was well suited for pixel-wise classification of images of tiny and irregularly shaped rice seedlings and weeds in paddy fields. Ferentinos [24] tested AlexNet, AlexNetOWTBn, GoogLeNet, Overfeat, and VGG models for detecting and diagnosing diseases of 25 different plants and reached the best performance with a 99.53% success rate. Kerkech et al. [25] used a CNN-based model combined with three vegetation indices and color information from UAV images to detect diseases in vineyards, achieving 95.8% accuracy. Fuentes-Pacheco et al. [26] used a SegNet-based CNN architecture to perform pixel-wise semantic segmentation of fig plants and achieved a mean accuracy of 93.85%. Grinblat et al. [27] successfully used deep CNNs to identify and classify species based on morphological patterns. However, to the best of our knowledge, the potential of semantic segmentation neural networks for identifying rice lodging in UAV imagery has not yet been assessed.

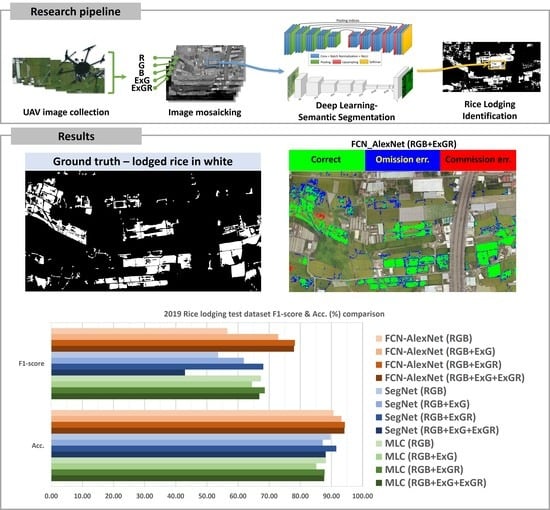

Therefore, to benefit from UAV data and deep learning technologies, this paper proposes a rice lodging assessment method that combines UAV images with deep learning techniques. Specifically, visible-spectrum information of lodged rice and vegetation indices derived from UAV images are combined to train a total of eight classification models using two semantic segmentation neural networks, SegNet and FCN-AlexNet. The performance of these eight models is evaluated in terms of image classification accuracy and the associated computation time. The specific objectives of this paper are as follows:

Rice lodging spectrum information is obtained from UAV images collected over a study area (about 40 hectares) in Taiwan to reduce the workload of in-situ manual visual field observations.

UAV images are used to perform rice lodging classification with semantic segmentation neural network models, aiming to improve the accuracy of lodging assessment and to serve as evidence for subsequent disaster subsidies.

Multiple types of information, including visible-spectrum and vegetation index information, are incorporated into the proposed rice lodging assessment method to improve image classification accuracy.

Two standard image semantic segmentation network models are tested, and their applicability is evaluated based on computational speed and classification accuracy.

A rice lodging image dataset is established to serve as a valuable resource for expert systems, disaster relief assistance, and agricultural insurance applications.
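To illustrate how visible-spectrum bands and vegetation indices can be combined into a single network input, the sketch below appends two common visible-band indices to 8-bit RGB channels: excess green, ExG = 2g - r - b, and excess green minus excess red, ExGR = ExG - ExR with ExR = 1.4r - g, where r, g, b are chromatic coordinates (each band divided by R + G + B). The choice of these two indices, the 0 to 1 scaling of the RGB bands, and the function name are assumptions; this section does not state which indices the proposed method uses.

```python
import numpy as np


def stack_rgb_with_indices(rgb: np.ndarray) -> np.ndarray:
    """Return an (H, W, 5) array: R, G, B scaled to [0, 1] plus ExG and ExGR.

    Assumes an 8-bit (H, W, 3) RGB image; the two indices are illustrative choices.
    """
    x = rgb.astype(np.float64)
    total = x.sum(axis=-1, keepdims=True)
    # Chromatic coordinates r, g, b = R/(R+G+B), ...; black pixels map to 0
    chrom = np.divide(x, total, out=np.zeros_like(x), where=total > 0)
    r, g, b = chrom[..., 0], chrom[..., 1], chrom[..., 2]
    exg = 2.0 * g - r - b  # excess green
    exr = 1.4 * r - g      # excess red
    exgr = exg - exr       # excess green minus excess red
    return np.concatenate([x / 255.0, exg[..., None], exgr[..., None]], axis=-1)


pixels = np.array([[[0, 255, 0], [0, 0, 0]]], dtype=np.uint8)  # pure green, black
features = stack_rgb_with_indices(pixels)
print(features.shape)
```

Stacking the indices as extra channels keeps the network architecture unchanged apart from the number of input channels in its first layer.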

4. Conclusions

To date, rice lodging assessment still relies heavily on subjective manual evaluation, which is time-consuming, labor-intensive, and problematic in terms of efficiency and objectivity. The proposed rice lodging identification method aims to provide an effective and efficient scientific reference for assessing rice lodging. In particular, two deep learning-based semantic segmentation networks, FCN-AlexNet and SegNet, are implemented with vegetation indices for rice lodging identification in multi-date UAV visible images. On the testing dataset, FCN-AlexNet outperforms SegNet and maximum likelihood classification (MLC), reaching the highest F1-score of 83.56% and an accuracy of 94.43%. The higher F1-score indicates that FCN-AlexNet achieves a better balance between precision and recall. The additional vegetation index information improves the performance of both networks in terms of F1-score and accuracy. Moreover, implementing FCN-AlexNet and SegNet reduces the computation time by a factor of 10 to 15 compared with the traditional MLC. Furthermore, both networks work well on the 230-ha image, showing great potential for broader-area applications of rice lodging identification.
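The F1-score and accuracy reported above follow from pixel-wise counts of true and false positives and negatives. The sketch below computes them for binary lodging masks (lodged = True); the function name and the convention of returning 0 when a denominator is zero are assumptions. For binary masks the F1-score equals the Dice coefficient, 2TP / (2TP + FP + FN).

```python
import numpy as np


def segmentation_scores(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Pixel-wise precision, recall, F1-score and overall accuracy for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # lodged pixels correctly detected
    fp = int(np.sum(pred & ~truth))   # non-lodged pixels labelled lodged
    fn = int(np.sum(~pred & truth))   # lodged pixels missed
    tn = int(np.sum(~pred & ~truth))  # non-lodged pixels correctly rejected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": (tp + tn) / pred.size,
    }


truth = np.array([1, 1, 0, 1, 0, 0, 0, 0])
pred = np.array([1, 1, 1, 0, 0, 0, 0, 0])
print(segmentation_scores(pred, truth))
```

Because lodged pixels are usually a minority of a field, overall accuracy can remain high even when many lodged pixels are missed, whereas the F1-score depends only on how well the lodged class is detected; reporting both gives a fuller picture.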

The proposed method could be further improved by providing more training data through data augmentation or by employing alternative network architectures, such as ENet or FC-DenseNet [36,37]. Meanwhile, to survey agricultural disasters over broad areas (up to a hundred thousand hectares) in a time-efficient, spatially efficient, and economical manner, parallel computation should be employed for deep learning model execution in the future. In addition, edge computing with hierarchical image processing on UAV-mounted microcomputers could be applied to the deep learning model to enable real-time agricultural disaster surveys.
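Tile-level parallelism is one straightforward way to realize the parallel computation suggested above. The sketch below splits an image into non-overlapping tiles and labels them concurrently with Python's `concurrent.futures.ThreadPoolExecutor`. `predict_labels` is a hypothetical stand-in for a trained model that must also accept the smaller tiles at the image border, and the tile size and worker count are arbitrary assumptions. Threads only speed things up when inference releases the GIL, as many NumPy operations do; a process pool or several GPUs would be alternatives for other workloads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Tuple

import numpy as np


def segment_tiles_parallel(
    image: np.ndarray,
    predict_labels: Callable[[np.ndarray], np.ndarray],
    tile: int = 512,
    workers: int = 4,
) -> np.ndarray:
    """Label an (H, W, C) image tile by tile, running tiles concurrently.

    `predict_labels` is a hypothetical model wrapper returning an (h, w) label
    array for an (h, w, C) tile; border tiles may be smaller than `tile`.
    """
    h, w = image.shape[:2]
    labels = np.zeros((h, w), dtype=np.int64)
    origins = [(y, x) for y in range(0, h, tile) for x in range(0, w, tile)]

    def run(origin: Tuple[int, int]):
        y, x = origin
        return origin, predict_labels(image[y : y + tile, x : x + tile])

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Tiles do not overlap, so each result is written to its own region
        for (y, x), tile_labels in pool.map(run, origins):
            labels[y : y + tile_labels.shape[0], x : x + tile_labels.shape[1]] = tile_labels
    return labels


rng = np.random.default_rng(1)
ortho = rng.random((1100, 700, 3))
result = segment_tiles_parallel(ortho, lambda t: (t[..., 0] > 0.5).astype(np.int64))
print(result.shape)
```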