German Language Adaptation of the NAVS (NAVS-G) and of the NAT (NAT-G): Testing Grammar in Aphasia

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Participants

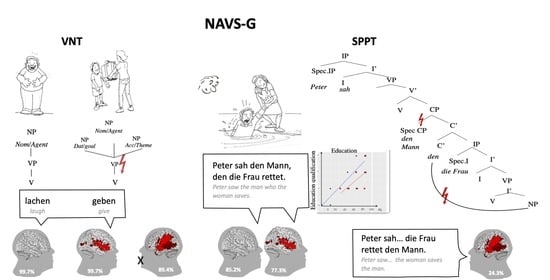

2.3. Adaptation of NAVS and NAT to the German Language

2.4. Scoring

2.5. Data Analysis

3. Results

3.1. The NAVS-G VNT

3.1.1. Participants without Aphasia: HP and RHSP Groups

3.1.2. Participants with Aphasia: LHSP Group

3.1.3. Difference between the Groups

3.2. The NAVS-G VCT

3.3. The NAVS-G ASPT

3.3.1. Participants without Aphasia (HP and RHSP)

3.3.2. Participants with Aphasia (LHSP)

3.3.3. Difference between the Groups

3.4. The NAVS-G SPPT

3.4.1. Participants without Aphasia (HP and RHSP Groups)

3.4.2. Participants with Aphasia (LHSP)

3.4.3. Difference between the Groups

3.5. The NAVS-G SCT

3.5.1. Participants without Aphasia (HP and RHSP Groups)

3.5.2. Participants with Aphasia (LHSP Group)

3.5.3. Difference between the Groups

3.6. The NAT-G

3.6.1. Participants without Aphasia (HP and RHSP Groups)

3.6.2. Participants with Aphasia (LHSP Group)

3.6.3. Difference between the Groups

3.7. NAVS-G and NAT-G Error Analysis in Participants with Aphasia (LHSP Group)

3.8. Correlation and Comparison across the Subsets

4. Discussion

4.1. The Adaptation to the German Language

4.1.1. NAVS-G for Testing Syntactic Competence at the Verb Level

4.1.2. NAVS-G and NAT-G for Testing Syntactic Competence at the Sentence Level

4.1.3. Sensitivity in Detecting Aphasia

4.2. The Importance of Controlling for Covariates

4.2.1. The Covariate “Age”

4.2.2. The Covariate “Education”

4.2.3. The Role of the Right Hemisphere

4.2.4. The Covariates “Clinical Scores”

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


- Bybee, J.L.; Hopper, P.J. Frequency and the Emergence of Linguistic Structure; John Benjamins Publishing: Amsterdam, The Netherlands, 2001; Volume 45. [Google Scholar]

- Ellis, N.C. Frequency effects in language processing: A review with implications for theories of implicit and explicit language acquisition. Stud. Second Lang. Acquis. 2002, 24, 143–188. [Google Scholar] [CrossRef]

- MacWhinney, B. Emergentist approaches to language. Typol. Stud. Lang. 2001, 45, 449–470. [Google Scholar]

- Liu, D. The Most Frequently Used English Phrasal Verbs in American and British English: A Multicorpus Examination. TESOL Q. 2011, 45, 661–688. [Google Scholar] [CrossRef]

- Bird, H.; Ralph, M.A.L.; Patterson, K.; Hodges, J.R. The rise and fall of frequency and imageability: Noun and verb production in semantic dementia. Brain Lang. 2000, 73, 17–49. [Google Scholar] [CrossRef] [Green Version]

- Crepaldi, D.; Aggujaro, S.; Arduino, L.S.; Zonca, G.; Ghirardi, G.; Inzaghi, M.G.; Colombo, M.; Chierchia, G.; Luzzatti, C. Noun–verb dissociation in aphasia: The role of imageability and functional locus of the lesion. Neuropsychologia 2006, 44, 73–89. [Google Scholar] [CrossRef] [PubMed]

- Bastiaanse, R.; Wieling, M.; Wolthuis, N. The role of frequency in the retrieval of nouns and verbs in aphasia. Aphasiology 2015, 30, 1221–1239. [Google Scholar] [CrossRef] [Green Version]

- Thompson, C.K.; Lange, K.L.; Schneider, S.L.; Shapiro, L.P. Agrammatic and non-brain-damaged subjects’ verb and verb argument structure production. Aphasiology 1997, 11, 473–490. [Google Scholar] [CrossRef]

- Chierchia, G. The Variability of Impersonal Subjects. In Quantification in Natural Languages; Springer: Berlin/Heidelberg, Germany, 1995; pp. 107–143. [Google Scholar]

- Reinhart, T. The Theta System—An overview. Theor. Linguist. 2003, 28, 229–290. [Google Scholar] [CrossRef]

- Shetreet, E.; Palti, D.; Friedmann, N.; Hadar, U. Cortical Representation of Verb Processing in Sentence Comprehension: Number of Complements, Subcategorization, and Thematic Frames. Cereb. Cortex 2006, 17, 1958–1969. [Google Scholar] [CrossRef] [Green Version]

- Jaecks, P. Restaphasie; Georg Thieme Verlag: Stuttgat, Germany, 2014. [Google Scholar]

- Fedorenko, E.; Piantadosi, S.; Gibson, E. Processing Relative Clauses in Supportive Contexts. Cogn. Sci. 2012, 36, 471–497. [Google Scholar] [CrossRef] [PubMed]

- Gibson, E.; Desmet, T.; Grodner, D.; Watson, D.; Ko, K. Reading relative clauses in English. Cogn. Linguistics 2005, 16, 313–353. [Google Scholar] [CrossRef]

- King, J.; Just, M.A. Individual differences in syntactic processing: The role of working memory. J. Mem. Lang. 1991, 30, 580–602. [Google Scholar] [CrossRef]

- King, J.W.; Kutas, M. Who Did What and When? Using Word- and Clause-Level ERPs to Monitor Working Memory Usage in Reading. J. Cogn. Neurosci. 1995, 7, 376–395. [Google Scholar] [CrossRef] [Green Version]

- Traxler, M.J.; Morris, R.K.; Seely, R.E. Processing Subject and Object Relative Clauses: Evidence from Eye Movements. J. Mem. Lang. 2002, 47, 69–90. [Google Scholar] [CrossRef]

- Mecklinger, A.; Schriefers, H.; Steinhauer, K.; Friederici, A.D. Processing relative clauses varying on syntactic and semantic dimensions: An analysis with event-related potentials. Mem. Cogn. 1995, 23, 477–494. [Google Scholar] [CrossRef]

- Betancort, M.; Carreiras, M.; Sturt, P. Short article: The processing of subject and object relative clauses in Spanish: An eye-tracking study. Q. J. Exp. Psychol. 2009, 62, 1915–1929. [Google Scholar] [CrossRef]

- Friederici, A.D.; Hahne, A.; Saddy, D. Distinct Neurophysiological Patterns Reflecting Aspects of Syntactic Complexity and Syntactic Repair. J. Psycholinguist. Res. 2002, 31, 45–63. [Google Scholar] [CrossRef]

- Osterhout, L.; Holcomb, P.J. Event-related brain potentials elicited by syntactic anomaly. J. Mem. Lang. 1992, 31, 785–806. [Google Scholar] [CrossRef]

- Caplan, D.; Hanna, J.E. Sentence Production by Aphasic Patients in a Constrained Task. Brain Lang. 1998, 63, 184–218. [Google Scholar] [CrossRef]

- Nespoulous, J.-L.; Dordain, M.; Perron, C.; Ska, B.; Bub, D.; Caplan, D.; Mehler, J.; Lecours, A.R. Agrammatism in sentence production without comprehension deficits: Reduced availability of syntactic structures and/or of grammatical morphemes? A case study. Brain Lang. 1988, 33, 273–295. [Google Scholar] [CrossRef]

- Friedmann, N.; Grodzinsky, Y. Tense and Agreement in Agrammatic Production: Pruning the Syntactic Tree. Brain Lang. 1997, 56, 397–425. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Schwartz, M.F.; Saffran, E.M.; Fink, R.B.; Myers, J.L.; Martin, N. Mapping Therapy—A Treatment Program for Agrammatism. Aphasiology 1994, 8, 19–54. [Google Scholar] [CrossRef]

- Cho, S.; Thompson, C.K. What goes wrong during passive sentence production in agrammatic aphasia: An eyetracking study. Aphasiology 2010, 24, 1576–1592. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Driva, E.; Terzi., A. Children’s passives and the theory of grammar. In Language Acquisition and Development: Proceedings of GALA; Cambridge Scholar Publishing: Newcastle upon Tyne, UK, 2007; pp. 188–198. [Google Scholar]

- Lee, M.; Thompson, C.K. Agrammatic aphasic production and comprehension of unaccusative verbs in sentence contexts. J. Neurolinguistics 2004, 17, 315–330. [Google Scholar] [CrossRef] [Green Version]

- Thompson, C.K.; Lee, M. Psych verb production and comprehension in agrammatic Broca’s aphasia. J. Neurolinguistics 2009, 22, 354–369. [Google Scholar] [CrossRef] [Green Version]

- D’Ortenzio, S. Analysis and Treatment of Movement-Derived Structures in Italian-Speaking Cochlear Implanted Children. Ph.D. Thesis, Università Ca’Foscari Venezia, Venice, Italy, 2019. [Google Scholar]

- Jackendoff, R. What is the human language faculty?: Two views. Language 2011, 87, 586–624. [Google Scholar] [CrossRef]

- Dennis, M.; Kohn, B. Comprehension of syntax in infantile hemiplegics after cerebral hemidecortication: Left-hemisphere superiority. Brain Lang. 1975, 2, 472–482. [Google Scholar] [CrossRef]

- Papoutsi, M.; Stamatakis, E.A.; Griffiths, J.; Marslen-Wilson, W.D.; Tyler, L.K. Is left fronto-temporal connectivity essential for syntax? Effective connectivity, tractography and performance in left-hemisphere damaged patients. NeuroImage 2011, 58, 656–664. [Google Scholar] [CrossRef]

- Ivanova, M.V.; Hallowell, B. A tutorial on aphasia test development in any language: Key substantive and psychometric considerations. Aphasiology 2013, 27, 891–920. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Friedmann, N.; Aram, D.; Novogrodsky, R. Definitions as a window to the acquisition of relative clauses. Appl. Psycholinguist. 2011, 32, 687–710. [Google Scholar] [CrossRef] [Green Version]

- Brauer, J.; Anwander, A.; Perani, D.; Friederici, A.D. Dorsal and ventral pathways in language development. Brain Lang. 2013, 127, 289–295. [Google Scholar] [CrossRef] [Green Version]

- Dubois, J.; Dehaene-Lambertz, G.; Perrin, M.; Mangin, J.-F.; Cointepas, Y.; Duchesnay, E.; Le Bihan, D.; Hertz-Pannier, L. Asynchrony of the early maturation of white matter bundles in healthy infants: Quantitative landmarks revealed noninvasively by diffusion tensor imaging. Hum. Brain Mapp. 2007, 29, 14–27. [Google Scholar] [CrossRef]

- Lebel, C.; Walker, L.; Leemans, A.; Phillips, L.; Beaulieu, C. Microstructural maturation of the human brain from childhood to adulthood. NeuroImage 2008, 40, 1044–1055. [Google Scholar] [CrossRef]

- Perani, D.; Saccuman, M.C.; Scifo, P.; Anwander, A.; Spada, D.; Baldoli, C.; Poloniato, A.; Lohmann, G.; Friederici, A.D. Neural language networks at birth. Proc. Natl. Acad. Sci. USA 2011, 108, 16056–16061. [Google Scholar] [CrossRef] [Green Version]

- Zhang, J.; Evans, A.; Hermoye, L.; Lee, S.H.; Wakana, S.; Zhang, W.; Donohue, P.; Miller, M.I.; Huang, H.; Wang, X. Evidence of slow maturation of the superior longitudinal fasciculus in early childhood by diffusion tensor imaging. NeuroImage 2007, 38, 239–247. [Google Scholar] [CrossRef] [Green Version]

- Levy, R. Aging-associated cognitive decline. Working Party of the International Psychogeriatric Association in collaboration with the World Health Organization. Int. Psychogeriatrics 1994, 6, 63–68. [Google Scholar]

- Salat, D.H.; Kaye, J.A.; Janowsky, J.S. Greater orbital prefrontal volume selectively predicts worse working memory performance in older adults. Cereb. Cortex 2002, 12, 494–505. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hardy, S.M.; Segaert, K.; Wheeldon, L. Healthy Aging and Sentence Production: Disrupted Lexical Access in the Context of Intact Syntactic Planning. Front. Psychol. 2020, 11, 257. [Google Scholar] [CrossRef]

- Campbell, K.L.; Samu, D.; Davis, S.W.; Geerligs, L.; Mustafa, A.; Tyler, L.K.; Neuroscience, F.C.C.F.A.A. Robust Resilience of the Frontotemporal Syntax System to Aging. J. Neurosci. 2016, 36, 5214–5227. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tyler, L.K.; Wright, P.; Randall, B.; Marslen-Wilson, W.D.; Stamatakis, E.A. Reorganization of syntactic processing following left-hemisphere brain damage: Does right-hemisphere activity preserve function? Brain 2010, 133, 3396–3408. [Google Scholar] [CrossRef]

- Shetreet, E.; Palti, D.; Friedmann, N.; Hadar, U. Argument Structure Representation: Evidence from fMRI. In Proceedings of the Verb 2010: Interdisciplinary Workshop on Verbs, The Identification and Representation of Verb Features, Pisa, Italy, 4–5 November 2010. [Google Scholar]

- Peelle, J.E.; Cusack, R.; Henson, R.N. Adjusting for global effects in voxel-based morphometry: Gray matter decline in normal aging. NeuroImage 2012, 60, 1503–1516. [Google Scholar] [CrossRef] [Green Version]

- Sullivan, E.V.; Marsh, L.; Mathalon, D.H.; Lim, K.O.; Pfefferbaum, A. Age-related decline in MRI volumes of temporal lobe gray matter but not hippocampus. Neurobiol. Aging 1995, 16, 591–606. [Google Scholar] [CrossRef]

- Geerligs, L.; Maurits, N.M.; Renken, R.J.; Lorist, M.M. Reduced specificity of functional connectivity in the aging brain during task performance. Hum. Brain Mapp. 2014, 35, 319–330. [Google Scholar] [CrossRef]

- Lustig, C.; Snyder, A.Z.; Bhakta, M.; O’Brien, K.C.; McAvoy, M.; Raichle, M.E.; Morris, J.C.; Buckner, R.L. Functional deactivations: Change with age and dementia of the Alzheimer type. Proc. Natl. Acad. Sci. USA 2003, 100, 14504–14509. [Google Scholar] [CrossRef] [Green Version]

- Cohen, L.; Mehler, J. Click monitoring revisited: An on-line study of sentence comprehension. Mem. Cogn. 1996, 24, 94–102. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Beland, R.; Lecours, A.R. The Mt-86 Beta-Aphasia Battery—A Subset of Normative Data in Relation to Age and Level of School Education. Aphasiology 1990, 4, 439–462. [Google Scholar] [CrossRef]

- Snitz, B.E.; Unverzagt, F.W.; Chang, C.-C.H.; Bilt, J.V.; Gao, S.; Saxton, J.; Hall, K.S.; Ganguli, M. Effects of age, gender, education and race on two tests of language ability in community-based older adults. Int. Psychogeriatrics 2009, 21, 1051–1062. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Troyer, A.K. Normative Data for Clustering and Switching on Verbal Fluency Tasks. J. Clin. Exp. Neuropsychol. 2000, 22, 370–378. [Google Scholar] [CrossRef]

- Gathercole, S.E.; Pickering, S.J.; Knight, C.; Stegmann, Z. Working memory skills and educational attainment: Evidence from national curriculum assessments at 7 and 14 years of age. Appl. Cogn. Psychol. 2003, 18, 1–16. [Google Scholar] [CrossRef]

- Chipere, N. Variations in Native Speaker Competence: Implications for First-language Teaching. Lang. Aware. 2001, 10, 107–124. [Google Scholar] [CrossRef]

- Diessel, H. The Acquisition of Complex Sentences; Cambridge University Press: Cambridge, UK, 2004; Volume 105. [Google Scholar]

- Givón, T. The Ontogeny of Relative Clauses: How Children Learn to Negotiate Complex Reference; Typescript; University of Oregon: Eugene, OR, USA, 2008. [Google Scholar]

- Christiansen, M.H.; Macdonald, M.C. A Usage-Based Approach to Recursion in Sentence Processing. Lang. Learn. 2009, 59, 126–161. [Google Scholar] [CrossRef]

- Reali, F. Frequency Affects Object Relative Clause Processing: Some Evidence in Favor of Usage-Based Accounts. Lang. Learn. 2014, 64, 685–714. [Google Scholar] [CrossRef]

- Reali, F.; Christiansen, M.H. Uncovering the Richness of the Stimulus: Structure Dependence and Indirect Statistical Evidence. Cogn. Sci. 2005, 29, 1007–1028. [Google Scholar] [CrossRef] [Green Version]

- Reali, F.; Christiansen, M.H. Processing of relative clauses is made easier by frequency of occurrence. J. Mem. Lang. 2007, 57, 1–23. [Google Scholar] [CrossRef]

- Reali, F.; Christiansen, M.H. Word chunk frequencies affect the processing of pronominal object-relative clauses. Q. J. Exp. Psychol. 2007, 60, 161–170. [Google Scholar] [CrossRef]

- Dabrowska, E. Learning a morphological system without a default: The Polish genitive. J. Child Lang. 2001, 28, 545–574. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dąbrowska, E. The effects of frequency and neighbourhood density on adult speakers’ productivity with Polish case inflections: An empirical test of usage-based approaches to morphology. J. Mem. Lang. 2008, 58, 931–951. [Google Scholar] [CrossRef]

- Dąbrowska, E.; Street, J. Individual differences in language attainment: Comprehension of passive sentences by native and non-native English speakers. Lang. Sci. 2006, 28, 604–615. [Google Scholar] [CrossRef]

- Street, J.A.; Dąbrowska, E. More individual differences in language attainment: How much do adult native speakers of English know about passives and quantifiers? Lingua 2010, 120, 2080–2094. [Google Scholar] [CrossRef] [Green Version]

- Blumenthal-Dramé, A.; Glauche, V.; Bormann, T.; Weiller, C.; Musso, M.; Kortmann, B. Frequency and Chunking in Derived Words: A Parametric fMRI Study. J. Cogn. Neurosci. 2017, 29, 1162–1177. [Google Scholar] [CrossRef]

- Caplan, D.; Waters, G.; DeDe, G.; Michaud, J.; Reddy, A. A study of syntactic processing in aphasia I: Behavioral (psycholinguistic) aspects. Brain Lang. 2007, 101, 103–150. [Google Scholar] [CrossRef]

- Friederici, A.D.; Kotz, S.A. The brain basis of syntactic processes: Functional imaging and lesion studies. NeuroImage 2003, 20, S8–S17. [Google Scholar] [CrossRef] [Green Version]

- Forster, R.; Corrêa, L.M.S. On the asymmetry between subject and object relative clauses in discourse context. Revista de Estudos da Linguagem 2017, 25, 1225. [Google Scholar] [CrossRef] [Green Version]

- Benghanem, S.; Rosso, C.; Arbizu, C.; Moulton, E.; Dormont, D.; Leger, A.; Pires, C.; Samson, Y. Aphasia outcome: The interactions between initial severity, lesion size and location. J. Neurol. 2019, 266, 1303–1309. [Google Scholar] [CrossRef] [PubMed]

- Boehme, A.K.; Martin-Schild, S.; Marshall, R.S.; Lazar, R.M. Effect of aphasia on acute stroke outcomes. Neurology 2016, 87, 2348–2354. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Maas, M.B.; Lev, M.H.; Ay, H.; Singhal, A.B.; Greer, D.M.; Smith, W.S.; Harris, G.J.; Halpern, E.F.; Koroshetz, W.J.; Furie, K.L. The Prognosis for Aphasia in Stroke. J. Stroke Cerebrovasc. Dis. 2012, 21, 350–357. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Alcock, K.J.; Passingham, R.E.; Watkins, K.; Vargha-Khadem, F. Pitch and Timing Abilities in Inherited Speech and Language Impairment. Brain Lang. 2000, 75, 34–46. [Google Scholar] [CrossRef] [Green Version]

- Alcock, K.J.; Passingham, R.E.; Watkins, K.E.; Vargha-Khadem, F. Oral Dyspraxia in Inherited Speech and Language Impairment and Acquired Dysphasia. Brain Lang. 2000, 75, 17–33. [Google Scholar] [CrossRef]

- Vargha-Khadem, F.; Watkins, K.; Alcock, K.; Fletcher, P.; Passingham, R. Praxic and nonverbal cognitive deficits in a large family with a genetically transmitted speech and language disorder. Proc. Natl. Acad. Sci. USA 1995, 92, 930–933. [Google Scholar] [CrossRef] [Green Version]

| Patient | Gender | Age | Ed.q. | Ed.g. | TPO (months) | Stroke’s Severity | | | Language (AAT Criteria) | | | | | | | Aphasia | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | | | | NIHSS | mRS | BI | S1 | S2 | S3 | S4 | S5 | S6 | TT | Severity | Type |
| LHSP | | | | | | | | | | | | | | | | | |
| 1 | m | 71 | 2 | 2 | 9 | 5 | 3 | 80 | 4 | 5 | 5 | 3 | 5 | 3 | 44 | Mild | Broca |
| 2 | w | 69 | 3 | 2 | 6 | 1 | 1 | 100 | 4 | 5 | 5 | 4 | 3 | 4 | 48 | Mild | Anomic |
| 3 | w | 54 | 4 | 3 | 6 | 4 | 2 | 90 | 4 | 4 | 5 | 4 | 5 | 4 | 40 | Mild | Anomic |
| 4 | w | 71 | 3 | 2 | 5 | 0 | 1 | 100 | 4 | 5 | 5 | 4 | 5 | 5 | 50 | Minimal | Residual |
| 5 | m | 58 | 4 | 2 | 5 | 0 | 1 | 100 | 5 | 5 | 5 | 4 | 5 | 5 | 50 | Minimal | Residual |
| 6 | m | 48 | 2 | 2 | 6 | 0 | 1 | 100 | 5 | 5 | 5 | 5 | 4 | 5 | 50 | Minimal | Residual |
| 7 | m | 66 | 2 | 2 | 5 | 0 | 1 | 100 | 5 | 5 | 5 | 4 | 5 | 5 | * | Minimal | Residual |
| 8 | m | 50 | 4 | 3 | 50 | 2 | 2 | 100 | 5 | 4 | 5 | 4 | 4 | 5 | 45 | Minimal | Residual |
| 9 | w | 59 | 4 | 2 | 310 | 4 | 2 | 100 | 3 | 3 | 5 | 4 | 4 | 2 | 49 | Minimal | Residual |
| 10 | m | 58 | 3 | 2 | 26 | 2 | 1 | 100 | 2 | 3 | 5 | 3 | 4 | 2 | 27 | Mild | Broca |
| 11 | w | 63 | 3 | 2 | 109 | 7 | 3 | 60 | 1 | 3 | 4 | 4 | 3 | 1 | 47 | Middle | Broca |
| 12 | m | 58 | 3 | 2 | 34 | 2 | 1 | 100 | 5 | 5 | 5 | 3 | 5 | 5 | 46 | Mild | Broca |
| 13 | w | 77 | 2 | 2 | 0 | 1 | 1 | 100 | 3 | 3 | 5 | 3 | 5 | 2 | * | Minimal | Residual |
| 14 | m | 57 | 4 | 2 | 3 | 1 | 1 | 100 | 5 | 5 | 5 | 4 | 5 | 5 | * | Minimal | Residual |
| 15 | m | 70 | 4 | 3 | 6 | 0 | 1 | 100 | 5 | 5 | 5 | 4 | 5 | 5 | 45 | Minimal | Residual |
| Mean (SD) | | 61.35 (8.18) | 3.07 (0.81) | 2.14 (0.40) | 38.66 (77.54) | 1.9 (2.08) | 1.4 (0.72) | 95.33 (10.87) | 3.9 (1.21) | 4.2 (0.87) | 4.9 (0.25) | 3.8 (0.54) | 4.4 (0.71) | 3.7 (1.41) | 45 (6.43) | | |
| RHSP | | | | | | | | | | | | | | | | | |
| 1 | m | 79 | 3 | 2 | 0 | 1 | 1 | 85 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 2 | m | 69 | 2 | 2 | 0 | 2 | 2 | 80 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 3 | m | 74 | 2 | 2 | 0 | 0 | 0 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 4 | w | 80 | 2 | 2 | 0 | 2 | 1 | 60 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 5 | m | 56 | 3 | 2 | 6 | 0 | 1 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 6 | m | 77 | 2 | 2 | 19 | 1 | 1 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 7 | m | 66 | 2 | 2 | 7 | 0 | 0 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 8 | w | 58 | 4 | 3 | 5 | 0 | 0 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 9 | w | 31 | 3 | 2 | 6 | 0 | 0 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 10 | m | 50 | 4 | 3 | 6 | 0 | 1 | 95 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 11 | w | 81 | 2 | 1 | 5 | 8 | 4 | 25 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 12 | w | 68 | 2 | 2 | 0 | 0 | 0 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 13 | w | 80 | 2 | 1 | 0 | 2 | 1 | 80 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 14 | w | 66 | 2 | 2 | 0 | 0 | 1 | 90 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| 15 | w | 88 | 2 | 1 | 7 | 1 | 2 | 100 | 5 | 5 | 5 | 5 | 5 | 5 | | | |
| Mean (SD) | | 68.2 (14.24) | 2.47 (0.72) | 1.93 (0.57) | 4.07 (4.96) | 1 (2.0) | 1 (1.3) | 87.67 (20.15) | 5 (0.0) | 5 (0.0) | 5 (0.0) | 5 (0.0) | 5 (0.0) | 5 (0.0) | | | |

| Subtest | Number of Test Items | Description | Stimuli |
|---|---|---|---|
| VNT | 22 + 2 examples | Verb naming (an action shown on a drawing) | 5 obligatory one-argument verbs; 5 obligatory two-argument verbs; 5 optional two-argument verbs; 2 obligatory three-argument verbs; 5 optional three-argument verbs |
| VCT | 22 + 2 examples | Verb comprehension (choosing the picture named by the examiner out of 4) | Same as VNT |
| ASPT | 32 + 2 examples | Active sentence production based on verbs with different argument structures (all words needed for the sentences are given) | Every verb appearing in VNT and VCT is tested in all its argument structures |
| NAT | 30 + 2 examples | Nonverbal production of sentences with different syntactic complexity by arranging printed word cards | 5 active sentences; 5 passive sentences; 5 subject-extracted Wh-questions; 5 object-extracted Wh-questions; 5 subject relative sentences; 5 object relative sentences |
| SPPT | 30 + 3 examples | Production of sentences with different syntactic complexity (primed by the sentence structure given by the examiner) | Same as NAT |
| SCT | 30 + 3 examples | Comprehension of sentences with different syntactic complexity (choosing the correct picture out of two) | Same as NAT |

| HP: Healthy Participants without Aphasia | | | | | | | RHSP: Participants without Aphasia | | | | | | | LHSP: Participants with Aphasia | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | VNT | | VCT | | ASPT | | | VNT | | VCT | | ASPT | | | VNT | | VCT | | ASPT | |
| | M | SD | M | SD | M | SD | | M | SD | M | SD | M | SD | | M | SD | M | SD | M | SD |
| ob1 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | ob1 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | ob1 | 98.60 | 5.16 | 100 | 0.00 | 99.33 | 2.58 |
| op2 | 99.26 | 3.85 | 99.23 | 3.85 | 100 | 0.00 | op2 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | op2 | 82.70 | 7.04 | 100 | 0.00 | 97.48 | 6.97 |
| ob2 | 99.26 | 3.85 | 100 | 0.00 | 100 | 0.00 | ob2 | 98.67 | 5.16 | 100 | 0.00 | 100 | 0.00 | ob2 | 97.30 | 14.86 | 100 | 0.00 | 97.07 | 8.07 |
| op3 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | op3 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | op3 | 89.30 | 14.86 | 98.67 | 5.16 | 95.76 | 10.68 |
| ob3 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | ob3 | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | ob3 | 63.30 | 35.19 | 100 | 0.00 | 90.00 | 19.21 |
| mean | 99.67 | 1.21 | 99.83 | 0.87 | 100 | 0.00 | mean | 99.67 | 1.17 | 100 | 0.00 | 100 | 0.00 | mean | 89.40 | 8.18 | 99.70 | 1.17 | 96.48 | 8.32 |
| | NAT | | SPPT | | SCT | | | NAT | | SPPT | | SCT | | | NAT | | SPPT | | SCT | |
| | M | SD | M | SD | M | SD | | M | SD | M | SD | M | SD | | M | SD | M | SD | M | SD |
| Active | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | Active | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | Active | 96.92 | 7.51 | 97.33 | 10.33 | 98.67 | 5.16 |
| Passive | 100 | 0.00 | 99.26 | 3.84 | 99.26 | 3.84 | Passive | 100 | 0.00 | 100 | 0.00 | 100 | 0.00 | Passive | 75.38 | 39.29 | 65.33 | 41.03 | 88.00 | 22.42 |
| SW | 100 | 0.00 | 99.26 | 3.84 | 99.26 | 3.84 | SW | 100 | 0.00 | 97.33 | 7.04 | 98.67 | 5.16 | SW | 90.77 | 22.53 | 82.66 | 26.04 | 96.00 | 11.21 |
| OW | 100 | 0.00 | 97.78 | 6.41 | 99.26 | 3.84 | OW | 100 | 0.00 | 88.00 | 14.74 | 98.67 | 5.16 | OW | 76.92 | 33.51 | 66.67 | 41.86 | 89.33 | 22.51 |
| SR | 100 | 0.00 | 97.04 | 9.12 | 100 | 0.00 | SR | 98.67 | 5.16 | 94.67 | 14.07 | 100 | 0.00 | SR | 69.23 | 45.18 | 54.67 | 38.89 | 90.67 | 16.68 |
| OR | 100 | 0.00 | 80.74 | 32.57 | 98.52 | 7.70 | OR | 90.67 | 19.81 | 77.33 | 21.02 | 96.00 | 11.21 | OR | 60.00 | 42.43 | 25.33 | 24.46 | 82.67 | 19.81 |
| C | 100 | 0.00 | 98.77 | 3.22 | 99.75 | 1.28 | C | 99.56 | 1.72 | 97.33 | 4.91 | 99.56 | 1.72 | C | 85.64 | 22.58 | 78.22 | 22.03 | 95.11 | 9.25 |
| non-C | 100 | 0.00 | 92.59 | 12.59 | 99.01 | 5.13 | non-C | 96.89 | 6.60 | 88.44 | 11.12 | 98.22 | 4.69 | non-C | 70.77 | 36.47 | 52.44 | 32.16 | 86.67 | 14.70 |
| mean | 100 | 0.00 | 95.68 | 7.21 | 99.38 | 2.62 | mean | 98.22 | 3.96 | 92.89 | 7.11 | 98.89 | 3.00 | mean | 78.21 | 29.02 | 65.33 | 25.19 | 90.89 | 8.77 |

| A. NAVS-G VNT: LHSP with Aphasia | | | | | | | |
|---|---|---|---|---|---|---|---|
| Fixed Effects | R² | Estimate | SE | p | z | χ² | Power |
| Single models | | | | | | | |
| VAN | 0.193 | | | 0.047 | | 6.106 | 70% |
| VAO | 0.054 | 1.087 | 0.738 | 0.140 | 1.474 | | 29% |
| VT ^ | 0.297 | | | 0.002 | | 17.46 | 97% |
| AAT.gs | 0.592 | 0.004 | 0.002 | 0.036 | 2.101 | | 0% |
| AAT.sy | 0.048 | 0.374 | 0.147 | 0.011 | 2.555 | | 64% |
| Verb freq ^ | 0.157 | −1.043 | 0.376 | 0.006 | −2.774 | | 82% |
| Age | 0.062 | −0.072 | 0.029 | 0.013 | −2.478 | | 63% |
| Adjusted models | | | | | | | |
| VAN + Age | 0.248 | | | | | | |
| VAN | | | | 0.047 | | 6.131 | 74% |
| Age | | −0.072 | 0.029 | 0.013 | −2.48 | | |
| VT + Age ^ | 0.357 | | | | | | |
| VT | | | | 0.001 | | 17.582 | 98% |
| ob2 < ob1 | | −2.845 | 1.121 | 0.028 | 2.54 | | |
| ob3 < ob1 | | −4.001 | 1.197 | 0.004 | 3.34 | | |
| op2 > ob2 | | 2.127 | 0.869 | 0.029 | 2.447 | | |
| op2 > ob3 | | 3.283 | 0.964 | 0.004 | 3.406 | | |
| op3 > ob3 | | 2.098 | 0.767 | 0.021 | 2.735 | | |
| Age | | −0.072 | 0.029 | 0.013 | −2.493 | | |
| B. Between groups | | | | | | | |
| Fixed effects | R² | Estimate | SE | p | z | χ² | Power |
| Group | 0.238 | | | 0.000 | | 29.33 | |
| HP > RH | | 0.416 | 0.623 | 0.504 | 0.668 | | |
| HP > LH ^ | | 2.478 | 0.470 | 0.000 | 5.275 | | |
| RH > LH ^ | | 2.062 | 0.510 | 0.000 | 4.046 | | |

| NAVS-G ASPT: LHSP with Aphasia | | | | | | | |
|---|---|---|---|---|---|---|---|
| Fixed Effects | R² | Estimate | SE | p | z | χ² | Power |
| Single predictor models | | | | | | | |
| VAN | 0.039 | | | 0.000 | | 15.919 | 66% |
| VAO | 0.006 | −0.882 | 0.637 | 0.166 | −1.386 | | 24% |
| VT | 0.039 | | | 0.002 | | 16.896 | 78% |
| NIHSS | 0.241 | −0.924 | 0.418 | 0.027 | −2.209 | | 61% |
| mRS | 0.280 | −2.906 | 1.212 | 0.016 | −2.938 | | 69% |
| BI ^ | 0.281 | 0.170 | 0.063 | 0.006 | 2.721 | | 84% |
| Adjusted models | | | | | | | |
| VAN + NIHSS | 0.327 | | | | | | |
| VAN ^ | | | | 0.000 | | 16.026 | 87% |
| 2Arg < 1Arg | | −3.586 | 1.220 | 0.010 | 2.939 | | |
| 3Arg < 1Arg | | −2.261 | 1.174 | 0.054 | 1.925 | | |
| 3Arg > 2Arg | | 1.325 | 0.525 | 0.017 | 2.523 | | |
| NIHSS | | −0.952 | 0.431 | 0.027 | −2.211 | | |
| VT + NIHSS | 0.329 | | | | | | |
| VT ^ | | | | 0.002 | | 16.896 | 87% |
| ob3 < ob1 | | −4.205 | 1.402 | 0.024 | 2.998 | | |
| op3 < ob1 | | −3.416 | 1.210 | 0.024 | 2.823 | | |
| NIHSS | | −0.9556 | 0.4338 | 0.027 | −2.203 | | |

| NAVS-G SPPT in LHPP with Aphasia | NAVS-G SPPT in RHSP without Aphasia | NAVS-G SPPT in HP | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Fixed eff. | Est. | SE | p | χ2 | z | Pw | Fixed eff. | Est. | SE | p | χ2 | z | Pw | Fixed eff. | Est. | SE | p | χ2 | z | Pw |

| Single predictor models | Single predictor models | Single predictor models | ||||||||||||||||||

| Canonicity (R2 = 0.129) | Canonicity (R2 = 0.073) | Canonicity (R2 = 0.119) | ||||||||||||||||||

| Canonicity | 2.318 | 0.661 | 0.000 | 3.504 | 98% | Canonicity | 1.438 | 0.780 | 0.065 | 1.844 | 49% | Canonicity | 1.85 | 0.93 | 0.0465 | 1.99 | 56% | |||

| C. without relatives (R2 = 0.108) | C. without relatives (R2 = 0.04) | C. without relatives (R2 = < 0.001) | ||||||||||||||||||

| A + SW > P + OW | 2.618 | 0.551 | <0.001 | 4.748 | 21% | A + SW > P + OW | 1.017 | 1.086 | 0.349 | 0.936 | 19% | A + SW > P + OW | 0.003 | 0.826 | 0.997 | 0.003 | 6% | |||

| Sentence type (R2 = 0.394) | Sentence type (R2 = 0.234) | Sentence type (R2 = 0.239) | ||||||||||||||||||

| ST | <0.001 | 82.395 | 100% | ST | 0.018 | 13.672 | 82% | ST | 0.000 | 42.442 | 100% | |||||||||

| NIHSS (R2 = 0.09) | Ed. (R2 = 0.162) | Ed.q. (R2= 0.188) | ||||||||||||||||||

| NIHSS | −0.466 | 0.205 | 0.023 | −2.277 | 66% | Ed.q. | 1.568 | 0.567 | 0.006 | 2.768 | 80% | Ed.q. | 1.441 | 0.266 | 0.000 | 5.427 | 100% | |||

| AAT.ss (R2 = 0.177) | Ed. Grad. (R2 = 0.039) | Ed. Grad. (R2 = 0.114) | ||||||||||||||||||

| AAT.ss | 0.320 | 0.083 | 0.000 | 3.867 | 96% | Ed.grad | 0.939 | 0.613 | 0.126 | 1.531 | 39% | Ed.grad | 1.488 | 0.468 | 0.001 | 3.181 | 89% | |||

| AAT.sy (R2 = 0.135) | Age (R2 = 0.103) | Age (R2 = 0.243) | ||||||||||||||||||

| AAT.sy | 0.845 | 0.278 | 0.002 | 3.038 | 85% | Age | −0.063 | 0.029 | 0.029 | −2.181 | 70% | Age | −0.095 | 0.029 | 0.001 | −3.314 | 96% | |||

| Aph.se (R2 = 0.168) | ||||||||||||||||||||

| Aph.se | 1.125 | 0.337 | 0.001 | 3.338 | 0% | |||||||||||||||

| Token Test (R2 = 0.111) | ||||||||||||||||||||

| TT | 0.178 | 0.080 | 0.025 | 2.236 | 0% | |||||||||||||||

| adjusted models | adjusted models | adjusted models | ||||||||||||||||||

| Canonicity + AAT.ss (R2 = 0.307) | Canonicity + Ed.q. (R2 = 0.234) | Canonicity + Ed.q. (R2 = 0.28) | ||||||||||||||||||

| Canonicity | 2.318 | 0.661 | 0.000 | 3.506 | 96% | Canonicity | 1.432 | 0.775 | 0.065 | 1.847 | 43% | Canonicity | 1.790 | 0.902 | 0.047 | 1.985 | 53% | |||

| AAT.ss | 0.320 | 0.083 | 0.000 | 3.872 | Ed.q. | 1.562 | 0.564 | 0.00 | 2.767 | Ed.q. | 1.437 | 0.265 | 0.000 | 5.429 | ||||||

| Canonicity without rel. + AAT.ss (R2 = 0.449) | C. without rel. + Ed.q. (R2 = 0.140) | C. without rel. + Ed.q. (R2 = 0.059) | ||||||||||||||||||

| A + SW > P + OW | 2.6481 | 0.5583 | 0.000 | 4.743 | 99% | A + SW > P + OW | 1.027 | 1.091 | 0.346 | 0.942 | 16% | A + SW > P + OW | −0.005 | 0.826 | 0.995 | −0.006 | 7% | |||

| AAT.ss | 0.5021 | 0.164 | 0.002 | 3.062 | Ed.q. | 1.150 | 0.718 | 0.109 | 1.602 | Ed.q. | 0.533 | 0.447 | 0.233 | 1.193 | ||||||

| Sentence type + AAT.ss (R2 = 0.569) | Sentence type + Ed.q. (R2 = 0.376) | Sentence type + Ed.q. (R2 = 0.507) | ||||||||||||||||||

| ST | 0.000 | 82.377 | 100% | ST | 0.018 | 13.671 | 92% | ST | 0.000 | 42.457 | 100% | |||||||||

| OW < SW | −1.407 | 0.502 | 0.006 | −2.805 | OR < SR | −1.930 | 1.000 | 0.179 | −1.930 | OR < SR | −2.665 | 0.677 | 0.000 | −3.935 | ||||||

| OR < SR | −2.044 | 0.466 | 0.000 | −4.390 | OR < P | −3.604 | 1.359 | 0.040 | −2.652 | OR < P | −4.113 | 1.106 | 0.000 | −3.720 | ||||||

| SR < A | −4.807 | 0.861 | 0.000 | −5.583 | OR < OW | −1.241 | 0.957 | 0.325 | −1.296 | OR < OW | −3.381 | 0.845 | 0.000 | −4.001 | ||||||

| SW < A | −2.480 | 0.845 | 0.004 | −2.935 | nOR < OR | −9.599 | 3.176 | 0.025 | −3.023 | nOR < OR | −13.484 | 2.215 | 0.000 | −6.087 | ||||||

| OR < P | −2.858 | 0.501 | 0.000 | −5.701 | nRel < Rel | −1.919 | 0.826 | 0.020 | −3.250 | nRel < Rel | −2.898 | 0.730 | 0.000 | −3.969 | ||||||

| SR < SW | −2.327 | 0.517 | 0.000 | −4.500 | Ed.q. | 1.566 | 0.566 | 0.006 | 2.769 | Ed.q. | 1.426 | 0.263 | 0.000 | 5.417 | ||||||

| nOR < OR | −12.239 | 1.685 | 0.000 | −7.264 | ||||||||||||||||

| AAT.ss | 0.329 | 0.085 | 0.000 | 3.866 | ||||||||||||||||

| Canonicity + NIHSS (R2 = 0.219) | ||||||||||||||||||||

| Canonicity | 2.316 | 0.661 | 0.000 | 3.505 | 96% | |||||||||||||||

| NIHSS | −0.465 | 0.204 | 0.023 | −2.281 | ||||||||||||||||

| Canonicity without relatives + NIHSS (R2 = 0.274) | ||||||||||||||||||||

| A + SW > P + OW | 2.629 | 0.554 | 0.000 | 4.742 | 100% | |||||||||||||||

| NIHSS | −0.783 | 0.420 | 0.062 | −1.864 | ||||||||||||||||

| Sentence type + NIHSS (R2 = 0.482) | ||||||||||||||||||||

| ST | < 0.001 | 69.185 | 100% | |||||||||||||||||

| P < A | −3.974 | 0.845 | 0.000 | −4.702 | ||||||||||||||||

| OW < SW | −1.401 | 0.500 | 0.006 | −2.800 | ||||||||||||||||

| OR < SR | −2.056 | 0.467 | 0.000 | −4.403 | ||||||||||||||||

| SR < A | −4.785 | 0.858 | 0.000 | −5.574 | ||||||||||||||||

| NIHSS | −0.477 | 0.210 | 0.023 | −2.276 | ||||||||||||||||

| NAVS-G SPPT: Between population-groups results | ||||||||||||||||||||

| Fixed eff. | Est. | SE | p | χ2 | z | Pw | Fixed eff. | Est. | SE | p | χ2 | z | Pw | Fixed eff. | Est. | SE | p | χ2 | z | Pw |

| Single predictor models | ||||||||||||||||||||

| Group (R2 = 0.229) | Group Sentence type (R2 = 0.441) | group sent. type age (R2 = 0.654) | ||||||||||||||||||

| Group | 0.000 | 35.736 | 100% | Group | 0.000 | 4.021 | Group | 0.000 | 38.928 | |||||||||||

| HP > RH | 1.078 | 0.625 | 0.084 | 1.727 | ST | 0.013 | 22385 | ST | 0.000 | 83.104 | ||||||||||

| HP > LH ^ | 3.857 | 0.620 | 0.000 | 6.226 | SWQ | Age | −0.066 | 0.024 | 0.006 | −2.728 | ||||||||||

| RH > LH ^ | 2.779 | 0.650 | 0.000 | 4.278 | Group | 0.051 | 5.934 | 43% | Group age ST | 0.0218 | 23.773 | |||||||||

| models with interactions | HP > LH | 2.955 | 1.128 | 0.026 | 2.621 | ST age | 0.032 | 12.238 | ||||||||||||

| Group canonicity (R2 = 0.318) | OWQ | Group age | 0.023 | 0.397 | ||||||||||||||||

| Group | 0.000 | 4.021 | Group | 0.013 | 8.691 | 66% | HP | −0.095 | 0.028 | 0.003 | −3.314 | 98% | ||||||||

| Canonicity | 0.000 | 12.526 | HP > RH | 3.010 | 1.406 | 0.048 | 2.141 | RH | −0.062 | 0.002 | 0.043 | −2.181 | 62.5% | |||||||

| Group Canonicity | 0.512 | 0.774 | HP > LH | 4.905 | 1.479 | 0.003 | 3.316 | Group Sentence type Education (R2= 947) | ||||||||||||

| C. without relatives (R2 = 0.143) | SR | Group | 0.000 | 47.131 | ||||||||||||||||

| Group | 0.000 | 15.269 | Group | 0.000 | 24.200 | 94% | Sentence type | 0.000 | 75.390 | |||||||||||

| Canonicity without relatives | 0.000 | 17.310 | HP > LH | 5.489 | 1.486 | 0.001 | 3.693 | Ed.q. | 1.423 | 0.388 | 0.000 | 3.671 | ||||||||

| A + SWQ | 56% | RH > LH | 4.980 | 1.631 | 0.003 | 3.054 | ST Ed.q. | 0.067 | 1.300 | |||||||||||

| HP > LH | 2.450 | 0.901 | 0.020 | 2.718 | OR | Group ST Ed.q. | 0.000 | 36.714 | ||||||||||||

| P + OWQ | 97% | Group | 0.000 | 3.263 | 100% | HP | 0.000 | 4.667 | 100% | |||||||||||

| HP > RH | 2.426 | 1.104 | 0.028 | 2.197 | HP > LH | 3.771 | 0.794 | 0.000 | 4.749 | RH | 0.003 | 2.323 | 55% | |||||||

| HP > LH | 4.705 | 1.086 | 0.000 | 4.333 | RH > LH | 3.205 | 0.799 | 0.000 | 4.011 | |||||||||||

| RH > LH | 2.280 | 0.986 | 0.028 | 2.312 | ||||||||||||||||

| Group Canonicity without relatives | 0.029 | 7.071 | ||||||||||||||||||

| NAVS-G SCT Results in LHSP with Aphasia | |||||||

|---|---|---|---|---|---|---|---|

| Fixed Effects | R2 | Estimate | SE | p | χ2 | z | Power |

| single predictor models | |||||||

| Canonicity ^ | 0.088 | 1.329 | 0.466 | 0.004 | 2.851 | 83% | |

| Canonicity without relatives | 0.092 | 1.424 | 0.599 | 0.018 | 2.376 | 69% | |

| Sentence type ^ | 0.19 | 0.004 | 17.211 | 100% | |||

| NIHSS | 0.058 | −0.254 | 0.121 | 0.036 | −2.098 | 67% | |

| BI | 0.047 | 0.044 | 0.022 | 0.042 | 2.032 | 58% | |

| AAT.ss ^ | 0.096 | 0.161 | 0.053 | 0.003 | 3.005 | 91% | |

| AAT.sy | 0.055 | 0.368 | 0.187 | 0.050 | 1.961 | 53% | |

| Aph.se | 0.108 | 0.701 | 0.270 | 0.009 | 2.599 | 0% | |

| adjusted models | |||||||

| Canonicity + AAT.ss | 0.185 | ||||||

| Canonicity ^ | 1.332 | 0.467 | 0.004 | 2.853 | 85% | ||

| AAT.ss | 0.161 | 0.053 | 0.003 | 3.015 | |||

| Canonicity without relatives + AAT.ss | 0.286 | ||||||

| Canonicity | 1.435 | 0.602 | 0.017 | 2.385 | 72% | ||

| AAT.ss | 0.232 | 0.082 | 0.005 | 2.821 | |||

| Sentence type + AAT.ss | 0.287 | ||||||

| Sentence type ^ | 0.004 | 17.574 | 97% | ||||

| OR < non-OR | −5.812 | 1.700 | 0.006 | −3.418 | |||

| Rel < non-Rel | −1.145 | 0.454 | 0.012 | −2.519 | |||

| AAT.ss | 0.162 | 0.053 | 0.002 | 3.026 | |||

| NAVS-G SCT: between group results | |||||||

| Fixed effects | R2 | Estimate | SE | p | χ2 | z | Power |

| Single predictor model | |||||||

| Group | 0.213 | 0.000 | 17.401 | 98.00% | |||

| HP > LHSP | −3.078 | 0.770 | 0.000 | 3.996 | |||

| RHSP > LHSP | −2.357 | 0.817 | 0.006 | 2.885 | |||
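
The z and p columns in the tables above can be cross-checked from the estimate and SE columns. The sketch below assumes that z is a Wald statistic (estimate divided by its standard error) and that p is the two-sided p-value under a standard normal distribution; the source tables do not state this explicitly, and the function names `wald_z` and `two_sided_p` are illustrative only.

```python
import math


def wald_z(estimate: float, se: float) -> float:
    """Wald statistic: a coefficient divided by its standard error (assumed definition)."""
    return estimate / se


def two_sided_p(z: float) -> float:
    """Two-sided p-value for z under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# Canonicity row of the single-predictor NAVS-G SCT models in the LHSP group:
# estimate 1.329, SE 0.466 (values taken from the table above).
z = wald_z(1.329, 0.466)
p = two_sided_p(z)
print(f"z = {z:.3f}, p = {p:.3f}")
```

Under these assumptions the computed values fall within rounding of the tabled z (2.851) and p (0.004) for that row; small differences in the last digit are expected because the tabled estimate and SE are themselves rounded.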

| A. NAT-G Results in LHSP with Aphasia | |||||||

|---|---|---|---|---|---|---|---|

| Fixed effects | R2 | Estimate | SE | p | χ2 | z | Power |

| Single predictor models | |||||||

| Canonicity | 0.066 | 2.228 | 0.652 | 0.001 | 3.416 | 96% | |

| Canonicity without relatives | 0.000 | 2.883 | 0.652 | 0.000 | 4.425 | 91% | |

| Sentence type | 0.194 | 0.000 | 39.635 | 99% | |||

| NIHSS | 0.235 | −1.042 | 0.468 | 0.026 | −2.227 | 77% | |

| AAT.sy * ^ | 0.306 | 1.630 | 0.551 | 0.003 | 2.958 | 89% | |

| Aph.se | 0.287 | 1.805 | 0.654 | 0.006 | 2.761 | 0% | |

| Token Test | 0.259 | 0.319 | 0.126 | 0.011 | 2.530 | 0% | |

| AAT.ss * ^ | 0.41 | 0.613 | 0.157 | 0.000 | 3.916 | 99% | |

| adjusted models | |||||||

| Canonicity + AAT.ss | 0.485 | ||||||

| Canonicity | 2.273 | 0.663 | 0.001 | 3.426 | 99% | ||

| AAT.ss | 0.630 | 0.161 | 0.000 | 3.921 | |||

| Canonicity without relatives + AAT.ss | 0.567 | ||||||

| Canonicity without relatives | 2.861 | 0.637 | 0.000 | 4.489 | 100% | ||

| AAT.ss | 0.627 | 0.182 | 0.001 | 3.444 | |||

| Sentence type + AAT.ss | 0.615 | ||||||

| Sentence type | 0.000 | 4.124 | 100% | ||||

| P < A | −4.137 | 1.035 | 0.000 | 3.996 | |||

| OW < SW | −2.258 | 0.780 | 0.005 | 2.895 | |||

| SR < A | −5.067 | 1.073 | 0.000 | 4.722 | |||

| OR < P | −2.169 | 0.730 | 0.005 | 2.972 | |||

| OR < OW | −2.403 | 0.743 | 0.002 | 3.236 | |||

| SR < SW | −3.421 | 0.832 | 0.000 | 4.113 | |||

| OR < non-OR | −1.472 | 2.435 | 0.000 | 4.301 | |||

| Rel < Non-Rel | −3.104 | 0.712 | 0.000 | 4.261 | |||

| AAT.ss | 0.679 | 0.171 | 0.000 | 3.963 | |||

| between group analyses | |||||||

| Fixed effects | R2 | Estimate | SE | p | χ2 | z | Power |

| Single predictor models | |||||||

| Group | 0.196 | ||||||

| Group | 0.001 | 13.309 | 88.00% | ||||

| HP > RHSP | −1.431 | 1.024 | 0.162 | 1.398 | |||

| HP > LHSP | −3.787 | 0.981 | 0.000 | 3.861 | |||

| RHSP > LHSP | −2.355 | 1.041 | 0.036 | 2.262 | |||

| models with interactions | |||||||

| Group * Canonicity | 0.199 | ||||||

| Group | 0.002 | 12.902 | |||||

| Canonicity | 0.001 | 1.756 | |||||

| Group * Canonicity | 0.150 | 3.792 | |||||

| Group * Canonicity without relatives | 0.120 | ||||||

| Group | 0.067 | 5.396 | |||||

| Canonicity without relatives | 0.001 | 11.093 | |||||

| Group * Canonicity without relatives | 0.018 | 80.031 | |||||

| Non-Canonical | |||||||

| Group | 0.249 | 0.002 | 12.692 | 77.00% | |||

| HP > LHSP | 4.834 | 1.281 | 0.000 | 3.774 | |||

| RHSP > LHSP | 2.908 | 1.293 | 0.037 | 2.250 | |||

| Group * Sentence type | 0.204 | ||||||

| Group | 0.010 | 9.237 | |||||

| Sentence type | 0.000 | 3.745 | |||||

| Group * Sentence type | 0.059 | 17.763 | |||||

| Test | Error Type in Patients with Aphasia | Number | % |

|---|---|---|---|

| VNT | Semantic paraphasia with a verb with: | 24 | 71 |

| A. the same argument (e.g., schieben (to push) instead of ziehen (to pull)) | 17 | ||

| B. a lower argument when the target verb was 3 Ob and 3 Op (e.g., holen—to get instead of geben—to give) | 7 | ||

| Verb omission | 6 | 18 | |

| Phonematic paraphasia | 2 | 6 | |

| Substitution by nouns | 1 | 3 | |

| Perseveration | 1 | 3 | |

| ASPT | Missing verb conjugation together with wrong verb position (e.g., Der Mann Brief schicken, "The man letter send") | 26 | 56 |

| The omission of one given argument: | 6 | 13 | |

| A. of the agents (e.g., Retten die Frau—Saves the woman) | 1 | ||

| B. of the goal (e.g., Mann stellt Schachtel—Man puts box) | 1 | ||

| C. of the patients (e.g., Der Mann schreibt einen Brief, instead of Der Mann schreibt der Frau einen Brief—The man writes a letter, the word woman is missing) | 4 | ||

| Wrong order of arguments in sentences with 3 arguments (e.g., Der Mann schickt einen Brief der Frau, literally "The man sends a letter the woman"; in German the dative object always precedes the accusative object when the latter takes an indefinite article, so the correct sentence is Der Mann schickt der Frau einen Brief) | 5 | 11 | |

| Missing/wrong conjugation with right verb position (e.g., Hund beißen Katze—Dog bite cat) | 3 | 6 | |

| Use of a wrong, not given verb (e.g., Das Baby strampelt statt krabbelt—The baby is kicking instead of crawling) | 3 | 6 | |

| Perseveration | 1 | 2 | |

| Omission of the whole sentence | 1 | 2 | |

| Role reversal (e.g., Die Frau rettet den Mann—The woman is saving the man, while the picture showed the opposite) | 2 | 4 | |

| SPPT | Incorrect word order | 96 | 64 |

| Passive subordinate clauses instead of OR sentences | 23 | 15 | |

| Canonical instead of non-canonical | 21 | 14 | |

| Role reversal | 4 | 3 | |

| Verb omission (SR/OR) | 3 | 2 | |

| Use of wrong personal pronouns | 3 | 2 |

| Results of the Pearson-Correlation Analysis across All Subtests of the NAVS-G and NAT-G | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Subtest | VNT p-value | VNT r | VNT CI | VCT p-value | VCT r | VCT CI | ASPT p-value | ASPT r | ASPT CI |
| VNT | | 1 | | 0.029 | 0.564 | 0.07 to 0.84 | 0.007 | 0.661 | 0.23 to 0.88 |
| VCT | 0.029 | 0.564 | 0.07 to 0.84 | | 1 | | 0.000 | 0.878 | 0.67 to 0.96 |
| ASPT | 0.007 | 0.661 | 0.23 to 0.88 | 0.000 | 0.878 | 0.67 to 0.96 | | 1 | |
| NAT | 0.096 | 0.481 | −0.1 to 0.82 | 0.276 | 0.327 | −0.27 to 0.74 | 0.115 | 0.459 | −0.12 to 0.81 |
| SPPT | 0.104 | 0.436 | −0.1 to 0.78 | 0.253 | 0.315 | −0.24 to 0.71 | 0.069 | 0.482 | −0.04 to 0.8 |
| SCT | 0.041 | 0.533 | 0.03 to 0.82 | 0.094 | 0.449 | −0.08 to 0.78 | 0.017 | 0.605 | 0.14 to 0.85 |
| NAVS-G and NAT-G | 0.008 | 0.656 | 0.22 to 0.87 | 0.010 | 0.640 | 0.19 to 0.87 | 0.001 | 0.777 | 0.44 to 0.92 |
| Subtest | NAT p-value | NAT r | NAT CI | SPPT p-value | SPPT r | SPPT CI | SCT p-value | SCT r | SCT CI |
| VNT | 0.096 | 0.481 | −0.1 to 0.82 | 0.104 | 0.436 | −0.1 to 0.78 | 0.041 | 0.533 | 0.03 to 0.82 |
| VCT | 0.276 | 0.327 | −0.27 to 0.74 | 0.253 | 0.315 | −0.24 to 0.71 | 0.094 | 0.449 | −0.08 to 0.78 |
| ASPT | 0.115 | 0.459 | −0.12 to 0.81 | 0.069 | 0.482 | −0.04 to 0.8 | 0.017 | 0.605 | 0.14 to 0.85 |
| NAT | | 1 | | 0.000 | 0.881 | 0.64 to 0.96 | 0.000 | 0.845 | 0.55 to 0.95 |
| SPPT | 0.000 | 0.881 | 0.64 to 0.96 | | 1 | | 0.000 | 0.904 | 0.73 to 0.97 |
| SCT | 0.000 | 0.845 | 0.55 to 0.95 | 0.000 | 0.904 | 0.73 to 0.97 | | 1 | |
| NAVS-G and NAT-G | 0.000 | 0.848 | 0.56 to 0.95 | 0.000 | 0.816 | 0.52 to 0.94 | 0.000 | 0.896 | 0.71 to 0.96 |
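
As a reading aid for the CI columns of the correlation table, the following sketch shows one common way to obtain a 95% confidence interval for a Pearson r, the Fisher z-transformation. Both the method and the sample size (n = 15) are assumptions made for illustration; the table does not report how the intervals were computed or the exact n per pair, and `pearson_ci` is a hypothetical helper name.

```python
import math


def pearson_ci(r: float, n: int, z_crit: float = 1.959964) -> tuple[float, float]:
    """Approximate CI for a Pearson correlation via the Fisher z-transformation.

    r      : observed correlation
    n      : number of paired observations (assumed, not given in the table)
    z_crit : standard normal quantile (1.959964 for a 95% interval)
    """
    z = math.atanh(r)                      # Fisher transform of r
    half_width = z_crit / math.sqrt(n - 3) # standard error is 1 / sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


# VNT vs. VCT: r = 0.564 in the table above; n = 15 is an illustrative assumption.
low, high = pearson_ci(0.564, 15)
print(f"95% CI: {low:.2f} to {high:.2f}")
```

With these assumed inputs the interval comes out close to the tabled 0.07 to 0.84; other rows agree only approximately, so the authors may have used a different n or a different interval method.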

| B. Results of the Comparison between the Subtests Using Wilcoxon Tests. | | | | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Subtest | VNT p | VNT Z | VNT r | VCT p | VCT Z | VCT r | ASPT p | ASPT Z | ASPT r | NAT p | NAT Z | NAT r | SPPT p | SPPT Z | SPPT r | SCT p | SCT Z | SCT r |
| VNT | | | | 0.002 | −3.078 | 0.795 | 0.003 | −2.976 | 0.768 | 0.346 | 0.942 | 0.261 | 0.003 | 2.954 | 0.763 | 0.002 | −3.078 | 0.854 |
| VCT | 0.002 | 3.078 | 0.795 | | | | 0.043 | 2.023 | 0.522 | 0.007 | −2.675 | 0.742 | 0.001 | 3.415 | 0.882 | 0.003 | −2.956 | 0.762 |
| ASPT | 0.003 | 2.976 | 0.768 | 0.043 | −2.023 | 0.522 | | | | 0.009 | −2.601 | 0.721 | 0.001 | 3.411 | 0.881 | 0.015 | −2.443 | 0.631 |
| NAT | 0.346 | 0.942 | 0.261 | 0.007 | −2.675 | 0.742 | 0.009 | −2.601 | 0.721 | | | | 0.005 | 2.836 | 0.787 | 0.065 | −1.843 | 0.511 |
| SPPT | 0.003 | −2.954 | 0.763 | 0.001 | −3.415 | 0.882 | 0.001 | −3.411 | 0.881 | 0.005 | −2.836 | 0.787 | | | | 0.001 | −3.413 | 0.881 |
| SCT | 0.002 | 3.078 | 0.854 | 0.003 | −2.956 | 0.763 | 0.015 | −2.442 | 0.631 | 0.065 | 1.843 | 0.511 | 0.001 | 3.413 | 0.881 | | | |
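
The r column of the Wilcoxon comparison can be read as an effect size. A minimal sketch follows, assuming the conventional definition r = |Z| / √N; the source table does not state the formula, and the N values used below are hypothetical, chosen only because they are consistent with the tabled figures. The function name `wilcoxon_effect_size` is illustrative.

```python
import math


def wilcoxon_effect_size(z: float, n: int) -> float:
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank comparison.

    z : standardized test statistic from the Wilcoxon test
    n : number of paired observations (assumed, not reported in the table)
    """
    return abs(z) / math.sqrt(n)


# VNT vs. VCT: Z = -3.078 in the table; N = 15 is an assumption.
print(round(wilcoxon_effect_size(-3.078, 15), 3))

# VNT vs. NAT: Z = 0.942 in the table; N = 13 is an assumption.
print(round(wilcoxon_effect_size(0.942, 13), 3))
```

By the usual rule of thumb for this statistic, values around 0.5 and above count as large effects, which would apply to every significant comparison in the table.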

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ditges, R.; Barbieri, E.; Thompson, C.K.; Weintraub, S.; Weiller, C.; Mesulam, M.-M.; Kümmerer, D.; Schröter, N.; Musso, M. German Language Adaptation of the NAVS (NAVS-G) and of the NAT (NAT-G): Testing Grammar in Aphasia. Brain Sci. 2021, 11, 474. https://doi.org/10.3390/brainsci11040474