Abstract

This study assesses the performance of large ensembles of global (CMIP5, CMIP6) and regional (CORDEX, CORE) climate models in simulating extreme precipitation over four major river basins (Limpopo, Okavango, Orange, and Zambezi) in southern Africa during the period 1983–2005. The ability of the model ensembles to simulate seasonal extreme precipitation indices is assessed using three high-resolution satellite-based datasets. The results show that all ensembles overestimate the annual cycle of mean precipitation over all basins, although the intermodel spread is large, with CORDEX being the closest to the observed values. Generally, all ensembles overestimate the mean and interannual variability of rainy days (RR1), maximum consecutive wet days (CWD), and heavy and very heavy precipitation days (R10mm and R20mm, respectively) over all basins during all three seasons. Simple daily rainfall intensity (SDII) and the number of consecutive dry days (CDD) are generally underestimated. The lowest Taylor skill scores (TSS) and spatial correlation coefficients (SCC) are depicted for CDD over Limpopo compared with the other indices and basins, respectively. Additionally, the ensembles exhibit the highest normalized standard deviations (NSD) for CWD compared to other indices. The intermodel spread and performance of the RCM ensembles are lower and better, respectively, than those of GCM ensembles (except for the interannual variability of CDD). In particular, CORDEX performs better than CORE in simulating extreme precipitation over all basins. Although the ensemble biases are often within the range of observations, the statistically significant wet biases shown by all ensembles underline the need for bias correction when using these ensembles in impact assessments.


1 Introduction

Most extreme climate events, especially those related to precipitation, have profound effects on human populations, ecosystems, and the environment (Lu et al. 2022). In many parts of the globe, substantial changes in the intensity and frequency of extreme precipitation events have already been reported (Wan et al. 2021; Seneviratne et al. 2021). Because of poor adaptive capacity due to restricted access to climate-related information, technology, finance, and capital assets, developing nations are particularly vulnerable to the effects of precipitation extremes, including floods and droughts (Stephenson et al. 2010; Sylla et al. 2016; Yaduvanshi et al. 2021; Abiodun et al. 2020; Akinsanola et al. 2021). This is particularly evident in southern African nations; for instance, during the 2015–16 rainy season in southern Africa, a severe drought and subsequent dry spells caused widespread crop failure, which resulted in severe food insecurity in the region (approximately 40 million people required humanitarian support; SADC 2016). More recently, heavy precipitation events caused by tropical cyclones (e.g., cyclones Idai in 2019, Chalane in 2020, and Eloise in 2021) have killed thousands of people, forced millions to evacuate, and destroyed infrastructure.

River basins are not exempt from the effects of climate change, and they contribute substantially to socioeconomic development (Jain and Singh 2020). For instance, agricultural production was weakened over the Zambezi basin during droughts that occurred during the rainy seasons of 1991–1992 and 1994–1995 (SADC-WD/ZRA 2008). In addition, river levels in sub-Saharan Africa were very low in 2019, reducing the water available at Kariba for running the hydropower plant to 10% (Hulsman et al. 2021). Climate change and human population growth are expected to impose more stress on the ecology of the Zambezi basin (SADC-WD/ZRA 2008). The Limpopo and Orange basins are also expected to experience significant desiccation and a decline in water levels due to changes in precipitation and temperature (Mitchell 2013).

The construction of climate information, especially when relevant for decision-making at local and regional scales, must be based on multiple lines of evidence, including but not limited to the analysis of the results of different classes of climate models (Doblas-Reyes et al. 2021). In fact, using different classes of model ensembles is very useful for detecting areas of disagreement and agreement in climate information across various ensembles (Dosio et al. 2021a; Doblas-Reyes et al. 2021).

The World Climate Research Programme (WCRP) launched several coordinated programs to provide historical and future climate projections using large ensembles of global climate models (GCMs) and regional climate models (RCMs). The most prominent among these coordinated programs are Coupled Model Intercomparison Project Phase 5 (CMIP5; Taylor et al. 2012), Phase 6 (CMIP6; Eyring et al. 2016), and the Coordinated Regional Climate Downscaling Experiment (CORDEX; Giorgi and Gutowski 2015). CMIP experiments provide historical and future climate projections from a large ensemble (approximately 30) of GCMs (Luo et al. 2022). CMIP6 was launched as an improvement to CMIP5, particularly due to improved physical processes, parameterizations, increased spatial resolutions, and additional biogeochemical processes (Eyring et al. 2016). However, for regionally and locally tailored impact assessments, high-resolution climate projections are required (Doblas-Reyes et al. 2021). The simulation of regional phenomena, especially those impacted by complex topography, land use heterogeneity, coastal lines, and mesoscale convection, is often poor in GCMs because of their low horizontal resolution (typically on the order of a hundred kilometers or more). To this extent, although dynamic downscaling does not always add value, compared to GCMs, in the simulation of mean quantities (e.g., Dosio et al. 2015), RCMs improve the simulation of precipitation characteristics, especially for extreme events (e.g., Gibba et al. 2019).

Under the CORDEX initiative, RCMs were used to dynamically downscale several CMIP5 GCMs to an ~ 50 km horizontal resolution over several domains (Giorgi et al. 2021). More recently, the CORDEX-CORE (Coordinated Output for Regional Evaluations) initiative was launched, aiming at producing climate projections in a more homogeneous framework, where all participating RCMs were required to downscale the same set of driving GCMs (in contrast to CORDEX, where the choice of GCMs was left to the individual RCM modeling groups). Additionally, to make the CORE results more suitable for application in impact studies, the horizontal resolution was set twice as high as that of CORDEX (~ 25 km).

The application of climate projections from CMIP and CORDEX is an important tool for generating information on climate change, which is important for policymaking and developing adaptation strategies. However, before their use, it is essential to validate the performance of climate models over a historical reference period, especially when climate simulations are used as inputs to impact models. Most studies evaluating the ability of CMIP, CORDEX, and CORE to simulate extreme precipitation over Africa have focused on continental or regional scales (Pinto et al. 2016; Abiodun et al. 2017; Gibba et al. 2019; Abiodun et al. 2020; Dosio et al. 2021a; Ogega et al. 2020; Faye and Akinsanola 2022; Akinsanola et al. 2021; Ayugi et al. 2021; Dosio et al. 2022a, b). Additionally, most of these studies are based on climate models from one or two coordinated projects or use a limited subset of the model ensembles. Recently, studies have been conducted in Africa using ensembles of CMIP5, CMIP6, CORDEX, and CORE. For instance, extreme precipitation from large CMIP (approximately 30 CMIP5 and CMIP6 models), CORDEX (24), and CORE (9) ensembles was compared by Dosio et al. (2021a) over Africa, but their analysis focused on future projections. Focusing on southern Africa, Karypidou et al. (2022) assessed the performance of CMIP5, CMIP6, CORDEX, and CORE ensembles in simulating mean and extreme precipitation. However, their findings were confined to a relatively small subset of the CMIP ensembles (13 CMIP5 and 8 CMIP6 models), and the study’s main emphasis was on mean precipitation.

Several studies have evaluated how well GCMs and RCMs can simulate extreme rainfall in African river basins. Diatta et al. (2020) investigated the Rossby Center Regional Climate Model’s (RCA4) ability to simulate extreme precipitation over the Casamance river basin. Salaudeen et al. (2021) evaluated the CMIP5 GCMs’ ability to reproduce extreme precipitation in the Gongola Basin. Agyekum et al. (2022) investigated the performance of CMIP6 in simulating extreme precipitation over the Volta Basin. Samuel et al. (2022) evaluated CORE’s capacity to reproduce Zambezi’s extreme precipitation.

Although prior studies have evaluated climate models’ capacity to reproduce extreme precipitation over southern Africa, to our knowledge, no studies have compared the seasonal performance of large ensembles over southern Africa and its major river basins. In this study, we investigate how well GCM (CMIP5 and CMIP6) and RCM (CORDEX and CORE) ensembles can reproduce observed extreme precipitation during the rainy season. This study focuses on DJF and the transitional seasons (SON and MAM). These seasons are chosen because of their impact on southern Africa’s rain-fed agriculture. Understanding how climate model simulations perform during these seasons is vital for southern African policymakers and climate information users. We employed six indices to characterize mean and extreme precipitation as established by the Expert Team on Climate Change Detection and Indices (ETCCDI, Zhang et al. 2011), focusing on indices for identifying excessive dryness and moderately wet conditions. The results of this research provide information that is beneficial to the scientific and user communities, especially regarding the use of these ensembles as inputs in impact models. The remainder of this paper is organized as follows: Sect. 2 presents the study area, data, and methods. The results and discussion are presented in Sect. 3, and a summary and concluding remarks are presented in Sect. 4.

2 Study area, data, and methods

2.1 Definition of the study area and sub-regions

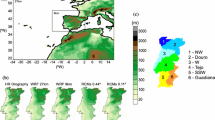

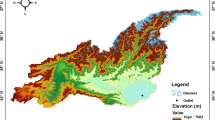

In this study, we define southern Africa as the region that lies between 10–35°S and 10–40°E, focusing on four major river basins (the Limpopo, Okavango, Orange, and Zambezi basins), as shown in Fig. 1. In fact, major economic activities, such as agriculture and power production, occur within the basins, making them vital in socioeconomic activities across the region (Abiodun et al. 2019).

2.2 Observational data

Several studies (Gibba et al. 2019; Abiodun et al. 2020; Dosio et al. 2021b; Hamadalnel et al. 2022; Olusegun et al. 2022) have highlighted the lack of reliable, high-quality in situ datasets with spatiotemporal coverage suitable for model evaluation as a key challenge in Africa. Despite the considerable differences between merged satellite and gauged station data, they are widely used as references for model evaluation over Africa (e.g., Abiodun et al. 2020; Ayugi et al. 2021; Klutse et al. 2021; Samuel et al. 2022). Discrepancies among the observations make it difficult to choose a specific dataset as a reference for model evaluation. Therefore, the mean of multiple observational datasets has been used as a reference for model evaluation in previous studies (e.g., Abiodun et al. 2020; Wan et al. 2021; Karypidou et al. 2022; Ilori and Balogun 2021).

In this study, we used gridded data based on merged satellite and gauge observations. In particular, we used daily precipitation datasets obtained from the Climate Hazards Group Infrared Precipitation with Station (CHIRPs version 2, Funk et al. 2015), with a spatial resolution of 0.05° × 0.05°, the Tropical Applications of Meteorology using SATellite and ground-based observations (TAMSAT version 3.1, Maidment et al. 2017), with a spatial resolution of 0.04° × 0.04°, and the African Rainfall Climatology (ARC version 2, Novella and Thiaw 2013) from the Famine Early Warning System, with a spatial resolution of 0.1° × 0.1°. These datasets have been evaluated against gauge stations over southern Africa and have demonstrated better performance than other existing gridded observational data over the region (Maidment et al. 2017). Their high spatial resolution and superior performance over southern Africa make these datasets suitable for climate model evaluation, particularly over small regions such as river basins.

2.3 Climate model simulations

In this study, we used historical daily precipitation simulations from both global (CMIP5, CMIP6) and regional (CORDEX, CORE) climate models. Tables S1–4 provide a list of the models and their basic descriptions. In particular, we used 30 simulations from CMIP5, 26 simulations from CMIP6, 25 simulations based on six RCMs downscaling 13 CMIP5 GCMs under the CORDEX experiment, and 9 simulations based on three RCMs downscaling three CMIP5 GCMs under the CORE experiment, obtained from the Earth System Grid Federation (ESGF) servers. The models are selected based on the availability of both historical and future (SSP5-8.5 for CMIP6 and RCP 8.5 for CMIP5, CORDEX, and CORE) simulations at the time of writing. The models selected here are used to project future changes in the second part of our study. To simplify the evaluation, we used simulations of one ensemble member for each model.

2.4 Extreme precipitation indices

This study analyzes six extreme precipitation indices (Table 1) as defined by the Expert Team on Climate Change Detection and Indices (ETCCDI) (Zhang et al. 2011). We used Climate Data Operators (CDO, https://code.zmaw.de/projects/cdo) to compute all the indices. The selected indices provide information on the present and future characteristics of both wet and dry conditions in terms of intensity and duration. These indices have been widely used to define extreme precipitation (Gibba et al. 2019; Akinsanola et al. 2021; Zhu et al. 2021a, b; Abiodun et al. 2020; Dosio et al. 2021a; Ayugi et al. 2021; Yao et al. 2021; Dike et al. 2022; Luo et al. 2022; Samuel et al. 2022). The indices we used can be classified into three categories: duration indices, frequency indices, and intensity indices (Table 1). We analyze the indices for each year during December–January–February (DJF), March–April–May (MAM), and September–October–November (SON) from each observational dataset and climate model simulation on their native grids.
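As an illustration of how the six indices can be derived from a daily precipitation series, the following is a minimal Python sketch. It is not the CDO workflow used in this study. The 1 mm wet-day threshold and the index definitions follow the standard ETCCDI conventions and are assumed here, since Table 1 is not reproduced in this text; the function names `longest_run` and `seasonal_indices` are hypothetical.

```python
import numpy as np

WET_DAY_MM = 1.0  # assumed ETCCDI wet-day threshold (mm/day)


def longest_run(mask):
    """Length of the longest run of True values in a 1-D boolean sequence."""
    best = current = 0
    for flag in mask:
        current = current + 1 if flag else 0
        best = max(best, current)
    return best


def seasonal_indices(pr):
    """Compute the six indices for one season of daily precipitation (mm/day)."""
    pr = np.asarray(pr, dtype=float)
    wet = pr >= WET_DAY_MM
    n_wet = int(wet.sum())
    return {
        "CDD": longest_run(~wet),            # longest dry spell (days)
        "CWD": longest_run(wet),             # longest wet spell (days)
        "RR1": n_wet,                        # number of rainy days
        "R10mm": int((pr >= 10.0).sum()),    # heavy precipitation days
        "R20mm": int((pr >= 20.0).sum()),    # very heavy precipitation days
        # mean precipitation on wet days (mm/day); undefined without wet days
        "SDII": float(pr[wet].sum() / n_wet) if n_wet else float("nan"),
    }
```

For example, `seasonal_indices([0, 0, 5, 12, 25, 0.5, 0, 0, 0, 3])` treats the 0.5 mm day as dry, so the longest dry spell spans four days and the longest wet spell three.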

2.5 Evaluation methods

The performance of the CMIP5, CMIP6, CORDEX, and CORE simulations in representing historical extreme precipitation indices over southern Africa is evaluated for 23 years (1983–2005), the period common to both the observations and the climate model simulations. The spatial resolution differs across the individual models for CMIP5 and CMIP6. Although individual simulations for CORDEX and CORE are available on 0.5° and 0.25° grids, respectively, the grid types differ across climate models. Hence, for CMIP5 and CMIP6, the indices are regridded onto a 1.32° × 1.32° grid using the bilinear method, while for CORDEX and CORE, they are remapped to a common grid type (latitude × longitude) at their original resolution. The equal-weighted method is used for computing multimodel ensemble means (MMEs). We acknowledge that the equal-weighted technique used here is limited because models generated by the same institute or GCMs downscaled by the same RCM may have similar structural biases. This method has been used by most research dealing with ensembles of climate models over different regions of the world, including the “Africa-box” in the recent IPCC Special Report on 1.5 °C warming (Hoegh-Guldberg et al. 2018) and AR6 (Gutiérrez et al. 2021). Weigel et al. (2010) found that, for many applications, equal weighting may be the more transparent way to combine models and is preferable to a weighting that does not appropriately represent the true underlying uncertainties, as “optimum weighting” requires both accurate knowledge of the single model skill and the relative contributions of the joint model error and unpredictable noise; both issues are still open to discussion.
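The equal-weighted ensemble mean described above can be sketched in a few lines of Python. This is a simplified illustration under the assumption that all model fields have already been remapped to one common grid; the function name `equal_weight_mme` and the handling of missing values are illustrative choices, not details taken from this study.

```python
import numpy as np


def equal_weight_mme(model_fields):
    """Equal-weighted multimodel mean of fields on a common grid.

    model_fields: sequence of 2-D arrays (lat x lon), one per model.
    A grid cell that is NaN in one model is skipped for that model only
    (an assumption made for this sketch).
    """
    stack = np.stack([np.asarray(f, dtype=float) for f in model_fields])
    return np.nanmean(stack, axis=0)
```

Every model receives the same weight regardless of its skill or of how many simulations share a driving GCM or RCM, which is the limitation acknowledged in the text.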

To compute the mean of the three observations (OBSE) and evaluate the MMEs using statistical methods, we regridded the indices for each observation onto the corresponding MME grid using a conservative method.

The mean bias (MB; MME minus OBSE) is used to evaluate the performance of the MMEs in reproducing the spatial distribution of the magnitude of extreme precipitation. To assess the statistical significance of the bias, we used the method defined by Dosio et al. (2021a), which is similar to that developed for the Intergovernmental Panel on Climate Change (IPCC) 6th Assessment Report (AR6, Gutiérrez et al. 2021). Briefly, the bias of each simulation is considered statistically significant if its magnitude is greater than the interannual variability of the observations (regardless of the sign of the bias). The interannual variability is defined as γ = √(2⁄23) × 1.645 × σ, where σ is the standard deviation of the linearly detrended annual time series of the observations. The significance of the MME bias is then determined as follows:

- If more than 66% of the individual models exhibit significant bias and more than 66% of those models agree on the sign of the bias, the bias of the MMEs is deemed significant.

- If more than 66% of simulations show significant bias but less than 66% agree on its sign, the bias of the MMEs is deemed conflicting.
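The significance test above can be sketched at a single grid cell as follows. This is a hedged illustration rather than the authors' implementation: the function names are hypothetical, the sample standard deviation (`ddof=1`) is an assumption, the series length replaces the fixed 23 years, and sign agreement is evaluated among the models with significant bias, which is one reading of "those models".

```python
import numpy as np


def variability_threshold(obs_annual, z=1.645):
    """gamma = sqrt(2/n) * z * sigma, with sigma taken from the
    linearly detrended annual series of the observations."""
    obs = np.asarray(obs_annual, dtype=float)
    years = np.arange(obs.size)
    slope, intercept = np.polyfit(years, obs, 1)
    residual = obs - (slope * years + intercept)
    sigma = residual.std(ddof=1)  # assumption: sample standard deviation
    return np.sqrt(2.0 / obs.size) * z * sigma


def classify_mme_bias(model_biases, gamma, agreement=0.66):
    """Label the ensemble bias at one grid cell."""
    biases = np.asarray(model_biases, dtype=float)
    significant = np.abs(biases) > gamma
    if significant.mean() <= agreement:
        return "non-significant"
    sig = biases[significant]
    same_sign = max((sig > 0).mean(), (sig < 0).mean())
    # The text leaves exactly 66% agreement undefined; it is treated
    # as conflicting here.
    return "significant" if same_sign > agreement else "conflicting"
```

With `gamma = 1.0`, the biases `[2, 3, 2.5, -0.1]` are labeled significant (three of four models exceed the threshold with the same sign), whereas `[2, -3, 2.5, -2]` are labeled conflicting.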

We further evaluated the performance of the model ensembles in simulating the observed extreme precipitation indices spatially averaged over four major river basins in southern Africa. The Taylor diagram (Taylor 2001) is used to evaluate the ability of the models to reproduce the observed spatial patterns of extreme precipitation. The Taylor diagram is used to summarize the three statistics (spatial correlation coefficients: SCC; standard deviation: SD; and root mean square error: RMSE). The Taylor skill score (TSS; Wang et al. 2018) is used to further quantify the similarities between the ensembles and the observation. The TSS is calculated as follows:

Furthermore, the skill of the ensembles is quantified using the Kling–Gupta efficiency (KGE; Gupta et al. 2009). The KGE is calculated as follows:

where PC is the SCC between the OBSE and models and \({PC}_{0}\) is the maximum SCC (here, we used 1). \({\sigma }_{o}\) and \({\sigma }_{m}\) are the SDs of the OBSE and models, respectively. \({\mu }_{o}\) and \({\mu }_{m}\) are the means of the OBSE and models, respectively.
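The two equations referred to above are not preserved in this text. As a tentative reconstruction in the notation just defined, the KGE is shown in the standard form of Gupta et al. (2009). For the TSS, one widely used form of the skill score of Taylor (2001) is shown; variants that raise the correlation terms to a higher power also exist, and this text does not indicate which variant the authors applied.

```latex
\begin{align}
\mathrm{TSS} &= \frac{4\,(1 + PC)}
  {\left(\dfrac{\sigma_m}{\sigma_o} + \dfrac{\sigma_o}{\sigma_m}\right)^{2} (1 + PC_0)} \\[6pt]
\mathrm{KGE} &= 1 - \sqrt{(PC - 1)^{2}
  + \left(\frac{\sigma_m}{\sigma_o} - 1\right)^{2}
  + \left(\frac{\mu_m}{\mu_o} - 1\right)^{2}}
\end{align}
```

In both forms, a perfect simulation ($PC = PC_0 = 1$, $\sigma_m = \sigma_o$, $\mu_m = \mu_o$) yields a score of 1.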

The standard deviation (SD) of basin-averaged time series is used to evaluate the ability of the models to represent the magnitude of the observed interannual variability of each extreme precipitation index. The SD has been used to assess interannual variability in previous studies (e.g., Rajendran et al. 2022; Dosio et al. 2022a, b) and the IPCC AR6 report (Gutiérrez et al. 2021).

3 Results and discussion

The main focus of this section is on the performance of multimodel ensembles in reproducing extreme precipitation, with a brief evaluation of the monthly mean precipitation annual cycle.

3.1 Annual cycle of daily precipitation

Figure 2 shows the annual cycles of monthly averaged daily precipitation for the OBSE and multimodel ensemble means (MMEs) for CMIP5, CMIP6, CORDEX, and CORE. The results have been spatially averaged over the four southern Africa major river basins (Limpopo, Okavango, Orange, and Zambezi) shown in Fig. 1. Generally, all MMEs can reproduce the annual cycle of precipitation over all four river basins (Fig. 2). This is consistent with the findings of Karypidou et al. (2022) over southern Africa and Dosio et al. (2021a) over western southern Africa and eastern southern Africa, respectively. Although MMEs generally capture the temporal evolution of the precipitation cycle, they overestimate precipitation over all basins, especially between November and March. CMIP6 (CORDEX) exhibits the largest (lowest) biases over all basins except over the Orange basin. Similar to the findings of previous studies (Lim Kam Sian 2022; Karypidou et al. 2022), the wet biases of the MMEs are larger during the peak of the rainy season (DJF). Generally, the intermodel spread is very large, with larger uncertainties for CMIP5 than for the other ensembles. More specifically, CMIP5 (CORDEX) shows the largest (smallest) intermodel uncertainty over the Okavango and Orange (Limpopo and Zambezi) basins, while CMIP6 (CORE) shows the smallest (largest) intermodel uncertainty over the Limpopo (Zambezi) basins. The performance of the RCM MMEs is better than that of the GCM MMEs, especially during the peak (DJF) of precipitation. CORDEX shows good agreement with the observed precipitation peak during the DJF over the Zambezi basin. In agreement with a previous study (Karypidou et al. 2022), CORDEX and CORE MMEs perform better than CMIP5 and CMIP6, which shows the added value of downscaling in simulating annual precipitation cycles over the four basins. 
However, the better performance of CORDEX compared with CORE shows that, in addition to resolution, the physical configuration of the models also plays a critical role in improving the simulation of precipitation. Similar findings were reported by Wu et al. (2020). For instance, the better performances in CCLM and REMO might be associated with improvements in the convective scheme under CORE (Tiedtke with modifications) compared to under CORDEX (Tiedtke). In fact, Olusegun et al. (2022) noted that the modified Tiedtke cumulus convection scheme is more suitable for West Africa than cumulus convection. Figure 6 in Panitz et al. (2014) shows that increasing the resolution of CCLM from ~ 50 to ~ 25 km has no impact on the simulated annual precipitation cycle over southern Africa.

The climatological (1983–2005) annual cycle of mean precipitation (mm/day) for the average of the observations (OBSE) and the model ensembles, spatially averaged over the major river basins of southern Africa shown in Fig. 1. The shaded areas show the range of the OBSE and each model ensemble

3.2 Daily extreme indices

Here, we present the results of the performance of the ensembles in simulating extreme precipitation indices during the main rainy season (DJF) only, with results for other seasons (SON and MAM) available as supplementary materials (Figs. S1–S10).

3.2.1 Spatial distribution of biases

Figure 3 shows the spatial distribution of the climatological biases of CDD, CWD, and RR1 from CMIP5, CMIP6, CORDEX, and CORE MMEs. Similar maps for the MAM and SON seasons are shown in Figs. S1 and S2. The results show that all the ensembles tend to significantly underestimate the observed values of CDD over most of southern Africa (Fig. 3d–g). In particular, all ensembles largely underestimate CDD values over most of the region, with negative biases of more than 10 days over coastal Angola, the northeastern Orange basin, and the eastern Limpopo basin. However, CORDEX and CORE overestimate CDD values by up to 6 days over the eastern Zambezi basin, northern Mozambique, and in some areas over southwestern coastal South Africa and the southwestern Orange basin. CMIP5 overestimates CDD by 6 days over northern Mozambique and in some areas over the northeastern Zambezi basin, southwestern Orange basin, and southwestern coastal South Africa. The MME biases for CDD are lower over the Zambezi basin than over the other three basins. Similar to the DJF season, all the ensembles significantly underestimate CDD over all basins during MAM (Fig. S1d–g). In contrast, during SON, the ensembles exhibit larger areas of overestimation of CDD (Fig. S2d–g). For instance, during SON, the ensembles exhibit larger areas of statistically significant overestimation (underestimation) of CDD values over the Orange basin (northern Mozambique and southern Tanzania). Overall, for CDD, the ensembles exhibit smaller biases over the Zambezi basin than over the other three basins. All the ensembles tend to overestimate CWD (Fig. 3h–k) and RR1 (Fig. 3l–o) over southern Africa. More specifically, CMIP6 and CMIP5 (CORE) overestimate CWD values by more than 20 days over the northern part of southern Africa and most of the Zambezi basin (northern part and northwestern Zambezi basin). CORDEX overestimates CWD values by more than 20 days over small, localized areas in the northern part.
CORDEX and CORE underestimate CWD values by up to 4 days over northern Mozambique and the southeastern Zambezi basin. A comparison of the seasonal biases shows that the large biases in both GCMs and RCM MMEs during DJF are reduced during MAM and SON, particularly in CMIP5, CMIP6, and CORE over the northern Zambezi basin (Figs. 3, S1, and S2).

Spatial distribution of the 23-year (1983–2005) observed (OBSE) climatology of a CDD, b CWD, and c RR1 during December–January–February (DJF), and the climatological biases of the multimodel means (MME minus OBSE) for, from top to bottom, CDD (d–g), CWD (h–k), and RR1 (l–o). Areas with full color are those where the bias is statistically significant. The regions where the bias is non-significant and uncertain are highlighted by vertical and horizontal lines, respectively

Overall, CORDEX performs better than CMIP5, CMIP6, and CORE in simulating CWD over southern Africa. The greater extent of overestimation of CWD in CORE than in CORDEX could be associated with a greater moisture supply in CORE than in CORDEX. Pinto et al. (2016) associated the wet biases in CORDEX RCMs with poor representation of atmospheric circulation patterns such as the Angola low and hence increased moisture input from the Atlantic Ocean. In contrast to CDD, MME biases are larger over the Zambezi basin compared to the other three basins for CWD, especially in CMIP5, CMIP6, and CORE. The largest positive biases of RR1 are located in southeastern Zimbabwe, the southern Okavango basin, and the eastern Orange and Limpopo basins (Figs. 3, S1, and S2). Similar to previous studies (Abiodun et al. 2020; Karypidou et al. 2022; Luo et al. 2022), larger biases over the Drakensberg Mountains illustrate the influence of complex topography on precipitation. Furthermore, the lower biases in the RCM MMEs in this region demonstrate their ability to resolve fine-scale regional processes better than GCMs (e.g., complex topography; Mishra et al. 2014; Karypidou et al. 2022). CMIP6 shows a larger overestimation of RR1 over a larger area than CMIP5. For instance, CMIP6 exhibits an overestimation of RR1 by 24 days over large areas of southeastern Zimbabwe and the Okavango and Limpopo basins (eastern coastal areas of southern Africa) during DJF (MAM) (Figs. 3 and S1). Overall, RCM MMEs demonstrate better performance than GCM MMEs in simulating CWD and RR1, with slightly better performance in CORDEX. The better performance of CORDEX and CORE compared with CMIP5 and CMIP6 could result from their ability to represent topography better than GCMs and hence to better capture northerly moisture transport into southern Africa (Munday and Washington 2018; Karypidou et al. 2022).
However, the smaller number of ensemble members in CORE compared to CORDEX and the larger overestimation of RR1 and CWD in RegCM simulations may partly be responsible for the slight underperformance of CORE compared to CORDEX.

The spatial distribution of the climatological biases of SDII, R10mm, and R20mm is shown in Fig. 4. All ensembles underestimate the observed SDII values over southern Africa, except over a few areas where they slightly overestimate SDII. A common region with the largest underestimation of SDII values is shown in all MMEs. In particular, all MMEs exhibit a negative bias of more than 6 mm/day over southern coastal Mozambique and the southern and eastern Limpopo basin (Fig. 4d–g). Conversely, all MMEs show larger areas of slight overestimation over the southeastern Orange basin. Additionally, the CORDEX (CORE) MME shows areas of slight overestimation of SDII over southern Angola, northern coastal Namibia, and the southeastern Limpopo basin (southern Angola, the northeastern Okavango basin, and most of the Orange basin). A comparison of the three seasons shows that all MMEs exhibit larger biases during MAM than during DJF and SON, particularly over southern coastal Mozambique, the Limpopo basin, and the northern Orange basin (Figs. 4, S3, and S4). It is important to note that GCM and RCM MMEs show similarities in the magnitude of biases for SDII. CMIP5 and CMIP6 MMEs show statistically significant positive biases for R10mm and R20mm in most of southern Africa and small negative biases over southern Tanzania, northern Mozambique, and northern Angola. CORDEX and CORE MMEs tend to overestimate R10mm over the Okavango, Orange, and Limpopo basins and western coastal Angola but show a strong underestimation (up to 12 days) over northern Angola, northern Mozambique, southern Tanzania, and the eastern Zambezi basin (Fig. 4j, k).

Spatial distribution of the 23-year (1983–2005) observed (OBSE) climatology of a SDII, b R10mm, and c R20mm during December–January–February (DJF), and the climatological biases of the multimodel means (MME minus OBSE) for, from top to bottom, SDII (d–g), R10mm (h–k), and R20mm (l–o). Areas with full color are those where the bias is statistically significant. The regions where the bias is non-significant and uncertain are highlighted by vertical and horizontal lines, respectively

For R20mm, the CORDEX and CORE MMEs exhibit positive biases over most of southern Africa, with a few areas of negative biases, particularly over Mozambique. Comparing the biases of the DJF season to those of the MAM and SON seasons shows that all MMEs perform better in simulating R10mm and R20mm during MAM and SON (Figs. 4, S3, and S4). Furthermore, there are larger areas of statistically insignificant biases in all MMEs for R10mm and R20mm during the MAM and SON seasons compared to DJF. The MMEs show a common larger wet bias over Lesotho and southeast coastal South Africa. Diallo et al. (2015) associated the wet bias shown over Lesotho and southeast coastal South Africa with the overestimation of southerly wind flux and the effects of complex topography on convection triggering. More specifically, the area of overestimation over Lesotho (southeastern coastal South Africa) is slightly larger in CMIP6 and CORE than in CMIP5 and CORDEX. However, the magnitude of the negative bias for R10mm and R20mm over northern Mozambique is slightly higher in CMIP5 than in CMIP6. Despite the persistence of statistically significant biases in MME simulations for the six extreme precipitation indices, the MMEs reasonably reproduce the spatial distribution of extreme precipitation over southern Africa (figure not shown). This implies that the MMEs can capture key climate systems that influence precipitation over southern Africa.

3.2.2 Regional analysis

Figure 5 shows the comparison between the observed and simulated extreme precipitation indices averaged over the four major river basins of southern Africa shown in Fig. 1. The differences among the observations in mean CDD are larger during MAM than during DJF and SON, with the largest difference over the Limpopo and Orange basins (Figs. 5a, S5a, and S6a). The MMEs tend to underestimate the observed mean CDD over all basins during the three seasons, with a few cases of overestimation. The interquartile range of MMEs is smaller over the Zambezi basin, with ranges of 2.3, 2.0, 1.8, and 2.3 days for CMIP5, CMIP6, CORDEX, and CORE, respectively. Notably, all MMEs overestimate CDD relative to TAMSAT, while CMIP5, CMIP6, and CORE (CORDEX) underestimate (overestimate) mean CDD over the Orange basin during SON relative to ARC and CHIRPs (Fig. S6a). The CMIP5, CMIP6, CORDEX, and CORE medians overestimate the mean CWD and RR1 over all basins (Fig. 5b, c). In particular, for RR1 and CWD, the interquartile ranges of CMIP5 and CMIP6 are outside the maximum range of the observations over all basins, whereas CORDEX and CORE medians are generally higher than the largest mean of the observations (Fig. 5b, c).

December–January–February (DJF) climatological (1983–2005) means of a CDD, b CWD, c RR1, d R10mm, e R20mm, and f SDII spatially averaged over the four basins shown in Fig. 1. The results are shown for each observation (solid circles) and for the CMIP5, CMIP6, CORDEX, and CORE ensembles (box and whisker plots). The boxes indicate the interquartile (25th to 75th percentile) model range, the solid marks within the boxes show the multimodel median, and the whiskers indicate the total intermodel range

In contrast to CDD, for CWD, the interquartile range for MMEs is larger over Zambezi compared to other basins, particularly for CMIP5 (24.4 days) and CMIP6 (24.0 days). The differences between observations are larger for CDD (with a maximum difference of 4.6 days over the Limpopo basin) than for CWD (with a maximum difference of 2.7 days over the Zambezi basin). For R10mm, R20mm, and SDII, the biases of the MMEs differ depending on the reference observation and river basin (Fig. 5d, e, f). Previous studies have reported that the performance of climate models depends on the choice of reference observation and geographical location (Dosio et al. 2021a, b; Faye and Akinsanola 2022). The differences between the observations are larger than the intermodel spread for the mean SDII over the Limpopo basin compared to other basins, with means of 10.4, 14.1, and 18.3 mm/day for ARC, CHIRPS, and TAMSAT, respectively. This illustrates that the observations agree less with one another than the model simulations do (see Fig. 5f and, e.g., Dosio et al. 2021b). Interestingly, CORE shows a larger overestimation of R20mm than CMIP5 and CMIP6 over the Orange basin (all basins) during DJF (MAM and SON, except for Zambezi during MAM). Additionally, the intermodel spread of CORE is larger than those of CMIP5, CMIP6, and CORDEX during MAM for R20mm (Fig. S5e). Samuel et al. (2022) noted that RegCM (under the CORE experiment) has challenges in simulating precipitation indices over the Zambezi River basin, therefore largely contributing to the interquartile spread of the CORE. A comparison between CMIP5 and CMIP6 shows that CMIP5 performs slightly better for most indices, except SDII and CWD, over the Zambezi basin. However, the interquartile range of CMIP5 is generally larger than that of CMIP6. Overall, the intermodel spread is larger during MAM and SON than during DJF for most of the indices over all basins.
Generally, RCMs show better performance in simulating the magnitude of the observed extreme precipitation indices and have lower interquartile spreads than GCMs for most indices, with some exceptions, particularly for CORE. In particular, CORDEX shows better performance than CORE in simulating extreme precipitation over the four basins.

Figures 6 and 7 show Taylor diagrams of the spatial correlation coefficient (SCC), normalized standard deviation (NSD), and centered root-mean-square difference (CRMSD) of the simulated indices (for the individual models and the multimodel ensemble means) against CHIRPS for the four river basins. To assess the uncertainty among the observations, the results for ARC and TAMSAT are also shown. The performance of individual models and MMEs varies with basin and index (Figs. 6 and 7). For instance, individual models and MMEs show lower SCCs over Limpopo (< 0.4) and Zambezi (< 0.6) for CDD. However, agreement among observations is also weak over the two basins for CDD. The spread among the models is larger for CWD than for CDD, RR1, R10mm, R20mm, and SDII. Notably, the uncertainty of the observations is larger over the Orange and Zambezi basins. In particular, the NSDs for most of the models are > 1.5 for CWD (RR1) over all river basins (Orange River basin). The CORDEX MME shows better performance than CMIP5, CMIP6, and CORE in replicating CHIRPS NSDs for CWD over the Limpopo and Okavango basins, with NSD values of 1.0 and 1.66, respectively. The CORDEX MME also performs better than the CMIP5, CMIP6, and CORE MMEs in simulating most of the extreme precipitation indices. The SCCs (NSDs) for individual models and MMEs range from 0.6 to 0.95 (0.5 to 1.5) for R10mm and R20mm. Similar to CDD, most individual models and MMEs exhibit lower SCC (< 0.7) over the Limpopo and Zambezi basins compared to the Orange and Okavango basins for R10mm and R20mm. More specifically, the spread among models is larger over Okavango for R10mm. Most of the individual models underestimate CHIRPS NSDs for SDII, particularly over the Limpopo, Orange, and Zambezi basins. The model spread is smaller for CDD and SDII than for CWD, RR1, R10mm, and R20mm over the four river basins.
Overall, individual models and MMEs exhibit the worst performance in simulating extreme precipitation over the Limpopo and Zambezi basins, although the uncertainty among the observations is also larger over the two river basins for most of the indices. Taking the uncertainty among the observations into account, all ensembles generally reproduce the spatial patterns of extreme precipitation over the four river basins, with better performance in MMEs compared to individual models.
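For readers who wish to reproduce the Taylor diagram statistics discussed above, the following is a minimal sketch of how SCC, NSD, and CRMSD could be computed for one simulated field against a reference field such as CHIRPS. The function name `taylor_stats` is hypothetical, and the sketch assumes that both fields are already on a common grid and that grid cells are equally weighted (no area weighting), which may differ from the processing used in this study.

```python
import numpy as np

def taylor_stats(model, ref):
    """Return (SCC, NSD, normalized CRMSD) for two fields on the same grid.

    Assumption: grid cells are equally weighted; cells that are missing
    (NaN) in either field are dropped before the statistics are computed.
    """
    m = np.asarray(model, dtype=float).ravel()
    r = np.asarray(ref, dtype=float).ravel()
    ok = np.isfinite(m) & np.isfinite(r)
    m, r = m[ok], r[ok]
    # Anomalies relative to each field's own spatial mean ("centered")
    ma, ra = m - m.mean(), r - r.mean()
    sd_m, sd_r = ma.std(), ra.std()
    scc = float((ma * ra).mean() / (sd_m * sd_r))   # spatial correlation
    nsd = float(sd_m / sd_r)                        # normalized standard deviation
    crmsd = float(np.sqrt(((ma - ra) ** 2).mean()) / sd_r)  # normalized by reference SD
    return scc, nsd, crmsd
```

With this normalization, the three quantities satisfy CRMSD² = NSD² + 1 − 2·NSD·SCC, which is the geometric relation that allows them to be displayed on a single Taylor diagram (Taylor 2001).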

Taylor diagram illustrating the uncertainty of the observations and performance of multimodel ensembles (CMIP5, CMIP6, CORDEX, and CORE) in simulating December–January–February (DJF) climatological (1983–2005) CDD (first row), CWD (second row), and RR1 (third row) spatially averaged over Limpopo (first column). CHIRPS is used as a reference, while the black dots represent ARC and TAMSAT. Filled colored dots indicate multimodel ensembles, and crosses indicate ensemble members

Taylor diagram illustrating the uncertainty of the observations and performance of multimodel ensembles (CMIP5, CMIP6, CORDEX, and CORE) in simulating December–January–February (DJF) climatological (1983–2005) R10mm (first row), R20mm (second row), and SDII (third row) spatially averaged over Limpopo (first column). CHIRPS is used as a reference, while the black dots represent ARC and TAMSAT. Filled colored dots indicate multimodel ensembles, and crosses indicate ensemble members

Figure 8 shows the Taylor skill score (TSS) for the model ensembles relative to the mean of the observations (OBS) during DJF, averaged over the four basins. For CDD, the MMEs show the best (worst) skill in representing extreme precipitation over Okavango and Orange (Limpopo), with TSS greater than 0.8 (less than 0.2). For example, the TSS for CDD for CMIP5, CMIP6, CORDEX, and CORE ranges between 0.01 and 0.46, 0.03 and 0.28, and 0.05 and 0.39, respectively. The higher TSS over the Limpopo basin during MAM and SON (> 0.40 in all ensembles with the exception of CMIP6 during MAM) compared to DJF (< 0.2 in all ensembles) demonstrates the better performance of the ensembles for CDD during MAM and SON compared to DJF (Figs. 8, S7, and S8). For CWD in DJF, MMEs exhibit the highest and lowest skill over Limpopo and Zambezi, respectively. The range of TSS is higher for CWD over the Zambezi basin despite lower skill in the ensembles, with TSS ranging from 0.05 to 0.7 for CMIP5, 0.08 to 0.66 for CMIP6, 0.03 to 0.58 for CORDEX, and 0.02 to 0.42 for CORE. The TSS of the ensembles is generally greater than 0.5 for both R10mm and R20mm over all the basins. Over the Orange basin, the ensembles show TSS values less than 0.4 for SDII, with TSS values for individual models ranging from 0.10 to 0.72 for CMIP5, 0.06 to 0.72 for CMIP6, 0.12 to 0.62 for CORDEX, and 0.13 to 0.70 for CORE. Figures 8, S7, and S8 illustrate that MMEs can reasonably represent extreme precipitation indices over the four basins, especially in DJF and SON. However, the range of TSS among individual models is large for most extreme precipitation indices. Notably, the results show that CORDEX has better skill than the other ensembles in representing extreme precipitation during DJF, MAM, and SON.
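As an illustration of how a skill score of this kind combines pattern correlation and variance ratio, the sketch below implements the two forms proposed by Taylor (2001). It is not necessarily the exact formulation applied in this study: the function name `taylor_skill_score`, the default maximum attainable correlation `r0 = 1`, and the choice of exponent are assumptions made for the example.

```python
def taylor_skill_score(scc, nsd, r0=1.0, power=1):
    """Taylor (2001) skill score from correlation and normalized SD.

    scc   : spatial correlation coefficient between model and reference
    nsd   : model standard deviation divided by reference standard deviation
    r0    : maximum attainable correlation (assumed to be 1 here)
    power : 1 or 4; the larger exponent penalizes low correlation more strongly
    """
    return 4.0 * (1.0 + scc) ** power / ((nsd + 1.0 / nsd) ** 2 * (1.0 + r0) ** power)
```

The score equals 1 only when the correlation reaches `r0` and the simulated variance matches the reference (NSD = 1). For example, a perfectly correlated field with twice the reference standard deviation gives `taylor_skill_score(1.0, 2.0)` = 0.64, which shows how an overestimated spatial variability alone can lower the score.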

Taylor skill scores (TSS) showing the performance of multimodel ensembles in simulating December–January–February (DJF) climatological (1983–2005) a CDD, b CWD, c RR1, d R10mm, e R20mm, and f SDII, spatially averaged over the four major river basins of southern Africa. The filled bars represent the TSS for the multimodel ensemble means. Error bars indicate the intermodel range for each ensemble

Table 2 shows the Kling–Gupta efficiency (KGE) values of extreme precipitation for the ensembles over each basin. Generally, the ensembles show poor skill in simulating CWD, with all ensembles showing negative KGE values over the basins except for CORDEX over Limpopo and Okavango. In agreement with the Taylor diagram and the TSS discussed previously, the ensembles show poor skill in simulating CDD over the Limpopo basin. However, the ensembles show good skill in simulating CDD over Okavango, Orange, and Zambezi. The skill of the GCM ensembles (CORE) is poor in simulating RR1 over the Limpopo and Orange basins (Limpopo basin). On the other hand, CORDEX shows good skill in simulating RR1 over these basins. Generally, the KGE values for R10mm, R20mm, and SDII for the ensembles are positive over all basins, with few exceptions (Table 2). Overall, the ensembles show good skill in simulating CDD, RR1, SDII, R10mm, and R20mm.
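To make the interpretation of negative KGE values more concrete, the following sketch computes the KGE in the original form of Gupta et al. (2009), which combines the correlation, the ratio of standard deviations, and the ratio of means. The function name `kling_gupta_efficiency` and the handling of missing values are assumptions for illustration; the study may have used a different variant or preprocessing.

```python
import numpy as np

def kling_gupta_efficiency(sim, obs):
    """KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2).

    r     : linear correlation between simulation and observation
    alpha : ratio of standard deviations (sim / obs)
    beta  : ratio of means (sim / obs)
    Assumption: pairs with a missing value in either input are dropped.
    """
    s = np.asarray(sim, dtype=float).ravel()
    o = np.asarray(obs, dtype=float).ravel()
    ok = np.isfinite(s) & np.isfinite(o)
    s, o = s[ok], o[ok]
    r = np.corrcoef(s, o)[0, 1]
    alpha = s.std() / o.std()
    beta = s.mean() / o.mean()
    return float(1.0 - np.sqrt((r - 1.0) ** 2 + (alpha - 1.0) ** 2 + (beta - 1.0) ** 2))
```

A simulation that is perfectly correlated with the observations but has twice their mean and twice their variability yields KGE = 1 − √2 ≈ −0.41. A negative KGE can therefore arise purely from a large overestimation of magnitude and variability, even when the pattern is well reproduced.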

Figure 9 shows the box and whisker plots of the interannual variability of extreme precipitation indices calculated using the standard deviation (SD) of the time series (1983–2005) for the MMEs. The circles show the interannual variability of each observation. The results for CDD show the largest differences among the observations (4.9, 2.3, and 4.1 days for ARC, CHIRPS, and TAMSAT, respectively) over the Orange basin and the smallest (1.3, 1.4, and 1.7 days for ARC, CHIRPS, and TAMSAT, respectively) over the Zambezi basin (Fig. 9a). The sign of MME biases for the interannual variability in CDD differs depending on the observation (Figs. 9a, S9a, S10a). For instance, all MMEs overestimate (underestimate) the interannual variability relative to TAMSAT (ARC and CHIRPS) over the Orange basin. Similar to the observations, the interquartile ranges of CDD for CMIP5 (0.7 days), CMIP6 (0.5 days), CORDEX (0.4 days), and CORE (0.8 days) are smaller over the Zambezi basin and larger (1.8, 1.5, 1.2, and 1.0 days, respectively) over the Orange basin. The larger intermodel spread shown for the interannual variability in CDD during MAM and SON is similar to that for the mean CDD (Figs. 9a, S9a, and S10a). It is interesting to note that CMIP5 and CMIP6 exhibit smaller biases than CORDEX and CORE for CDD over the Limpopo, Okavango, and Zambezi basins (Fig. 9a). For CWD, all MMEs tend to overestimate the interannual variability relative to all observations, except over the Zambezi basin, where CORE underestimates interannual variability relative to ARC. Similar to CDD, the biases of RR1, R10mm, and R20mm vary depending on the observation (Fig. 9c, d, e, f). MMEs overestimate the observed interannual variability for CWD, with larger positive biases in GCM ensembles compared to RCM ensembles over all basins (Fig. 9b–d). Generally, the intermodel spread is larger in GCM ensembles than in RCM ensembles for most of the indices over the basins.
A comparison of CMIP5 and CMIP6 shows that CMIP5 exhibits a larger intermodel spread than CMIP6 for most indices over all basins.
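The quantities summarized in the box and whisker plots can be sketched as follows: the interannual variability of each ensemble member is taken as the SD of its seasonal time series, and the ensemble spread is then described by the median, interquartile range, and total range across members. The function names, the use of the sample SD (`ddof=1`), and the linear-interpolation percentiles are assumptions for this example rather than a description of the exact procedure used here.

```python
import numpy as np

def interannual_sd(seasonal_series, ddof=1):
    """SD over the last axis (years) for each ensemble member.

    seasonal_series: array of shape (n_members, n_years) holding one
    basin-averaged seasonal index value per member and year.
    Assumption: sample SD (ddof=1); a population SD would use ddof=0.
    """
    return np.std(np.asarray(seasonal_series, dtype=float), axis=-1, ddof=ddof)

def ensemble_spread(values):
    """Median, interquartile range, and total range across ensemble members."""
    q25, q50, q75 = np.percentile(values, [25, 50, 75])
    return {
        "median": float(q50),
        "iqr": float(q75 - q25),
        "range": float(np.max(values) - np.min(values)),
    }
```

For a 23-year period such as 1983–2005, each row of `seasonal_series` would contain 23 seasonal values, and the resulting per-member SDs would be passed to `ensemble_spread` separately for each ensemble, basin, and index.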

DJF interannual variability (1983–2005) of a CDD, b CWD, c RR1, d R10mm, e R20mm, and f SDII for the four basins shown in Fig. 1. The results are shown for the observations (solid circles) and CMIP5, CMIP6, CORDEX, and CORE ensembles (box and whisker plots)

4 Summary and concluding remarks

The evaluation of historical climate simulations is fundamental, especially before they are used to assess the future impacts of extreme precipitation on key sectors such as agriculture and water resources. This is particularly important over southern Africa because of the difficulties of climate models in representing precipitation over the region (Desbiolles et al. 2020; Samuel et al. 2022).

This study investigates the performance of large ensembles of global (CMIP5 and CMIP6) and regional (CORDEX and CORE) climate models in simulating extreme precipitation over the four major river basins (Limpopo, Okavango, Orange, and Zambezi) of southern Africa. The performance of climate models in simulating extreme precipitation was evaluated for 23 years (1983–2005) during DJF, MAM, and SON using six extreme precipitation indices (CDD, CWD, RR1, R10mm, R20mm, and SDII) defined by ETCCDI. Three satellite-based observations (ARC, CHIRPS, and TAMSAT) were used as references. The assessment focused mainly on the performance of MMEs compared to the mean of the three observations (OBS) during the peak of the rainy season (DJF). However, we also considered the spread of the observations and ensembles to assess their respective uncertainties. Several statistical metrics were used to quantify the performance of MMEs over the four basins.
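For reference, the six indices can be derived from a daily precipitation series along the following lines. This is a minimal sketch using the standard ETCCDI convention of a 1 mm wet-day threshold; the function names are hypothetical, the series is assumed to cover a single season at one grid cell with no missing days, and spells are not allowed to extend beyond the series, which may differ from the implementation used in this study.

```python
import numpy as np

def longest_run(mask):
    """Length of the longest run of consecutive True values."""
    best = run = 0
    for flag in mask:
        run = run + 1 if flag else 0
        best = max(best, run)
    return best

def precip_indices(daily_mm, wet_threshold=1.0):
    """Six precipitation indices for one series of daily totals (mm).

    Assumption: a wet day has precipitation >= wet_threshold (1 mm).
    """
    pr = np.asarray(daily_mm, dtype=float)
    wet = pr >= wet_threshold
    n_wet = int(wet.sum())
    return {
        "CDD": longest_run(~wet),               # longest dry spell (days)
        "CWD": longest_run(wet),                # longest wet spell (days)
        "RR1": n_wet,                           # number of rainy days
        "R10mm": int((pr >= 10.0).sum()),       # heavy precipitation days
        "R20mm": int((pr >= 20.0).sum()),       # very heavy precipitation days
        # Mean intensity on wet days (mm/day); undefined without wet days
        "SDII": float(pr[wet].sum() / n_wet) if n_wet else float("nan"),
    }
```

Because SDII divides the wet-day total by RR1, a model that produces too many light-rain days tends to overestimate RR1 and CWD while underestimating SDII and CDD, which is the combination of biases reported for the ensembles.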

The results show that all MMEs can reproduce the precipitation peak during DJF over all basins, albeit with wet biases in all ensembles. The spread of the ensembles is generally larger than that of the observations, with a larger spread in the GCM than in the RCM ensembles. CORDEX is closer to the observations compared to the other three ensembles. The spatial distributions of the biases of extreme precipitation are consistent across the ensembles. In particular, all the ensembles overestimate (underestimate) CWD, RR1, R10mm, and R20mm (CDD and SDII) over all basins, except for CORDEX and CORE over the eastern region of the Zambezi basin for R10mm. Lower biases in CORDEX and CORE compared to CMIP5 and CMIP6 show the added value of dynamic downscaling. In particular, the biases of CORDEX simulations are lower than those of CORE, despite CORDEX having a lower spatial resolution than CORE. This may be partly because the number of CORE simulations is very limited (only 3 RCMs downscaling 3 GCMs), but a detailed analysis of the CORE performance over southern Africa is still missing.

The intermodel spread is larger than the observational spread for most of the indices over all basins. In particular, the spread of the CMIP5 and CMIP6 ensembles is larger than those of CORDEX and CORE.

The biases of interannual variability of extreme precipitation indices are generally consistent with those of the mean of extreme precipitation over the four basins, with regional models usually performing better than the GCMs, apart from CDD.

We used several statistical metrics to quantify the performance of the ensembles in simulating extreme precipitation spatially averaged over the four basins. The lowest SCC and TSS are observed over the Limpopo basin for CDD compared with the other three basins. However, MMEs largely overestimate the NSD relative to CHIRPS, with NSD values greater than three for most ensemble members over all basins. Generally, the ensembles show good skill in simulating extreme precipitation over the basins, except for CDD and CWD over the Limpopo basin and all basins, respectively.

In summary, RCM ensembles perform better than GCM ensembles for most extreme precipitation indices over all basins, which illustrates the added value of dynamic downscaling in simulating extreme precipitation. Generally, the intermodel spread is very large for all indices over all basins.

Without any claim to completeness, we acknowledge several caveats about this study. First, the results of this study provide a first-order assessment of multimodel ensemble performance in reproducing the observed extreme precipitation. However, persistent biases in the ensembles indicate the need for additional study on model evaluation over basins using a process-based approach. Second, multimodel ensembles, which do not represent the performance of individual models, are the primary focus of this study. To account for individual model performance, we computed the intermodel spread for each ensemble. Nonetheless, a thorough assessment of individual model performance may be helpful in better understanding ensemble biases. Third, due to the lower resolution of GCM simulations, regionally averaged assessments are restricted to basin averaging, which disregards spatial heterogeneity of precipitation within the basin borders. Even CORDEX simulations (at ~ 50 km resolution) may still be considered too coarse to be used when subdividing basins into subregions with homogeneous precipitation. As the main aim of our study is to compare different classes of models (with different resolutions), we believe that our choice is a valid compromise and, while spatial heterogeneity of precipitation within basins is not considered in the basin-averaged results, the information on model performance is still informative, as shown in previous studies (e.g., Abiodun et al. 2019; Zhu et al. 2021a, b). Finally, annual cycles of precipitation are evaluated using climatological monthly means, which may introduce some uncertainty into the results. Despite its shortcomings, this approach is frequently used to evaluate the ability of climate models to simulate annual precipitation cycles (Ayugi et al. 2021; Hamadalnel et al. 2022; Dike et al. 2022).

Despite the aforementioned caveats, we believe the results of this study are robust and provide important information to the scientific community and policymakers on the capabilities and limitations of CMIP5, CMIP6, CORDEX, and CORE in representing observed extreme precipitation over southern Africa’s major river basins. In particular, this study shows that wet biases persist in all model ensembles across all of the basins for most indices (except SDII), and that these biases have not always been reduced (and have sometimes been increased) by model development (CMIP6 vs. CMIP5) or increased resolution (CORE vs. CORDEX).

Data availability

The datasets used in the study are freely available.

References

Abiodun BJ, Adegoke J, Abatan AA et al (2017) Potential impacts of climate change on extreme precipitation over four African coastal cities. Clim Change 143:399–413. https://doi.org/10.1007/s10584-017-2001-5

Abiodun BJ, Makhanya N, Petja B, Abatan AA, Oguntunde PG (2019) Future projection of droughts over major river basins in Southern Africa at specific global warming levels. Theoret Appl Climatol 137:1785–1799. https://doi.org/10.1007/s00704-018-2693-0

Abiodun BJ, Mogebisa TO, Petja B, Abatan AA, Roland TR (2020) Potential impacts of specific global warming levels on extreme rainfall events over southern Africa in CORDEX and NEX-GDDP ensembles. Int J Climatol 40(6):3118–3141. https://doi.org/10.1002/joc.6386

Agyekum J, Annor T, Quansah E, Lamptey B, Okafor G (2022) Extreme precipitation indices over the Volta Basin: CMIP6 model evaluation. Sci Afr 12:e01181. https://doi.org/10.1016/j.sciaf.2022.e01181

Akinsanola AA, Kooperman GJ, Reed KA, Pendergrass AG, Hannah WM (2020) Projected changes in seasonal precipitation extremes over the US in CMIP6 simulations. Environ Res Lett. https://doi.org/10.1088/1748-9326/abb397

Akinsanola AA, Ongoma V, Kooperman GJ (2021) Evaluation of CMIP6 models in simulating the statistics of extreme precipitation over eastern Africa. Atmos Res 254:105509. https://doi.org/10.1016/j.atmosres.2021.105509

Ayugi B, Zhihong J, Zhu H, Ngoma H, Babaousmail H, Rizwan K, Dike V (2021) Comparison of CMIP6 and CMIP5 models in simulating mean and extreme precipitation over East Africa. Int J Climatol 41(15):6474–6496. https://doi.org/10.1002/joc.7207

Builes-Jaramillo A, Pántano V (2021) Comparison of spatial and temporal performance of two regional climate models in the Amazon and La Plata River basins. Atmos Res 250:105413. https://doi.org/10.1016/j.atmosres.2020.105413

Chisanga C, Mubanga K, Sichigabula H, Banda K, Muchanga M, Ncube L et al (2022) Modelling climatic trends for the Zambezi and Orange River Basins: implications on water security. J Water Clim Change 13:1275–1296. https://doi.org/10.2166/wcc.2022.308

Desbiolles F, Howard E, Blamey RC, Barimalala R, Hart NCG, Reason CJC (2020) Role of ocean mesoscale structures in shaping the Angola low pressure system and the southern Africa rainfall. Clim Dyn 54:3685–3704. https://doi.org/10.1007/s00382-020-05199-1

Diallo I, Giorgi F, Sukumaran S, Stordal F, Giuliani G (2015) Evaluation of RegCM4 driven by CAM4 over Southern Africa: mean climatology, interannual variability and daily extremes of wet season temperature and precipitation. Theor Appl Climatol. https://doi.org/10.1007/s00704-014-1260-6

Diatta S, Mbaye ML, Sambou S (2020) Evaluating hydro-climate extreme indices from a regional climate model: a case study for the present climate in the Casamance river basin, southern Senegal. Sci Afr 10:e00584. https://doi.org/10.1016/j.sciaf.2020.e00584

Dike VN, Lin Z, Fei K, Langendijk GS, Nath D (2022) Evaluation and multimodel projection of seasonal precipitation extremes over central Asia based on CMIP6 simulations. Int J Climatol 42:7228–7251. https://doi.org/10.1002/joc.7641

Doblas-Reyes FJ, Sörensson AA, Almazroui M, Dosio A, Gutowski WJ, Haarsma R, Hamdi R, Hewitson B, Kwon W-T, Lamptey BL, Maraun D, Stephenson TS, Takayabu I, Terray L, Turner A, Zuo Z (2021) Linking global to regional climate change. In Climate change 2021: the physical science basis. Contribution of working group I to the sixth assessment report of the intergovernmental panel on climate change. In: Masson-Delmotte V, Zhai P, Pirani A, Connors SL, Péan C, Berger S, Caud N, Chen Y, Goldfarb L, Gomis MI, Huang M, Leitzell K, Lonnoy E, Matthews JBR, Maycock TK, Waterfield T, Yelekçi O, Yu R, Zhou B (eds) Cambridge University Press, Cambridge and New York, NY, p 1363–1512. https://doi.org/10.1017/9781009157896.012

Dosio A, Panitz H-J, Schubert-Frisius M, Lüthi D (2015) Dynamical downscaling of CMIP5 global circulation models over CORDEX Africa with COSMO-CLM: evaluation over the present climate and analysis of the added value. Clim Dyn 44(9–10):2637–2661. https://doi.org/10.1007/s00382-014-2262-x

Dosio A, Jones RG, Jack C, Lennard C, Nikulin G, Hewitson B (2019) What can we know about future precipitation in Africa? Robustness, significance and added value of projections from a large ensemble of regional climate models. Clim Dyn 53(9–10):5833–5858. https://doi.org/10.1007/s00382-019-04900-3

Dosio A, Jury MW, Almazroui M, Ashfaq M, Diallo I, Engelbrecht FA et al (2021a) Projected future daily characteristics of African precipitation based on global (CMIP5, CMIP6) and regional (CORDEX, CORDEX-CORE) climate models. Clim Dyn. https://doi.org/10.1007/s00382-021-05859-w

Dosio A, Pinto I, Lennard C, Sylla MB, Jack C, Nikulin G (2021b) What can we know about recent past precipitation over Africa? Daily characteristics of African precipitation from a large ensemble of observational products for model evaluation. Earth Space Sci 8:e2020EA001466. https://doi.org/10.1029/2020EA001466

Dosio A, Lennard C, Spinoni J (2022) Projections of indices of daily temperature and precipitation based on bias-adjusted CORDEX-Africa regional climate model simulations. Clim Change 170:13. https://doi.org/10.1007/s10584-022-03307-0

Eyring V, Bony S, Meehl GA, Senior CA, Stevens B, Stouffer RJ, Taylor KE (2016) Overview of the coupled model intercomparison project phase 6 (CMIP6) experimental design and organization. Geosci Model Dev 9(5):1937–1958. https://doi.org/10.5194/gmd-9-1937-2016

Faye A, Akinsanola AA (2022) Evaluation of extreme precipitation indices over West Africa in CMIP6 models. Clim Dyn 58:925–939. https://doi.org/10.1007/s00382-021-05942-2

Funk C, Verdin A, Michaelsen J, Peterson P, Pedreros D, Husak G (2015) A global satellite-assisted precipitation climatology. Earth Syst Sci Data. https://doi.org/10.5194/essd-7-275-2015

Gibba P, Sylla MB, Okogbue EC, Gaye AT, Nikiema M, Kebe I (2019) State-of-the-art climate modeling of extreme precipitation over Africa: analysis of CORDEX added-value over CMIP5. Theoret Appl Climatol 137(1–2):1041–1057. https://doi.org/10.1007/s00704-018-2650-y

Giorgi F, Gutowski WJ (2015) Regional dynamical downscaling and the CORDEX initiative. Annu Rev Environ Resour 40(1):467–490. https://doi.org/10.1146/annurev-environ-102014-021217

Giorgi F, Coppola E, Teichmann C et al (2021) Editorial for the CORDEX-CORE Experiment I Special Issue. Clim Dyn 57:1265–1268

Gupta HV, Kling H, Yilmaz KK, Martinez GF (2009) Decomposition of the mean squared error and NSE performance criteria: implications for improving hydrological modeling. J Hydrol 377:80–91

Gutiérrez JM, Jones RG, Narisma GT, Alves LM, Amjad M, Gorodetskaya IV, Grose M, Klutse NAB, Krakovska S, Li J, Martínez-Castro D, Mearns LO, Mernild SH, Ngo-Duc T, van den Hurk B, Yoon J-H (2021) Atlas. In Climate change 2021: the physical science basis. Contribution of working group I to the sixth assessment report of the intergovernmental panel on climate change. In: Masson-Delmotte V, Zhai P, Pirani A, Connors SL, Péan C, Berger S, Caud N, Chen Y, Goldfarb L, Gomis MI, Huang M, Leitzell K, Lonnoy E, Matthews JBR, Maycock TK, Waterfield T, Yelekçi O, Yu R, Zhou B (eds) Cambridge University Press, Cambridge, United Kingdom and New York, NY, p 1927–2058. https://doi.org/10.1017/9781009157896.021

Hamadalnel M, Zhu Z, Lu R, Almazroui M, Shahid S (2022) Evaluating the aptitude of global climate models from CMIP5 and CMIP6 in capturing the historical observations of monsoon rainfall over Sudan from 1946 to 2005. Int J Climatol 42(5):2717–2738. https://doi.org/10.1002/joc.7387

Hoegh-Guldberg O, Jacob D, Taylor M, Bindi M, Brown S, Camilloni I, Diedhiou A, Djalante R, Ebi KL, Engelbrecht F, Guiot J, Hijioka Y, Mehrotra S, Payne A, Seneviratne SI, Thomas A, Warren RF, Zhou G, Tschakert P (2018) Impacts of 1.5°C global warming on natural and human systems. In: Global warming of 1.5°C: an IPCC special report on the impacts of global warming of 1.5°C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change, sustainable development, and efforts to eradicate poverty. IPCC

Hulsman P, Savenije HHG, Hrachowitz M (2021) Satellite-based drought analysis in the Zambezi River basin: was the 2019 drought the most extreme in several decades as locally perceived? J Hydrol Reg Stud 34:100789. https://doi.org/10.1016/j.ejrh.2021.100789

Ilori O, Balogun I (2021) Evaluating the performance of new CORDEX-Africa regional climate models in simulating West African rainfall. Model Earth Syst Environ 8(1):665–688. https://doi.org/10.1007/s40808-021-01084-w

Jain CK, Singh S (2020) Impact of climate change on the hydrological dynamics of River Ganga, India. J Water Clim Chang 11:274–290. https://doi.org/10.2166/wcc.2018.029

Karypidou MC, Katragkou E, Sobolowski SP (2022) Precipitation over southern Africa: is there consensus among global climate models (GCMs), regional climate models (RCMs) and observational data? Geosci Model Dev 15(8):3387–3404

Klutse NAB, Quagraine KA, Nkrumah F, Quagraine KT, Berkoh Oforiwaa R, Dzrobi JF, Sylla MB (2021) The climatic analysis of summer monsoon extreme precipitation events over West Africa in CMIP6 simulations. Earth Syst Environ 5(1):25–41

Lim Kam Sian KTC, Hagan DFT, Ayugi BO, Nooni IK, Ullah W, Babaousmail H, Ongoma V (2022) Projections of precipitation extremes based on bias-corrected Coupled Model Intercomparison Project phase 6 models ensemble over southern Africa. International Journal of Climatology 42(16):8269–8289. https://doi.org/10.1002/joc.7707

Luo N, Guo Y, Chou J, Gao Z (2022) Added value of CMIP6 models over CMIP5 models in simulating the climatological precipitation extremes in China. Int J Climatol 42(2):1148–1164. https://doi.org/10.1002/joc.7294

Lu LC, Chiu SY, Chiu YH, Chang TH (2022) Sustainability efficiency of climate change and global disasters based on greenhouse gas emissions from the parallel production sectors–a modified dynamic parallel three-stage network DEA model. J Environ Manag 317:115401. https://doi.org/10.1016/j.jenvman.2022.115401

Maidment RI, Grimes DIF, Black E, Tarnavsky E, Young M, Greatrex H, Allan RP, Stein T, Nkonde E, Senkunda A, Alcántara EMU (2017) A new, long-term daily satellite-based rainfall dataset for operational monitoring in Africa. Sci Data 4:170063. https://doi.org/10.1038/sdata.2017.63

Mishra V, Kumar D, Ganguly AR, Sanjay J, Mujumdar N, Krishnan R, Shah RD (2014) Reliability of regional and global climate models to simulate precipitation extremes over India. J Geophys Res Atmos 119:9301–9323. https://doi.org/10.1002/2014JD021636

Mitchell SA (2013) The status of wetlands, threats and the predicted effect of global climate change: the situation in sub-Saharan Africa. Aquat Sci 75:95–112

Munday C, Washington R (2018) Systematic climate model rainfall biases over Southern Africa: links to moisture circulation and topography. J Clim 31:7533–7548. https://doi.org/10.1175/JCLI-D-18-0008.1

Novella NS, Thiaw WM (2013) African rainfall climatology version 2 for famine early warning systems. J Appl Meteorol Climatol 52:588–606. https://doi.org/10.1175/JAMC-D-11-0238.1

Ogega OM, Koske J, Kung’u JB et al (2020) Heavy precipitation events over East Africa in a changing climate: results from CORDEX RCMs. Clim Dyn 55:993–1009. https://doi.org/10.1007/s00382-020-05309-z

Olusegun CF, Awe O, Ijila I, Ajanaku O, Ogunjo S (2022) Evaluation of dry and wet spell events over West Africa using CORDEX-CORE regional climate models. Model Earth Syst Environ. https://doi.org/10.1007/s40808-022-01423-5

Panitz HJ, Dosio A, Büchner M, Lüthi D, Keuler K (2014) COSMO-CLM (CCLM) climate simulations over CORDEX-Africa domain: analysis of the ERA-Interim driven simulations at 0.44 and 0.22 resolution. Clim Dynam. https://doi.org/10.1007/s00382-013-1834-5

Pinto I, Lennard C, Tadross M, Hewitson B, Dosio A, Nikulin G, Panitz HJ, Shongwe ME (2016) Evaluation and projections of extreme precipitation over southern Africa from two CORDEX models. Climatic Change 135(3–4):655–668. https://doi.org/10.1007/s10584-015-1573-1

Rajendran K, Surendran S, Varghese SJ, Sathyanath (2022) Simulation of Indian summer monsoon rainfall, interannual variability and teleconnections: evaluation of CMIP6 models. Clim Dyn 58:2693–2723. https://doi.org/10.1007/s00382-021-06027-w

SADC (2016) Regional humanitarian appeal 2016. Southern African Development Community. https://reliefweb.int/report/zimbabwe/sadc-regional-humanitarian-appeal-june-2016. Accessed 10 Jul 2022

SADC-WD/ZRA (2008) Integrated water resources management strategy and implementation plan for the Zambezi River Basin, ZAMCOM, Lusaka, Zambia. http://www.zambezicommission.org/sites/default/files/clusters_pdfs/Zambezi%20River_Basin_IWRM_Strategy_ZAMSTRAT.pdf. Accessed 10 Jul 2022

Salaudeen A, Ismail A, Adeogun BK, Ajibike MA, Shahid S (2021) Assessing the skills of inter-sectoral impact model intercomparison project climate models for precipitation simulation in the Gongola Basin of Nigeria. Sci Afr 13:e00921. https://doi.org/10.1016/j.sciaf.2021.e00921

Samuel S, Wiston M, Mphale K, Faka DN (2022) Changes in extreme precipitation events in the Zambezi River basins based on CORDEX-CORE models: Part I—Evaluation of historical simulation. Int J Climatol 42(13):6807–6828. https://doi.org/10.1002/joc.7612

Seneviratne SI, Zhang X, Adnan M, Badi W, Dereczynski C, Luca AD, Ghosh S, Iskandar I, Kossin J, Lewis S, Otto F, Pinto I, Satoh M, Vicente-Serrano SM, Wehner M, Zhou B (2021) Weather and climate extreme events in a changing climate. In: Masson-Delmotte V, Zhai P, Pirani A, Connors SL, Péan C, Berger S, Caud N, Chen Y, Goldfarb L, Gomis MI, Huang M, Leitzell K, Lonnoy E, Matthews JBR, Maycock TK, Waterfield T, Yelekçi O, Yu R, Zhou B (eds) Climate Change 2021: The physical science basis. contribution of working group I to the sixth assessment report of the intergovernmental panel on climate change. Cambridge University Press

Sian KTCLK, Wang J, Ayugi BO, Nooni IK, Ongoma V (2021) Multi-decadal variability and future changes in precipitation over southern Africa. Atmosphere 12(6):742. https://doi.org/10.3390/atmos12060742

Stephenson J, Newman K, Mayhew S (2010) Population dynamics and climate change: what are the links? J Public Health 32(2):150–156. https://doi.org/10.1093/pubmed/fdq038

Sylla MB, Nikiema PM, Gibba P, Kebe I, Klutse NAB (2016) Climate change over west Africa: recent trends and future projections. Adaptation to climate change and variability in rural west Africa 25–40. https://doi.org/10.1007/978-3-319-31499-0_3

Tamoffo AT, Dosio A, Vondou DA, Sonkoué D (2020) Process-based analysis of the added value of dynamical downscaling over Central Africa. Geophys Res Lett. https://doi.org/10.1029/2020GL089702

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res Atmos 106:7183–7192

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93(4):485–498. https://doi.org/10.1175/BAMS-D-11-00094.1

Wan Y, Chen J, Xie P, Xu C-Y, Li D (2021) Evaluation of climate model simulations in representing the precipitation non-stationarity by considering observational uncertainties. Int J Climatol 41:1952–1969. https://doi.org/10.1002/joc.6940

Wang B, Zheng LD, Liu L, Ji F, Clark A, Yu Q (2018) Using multi-model ensembles of CMIP5 global climate models to reproduce observed monthly rainfall and temperature with machine learning methods in Australia. Int J Climatol 38:4891–4902

Weigel AP, Knutti R, Liniger MA, Appenzeller C (2010) Risks of model weighting in multimodel climate projections. J Clim 23:4175–4191. https://doi.org/10.1175/2010JCLI3594.1

Wu M, Nikulin G, Kjellström E, Belušić D, Jones C, Lindstedt D (2020) The impact of regional climate model formulation and resolution on simulated precipitation in Africa. Earth Syst Dyn 11(2):377–394. https://doi.org/10.5194/esd-11-377-2020

Yaduvanshi A, Bendapudi R, Nkemelang T, New M (2021) Temperature and rainfall extremes change under current and future global warming levels across Indian climate zones. Weather Clim Extremes 31:100291. https://doi.org/10.1016/j.wace.2020.100291

Yao J, Chen Y, Chen J, Zhao Y, Tuoliewubieke D, Li J, Yang L, Mao W (2021) Intensification of extreme precipitation in arid central Asia. J Hydrol 598:125760. https://doi.org/10.1016/j.jhydrol.2020.125760

Zhang X, Alexander L, Hegerl GC, Jones P, Tank AK, Peterson TC, Trewin B, Zwiers FW (2011) Indices for monitoring changes in extremes based on daily temperature and precipitation data. Wiley Interdiscip Rev Clim Change 2:851–870. https://doi.org/10.1002/wcc.147

Zhu X, Ji Z, Wen X, Lee SY, Wei Z, Zheng Z, Dong W (2021a) Historical and projected climate change over three major river basins in China from Fifth and Sixth Coupled Model Intercomparison Project models. Int J Climatol 41:6455–6473. https://doi.org/10.1002/joc.7206

Zhu X, Lee SY, Wen X, Ji Z, Lin L, Wei Z, Zheng Z, Xu D, Dong W (2021b) Extreme climate changes over three major river basins in China as seen in CMIP5 and CMIP6. Clim Dyn 57:1187–1205. https://doi.org/10.1007/s00382-021-05767-z

Acknowledgements

The authors would like to thank the World Climate Research Programme’s Working Groups on Regional Climate and Coupled Modeling, the coordinating bodies of CORDEX and CMIP and all the climate modeling groups for making climate model simulations freely available through the Earth System Grid Federation (ESGF). The authors also express their gratitude to ARC, CHIRPS, and TAMSAT for providing the observational datasets through their respective websites. The first author would like to thank Dr. Bruhani Nyenzi from Climate Consult (T) LTD, Tanzania for his academic and career support.

Funding

Open access funding provided by University of Botswana.

Author information

Contributions

Sydney Samuel designed and carried out the experiment. Alessandro Dosio commented on the manuscript. All authors read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors consent to publish the research findings.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Samuel, S., Dosio, A., Mphale, K. et al. Comparison of multimodel ensembles of global and regional climate models projections for extreme precipitation over four major river basins in southern Africa— assessment of the historical simulations. Climatic Change 176, 57 (2023). https://doi.org/10.1007/s10584-023-03530-3

DOI: https://doi.org/10.1007/s10584-023-03530-3