The rise of obfuscated Android malware and impacts on detection methods

- Academic Editor: Muhammad Aleem

- Subject Areas: Data Mining and Machine Learning, Mobile and Ubiquitous Computing, Security and Privacy, Operating Systems

- Keywords: Android malware, Android security, Evasion techniques, Machine learning, Obfuscation techniques

- Copyright: © 2022 Elsersy et al.

- Licence: This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article: 2022. The rise of obfuscated Android malware and impacts on detection methods. PeerJ Computer Science 8:e907 https://doi.org/10.7717/peerj-cs.907

Abstract

Application markets face exponential growth in Android malware, with thousands of new malicious applications emerging every day. Android malware authors reverse-engineer benign applications and repackage them with their malicious code. Consequently, Android application developers tend to use state-of-the-art obfuscation techniques to mitigate the risk of application plagiarism. Malware authors, in turn, adopt obfuscation and transformation techniques to defeat anti-malware detection; this paper refers to these as evasions. Malware authors also use obfuscation techniques to generate new malware variants from the same malicious code. Concerns about reverse engineering likewise motivate researchers to secure the source code of benign Android applications using evasion techniques. This study reviews state-of-the-art evasion tools and techniques. It criticizes the existing research gap in the detection capabilities of the latest Android malware detection frameworks and challenges their classification performance against various evasion techniques. The study identifies research gaps in evaluating the robustness of current Android malware detection frameworks against state-of-the-art evasion techniques, and concludes with recent Android malware detection issues and lessons learned that require researchers’ attention in the future.

Introduction

Since the advent of Android, smartphones have become ubiquitous, with Android holding a market share of 87% (Chau & Reith, 2019). Android devices have therefore become the most popular target for hackers and malware authors. Because Android offers many open-source libraries, its application development tools also enable young developers to build Android malware applications, and the number of Android malware samples is increasing exponentially. On the Google Play market, the number of Android applications grew from 2.8 million as of September 2018 (Statista, 2016, 2021) to 3.4 million as of the first quarter of 2021 (Statista, 2021). Android malware authors lure end-users with cracked games, free applications, and video downloader applications. They mainly aim to spy on private data (e.g., contact lists, photos, videos, documents, and account details) or to control devices from remote servers as botnets (Karim et al., 2015). Android applications are developed in Java because Java offers flexible code, dynamic code loading (Liang & Bracha, 1998), and many other features that make Android application development more accessible and efficient. Likewise, Java obfuscation tools (Aonzo et al., 2020; GuardSquare, 2014) protect commercial software companies from software plagiarism; professional developers use advanced evasion techniques (Aonzo et al., 2020) as protection mechanisms to keep their source code from being stolen. However, malware authors use the same Java features and evasion tools to produce more sophisticated Android malware that evades professional anti-malware (Preda & Maggi, 2016). Google introduced Google Bouncer (Rahman et al., 2016); however, Android malware successfully defeats Google Bouncer using different evasion techniques (Maiorca et al., 2015). Furthermore, the Google Play Protect service (Xu et al., 2016) is the default device protection tool on Android 6.0 onwards, whereas earlier versions are deprecated.

The rationale behind this study is the ability of evasion techniques to hinder the analysis, and thus the detection, of Android malware. In 2021, PetaDroid (Karbab & Debbabi, 2021) proposed Android malware detection using deep learning techniques. PetaDroid builds a static analysis Android malware detection framework on a dataset of 10 million Android apps. Its authors addressed obfuscation and concluded from their experimental results that their trained model, which reaches 99.2% using static analysis, would not detect complex obfuscated malware applications: complex obfuscation techniques defeat the PetaDroid model and result in false detections. PetaDroid focused on trivial and some non-trivial obfuscation techniques, and its authors acknowledged that further deep analysis is required to address sophisticated obfuscation techniques. This study focuses on several evasion techniques, such as package transformation, string encryption, bytecode encryption, code obfuscation, injection of new code via dynamic code loading, junk/dead code injection, emulation detection in sandboxes, and user interaction emulation detection. For example, using the repacking evasion technique, Android malware modifies the package, the developer signature, and other information.

Moreover, the availability of various evasion techniques to malware attackers raises the concern that ever more advanced obfuscation techniques will be developed, since newly developed malware applications readily adopt them. This creates a tension between preventing source code piracy and malicious attacks (Gurulian et al., 2016; Zhang et al., 2014) and the struggle to decompile malware application packages for further analysis (Gonzalez et al., 2015). Android malware detection frameworks (Arp et al., 2015; Elish et al., 2015; Poeplau et al., 2014; Chen et al., 2015) suffer from False Negatives (FN), meaning that they fail to detect some malware applications. The main reason for FNs is the evasion techniques that malware applications adopt to hinder detection. For instance, Arp et al. (2015) achieved 94% detection accuracy because their approach fails to detect malware that uses dynamic code loading transformation, one of the advanced evasion techniques. Likewise, Elish et al. (2015) used trigger-based dependence for privileged API calls, but their approach cannot detect malware families that use code obfuscation and reflection transformation. Poeplau et al. (2014) used call-graph analysis to detect malicious code loading, but native code can still load code dynamically.

Similarly, Chen et al. (2015) identify a repackaged application in 10 s using code-graph similarity but cannot track junk code insertion transformation. You & Yim (2010) reviewed obfuscation techniques and metamorphic and polymorphic malware. They discussed metamorphic and polymorphic evasion techniques but neglected transformation and anti-emulation evasions. Furthermore, they only reviewed evasion methods and did not evaluate whether current evasion detection systems can detect evasive malware. Sharma & Sahay (2014) reviewed polymorphic and metamorphic malware and discussed their characteristics, but they did not cover evasion detection methods or evaluate the currently proposed methods. Sufatrio et al. (2015a) surveyed Android malware detection methods and briefly assessed a handful of related works in terms of evasion detection.

This study targets Android malware detection research and highlights the research gaps in malware detection caused by different evasion techniques. It identifies the obfuscation and transformation techniques that need more attention from researchers in the future, and it provides guidelines and lessons learned for facing this challenge. Accordingly, this study makes the following main contributions.

– We present an evasion taxonomy, particularly for the Android platform. Our goal is to systematize Android malware evasion techniques using a taxonomy methodology that clearly shows the various evasion techniques and how they affect malware analysis and detection accuracy.

– We scrutinise both academic and commercial Android malware detection frameworks, whereas a large portion of past work concentrated on commercial anti-malware solutions. By reviewing state-of-the-art Android malware studies, this study examines the evasion techniques that hinder detection of the malicious parts of applications and affect detection accuracy, as well as the issues that limit the detection of evasion techniques. Unlike related works that examine detection methods, we go through the evasion techniques that let malware elude those methods. Given the large volume of work in this field, our investigation focuses on studies published between 2011 and early 2021 and on innovative contributions that appeared in high-ranked journals or conferences such as those of IEEE, ACM, and Springer; the search identified 511 related research papers.

– We highlight the existing problems and gaps in Android malware evasion detection by examining the previous frameworks and identifying the Android malware evasion detection research gap.

– We offer recommendations and lessons learned for future research. We also highlight the contribution of each study, its challenges, countermeasures, and open issues for future research.

Table 1 presents the differences between this study and recent reviews of evasion detection. Vikas (Sihag, Vardhan & Singh, 2021a) evaluated code-hardening obfuscation tools against the reverse engineering process; however, it focused on development advantages more than on malware detection. FeCO (Jusoh et al., 2021) focused on static analysis of Android applications and on Android malware detection using machine learning and deep learning methods, highlighting the types of code obfuscation techniques and earlier research solutions to obfuscation. AAMO (Preda & Maggi, 2016) and Droidchameleon (Rastogi, Chen & Jiang, 2013) use their own evaluation tools to study how effectively evasions defeat commercial anti-malware applications; Droidchameleon (Rastogi, Chen & Jiang, 2013) examines trivial transformations, which easily evade the most popular commercial anti-malware packages. However, Droidchameleon (Rastogi, Chen & Jiang, 2013) does not study the effect of evasion techniques on current detection accuracy. Rastogi later extended Droidchameleon (Rastogi, Chen & Jiang, 2013, 2014) with composite transformation attacks that combine more than one evasion, and investigated the ability of such evasion chains to hinder malware detection. Hoffmann et al. (2016) developed a tool to thwart malware detection, evaluated the accuracy of a few typical static and dynamic malware analysis frameworks, and concluded that code obfuscation evades Android malware detection frameworks. Nevertheless, Hoffmann excludes some evasion techniques from the evaluation of malware detection frameworks.

| Related studies | Evasion techniques discussion | Evasion detection tools evaluation |
|---|---|---|
| This study | Encryption, package and code transformation, code obfuscation, anti-emulation | Commercial + Academic |
| Droidchameleon (Rastogi, Chen & Jiang, 2013) | Transformation | Commercial |
| Vikas (Sihag, Vardhan & Singh, 2021a) | Code Obfuscation, repackaging | Academic |
| FeCO (Jusoh et al., 2021) | Code Obfuscation, Encryption | Academic |
| Rastogi (Rastogi, Chen & Jiang, 2014) | Encryption + Transformation | Commercial |
| AAMO (Preda & Maggi, 2016) | None | Commercial |
| Hoffmann (Hoffmann et al., 2016) | Obfuscation | Commercial |
| Tam et al. (Tam et al., 2017) | Transformation + Obfuscation | None |
| Nguyen-Vu et al. (Nguyen-Vu et al., 2017) | Transformation | None |
| Kim et al. (Kim et al., 2016) | Anti-emulation | None |
| Xue et al. (Xue et al., 2017) | Encryption | Commercial |
| Bulazel (Bulazel & Yener, 2017) | Virtualization and performance case studies | Academic |

The rest of the paper is organized as follows. The Survey methodology and background sections provide essential background information for this study and explore the Android operating environment and its weaknesses. The Evasion techniques section presents the evasion techniques taxonomy across different categories of evasions. The Android evasion detection frameworks section investigates current state-of-the-art evasion detection frameworks and evasion test-bench tools. We discuss lessons learned and future directions in the Discussion and Lessons learned sections. Finally, the last section concludes the study.

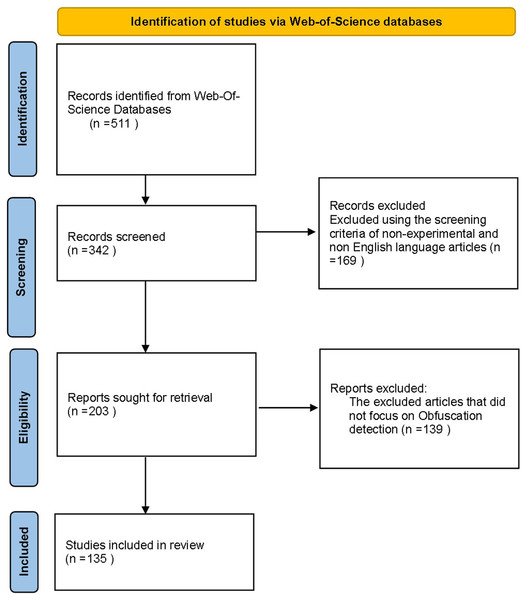

Survey methodology

Methodology

This section presents the methodology for retrieving articles related to Android malware obfuscation detection. This study used the Web of Science search engine to conduct the literature review, applying search terms with inclusion and exclusion criteria. The review process consists of four phases: identification, screening, eligibility, and analysis.

Identification

The Web of Science search engine covers a hundred years of citation data, including many journals related to computer security, software development, and network security. Clarivate Analytics established this citation database with a citation ranking measure (citations per paper). Since this study focuses on Android malware obfuscation, we selected ‘Android malware’, ‘malware obfuscation’, and ‘malware evasion’ as our search terms. The search returned 511 research papers from journal and conference proceedings databases. Most records come from IEEE; Table 2 shows the distribution of journals and conferences.

| Article type | Full name | Publisher |
|---|---|---|
| Journals | ACM Computing Surveys | ACM |
| | ACM Transactions on Computer Systems | ACM |
| | Computers & Security | |
| | Digital Investigation | |
| | Future Generation Computer Systems | |
| | IEEE Transactions on Dependable and Secure Computing | |
| | IEEE Access | IEEE |
| | IEEE Transactions on Industrial Informatics | IEEE |
| | IEEE Transactions on Information Forensics and Security | IEEE |
| | IEEE Transactions on Knowledge and Data Engineering | |
| | IEEE Transactions on Mobile Computing | |
| | IEEE Transactions on Network Science and Engineering | |
| | IEEE Transactions on Reliability | |
| | Information and Software Technology | |
| | Information Sciences | |
| | International Journal of Distributed Sensor Networks | |
| | International Journal of Information Security | |
| | International Journal of Interactive Multimedia & Artificial Intelligence | Springer |
| | Journal of Ambient Intelligence and Humanized Computing | |
| | Journal of Artificial Intelligence Research | |
| | Journal of Computer Virology and Hacking Techniques | Springer |
| | Journal of Information Science and Engineering | |
| | Journal of Information Security and Applications | |
| | Journal of Supercomputing | |
| | PLOS ONE | |
| | Soft Computing | |
| | Security and Communication Networks | |
| Conferences | Advanced Computing, Networking and Security | IEEE |
| | Artificial Intelligence and Knowledge Engineering (AIKE) | IEEE |
| | Inventive Research in Computing Applications (ICIRCA) | IEEE |
| | International Arab Conference on Information Technology (ACIT) | IEEE |
| | Information Security | IEEE |
| | Network Computing and Applications (NCA) | IEEE |
| | Computer Software and Applications Conference | IEEE |
| | International Conference on Security and Privacy in Communication Systems | Springer |
| | Seventh ACM Conference on Data and Application Security and Privacy | ACM |
| | Symposium on Applied Computing | ACM |
| | Data and Application Security and Privacy | ACM |

The collected articles cover Android malware obfuscation and detection frameworks, including all three types of malware analysis techniques (static, dynamic, and hybrid), over the last decade from 2011 to early 2021. We thus collected Android malware frameworks from the last decade as well as innovative contributions that appeared in high-ranked journals or conferences such as those of IEEE, ACM, and Springer.

Screening

Since this paper explores the last 10 years of research to evaluate Android detection frameworks against evasion techniques, we focused on experimental malware detection articles using static, dynamic, and hybrid analysis techniques and excluded unrelated articles. We excluded articles that do not address Android-specific malware detection, such as those on iOS- and Windows-based operating systems. In addition, we included only English-language research to avoid translation overhead.

Eligibility

As shown in Fig. 1, the review process follows a four-phase flow. The identification phase collects articles from the Web of Science (WOS) database using the search terms above, and the screening phase then applies the inclusion and exclusion criteria. After removing duplicates and unrelated articles, we categorize Android malware detection by analysis methodology: static, dynamic, and hybrid features. Metadata analysis is outside the scope of this research. The screening phase yielded 342 articles out of the 511 collected in the identification phase. Of these, we examined 74 static analysis-based frameworks, 35 dynamic analysis-based frameworks, and 26 hybrid analysis frameworks, for a total of 135 research papers selected from top-ranked journals and conferences.

Figure 1: The review process flow diagram.

Data analysis

We scrutinise both academic and commercial Android malware detection frameworks, whereas a large portion of past work concentrated on commercial anti-malware solutions. By reviewing state-of-the-art Android malware studies, this study examines the evasion techniques that hinder detection of the malicious parts of applications and affect detection accuracy, as well as the issues that limit the detection of evasion techniques. Unlike related works that examine detection methods, we go through the evasion techniques that let malware evade those methods.

Android applications and weaknesses

In this section, we discuss the Android application architecture and then investigate the weaknesses of the Android operating system (OS). This background highlights the seriousness of some of these drawbacks, which motivates this review, and explains essential terms for the readers of this study.

Android application

Throughout this paper, the terms Android application, Android app, and APK are used interchangeably. An APK is a compressed (ZIP) file, so an unzipping program can extract its files and folders. This segment explains the APK components and their contents, as some terms are essential in this study. The development tools often require only basic programming experience, even from young developers. An Android app runs on the Dalvik virtual machine or the Android Runtime (ART), the counterparts of the Java Virtual Machine (JVM) in a desktop environment. The APK consists of many files and directories. The main file, classes.dex, contains the application's compiled classes packed together as Dalvik bytecode in a single .dex file. The AndroidManifest.xml file contains the deployment specifications and the permissions the app requests from the Android OS. The resources.arsc file holds compiled resources, and the res folder holds resources that are not compiled into resources.arsc.
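Because an APK is an ordinary ZIP archive, its layout can be inspected with Python's standard `zipfile` module. The following is a minimal sketch, not a tool from the reviewed studies: `describe_apk` and the toy archive are hypothetical, and the function only classifies entry names. The manifest and DEX files inside a real APK are binary formats that need dedicated parsers, and only legacy (v1) signature files live under `META-INF/`.

```python
import io
import zipfile

# Descriptions follow the APK structure described in the text.
KEY_ENTRIES = {
    "classes.dex": "Dalvik bytecode (compiled classes)",
    "AndroidManifest.xml": "manifest (components, requested permissions)",
    "resources.arsc": "compiled resource table",
}


def describe_apk(apk_bytes: bytes) -> dict:
    """Map every entry of an APK (a ZIP archive) to a short description."""
    result = {}
    with zipfile.ZipFile(io.BytesIO(apk_bytes)) as apk:
        for name in apk.namelist():
            if name in KEY_ENTRIES:
                result[name] = KEY_ENTRIES[name]
            elif name.startswith("res/"):
                result[name] = "resource file (res folder)"
            elif name.startswith("META-INF/"):
                result[name] = "signing data"
            elif name.endswith(".dex"):
                result[name] = "extra Dalvik bytecode (multidex)"
            else:
                result[name] = "other"
    return result


# Build a toy, APK-shaped archive in memory (placeholder contents, not a real app).
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as z:
    z.writestr("AndroidManifest.xml", b"placeholder")
    z.writestr("classes.dex", b"dex\n035\x00")
    z.writestr("resources.arsc", b"placeholder")
    z.writestr("res/layout/main.xml", b"placeholder")
    z.writestr("META-INF/CERT.RSA", b"placeholder")

info = describe_apk(buf.getvalue())
for name, description in info.items():
    print(f"{name}: {description}")
```

Running the same function on a real APK file (read with `open(path, "rb").read()`) lists its actual entries, which is often the first step of static analysis.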

An APK file must be installed before the end-user can use the application’s functionality. Android accepts only APKs signed with a valid developer certificate, called the developer identifier. Individual developers keep their own certificate keys; there is no central Certificate Authority (CA) server to maintain developers’ keys, and thus no chain of trust between app stores and developers.

End-users run installed applications directly, while other apps run as services in the OS background. The main components of an Android application are as follows:

(a) Activities: The user interface that end-users interact with; activities communicate with other activities using intents.

(b) Services: Application components that run as background processes and bind to or unbind from other Android system components.

(c) Broadcast Receivers and Intents: Broadcasts send messages that all applications, or individual applications, can receive.

(d) Content Providers: The intermediate unit for sharing data between applications.

Android weaknesses

With this insight into Android application design, we now list the weaknesses of the Android system. The following flaws and characteristics are abused by malware authors and attackers.

(a) Open Source:

The openness of Android’s source code helps developer communities enhance the OS and add more features, so the Android community improves the OS continually. However, this openness conflicts with security when malware writers exploit it: studying open code is more straightforward than studying closed-source firmware, which commonly leads to new vulnerabilities and malware attacks (Xu et al., 2016).

(b) End-users Security Awareness:

End-users’ understanding of malware’s seriousness plays a vital role in early prevention and detection through feedback and reviews. However, the end-user feedback system is insecure and easily polluted by fake comments (Rashidi, Fung & Tam, 2015). End-users click malicious URL links in emails, web browsers, pop-ups, or Android application dialogues that download and install malicious applications. They also grant permissions to apps without studying the apps’ actual requirements, trusting fake advertisements for permission-greedy apps.

(c) Third-party Apps Market:

Android lets end-users download applications from third-party markets and install them offline by enabling installation from unknown sources in the phone’s settings menu. Several third-party application stores, some untrusted and some well-verified, offer Android applications, such as Amazon, GoApk, SlideME, and other app markets. In addition, there are four Chinese third-party app markets (Anzhi, Mumayi, Baidu, and eoe), since access to the Google Play Store is restricted in China (Fsecure, 2013). End-users can also download applications from any website to their mobile devices, personal computers, or laptops and install them via tools such as ADB in the Android SDK, which increases the probability of installing malicious apps (Sufatrio et al., 2015b; Tan, Chua & Thing, 2015).

(d) The Coarse Granularity of Android Permissions:

Android controls application access using coarse-grained permissions; for example, a single permission provides access to all Internet protocols and all sites. The lack of competent permission administration and sufficient permission documentation leads to excess permissions (Fang, Han & Li, 2014).
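The coarse granularity is visible in the manifest itself. The sketch below assumes the binary `AndroidManifest.xml` has already been decoded to plain XML (for example, with a tool such as apktool); `requested_permissions` and the sample manifest are hypothetical. It lists each `<uses-permission>` entry with the standard library.

```python
import xml.etree.ElementTree as ET

# Namespace URI used by the android: attribute prefix in manifests.
ANDROID_NS = "http://schemas.android.com/apk/res/android"


def requested_permissions(manifest_xml: str) -> list:
    """Return the android:name value of every <uses-permission> element."""
    root = ET.fromstring(manifest_xml)
    return [
        elem.get(f"{{{ANDROID_NS}}}name")
        for elem in root.iter("uses-permission")
    ]


SAMPLE_MANIFEST = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.app">
  <uses-permission android:name="android.permission.INTERNET"/>
  <uses-permission android:name="android.permission.READ_CONTACTS"/>
</manifest>"""

perms = requested_permissions(SAMPLE_MANIFEST)
print(perms)  # ['android.permission.INTERNET', 'android.permission.READ_CONTACTS']
```

The `INTERNET` entry carries no host or protocol restriction, so a static checker can only tell that the app may reach the network, not where it connects.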

(e) Developers’ Signatures:

Android application developers must sign their apps with their developer key before uploading them to the market. No external party authenticates developers’ signatures, so there is no guarantee of confidentiality or integrity (Holla & Katti, 2012). Hence, malware developers clone benign applications, inject malicious code, and sign the APK with their own developer key (Zhang et al., 2014). They then upload the malicious APK to third-party application markets or share the infected applications directly with their victims.

(f) Application Version Update:

Android applications usually enhance their functionality through version updates. Security frameworks analyze an application at installation time, whereas the update process can download new services or features without security precautions or checks (Luyi et al., 2014).

(g) USB Debugging:

USB debugging is a valuable feature for Android application development; it helps developers be more productive and troubleshoot applications efficiently. It allows an application to be installed directly on an Android device using Android SDK tools such as ADB. In addition, the Expo framework (Zhang, Breitinger & Baggili, 2016) supports live reloading and online dynamic code loading. On the other hand, malware writers use live loading features to gain remote access and install malicious applications using static and dynamic methods. The static method injects JAR (Java) or *.so (JNI) files into the application before it runs, while the dynamic method calls external files at runtime (Zhang, Breitinger & Baggili, 2016).

(h) Dynamic Code Loading (DCL):

DCL is an Android OS feature that enables applications to load code from another APK or external file and execute it at runtime. Malware developers abuse this feature to load their malicious code dynamically after a detection framework has classified the malicious app as benign.
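A simple static heuristic for DCL is to look for class-loader type descriptors in the raw `.dex` bytes, since the DEX string table stores such descriptors as plain text. This is a minimal sketch, not the method of any framework cited here; `find_dcl_references` is hypothetical. It also shows the weakness the text describes: a class name assembled at runtime, for example from an encrypted string and reflection, never appears verbatim and is missed.

```python
# Type descriptors of Android class loaders that load code at runtime.
DCL_DESCRIPTORS = (
    b"Ldalvik/system/DexClassLoader;",
    b"Ldalvik/system/InMemoryDexClassLoader;",
)


def find_dcl_references(dex_bytes: bytes) -> list:
    """Return the class-loader descriptors that occur verbatim in a .dex file.

    Heuristic only: descriptors built at runtime (e.g. decrypted strings
    passed to reflection) do not appear in the file and are not reported.
    """
    return [d.decode("ascii") for d in DCL_DESCRIPTORS if d in dex_bytes]


# Placeholder bytes standing in for a real classes.dex.
sample = b"dex\n035\x00...Ldalvik/system/DexClassLoader;..."
print(find_dcl_references(sample))  # ['Ldalvik/system/DexClassLoader;']
```

A match only signals that DCL may occur; deciding whether the loaded code is malicious still requires fetching and analysing that code, which is why the text treats DCL as an evasion.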

(i) Inter-application Communication (intent):

Android OS uses the inter-application intent system to deliver messages to and from applications. Malware developers can sniff or modify intents, or gain knowledge from them, compromising data integrity and privacy (Chin et al., 2011). Intents provide flexibility in Android application development, but they are an entry point for security threats (Feizollah et al., 2017; Salva & Zafimiharisoa, 2015).

Evasion techniques

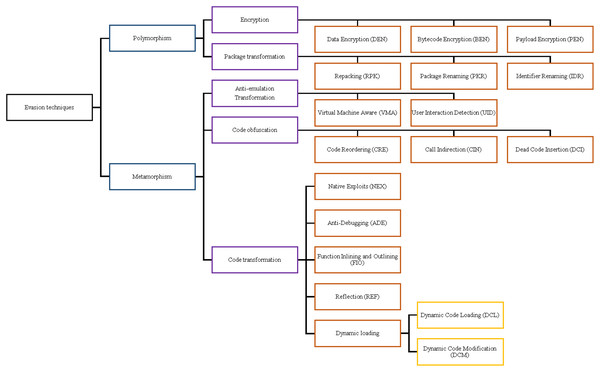

This section presents our taxonomy of currently used evasion techniques and of research studies on detecting obfuscated malware. Our taxonomy classifies related studies with the same objectives and goals to gather comprehensive material and draw comparative conclusions. After scrutinizing many existing studies, we found it more appropriate to study the evasion detection capabilities of each framework after introducing the evasion techniques that hinder malware analysis and detection. This section also presents a taxonomy of detection techniques that relates each detection methodology to its evasion-handling ability. Android developers have powerful tools and techniques to protect their applications from being reverse-engineered; conversely, malware authors use obfuscation tools and techniques to evade detection. Evasions, also called transformation techniques, are therefore techniques that try to defeat Android malware detection so that malware applications are classified as benign.

As displayed in Fig. 2, we categorize evasion techniques into two main types. The first category is polymorphism, which transforms the malicious code without changing the original code of the mobile application. The second category is metamorphism, which mutates the application code but maintains the same behaviour. Malware authors employ obfuscation tools such as Obfuscapk (Aonzo et al., 2020), ProGuard (Lafortune, 2002), DashO (Wang et al., 2016), KlassMaster (Kuhnel, Smieschek & Meyer, 2015), and JavaGuard (Sihag, Vardhan & Singh, 2021a) to encrypt their code and decrypt it at runtime; they modify the code itself to evade heuristic detection and the signature analysis of malware detection techniques.

Figure 2: Evasion technique taxonomy.

Polymorphism

Polymorphic malware keeps changing its characteristics to generate different malware variants that evade malware detectors. It encrypts the part of the code that embeds the malicious code, using variable encryption keys while keeping the malicious code body unaltered. Polymorphic malware is an advanced version of oligomorphic malware. Oligomorphic malware encrypts its malicious code to defeat static analysis-based detection of the source code. Usually, malware decrypts itself using the same technique every time; oligomorphic malware, however, decrypts the encrypted malicious code using different decryptors to make decryptor analysis more difficult. Static analysis examines the decryptor to find the encryption key that enables detection of the malware, so the static analysis approach is not effective against oligomorphic malware. Polymorphic malware continuously changes its decryptor, making static source code analysis even more difficult. Such symptoms indicate the presence of malicious code in an application. In this section, we discuss the polymorphism evasion subcategories: package transformation and encryption.
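To show why variable keys defeat hash-based signatures, the following sketch encrypts the same harmless placeholder body under three keys. It is an illustration, not real malware. XOR stands in for whatever cipher an actual sample uses, and every name here is hypothetical. Unlike true polymorphic malware, the decryptor here stays fixed, so the sketch shows only the variable-key aspect.

```python
import hashlib


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a repeating key; applying it twice restores the input."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def make_variant(body: bytes, key: bytes) -> bytes:
    """Emit one variant: the key followed by the body encrypted under that key."""
    return key + xor_bytes(body, key)


def run_decryptor(variant: bytes, key_len: int) -> bytes:
    """What the embedded decryptor does at runtime: recover the original body."""
    key, encrypted = variant[:key_len], variant[key_len:]
    return xor_bytes(encrypted, key)


BODY = b"harmless placeholder for an unchanged malicious body"
KEYS = [bytes([k]) * 8 for k in (0x11, 0x5A, 0xC3)]  # a different key per variant

variants = [make_variant(BODY, key) for key in KEYS]
signatures = {hashlib.sha256(v).hexdigest() for v in variants}

print(len(signatures))  # 3: each variant has its own hash-based signature
print(all(run_decryptor(v, 8) == BODY for v in variants))  # True
```

The body is identical after decryption, yet a scanner that matches file hashes sees three unrelated samples.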

Package transformation

In this section, we study three types of package transformation: Repacking (RPK), Package Renaming (PKR), and Identifier Renaming (IDR).

(a) Repacking (RPK): Repacking is the process of unpacking the APK file and repacking the original application files, but signing the APK with a different developer key (Rastogi, Chen & Jiang, 2013). The code therefore remains unchanged, while the application carries a different signature. To repackage an Android application, attackers unzip the APK to obtain the DEX file and then use reverse engineering tools to extract Java or smali code from it. By rearranging classes, strings, and methods in the DEX file, the attacker changes the layout of the DEX file, which defeats signature-based Android malware detection. Canfora et al. (2015b) consider a simple repacking evasion technique that hinders detection by all commercial anti-malware products relying on signature-based detection. With every iteration, the malware’s signature changes, after which the malware can evade detection. For instance, one AnserverBot malware sample, repackaged and disguised as a paid application, was available on the official Android Market.
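The effect of re-signing on a naive signature can be reproduced with two toy archives that share the same `classes.dex` but carry different certificate placeholders. This is a minimal sketch under simplified assumptions: real APK signing is more involved, and `build_apk`, `file_signature`, and `dex_signature` are hypothetical helpers.

```python
import hashlib
import io
import zipfile


def build_apk(dex: bytes, cert: bytes) -> bytes:
    """Build a toy APK-like ZIP with a DEX file and a placeholder certificate."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as apk:
        apk.writestr("classes.dex", dex)
        apk.writestr("META-INF/CERT.RSA", cert)
    return buf.getvalue()


def file_signature(apk: bytes) -> str:
    """Whole-file hash, as used by naive signature-based scanners."""
    return hashlib.sha256(apk).hexdigest()


def dex_signature(apk: bytes) -> str:
    """Hash of classes.dex only, ignoring the signing data."""
    with zipfile.ZipFile(io.BytesIO(apk)) as z:
        return hashlib.sha256(z.read("classes.dex")).hexdigest()


DEX = b"dex\n035\x00 placeholder bytecode"
original = build_apk(DEX, b"original developer certificate")
repacked = build_apk(DEX, b"attacker certificate")

print(file_signature(original) == file_signature(repacked))  # False
print(dex_signature(original) == dex_signature(repacked))    # True
```

Hashing only `classes.dex` survives re-signing, but not the class, string, and method rearrangement described above, because that rearrangement changes the bytes of `classes.dex` itself.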

(b) Package Renaming (PKR): Every Android application has a unique package name; for instance, com.android.chrome is the package name of Google Chrome. PKR uses multilevel techniques to obfuscate all application classes except the main class, for instance, with ProGuard’s -flattenpackagehierarchy or -repackageclasses options (Lafortune, 2002). As shown in Algorithm 1, PKR changes the names of all classes except the “MyMain” class.

This algorithm is applied repeatedly to form multilevel PKR obfuscation. For example, the GinMaster family contains a malicious service that can root devices to escalate privileges and steal confidential information. Later, it receives instructions from a remote server to download and install applications without user interaction. The malware avoids detection by mobile anti-virus software by using polymorphic techniques to hide malicious code, obfuscating class names for each infected object, and randomizing package names and self-signed certificates for applications. PKR therefore evades malware detection and causes false negatives, as Faruki et al. (2015c) showed by applying PKR to malware applications and scanning them with the VirusTotal platform: the detection accuracy for repackaged malware dropped by half in all malware categories.
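Algorithm 1 is not reproduced in this section. The following is therefore only a hypothetical sketch of the renaming step it describes. Every class except the entry class (`MyMain` in the text) is moved into one flat package and given a short name, similar in spirit to ProGuard's `-repackageclasses`. The class names and the target package `o` are invented for illustration.

```python
import itertools
import string


def short_names():
    """Yield a, b, ..., z, aa, ab, ... for use as obfuscated class names."""
    for length in itertools.count(1):
        for letters in itertools.product(string.ascii_lowercase, repeat=length):
            yield "".join(letters)


def rename_packages(classes, keep, target_package="o"):
    """Map fully qualified class names to obfuscated ones.

    Every class except `keep` is moved into one flat package and given a
    short name; `keep` (the entry class) retains its original name.
    """
    names = short_names()
    mapping = {}
    for cls in classes:
        mapping[cls] = cls if cls == keep else f"{target_package}.{next(names)}"
    return mapping


classes = [
    "com.example.app.MyMain",
    "com.example.app.net.Uploader",
    "com.example.app.util.Crypto",
]
mapping = rename_packages(classes, keep="com.example.app.MyMain")
for old, new in mapping.items():
    print(f"{old} -> {new}")
```

Running the function again on the renamed classes with a different `target_package` would give the multilevel variant the text mentions. The behaviour is unchanged, but any signature built on package or class names no longer matches.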

(c) Identifier Renaming (IDR): Identifiers are another APK characteristic from which an application signature can be derived: the names of classes, methods, and fields are bytecode identifiers on which signatures are often generated. Malware authors rename these identifiers using obfuscation tools such as ProGuard (Lafortune, 2002) and DexGuard (GuardSquare, 2014) so that the application appears to be a different application from a previously detected malicious one; the result is a different signature that evades detection methods. Real-world malware families that rename identifiers include DroidDream, Geinimi, Fakeplayer, Bgserv, BaseBridge, and Plankton.
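
Algorithm 1 is not reproduced in this section, so the following Python sketch is only our hedged approximation of a single PKR pass. It assumes classes are given as fully qualified names and that the entry class (the hypothetical `com.example.app.MyMain`) must keep its name; every other class is moved into one flat package (here the hypothetical `a`) with a short generated name, loosely in the spirit of ProGuard's `-repackageclasses` option. Running the pass again on its own output gives a multilevel variant, and extending the same mapping to method and field names corresponds to IDR.

```python
from itertools import count

def rename_packages(classes, main_class, target_pkg="a"):
    """Map each class to a short name in target_pkg, except main_class."""
    ids = count()
    mapping = {}
    for cls in sorted(classes):
        if cls == main_class:
            mapping[cls] = cls  # the entry point keeps its original name
        else:
            mapping[cls] = f"{target_pkg}.c{next(ids)}"
    return mapping

# Hypothetical class list for illustration only.
classes = [
    "com.example.app.MyMain",
    "com.example.app.net.Uploader",
    "com.example.app.util.Crypto",
]
mapping = rename_packages(classes, "com.example.app.MyMain")
for old, new in mapping.items():
    print(old, "->", new)
```

Because the mapping changes every non-entry class name, any signature computed over those names changes too, while the program's behaviour is untouched.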

Encryption transformation

Some Android malware families encrypt data values inside the code, the compiled code, or the payload, and decrypt them whenever needed. This paper refers to Data Encryption as DEN, Bytecode Encryption as BEN, and Payload Encryption as PEN, and examines the following types of evasion:

(a) Data Encryption (DEN): This evasion technique encrypts specific data that is vital to the malicious action and decrypts it later, which modifies the characteristics of the malware application so that it evades detection techniques (Kuhnel, Smieschek & Meyer, 2015). The data are strings or network addresses embedded in the code. By encrypting such components, the malware can evade detection methods such as that of Shrestha et al. (2015), whose authors extracted strings from APK files and analyzed them to detect malware. Real-world malware families that encrypt such data include DroidDream, Geinimi, Bgserv, BaseBridge, and Plankton.

(b) Bytecode Encryption (BEN): BEN evasion, available through obfuscation tools such as ProGuard (Lafortune, 2002) or DashO (Maiorca et al., 2015), hinders reverse engineering by encrypting the original code so that it is almost impossible to read. It divides the code into an encrypted part and a non-encrypted part; the non-encrypted part contains the code that decrypts the encrypted part at run time (Faruki et al., 2014; Rastogi, Chen & Jiang, 2014). Dynamic analysis is therefore required to detect this decryption process. Nevertheless, some static analysis-based detection frameworks, such as DroidAPIMiner (Aafer, Du & Yin, 2013) and that of Wang & Wu (2015), successfully detect BEN evasion but fail to detect DEN or PEN evasions.

(c) Payload Encryption (PEN): Malware authors use payload encryption, as in the DroidDream (Foremost, 2012) malware, to carry malicious payloads inside applications and to install malicious applications at run time once the system is compromised. The payload is stored encrypted; at run time, the application calls a decryption function (Cho, Yi & Ahn, 2018) and executes the decrypted code.
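
DEN can be illustrated with a minimal Python sketch, and the same idea applies to PEN with a whole payload in place of a string. Assume a hypothetical detector that flags an app whenever a known command-and-control URL appears verbatim in its bytes. XOR-encoding that string with a one-byte key (a deliberately weak stand-in for real encryption; the key, URL, and byte blobs are all made up) hides it from the scan, while the app can still recover it at run time.

```python
KEY = 0x5A  # hypothetical one-byte key; real malware uses stronger schemes
IOC = b"http://c2.example.com/cmd"  # hypothetical indicator of compromise

def xor_bytes(data: bytes, key: int) -> bytes:
    """XOR every byte with key; applying it twice restores the input."""
    return bytes(b ^ key for b in data)

def string_scan_flags(blob: bytes) -> bool:
    """Naive static detector: look for the indicator as a plain substring."""
    return IOC in blob

plain_app = b"...config..." + IOC + b"...more..."
encrypted_app = b"...config..." + xor_bytes(IOC, KEY) + b"...more..."

print(string_scan_flags(plain_app))      # True
print(string_scan_flags(encrypted_app))  # False
print(xor_bytes(xor_bytes(IOC, KEY), KEY) == IOC)  # True: decrypted at run time
```

A static detector would have to emulate the decryption routine to recover the string, which is why encrypted data and payloads push detection toward dynamic analysis.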

Metamorphism

Metamorphic malware is more complex than polymorphic malware and is better able to evade detection frameworks; malware authors adopt it because metamorphic variants are harder to detect than polymorphic ones. Metamorphic malware rewrites its own malicious code in every iteration, so each variant differs in form while keeping the same behaviour. For example, Opcode n-grams (Canfora et al., 2015a) uses an n-gram feature extraction algorithm that extracts sequences of n consecutive opcodes as features. It assumes that malware writers rarely develop metamorphic Android malware variants and, based on that assumption, does not evaluate the n-gram detection framework against metamorphic evasions (Canfora et al., 2015a).
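
The effect of metamorphic rewriting on opcode n-gram features can be sketched in Python. Assume an app is reduced to a list of Dalvik opcode mnemonics (both sequences below are made up, and the two `const` instructions are assumed to be independent so that moving one is behaviour-preserving). The sketch extracts all n-grams and compares the original with a rewritten variant using Jaccard similarity; the similarity measure is our choice for illustration and is not necessarily the one used by the cited framework.

```python
def ngrams(opcodes, n):
    """Return the set of contiguous opcode n-grams."""
    return {tuple(opcodes[i:i + n]) for i in range(len(opcodes) - n + 1)}

def jaccard(a, b):
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    return len(a & b) / len(a | b) if a | b else 1.0

original = ["const-string", "invoke-static", "move-result",
            "const/4", "invoke-virtual", "return-void"]
# Hypothetical metamorphic variant: nops inserted, one instruction moved.
variant = ["const/4", "nop", "const-string", "invoke-static",
           "nop", "move-result", "invoke-virtual", "return-void"]

for n in (1, 2, 3):
    sim = jaccard(ngrams(original, n), ngrams(variant, n))
    print(f"{n}-gram similarity: {sim:.2f}")
```

In this toy case, unigram overlap stays high while trigram overlap drops to zero, which illustrates why longer n-grams are fragile against code rewriting.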

Code obfuscation

Code obfuscation was initially used to protect applications from piracy and illegal use. Conversely, malware authors use code obfuscation techniques to evade malware detection. In this study, we highlight three types of code obfuscation: Code Reordering (CRE), Call Indirection (CIN), and Dead Code Insertion (DCI).

(a) Code Reordering (CRE): This transformation changes the order of the instructions in the code and inserts standard “goto” instructions so that the original program execution order is maintained.
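
A toy Python model of CRE, assuming a program is a list of labelled basic blocks that fall through in list order. The hypothetical `reorder` function turns every fall-through into an explicit `goto`, shuffles the blocks, and prepends an entry block that jumps to the original first block; a tiny interpreter confirms that the executed instruction sequence is unchanged even though the static layout differs. The block format, the `goto`/`halt` encoding, and the `op1`...`op5` instructions are simplifications, not Dalvik bytecode.

```python
import random

def reorder(blocks, seed=0):
    """Shuffle (label, instructions) blocks; explicit gotos keep the original flow."""
    linked = []
    for i, (label, body) in enumerate(blocks):
        # The implicit fall-through to the next block becomes an explicit jump.
        tail = f"goto {blocks[i + 1][0]}" if i + 1 < len(blocks) else "halt"
        linked.append((label, body + [tail]))
    random.Random(seed).shuffle(linked)
    # A leading jump to the original entry block keeps execution correct.
    return [("entry", [f"goto {blocks[0][0]}"])] + linked

def run(program):
    """Execute from the first block; return the trace of non-control instructions."""
    order = [label for label, _ in program]
    table = dict(program)
    trace, label = [], order[0]
    while label is not None:
        i = order.index(label)
        nxt = order[i + 1] if i + 1 < len(order) else None  # default fall-through
        for ins in table[label]:
            if ins.startswith("goto "):
                nxt = ins.split()[1]
                break
            if ins == "halt":
                nxt = None
                break
            trace.append(ins)
        label = nxt
    return trace

original = [("L0", ["op1", "op2"]), ("L1", ["op3"]), ("L2", ["op4", "op5"])]
obfuscated = reorder(original, seed=7)
print([label for label, _ in obfuscated])  # shuffled static layout
print(run(obfuscated) == run(original))    # True: same execution order
```

A detector that compares instruction sequences in layout order sees a different program, while the runtime behaviour is identical.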

(b) Call Indirection (CIN): CIN manipulates the call graph by replacing a direct method call with a call to an intermediate method that in turn calls the original method. This code transformation obfuscates call-graph-based detection techniques (Castellanos et al., 2016; Gascon et al., 2013). Malware families such as DroidDream, Geinimi, and FakePlayer incorporate call indirection to evade static analysis-based Android malware detection.
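
Call indirection can be pictured on a call graph. In this Python sketch the graph is a hypothetical dictionary from caller to callees; every direct call `caller -> callee` is routed through a newly generated wrapper method (the `wrap_...` naming is made up). The original edges disappear from the graph although every callee remains reachable, so a detector that matches specific caller-callee edges (an assumed, simplified model of call-graph-based detection) no longer finds them.

```python
def add_indirection(call_graph):
    """Route each direct call through a fresh wrapper method."""
    new_graph = {}
    for caller, callees in call_graph.items():
        new_graph.setdefault(caller, [])
        for callee in callees:
            wrapper = f"wrap_{caller}_{callee}"  # hypothetical generated name
            new_graph[caller].append(wrapper)
            new_graph[wrapper] = [callee]
    return new_graph

def reachable(graph, start):
    """All methods reachable from start by following call edges."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen

original = {"onCreate": ["sendTextMessage", "getDeviceId"]}
obfuscated = add_indirection(original)

print("sendTextMessage" in obfuscated["onCreate"])             # False: edge gone
print("sendTextMessage" in reachable(obfuscated, "onCreate"))  # True: still called
```

Detectors that reason about reachability rather than direct edges are less sensitive to this transformation, at the cost of more expensive analysis.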

(c) Dead Code Insertion (DCI): Malware inserts junk code, which does not change the program's behaviour, into the application's instruction sequence to obscure its semantics and make the malware more difficult to analyze (Kwon et al., 2014). AnDarwin (Crussell, Gibler & Chen, 2015) detects Android malware based on code similarity, but its method is unable to detect the dead code insertion transformation (Crussell, Gibler & Chen, 2015). The code similarity approach represents code as vectors and measures the distance between the vector of the original code and that of the DCI-transformed code; the greater the distance, the harder it is to detect the obfuscated variant.
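
The distance argument can be made concrete with a short Python sketch. Assume, as a simplification loosely in the spirit of vector-based code similarity (not AnDarwin's actual feature set), that a method is summarized by a vector of opcode counts and compared with Euclidean distance. Inserting dead instructions (the `junk` opcodes are hypothetical and assumed to write only unused registers) leaves behaviour unchanged but moves the vector away from the original, so a fixed similarity threshold eventually stops matching.

```python
import math
from collections import Counter

def count_vector(opcodes, vocabulary):
    """Opcode-count feature vector over a fixed vocabulary."""
    counts = Counter(opcodes)
    return [counts[op] for op in vocabulary]

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

original = ["const-string", "invoke-static", "move-result", "return-object"]
junk = ["nop", "const/4", "add-int/lit8"]  # assumed dead: results never used
vocabulary = sorted(set(original) | set(junk))

base = count_vector(original, vocabulary)
distances = []
for copies in range(4):
    # Hypothetical DCI pass: insert `copies` rounds of junk before the return.
    variant = original[:-1] + junk * copies + original[-1:]
    distances.append(euclidean(base, count_vector(variant, vocabulary)))
    print(copies, round(distances[-1], 3))
```

Here each round of three junk opcodes adds the square root of 3 to the distance, so the variant drifts steadily away from the original signature vector.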

Advanced code transformation

This section explains the advanced code transformation techniques that are more sophisticated in hindering the malware detection frameworks. We include advanced evasion techniques, such as Native Exploits (NEX), Function Inlining and Outlining (FIO), Reflection API (REF), Dynamic Code Loading/Modification (DCL/DCM), and Anti-debugging (ADE).

(a) Native Exploits (NEX): Android applications call native libraries to run system-related functions. Malware uses native code exploits to escalate to root privileges while running (Xu et al., 2016). Unfortunately, the source code of many exploits is available for download, and official Android suppliers address them through regular system updates and fixes. Additionally, DroidDream malware (Wu et al., 2015) packs native code exploits with its application payload, bypassing Android security monitoring and logging systems.

(b) Function Inlining and Outlining (FIO): Inlining and outlining are compiler optimization techniques. Inlining replaces a function call with the entire function body, whereas outlining splits a function into smaller functions. This type of transformation obfuscates call-graph-based detection techniques by redirecting function calls and creating a maze of calls (Gascon et al., 2013).

(c) Reflection API (REF): The Reflection API allows code to invoke a method, including a private one, or to obtain the parameter list of another function or class, whether that class is private or public. Android developers legitimately use it to provide genericity, maintain backward compatibility, and reinforce application security. However, malware authors take advantage of this feature to bypass detection methods: reflection makes it possible to call private methods from outside their declaring class, and the invoked method can be named by a string rather than appearing as a direct call. Recently, a few studies have highlighted the effect of reflection on code analysis and considered reflection during the analysis process (Kuhnel, Smieschek & Meyer, 2015; Li et al., 2016).
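
In Java, reflection is exposed through calls such as `Class.forName`, `Class.getDeclaredMethod`, and `Method.invoke` (with `setAccessible(true)` for private methods), where the target class and method are named by strings that can be assembled at run time. The Python sketch below is only an analogue of that pattern, using `getattr` in place of Java reflection: the method name is built from fragments, so a naive static scan of the call site for the literal name finds nothing while the call still succeeds. The `Device` class, its `_read_contacts` method, and the `static_scan` detector are hypothetical.

```python
class Device:
    def _read_contacts(self):  # "private" by Python convention
        return ["alice", "bob"]

def static_scan(source_text, sensitive="_read_contacts"):
    """Naive static check: does the sensitive method name appear literally?"""
    return sensitive in source_text

# The call site as it appears in the code: the name is assembled at run time.
call_site = 'getattr(device, "_read" + "_con" + "tacts")()'
device = Device()
result = getattr(device, "_read" + "_con" + "tacts")()

print(static_scan(call_site))  # False: literal name never appears
print(result)                  # ['alice', 'bob']
```

Resolving such calls statically requires tracking how the name string is built, which is why reflection-aware analyses such as those cited above are needed.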

(d) Dynamic Code Loading/Modification (DCL/DCM): Since Java can load code at run time using class loader methods, Android malware applications can dynamically download malicious code and load it using dynamic code loading (DCL). The DCL and DCM techniques give malware authors an advanced evasion capability, and improper use can make benign applications vulnerable to malicious code injection. For instance, the Plankton malware family uses dynamic code loading to evade detection methods; it was the first malware to use DCL to stealthily extend its capabilities on Android devices. It installs an auto-launching background application or service on the device, which sends critical device information to a server. The server returns the URL of the malicious class payload, a JAR file containing Dalvik bytecode, to the background service in response to an HTTP POST message. The next time the main application's “init()” event is triggered, the malicious payload is invoked using the “DexClassLoader” class. Because dynamically loaded code is unavailable during static analysis, DCL and DCM pose a major challenge for static Android malware analysis (Hsieh, Wu & Kao, 2016; Li et al., 2016). Although some researchers (Poeplau et al., 2014; Zhang, Luo & Yin, 2015; Zhauniarovich et al., 2015) have studied how DCL evades malware detection, it remains an open issue that needs more attention. Grab’n run (Falsina et al., 2015) uses code verification techniques to secure dynamic code loading and protect it from misuse by malware authors and attackers.

(e) Anti-debugging (ADE): The malware developer exploits the Android limitation that only one debugger can be attached to a process through the ptrace functionality (Zhang, Luo & Yin, 2015). Hence, the malware can prevent a debugger from being attached to the suspected application. If the malware detects a running debugging tool, such as one using the Java Debug Wire Protocol (JDWP), it concludes that it is under analysis, whether on an Android emulator or a physical device. Andro-Dumpsys (Jang et al., 2016), a hybrid Android malware analysis framework, claims to disable the attachment of a ptrace-based monitoring service to running applications; it therefore lacks ADE detection.
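
On Linux-based systems, including Android, the `/proc/self/status` file contains a `TracerPid` field that is non-zero when a ptrace-based debugger or tracer is attached, and a common anti-debugging check simply reads that field. The Python sketch below parses the field from the file's text so that it can be tried on sample strings; the sample contents are abbreviated and made up, and real malware would perform the check in Java or native code.

```python
def tracer_pid(status_text: str) -> int:
    """Return the TracerPid value from /proc/<pid>/status text (0 if absent)."""
    for line in status_text.splitlines():
        if line.startswith("TracerPid:"):
            return int(line.split(":", 1)[1])  # int() tolerates the tab padding
    return 0

def is_being_traced(status_text: str) -> bool:
    return tracer_pid(status_text) != 0

untraced = "Name:\tapp\nState:\tS (sleeping)\nTracerPid:\t0\n"
traced = "Name:\tapp\nState:\tt (tracing stop)\nTracerPid:\t4321\n"

print(is_being_traced(untraced))  # False
print(is_being_traced(traced))    # True
```

On a live Linux or Android system the same function would be applied to the text of `/proc/self/status`; an analysis framework that attaches via ptrace is exposed by exactly this kind of check.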

Anti-emulation transformation

The primary objective of anti-emulation evasion is to detect that the application is running in a sandbox and, instead of launching the malicious code, to masquerade as a clean application; we refer to this as Virtual Machine Aware (VMA) evasion. The other side of anti-emulation evasion is detecting automated user interaction emulation, such as that produced by the monkeyrunner tool used in many frameworks, for instance by the Droidbox (Desnos & Lantz, 2014) sandbox tool in Mobile-Sandbox (Spreitzenbarth et al., 2015); we refer to this as Programmed Interaction Detection (PID).

(a) Virtual Machine Aware (VMA): Dynamic analysis requires either an Android virtual machine emulator or a physical device on which to install the suspected application. Researchers have studied the possibility of detecting running-environment fingerprints to differentiate between an emulator and a physical device (Jing et al., 2014; Maier, Muller & Protsenko, 2014; Maier, Protsenko & Müller, 2015; Vidas & Christin, 2014). Android.obad (Faruki et al., 2015b; Singh, Mishra & Singh, 2015) is an emulator-aware malware that complicates the analysis process: it checks the “android.os.Build.MODEL” value and exits if it matches the emulator's model. The malware runs in an emulator only after its VMA checks are patched.

(b) Programmed Interaction Detection (PID): Android malware is an event-driven application that needs a particular series of user interactions to launch its malicious actions. Dynamic analysis therefore requires a running environment that provides user/gesture interaction. PID evasion is thus closely tied to the code coverage problem: malicious code that is triggered only by specific interactions may never be exercised. Some researchers have tried to address code coverage; however, detecting PID evasion remains a challenge.
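
A VMA check of the kind attributed to Android.obad can be sketched as a comparison of build properties against known emulator values. In Java the value would be read from the `android.os.Build.MODEL` field; to keep this Python sketch self-contained, the properties are passed in as a dictionary. The marker values (`sdk`, `google_sdk`, `Android SDK built for x86`, and a `FINGERPRINT` starting with `generic`) are assumptions based on values commonly reported for stock emulator images, not an exhaustive list, and the device properties are made up.

```python
# Assumed emulator MODEL values; real checks combine many more signals.
EMULATOR_MODELS = {"sdk", "google_sdk", "Android SDK built for x86"}

def looks_like_emulator(build: dict) -> bool:
    """Compare build properties (as read from android.os.Build) with emulator markers."""
    model = build.get("MODEL", "")
    fingerprint = build.get("FINGERPRINT", "")
    return model in EMULATOR_MODELS or fingerprint.startswith("generic")

def decide(build: dict) -> str:
    # An emulator-aware sample exits inside a sandbox and runs only on real devices.
    return "exit" if looks_like_emulator(build) else "run"

emulator = {"MODEL": "Android SDK built for x86", "FINGERPRINT": "generic_x86/sdk_x86"}
phone = {"MODEL": "Phone X", "FINGERPRINT": "acme/phonex/phonex:12/release-keys"}
print(decide(emulator), decide(phone))  # exit run
```

In these terms, patching the VMA check amounts to forcing `looks_like_emulator` to return `False`, which is what analysts must do before the sample reveals its behaviour in an emulator.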

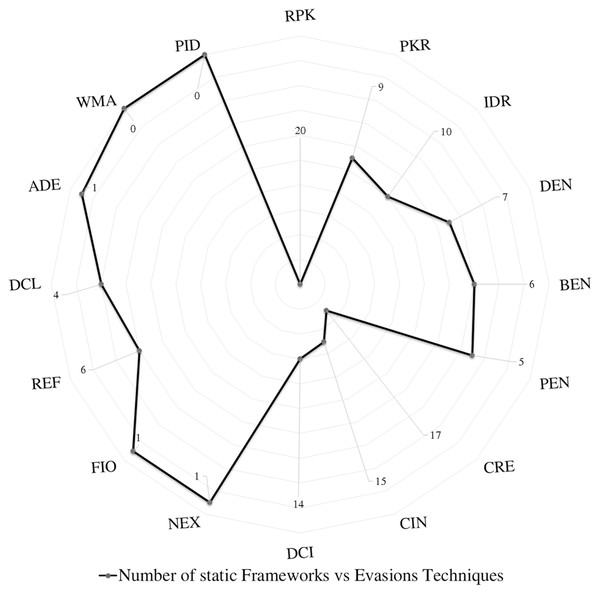

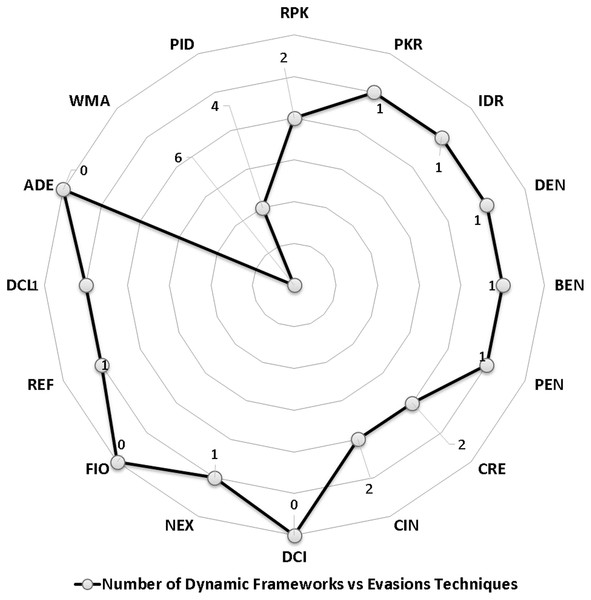

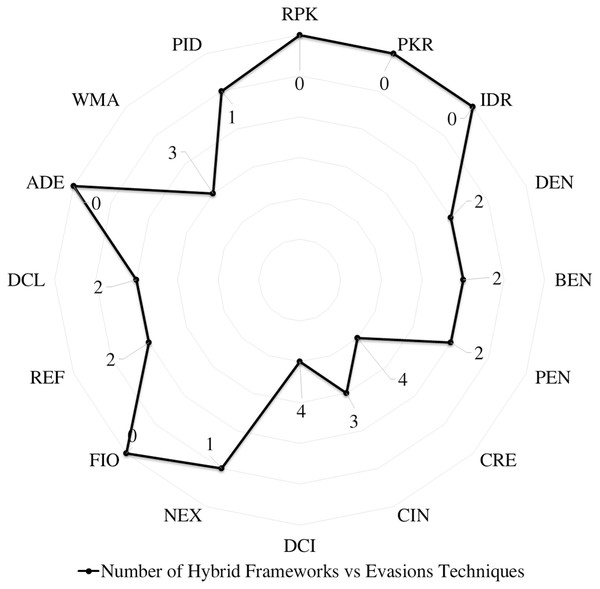

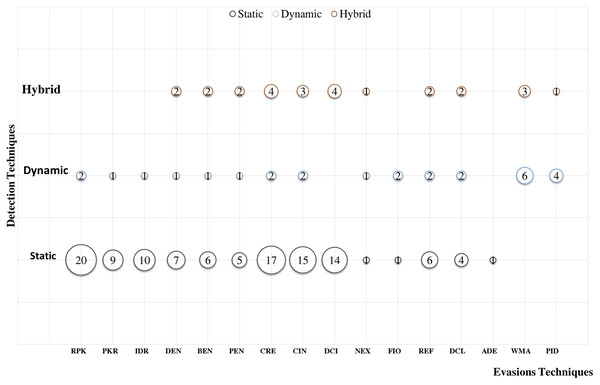

We scrutinize the top Android malware detection frameworks against the two main evasion categories, based on the definitions of Android malware evasion techniques introduced above. The first category is polymorphism, which consists of package transformation and encryption transformation. Package transformation includes Repacking (RPK), Package Renaming (PKR), and Identifier Renaming (IDR). Encryption transformation includes Data Encryption (DEN), Bytecode Encryption (BEN), and Payload Encryption (PEN). The second category is metamorphism, whose subcategories are code obfuscation, advanced code transformation, and anti-emulation transformation. The code obfuscation subcategory includes Code Reordering (CRE), Call Indirection (CIN), and Dead Code Insertion (DCI). Advanced code transformation includes Native Exploits (NEX), Function Inlining and Outlining (FIO), Reflection API (REF), Dynamic Code Loading/Modification (DCL/DCM), and Anti-debugging (ADE) evasion techniques. Last but not least, anti-emulation transformation includes Virtual Machine Aware (VMA) and Programmed Interaction Detection (PID).

Android evasion detection frameworks

Many researchers (Apvrille & Apvrille, 2015; Bagheri et al., 2015; Battista et al., 2016; Chenxiong et al., 2015; Elish et al., 2015; Fratantonio et al., 2016; Gonzalez, Stakhanova & Ghorbani, 2014; Gurulian et al., 2016; Kuhnel, Smieschek & Meyer, 2015; Lei et al., 2015; Li et al., 2016; Martín, Menéndez & Camacho, 2016; Preda & Maggi, 2016; Sheen, Anitha & Natarajan, 2015; Shen et al., 2015; Sun, Li & Lui, 2015; Wang et al., 2016; Wu et al., 2016; Zhang, Breitinger & Baggili, 2016) examine their frameworks against different evasion techniques and take countermeasures against the evasions that prevent anti-malware frameworks from detecting malicious applications. These evasions are the leading cause of false negatives, as they allow many malware applications to penetrate Android smart devices freely. This section investigates the latest frameworks that take different approaches to detecting evasion techniques robustly, with the aim of identifying the gaps in this area of research. We also review the evasion test benches and tools that researchers and commercial enterprises use to secure their code. We review the latest detection frameworks and their resilience against five evasion categories and 16 subcategories; of the reviewed frameworks, 56% are static analysis, 28% dynamic, and 16% hybrid frameworks.

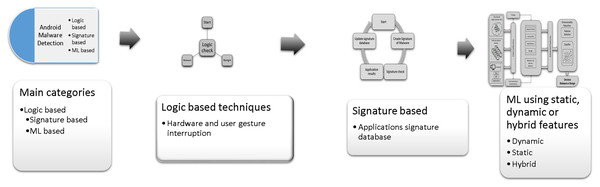

Android malware detection techniques

There are three leading categories of Android malware detection techniques, as presented in Fig. 3. The first category is logic-based techniques (Lee et al., 2014; Zhang, She & Qian, 2015a), which rely on hard-coded safe lists and predefined alarms stored in text files or a small database, such as that of Amamra (Amamra, Robert & Talhi, 2015). The second category is signature-based malware detection techniques (Niazi et al., 2015; Tchakounté et al., 2021), which detect malware by comparing a suspicious application with known malware signatures. The third category uses machine learning (ML) classification algorithms to classify an application as benign or malware (Afonso et al., 2015; Alzaylaee, Yerima & Sezer, 2016; Amamra, Robert & Talhi, 2015; Baskaran & Ralescu, 2016; Canfora et al., 2016; Canfora et al., 2015c; Castellanos et al., 2016; Faruki et al., 2015a; Feizollah et al., 2015; Fratantonio et al., 2016; Kurniawan, Rosmansyah & Dabarsyah, 2015; Lei et al., 2015; Lindorfer, Neugschwandtner & Platzer, 2015; Lopez & Cadavid, 2016; Meng et al., 2016; Nissim et al., 2016; Spreitzenbarth et al., 2015; Spreitzer et al., 2016; Wang & Wu, 2015; Wu et al., 2016; Xu et al., 2016; Yerima, Sezer & Muttik, 2014; Yuan, Lu & Xue, 2016; Zhang, Breitinger & Baggili, 2016). ML-based techniques extract features that represent the characteristics of an Android application, such as its permissions, the code hierarchy recovered by reverse engineering, or its behaviour monitored at run time. The features result from static, dynamic, or hybrid analysis of Android applications and are used to build a machine learning classification model that decides whether an application is malware or benign.

Figure 3: The main categories of Android malware detection techniques.

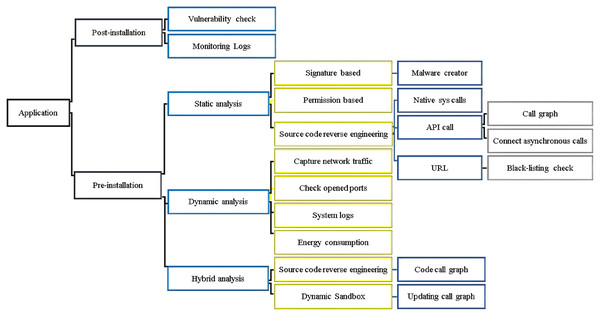

Fig. 4 classifies Android malware detection methodologies from a different point of view, dividing them into post-installation and pre-installation methods.

Figure 4: Taxonomy of Android malware detection methodologies.

Post-installation detection

Post-installation methods check Android for vulnerabilities and monitor the system logs after the application has been installed; they then report security issues and malicious activity to the end users.

(a) Vulnerability Check: The vulnerability check method scans all existing Android apps and Android system versions against common security threats. APSET (Salva & Zafimiharisoa, 2015) collects vulnerability patterns and uses a test-case execution framework for Android applications that captures the exceptions raised. Using more vulnerability patterns, or generating more test cases per pattern, improves APSET's malware detection performance.

(b) Monitoring Logs: This method relies on process monitoring and network monitoring tools. Mobile-Sandbox (Spreitzenbarth et al., 2015) uses a process trace monitoring tool and a PCAP network monitoring tool to capture the data required for analyzing Android applications.

Pre-installation detection

Android malware detection frameworks perform static, dynamic, or hybrid analysis to extract features for malware detection techniques, which classify apps as benign or malware. Hence, we identify the following application analysis methodologies.

Static analysis

Static analysis reverse engineers the APK without installing it; the analysis requires reading configuration settings, decompiling the executable bytecode, and extracting the source code for further analysis.
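
The first step of static analysis, opening the APK without installing it, can be sketched with Python's standard `zipfile` module, since an APK is a ZIP archive. The sketch lists the archive entries and checks that each `classes*.dex` file starts with the DEX magic bytes (`dex\n`, a three-digit version, and a NUL byte). Decoding the binary `AndroidManifest.xml` and decompiling the bytecode require dedicated tools and are out of scope; the in-memory archive is a placeholder, not a real APK.

```python
import io
import re
import zipfile

DEX_MAGIC = re.compile(rb"dex\n\d{3}\x00")

def inspect_apk(apk_bytes: bytes) -> dict:
    """Return basic static facts about an APK without installing it."""
    with zipfile.ZipFile(io.BytesIO(apk_bytes)) as zf:
        names = zf.namelist()
        dex_files = [n for n in names if re.fullmatch(r"classes\d*\.dex", n)]
        valid_dex = {n: bool(DEX_MAGIC.match(zf.read(n)[:8])) for n in dex_files}
    return {
        "has_manifest": "AndroidManifest.xml" in names,
        "dex_files": dex_files,
        "valid_dex": valid_dex,
    }

# Placeholder archive standing in for a real APK.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("AndroidManifest.xml", b"\x03\x00\x08\x00")  # binary XML stub
    zf.writestr("classes.dex", b"dex\n035\x00" + b"\x00" * 16)
    zf.writestr("classes2.dex", b"not a dex file")

print(inspect_apk(buf.getvalue()))
```

Several of the evasions described earlier (BEN, PEN, DCL/DCM) target exactly this stage: the archive can look well formed while the code that matters is encrypted or absent.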

(a) Signature-based: This paper classifies the signature-based method under static analysis because the signature-based detection approach builds its frameworks on static Android application characteristics. For example, DroidAnalytics (Zheng, Sun & Lui, 2013b) dynamically collects malware, creates a signature for each malware sample, and stores the signatures in a central database. This model has the limitation that each new malware family variant needs a different signature. LimonDroid (Tchakounté et al., 2021) proposed a database of Android malware signatures based on fuzzy hashing; it is a signature database for research purposes rather than a malware detection framework.

(b) Permission-based: APK Auditor (Talha, Alper & Aydin, 2015) is a static model that performs permission-based detection after decompressing the APK package; it extracts malicious symptoms using permission and signature matching analysis. Likewise, Triggerscope (Fratantonio et al., 2016) uses permission characteristics as input to classify applications using different machine learning algorithms (Abdulla & Altaher, 2015; Alazab et al., 2020; Arora, Peddoju & Conti, 2019; Dharmalingam & Palanisamy, 2021; Fang, Han & Li, 2014; Glodek & Harang, 2013; Li et al., 2018; Niazi et al., 2015; Şahin et al., 2021; Shalaginov & Franke, 2014; Talha, Alper & Aydin, 2015; Tiwari & Shukla, 2018).

(c) Source code-based analysis: Arp et al. (2015) extract features from the application's AndroidManifest file and source code, scrutinizing the code by listing native calls, API calls, and URL addresses, and use machine learning classification to discriminate between malware and benign apps. Likewise, DroidMat (Wu et al., 2012) uses the configuration file to obtain the permissions requested by the APK and counts the methods with API calls in the decompiled source code; evaluated on 1,500 benign and 238 malware applications, it achieves 97.87% accuracy. Lei et al. (2015) proposed a probabilistic discriminative model based on decompiled source code and permissions, which classifies apps as benign or malware using machine learning classification techniques. Hanna et al. (2013) sought code similarity among Android applications to detect similar code patterns with the same vulnerabilities, as well as repackaged or cloned applications in Android markets.

Dynamic analysis

Dynamic analysis is the process of running the suspect app in an isolated Android environment. It starts by receiving the Android application's APK file, either through an online scanning portal such as VirusTotal (Google) or through a scanning agent on an Android smartphone or device. Next, a suitable Android operating environment is opened on a physical device or emulator; we hereafter refer to it as a sandbox. The sandbox isolates the application to protect the analysis device from possible malicious attacks. The dynamic analysis then starts the system logging and network monitoring tools and captures the default system logs.

Once the sandbox and the logging and monitoring tools are ready, the APK is installed, and once the installation succeeds, the logging system captures all system logs. Dynamic analysis requires the application to start and run all of its code, capturing all changes to the Android system environment. The sandbox captures the system logs before installing the application and compares them with the logs captured after installing and running the suspect application. The sandbox uses the monkeyrunner tool to emulate user gestures randomly, aiming to cover as much of the application's code as possible. Dynamic analysis sandboxing techniques install and run Android applications in a virtual environment, emulator, or physical device and monitor the application's behaviour, including network traffic, opened ports, and system calls. One of the main issues during the monitoring process is the user interaction simulation tool, which must simulate gestures that cover all possible interactions. The types of sandboxing are as follows.

Sandbox emulator: Most researchers (Afonso et al., 2015; Desnos & Lantz, 2014; Faruki et al., 2015a; Spreitzenbarth et al., 2015) use Android emulators, such as Droidbox (Desnos & Lantz, 2014), TaintDroid (Chao et al., 2020), and CuckooDroid (Check Point Software Technologies, 2015), which run an Android image as a virtual machine. After each analysis, the framework destroys the used OS image and prepares a factory-reset Android OS for the next analysis.

Physical sandbox device: The dynamic analysis algorithm resets the physical device to factory settings to ensure that the analysis captures only the suspected application's behaviour. This approach overcomes the limitations of emulators by analyzing suspicious applications dynamically on physical devices (Shrestha et al., 2015).

Android malware dynamic analysis faces several challenges. Some malware families evade the dynamic analysis environment by delaying the malicious download until the monitoring period has ended. The sandbox environment suffers from the computational time required to load the Android operating system, create log files, install the APK, capture system logs and network traffic, and copy the log files to turn them into usable characteristics. Emulating user gestures with Android tools such as monkeyrunner is imprecise and covers only part of an application's code. Emulating phone calls, SMS, GPS, and NFC hardware is another challenge, as the emulated hardware is not as realistic as a physical device. Because the emulator is killed at the end of the analysis time, a new emulator instance must be launched for every app analysis. These challenges limit the effectiveness of dynamic analysis for malware detection. Some studies have considered dynamic analysis to overcome the limitations of static analysis (Afonso et al., 2015; Amos, Turner & White, 2013; Desnos & Lantz, 2014; Enck et al., 2014a, 2014b; Lindorfer, Neugschwandtner & Platzer, 2015; Spreitzenbarth et al., 2015; Wang & Shieh, 2015; Zhao et al., 2014).

Hybrid analysis

Hybrid detection frameworks, such as Mobile-Sandbox (Spreitzenbarth et al., 2015), Droiddetector (Yuan, Lu & Xue, 2016), and Andro-Dumpsys (Jang et al., 2016), combine dynamic and static analysis techniques to overcome the limitations of static analysis. Hybrid analysis extracts static features using reverse engineering techniques (Lim et al., 2016); static features include app permissions, code analysis results, intents, network addresses, strings, and hardware features. It also extracts dynamic features of the application by capturing network traffic, system calls, user interaction, and system components using sandbox methodologies. It then combines a group of static and dynamic features and feeds them to machine learning algorithms that classify the application as benign or malware.

Android malware dataset

Most Android malware detection frameworks adopt machine learning algorithms to build a detection model; hence, researchers crawl apps from the official app store, Google Play, to build their datasets (Arp et al., 2015; Parkour, 2013; Yajin & Xuxian, 2012). They also crawl sample applications from third-party application stores, such as Soc.io Mall, Samsung Galaxy Apps, SlideME, AppsLib, GetJar, Mobango, Opera Mobile Store, Amazon Appstore, and 1Mobile. To label the crawled applications as benign or malware, researchers employ the online security scanning tools listed in Table 3. For instance, online services such as VirusTotal and AndroTotal are used to scan the crawled apps and to cluster the detected malware apps into malware families; researchers label all crawled apps using VirusTotal to build Android malware detection datasets. Many of these datasets have been published for academic research, such as Drebin (Arp et al., 2015), Genome (Yajin & Xuxian, 2012), Kharon (Kiss et al., 2016), AMD (Li et al., 2017), AAGM (Lashkari et al., 2017), PRAGuard (Maiorca et al., 2015), and AndroZoo (Allix et al., 2016).

| Online security scanning service | URL | Started | Scanning rate (app/day) | Services | License |
|---|---|---|---|---|---|
| VirusTotal (Google, 2011) | https://www.virustotal.com | 2011 | Ignored | Web/API | Free |
| AndroTotal (Maggi, Valdi & Zanero, 2013), Droydseuss (Coletta, Van der Veen & Maggi, 2016) | https://andrototal.org/, http://droydseuss.com | 2013 | Ignored | Web | Free |
| ANDRUBIS (Lindorfer et al., 2014) | https://anubis.iseclab.org (commercialized to https://www.lastline.com/) | 2012 | 3,500 | API | Free/discontinued; paid only |
| APK Auditor (Talha, Alper & Aydin, 2015) | http://app.ibu.edu.tr:8080/apkinspectoradmin | 2015 | Ignored | Web | Discontinued |
| NVISO (Hoffmann et al., 2016) | https://apkscan.nviso.be/ | – | 2,400 | Web/API | Free/Pro |
| Copperdroid | http://copperdroid.isg.rhul.ac.uk/copperdroid/ | 2015 | NA | Web | NA |
| Totalhash | https://totalhash.cymru.com | 10 | Web/API | Commercial |
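
The labelling step described above is often implemented as a threshold on the number of antivirus engines that flag a sample. The Python sketch below assumes the scan reports have already been retrieved and reduced to a per-app count of positive engines; the threshold values and the rule of discarding ambiguous apps are hypothetical choices, since studies differ on where to draw the line, and no VirusTotal API call is shown.

```python
def label_apps(detections, malware_min=5, benign_max=0):
    """Label apps from antivirus detection counts.

    detections: mapping of app id -> number of engines flagging it.
    Apps between the two thresholds are treated as ambiguous and dropped.
    """
    labels = {}
    for app, positives in detections.items():
        if positives >= malware_min:
            labels[app] = "malware"
        elif positives <= benign_max:
            labels[app] = "benign"
    return labels

scan_counts = {"app_a": 0, "app_b": 2, "app_c": 31}  # made-up counts
print(label_apps(scan_counts))  # {'app_a': 'benign', 'app_c': 'malware'}
```

Dropping the ambiguous middle band trades dataset size for label quality; the chosen thresholds directly affect the ground truth on which every reported accuracy depends.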

Machine learning in android malware detection

Based on the collected characteristics, or so-called features (Feizollah et al., 2015), different machine learning classification techniques classify an APK as benign or malware. A deep treatment of machine learning techniques is outside the scope of this study, so we only briefly explain the evaluation measures of ML classification. Android malware detection usually classifies Android apps into two classes, benign and malware; however, some papers that detect Android ransomware (Andronio, Zanero & Maggi, 2015; Maiorca et al., 2017) consider three classes: benign, malware, and ransomware. Machine learning comprises three main categories, namely supervised, unsupervised, and reinforcement learning.

(a) Supervised Model:

Supervised machine learning bases its model on a labelled dataset. The framework splits the dataset into two subsets: the first is used to train the classification model, and the second to test and validate it. Most researchers split the data into 70% training and 30% testing subsets, but some use 50% for training and 50% for testing (Adebayo & AbdulAziz, 2014).

(b) Unsupervised Model:

In the unsupervised model, apps are unlabelled. The unsupervised model groups applications without knowing in advance which app is malware or benign. Researchers use unsupervised models to learn hidden patterns in unlabelled data (Akpojaro, Aigbe & Onwudebelu, 2014; Kohout & Pevny, 2015; Tang, Sethumadhavan & Stolfo, 2014).

(c) Reinforcement Learning:

In reinforcement learning, an agent interacts with an environment and trains itself continually through trial and error. It learns from experience and tries to capture the best possible knowledge to make accurate decisions. Reinforcement learning problems are commonly formalized as Markov Decision Processes (Kaelbling, Littman & Moore, 1996).
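
The 70/30 split used with supervised models can be written in a few lines of Python. This sketch shuffles the labelled samples with a fixed seed for reproducibility and splits each class separately (stratification), so that benign and malware apps appear in both subsets in roughly their original proportions; stratification and the seed value are our additions and are not required by the text. The dataset is made up.

```python
import random

def stratified_split(samples, train_frac=0.7, seed=42):
    """Split (app_id, label) pairs per class into train and test lists."""
    rng = random.Random(seed)
    by_label = {}
    for sample in samples:
        by_label.setdefault(sample[1], []).append(sample)
    train, test = [], []
    for group in by_label.values():
        rng.shuffle(group)
        cut = round(len(group) * train_frac)
        train.extend(group[:cut])
        test.extend(group[cut:])
    return train, test

# Made-up dataset: 30 malware and 70 benign apps.
data = [(f"app{i}", "malware" if i < 30 else "benign") for i in range(100)]
train, test = stratified_split(data)
print(len(train), len(test))  # 70 30
```

Without stratification, a small or imbalanced dataset can end up with very few malware samples in the test subset, which distorts the measures introduced next.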

To assess the classification performance of a supervised model, ML uses the confusion matrix (Table 4) to calculate the performance measures. Let D be the total number of test apps used to examine the performance of a supervised ML model that classifies apps as benign or malware, let M be the number of malware samples, and let B be the number of benign samples, so that D = M + B.

True Positive (TP) represents the number of malware apps correctly classified as malware.

False Positive (FP) accounts for the number of benign apps classified erroneously as malware.

True Negative (TN) represents the number of correctly classified benign apps.

False Negative (FN) accounts for the number of malware apps classified erroneously as benign.

The ML performance measures quantify the classification performance of Android malware detection frameworks. Table 5 lists the ML performance measure formulas and their direct mathematical relation to the confusion matrix.

| Performance measure | Short form | Formula | Description |
|---|---|---|---|
| Recall or Sensitivity | TPR | $\frac{TP}{TP+FN}$ | True Positive Rate |
| Miss rate | FNR | $\frac{FN}{FN+TP}$ | False Negative Rate |
| Fall-out | FPR | $\frac{FP}{FP+TN}$ | False Positive Rate |
| Specificity | TNR | $\frac{TN}{TN+FP}$ | True Negative Rate |
| Precision | PPV | $\frac{TP}{TP+FP}$ | Positive Predictive Value |
| False Discovery Rate | FDR | $\frac{FP}{FP+TP}$ | False Discovery Rate |
| False Omission Rate | FOR | $\frac{FN}{FN+TN}$ | False Omission Rate |
| Negative Predictive Value | NPV | $\frac{TN}{TN+FN}$ | Negative Predictive Value |
| Accuracy | ACC | $\frac{TP+TN}{TP+TN+FP+FN}$ | Correctly classified apps over all examined apps |
| F-measure | F1 | $\frac{2 \cdot PPV \cdot TPR}{PPV+TPR}$ | The harmonic mean of precision and sensitivity |
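
The measures in Table 5 translate directly into code. The Python sketch below computes them from the four confusion-matrix counts; the example counts are made up (M = TP + FN = 100 malware and B = TN + FP = 100 benign test apps) and only serve to show the arithmetic. Division by zero is not guarded, so every denominator is assumed to be non-zero.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int
    fp: int
    tn: int
    fn: int

    def measures(self) -> dict:
        """Compute the Table 5 measures from the confusion-matrix counts."""
        tpr = self.tp / (self.tp + self.fn)
        ppv = self.tp / (self.tp + self.fp)
        return {
            "TPR": tpr,
            "FNR": self.fn / (self.fn + self.tp),
            "FPR": self.fp / (self.fp + self.tn),
            "TNR": self.tn / (self.tn + self.fp),
            "PPV": ppv,
            "FDR": self.fp / (self.fp + self.tp),
            "FOR": self.fn / (self.fn + self.tn),
            "NPV": self.tn / (self.tn + self.fn),
            "ACC": (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn),
            "F1": 2 * ppv * tpr / (ppv + tpr),
        }

# Made-up counts: M = 90 + 10 = 100 malware, B = 95 + 5 = 100 benign.
cm = Confusion(tp=90, fp=5, tn=95, fn=10)
for name, value in cm.measures().items():
    print(f"{name}: {value:.3f}")
```

The pairs TPR/FNR, FPR/TNR, PPV/FDR, and FOR/NPV each sum to 1, and F1 simplifies to 2TP / (2TP + FP + FN), which is a convenient sanity check on reported figures.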

The Receiver Operating Characteristic (ROC) curve plots the TPR (y-axis) against the FPR (x-axis). Each point on the ROC curve corresponds to one confusion matrix, that is, to the TP and FP counts obtained at one decision threshold. The Area Under the Curve (AUC) is the area under the ROC curve and summarizes the trained ML model's performance across all thresholds (Afifi et al., 2016; Baskaran & Ralescu, 2016; Feizollah et al., 2015).
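
To make the ROC construction concrete: lowering a decision threshold over the classifier's scores one sample at a time yields one confusion matrix, and hence one (FPR, TPR) point, per threshold, and the AUC can then be computed with the trapezoidal rule. The scores and labels below are made up (1 = malware, 0 = benign), and tied scores are not specially handled in this sketch.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points obtained by lowering the threshold score by score."""
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    for _, label in ranked:
        if label == 1:
            tp += 1
        else:
            fp += 1
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Trapezoidal area under a piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.20]
labels = [1, 1, 0, 1, 0, 0]
print(round(auc(roc_points(scores, labels)), 3))  # 0.889
```

The result equals the fraction of malware/benign pairs in which the malware sample receives the higher score (8 of 9 here), which is a common interpretation of AUC.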

Evasion test benches tools

Researchers or commercial companies have developed the evasion test benches to study the robustness of the currently available anti-malware applications or protect their software packages from piracy issues. The first test benches trials were ADAM (Zheng, Lee & Lui, 2013a) and Droidchameleon Rastogi (Rastogi, Chen & Jiang, 2013), which conclude that there is a detection performance degradation when applying trivial obfuscation techniques. However, researchers developed evasions tools to evaluate commercial anti-malware performance, such as PANDORA (Protsenko & Muller, 2013), Mystique (Meng et al., 2016), AAMO (Preda & Maggi, 2016), ProGuard (Lafortune, 2002), and others as listed in Table 6. Evasion tools were initially aiming to protect commercial software companies’ applications from piracy, such as DexGuard (GuardSquare, 2014), which is an extension of ProGuard (Lafortune, 2002), and Klassmaster (Klassmaster, 2013). Recently, a pretty good number of researchers develop frameworks targeting obfuscation and malware variant resiliency. PetaDroid (Karbab & Debbabi, 2021) introduces the severe first obfuscation dataset, which is a good initial. However, it proves that the accuracy degrades with time and needs malware variant and obfuscation adaptations. Dynamic analysis frameworks (Chen et al., 2018; Cho, Yi & Ahn, 2018; De Lorenzo et al., 2020; Feng et al., 2018; Sihag et al., 2021; Xue et al., 2017) declare the ability to detect all types of obfuscated malware; however, most of it misses the evaluation report of each obfuscation technique using obfuscated malware datasets. Researchers who evaluated their framework against particular evasions are identified by mentioning the detected evasion, which represents that the respective study either evaluated or presumed its ability to detect the evasion technique, while “Failed to detected or ignored” means the respective study is defeated the corresponding evasion technique. 
A starred (*) cell indicates that the framework ignores evaluation experiments on, or assumptions about, this evasion technique, or that the study does not evaluate its framework's performance against it.

Column groups: Polymorphism comprises package transformation (RPK, PKR, IDR) and encryption (DEN, BEN, PEN); Metamorphism comprises code obfuscation (CRE, CIN, DCI), advanced code transformation (NEX, FIO, REF, DCL/DCM), and anti-emulator (ADE, VMA, PID).

| Framework | RPK | PKR | IDR | DEN | BEN | PEN | CRE | CIN | DCI | NEX | FIO | REF | DCL/DCM | ADE | VMA | PID |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ADAM (Zheng, Lee & Lui, 2013a) | ✓ | * | * | ✓ | * | * | ✓ | * | ✓ | * | * | * | * | * | * | * |
| DroidChameleon (Rastogi, Chen & Jiang, 2013) | ✓ | * | * | * | * | * | ✓ | * | * | * | * | ✓ | * | * | * | * |
| ProGuard (Lafortune, 2002) | * | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | * | * | * | * | * |
| DexGuard (GuardSquare, 2014) | * | * | * | ✓ | * | * | ✓ | ✓ | * | * | * | * | * | * | * | * |
| Klassmaster (Klassmaster, 2013) | * | * | * | ✓ | ✓ | * | ✓ | ✓ | * | * | * | * | * | * | * | * |
| Maiorca (Maiorca et al., 2015) | ✓ | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | ✓ | * | * | * | * |
| Vidas (Vidas & Christin, 2014) | * | * | * | * | * | * | * | * | * | * | * | * | * | * | ✓ | * |
| Petsas (Petsas et al., 2014) | * | * | * | * | * | * | * | * | * | * | * | * | * | * | ✓ | * |
| Morpheus (Jing et al., 2014) | * | * | * | * | * | * | * | * | * | * | * | * | * | * | ✓ | * |
| Garcia (Garcia et al., 2015) | * | ✓ | * | ✓ | ✓ | * | * | ✓ | * | * | * | * | * | * | * | * |
| DroidSieve (Suarez-Tangil et al., 2017) | * | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | ✓ | ✓ | * | * | * |
| MysteryChecker (Jeong et al., 2014) | ✓ | * | * | * | ✓ | ✓ | ✓ | ✓ | * | * | * | * | * | * | * | * |
| PANDORA (Protsenko & Muller, 2013) | * | * | * | ✓ | * | * | * | * | * | * | ✓ | ✓ | * | * | * | * |
| Mystique (Meng et al., 2016) | * | * | ✓ | ✓ | * | * | * | * | * | * | ✓ | * | * | * | * | * |
| Canfora (Canfora et al., 2015b) | ✓ | ✓ | ✓ | ✓ | * | * | ✓ | * | ✓ | * | * | * | * | * | * | * |
| Hatwar (Hatwar & Shelke, 2014) | * | * | * | * | * | * | * | * | * | * | * | * | ✓ | * | * | * |
| AAMO (Preda & Maggi, 2016) | ✓ | ✓ | * | * | ✓ | * | ✓ | ✓ | ✓ | * | ✓ | ✓ | * | ✓ | * | * |
| Abaid (Abaid, Kaafar & Jha, 2017) | * | * | * | * | * | * | * | * | * | * | * | * | ✓ | * | * | * |
| EnDroid (Feng et al., 2018) | * | * | * | * | * | * | * | * | * | * | * | ✓ | ✓ | * | * | * |
| Bacci (Bacci et al., 2018) | ✓ | ✓ | ✓ | ✓ | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | * | * |
| DexMonitor (Cho, Yi & Ahn, 2018) | * | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | * | * | * | * | * |
| Kim (Kim et al., 2019) | * | ✓ | ✓ | ✓ | * | * | * | ✓ | ✓ | * | * | * | * | * | * | * |
| DAMBA (Zhang et al., 2020) | * | * | * | ✓ | ✓ | ✓ | * | ✓ | * | * | * | * | ✓ | * | * | * |
| IMCFN (Vasan et al., 2020) | ✓ | ✓ | ✓ | ✓ | * | * | ✓ | * | ✓ | * | * | * | * | * | * | * |
| PetaDroid (Karbab & Debbabi, 2021) | ✓ | ✓ | ✓ | ✓ | * | ✓ | ✓ | ✓ | ✓ | * | * | ✓ | * | * | * | * |
| BLADE (Sihag, Vardhan & Singh, 2021b) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | * | * | * | * | * | * | * | * | * |
| DANDroid (Millar et al., 2020) | * | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | * | * | * | * | * |
| AndrODet (Mirzaei et al., 2019) | ✓ | ✓ | ✓ | ✓ | * | * | * | ✓ | * | * | * | * | * | * | * | * |
| Dadidroid (Ikram, Beaume & Kâafar, 2019) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | * | ✓ | * | * | * | * | * | * | * | * |
| Obfusifier (Li et al., 2019) | ✓ | ✓ | ✓ | * | * | * | ✓ | ✓ | ✓ | * | * | * | * | * | * | * |

Note:

RPK, Repacking; PKR, Package Renaming; IDR, Identifier Renaming; DEN, Data Encryption; BEN, Bytecode Encryption; PEN, Payload Encryption; CRE, Code Reordering; CIN, Call Indirections; DCI, Dead Code Insertion; NEX, Native Exploits; FIO, Function Inlining and Outlining; REF, API Reflection; DCL/DCM, Dynamic Code Loading/Modification; ADE, Anti-debugging; VMA, Virtual Machine Aware; PID, Programmed Interaction Detection.
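Most dynamic analysis frameworks, as noted above, do not report results for each obfuscation technique separately. As a minimal sketch of such a per-technique report, the Python function below groups a detector's outcomes on obfuscated malware samples by evasion code and computes the detection rate (TPR) for each. The input format and the sample outcomes are hypothetical; only the evasion abbreviations come from the table.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def detection_rate_by_technique(
    results: Iterable[Tuple[str, bool]],
) -> Dict[str, float]:
    """Per-technique detection rate (TPR) on obfuscated malware samples.

    results: (evasion code, detected) pairs, where the evasion code is one of
    the table abbreviations (e.g. "IDR", "REF") and detected is True when the
    framework flagged the obfuscated malware sample.
    """
    total: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for technique, detected in results:
        total[technique] += 1
        hits[technique] += int(detected)
    # Sorted keys give a stable report order.
    return {t: hits[t] / total[t] for t in sorted(total)}


# Hypothetical outcomes for six obfuscated malware samples.
results = [("IDR", True), ("IDR", True), ("REF", False),
           ("REF", True), ("BEN", False), ("BEN", False)]
for technique, rate in detection_rate_by_technique(results).items():
    print(f"{technique}: {rate:.0%}")
```

Reporting one rate per technique, rather than a single aggregate accuracy, exposes exactly which evasions defeat a framework (here, hypothetically, BEN).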

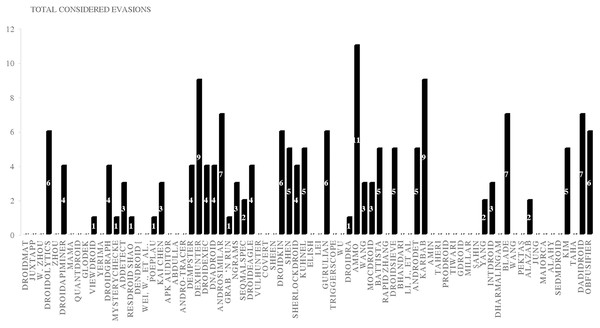

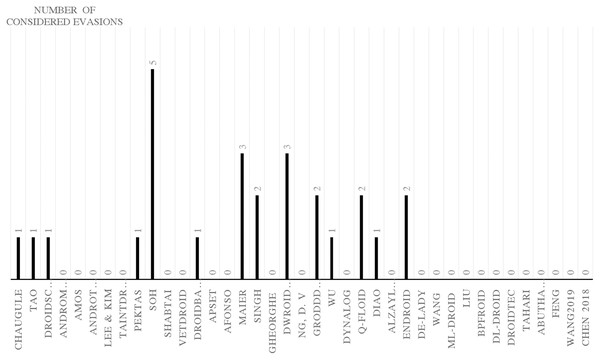

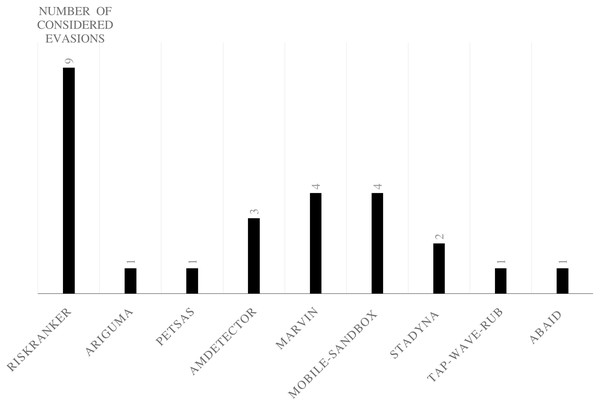

Evaluation of evasion detection frameworks

We have explored the last 10 years of research to evaluate Android malware detection frameworks against the evasion techniques discussed in the Evasion techniques section. We studied Android malware detection frameworks published over the last decade, from 2011 to early 2021, as listed in Table 7. We categorize the malware detection frameworks by analysis methodology: static, dynamic, and hybrid. Metadata analysis is outside the scope of this research. We examined 74 static analysis based frameworks, 35 dynamic analysis based frameworks, and 26 hybrid analysis frameworks, for a total of 135 research papers selected from top-ranked journals and conferences.

Polymorphism evasion detection

We examine static, dynamic, and hybrid analysis frameworks against polymorphism evasions. Table 8 lists static, dynamic, and hybrid analysis based detection frameworks; we scrutinize each framework against polymorphism transformation techniques in two categories: package transformation and encryption transformation. Each framework uses its own datasets of Android malware and benign applications in the evaluation process, each containing a certain number of malware and benign applications. For instance, APK Auditor (Talha, Alper & Aydin, 2015) tested its framework against 6,909 malware and 1,853 benign applications, a total of 8,762 apps that APK Auditor crawled from the Google Play Store and other datasets such as the Genome Project and Contagio. APK Auditor achieved 88% malware detection accuracy. Because it is signature-based, most evasion techniques prevent APK Auditor from detecting malware applications.
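Why exact signatures are fragile can be shown with a deliberately simplified sketch. It assumes that a signature is the SHA-256 digest of a package's raw bytes; this is a stand-in for illustration, not APK Auditor's actual signature scheme, and the byte strings are hypothetical. Any transformation that changes even one byte, such as identifier renaming, produces a new digest, so the variant is missed although its malicious behaviour is untouched.

```python
import hashlib
from typing import Set


def signature(apk_bytes: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of the raw package bytes."""
    return hashlib.sha256(apk_bytes).hexdigest()


def is_known_malware(apk_bytes: bytes, known_bad: Set[str]) -> bool:
    """Flag a package only if its digest is already in the signature database."""
    return signature(apk_bytes) in known_bad


# Hypothetical bytecode fragment of a known malware sample.
original = b"Lcom/evil/Payload;->run() invoke SmsManager.sendTextMessage"
known_bad = {signature(original)}

# Identifier renaming leaves the behaviour unchanged but alters the bytes.
variant = original.replace(b"Lcom/evil/Payload;", b"La/a/a;")

print(is_known_malware(original, known_bad))  # True
print(is_known_malware(variant, known_bad))   # False
```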

(a) Package Transformation:

– RPK - Repacking Evasion Detection:

Repacking evasion can be detected using static analysis techniques. Dempster–Shafer (Du, Wang & Wang, 2015) investigates repacking characteristics using a control flow graph and claims better resistance to code obfuscation techniques. Likewise, DroidGraph (Kwon et al., 2014) used hierarchical class levels to map repackaged malicious code to the original payload; it also considered API calls, junk code, and code obfuscation, and it reduced code comparison time compared with the time-consuming polynomial-time comparison of native call graphs. However, reflection successfully evades detection frameworks that rely on control flow graphs. Other static detection approaches, such as MysteryChecker (Jeong et al., 2014), AnDarwin (Crussell, Gibler & Chen, 2015), AndroSimilar (Faruki et al., 2015d), ngrams (Canfora et al., 2015a), DroidEagle (Sun, Li & Lui, 2015), DroidKin (Gonzalez, Stakhanova & Ghorbani, 2014), DroidOlytics (Faruki et al., 2013), Gurulian (Gurulian et al., 2016), Shen (Shen et al., 2015), and AAMO (Preda & Maggi, 2016), have indicated their ability to detect RPK evasions. While studying dynamic analysis papers, we noticed that most dynamic studies pay little attention to this evasion type; only Soh et al. (2015) and Wu et al. (2015) reported that their frameworks could detect RPK evasion, as illustrated in Table 8. The study spotted 20 papers that scrutinized RPK evasion using static analysis, and only two papers that scrutinized RPK using dynamic analysis.
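Many of the static approaches above compare a suspect app with known originals. As a minimal sketch of one such comparison, in the spirit of opcode n-gram approaches but not reproducing any cited framework, the code below measures the Jaccard similarity between the opcode trigram sets of two apps. The opcode sequences and the 0.5 threshold are hypothetical.

```python
from typing import List, Set, Tuple


def opcode_ngrams(opcodes: List[str], n: int = 3) -> Set[Tuple[str, ...]]:
    """All contiguous opcode n-grams of a method or app."""
    return {tuple(opcodes[i:i + n]) for i in range(len(opcodes) - n + 1)}


def jaccard(a: Set, b: Set) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 1.0 for two empty sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical opcode sequences.
benign_app = ["const-string", "invoke-virtual", "move-result",
              "if-eqz", "return-void"]
# Repackaged copy: the same benign code plus an injected payload.
repackaged = benign_app + ["invoke-static", "return-void"]
unrelated = ["new-instance", "invoke-direct", "iput", "return-void"]

SIMILARITY_THRESHOLD = 0.5  # hypothetical cut-off
base = opcode_ngrams(benign_app)
for name, ops in [("repackaged", repackaged), ("unrelated", unrelated)]:
    score = jaccard(base, opcode_ngrams(ops))
    print(name, round(score, 2), score >= SIMILARITY_THRESHOLD)
```

A repackaged app keeps most of the original's n-grams, so its similarity stays high (0.6 here) while an unrelated app scores 0.0. Transformations that change instruction order, such as code reordering, disturb these n-grams, which is one reason sequence features alone are not robust.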

– PKR - Package Renaming Detection:

Static analysis frameworks such as DroidOlytics (Faruki et al., 2013) and DroidKin (Gonzalez, Stakhanova & Ghorbani, 2014) examine their capability to detect PKR evasion techniques. However, many other papers insufficiently evaluate their frameworks against PKR, such as APK Auditor (Talha, Alper & Aydin, 2015), DroidGraph (Kwon et al., 2014), Andro-tracer (Kang et al., 2015), Vulhunter (Chenxiong et al., 2015), and COVERT (Bagheri et al., 2015), as presented in Table 8. Dynamic and hybrid analysis studies inadequately examine their robustness against PKR, with one exception: Shen (Shen et al., 2015) highlighted the issue of PKR and its capability to detect it, as per Table 8. The study spotted nine papers that scrutinized PKR evasion using static analysis, and only one paper that scrutinized PKR using dynamic analysis.
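The weakness that PKR exploits can be sketched as follows. The sketch assumes a naive detector keyed on the package name and contrasts it with a name-independent key built from permissions and API calls; the `ApkInfo` structure, the blocklist, and the package names are hypothetical, and neither check reproduces DroidOlytics, DroidKin, or Shen.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class ApkInfo:
    package_name: str
    permissions: FrozenSet[str]
    api_calls: FrozenSet[str]


# Hypothetical blocklist of known malicious package names.
BLOCKED_PACKAGES = {"com.example.fakeplayer"}


def flag_by_package(apk: ApkInfo) -> bool:
    """Naive check keyed on the package name (defeated by PKR)."""
    return apk.package_name in BLOCKED_PACKAGES


def behaviour_key(apk: ApkInfo) -> Tuple[Tuple[str, ...], Tuple[str, ...]]:
    """Name-independent key: ignores the package name entirely."""
    return tuple(sorted(apk.permissions)), tuple(sorted(apk.api_calls))


known = ApkInfo(
    "com.example.fakeplayer",
    frozenset({"android.permission.SEND_SMS"}),
    frozenset({"android.telephony.SmsManager.sendTextMessage"}),
)
# PKR: identical contents re-published under a new package name.
renamed = ApkInfo("org.example.videoplayer", known.permissions, known.api_calls)

print(flag_by_package(known), flag_by_package(renamed))  # True False
print(behaviour_key(renamed) == behaviour_key(known))    # True
```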

– IDR Identifier Renaming Evasion Detection:

DroidOlytics (Faruki et al., 2013), AndroSimilar (Faruki et al., 2015d), DroidKin (Gonzalez, Stakhanova & Ghorbani, 2014), Kuhnel (Kuhnel, Smieschek & Meyer, 2015), Triggerscope (Fratantonio et al., 2016), AAMO (Preda & Maggi, 2016), and Battista (Battista et al., 2016) claim that they can detect IDR evasion using their static Android malware detection frameworks, as presented in Table 8. Nevertheless, many other researchers inadequately evaluate their frameworks' robustness against IDR evasion. Table 8 highlights the problem of assuring the robustness of Android malware detection frameworks against IDR evasion and scrutinizes each framework against IDR evasion techniques.
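One common way to make static features insensitive to IDR is to abstract away app-defined names while keeping framework API names, which ship with the platform rather than the app and therefore cannot be renamed by the obfuscator. The sketch below applies this idea to smali-like instruction strings; the prefix list, the `Lapp;`/`->m` placeholders, and the sample lines are hypothetical, and it is not the method of any framework cited above. Calls hidden behind reflection (REF) remain invisible to it.

```python
import re

# Framework class prefixes (hypothetical, non-exhaustive). These classes come
# from the platform, so renaming tools cannot change their names.
FRAMEWORK_PREFIXES = ("Landroid/", "Landroidx/", "Ljava/", "Ljavax/")

# A smali class reference, optionally followed by "->member".
CLASS_REF = re.compile(r"(L[\w/$]+;)(->[\w$<>]+)?")


def normalize(smali_line: str) -> str:
    """Replace app-defined class and member names with fixed placeholders."""
    def repl(m: "re.Match[str]") -> str:
        cls, member = m.group(1), m.group(2) or ""
        if cls.startswith(FRAMEWORK_PREFIXES):
            return cls + member  # framework names survive renaming
        return "Lapp;" + ("->m" if member else "")
    return CLASS_REF.sub(repl, smali_line)


original = "invoke-virtual {v0, v1}, Lcom/evil/Payload;->sendData(Ljava/lang/String;)V"
renamed = "invoke-virtual {v0, v1}, La/b;->a(Ljava/lang/String;)V"
print(normalize(original))  # invoke-virtual {v0, v1}, Lapp;->m(Ljava/lang/String;)V
print(normalize(original) == normalize(renamed))  # True
```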

In summary, most Android malware detection frameworks based on static analysis can detect package transformation techniques (RPK, PKR, and IDR). However, most detection frameworks based on dynamic and hybrid analysis inadequately evaluate or report their resilience against IDR evasion techniques. The study spotted 20 papers that scrutinized RPK evasion using static analysis, but only 10 papers that scrutinized IDR. It spotted nine papers that scrutinized IDR evasion using static analysis, and only one paper that scrutinized IDR using dynamic analysis.

(b) Encryption Transformation Evasion Detection:

Static analysis can detect encryption evasion techniques; several studies, such as DexHunter (Zhang, Luo & Yin, 2015), DroidKin (Gonzalez, Stakhanova & Ghorbani, 2014), SherlockDroid (Apvrille & Apvrille, 2015), Kuhnel (Kuhnel, Smieschek & Meyer, 2015), and AAMO (Preda & Maggi, 2016), have shown that they detect all three encryption evasions (DEN, BEN, and PEN). Static-based detection studies such as AndroSimilar (Faruki et al., 2015d), MysteryChecker (Jeong et al., 2014), DroidKin (Gonzalez, Stakhanova & Ghorbani, 2014), SherlockDroid (Apvrille & Apvrille, 2015), Kuhnel (Kuhnel, Smieschek & Meyer, 2015), Shen (Shen et al., 2015), and AAMO (Preda & Maggi, 2016) are able to detect DEN evasions. Likewise, Soh (Soh et al., 2015) and Q-floid (Castellanos et al., 2016) claimed robustness against BEN evasion. The dynamic analysis based framework DwroidDump (Kim, Kwak & Ryou, 2015) extracts executable code from the memory of the Dalvik Virtual Machine (DVM) instead of using a decompilation tool, since decompilation can be obstructed by all three encryption evasion techniques, as shown in Table 8. Among hybrid frameworks, RiskRanker (Grace et al., 2012) successfully detected DEN, BEN, and PEN. Hybrid detection frameworks such as RiskRanker (Grace et al., 2012), AMDetector (Zhao et al., 2014), MARVIN (Lindorfer, Neugschwandtner & Platzer, 2015), and Mobile-Sandbox (Spreitzenbarth et al., 2015) evaluated their frameworks against DEN evasion and claim the ability to detect BEN evasion techniques. Two dynamic detection papers, Soh (Soh et al., 2015) and Wu (Wu et al., 2015), evaluate their frameworks against RPK evasion techniques, and DwroidDump (Kim, Kwak & Ryou, 2015) examines its framework against encryption evasion techniques. Kumawat, Sharma & Kumawat (2017) also developed a system to detect cryptographic vulnerabilities in Android applications and to detect malware.
For DEN evasion, this study spotted seven papers using static analysis, only one paper using dynamic analysis, and two papers using hybrid analysis. For BEN evasion, it spotted six papers using static analysis, only one using dynamic analysis, and two using hybrid analysis. For PEN evasion, it spotted five papers using static analysis, only one using dynamic analysis, and two using hybrid analysis.
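Byte entropy is a common heuristic, not attributed here to any of the frameworks cited above, for spotting data, bytecode, or payloads that have been encrypted (DEN, BEN, PEN): well-encrypted bytes are close to uniformly distributed and approach 8 bits per byte, whereas plain bytecode and strings are far more repetitive. The sketch below assumes a hypothetical threshold of 7.2 bits per byte; the sample inputs are synthetic.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def looks_encrypted(data: bytes, threshold: float = 7.2) -> bool:
    """Flag a blob whose byte distribution is close to uniform.

    The 7.2 bits/byte threshold is a hypothetical choice; compressed
    resources (images, zip entries) are also high-entropy, so a real
    detector would need to exclude or whitelist them.
    """
    return shannon_entropy(data) > threshold


plain = b'const-string v0, "http://example.com"\n' * 100
uniform = bytes(range(256)) * 16  # every byte value equally frequent
print(round(shannon_entropy(plain), 2), looks_encrypted(plain))
print(shannon_entropy(uniform), looks_encrypted(uniform))  # 8.0 True
```

Entropy only signals that something is encrypted or compressed; recovering the hidden code still requires unpacking, for example by dumping it from memory at run time as DwroidDump does.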

Metamorphism evasion detection

Table 8 lists static, dynamic, and hybrid-based Android malware detection frameworks and their robustness against metamorphism evasion techniques.

(a) Code Obfuscation Detection:

Code obfuscation consists of CRE, CIN, and DCI; the following list discusses how detection frameworks handle each of these evasions:

– CRE - Code Reordering Evasion Detection:

ResDroid (Shao et al., 2014), AnDarwin (Crussell, Gibler & Chen, 2015), and Seqmalspec (Sufatrio et al., 2015a) proposed static analysis based detection and managed to detect CRE evasion. Likewise, Q-floid (Castellanos et al., 2016) detected CRE using a dynamic sandboxing methodology, and the hybrid detection framework Mobile-Sandbox (Spreitzenbarth et al., 2015) detects CRE evasions. Nonetheless, CRE evades the ngrams (Canfora et al., 2015a) and Elish (Elish et al., 2015) static detection frameworks, resulting in many false negatives (FN), as shown in Table 9. This study spotted 17 papers that scrutinized CRE evasion using static analysis, only two papers that scrutinized CRE using dynamic analysis, and four papers that scrutinized CRE using hybrid analysis.
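Code reordering is typically implemented by shuffling blocks of instructions and inserting goto instructions that preserve the original execution order, which is why sequence features such as opcode n-grams change under CRE while the behaviour does not. The toy sketch below shows both directions: a hypothetical obfuscator `reorder` and a hypothetical normalization pass `linearize` that follows unconditional gotos from the entry point to recover the original order. It handles only straight-line code whose last block ends with a return; conditional branches would require building a full control flow graph. Labels use smali-style `:L0` syntax, and the instruction strings are illustrative.

```python
from typing import Dict, List, Set


def reorder(blocks: List[List[str]], order: List[int]) -> List[str]:
    """Toy CRE obfuscator: lay blocks out in `order`, chaining them with
    gotos so that execution order stays blocks[0], blocks[1], ...
    Assumes straight-line code whose last block ends with a return."""
    code = ["goto :L0"]
    for i in order:
        code.append(f":L{i}")
        code.extend(blocks[i])
        if i + 1 < len(blocks):
            code.append(f"goto :L{i + 1}")
    return code


def linearize(code: List[str]) -> List[str]:
    """Undo goto-based reordering by following unconditional gotos
    from the entry point, dropping labels and gotos."""
    labels: Dict[str, int] = {
        ins: idx for idx, ins in enumerate(code) if ins.startswith(":")
    }
    out: List[str] = []
    pc, visited = 0, set()  # type: int, Set[int]
    while pc < len(code):
        ins = code[pc]
        if ins.startswith("goto "):
            target = labels[ins.split()[1]]
            if target in visited:  # guard against goto cycles
                break
            visited.add(target)
            pc = target
            continue
        if not ins.startswith(":"):
            out.append(ins)
            if ins.startswith("return"):
                break
        pc += 1
    return out


blocks = [
    ['const-string v0, "http://example.com"',
     "invoke-static {v0}, Lapp;->open(Ljava/lang/String;)V"],
    ["invoke-static {}, Lapp;->collect()Ljava/lang/String;",
     "move-result-object v1"],
    ["invoke-static {v1}, Lapp;->send(Ljava/lang/String;)V", "return-void"],
]
original = [ins for block in blocks for ins in block]
obfuscated = reorder(blocks, [2, 0, 1])
print(obfuscated != original, linearize(obfuscated) == original)  # True True
```

Running n-gram extraction on the output of `linearize` rather than on the raw listing would restore the features that CRE disturbs, at least for this restricted class of code.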