Place-cell capacity and volatility with grid-like inputs

Abstract

What factors constrain the arrangement of the multiple fields of a place cell? By modeling place cells as perceptrons that act on multiscale periodic grid-cell inputs, we analytically enumerate a place cell’s repertoire – how many field arrangements it can realize without external cues while its grid inputs are unique – and derive its capacity – the spatial range over which it can achieve any field arrangement. We show that the repertoire is very large and relatively noise-robust. However, the repertoire is a vanishing fraction of all arrangements, while capacity scales only as the sum of the grid periods so field arrangements are constrained over larger distances. Thus, grid-driven place field arrangements define a large response scaffold that is strongly constrained by its structured inputs. Finally, we show that altering grid-place weights to generate an arbitrary new place field strongly affects existing arrangements, which could explain the volatility of the place code.

Introduction

As animals run around in a small familiar environment, hippocampal place cells exhibit localized firing fields at reproducible positions, with each cell typically displaying at most a single firing field (O’Keefe and Dostrovsky, 1971; Wilson and McNaughton, 1993). However, a place cell generates multiple fields when recorded in single large environments (Fenton et al., 2008; Park et al., 2011; Rich et al., 2014) or across multiple environments (Muller et al., 1987; Colgin et al., 2008), including different physical and nonphysical spaces (Aronov et al., 2017).

Within large spaces, the locations seem to be well-described by a random process (Rich et al., 2014; Cheng and Frank, 2011), and across spaces the place-cell codes appear to be independent or orthogonal (Muller et al., 1987; Colgin et al., 2008; Alme et al., 2014), also potentially consistent with a random process. However, a more detailed characterization of possible structure in these responses is both experimentally and theoretically lacking, and we hypothesize that there might be structure imposed by grid cells in place field arrangements, especially when spatial cues are sparse or unavailable.

Our motivation for this hypothesis arises from the following reasoning: grid cells (Hafting et al., 2005) are a critical spatially tuned population that provides inputs to place cells. Their codes are unique over very large ranges due to their modular, multi-periodic structure (Fiete et al., 2008; Sreenivasan and Fiete, 2011; Mathis et al., 2012). They appear to integrate motion cues to update their states and thus reliably generate fields even in the absence of external spatial cues (Hafting et al., 2005; McNaughton et al., 2006; Burak and Fiete, 2006; Burak and Fiete, 2009). Thus, it is possible that in the absence of external cues spatially reliable place fields are strongly influenced by grid-cell inputs.

To generate theoretical predictions under this hypothesis, we examine here the nature and strength of potential constraints on the arrangements of multiple place fields driven by grid cells. On the one hand, the grid inputs are nonrepeating (unique) over a very large range that scales exponentially with the number of grid modules (given roughly by the product of the grid periods), and thus rich (Fiete et al., 2008; Sreenivasan and Fiete, 2011; Mathis et al., 2012); are these unique inputs sufficient to enable arbitrary place field arrangements? On the other hand, this vast library of unique coding states lies on a highly nonlinear, folded manifold that simple read-outs might not be able to discriminate (Sreenivasan and Fiete, 2011). This nonlinear structure is a result of the geometric, periodically repeating structure of individual modules (Stensola et al., 2012); should we expect place field arrangements to be constrained by this structure?

These questions are important for the following reason: a likely role of place cells, and the view we espouse here, is to build consistent and faithful associations (maps) between external sensory cues and an internal scaffold of motion-based positional estimates, which we hypothesize is derived from grid inputs. This perspective is consistent with the classic ideas of cognitive maps (O’Keefe and Nadel, 1978; Tolman, 1948; McNaughton et al., 2006) and also relates neural circuitry to the computational framework of the simultaneous localization and mapping (SLAM) problem for robots and autonomously navigating vehicles (Leonard and Durrant-Whyte, 1991; Milford et al., 2004; Cadena et al., 2016; Cheung et al., 2012; Widloski and Fiete, 2014; Kanitscheider and Fiete, 2017a; Kanitscheider and Fiete, 2017b; Kanitscheider and Fiete, 2017c). We can view the formation of a map as ‘decorating’ the internal scaffold with external cues. For this to work across many large spaces, the internal scaffold must be sufficiently large, with enough unique states and resolution to build appropriate maps.

A self-consistent place-cell map that associates a sufficiently rich internal scaffold with external cues can enable three distinct inferences: (1) allow external cues to correct errors in motion-based location estimation (Welinder et al., 2008; Burgess, 2008; Sreenivasan and Fiete, 2011; Hardcastle et al., 2014), through cue-based updating; (2) predict upcoming external cues over novel trajectories through familiar spaces by exploiting motion-based updating (Sanders et al., 2020; Whittington et al., 2020); and (3) drive fully intrinsic error correction and location inference when external spatial cues go missing and motion cues are unreliable by imposing self-consistency (Sreenivasan and Fiete, 2011).

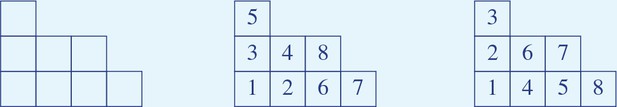

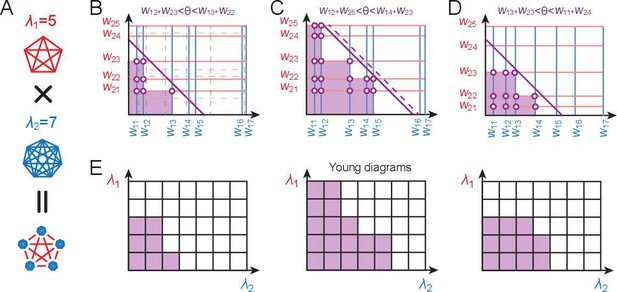

In what follows, we characterize which arrangements of place fields are realizable based on grid-like inputs in a simple perceptron model, in which place cells combine their multiple inputs and make a decision on whether to generate a field (‘1’ output) or not (‘0’ output) by selecting input weights and a firing threshold (Figure 1A,B). However, in contrast to the classical perceptron results, which are derived under the assumption of random inputs that are in general position (a property related to the linear independence of the inputs), grid inputs to place cells are structured, which adds substantial complexity to our derivations.

The grid-like code and modeling place cells as perceptrons.

(A) Grid-like inputs and a conceptual view of a place cell as a perceptron: each place cell combines its feedforward inputs, including periodic drive from grid cells (responses simplified here to one spatial dimension) of various periods and phases (blue and red cells are from modules with different periods) to generate location-specific activity that might be multiply peaked across large spaces. Can these place fields be arranged arbitrarily? (B) Idealization of a place cell as a perceptron: in discretized 1-D space, the grid-like inputs are discrete patterns that for simplicity we consider to be binary; place fields are assigned at locations where the weighted input sum exceeds a threshold . A place field arrangement can be considered as a set of binarized output labels (1 for each field, 0 for non-field locations) for the set of input patterns. We count field arrangements over the range of locations where the grid-like inputs have unique states; for two modules with periods , this range is 6 (the LCM of the grid periods). LCM = least common multiple; GCD = greatest common divisor.

We show analytically that each place cell can realize a large repertoire of arrangements across all possible space where the grid inputs are unique. However, these realizable arrangements are a special and vanishing subset of all arrangements over the same space, suggesting a constrained structure. We show that the capacity of a place cell or spatial range over which all field arrangements can be realized equals the sum of distinct grid periods, a small fraction of the range of positions uniquely encoded by grid-like inputs. Overall, we show that field arrangements generated from grid-like inputs are more robust to noise than those driven by random inputs or shuffled grid inputs.

Together, our results imply that grid-like inputs endow place cells with rich and robust spatial scaffolds, but that these are also constrained by grid-cell geometry. Rigorous proofs supporting all our mathematical results are provided in Appendix 1. Portions of this work have appeared previously in conference abstract form (Yim et al., 2019).

Modeling framework

Place cells as perceptrons

The perceptron model (Rosenblatt, 1958) idealizes a neuron as computing a weighted sum of its inputs () based on learned input weights () and applying a threshold () to generate a binary response that is above or below threshold. A perceptron may be viewed as separating its high-dimensional input patterns into two output categories () (Figure 2A), with the categorization depending on the weights and threshold so that sufficiently weight-aligned input patterns fall into category 1 and the rest into category 0:

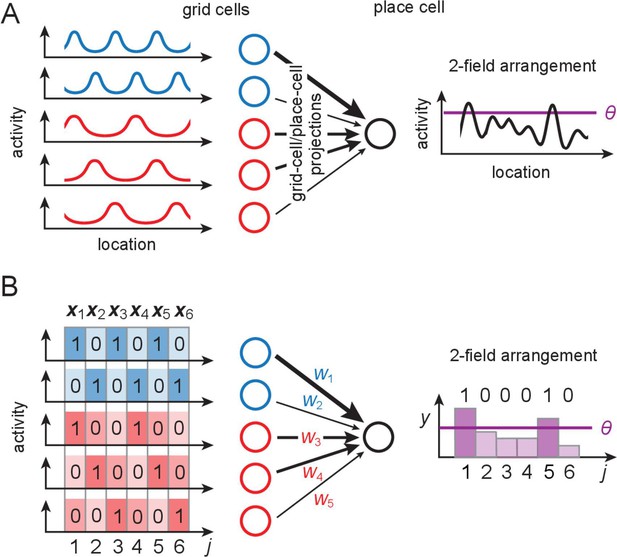

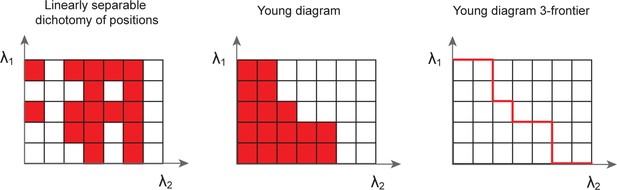

Linear separability, counting dichotomies, and separating capacity for perceptrons.

(A) A set of patterns (locations given by circles) that are assigned positive and negative labels (filled versus open), called a dichotomy of the patterns, is realizable by a perceptron if positive examples can be linearly separated (by a hyperplane) from the rest. The perceptron weights encode the direction normal to the separating hyperplane, and the threshold sets its distance from the origin. (B) An example with input dimension (the input dimension is the length of each input pattern vector, which equals the number of input neurons). When placed randomly, random real-valued patterns optimally occupy space and are said to be in general position (left); these patterns define a tetrahedron and all dichotomies are linearly separable. By contrast, structured inputs may occupy a lower-dimensional subspace and thus not lie in general position (right). This square configuration exhibits unrealizable dichotomies (as in A, bottom). (C) Cover’s results (Cover, 1965): for patterns in general position, the number of realizable dichotomies is , and thus the fraction of realizable dichotomies relative to all dichotomies is 1, when the number of patterns is smaller than the input dimension (). The fraction drops rapidly to zero when the number of patterns exceeds twice the input dimension (the separating capacity).

If each partitioning of inputs into the categories is called a dichotomy, then the only dichotomies ‘realizable’ by a perceptron are those in which the inputs are linearly separable – that is, the set of inputs in category 0 can be separated from those in category 1 by some linear hyperplane (Figure 2). Cover’s counting theorem (Cover, 1965; Vapnik, 1998) provides a count of how many dichotomies a perceptron can realize if input patterns are random (more specifically, in general position). A set of patterns in an -dimensional space is in general position if no subset of size smaller than is affinely dependent. In other words, no subset of points lies in a -dimensional plane for all . (Figure 2B) and establishes that for patterns, every dichotomy is realizable by a perceptron – this is the perceptron capacity (Figure 2C). For , exactly half of the possible dichotomies are realizable; when for fixed , the realizable dichotomies become a vanishing fraction of the total (Figure 2C).

Here, to characterize the place-cell scaffold, we model a place cell as a perceptron receiving grid-like inputs (Figure 1B). Across space, a particular ‘field arrangement’ is realizable by the place cell if there is some set of input weights and a threshold (Lee et al., 2020) for which its summed inputs are above threshold at only those locations and below it at all others (Figure 1A,B). We call an arrangement of exactly fields a ‘K-field arrangement.’.

In the following, we answer two distinct but related questions: (1) out of all potential field arrangements over the entire set of unique grid inputs, how many are realizable, and how does the realizable fraction differ for grid-like inputs compared to inputs with matched dimension but different structure? This is akin to perceptron function counting (Cover, 1965) with structured rather than general-position inputs and covers constraints within and across environments. We consider all arrangements regardless of sparsity, on one extreme, and -field (highly sparse) arrangements on the other; these cases are analytically tractable. We expect the regime of sparse firing to interpolate between these two regimes. (2) Over what range of positions is any field arrangement realizable? This is analogous to computing the perceptron-separating capacity (Cover, 1965) for structured rather than general-position inputs.

Although the structured rather than random nature of the grid code adds complexity to our problem, the symmetries present in the code also allow for the computation of some more detailed quantities than typically done for random inputs, including capacity computations for dichotomies with a prescribed number of positive labels (K-field arrangements).

Results

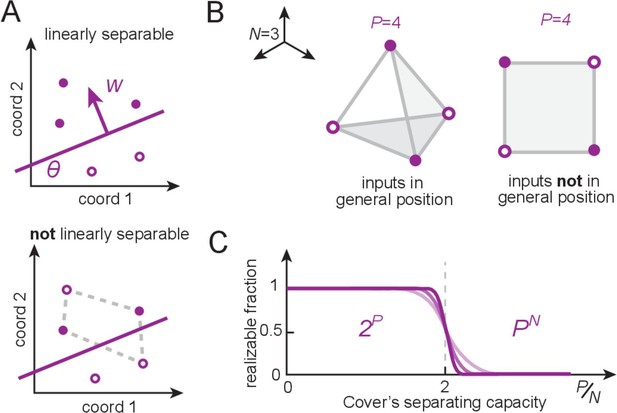

Our approach, summarized in Figure 3, is as follows: we define a mapping from space to grid-like input codes (Figure 3A,B), and a generalization to what we call modular-one-hot codes (Figure 3B). We explore the geometric structure and symmetries of these codes (Figure 3C). Next, we show how separating hyperplanes placed on these structured inputs by place-cell perceptrons permits the realization of some dichotomies (Figure 3D) and thus some spatial field arrangements (Figure 3E), but not others, and obtains mathematical results on the number of realizable arrangements and the separating capacity.

Our overall approach.

(A, B) Locations (indexed by ) map onto grid-like coding states (, defining the grid-like codebook) through the assignment of spatially periodic responses to grid cells, with different cells in a module having different phases and different modules having different periods. (This example: periods 2,3.) (C) The patterns in the grid-like codebook form some nonrandom, geometric structure. (D) The geometric structure defines which dichotomies are realizable by separating hyperplanes. (E) A realizable dichotomy in the abstract codebook pattern space, when mapped back to spatial locations, corresponds to a realizable field arrangement. Shown is a place field arrangement realized by the separating hyperplane from (D). Similarly, an unrealizable field arrangement can be constructed by examination of (D): it would consist of, for instance, fields at locations only (or, e.g., at only): vertices that cannot be grouped together by a single hyperplane.

The structure of grid-like input patterns

Grid cells have spatially periodic responses (Figure 1A,B). Cells in one grid module exhibit a common spatial period but cover all possible spatial phases. The dynamics of each module are low-dimensional (Fyhn et al., 2007; Yoon et al., 2013), with the dynamics within a module supporting and stabilizing a periodic phase code for position. Thus, we use the following simple model to describe the spatial coding of grid cells and modules: a module with spatial period (in units of the spatial discretization) consists of cells that tile all possible phases in the discretized space while maintaining their phase relationships with each other. Each grid cell’s response is a -valued periodic function of a discretized 1D location variable (indexed by ); cell in module fires (has response 1) whenever , and is off (has response 0) otherwise (Figure 1B). The encoding of location across all Mm modules is thus an -dimensional vector , where . Nonzero entries correspond to co-active grid cells at position . The total number of unique grid patterns is , which grows exponentially with for generic choices of the periods (Fiete et al., 2008). We refer to as the ‘full range’ of the code. We call the full ordered set of unique coding states the grid-like ‘codebook’ .

Because includes all unique grid-like coding states across modules, it includes all possible relative phase shifts or ‘remappings’ between grid modules (Fiete et al., 2008; Monaco et al., 2011). Thus, this full-range codebook may be viewed as the union of all grid-cell responses across all possible space and environments. We assume implicitly that 2D grid modules do not rotate relative to each other across space or environments. Permitting grid modules to differentially rotate would lead to more input pattern diversity, more realizable place patterns, and bigger separating capacity than in our present computations.

The grid-like code belongs to a more general class that we call ‘modular-one-hot’ codes. In a modular-one-hot code, cells are divided into modules; within each module only one cell is allowed to be active (the within-module code is one-hot), but there are no other constraints on the code. With modules of sizes , the modular-one-hot codebook contains unique patterns, with for a corresponding grid-like code. When are pairwise coprime, and the grid-like and modular-one-hot codebooks contain identical patterns. However, even in this case, modular-one-hot codes may be viewed as a generalization of grid-like codes as there is no notion of a spatial ordering in the modular-one-hot codes, and they are defined without referring to a spatial variable.

Of our two primary questions introduced earlier, question (1) on counting the size of the place-cell repertoire (the number of realizable field arrangements) depends only on the geometry of the grid coding states, and not on their detailed spatial embedding (i.e., it depends on the mappings in Figure 3B–D, but not on the mapping between Figure 3A,B,D,E). In other words, it does not depend on the spatial ordering of the grid-like coding states and can equivalently be studied with the corresponding modular-one-hot code instead, which turns out to be easier. Question (2), on place-cell capacity (the spatial range over which any place field arrangement is realizable), depends on the spatial embedding of the grid and place codes (and on the full chain of Figure 3A-E). For , this would correspond to a particular rather than random subset of , thus we cannot use the general properties of this generalized version of the grid-like code.

Alternative codes

In what follows, we will contrast place field arrangements that can be obtained with grid-like or modular-one-hot codes with arrangements driven by alternatively coded inputs. To this end, we briefly define some key alternative codes, commonly encountered in neuroscience, machine learning, or in the classical theory of perceptrons. For these alternative codes, we match the input dimension (number of cells) to the modular-one-hot inputs (unless stated otherwise).

Random codes , used in the standard perceptron results, consist of real-valued random vectors. These are quite different from the grid-like code and all the other codes we will consider, in that the entries are real-valued rather than -valued like the rest. A set of up to random input patterns in dimensions is linearly independent; thus, they have no structure up to this number.

Define the one-hot code as the set of vectors with a single nonzero element whose value is 1. It is a single-module version of the modular-one-hot code or may be viewed as a binarized version of the random patterns since patterns in dimensions are linearly independent. In the one-hot code, all neurons are equivalent, and there is no modularity or hierarchy.

Define the ‘binary’ code as all possible binary activity patterns of neurons (Figure 4B, right). We distinguish -valued codes from binary codes. In the binary code, each cell represents a specific position (register) according to the binary number system. Thus, each cell represents numbers at a different resolution, differing in powers of 2, and the code has no neuron permutation invariance since each cell is its own module; thus, it is both highly hierarchical and modular.

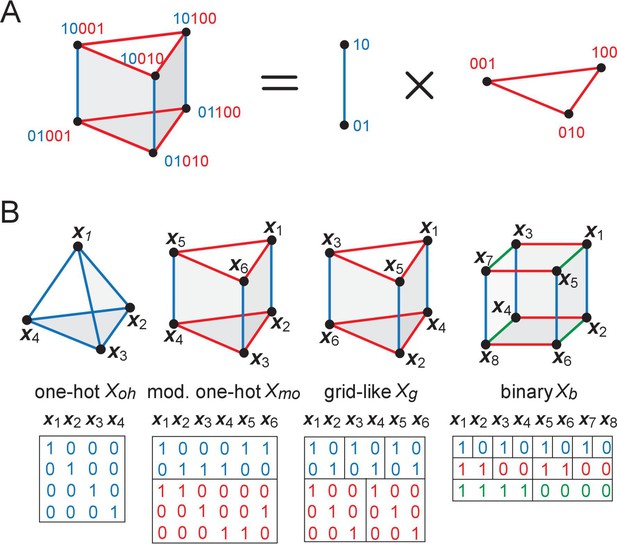

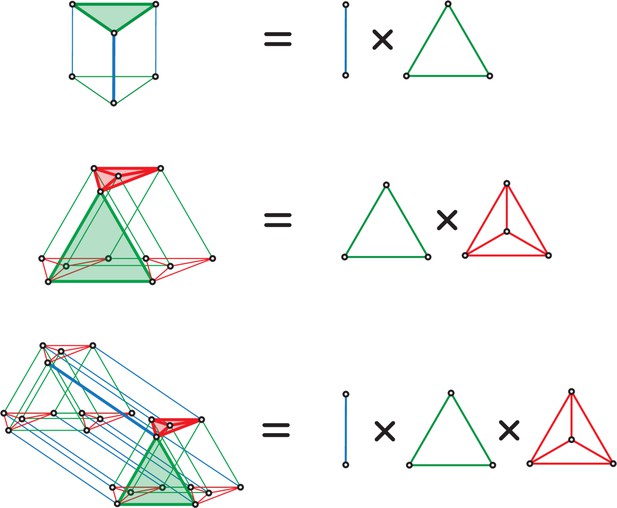

The geometry of structured inputs.

(A) Though the grid-like input patterns in the example Figure 1B are 5D, they have a simplified structure that can be embedded as a 3D triangular prism given by the product of a 2-graph (blue, middle) and 3-graph (red, right) because of the independently updating modular structure of the code. (B) Different codebooks and their geometries. At one end of the spectrum (left), one-hot codes consist of a single module; they are not hierarchical, and their geometry is always an elementary simplex (left). Grid cells and modular-one-hot codes (middle) have an intermediate level of hierarchy and consist of an orthogonal product of simplices. At the opposite end, the binary code (right) is the most hierarchical, consisting of as many modules as cells; the code has a hypercube geometry: vertices (codewords or patterns) on each face of the hypercube are far from being in general position.

The grid-like and modular-one-hot codes exhibit an intermediate degree of modularity (multiple cells make up a module). If the modules are of a similar size, the code has little hierarchy.

The geometry of grid-like input patterns

We first explore question . The modular-one-hot codebook is invariant to permutations of neurons (input matrix rows) within modules, but rows cannot be swapped across modules as this would destroy the modular structure. It is also invariant to permutations of patterns (input matrix columns ). Further, the codebook includes all possible combinations of states across modules, so that modules function as independent encoders. These symmetries are sufficient to define the geometric arrangement of patterns in , and the geometry in turn will allow us to count the number of field arrangements that are realizable by separating hyperplanes.

To make these ideas concrete, consider a simple example with module sizes (corresponding to the periods in the grid-like code), as in Figure 1B and Figure 3B. Independence across modules causes the code to have a product structure in the code: the codebook consists of six states that can be obtained as products of the within-module states: = , where and are the coding states within the size-2 and size-3 modules, respectively. We represent the two states in the size-2 module by two vertices, connected by an edge, which shows allowed state transitions within the module (Figure 4A, right). Similarly, the three states in the size-3 module and transitions between them are represented by a triangular graph (Figure 4A, right). The product of this edge graph and the triangle graph yields the full codebook . The resulting product graph (Figure 4A, left) is an orthogonal triangular prism with vertices representing the combined patterns.

This geometric construction generalizes to an arbitrary number of modules and to arbitrary module sizes (periods) , : by permutation invariance of neurons within modules, and independence of modules, the patterns of the codebook and thus of the corresponding grid-like codebook always lie on the vertices of some convex polytope (e.g., the triangular prism), given by an orthogonal product of simplicies (e.g., the line and triangle graphs). Each simplex represents one of the modules, with simplex dimension for module size (period) (see Place-cell capacity and volatility with grid-like inputs).

This geometric construction provides some immediate results on counting: in a convex polytope, any vertex can be separated from all the rest by a hyperplane; thus, all one-field arrangements are realizable. Pairs of vertices can be separated from the rest by a hyperplane if and only if the pair is directly connected by an edge (Figure 3D). Thus, we can now count the set of all realizable two-field arrangements as the number of adjacent vertices in the polytope. Unrealizable two-field arrangements, which consist geometrically of positive labels assigned to nonadjacent vertices, correspond algebraically to firing fields that are not separated by integer multiples of either of the grid periods (Figure 3D,E).

Moreover, note that the convex polytopes obtained for the grid-like code remain qualitatively unchanged in their geometry if the nonzero activations within each module are replaced by graded tuning curves as follows: convert all neural responses within a module into graded values by convolution along the spatial dimension by a kernel that has no periodicity over distances smaller than the module period (thus, the kernel cannot, for instance, be flat or contain multiple bumps within one module period). This convolution can be written as a matrix product with a circulant matrix of full rank and dimension equal to the full range . Thus, the rank of the convolved matrix remains equal to the rank of . Moreover, maintains the modular structure of : it has the same within-module permutation invariance and across-module independence. Thus, the resulting geometry of the code – that it consists of convex polytopes constructed from orthogonal products of simplices – remains unchanged. As a result, all counting derivations, which are based on these geometric graphs, can be carried out for -valued codes without any loss of generalization relative to graded tuning curves. (However, the conversion to graded tuning will modify the distances between vertices and thus affect the quantitative noise robustness of different field arrangements, as we will investigate later.) Later, we will also show that the counting results generalize to higher dimensions and higher-resolution phase representations within each module.

Given this geometric characterization of the grid-like and modular-one-hot codes, we can now compute the number of realizable field arrangements it is possible to obtain with separating hyperplanes.

Counting realizable place field arrangements

For modular-one-hot codes (but not for random codes), it is possible to specify any separating hyperplane using only non-negative weights and an appropriate threshold. This is an interesting property in the neurobiological context because it means that the finding that projections from entorhinal cortex to hippocampus are excitatory (Steward and Scoville, 1976; Witter et al., 2000; Shepard, 1998) does not further constrain realizable field arrangements.

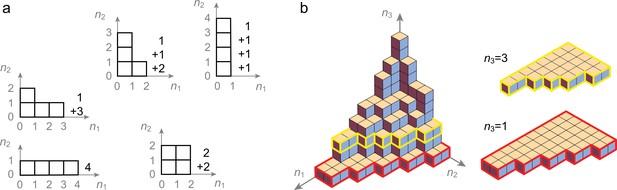

It is also an interesting property mathematically, as we explore below: combined with the within-module permutation invariance property of modular-one-hot codes, the non-negative weight observation allows us to map the problem onto Young diagrams (Figure 5), which enables two things: (1) to move from considering separating hyperplanes geometrically, where infinitesimal variations represent distinct hyperplanes even if they do not change any pattern classifications, to considering them topologically, where hyperplane variations are considered as distinct only if they change the classification of any patterns, and (2) to use counting results previously established for Young diagrams.

Counting realizable place field arrangements.

(A) Geometric structure of a modular-one-hot code with two modules of periods and . (B–D) Because cells within a module can be freely permuted, we can arrange the cells in order of increasing weights and keep this ordering fixed during counting, without loss of generality. We arrange the cells in modules 1 and 2 along the ordinate and abcissa in increasing weight order (solid blue and red lines, respectively). Because the weights can all be assumed to be non-negative for modular-one-hot codes, the threshold can be interpreted as setting a summed-weight budget: no cell (weight) combinations (purple regions with purple-white circles) below the threshold (diagonal purple line) can contribute to a place field arrangement, while all cell combinations with larger summed weights (unmarked regions) can. Increasing the threshold (from B to C) decreases the number of permitted combinations, as does decreasing the weights (B to D). Weight changes (B, from solid to dashed lines) and threshold changes (C, solid to dashed line), so long as they do not change which lines are to the bottom-left of the threshold, do not affect the number of permitted combinations, reflecting the topological structure of the counting problem. (E) With Young diagrams (each corresponding to B–D above), we extract the purely topological part of the problem, stripping away analog weights to simplify counting. A Young diagram consists of stacks of blocks in rows of nonincreasing width within a grid of a maximum width and height. The number of realizable field arrangements is simply the total number and multiplicity of distinct Young diagrams that can be built of the given height and width (see Appendix 3), which in our case is given by the periods of the two modules.

Let us consider the field arrangements permitted by combining grid-like inputs from two modules, of periods and , (Figure 5A). The total number of distinct grid-cell modules is estimated to be between 5 and 8 (Stensola et al., 2012). Further, there is a spatial topography in the projection of grid cells to the hippocampus, such that each local patch of the hippocampus likely receives inputs from 2, and likely no more than 3, grid modules (Witter and Groenewegen, 1984; Amaral and Witter, 1989; Witter and Amaral, 1991; Honda et al., 2012; Witter et al., 2000). We denote cells by their outgoing weights ( is the weight from cell in module ) and arrange the weights along the axes of a coordinate space, one axis per module, in order of increasing size (Figure 5B). Since modular-one-hot codes are invariant to permutation of the cells within a module, we can assume a fixed ordering of cells and weights in counting all realizable arrangements, without loss of generality. The threshold (dark purple line) sets which combination of summed weights can contribute to a place field arrangement: no cell combinations below the boundary (purple region) have too small a summed weight and cannot contribute, while all cell combinations with larger summed weights (white region) can (Figure 5B). Decreasing the threshold (from Figure 5B to C) or increasing weights (from Figure 5B,C to D) a sufficient amount so some cells cross the threshold increases the number of combinations. But changes that do not cause cells to move past the threshold do not change the combinations (Figure 5B, solid versus dashed gray lines).

Young diagrams extract this topological information, stripping away geometric information about analog weights (Figure 5E). A Young diagram consists of stacks of blocks in rows of nonincreasing width, with maximum width and height given in this case by the two module periods, respectively. The number of realizable field arrangements turns out to be equivalent to the total number of Young diagrams that can be built of the given maximum height and width (see Appendix 3). With this mapping, we can leverage combinatorial results on Young diagrams (Fulton and Fulton, 1997; Postnikov, 2006) (commonly used to count the number of ways an integer can be written as a sum of non-negative integers).

As a result, the total number of separating hyperplanes (K-field arrangements for all ) across the full range can be written exactly as (see Appendix 3).

where are Stirling numbers of the second kind and are the poly-Bernoulli numbers (Postnikov, 2006; Kaneko, 1997). Assuming that the two periods have a similar size , this number scales asymptotically as (de Andrade et al., 2015).

Thus, the number of realizable field arrangements with distinct modular-one-hot input patterns in a -dimensional space grows nearly as fast as , (Table 1, row 2, columns 1–3). The total number of dichotomies over these input patterns scales as Thus, while the number of realizable arrangements over the full range is very large, it is a vanishing fraction of all potential arrangements (Table 1, row 2, column 4).

Number and fraction of realizable dichotomies with binary, modular-one-hot ( modules) and one-hot input codes with the same input cell budget ().

| # cells | # input patts (L) | # lin dichot | Frac lin dichot | |

|---|---|---|---|---|

| Binary | 2λ | 22λ | 22λ2 | |

| = | << | << | >> | |

| Modular-one-hot | 2λ | λ2 | ||

| = | << | << | >> | |

| One-hot | 2λ | 2λ | 22λ | 1 |

If modules were to contribute to each place field’s response, then all realizable field arrangements still would correspond to Young diagrams; however, not all diagrams would correspond to realizable arrangements. Thus, counting Young diagrams would yield an upper bound on the number of realizable field arrangements but not an exact count (see Appendix 3). The latter limitation is not a surprise: Due to the structure of the grid-like code (a product of simplices), the enumeration of realizable dichotomies with arbitrarily many input modules is expected to be at least as challenging as that of Boolean functions. Counting the number of linearly separable Boolean functions of arbitrary (input) dimension (Peled and Simeone, 1985; Hegedüs and Megiddo, 1996) is hard.

Nevertheless, we can provide an exact count of the number of realizable -dichotomies for arbitrarily many input modules if is small ( and 4). This may be biologically relevant since place fields tend to fire sparsely even on long tracks and across environments. In this case, the number of realizable small- field arrangements scales as (the exact expression is derived analytically in Appendix 3)

The scaling approximation becomes more accurate for periods that are large relative to the spatial discretization (see Appendix 3). Since the total number of K-dichotomies scales as , the fraction of realizable K-dichotomies scales as , which for vanishes as a power law as soon as .

We can compare this result with the number of K-field arrangements realizable by one-hot codes. Since any arrangement is realizable with one-hot codes, it suffices to simply count all K-field arrangements. The full range of a one-hot code with cells is , thus the number of realizable K-field arrangements is , where the last scaling holds for . In short, a one-hot code enables arrangements, while the corresponding modular-one-hot code with cells enables field arrangements, for a ratio of realizable fields with modular-one-hot versus one-hot codes. Once again, as in the case where we counted arrangements without regard to sparseness, the grid-like code enables far more realizable K-field arrangements than one-hot codes.

In summary, place cells driven by grid inputs can achieve a very large number of unique coding states that grows exponentially with the number of modules. We have derived this result for and all K-field arrangements, on one hand, and for arbitrary but ultra-sparse (small-) field arrangements. It is difficult to obtain an exact result for sparse field arrangements for which is a small but finite fraction of ; however, we expect that regime should interpolate between these other two; it will be interesting and important for future work to shed light on this intermediate regime. In all cases, the number of realizable arrangements is large but a vanishingly small fraction of all arrangements, and thus forms a highly structured subset. This suggests that place cells, when driven by grid-cell inputs, can form a very large number of field arrangements that seem essentially unrestricted, but individual cells actually have little freedom in where to place their fields.

Comparison with other input patterns

How does the number of realizable place field arrangements differ for input codes with different levels of modularity and hierarchy? We directly compare codes with the same neuron budget (input dimension ) by taking , where for simplicity, we set for all modules in the modular-one-hot codes. This is because the modular-one-hot codes include all permutations of states in each module, the number of unique input states with equal-sized modules still equals the product of periods , as when the periods are different and coprime. The one-hot code generates far fewer distinct input patterns () than the modular-one-hot code, which in turn generates fewer input patterns than the binary code () (Table 1, column 2). This is due to the greater expressive power afforded by modularity and hierarchy.

Next, we compare results across codes for , the case for which we have an explicit formula counting the total number of realizable field arrangements for any , and which is also best supported by the biology.

How many dichotomies are realizable with these inputs? As for the modular-one-hot codes, the patterns of and fall on the vertices of a convex polytope. For , that polytope is just a -dimensional simplex (Figure 4C, left), thus any subset of vertices () lies on a -dimensional face of the simplex and is therefore a linearly separable dichotomy. Thus, all dichotomies of are realizable and the fraction of realizable dichotomies is 1 (Table 1, columns 3 and 4). For , the polytope is a hypercube; it therefore consists of square faces, a prototypical configuration of points not in general position (not linearly separable, Figure 2B and Figure 4, right) even when the number of patterns is small relative to the input dimension (number of cells). Counting the number of linearly separable dichotomies on vertices of a hypercube (also called linear Boolean functions) has attracted much interest (Peled and Simeone, 1985; Hegedüs and Megiddo, 1996). It is an NP-hard combinatorial problem, so no exact solution exists. However, in the limit of large dimension (), the number of linearly separable dichotomies scales as (Zuev, 1989), a much larger number than for one-hot inputs (Table 1, column 3). However, this number is a strongly vanishing fraction of all hypercube dichotomies (Table 1, column 4).

For modular-one-hot codes with modules, the polytopes contain -dimensional hypercubes and not all patterns are thus in general position. We determined earlier that the total number of realizable dichotomies with modules scales as , permitting a direct comparison with the one-hot and binary codes (Table 1, row 2).

Finally, we may compare grid-like codes with random (real-valued) codes, which are the standard inputs for the classical perceptron results. For a fixed input dimension, it is possible to generate infinitely many real-valued patterns, unlike the finite number achievable by -valued codes. We thus construct a random codebook with the same number, , of input patterns as the modular-one-hot code. We then determine the input dimension required to obtain the same number of realizable field arrangements as the grid-like code. The number of realizable dichotomies of the random code with patterns scales as according to an asymptotic expansion of Cover’s function counting theorem (Cover, 1965). For this number to match , the number of realizable field arrangements with a one-hot-modular code (of two modules of size each requires) . This is a comparable number of input cells in both codes, which is an interesting result because unlike for random codes the grid-like input patterns are not in general position, the states are confined to be -valued, and the grid input weights can be confined to be non-negative.

In sum, the more modular a code, the larger the set of realizable field arrangements, but these are also increasingly special subsets of all possible arrangements and are strongly structured by the inputs, with far from random or arbitrary configurations. Modular-one-hot codes are intermediate in modularity. Therefore, grid-driven place-cell responses occupy a middle ground between pattern richness and constrained structure.

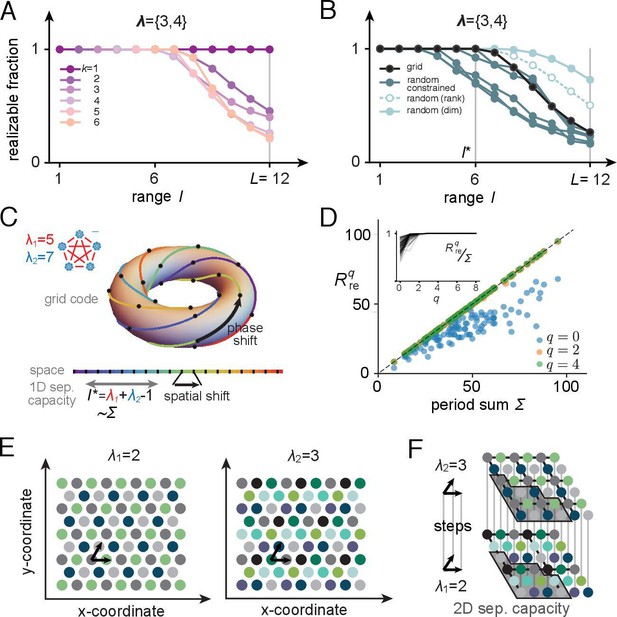

Place-cell-separating capacity

We now turn to question (2) from above: what is the maximal range of locations, , over which all field arrangements are realizable? Once we reference a spatial range, the mapping of coding states to spatial locations matters (specifically, the fact that locations in the range are spatially contiguous matters, but given the fact that the code is translationally invariant [Fiete et al., 2008], the origin of this range does not). We thus call the ‘contiguous-separating capacity’ of a place cell (though we will refer to it as separating capacity, for short); it is the analogue of Cover’s separating capacity (Cover, 1965), but for grid-like inputs with the addition of a spatial contiguity constraint.

We provide three primary results on this question. (1) We establish that for grid-structured inputs, the separating capacity equals the rank of the input matrix. (2) We establish analytically a formula for the rank of grid-like input matrices with integer periods and generalize the result to real-valued periods. (3) We show that this rank, and thus the separating capacity for generic real-valued module periods, asymptotically approaches the sum . Our results are verified by numerical simulation and counting (proofs provided in Supporting Information Appendix).

We begin with a numerical example, using periods {3,4} (Figure 6A): the full range is , while we see numerically that the contiguous-separating capacity is . Although the separating capacity with grid-structured inputs is smaller than with random inputs, it is notably not much smaller (Figure 6B, black versus cyan curves), and it is actually larger than for random inputs if the read-out weights are constrained to be non-negative (Figure 6B, pink curves). Later, we will further show that the larger random-input capacity of place cells with unrestricted weights comes at the price of less robustness: the realizable fields have smaller margins. Next, we analytically characterize the separating capacity of place cells with grid-like inputs.

Place-cell-separating capacity.

(A) Fraction of K-field arrangements that are realizable with grid-like inputs as a function of range ( indicates the full range; in this example, grid periods are and ). (B) Fraction of realizable field arrangements (summed over ) as a function of range for grid cells (black); for random inputs, range refers to number of input patterns (solid cyan: random with matching input dimension; open/dashed cyan: random with input dimension equal to rank of the grid-like input matrix; dark teal: same as open cyan, but with weights constrained to be non-negative, as for grid-like inputs). With the non-negative weight constraint for random inputs, different specific input configurations produce quite different results, introducing considerable variability in separating capacity (unlike the unconstrained random input case or the grid code case for which results are exact rather than statistical). (C) The grid code is generated by iterated application of a phase-shift operator as a function of one-step updates in position over a contiguous 1D range. This feature of the code leads to a separating capacity that achieves its optimal value, given by the rank of the input matrix. (D) Separating capacity as a function of the sum of module periods for real-valued periods (randomly drawn from with , 100 realizations), showing the quality of the integer approximation at different resolutions. Integer approximations to the real-value periods at successively finer resolutions quickly converge, with results from and nearly indistinguishable from each other. Inset: ratio of separating capacity to sum of periods ( as a function of resolution quickly approaches 1 from below as increases). (E, F) Capacity results generalize to multidimensional spatial settings: (E) in 2D, grid-cell-activity patterns lie on a hexagonal lattice (all circles of one color mark the activity locations of one grid cell). For grid periods , this code utilizes 4 two-periodic cells and 9 three-periodic cells, respectively. (F) Full range of the 2D grid-like code from (E). The set of contiguous locations over which any place field arrangement is realizable (the 2D separating capacity) is shown in gray.

Separating capacity equals rank of grid-like inputs

For inputs in general position, the separating capacity equals the rank of the input matrix (plus 1 when the threshold is allowed to be nonzero), and the rank equals the dimension (number of cells) of the input patterns – the input matrix is full rank. When inputs are in general position, all input subsets of size equaling the separating capacity have the same rank. But when input patterns are not in general position, some subsets can have smaller ranks than others even when they have the same size. Thus, when input patterns are not in general position the separating capacity is only upper bounded by the rank of the full input matrix. In turn, the rank is only upper bounded by the number of cells (the input matrix need not be full rank).

For the grid-like code, all codewords can be generated by the iterated application of a linear operator to a single codeword: a simultaneous one-unit phase shift by a cyclic permutation in each grid module is such an operator , which can be represented by a block-form permutation matrix. The sequence of patterns generated by applying to a grid-like codeword with the same module structure represents contiguous locations (Figure 6C).

The separating capacity for inputs generated by iterated application of the same linear operation saturates its bound by equaling the rank of the input pattern matrix. Since a code , generated by some linear operator with starting codeword is translation invariant, the number of dimensions spanned by these patterns strictly increases until some value , after which the dimension remains constant. By definition, is therefore the rank of the input pattern matrix. It follows that any contiguous set of patterns is linearly independent, and thus in general position, which means that the separating capacity of such a pattern matrix is .

For place cells, it follows that whenever , with the rank of the grid-like input matrix, all field arrangements are realizable, while for any , there will be nonrealizable field arrangements (Supporting Information Appendix). Therefore, the contiguous-separating capacity for place cells is . This is an interesting finding: the separating capacity of a place cell fed with structured grid-like inputs approaches the same capacity as if fed with general-position inputs of the same rank. Next, we compute the rank for grid-like inputs under increasingly general assumptions.

Grid input rank converges to sum of grid module periods

Integer periods

For integer-valued periods , the rank of the matrix consisting of the multi-periodic grid-like inputs can be determined through the inclusion-exclusion principle (see Section B.4):

where is the ith of the k-element subsets of . To gain some intuition for this expression, note that if the periods were pairwise coprime, all the GCDs would be 1 and this formula would quite simply produce , where is defined as the sum of the module periods. If the periods are not pairwise coprime, the rank is reduced based on the set of common factors, as in (5), which satisfies the following inequality: . When the periods are large (), the rank approaches . Large integers () evenly spaced or uniformly randomly distributed over some range tend not to have large common factors (Cesaro, 1881). As a result, even for non-coprime periods, the rank scales like and approaches (see below for more elaboration).

Real-valued periods

Actual grid periods are real- rather than integer-valued, but with some finite resolution. To obtain an expression for this case, consider the sequence of ranks defined as

where denotes the floor operation, is an effective resolution parameter that takes integer values (the larger , the finer the resolution of the approximation to a real-valued period), and the periods are real numbers. The rank of the grid-like input matrix with real-valued periods is given by , if this limit exists. A finer resolution (higher ) corresponds to representing phases with higher resolution within each module, and thus intuitively to scaling the number of grid cells in each module by .

Suppose that the periods are drawn uniformly from an interval of the reals, which we take without loss of generality to be . Then the values are integers in and as above we have that . In the infinite resolution limit (), the probability scales asymptotically as , independent of (Cesaro, 1881), which means that large randomly chosen large integers tend not to have large common factors. This implies that with probability 1, the limit is well-defined and equals , the sum of the input grid module periods.

When assessed numerically at different resolutions (), the approach of the finite-resolution rank to the real-valued grid period rank is quite rapid (Figure 6D). Thus, the separating capacity does not depend sensitively on the precision of the grid periods. It is also invariant to the resolution with which phases are represented within each module.

In summary, the place-cell-separating capacity with real-valued grid periods and high-resolution phase representations within each module equals the rank of the grid-like input matrix, which itself approaches , the sum of the module periods. Thus, a place cell can realize any arrangement of fields over a spatial range given by the sum of module periods of its grid inputs.

It is interesting that the contiguous-separating capacity of a place cell fed with grid-like inputs not in general position approaches the same capacity as if fed with general-position inputs of the same rank. On the other hand, the contiguous-separating capacity is very small compared to the total range over which the input grid patterns are unique: since each local region of hippocampus receives input from 2 to 3 modules (Witter and Groenewegen, 1984; Amaral and Witter, 1989; Witter and Amaral, 1991; Witter et al., 2000; Honda et al., 2012), the range over which any field arrangement is realizable is at most 2–3 times the typical grid period. By contrast, the total range of locations over which the grid inputs provide unique codes scales as the product of the periods. The result implies that once field arrangements are freely chosen in a small region, they impose strong constraints on a much larger overall region and across environments. We explore this implication in more detail below.

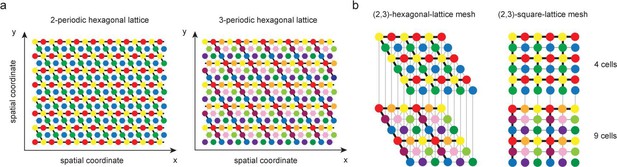

Generalization to higher dimensions

We have already argued that our counting arguments hold for realistic tuning curve shapes with graded activity profiles. This follows from the fact that convolution of the grid-like codes with appropriate smoothing kernels does not change the general geometric arrangement of codewords relative to each other as these convolution operations preserve within-module permutation symmetries and across-module independence in the code. We have also shown that the contiguous-separating capacity results apply to real-valued grid periods with dense phase encodings within each module.

Here, we describe the generalization to different spatial dimensions. Consider a -dimensional grid-like code consisting of cells in the mth module to produce a one-hot phase code for (discrete) positions along each dimension (Figure 6E). Since the counting results rely only on the existence of a modular-one-hot code and not any mapping from real spaces to coding states, this code across multiple modules is equivalent to a modular-one-hot coding for states, with modules of size each. All the counting results from before therefore hold, with the simple substitution in the various formulae.

The contiguous-separating capacity in -dimensions is defined as the maximum volume over which all field arrangements are realizable. Like the 1D separating capacity results, this volume depends upon the mapping of physical space to grid-like codes. We are able to show that for grid modules with periods the generalized separating capacity is (see Section B.4; Figure 6F). This result follows from essentially the same reasoning as for 1D environments, but with the use of -dimensional phase-shift operators.

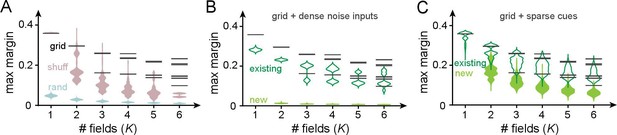

Robustness of field arrangements to noise and nongrid inputs

An important quality of field arrangements that is neglected when merely counting the number of realizable arrangements or determining the separating capacity is robustness: these computations consider all realizable field arrangements, but field arrangements are practically useful only if they are robust so that small amounts of perturbation or noise in the inputs or weights do not render them unrealizable. Above, we showed that grid-like codes enable many dichotomies despite being structurally constrained, but that random analog-valued codes as well as more hierarchical codes permit even more dichotomies. Here, we show that the dichotomies realized by grid codes are substantially more robust to noise and thus more stable.

The robustness of a realizable dichotomy in a perceptron is given by its margin: for a given linear decision boundary, the margin is the smallest datapoint-boundary distance for each class, summed for the two classes. The maximum margin is the largest achievable margin for that dataset. The larger the maximum margin, the more robust the classification. We thus compare maximum margins (herein simply referred to as margins) across place field arrangements, when the inputs are grid-like or not.

Perceptron margins can be computed using quadratic programming on linear support vector machines (Platt, 1998). We numerically solve this problem for three types of input codes (permitting a nonzero threshold and imposing no weight constraints): the grid-like code ; the shuffled grid-like code – a row- and column-shuffled version of the grid-like code that breaks its modular structure; and the random code of uniformly distributed random inputs (Figure 7). To make distance comparisons meaningful across codes, all patterns (columns) involve the same number of neurons (dimension), have the same total activity level (unity L1 norm), and the number of input patterns is the same across codes, and chosen to equal , the full range of the corresponding grid-like code. To compute margins, we consider only the realizable dichotomies on these patterns.

Robustness of place field arrangements to noise and nongrid inputs.

In (A–C), grid periods are ; the number of input patterns is set to for all input codes. Input patterns are normalized to have unity L1 norm in all cases. Maximum margins are determined by using SVC in scikit-learn (Pedregosa et al., 2011) (with thresholds and no weight constraints). (A) Black bars: the maximum margins of all realizable arrangements with grid-like inputs (bars have high multiplicity: across the very large number of realizable field arrangements, the set of distinct maximum margins is small and discrete because of the regular geometric structure of the grid-like code). Pink: margins for shuffled grid inputs that break the code’s modularity (shuffling neurons across modules for each pattern; 10 shuffles per and sampling 1000 realizable field arrangements per shuffle). Blue: margins for random inputs in general position (inputs sampled i.i.d. uniformly from ; 10 realizations of a random matrix per , 1000 realizable field arrangements sampled per realization). (B) Effect of noise on margins. We added dense noise inputs (100 non-negative i.i.d. random inputs at each location) to the place cell, in addition to the 74 grid-like inputs. (The expected value of each random input was 20% of the population mean of the grid inputs; thus, the summed random input was on average the size of the summed grid input.) Black: noise-free margins as in (A). Empty green violins: margins of existing field arrangements modestly shrink in size. Solid green violins: margins of some newly created field arrangements: these are small and thus unstable. (C) Effect of sparse spatial inputs (plots as in C). (We added 100 sparse inputs per location; each sparse input had fields placed randomly across the full range , so that the summed sparse input was on average the size of the summed grid input. The combined grid and nongrid input at each location was normalized to 1.)

The margins of all realizable place field arrangements with grid-like inputs are shown in Figure 7A (black); the margin values for all arrangements are discretized because of the geometric arrangements of the inputs, and each black bar has a very high multiplicity. The grid-like code produces much larger-margin field arrangements than shuffled versions of the same code and random codes (Figure 7A, pink and blue). The higher margins of the grid-like compared to the shuffled grid-like code show that it is the structured geometry and modular nature of the code that produce well-separated patterns in the input space (Figure 4B) and create wide margins and field stability. In other words, place field arrangements formed by grid inputs, though smaller in number than arrangements with differently coded inputs, should be more robust and stable against potential noise in neural activations or weights.

Next, we directly consider how different kinds of nongrid inputs, driving place cells in conjunction with grid-like inputs, affect our results on place field robustness. We examine two distinct types of added nongrid input: (1) spatially dense noise that is meant to model sources of uncontrolled variation in inputs to the cell and (2) spatially sparse and reliable cues meant to model spatial information from external landmarks.

After the addition of dense noise, previously realizable grid-driven place field arrangements remain realizable and their margins, though somewhat lowered, remain relatively large (Figure 7B, empty green violins). In other words, grid-driven place field arrangements are robust to small, dense, and spatially unreliable inputs, as expected given their large margins. Note that because the addition of dense i.i.d. noise to grid-like input patterns pushes them toward general position, and general-position inputs enable more realizable arrangements, the noise-added versions of grid-like inputs also give rise to some newly realizable field arrangements (Figure 7B, full green violins). However, as with arrangements driven purely by random inputs, these new arrangements have small margins and are relatively not robust. Moreover, since by definition noise inputs are assumed to be spatially unreliable, the newly realizable arrangements will not persist across trials.

Next, the addition of sparse spatial inputs (similar to the one-hot codes of Table 1, though the sparse inputs here are nearly but not strictly orthogonal) leaves previous field arrangements largely unchanged and their margins substantially unmodified (Figure 7C, empty green violins). In addition, a few more field arrangements become realizable and these new arrangements also have large margins (Figure 7C, full green violins). Thus, sufficiently sparse spatial cues can drive additional stable place fields that augment the grid-driven scaffold without substantially modifying its structure. Plasticity in weights from these sparse cue inputs can drive the learning of new fields without destabilizing existing field arrangements.

In sum, grid-driven place arrangements are highly robust to noise. Combining grid-cell drive with cue-driven inputs can produce robust maps that combine internal scaffolds with external cues.

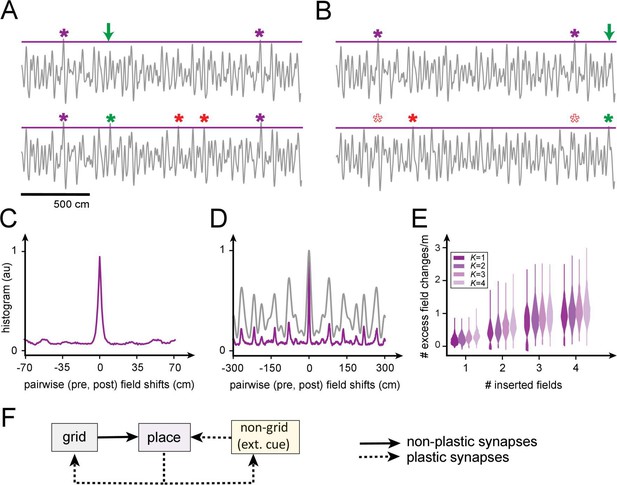

High volatility of field arrangements with grid input plasticity

Our results on the fraction of realizable place field arrangements and on place-cell-separating capacity with grid-like inputs imply that place cells have highly restricted flexibility in laying down place fields (without direct drive from external spatially informative cues) over distances greater than , the sum of the input grid module periods. Selecting an arrangement of fields over this range then constrains the choices that can be made over all remaining space in the same environment and across environments. Conversely, changing the field arrangement in any space by altering the grid-place weights should affect field arrangements everywhere.

We examine this question quantitatively by constructing realizable K-field arrangements (with grid-like responses generated as 1D slices through 2D grids [Yoon et al., 2016]), then attempting to insert one or a few new fields (Figure 8A,B). Inserting even a single field at a randomly chosen location through Hebbian plasticity in the grid-place weights tends to produce new additional fields at uncontrolled locations, and also leads to the disappearance of existing fields (Figure 8A,B).

Predicted volatility of place field arrangements.

(A) Top: original field arrangement over a 20 m space (gray line: summed inputs to place cell; purple stars: original field locations; green arrow: location where new field will be induced by Hebbian plasticity in grid-place weights). Bottom: after induction of the new field (green star), two new uncontrolled fields appear (red stars). (B) Similar to (A): the insertion of a new field at a random location (green star) leads to one uncontrolled new field (red star) and the loss of two original fields (empty red stars). (C) Histogram of changes, after single-field insertion, in pairwise inter-field intervals (spacings): the primary off-target effect of field insertion is for other fields to appear or disappear, but existing fields do not tend to move. (D) A spatially extended version of (C) (purple), together with the (vertically rescaled) autocorrelation of the grid inputs to the cell (gray): new fields tend to appear at spacings corresponding to peaks in the input autocorrelation function. (E) Sum of uncontrolled field insertions or deletions per meter, in response to inserted fields when starting with a K-field arrangement over 20 m. (F) High place field volatility resulting from plasticity in the grid-to-place synapses suggests the possibility that grid-place weights might be relatively rigid (nonplastic).

Interestingly, though field insertion affects existing arrangements through the uncontrolled appearance or disappearance of other fields, it does not tend to produce local horizontal displacements of existing fields (Figure 8C): fields that persist retain their firing locations or they disappear entirely, consistent with the surprising finding of a similar effect in experiments (Ziv et al., 2013).

The locations of fields, including of uncontrolled field additions, are well-predicted by the structure (autocorrelation) of that cell’s grid inputs (Figure 8D). This multi-peaked autocorrelation function, with large separations between the tallest peaks, reflects the multi-periodic nature of the grid code and explains why fields tend to appear or disappear at remote locations rather than shifting locally: modest weight changes in the grid-like inputs modestly alter the heights of the peaks, so that some of the well-separated tall peaks fall below threshold for activation while others rise above.

Quantitatively, insertion of a single field at an arbitrary location in a 20 m span grid-place weight plasticity results in the insertion or deletion, on average, of uncontrolled fields per meter. The insertion of four fields anywhere over 20 m results in an average of one uncontrolled field per meter (Figure 8E).

Thus, if a place cell were to add a field in a new environment or within a large single environment by modifying the grid-place weights, our results imply that it is extremely likely that this learning will alter the original grid-cell-driven field arrangements (scaffold). By contrast, adding fields that are driven by spatially specific external cues, though plasticity in the cue input-to-place cell synapses, may not affect field arrangements elsewhere if the cues are sufficiently sparse (unique); in this case, the added field would be a ‘sensory’ field rather than an internally generated or ‘mnemonic’ one.

In sum, the small separating capacity of place cells according to our model may provide one explanation for the high volatility of the place code across tens of days (Ziv et al., 2013) if grid-place weights are subject to any plasticity over this timescale. Alternatively, to account for the stability of spatial representations over shorter timescales, our results suggest that external cue-driven inputs to place cells can be plastic but the grid-place weights, and correspondingly, the internal scaffold, may be fixed rather than plastic (Figure 8F). In experiments that induce the formation of a new place field through intracellular current injection (Bittner et al., 2015), it is notable that the precise location of the new field was not under experimental control: potentially, an induced field might only be able to form where an underlying (near-threshold) grid scaffold peak already exists to help support it, and the observed long plasticity window could enable place cells to associate a plasticity-inducing cue with a nearby scaffold peak.

This alternative is consistent with the finding that entorhinal-hippocamapal connections stabilize long-term spatial and temporal memory (Brun et al., 2008; Brun et al., 2002; Suh et al., 2011).

Finally, we note that the robustness of place field arrangements obtained with grid-like inputs is not inconsistent with the volatility of field arrangements to the addition or deletion of new fields through grid-place weight plasticity. Grid-driven place field arrangements are robust to random i.i.d. noise in the inputs and weights, as well as the addition of nongrid sparse inputs. On the other hand, the volatility results involve associative plasticity that induces highly nonrandom weight changes that are large enough to drive constructive interference in the inputs to add a new field at a specific location. This nonrandom perturbation, applied to the distributed and globally active grid inputs, results in global output changes.

Discussion

Grid-driven hippocampal scaffolds provide a large representational space for spatial mapping

We showed that when driven by grid-like inputs, place cells can generate a spatial response scaffold that is influenced by the structural constraints of the grid-like inputs. Because of the richness of their grid-like inputs, individual place cells can generate a large library of spatial responses; however, these responses are also strongly structured so that the realizable spatial responses are a vanishingly small fraction of all spatial responses over the range where the grid inputs are unique. However, realizable spatial field arrangements are robust, and place cells can then ‘hang’ external sensory cues onto the spatial scaffold by associative learning to form distinct maps spatial maps for multiple environments. Note that our results apply equally well to the situation where grid states are incremented based on motion through arbitrary Euclidean spaces, not just spatial ones (Killian et al., 2012; Constantinescu et al., 2016; Aronov et al., 2017; Klukas et al., 2020).

Summary of mathematical results

Mathematically, formulating the problem of place field arrangements as a perceptron problem led us to examine the realizable (linearly separable) dichotomies of patterns that lie not in general position but on the vertices of convex regular polytopes, thus extending Cover’s results to define capacity for a case with geometrically structured inputs (Cover, 1965). Input configurations not in general position complicate the counting of linearly separable dichotomies. For instance, counting the number of linearly separable Boolean functions, which is precisely the problem of counting the linearly separable dichotomies on the hypercube, is NP-hard (Peled and Simeone, 1985; Hegedüs and Megiddo, 1996).

We showed that the geometry of grid-cell inputs is a convex polytope, given by the orthogonal product of simplices whose dimensions are set by the period of each grid module divided by the resolution. Grid-like codes are a special case of modular-one-hot codes, consisting of a population divided into modules with only one active cell (group) at a time per module.

Exploiting the symmetries of modular-one-hot codes allowed us to characterize and enumerate the realizable K-field arrangements for small fixed . Our analyses relied on combinatorial objects called Young diagrams (Fulton and Fulton, 1997). For the special case of modules, we expressed the number of realizable field arrangements exactly as a poly-Bernoulli number (Kaneko, 1997). Note that with random inputs, by contrast, it is not well-posed to count the number of realizable K-field arrangements when is fixed since the solution will depend on the specific configuration of input patterns. While we have considered two extreme cases analytically, one with no constraints on place field sparsity and the other with very few fields, it remains an outstanding question of interest to examine the case of sparse but not ultra-sparse field arrangements in which the number of fields is proportional to the full range, with a constant small prefactor (Itskov and Abbott, 2008). Finding results in this regime would involve restricting our count of all possible Young diagrams to a subset with a fixed filled-in area (purple area in Figure 5). This constraint makes the counting problem significantly harder.

We showed using analytical arguments that our results generalize to analog or graded tuning curves, real-valued periods, and dense phase representations per module. We also showed numerically that our qualitative results hold when considering deviations from the ideal, like the addition of noise in inputs and weights. The relatively large margins of the place field arrangements obtained with grid-like inputs make the code resistant to noise. In future work, it will be interesting to further explore the dependence of margins, and thus the robustness of the place field arrangements, on graded tuning curve shapes and the phase resolution per module.

Robustness, plasticity, and volatility

As described in the section on separating capacity, once grid-place weights are set over a relatively small space (about the size of the sum of the grid module periods), they set up a scaffold also outside of that space (within and across environments). Associating an external cue with this scaffold would involve updating the weights from the external sensory inputs to place cells that are close to or above threshold based on the existing scaffold. This does not require relearning grid-place weights and does not cause interference with previously learned maps.

By contrast, relearning the grid-place weights for insertion of another grid-driven field rearranges the overall scaffold, degrading previously learned maps (volatility: Ziv et al., 2013). If we consider a realizable field arrangement in a small local region of space then impose some desired field arrangement in a different local region of space through Hebbian learning, we might ask what the effect would be in the first region. Our results on field volatility provide an answer: if the first local region is of a size comparable to the sum of the place cell’s input grid periods, then any attempt to choose field locations in a different local region of space (e.g., a different environment) will almost surely have a global effect that will likely affect the arrangement of fields in the first region. A similar result might hold true if the first region is actually a disjoint set of local regions whose individual side lengths add up to the sum of input grid periods. This prediction might be consistent with the observed volatility of place fields over time even in familiar environments (Ziv et al., 2013).

Our volatility results alternatively raise the intriguing possibility that grid-place weights, and thus the scaffold, might be largely fixed and not especially plastic, with plasticity confined to the nongrid sensory cue-driven inputs and in the return projections from place to grid cells. The experiments of Rich et al., 2014 – in which place cells are recorded on a long track, the animal is then exposed to an extended version of the track, but the original fields do not shift – might be consistent with this alternative possibility. These are two rather strong and competing predictions that emerge from our model, each consistent with different pieces of data. It will be very interesting to characterize the nature of plasticity in the grid-to-place weights in the future.

Alternative models of spatial tuning in hippocampus

This work models place cells as feedforward-driven conjunctions between (sparse) external sensory cues and (dense) motion-based internal position estimates computed in grid cells and represented by multi-periodic spatial tuning curves. In considering place-cell responses as thresholded versions of their feedforward inputs including from grid cells, our model follows others in the literature that make similar assumptions (Hartley et al., 2000; Solstad et al., 2006; Sreenivasan and Fiete, 2011; Monaco et al., 2011; Cheng and Frank, 2011; Whittington et al., 2020). These models do not preclude the possibility that place cells feed back to correct grid-cell states, and some indeed incorporate such return projections (Sreenivasan and Fiete, 2011; Whittington et al., 2020; Agmon and Burak, 2020). It will be interesting in future work to analyze how such return projections affect the capacity of the combined system.

Our assumptions and model architecture are quite different from those of a complementary set of models, which take the view that grid-cell activity is derived from place cells (Kropff and Treves, 2008; Dordek et al., 2016; Stachenfeld et al., 2017). Our assumptions also contrast with a third set of models in which place-cell responses are assumed to emerge largely from locally recurrent weights within hippocampus (Tsodyks et al., 1996; Samsonovich and McNaughton, 1997; Battista and Monasson, 2020; Battaglia and Treves, 1998). One challenge for those models is in explaining how to generate stable place fields through velocity integration across multiple large environments: the capacity (number of fixed points) of many fully connected neural integrator models in the style of Hopfield networks tends to be small – scaling as states with neurons (Amit et al., 1985; Gardner, 1988; Abu-Mostafa and Jacques, 1985; Sompolinsky and Kanter, 1986; Samsonovich and McNaughton, 1997; Battaglia and Treves, 1998; Battista and Monasson, 2020; Monasson and Rosay, 2013) because of the absence of modular structures (Fiete et al., 2014; Sreenivasan and Fiete, 2011; Chaudhuri and Fiete, 2019; Mosheiff and Burak, 2019). There are at least two reasons why a capacity roughly equal to the number of place cells might be too small, even though the number of hippocampal cells is large: (1) a capacity equal to the number of place cells would be quickly saturated if used to tile 2D spaces: 106 states from 106 cells supply 103 states per dimension. Assuming conservatively a spatial resolution of 10 cm per state, this means no more than 100 m of coding capacity per linear dimension, with no excess coding states for error correction (Fiete et al., 2008; Sreenivasan and Fiete, 2011). (2) The hippocampus sits atop all sensory processing cortical hierarchies and is believed to play a key role in episodic memory in addition to spatial representation and memory. The number of potential cortical coding states is vastly larger than the number of place cells, suggesting that the number of hippocampal coding states should grow more rapidly than linearly in the number of neurons, which is possible with our grid-driven model but not with nonmodular Hopfield-like network models with pairwise weights between neurons.

Even if our assumption that place cells primarily derive their responses from grid-like inputs combined with external cue-derived nongrid inputs is correct, place cells may nevertheless deviate from our simple perceptron model if the place response involves additional layers of nonlinear processing. There are many ways in which this can happen: place cells are likely not entirely independent of each other, interacting through population-level competition and other recurrent interactions. Dendritic nonlinearities in place cells act as a hidden layer between grid-cell input and place cell firing (Poirazi and Mel, 2001; Polsky et al., 2004; Larkum et al., 2007; Spruston, 2008; Larkum et al., 2009; Harnett et al., 2012; Harnett et al., 2013; Stuart et al., 2016). Or, if we identify our model place cells as residing in CA1, then CA3 would serve as an intermediate and locally recurrent processing layer. In principle, hidden layers that generated a one-hot encoding for space from the grid-like inputs and then drove place cells as perceptrons would make all place field arrangements realizable. However, such an encoding would require a very large number of hidden units (equal to the full range of the grid code, while the grid code itself requires only the logarithm of this number). Additionally, place cells may exhibit richer input-output transformations than a simple pointwise nonlinearity, for instance, through cellular temporal dynamics including adaptation or persistent firing. Finding ways to include these effects in the analysis of place field arrangements is a promising and important direction for future study.

In sum, combining modular grid-like inputs produces a rich spatial scaffold of place fields, on which to associate external cues, much larger than possible with nonmodular recurrent dynamics within hippocampus. Nevertheless, the allowed states are strongly constrained by the geometry of the grid-cell drive. Further, our results suggest either high volatility in the place scaffold if grid-to-place-cell weights exhibit synaptic plasticity, or suggest the possibility that grid-to-place-cell weights might be random and fixed.

Numerical methods

Random, weight-constrained random, and shuffled inputs

Entries of the random input matrix are uniformly distributed variables in . To compare separating capacity (Figure 4) of random codes with the grid-like code, we consider matrices of the same input dimension (number of neurons) as the grid-cell matrix, or alternatively of the same rank as the grid-cell matrix, then use Cover’s theorem to count the realizable dichotomies (Cover, 1965). Weight-constrained random inputs (Figure 4B–D) are random inputs with non-negative weights imposed during training.

To compare margins (Figure 7), we use matrices with the same input dimension and number of patterns. As margins scale linearly with the norm of the patterns, to keep comparisons fair the input columns (patterns) are normalized to have unity L1 norm.

Nongrid inputs