Abstract

In the present study, we investigated the effect of initial-consonant intensity on lexical decisions. Amplification was selectively applied to the initial consonant of monosyllabic words. In Experiment 1, young adults with normal hearing completed an auditory lexical decision task with words that either had the natural or amplified initial consonant. The results demonstrated faster reaction times for amplified words when listeners randomly heard words spoken by two unfamiliar talkers. The same pattern of results was found when comparing words in which the initial consonant was naturally higher in intensity than the low-intensity consonants, across all amplification conditions. In Experiment 2, listeners were familiarized with the talkers and tested on each talker in separate blocks, to minimize talker uncertainty. The effect of initial-consonant intensity was reversed, with faster reaction times being obtained for natural than for amplified consonants. In Experiment 3, nonlinguistic processing of the amplitude envelope was assessed using noise modulated by the word envelope. The results again demonstrated faster reaction times for natural than for amplified words. Across all experiments, the results suggest that the acoustic–phonetic structure of the word influences the speed of lexical decisions and interacts with the familiarity and predictability of the talker. In unfamiliar and less-predictable listening contexts, initial-consonant amplification increases lexical decision speed, even if sufficient audibility is available without amplification. In familiar contexts with adequate audibility, an acoustic match of the stimulus with the stored mental representation of the word is more important, possibly along with general auditory properties related to loudness perception.

The neighborhood activation model has established that the speed and accuracy of word recognition are influenced by properties of the lexicon, such as the frequency of a word’s occurrence in the language and the number of phonologically similar “neighbors” (Luce & Pisoni, 1998). Predictions from this model are based on the strength and number of competitors activated during the lexical search (i.e., the neighborhood). Exemplar theory suggests that the full robustness (e.g., lexical and indexical cues) of the stimulus, the spoken word in this case, is stored in memory (Goldinger, 1998). Therefore, mental representations include phonetic and supralinguistic details of specific tokens, such as indexical characteristics that could be used to identify the talker. Modifying the acoustic properties of a target word from its natural production, as occurs during the spectral shaping of hearing aids in which some frequencies are amplified more than others, may lead to a less successful (i.e., slower) lexical search due to an acoustic mismatch between the stimulus and the stored representation. Such an acoustic mismatch is explicitly coded in the Ease of Language Understanding model (ELU; Rönnberg, 2003; Rönnberg et al., 2013), which predicts greater recruitment of cognitive resources for unfamiliar acoustic modifications, such as the amplitude compression of hearing aids (Foo, Rudner, Rönnberg & Lunner, 2007; Rudner, Foo, Rönnberg, & Lunner, 2009). In contrast, certain acoustic modifications, such as amplification, may improve bottom-up acoustic processing of the stimulus prior to the lexical search, due to improved audibility. Amplification may increase the speed at which the stimulus is detected, giving later lexical processing a “head start.” It is clear that the lexical search is highly sensitive to phonetic manipulations of the input (see Tanenhaus, Magnuson, Dahan, & Chambers, 2000). However, subphonetic acoustic interactions with the lexical search (such as those resulting from amplification) have not been fully investigated, particularly as they relate to the speed of lexical decisions. The goal of the present study was to investigate the interaction between acoustic properties of the speech stimulus and the speed of lexical decisions.

Most consonant productions are characterized by high-frequency, low-intensity acoustic properties (Stevens, 2002). As listeners age, typical declines in hearing acuity reduce hearing sensitivity in the high-frequency range most important for the detection of consonant cues. Furthermore, typical broadband background sources of noise easily mask consonants, even for normal-hearing listeners. In multitalker babble, the consonant–vowel ratio (CVR) is one predictor for consonant confusion patterns (Gordon-Salant, 1985). Gordon-Salant (1985) found that as the level of the masker increased, recognition accuracy for relatively weak consonants decreased. One signal-processing strategy for improving consonant recognition has been to apply differential amplification to consonant cues. This creates a modified speech signal that greatly enhances the consonant but does not appreciably impact the overall perceived loudness of the speech sample, since the vowel contributes most to the perceived loudness of consonant–vowel–consonant words (Montgomery, Prosek, Walden, & Cord, 1987). Thus, improved intelligibility via consonant amplification can be achieved with minimal effect on loudness perception. For sentences, selective amplification of consonants results in small but significant improvements in speech intelligibility, particularly at poor signal-to-noise ratios (Saripella, Loizou, Thibodeau, & Alford, 2011). Furthermore, in contrast to “conversational” speech, an increase in CVR for nonsense sentences has been observed to correspond with “clear” speech (Picheny, Durlach, & Braida, 1986), which is associated with better speech intelligibility (Picheny, Durlach, & Braida, 1985). The importance of the acoustic signal is supported by recent evidence that lexical decision reaction times (RTs) are influenced both by acoustic modifications produced in real communicative contexts (Scarborough & Zellou, 2013) and by the amount and type of acoustical distortion of the preceding context (Goy, Pelletier, Coletta, & Pichora-Fuller, 2013).

The acoustic consequence of consonant amplification is an alteration of the natural amplitude variations in the temporal envelope of speech. Natural intensity fluctuations are most prominent between consonant and vowel levels due to the naturally low intensity of the consonants relative to the vowels. This temporal amplitude envelope of speech has been demonstrated to be essential for speech recognition and is sufficient for quiet listening, in the absence of prominent spectral cues (Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995). Indeed, preservation of the amplitude envelope by maintaining amplitude differences across consonants and vowels may be an important contributor to speech intelligibility, particularly for sentences (Fogerty, 2013; Fogerty & Humes, 2012). Furthermore, measures that index the modification of the amplitude envelope by clinical amplification strategies help to predict consonant confusions (Souza & Gallun, 2010). Therefore, although amplification of the consonant may improve audibility in background noise when there is filtering by a hearing-impaired system, the abnormal amplitude envelope may lead to a weaker representation for lexical comparison. Studies of selective amplification have demonstrated improvements in consonant (Gordon-Salant, 1986) and word (Montgomery & Edge, 1988) recognition. However, although the eventual lexical item may be selected from its competitors for better overall speech recognition, this may come at a cost: It may be that the overall lexical search and processing is delayed due to a greater acoustic mismatch between the modified stimulus and the stored exemplars in memory. The present study was specifically designed to address this issue.

According to Gordon-Salant (1986), increasing consonant amplitudes can improve speech intelligibility under unfavorable listening conditions. Gordon-Salant tested nonsense syllable recognition in the presence of twelve-talker babble presented at a 6-dB speech-to-background ratio. The CVR was increased through amplification of the consonant by 10 dB relative to the vowel level. This resulted in similar average intensity levels for consonants and vowels (interquartile range = –2 to 5 dB CVR) averaged across consonants in the three vowel contexts tested. The results demonstrated that younger and older listeners performed better with the modified syllables across manner, place, and voicing features.

Montgomery and Edge (1988) also found improvements in word recognition when the level of the consonant was equated to the level of the vowel (0 dB CVR). However, this effect was limited to speech presented at a conversational level (65 dB SPL). Testing conducted at 95 dB SPL with hearing-impaired listeners resulted in no significant effect of consonant amplification, presumably due to already sufficient audibility.

These results suggest that increasing the CVR is beneficial to speech recognition. However, such an effect may be most related to audibility, since improvement of the CVR while maintaining consonant audibility does not result in improved performance (Sammath, Dorman, & Stearns, 1999). In fact, an increase in CVR, by decreasing the level of the vowel relative to the consonant, may even result in poorer performance at unfavorable signal-to-noise ratios (Sammath et al., 1999). Thus, reduction of the temporal envelope amplitude through CVR manipulation may be detrimental in noisy listening conditions, although it may depend on the particular acoustic characteristics of the consonant (see Freyman, Nerbonne, & Cote, 1991). Indeed, Hickson and Byrne (1997) suggested that the CVR may actually be an important cue for some consonants, resulting in differential effects of CVR modification on different consonants.

The finding that recognition benefits from CVR are related to consonant audibility argues that bottom-up processing of the stimulus facilitates the lexical search when the audibility of the consonant is improved, not when the CVR is improved per se. Moreover, further results suggest that the corresponding reduction in temporal envelope modulation, which results in abnormal temporal properties of the stimulus, is not the cause of the improvement. Indeed, maintaining resolution of temporal modulations after amplification is important for consonant identification (Souza & Gallun, 2010). How increased audibility with decreased temporal modulation resolution impacts the lexical search is not clear.

In the present experiment, we tested the speed of lexical decisions by modifying the CVR through consonant amplification. Although consonant amplification benefits eventual syllable or word identification, it may be that the reduction in temporal modulation cues results in a longer time spent processing lexical information. However, if audibility improves the bottom-up, sensory processing of the stimulus prior to the lexical search, the lexical decision speed may be improved by the signal processing. The present experiments tested these hypotheses.

This investigation is significant, since processing speed is frequently cited as a secondary contributor to the poorer performance of older adults after audibility has been restored, such as through spectral shaping (e.g., Fitzgibbons & Gordon-Salant, 1998; Fitzgibbons, Gordon-Salant, & Friedman, 2006; Humes, Burk, Coughlin, Busey, & Strauser, 2007; Humes & Christopherson, 1991; Pichora-Fuller, 2003). Although the processing of rapid temporal events for many older adults does appear to be impaired (e.g., Fitzgibbons & Gordon-Salant, 1998; Fitzgibbons et al., 2006; Fogerty, Humes, & Kewley-Port, 2010), part of the slower processing in speech recognition tasks may result from how listeners retrieve lexical items from the modified, spectrally shaped acoustic input, in addition to reductions in listeners’ processing speeds.

The importance of this work is in identifying how fine-grained acoustic modifications may impact the speed of lexical processing. This has significant implications for general theories of spoken word recognition as well as for the amplification strategies of hearing aids. Significant interaction between amplification strategies and cognitive processing has already begun to be delineated (e.g., Rudner et al., 2009). This work will help to expand this discussion to how novel auditory input due to amplification may specifically impact lexical processing.

Experiment 1

Experiment 1 was designed to assess the effects of various levels of initial-consonant amplification, relative to the vowel, on lexical decision speeds for monosyllabic words. This was accomplished by using an auditory lexical decision task with words and nonwords modified through initial-consonant amplification. One of two outcomes was predicted. If consonant amplification reduced RTs to the target word, then consonant amplification could be viewed as facilitatory. This would support the bottom-up, audibility hypothesis. However, consonant amplification could instead increase RTs, since the natural speech envelope of the target words was distorted by the amplification processing. That is, the natural amplitude modulations of speech were reduced at the initial portion of the word. Such an alternative finding would suggest that the acoustic mismatch between the stimulus and stored exemplars slows the lexical search. Thus, in this experiment we assessed the benefit of this amplification signal processing on lexical decisions. Furthermore, natural variations in consonant intensity were also investigated as predictors of lexical decision RTs.

Method

Participants

Forty listeners participated in the experiment for course credit (mean age = 23 years, SD = 4 years; one male). All listeners passed a hearing screen (thresholds ≤ 20 dB HL) at octave intervals from 250 to 8000 Hz (American National Standards Institute [ANSI], 2004) and were native speakers of American English. Listeners were randomly assigned to one of four consonant-amplification conditions (ten listeners each). These corresponded to amplifying the initial consonant by 0, 3, 6, or 12 dB relative to the original consonant level. Listeners in the 0-dB condition heard the unmodified speech recordings, whereas listeners in the 12-dB condition heard consonants maximally amplified. In this final condition, the consonant-to-vowel intensity ratio was near 0 dB (i.e., the peak level of the consonants was at the peak level of the vowels, on average across the total word list). Since the different experimental groups were presented with the same word list, lexical properties such as word frequency, density, and familiarity were identical across the experimental conditions.

Stimuli and design

Monosyllabic words and nonwords were recorded from two male talkers of general American English dialects. A total of 39 words were selected from each talker (78 words total), along with an equal number of nonwords, to be used in the present study. The final word list consisted of words with initial obstruent consonants (/b, d, g, p, t, k, z, v, ð, s, f, θ, ʃ, ʤ, ʧ/). The entire word list (i.e., across both talkers) was balanced for equal numbers of voiced and voiceless consonants, as well as plosive and fricative/affricate consonants. However, because some sounds occur in initial position more often than others, some consonants occurred more frequently in the list than other consonants. For example, /b/ occurs more frequently than /ð/. In addition, all words had uniqueness or discrimination points (Marslen-Wilson, 1984) at the end of the word.

The stimuli were processed according to one of four consonant-amplification conditions (i.e., 0, 3, 6, or 12 dB). For stimulus processing, two experimenters with acoustic–phonetic training identified by consensus the time point marking the end of the initial consonant. Obstruent/vowel boundaries were identified using time-aligned waveform and spectrogram displays following the phonetic segmentation rules of the Texas Instruments–Massachusetts Institute of Technology (TIMIT) corpus (Seneff & Zue, 1988; see also Li & Loizou, 2008). Segmentation used landmarks of acoustic change, such as highly salient and abrupt acoustic changes in the presence of aperiodicity, voicing, formant patterns, and signs of vocal tract constriction. Since only initial obstruent sounds were used, formant transitions were predominantly located within the vowel. Thus, amplification was predominantly limited to noise sources from consonant constriction. An independent expert in acoustic phonetics then analyzed the recordings separately. Differences in the boundary markings greater than 10 ms were reanalyzed by the three experimenters, and the final marking was determined by consensus. All boundary marks were placed at the nearest zero-crossing, to avoid the introduction of transients during consonant amplification. During signal processing, all of the stimuli were first equalized to the level measured in a 50-ms window centered at the vowel peak. That is, all stimuli were scaled to an equivalent peak amplitude for the vowel, which is largely responsible for loudness perception of isolated words (Montgomery et al., 1987). Next, the initial consonant was amplified in MATLAB according to the stimulus condition. Therefore, the level of the vowel was equivalent across all consonant-amplification conditions; only the level of the consonant varied. Figure 1 displays the waveforms and spectrograms for an example word processed according to the four amplification conditions.
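
The equalization and amplification steps described above can be sketched in a few lines. This is a minimal illustration in Python, not the MATLAB processing used in the study. The function names, the sample-index arguments for the vowel peak and the consonant/vowel boundary, and the target vowel level are all assumptions introduced for the example.

```python
import math

def rms(samples):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def equalize_to_vowel(signal, vowel_peak_idx, fs, target_rms, window_s=0.050):
    """Scale the whole word so that a 50-ms window centered on the
    vowel peak has the requested RMS level (target_rms is hypothetical)."""
    half = int(round(window_s * fs / 2))
    lo = max(0, vowel_peak_idx - half)
    hi = min(len(signal), vowel_peak_idx + half)
    scale = target_rms / rms(signal[lo:hi])
    return [x * scale for x in signal]

def amplify_initial_consonant(signal, boundary_idx, gain_db):
    """Amplify only the samples before the consonant/vowel boundary.
    The boundary is assumed to sit at a zero-crossing, so the gain step
    does not introduce an audible transient."""
    g = db_to_gain(gain_db)
    return [x * g for x in signal[:boundary_idx]] + list(signal[boundary_idx:])

# Toy word: a weak "consonant" followed by a stronger "vowel".
word = [0.1, -0.1] * 50 + [0.5, -0.5] * 50
conditions = {db: amplify_initial_consonant(word, 100, db) for db in (0, 3, 6, 12)}
```

Because the gain is applied only before the boundary, the vowel samples are identical in every condition, which mirrors the design constraint that only the consonant level varied.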

Procedure

Each participant was seated at an individual computer terminal within a sound-attenuating booth. Stimuli were presented to the listeners by E-Prime, using a standard auditory lexical decision paradigm. The interstimulus interval was 2 s. A fixation cross was displayed to participants for 1 s prior to presentation of the auditory stimulus, to alert them to the next stimulus. Participants were instructed to respond as quickly and accurately as possible, identifying whether or not the stimulus presented was a real word. Auditory stimuli were presented to the participant diotically using Sennheiser HD 280 headphones via a high-fidelity external sound card at a sampling rate of 22050 Hz. The presentation level was calibrated to 70 dB SPL on the basis of the average root-mean-square (RMS) level of the stimuli (with the stimuli being equated on the basis of the peak RMS of the vowel). The presentation order for words was fully randomized for all listeners.

Responses were recorded using a dedicated reaction-time response box with two large buttons for the two response alternatives. Participants responded using their dominant (right) hand. First they completed a practice block of 36 stimuli (18 words, 18 nonwords) for task familiarization, followed by the experimental test. Reaction times were calculated from the offset of the auditory presentation in order to control for differences in word durations across the stimuli.

Results and discussion

Reaction times were analyzed over the correct responses for the real-word stimuli. The average accuracy across all 40 listeners for correctly categorizing the 78 word stimuli as lexical items was 91 %. However, certain words had much lower accuracy rates across the listeners. To ensure that comparisons were made over word stimuli that were categorized as lexical items equally often in all amplification conditions, only stimuli that had at least 80 % accuracy across all listeners within each amplification group were selected for analysis. Only four words failed to meet this criterion for the natural, 0-dB condition. However, between 10 and 15 words (M = 12) across the three amplification groups resulted in less than 80 % of the group correctly categorizing the stimulus as a word. Words failing to meet this criterion in at least one group were removed from the analysis across all groups, ensuring that all groups were analyzed over equivalent word lists. Therefore, only words with high lexical decision accuracy were used in the subsequent analyses. This strict criterion resulted in a reduced set of 58 words. The reduced set maintained an equal number of plosive and fricative consonants, as well as equal numbers of items from both talkers. Observation of the data revealed that a few trials had excessively large RTs, characteristic of participant inattention to the task. Therefore, here and in subsequent experiments, outlier RTs that were 1.5 or more standard deviations from the mean across all listeners for each word trial were removed from the analysis. Missing data due to incorrect responses or outlier RTs accounted for less than 5 % of the cases (i.e., individual word responses across all listeners in the group). In addition, as is characteristic of RTs, the raw data were skewed and nonnormal. Therefore, a reciprocal transformation of the data was performed prior to analysis in order to meet the assumption of normality.
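
The outlier trimming and reciprocal transformation can be illustrated with a short Python sketch. It rests on two assumptions that the text does not spell out: the 1.5-SD cutoff is applied upward from the per-word mean (since the concern was excessively large RTs), and the reciprocal is expressed as 1000/RT so that values are in responses per second. The function names and the toy data are hypothetical.

```python
import statistics

def trim_outliers(rts_ms, criterion_sd=1.5):
    """Drop RTs at or above mean + criterion_sd * SD for one word,
    computed across listeners.

    Assumption: the cutoff is measured upward from the per-word mean.
    """
    if len(rts_ms) < 2:
        return list(rts_ms)
    sd = statistics.stdev(rts_ms)
    if sd == 0:
        return list(rts_ms)
    cutoff = statistics.mean(rts_ms) + criterion_sd * sd
    return [rt for rt in rts_ms if rt < cutoff]

def reciprocal_transform(rts_ms):
    """Map RTs in ms to speeds in 1/s, which reduces positive skew."""
    return [1000.0 / rt for rt in rts_ms]

# Toy RTs (ms) for one word across ten listeners; the last one is an outlier.
rts = [350, 360, 370, 380, 390, 400, 410, 420, 430, 1500]
kept = trim_outliers(rts)
speeds = reciprocal_transform(kept)
```

Note that after a reciprocal transformation larger values mean faster responses, so the direction of any group difference flips relative to raw RTs.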

The mean RTs for the four experimental groups are plotted in Fig. 2 for comparison. A one-way analysis of variance (ANOVA) was conducted across the four amplification groups. The between-group analysis demonstrated no significant differences among the groups, F(3, 36) = 1.4, p > .05. However, the overarching empirical question was whether any amplification resulted in significantly different RTs—that is, whether the 0-dB group, which received no consonant amplification, would perform differently from the other three amplification groups. A contrast comparing the original 0-dB group to the three amplification groups combined demonstrated a significant effect of amplification, t(36) = 2.3, p < .05. Independent-samples t tests were used to investigate planned comparisons between the amplification groups. The results demonstrated that even minimal consonant amplification (3 dB) resulted in significantly faster RTs than did stimuli that were not processed (0-dB condition), t(18) = –2.2, p < .05, Cohen’s d = 0.98 (0 dB: M = 429 ms, SD = 87 ms; 3 dB: M = 353 ms, SD = 64 ms). However, consistent with the initial ANOVA, no significant differences (p > .05) were obtained between the three conditions that amplified the consonant (3, 6, and 12 dB). Therefore, additional amplification beyond a minimal 3 dB did not result in faster lexical decisions for young normal-hearing listeners. In fact, subsequent additional amplification resulted in a trend toward increased (poorer) RTs, relative to 3-dB amplification [6 dB: M = 380 ms, SD = 83.7 ms; t(18) = 0.63, p > .05; 12 dB: M = 368 ms, SD = 101 ms; t(18) = 0.09, p > .05].
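
As a rough check on the size of the 0-dB versus 3-dB difference, Cohen's d can be computed from the reported group means and standard deviations. The Python sketch below uses the pooled-standard-deviation form of d and the raw millisecond values, both of which are assumptions: the text does not state which formula was used or whether d was computed on raw or transformed RTs. The function name is hypothetical.

```python
import math

def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)

# Reported summary statistics: 0 dB (M = 429, SD = 87) vs. 3 dB (M = 353, SD = 64),
# ten listeners per group.
d = cohens_d(429, 87, 10, 353, 64, 10)
```

Under these assumptions d comes out at about 1.0, in line with the large effect (d = 0.98) reported above; the small difference may reflect rounding of the summary values or the use of transformed data.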

The intrinsic intensity of the initial consonant was also investigated, and the results are displayed in Fig. 3a. The words were median split into two lists that started with either high-intensity initial consonants (62 % plosives, 56 % voiced) or low-intensity initial consonants (40 % plosives, 48 % voiced). A mixed-model ANOVA with initial-consonant intensity as the repeated measure and amplification as the between-subjects variable demonstrated a main effect for initial-consonant amplitude, F(1, 35) = 14.1, p < .001. No significant main effect of group or interaction with group was observed (p > .05). Low-intensity initial consonants resulted in longer (i.e., slower) RTs across all amplification conditions, t(38) = 3.9, p < .001, d = 0.3 (low intensity: M = 397 ms, SD = 94 ms; high intensity: M = 363 ms, SD = 86 ms). Therefore, the intrinsic amplitude properties of initial consonants, regardless of the amount of amplification, influence the speed of lexical decisions. Importantly, such differences in RTs are specific to the consonant level, since all words were equated in terms of the peak vowel level. Therefore, the level of the vowel could not have contributed to the performance differences.
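
A median split of this kind can be sketched as follows in Python. The word labels and intensity values are toy placeholders, not data from the study, and the handling of words that fall exactly at the median (assigned here to the low-intensity list) is an assumption, since the text does not describe it.

```python
import statistics

def median_split(intensity_by_word):
    """Split words into low- and high-intensity lists at the median
    initial-consonant level.

    Assumption: words exactly at the median go to the low list.
    """
    med = statistics.median(intensity_by_word.values())
    low = sorted(w for w, v in intensity_by_word.items() if v <= med)
    high = sorted(w for w, v in intensity_by_word.items() if v > med)
    return low, high

# Hypothetical consonant RMS levels in dB relative to the vowel peak.
toy_levels = {"w1": -30.0, "w2": -22.0, "w3": -18.0, "w4": -12.0}
low_words, high_words = median_split(toy_levels)
```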

Fig. 3. Mean reaction times are displayed for the 0-dB and 3-dB amplification conditions of Experiments 1 and 2. The results are displayed on the basis of a median split of the word list using the intrinsic initial consonant amplitude, as measured by the average root-mean-square intensity. Error bars represent standard errors of the means.

The results demonstrate that the acoustic properties of the word influence the speed of lexical decisions. Natural intensity differences that are intrinsic to the production of different consonants were sufficient to decrease RTs. Providing a small, 3-dB amplification to the initial consonant had a similar effect. These results appear to support the audibility hypothesis: Improved audibility through increased intensity and amplification facilitates lexical processing for unfamiliar talkers.

However, the impact of an acoustic mismatch with stored exemplars due to distortion of the amplitude envelope may be more prominent for familiar talkers. Therefore, Experiment 2 was conducted to investigate the effect of amplification when the talker is predictable and more familiar.

Experiment 2

Additional training was provided to a separate group of listeners prior to testing to determine the effect of additional familiarity and predictability of the talker. Improved performance is typically observed for low-uncertainty listening conditions (Kewley-Port, Watson, & Foyle, 1988; Mullennix, Pisoni, & Martin, 1989). Therefore, a further increase in the robustness of the facilitatory effect observed in Experiment 1 would be predicted if audibility continued to determine performance differences between the tasks. However, the familiarity and predictability of the talker could also increase the effect of any acoustic mismatch with stored exemplars, thereby hindering performance. Only two conditions were tested (natural 0-dB and 3-dB consonant amplification), because no difference among the three amplification conditions (3, 6, and 12 dB) was observed in Experiment 1.

Method

Participants

Twenty listeners (ten per condition) participated in Experiment 2 for course credit (mean age = 21 years, SD = 2.4 years; one male). All of the listeners passed a hearing screen (thresholds ≤ 20 dB HL) at octave intervals from 250 to 8000 Hz (ANSI, 2004) and were native speakers of American English. The listeners were randomly assigned to one of two consonant-amplification conditions: 0-dB or 3-dB amplification relative to the original consonant level.

Design and procedure

The same word lists and signal processing were used as in Experiment 1. The participants completed an extended training session under minimal-uncertainty conditions by using blocked presentation of the two talkers. Familiarization consisted of 32 nonexperimental words that were presented twice in a random sequence without feedback. Experimental testing was also blocked by talker to minimize stimulus uncertainty. Talker blocks were counterbalanced among the participants. Although words during the training session were presented twice, test words during the main experiment were presented only once, in a random order. The experimental setup, calibration, and procedures were identical to those in Experiment 1.

Results and discussion

As in Experiment 1, the data were analyzed for correct responses on the words correctly identified by more than 80 % of listeners in each group, which resulted in a list of 64 words. One listener in the 0-dB condition was removed from the analysis due to failure to respond to the stimuli and comply with the task.

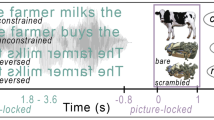

Figure 4 displays the mean RTs for the two listener groups in Experiment 2. After additional exposure, the unprocessed 0-dB condition (i.e., no consonant amplification) resulted in faster RTs than when the consonant was amplified, t(17) = 2.6, p < .05, d = 1.2 (0 dB: M = 298 ms, SD = 59 ms; 3 dB: M = 417 ms, SD = 137 ms).

Fig. 4. Mean results across listeners are displayed for the two different consonant-amplification conditions tested in Experiment 1 for uncertain talker presentations, in Experiment 2 for low-uncertainty blocked talker presentations, and in Experiment 3 for the unintelligible modulated-noise versions of the experimental words. The 0-dB amplification condition refers to the natural, unprocessed word. Error bars represent standard errors of the means.

As in Experiment 1, the intrinsic amplitude of the initial consonant was also investigated. From Fig. 3b, it can be observed that for both amplification conditions, faster RTs were obtained for low-intensity initial consonants. However, the size of this effect was small, and it reached significance only for the 3-dB amplification condition, t(9) = 3.6, p < .01, d = 0.3 (low intensity: M = 289 ms, SD = 66 ms; high intensity: M = 369 ms, SD = 72 ms). Although small, this effect is notable because it reverses the advantage for high-intensity consonants obtained in Experiment 1.

The results from Experiment 2 demonstrate a strong influence of talker familiarity and predictability, reversing the trends observed in Experiment 1. This reversal occurred even though the familiarization training was relatively short and used, for each talker, a different set of words from those used during testing. If talker familiarization and procedural learning of the task were the underlying cause of this reversal, then a similar finding should be observed in Experiment 1 once the listeners had some familiarization with the talkers and the task (i.e., by the end of the test session). To examine these possible contributions to the results from Experiment 1, RTs were calculated over the first half and second half of words presented during testing for the 0-dB and 3-dB groups. Note that because word order was randomized, this resulted in a random selection of words for each listener in the two sublists. A mixed-model ANOVA was performed over the transformed data, with list half as the repeated measure and amplification as a between-group measure. The results demonstrated a main effect only for group, F(1, 18) = 6.7, p < .05. No significant effect of list half, F(1, 18) = 2.9, p > .05, or interaction, F(1, 18) = 0.12, p > .05, was found. For Experiment 1, amplification resulted in significantly faster RTs, regardless of the amount of exposure. These results suggest that familiarization with the talker and the task did not significantly influence lexical decision RTs. The only other difference between Experiments 1 and 2 was blocked talker presentation. Thus, minimizing talker uncertainty through the blocked talker presentation appears to have reversed the effect of consonant amplification. The combined results of these two experiments suggest that selective amplification facilitates lexical decisions during high-uncertainty listening conditions and interferes with them during low-uncertainty listening conditions.

In addition, comparison of the RTs for Experiments 1 and 2 suggests that the blocked presentation may have had the greatest effect on the natural condition, not the amplified condition. Although comparison between these two experiments is difficult, because different listeners participated in each, the qualitative comparison in Fig. 4 shows the largest change for the 0-dB condition. Thus, rather than slowing RTs for the amplified condition due to an acoustic mismatch with stored representations, the effect of Experiment 2 may have been to facilitate RTs for the natural condition. This is consistent with the facilitatory effect of minimizing talker uncertainty. However, it is puzzling why faster RTs would be found for intrinsically weak initial consonants rather than for high-intensity consonants, as was found in Experiment 1. A bias toward stimulus naturalness does not predict performance differences for naturally produced words with consonants at intrinsically different levels. It may be that less distortion of the amplitude envelope occurred for the low-intensity initial consonants when 3-dB amplification was provided (i.e., amplitude modulation between consonants and vowels was still preserved), but this does not explain why the same trend, albeit not reaching significance, was also observed for the natural stimuli. Experiment 3 was conducted to explore the possible peripheral contributions to processing that could underlie some of these differences, specifically differences in the amplitude envelope.

Experiment 3

Increased initial-consonant amplitude may have led to better consonant identification, and therefore faster RTs, in Experiment 1. However, it may also be that the faster RTs were simply the result of faster word onset detection, independent of actual consonant identification, due to the increased onset amplitude. In addition, faster RTs for words with low-intensity initial consonants in Experiment 2 could possibly be explained by peripheral processing of the amplitude envelope, since ramped amplitude envelopes are perceived to be longer and louder than damped envelopes (Ries, Schlauch, & DiGiovanni, 2008). Therefore, Experiment 3 was designed to assess the effect of the modified onset amplitude envelope for stimulus detection. RTs to stimulus detection were recorded in response to nonspeech stimuli matching the broadband speech amplitude envelope. Thus, the contribution of the amplitude envelope to RTs could be explored, independent of actual lexical processing.

Method

Participants

The same listeners from Experiment 2 completed Experiment 3. The listeners were assigned to the same amplification condition that they had been tested on previously.

Stimuli and design

First, a noise was created to match the power spectrum of each target word. Next, the amplitude envelope of the target word was extracted in a single, wide band by half-wave rectification, followed by low-pass filtering at 50 Hz using a 6th-order Butterworth filter. Finally, the matching noise was modulated by the extracted envelope and scaled to the original RMS level of the target word. This processing created a signal-correlated noise version of the original speech waveform (Bashford, Warren, & Brown, 1996). This type of signal has the advantage of providing some of the physical properties of the speech signal, such as speech rhythm, without providing any intelligible speech sounds (Schroeder, 1968). Although signal-correlated noise was originally defined by randomly reversing the polarity of individual samples (Schroeder, 1968), the modulation of the noise source by the broadband speech envelope, as created here, produces a signal-correlated noise that results in sentence recognition near 0 % (Shannon et al., 1995). Previous studies have used speech-modulated noise as a nonspeech signal to investigate signals that contain the amplitude and rhythmic properties of speech without the linguistic information (e.g., Bashford et al., 1996; Davis & Johnsrude, 2003).
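The processing chain described above can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes NumPy and SciPy, assumes that the spectrum-matched noise is obtained by randomizing the phase of the word's long-term spectrum, and uses hypothetical function names (`spectrum_matched_noise`, `broadband_envelope`, `signal_correlated_noise`). Only the half-wave rectification, the 50-Hz 6th-order Butterworth low-pass filter, and the RMS scaling are taken from the description.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def spectrum_matched_noise(word, rng):
    """Noise with the word's magnitude spectrum and random phase (assumed method)."""
    spectrum = np.fft.rfft(word)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.shape)
    randomized = np.abs(spectrum) * np.exp(1j * phase)
    randomized[0] = 0.0  # drop the DC component
    return np.fft.irfft(randomized, n=len(word))


def broadband_envelope(word, fs, cutoff_hz=50.0, order=6):
    """Half-wave rectify, then low-pass filter with a Butterworth filter."""
    rectified = np.maximum(word, 0.0)
    sos = butter(order, cutoff_hz, btype="low", fs=fs, output="sos")
    envelope = sosfilt(sos, rectified)
    # Clip small negative values caused by filter ringing.
    return np.maximum(envelope, 0.0)


def rms(x):
    """Root-mean-square level of a signal."""
    return float(np.sqrt(np.mean(np.square(x))))


def signal_correlated_noise(word, fs, seed=0):
    """Modulate spectrum-matched noise by the word envelope, matched in RMS."""
    rng = np.random.default_rng(seed)
    noise = spectrum_matched_noise(word, rng)
    modulated = noise * broadband_envelope(word, fs)
    return modulated * (rms(word) / rms(modulated))
```

Calling `signal_correlated_noise(word, fs)` on a mono waveform returns a noise signal of the same length and RMS level as the word. The causal `sosfilt` call introduces a small envelope delay; whether the original processing compensated for this is not stated here.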

Two sets of modulated-noise stimuli were created to match the spectrum and amplitude envelope of the 0-dB and 3-dB consonant-amplified word stimuli. Figure 5 displays the waveforms and spectrograms for modulated-noise stimuli created from an example word. The original stimulus is also provided in panel a, for comparison. This processing resulted in a stimulus that was modulated in amplitude over time like the original word, but was unintelligible. Differences in the amplitude envelopes across stimuli were due to the random amplitude fluctuations of the noise source.

Fig. 5 Waveforms and spectrograms for an example word. a The original, unprocessed word “think” is displayed for comparison. b The modulated-noise version of the word used in Experiment 3 with 0-dB amplification. c The modulated-noise version of the word with 3-dB amplification. For Experiment 3, listeners were tested on either 0-dB or 3-dB initial-consonant amplification (relative to the original consonant level), with noise modulated by the wide-band speech envelope.
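For reference, a 3-dB increase in level corresponds to multiplying the waveform amplitude by 10^(3/20), or about 1.41. The sketch below illustrates selective amplification of an initial segment under simple assumptions: the consonant boundary is given as a sample index (`consonant_end`, a hypothetical parameter), the gain is applied abruptly at that boundary, and no renormalization of the overall level is performed. How the boundary was located and how the gain was applied in the actual stimuli is not specified in this section.

```python
import numpy as np


def db_to_gain(gain_db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def amplify_initial_consonant(word, consonant_end, gain_db):
    """Scale samples before `consonant_end` by `gain_db`; leave the rest unchanged."""
    out = np.asarray(word, dtype=float).copy()
    out[:consonant_end] *= db_to_gain(gain_db)
    return out
```

With `gain_db=0.0` the waveform is returned unchanged, which corresponds to the natural (0-dB) condition.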

Procedure

The experimental setup was identical to that in the previous experiments. Participants completed a nonspeech, stimulus detection task. The modulated-noise stimuli were presented at 70 dB SPL. Participants were instructed to press the button as soon as they detected the stimulus. To avoid expectation of the stimulus onset, the interstimulus interval was randomly roved between 2,005 and 2,530 ms.
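The roving of the interstimulus interval can be illustrated with a short sketch. It assumes a uniform distribution at 1-ms resolution over the stated range, which is an assumption because the distribution is not reported; the function name `draw_isis` is hypothetical.

```python
import random

ISI_MIN_MS = 2005  # lower bound of the roving range, from the procedure
ISI_MAX_MS = 2530  # upper bound of the roving range, from the procedure


def draw_isis(n_trials, seed=None):
    """Draw one interstimulus interval (in ms) per trial, uniform over the range."""
    rng = random.Random(seed)
    return [rng.randint(ISI_MIN_MS, ISI_MAX_MS) for _ in range(n_trials)]
```

For example, `draw_isis(78, seed=1)` yields one interval for each of the 78 stimuli, so that listeners cannot anticipate stimulus onset from a fixed rhythm.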

Results and discussion

No significant differences were obtained by analyzing the RTs to all 78 stimuli, as compared to the subset of 64 words analyzed in Experiment 2 (p > .05). Therefore, the RTs for all 78 real words were analyzed. Figure 4 displays the mean data for listeners in this task. An independent-samples t test demonstrated that RTs were faster for the unprocessed, 0-dB modulated-noise stimuli (M = 248 ms, SD = 111 ms), as compared to the amplified modulated-noise stimuli (M = 378 ms, SD = 213 ms) of the words, t(17) = 2.3, p < .05, d = 1.06. No significant difference was observed between the intrinsically low-intensity (M = 300 ms) and high-intensity (M = 309 ms) initial consonants, as had been found in Experiments 1 and 2, possibly suggesting that lexical processing may underlie such differences, although this is not clear from this study.

General discussion

Overall, the results clearly suggest that a word’s acoustic structure influences the speed of lexical decisions. The faster RTs in Experiment 1 were likely due to improved initial-consonant audibility. The results demonstrated that only minimal amplification (i.e., 3 dB relative to the original consonant level) was necessary to impact performance, with higher amplification values not being associated with any additional improvement in RTs. However, additional talker familiarity and reduced talker uncertainty resulted in an improvement in RTs for the unprocessed stimuli, leading to faster RTs than for the amplified words. These procedural changes may have resulted in strengthening the acoustic match between the remembered and presented stimuli (see Goldinger, 1996). In addition, reduced trial-to-trial talker uncertainty may have facilitated access to stored exemplars of the talker. A comparison between RTs on the first and second halves of the word list for Experiment 1 demonstrated no main effect or interaction with list half, but a preserved main effect of amplification. Given that listeners were more familiar with the task and talkers during the second half of testing, procedural learning and talker familiarity likely had minimal roles in the reversal of results for Experiment 2. Instead, minimal talker uncertainty was likely the factor responsible for the difference in performance. This was most notable for the unmodified stimuli, which preserved the natural amplitude envelope of the word, where comparisons between Experiments 1 and 2 suggest the possibility of a facilitatory effect of minimal talker uncertainty for this condition.

It is important to note that the reliance on the lexical decision task in this set of studies does not allow us to delineate processes of lexical access from post-access decision processes. Indeed, the combination of these processing stages can lead to significant differences in the contributions of factors, such as word frequency, in explaining RTs between the lexical decision task and other measures of lexical access (Balota & Chumbley, 1984). Therefore, from Experiments 1 and 2, the stage of processing (e.g., access, decision, etc.) at which acoustic manipulations influence performance is unclear. However, the simple detection task of Experiment 3 helped to address some of these issues by demonstrating similar effects of consonant amplification on the detection of nonspeech modulated-noise stimuli. The results demonstrated faster RTs for 0-dB (relative to 3-dB) stimuli, containing only the natural amplitude envelope, when no lexical processing was involved. This RT advantage for weak amplitude onsets may be consistent with basic auditory findings for ramped amplitude envelopes, which are perceived to be longer and louder than damped envelopes (Ries et al., 2008). This suggests that acoustic manipulations influence processing at a very early, possibly sensory, level. Thus, acoustic manipulations influence processes that feed into lexical stages, rather than directly influence how lexical information is accessed or analyzed. This may also be due to perceptual matching of the external stimulus with stored phonemic representations, which precedes lexical processing (Rönnberg, 2003). However, in Experiment 3, no difference was observed between consonants that had intrinsically different amplitudes, unlike the results in Experiments 1 and 2. Thus, amplitude differences intrinsic to the production of different consonants also appear to play a role in subsequent lexical processing.

An additional low-level factor influencing performance could be related to possible forward masking effects due to the amplification of the initial consonant. However, the weak intensity of consonants relative to the following vowel, the minimal amplification (3 dB), the benefit of amplification of the consonant in Experiment 1 that was preserved with amplification values as high as 12 dB, and the reversal of findings between Experiments 1 and 2 all suggest that any forward-masking effects are not likely to be the primary factor influencing the results observed in the present study.

Combined, the findings from this set of three experiments support the following interpretations.

1. The acoustic–phonetic detail of words, specifically identified here for the initial consonant, can significantly impact the speed of lexical decisions.

2. Experience with and predictability of the talker, even with only a few minutes of exposure, is sufficient to impact the speed of lexical decisions. For novel talkers in moderate uncertainty conditions, amplification and high-intensity initial consonants facilitate lexical search and/or decision, likely due to increased audibility. However, for familiar talkers in low uncertainty conditions, an acoustic match with stored memory representations of the natural structure of the word in unamplified conditions leads to faster RTs. As was suggested by Experiment 3, this is possibly facilitated by general low-level peripheral processing of the amplitude envelope.

The evidence cited above suggests that, in addition to the lexical properties of word frequency and neighborhood density, the acoustic structure (phonetic and indexical) of words is another factor in lexical decisions. In addition, minimal selective consonant amplification (3 dB) is helpful, but not as beneficial to lexical decision speed as the familiarity and predictability of the talker.

These findings could have significant implications for hearing aid technologies that selectively amplify different frequency bands. Most consonants are characterized as having more energy in the higher frequencies than do vowels. Therefore, just as selective amplification of the consonants was conducted in this study, hearing-aid technologies for listeners with common high-frequency hearing loss amplify consonants more than vowels. The findings here suggest, at least for young, normal-hearing listeners, that increased audibility will improve the speed of lexical decisions in novel listening situations but might slow lexical search during familiar, low-uncertainty listening situations.

It is important to note that this study did not assess actual word recognition abilities. Many studies have clearly demonstrated that audibility is the primary predictor of word recognition performance (as discussed by Humes, 2007). However, it is also widely noted that older hearing-impaired listeners often have decreased processing speed (Salthouse, 1996) that is believed to impact speech recognition abilities (Pichora-Fuller, 2003). Indeed, when audibility is controlled (such as through spectrally amplifying high-frequency components), cognitive factors often account for individual differences in performance (Humes, 2007). In controlled, low-uncertainty conditions, such cognitive contributions may vary due to the adverse impact of selective amplification on lexical decisions, as observed in the present study. Overall word recognition in such conditions, as measured in percentages of words correctly identified, may remain high due to sufficient audibility. However, slower lexical processing may become more problematic for tasks that recruit greater cognitive resources, such as by increasing attention and memory requirements or by reducing the perceptual fidelity of the stimulus (Wingfield & Tun, 2001). In such conditions, slower lexical processing may combine with cognitive demands, possibly resulting in poorer word recognition scores. Such interactions have been identified for time-compressed speech, which imposes a limitation on lexical processing time and reduces speech intelligibility (Wingfield, Tun, Koh, & Rosen, 1999). Significantly, cognitive capacity, as measured by reading span, is predictive of decreased speech understanding performance for novel amplification settings (Foo et al., 2007; Rudner et al., 2009). Despite this possibility, selective amplification is necessary to achieve sufficient audibility for successful word recognition, even though slower lexical processing may result.
This possible decrease in lexical processing speed is another factor that must be considered as possibly contributing to performance. It may be that measures of lexical processing, in addition to sensory and general cognitive measures (e.g., Rudner et al., 2009), will be predictive of performance for amplification settings such as compression. Such a hypothesis is consistent with the ELU model, which predicts greater cognitive recruitment when there is a mismatch between the language input and stored mental representations, such as occurs with novel hearing aid amplification settings (Rönnberg, 2003). In addition, according to the indications from this study, in uncertain listening conditions selective amplification may increase lexical processing speed.

This study also suggests that the acoustic–phonetic and indexical properties of words impact spoken word recognition, in addition to the lexical properties of words described by the neighborhood activation model. This supports the hypothesis that the acoustic structure of a word is stored with its lexical representation (Goldinger, 1998). Fine-grained phonetic details intrinsic to natural differences between unamplified consonants contribute to differences in the speed of lexical decisions between words. Furthermore, these acoustic properties interact with the listener’s experience and expectations, even after only short exposure. Indeed, talker variability may even have a greater impact on word recognition than word frequency (Mullennix et al., 1989).

Overall, peripheral processing by the auditory system seems to play a significant role in the RTs obtained in Experiments 2 and 3 under minimal uncertainty conditions with familiar stimuli. Most of the performance differences between the natural and consonant-amplified stimuli for the speech and nonspeech conditions of these two experiments could be accounted for by increases in the sensation level for the consonants (i.e., increased audibility) as well as properties related to amplitude envelope processing of complex sounds (i.e., louder perception for sounds with gradual onsets). This appears to be the case even though sufficient audibility was provided to the normal-hearing listeners in this study across all stimulus conditions. Consistent with the nonsense syllable observations by Freyman et al. (1991), distortion of the amplitude envelope through selective consonant amplification can distort information contained in the natural envelope. In this study, increased RTs were observed for the amplified, nonlinguistic modulated-noise stimuli. In addition to the possible contribution of ramped envelope processing, these findings could also be related to selective distortion of some consonant features (Freyman et al., 1991).

The results clearly demonstrate the influence of acoustic properties on the speed of auditory lexical decisions. The interaction between talker familiarity and uncertainty is noteworthy, with slower lexical decisions obtained for amplified and high-intensity initial consonants in low-uncertainty conditions. Consistent with exemplar theories and the ELU model of speech understanding, mismatches between the acoustic stimulus and the stored mental representation of the auditory word, introduced during selective amplification, impact the speed of lexical decisions. This has important ramifications for determining appropriate amplification settings for hearing aids and for following up fittings with appropriate auditory training to facilitate the adaptation of stored auditory mental representations of words to the new auditory input.

References

American National Standards Institute. (2004). ANSI S3.6-2004, American national standard specification for audiometers. New York, NY: American National Standards Institute.

Balota, D. A., & Chumbley, J. I. (1984). Are lexical decisions a good measure of lexical access? The role of word frequency in the neglected decision stage. Journal of Experimental Psychology: Human Perception and Performance, 10, 340–357. doi:10.1037/0096-1523.10.3.340

Bashford, J. A., Jr., Warren, R. M., & Brown, C. A. (1996). Use of speech-modulated noise adds strong “bottom-up” cues for phonemic restoration. Perception & Psychophysics, 58, 342–350.

Davis, M. H., & Johnsrude, I. S. (2003). Hierarchical processing in spoken language comprehension. The Journal of Neuroscience, 23, 3423–3431.

Fitzgibbons, P. J., & Gordon-Salant, S. (1998). Auditory temporal order perception in younger and older adults. Journal of Speech, Language, and Hearing Research, 41, 1052–1062.

Fitzgibbons, P. J., Gordon-Salant, S., & Friedman, S. (2006). Effects of age and sequence presentation rate on temporal order recognition. Journal of the Acoustical Society of America, 120, 991–999.

Fogerty, D. (2013). Acoustic predictors of intelligibility for segmentally interrupted speech: Temporal envelope, voicing, and duration. Journal of Speech, Language, and Hearing Research, 56, 1402–1408.

Fogerty, D., & Humes, L. E. (2012). The role of vowel and consonant fundamental frequency, envelope, and temporal fine structure cues to the intelligibility of words and sentences. Journal of the Acoustical Society of America, 131, 1490–1501.

Fogerty, D., Humes, L. E., & Kewley-Port, D. (2010). Auditory temporal-order processing of vowel sequences by young and older adults. Journal of the Acoustical Society of America, 127, 2509–2520.

Foo, C., Rudner, M., Rönnberg, J., & Lunner, T. (2007). Recognition of speech in noise with new hearing instrument compression release settings requires explicit cognitive storage and processing capacity. Journal of the American Academy of Audiology, 18, 553–566.

Freyman, R. L., Nerbonne, G. P., & Cote, H. A. (1991). Effect of consonant–vowel ratio modification on amplitude envelope cues for consonant recognition. Journal of Speech and Hearing Research, 34, 415–426.

Goldinger, S. D. (1996). Words and voices: Episodic traces in spoken word identification and recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 1166–1183. doi:10.1037/0278-7393.22.5.1166

Goldinger, S. D. (1998). Echoes of echoes? An episodic theory of lexical access. Psychological Review, 105, 251–279. doi:10.1037/0033-295X.105.2.251

Gordon-Salant, S. (1985). Some perceptual properties of consonants in multitalker babble. Perception & Psychophysics, 38, 81–90.

Gordon-Salant, S. (1986). Recognition of natural and time/intensity altered CVs by young and elderly subjects with normal hearing. Journal of the Acoustical Society of America, 80, 1599–1607. doi:10.1121/1.394324

Goy, H., Pelletier, M., Coletta, M., & Pichora-Fuller, M. K. (2013). The effects of semantic context and the type and amount of acoustical distortion on lexical decision by younger and older adults. Journal of Speech, Language, and Hearing Research, 56, 1715–1732.

Hickson, L., & Byrne, D. (1997). Consonant perception in quiet: Effect of increasing the consonant-vowel ratio with compression amplification. Journal of the American Academy of Audiology, 8, 322–332.

Humes, L. E. (2007). The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults. Journal of the American Academy of Audiology, 18, 609–623.

Humes, L. E., Burk, M. H., Coughlin, M. P., Busey, T. A., & Strauser, L. E. (2007). Auditory speech recognition and visual text recognition in younger and older adults: Similarities and differences between modalities and the effects of presentation rate. Journal of Speech, Language, and Hearing Research, 50, 283–303.

Humes, L. E., & Christopherson, L. (1991). Speech-identification difficulties of the hearing-impaired elderly: The contributions of auditory processing deficits. Journal of Speech and Hearing Research, 34, 686–693.

Kewley-Port, D., Watson, C. S., & Foyle, D. (1988). Auditory temporal acuity in relation to category boundaries: Speech and non-speech stimuli. Journal of the Acoustical Society of America, 83, 1133–1145.

Li, N., & Loizou, P. C. (2008). The contribution of obstruent consonants and acoustic landmarks to speech recognition in noise. Journal of the Acoustical Society of America, 124, 3947. doi:10.1121/1.2997435

Luce, P. A., & Pisoni, D. B. (1998). Recognizing spoken words: The neighborhood activation model. Ear and Hearing, 19, 1–36.

Marslen-Wilson, W. (1984). Function and process in spoken word recognition: A tutorial review. In H. Bouma & D. Bouwhuis (Eds.), Attention and performance X: Control of language processes (pp. 125–148). Hillsdale, NJ: Erlbaum.

Montgomery, A. A., & Edge, R. A. (1988). Evaluation of two speech enhancement techniques to improve intelligibility for hearing-impaired adults. Journal of Speech and Hearing Research, 31, 386–393.

Montgomery, A. A., Prosek, R. A., Walden, B. E., & Cord, M. T. (1987). The effects of increasing consonant/vowel intensity ratio on speech loudness. Journal of Rehabilitation Research and Development, 24, 221–228.

Mullennix, J. W., Pisoni, D. B., & Martin, C. A. (1989). Some effects of talker variability on spoken word recognition. Journal of the Acoustical Society of America, 85, 365–378.

Picheny, M. A., Durlach, N. I., & Braida, L. D. (1985). Speaking clearly for the hard of hearing I: Intelligibility differences between clear and conversational speech. Journal of Speech and Hearing Research, 28, 96–103.

Picheny, M. A., Durlach, N. I., & Braida, L. D. (1986). Speaking clearly for the hard of hearing II: Acoustic characteristics of clear and conversational speech. Journal of Speech and Hearing Research, 29, 434–446.

Pichora-Fuller, M. K. (2003). Processing speed and timing in aging adults: Psychoacoustics, speech perception, and comprehension. International Journal of Audiology, 42, 59–67.

Ries, D. T., Schlauch, R. S., & DiGiovanni, J. J. (2008). The role of temporal-masking patterns in the determination of subjective duration and loudness for ramped and damped sounds. Journal of the Acoustical Society of America, 124, 3772–3783.

Rönnberg, J. (2003). Cognition in the hearing impaired and deaf as a bridge between signal and dialogue: A framework and a model. International Journal of Audiology, 42, S68–S76.

Rönnberg, J., Lunner, T., Zekveld, A., Sörqvist, P., Danielsson, H., Lyxell, B., & Rudner, M. (2013). The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience, 7, 31.

Rudner, M., Foo, C., Rönnberg, J., & Lunner, T. (2009). Cognition and aided speech recognition in noise: Specific role for cognitive factors following nine-week experience with adjusted compression settings in hearing aids. Scandinavian Journal of Psychology, 50, 405–418.

Salthouse, T. A. (1996). The processing-speed theory of adult age differences in cognition. Psychological Review, 103, 403–428. doi:10.1037/0033-295X.103.3.403

Sammeth, C. A., Dorman, M. F., & Stearns, C. J. (1999). The role of consonant–vowel amplitude ratio in the recognition of voiceless stop consonants by listeners with hearing impairment. Journal of Speech, Language, and Hearing Research, 42, 42–55.

Saripella, R., Loizou, P. C., Thibodeau, L., & Alford, J. A. (2011). The effects of selective consonant amplification on sentence recognition in noise by hearing-impaired listeners. Journal of the Acoustical Society of America, 130, 3028–3037.

Scarborough, R., & Zellou, G. (2013). Clarity in communication: “Clear” speech authenticity and lexical neighborhood density effects in speech production and perception. Journal of the Acoustical Society of America, 134, 3793–3807.

Schroeder, M. R. (1968). Reference signal for signal quality studies. Journal of the Acoustical Society of America, 44, 1735–1736.

Seneff, S., & Zue, V. (1988, November). Transcription and alignment of the TIMIT database. Paper presented at the Second Symposium on Advanced Man–Machine Interface Through Spoken Language, Oahu, HI.

Shannon, R. V., Zeng, F. G., Kamath, V., Wygonski, J., & Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science, 270, 303–304.

Souza, P., & Gallun, F. (2010). Hearing aid amplification and consonant modulation spectra. Ear and Hearing, 31, 268–276.

Stevens, K. N. (2002). Toward a model for lexical access based on acoustic landmarks and distinctive features. Journal of the Acoustical Society of America, 111, 1872–1891.

Tanenhaus, M. K., Magnuson, J. S., Dahan, D., & Chambers, C. G. (2000). Eye movements and lexical access in spoken language comprehension: Evaluating a linking hypothesis between fixations and linguistic processing. Journal of Psycholinguistic Research, 29, 557–580.

Wingfield, A., & Tun, P. A. (2001). Spoken language comprehension in older adults: Interactions between sensory and cognitive change in normal aging. Seminars in Hearing, 22, 287–301.

Wingfield, A., Tun, P. A., Koh, C. K., & Rosen, M. J. (1999). Regaining lost time: Adult aging and the effect of time restoration on recall of time-compressed speech. Psychology and Aging, 14, 380–389.

Author note

K.A.C. is now affiliated with Columbia College, South Carolina. The authors thank Robin Goldberg and Britton Cohn for laboratory assistance associated with this project. This study was supported, in part, by a grant from the National Institutes of Health (No. NIDCD R03-DC012506) awarded to the first author.


Cite this article

Fogerty, D., Montgomery, A.A. & Crass, K.A. Effect of initial-consonant intensity on the speed of lexical decisions. Atten Percept Psychophys 76, 852–863 (2014). https://doi.org/10.3758/s13414-014-0624-4
