Abstract

Continuous bag of words (CBOW) and skip-gram are two recently developed models of lexical semantics (Mikolov, Chen, Corrado, & Dean, Advances in Neural Information Processing Systems, 26, 3111–3119, 2013). Each has been demonstrated to perform markedly better at capturing human judgments about semantic relatedness than competing models (e.g., latent semantic analysis; Landauer & Dumais, Psychological Review, 104(2), 1997 211; hyperspace analogue to language; Lund & Burgess, Behavior Research Methods, Instruments, & Computers, 28(2), 203–208, 1996). The new models were largely developed to address practical problems of meaning representation in natural language processing. Consequently, very little attention has been paid to the psychological implications of the performance of these models. We describe the relationship between the learning algorithms employed by these models and Anderson’s rational theory of memory (J. R. Anderson & Milson, Psychological Review, 96(4), 703, 1989) and argue that CBOW is learning word meanings according to Anderson’s concept of needs probability. We also demonstrate that CBOW can account for nearly all of the variation in lexical access measures typically attributable to word frequency and contextual diversity—two measures that are conceptually related to needs probability. These results suggest two conclusions: One, CBOW is a psychologically plausible model of lexical semantics. Two, word frequency and contextual diversity do not capture learning effects but rather memory retrieval effects.

Similar content being viewed by others

Distributional semantic models (DSMs) encode the meaning of a word from its pattern of use across a corpus of text. There are a variety of classes of DSMs reported in the literature, with the two most commonly cited being latent semantic analysis (Landauer & Dumais, 1997) and the hyperspace analogue to language (Lund & Burgess, 1996), although a variety of other models have been proposed (e.g., Durda & Buchanan, 2008; Jones & Mewhort, 2007; Rohde, Gonnerman, & Plaut, 2006; Shaoul & Westbury, 2010a). Each uses different algorithms to encode the meaning of a word, but all algorithms converge on the notion that a word’s meaning can be expressed in terms of a numeric vector related to its pattern of use across a corpus of text. DSMs are distinguishable from other types of vector models in terms of how they construct the vector representing a word’s meaning. Other vector models of semantics represent meanings as sequences of pseudorandom values (e.g., Hintzman, 1988) or as sequences of semantic features derived from human judgments (e.g., McRae, Cree, Seidenberg, & McNorgan, 2005). In contrast to other classes of vector models, DSMs provide formal descriptions of how learning can be used to derive knowledge from experience; a word’s vector representation is the output of such learning.

The field of natural language processing (NLP) has a research area called word embedding that runs parallel to work on DSMs in psychology. Word embeddings are feature vectors that represent a word’s meaning, learned by predicting a word’s use over a corpus of text (e.g., Mikolov, Chen, Corrado, & Dean, 2013). The main algorithmic cleave that separates NLP and psychological models is that that psychological models primarily arrive at a representation of word meaning by counting contexts of occurrence and NLP models primarily arrive at a representation by predicting contexts of occurrence (for a comparison of these models in terms of predict and count descriptions, see Baroni, Dinu, & Kruszewski, 2014).

Two promising models from the NLP literature are the continuous bag of words (CBOW) model and the skip-gram model (both described in Mikolov, Chen, et al., 2013). Both use neural networks to learn feature vectors that represent a word’s meaning. The models accomplish this by finding statistical regularities of word co-occurrences that predict the identity of missing words in a stream of text. In the case of CBOW, a contiguous string of words is presented to the model (e.g., a sentence) with one word missing. The model then has to use the other words available in the sentence to predict the missing word. The skip-gram model solves the converse problem: given a single word as a cue, which other words are likely to be present in the context?

Predict models like CBOW and skip-gram tend to better capture variation in human behavior than the count models that psychology more commonly uses. Specifically, skip-gram and CBOW have unprecedented accuracies on analogical reasoning tasks (e.g., Mikolov, Chen, et al., 2013) and better fit lexical access times and association norms than count models (e.g., Mandera, Keuleers, & Brysbaert, 2017). More broadly, predict models have superior performance on a wide range of tasks, including categorization, typicality judgment, synonym judgment, and relatedness judgment among, others (Baroni et al., 2014). CBOW and skip-gram were never developed for the purpose of providing insight to psychological problems, yet they fit the aforementioned behavioral data better than the established models in the field. There is some literature describing the relationship between predict and count models in terms of the computational problems they are solving (e.g., LSA and skip-gram are computationally, formally equivalent for certain parameter settings; Levy & Goldberg, 2014), but no literature attempting to provide an explanatory theory as to why, as a general rule, CBOW and skip-gram models perform so much better than traditional psychological models at fitting human behavioral data, other than the fact that they can be trained with larger corpora (however, see Mandera et al., 2017, who still find differences in performance when corpus size is held constant). Attempting to understand why CBOW and skip-gram models fit behavioral data so well could help inform theories of language learning and word meaning.

A possible answer comes from Anderson’s rational analysis of memory (J. R. Anderson, 1991; J. R. Anderson & Milson, 1989; J. R. Anderson & Schooler, 1991). Like other aspects of human behavior and physiology, memory has been shaped over deep evolutionary time by pressures of natural selection. It is a reasonable assumption that memory retrieval should display evidence of being optimized with respect to facilitating effective interaction within the environment (see also Norris, 2006, for discussion on the optimality assumption of cognitive processes). Thus, Anderson starts from the question: What would the behavior of a memory system designed to facilitate effective interaction with the environment based on perceptual input look like?

A core prediction of Anderson’s analysis is that access to information in memory should be prioritized based on its likely relevance, given an agent’s current context (J. R. Anderson & Milson, 1989). This idea can be formalized with Bayesian statistics: Given a history of experience and a current set of contextual cues, the particular piece of knowledge that is most likely to be needed should be most readily accessible. Anderson and Milson refer to a memory’s relevance, given contextual cues, as its needs probability (J. R. Anderson & Milson, 1989, Equation 5; see also Steyvers & Griffiths, 2008, for an accessible introduction to needs probability). Others have invoked a related construct, likely need (Adelman, Brown, & Quesada, 2006; Jones, Johns, & Recchia, 2012), which is the needs probability of a word averaged across a distribution of possible contexts.

There are clear relationships between the optimization criterion of memory retrieval, as described by J. R. Anderson and Milson (1989), and the learning objectives of CBOW and skip-gram algorithms. The learning objective of CBOW in particular has a direct correspondence to Anderson’s concept of needs probability: CBOW learns to predict the identity of an omitted word from a phrasal context, given the identities of nonomitted words. Skip-gram learns to solve the converse problem: Given a word, what other words are likely to be present in the omitted phrasal context? These casual descriptions of learning objectives are supported by their proper formal descriptions in terms of conditional probabilities (e.g., Bojanowski, Grave, Joulin, & Mikolov, 2016). It is possible that CBOW and skip-gram are solving the same, or a similar, computational problem as human memory as described by Anderson and Milson. If this were the case, CBOW and skip-gram should produce behavior that is consistent with predictions made by the rational analysis of memory.

Testing predictions of needs probability with lexical access times

Estimates of needs probability should be linearly related to behavioral measures of memory retrieval times (J. R. Anderson & Milson, 1989). Within the domain of psycholinguistic research, this includes measures such as word naming time and lexical decision time, of which there are multiple large data sets freely available for simulating experiments (e.g., Balota et al., 2007; Keuleers, Lacey, Rastle, & Brysbaert, 2012). However, in such tasks, context cues are not provided, with the exception of special cases (e.g., priming experiments). For data from naming and lexical decision data sets to be usable for testing predictions of needs probability, some additional steps need to be taken.

It is important to recognize that even if contextual cues are not explicitly presented during memory retrieval tasks, such as word naming and lexical decision, participants’ minds are not empty vessels. Moment by moment, thoughts are likely acting as context cues for memory retrieval in otherwise context-sparse tasks favored by psychological research. So, how do those thoughts distribute? With a reasonable answer to that question, the expected need of a word could be estimated by averaging its needs probability over the distribution of context cues supplied by participant thoughts. We use the term average need to mean the needs probability of a word averaged over a distribution of possible contexts and distinguish it from needs probability, which is the probability that knowledge will be needed, given a set of actual context cues. The abovementioned definition of average need is consistent with what Adelman et al. (2006) and Jones et al. (2012) mean by likely need: the prior probability that a word will be needed when specific contextual details are unspecified. Although it is conceptually identical to likely need, we instead adopt the term average need for two reasons. First, although Anderson has consistently been cited as the source of the concept of likely need, there is not a single use of this term in any of his relevant work (J. R. Anderson, 1991; J. R. Anderson & Milson, 1989; J. R. Anderson & Schooler, 1991). Breaking from use of likely need is an attempt to correct a misattribution. Second, we specify the exact formal relationship between an actual construct introduced by Anderson et al., needs probability, and our own term, average need.

There are two obvious and simple approaches to estimating the distribution of context cues in lexical access tasks. One is to model the distribution based on words presented on previous trials. This is based on the assumption that when a participant reads a word, he or she involuntarily anticipate other words that may follow. For an experiment that uses random presentation order, that distribution is the same as the distribution of words about which participants make decisions. The second approach is to estimate the context distribution from the topics that people talk about in their day-to-day lives. For instance, the linguistic contexts captured by text corpora extracted from Internet discussion groups (e.g., Shaoul & Westbury, 2013), entertainment media (e.g., Brysbaert & New, 2009), or educational sources (e.g., Landauer, Foltz, & Laham, 1998). There exists other work on measuring needs probability within psycholinguistic research (e.g., semantic distinctiveness count, Jones et al., 2012; contextual diversity, Adelman et al., 2006). Past work favors corpus-based approaches for estimating context distribution.

Of note, corpora that closely match day-to-day linguistic exposure typically produce the highest quality estimates of lexical variables (e.g., word frequency), as measured by the ability of those variables to predict lexical access times (e.g., Brysbaert et al., 2012; Keuleers, Brysbaert, & New, 2010; Herdağdelen & Marelli, 2016). This observation is convergent with the earlier claim that the context distribution for memory retrieval during context-sparse psychological experiments is likely supplied by the active thoughts and knowledge that participants bring with them into the laboratory; in a moment, an argument will be provided that word frequency and contextual diversity are fundamentally related to each other as operationalizations of average need.

Measuring average need

Calculation of the average need of a word involves taking an average of the needs function over the context distribution of memory retrieval. Consider the calculation of contextual diversity (CD), which is the number of documents in a corpus within which a word appears. A particular document within a corpus can be thought of as a possible context. The full corpus provides a distributional model of contexts. The calculation of needs probability, given a context, is a binary check of whether the word is present or absent in that particular context. CD scores are the sum of a binary needs function over the distribution of possible contexts. In this case, a sum would be proportionally related to an average.

Longer documents like novels and plays undergo plot developments. These developments continually necessitate the need for new knowledge as the document progresses. Empirically, this means that word frequencies are not stable across sequential slices of a document (Baayen, 2001). Since the content of discussion can change across the length of a document, a question is merited: Is context better defined in terms of a textual unit smaller than “document”Footnote 1? Perhaps better CD measures could be produced if contexts were defined in terms of chapters, sections, paragraphs, sentences, phrases, or individual words. In the case where context is defined in terms of the smallest of these units, an individual word, CD measures would be identical to word frequency (WF) measures. Or stated alternately, if CD is a measure of average need, so is WF. What distinguishes the two measures is how they choose to define context when calculating needs probability.

CD and WF can both be thought of as measures of average need, each applying a different definition of context. In light of this, the finding of Adelman et al. (2006) that CD provides a better fit to lexical access times than WF can be can be restated as follows: Defining context in terms of larger linguistic units (documents), rather than smaller linguistic units (words), provides estimates of needs probabilities that more strongly correlate with lexical access times. However, it remains an open question as to whether intermediary definitions of context like sentences or paragraphs provide a better fit than the two extreme context sizes. The answer might be yes if documents are so coarse that they encompass shifts in the topic of discussion.

The concept of CD has recently been extended by Johns, Jones, and colleagues (Johns, Dye, & Jones, 2016; Johns, Gruenenfelder, Pisoni, & Jones, 2012; Jones, Johns, & Recchia, 2012). Their observation is that defining CD in terms of document counts overlooks the fact that some documents are not referencing unique contexts, either due to document duplication or due to multiple documents all referencing the same event (e.g., as may happen across news articles all discussing the same world event). Jones et al. (2012) propose the concept of semantic distinctiveness: the degree of variability of the semantic contexts in which a word appears. One of the ways semantic distinctiveness has been operationalized is with what Jones et al. (2012) call semantic distinctiveness count (SD_Count). SD_Count is a document count metric that is weighted by how semantically unique the document is. SD_Count is a better predictor of lexical access times than both CD and WF (Jones et al., 2012), and in an experiment using an artificial language, participant recognition times of nonce words are sensitive to effects of SD_Count when WF and CD are controlled for. Jones and colleagues have also pursued a variety of model-based approaches to operationalizing semantic distinctiveness, with similar results (e.g., Johns et al., 2016; Jones et al., 2012).

Jones et al.’s (2012) SD_Count is a calculation of average need that uses a binary needs function, defines context in terms of documents, but also includes a term that downweights the contribution of common contexts when calculating average need over the context distribution.

Measuring needs probability from word embeddings

One of the key features of word embeddings produced by CBOW, skip-gram, and other DSMs is that they allow for the measurement of similarity of meaning between two words (typically with cosine similarity between embeddings). J. R. Anderson (1991) has argued that similarity between context cues and memory features will be proportional to needs probability (see also, Johns & Jones, 2015; Hintzman, 1986). Thus, DSMs can also offer an operationalization of the average need of a word: the similarity between a word vector and a context vector, averaged over all contexts supplied by a context distribution.

Theoretical summary and research objective

Anderson’s rational analysis argues that memory is always engaged in a process of forward prediction. Contextual cues that are available now are used to predict and make accessible knowledge that will be needed in the near future. The purpose of such forward prediction is to aid efficient and adaptive behavior within the world (see also Clark, 2013, for a related discussion on the biological plausibility and adaptive significance of forward prediction).

A core theoretical construct that arises from Anderson’s rational analysis of memory is needs probability—the probability that some piece of knowledge will be needed in the future, given a set of contextual cues that are available now. Anderson expects that traces in memory should be accessible according to their needs probability. Thus, we arrive at a criterion for testing memory models: needs probabilities derived from them should be linearly related to empirical retrieval times. In cases where the context for forward prediction is unknown, we can expect that retrieval times should be linearly related to the average needs probability over the distribution of possible contexts.

There is currently a lack of understanding as to why CBOW and skip-gram models better fit behavioral data, including retrieval times (Mandera et al., 2017), than the DSMs more commonly used within psychological research. We hypothesize that CBOW and skip-gram are solving the same, or a similar, learning objective as human memory (as specified by Anderson’s rational analysis).

CBOW and skip-gram both learn to represent word meanings as connection weights to (skip-gram) or from (CBOW) the hidden layer of a three-layer neural network. That neural network is used to predict the words most likely to appear, given information about a surrounding context (CBOW), or the most likely contexts of occurrence, given a word (skip-gram). The functional description of CBOW appears most consistent with Anderson’s rational analysis of memory, whereas skip-gram appears to be solving a converse problem. As a side note, because of the formal equivalency between skip-gram and LSA (Levy & Goldberg, 2014), this also poses theoretical problems for LSA within the context of Anderson’s rational analysis.

The motivation of this research is to understand why CBOW and skip-gram do so well at fitting behavioral data, particularly in lexical retrieval tasks. We believe Anderson’s rational analysis of memory provides an appropriate theoretical framework for beginning to interpret the behavior of these models. Thus, we arrive at the main prediction of this research: insofar as they are plausible models of lexical semantic memory, measures of average need derived from skip-gram and CBOW should be linearly related to lexical retrieval times. Furthermore, average needs derived from these models should also be strongly correlated with other operationalizations: log WF, log CD, and log SD_Count.

Models that bear on the process of lexical access are typically concerned with the way in which stimulus properties activate lexical entries or their meanings (e.g., Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001; McClelland & Rumelhart, 1981; Seidenberg & McClelland, 1989). Neither skip-gram nor CBOW have any information about stimulus properties, and they do not address issues of lexical access at this level. Rather, they learn from the contingencies between words and contexts (which is determined by other words). Thus, they only bear on the question of how context determines the activity of items in memory. This activity is not the lexical access of a word’s meaning, given the orthographic or phonological details of that word. Rather, it is the posterior accessibility of a lexical entry, given information about context. Ultimately, an incomplete but important piece of the puzzle of lexical access.

To begin to interpret a model within the framework of Anderson’s rational analysis of memory, an operationalization of needs probability is required from that model. Needs probability is conceptually a matter of trace activation, given a probe. We thus borrow from traditions in vector models of memory and define trace activity, given a probe, in terms of vector similarity between trace and probe (e.g., Hintzman, 1986; Johns & Jones, 2015). Although they do not provide an actual model implementation of their theory, J. R. Anderson and Milson (1989) also suggest this exact approach to operationalizing needs probability.

Experiment 1

In this experiment, we derive measures of average need from CBOW and skip-gram word embeddings. We use various text corpora as distributional models of context. Results of Adelman et al. (2006) suggest that when calculating needs probability, it may be more appropriate to use documents as the unit of context rather than words. However, the gains are marginal. As discussed earlier, WF and CD are calculations of average need that employ the same needs function, but different definitions of context (WF uses a word definition, CD uses a document definition). Within the corpora employed in this study (introduced shortly), WF and CD are nearly identical (all rs > .99). For the current study, we define context in terms of individual words. We do this because it results in a more straightforward calculation of needs probability.

The needs probability of a memory trace can be estimated by the similarity between its structure and the structure of the probe supplied by contextual cues (J. R. Anderson & Milson, 1989). In terms relevant to DSMs, the needs function for a target word (memory trace), given a context word (probe), is the cosine similarity between the two words’ embeddings. Similar equivalencies are also made in vector-based exemplar models of memory (e.g., Hintzman, 1986; Johns & Jones, 2015, where vector similarity is used to determine the activation level of a memory trace, given a probe). In the current application, the cosine similarity between a probe word (which is a stand-in for the semantic details of a possible linguistic context) and a lexical entry specifies the needs probability of that lexical entry in that particular linguistic context.

With a needs function and a distributional model of context defined, the average need of a word can be calculated as the average similarity between a target word’s embedding and the embeddings for all other words in the context distribution, weighted by the frequency of occurrence of the context word. Frequency weighting is required to ensure that measures of average need are based on the proper distributional form of contexts.

Words with high cosine similarity to other words on average should be precisely the words that are needed across a broad range of contexts. Conversely, words with low cosine similarity to other words on average should be those words that are used in a very narrow range of uncommon contexts. Using as example one of the models tested in the current experiment (CBOW, trained on a Subtitles corpus), high average need words included terms like he’s, won’t, that’s, tell, thanks, and gonna. Low average need words included terms like cordage, shampooer, spume, epicure, welched, and queueing. It is far more often, and across a more varied types of contexts, that we need to be prepared to know about telling, thanking, and doing than welching, queueing, and shampooing.

We make three predictions. First, estimates of average need should be linearly related to measures of word naming time and lexical decision time. This is the primary prediction of the current experiment and is supplied by J. R. Anderson (1991). We note that this is a prediction that other operationalizations of average need consistently fail to satisfy; CD (Adelman et al., 2006) and SD_Count (Jones et al., 2012) are logarithmically related to measures of lexical access.

Second, CBOW and skip-gram average needs should be related to other operationalizations insofar as they are psychologically plausible models of semantic knowledge. We focus on comparing values to measures of CD (Adelman et al., 2006) and WF. We exclude SD_Count (Jones et al., 2012) from analyses. SD_Count is a computationally costly calculation and does not scale to large corpora. Model-based approaches to calculating semantic distinctiveness are available that are claimed to scale to larger corpora (e.g., Johns et al., 2016); however, we do not yet have a working implementation of one such model and thus sidestep comparisons to model-based estimates of semantic distinctiveness from this analysis. Since CD and WF measures are best fit to lexical access times with a logarithmic function, it is predicted that average needs derived from CBOW and skip-gram will be linearly related to the logarithm of these two measures.

Third, the ability for average need to fit lexical access times or CD/WF measures will depend on the quality of the word embeddings that average needs are calculated from. With a poor model of semantic knowledge, we should expect correspondingly poor estimates of when knowledge is needed and when it is not. We anticipate this effect being expressed in two ways: one, measures of average need should have no predictive validity when randomized word embeddings are used. Two, as corpus size increases, average needs should improve in quality; as corpus size increases, more differentiation in word usage exists. Consequently, word embeddings likewise become more differentiated in values.

Method

Corpora

We repeat our analyses on three corpora to ensure generalizability of results. We use a movie subtitles corpus (Subtitles) that has previously been used to compare the performance of CBOW and skip-gram on modeling human performance on psychological tasks (Mandera et al., 2017), a 2009 dump of Wikipedia (Wikipedia) that is widely used in natural language processing research (Shaoul & Westbury, 2010b), and the Touchstone Applied Science Associates corpus (TASA; Landauer, Foltz, & Laham, 1998). These corpora were chosen because they vary in size (TASA 10 M tokens, Subtitles 356 M tokens, Wikipedia 909 M tokens), and all have a history of previous use in psycholinguistic research and distributional semantic modeling. The movie subtitles corpus, specifically, was chosen because WF measures derived from movie subtitles account for more variation in lexical access times than WF measures from other common corpora (Brysbaert & New, 2009). The context distribution being supplied by the subtitles corpus is likely a good match for the actual context distribution of memory retrieval during lexical access tasks. We further created two subsets of the subtitles corpus—one containing half of the full set of documents (approximately 180 M tokens; Subtitles-180 M) and another containing a quarter of the full set of documents (approximately 90 M tokens; Subtitles-90 M). Subsets were generated by randomly sampling documents from the full corpus (without replacement) until the desired token count was reached. Subtitles-90 M is a nested subsample of Subtitles-180 M.

Prior to analysis, each corpus was preprocessed by removing all punctuation and converting all words to lowercase. Words were not lemmatized (i.e., kick, kicking, and kicks were all treated as unique types) on the basis that nonlemmatized frequencies better account for variation in lexical access than lemmatized frequencies (Brysbaert & New, 2009).

Calculations of average need

Measures of average need were calculated from CBOW and skip-gram models after unique instances of each model had been trained on each of the five corpora. Thus, we derive average needs from 2 (model type) × 5 (corpus) = 10 models. The context distribution used for calculating average need was limited to words with entries in the English Lexicon Project (ELP; Balota et al., 2007), the British Lexicon Project (BLP; Keuleers et al., 2012), the Warriner et al. affective norms set (Warriner, Kuperman, & Brysbaert, 2013), the Brysbaert et al. concreteness norms set (Brysbaert, Warriner, & Kuperman, 2014), or the Kuperman et al. age of acquisition norms set (Kuperman, Stadthagen-Gonzalez, & Brysbaert, 2012). The scope of analysis needed to be limited to keep it tractable. These data sets contain entries for most words that would appear within psychological research contexts, so we deemed it a good inclusion criterion. The union of these datasets contains 70,167 unique words that will act as contexts for calculating average need.

For each corpus, CBOW and skip-gram word embeddings were trained for words appearing in the corpus a minimum requisite number of times (five). Models were trained with the Python gensim package. Embedding size was set to length 300, motivated by findings that this length seems to produce robustly applicable embeddings (Landauer & Dumais, 1997; Mandera et al., 2017; Mikolov, Chen, Corrado, & Dean, 2013). Parameters within the suggested range for each model were otherwise used: window size of five, 1e-5 downsampling parameter for frequent words, and five negative samples per trial.

After models were trained, WFs and CDs for the 70,167 words were calculated within each corpus. The average need of each word was then calculated by averaging the similarity between that word and 1,000 random context words, weighted by the WFs of the context words.Footnote 2 Context words were randomly sampled (with replacement) from the set of unique words included within this study that also had an entry in the corresponding model. Random sampling of context was used to keep calculation time reasonably short; average need was originally calculated over the exhaustive set of all 70,167 context words. However, this calculation took multiple days to complete for each model. Exploratory analysis performed on the subtitles dataset suggested that random sampling of context did not substantially affect the measures of average need (r > .99 between average needs derived with and without random sampling of contexts). A similar subsampling procedure has been demonstrated to be effective at improving the tractability of calculating a related measure, semantic diversity, without sacrificing quality of those estimates (Hoffman, Ralph, & Rogers, 2013).

Randomization models

Calculations of average need were repeated using randomization models. This was done to assess the relevance of well-structured word embeddings for the estimation of average need. A randomized version of each model was created by randomly permuting the values contained within each word embedding, prior to the calculating of average need. This process destroys any information available in a word’s embedding that might encode word meaning.

Results

We start by reporting analyses of the CBOW models. All analyses were conducted on the subset of 27,056 words that (1) were used in the calculation of average needs, and (2) occurred in each of our corpora at least five times (i.e., enough for a model to produce an embedding for the word). Within this subset, only 15,994 words had BLP lexical decision data so all analyses involving BLP lexical decisions are conducted only on this set of words. Likewise, 23,767 words had ELP lexical decision data, and 23,769 had ELP word naming data.

Measures of average need were highly correlated with measures of log CD and log WF within each corpus: for log WF, values ranged between r = .642 (p = 0Footnote 3; TASA) and r = .858 (p = 0; Wikipedia). For log CD, values ranged between r = .622 (p = 0; TASA) and r = .849 (p = 0; Wikipedia).

Very weak but reliable relationships were observed for the CBOW randomization models. For log WF, TASA r = .016 (p = .01); Wikipedia r = .033 (p = 4.8e-8); Subtitles r = -.006 (p = .29); Subtitles-90 M r = .016 (p = .01); Subtitles-180 M r = .003 (p = .60). For log CD, TASA r = .019 (p = .002); Wikipedia r = .032 (p = 1.6e-07); Subtitles r = -.005 (p = .36); Subtitles-90 M r = .016 (p = .01); Subtitles-180 M r = .000 (p = .94).

These small but reliable correlations between log CD/WF and average need in the randomization models is indicative of the fact that words could act as their own contexts. Context words were weighted by their frequency when calculating average need. Thus, common words contribute more to the weight of their own context than uncommon words. This effect is marginal and cannot account for the strong relationships seen between average need and log CD/WF in the nonrandom models. The strong relationship between average need and log CD/WF depends on the presence of well-ordered semantic representations.

CBOW average need consistently had a moderate linear relationship with measures of lexical access times: for BLP lexical decision data, values ranged from r = -.371 (p = 0; TASA) to r = -.498 (p = 0; Subtitles), for ELP lexical decision data, values ranged from r = -.279 (p = 0; TASA) to r = -.473 (p = 0; Subtitles), and for ELP naming data, values ranged from r = -.207 (p = 0; TASA) to r = -.403 (p = 0; Subtitles).

Quality of average need was also observed to depend on the size of the corpus over which word embeddings were learned. Log corpus size was predictive of the strength of relationship between estimates of average need and log WF, r(3) = .942, p = .016, and log CD, r(3) = .959, p = .009, though the pattern was not replicated for all behavioral measures: BLP lexical decision times, r(3) = .897, p = .04; ELP lexical decision times, r(3) = .504, p = 0.39; and ELP naming times, r(3) = .359, p = .55. We assume this lack of replication for some behavioral measures is simply due to a lack of power in the analyses.

Identifying that CBOW average need is strongly correlated with lexical access times establishes the presence of a linear relationship. However, it does not exclude the possibility of nonlinear relationships. Because the shape of the fit between average need and lexical access times is central to the thesis of this experiment, the possibility of nonlinear effects was considered.

CBOW average need was fit to lexical access times using polynomial regression. Linear, quadratic, and cubic terms were used. This was done in a stepwise manner. The linear term was added first. Then, the quadratic term was added to the regression. Its effect was measured, beyond the linear term. Finally, the cubic term was added and its unique effect measured. Effects for linear, quadratic, and cubic terms are displayed in Table 1. Only results for ELP lexical decision times are reported; effects for the other measures of lexical access displayed the same patterns, but with different magnitude.

It was clear from this analysis that a linear fit accounts for most of the shared variance between CBOW average needs and ELP lexical decision times. The linear term accounted for 7.79%, 20.48%, 20.67%, 22.45%, and 13.15% of the variance in lexical decision times, when considering the TASA, Subtitles-90 M, Subtitles-180 M, Subtitles, and Wikipedia corpora, respectively. In comparison, the square term only accounted for 0.33%, 0.41%, 0.52%, 0.32%, 0.35% of the variance, respectively. Likewise, the cubic term only accounted for 0.17%, 0.28%, 0.20%, 0.22%, and 0.17%. In every case, model comparison using the ANOVA function in R revealed that the unique contributions of quadratic and cubic effects were highly reliable (p = 0), as small as they were. This is not necessarily a problem; effects are primarily linear. The higher order effects could plausibly be due to distortions in estimates of average need resulting from lack of parameter optimization when training CBOW. Furthermore, the magnitudes of the quadratic and cubic effects are similar to those seen when performing polynomial regression of log WF and log CD on lexical decision times (see Table 1); comparison measures are also showing this same tendency to have slight nonlinear relationships with lexical decision times.

All three predictions were supported by results from the CBOW analyses: Average needs were (1) moderately linearly related to lexical access times, (2) strongly linearly related to the logarithms of WF and CD calculated from the same corpora, and (3) the quality of average needs depended on the quality of word embeddings, measured both by use of randomized embeddings and by using larger corpora on which to train embeddings.

We now turn our attention to the analysis of average needs derived from skip-gram models. Unlike the CBOW results, the skip-gram results did not support all three predictions of this experiment. For the smallest corpus, TASA, the observed relationship between average needs and all dependent measures was actually opposite the expected direction: r = -.605 (p = 0) for CD; r = -.612 (p = 0) for WF; r = .362 (p = 0) for BLP lexical decision time; r = .365 (p = 0) for ELP lexical decision time; r = .300 (p = 0) for ELP naming time. Models trained on the other three larger corpora showed relationships in the expected direction, but the strength of those relationships were overall quite more variable than in the case of CBOW and, when examining the three Subtitles corpus, very dependent on corpus size (see Table 1).

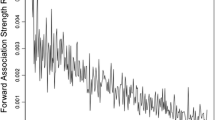

Skip-gram’s failings with respect to the predicted results became obvious from visual inspection: The skip-gram algorithm had a tendency to overestimate the average need of uncommon words. The result is that estimated needs probability takes on a U-shaped relationship with measures of log CD/WF. Results from the full Subtitles corpus are given in Fig. 1 as an example.

We tested whether the quality of skip-gram average needs improved after taking into account the fact that the algorithm overestimates values for uncommon words. The local minima in the function describing the relationship between log CD and skip-gram average need was found for each model (i.e., for Fig. 1a, the basin of the U shape). Data were split based on whether they fell to the left or the right of this minima. Minima were estimated by first running a polynomial regression of log CD on average need (including linear, square, and cube terms), and then finding the point at which the derivative of the resulting equation equaled zero (i.e., when the function is flat, as it would be in the basin of the U shape). The log CD values where average needs were minimal were 4.982 for TASA, 4.451 for Subtitles-90 M, 4.299 for Subtitles-180 M, 4.234 for Subtitles, and 3.591 for Wikipedia. Words falling above or below these CD values will be referred to as high-diversity and low-diversity words, respectively.

When data were divided by high- and low-diversity words, skip-gram average needs consistently provided better fits to the behavioral and CD/WF data than CBOW average needs (see Table 2). However, for low-diversity words, the relationship is consistently in the wrong direction.

We considered the possibility that skip-gram may be organizing words according to their contextual informativeness. Consider the example word cat as it relates to the topic of animals. When discussing animals, it is very likely that knowledge of cat will be needed (due to their ubiquity and exemplar membership). That is to say, cat has high needs probability, given cues about animals. However, the occurrence of cat is not necessarily informative of a context of animals due to the fact that the term is used in a wide variety of contexts—for example, contexts about Dr. Seuss (the cat in the hat), jazz (cool cats), musicals (Cats), and the Internet (pictures of cute animals). Now consider the example of the word capybara. Capybaras as large rodents related to guinea pigs. Due to its relative obscurity, knowledge of capybara is seldom needed when discussing animals. However, if capybara is mentioned, there is a large chance that the context of discussion is about animals (as opposed to Dr. Seuss, jazz, musicals, or the Internet). It seems plausible that skip-gram may be organizing words according to their informativeness of contexts of appearance; skip-gram learns word embeddings by predicting the identity of missing context words from a word that appeared in that context.

The information retrieval literature makes use of a measure called term frequency inverse document frequency (tf-idf). Tf-idf is measured as a word’s frequency of occurrence within a specific document, multiplied by the negative log of the percentage chance it appears in any particular context (typically defined in terms of a unique document). Tf-idf measures how specific a word’s use is to a particular context, weighted by its prevalence within that particular context; it is a measure of informativeness of context. Tf-idf happens to have a U-shaped relationship with log WF, much like average needs calculated from skip-gram.

Given that tf-idf has a U-shaped relationship with log WF, and that skip-gram average need estimates also had a U-shaped relationship with log WF, we tested the possibility that the two measures might be related. For each word, we calculated its average informativeness of context by averaging its tf-idf values across each document the word occurred in. We then correlated average informativeness of context with skip-gram average needs estimates. Within the Subtitles corpus, skip-gram average need and average informativeness of context were reliably, but weakly, correlated (r = -.25, p = 0). Relationships of similar strengths were observed for the other corpora. Only weak evidence is available that skip-gram is organizing words according to their informativeness of context, as measured by tf-idf.

Because skip-gram average need displayed a U-shaped relationship with measures of log WF and log CD, it was anticipated that skip-gram average need would also have a nonlinear relationship with measures of lexical access. This would account for the low correlations observed between lexical access measures and skip-gram average need in some of the corpora. However, to our surprise, skip-gram average need displayed very weak evidence of having a nonlinear relationship with lexical access measures (see Table 1). The effects are, again, primarily linear, though much more variable in magnitude between corpora than CBOW average need. The variability of magnitude does seem to coincide with the degree to which skip-gram average needs are linearly related to log WF and log CD measures.

Finally, although CBOW average needs do predict measures of lexical access reasonably well (see Table 1), log WF and log CD both provide a much better fit to the behavioral data (see Table 1). So although the primary predictions of this experiment were satisfied, log WF and log CD still provide the superior quantitative estimates of average need.

Discussion

Memory retrieval is optimized to produce the most relevant items, given contextual cues (J. R. Anderson, 1991; J. R. Anderson & Milson, 1989; J, R. Anderson & Schooler, 1991). Optimization is performed over associative relationships present in the stream of experience. The current hypothesis was that CBOW and skip-gram models are solving a similar type of problem as human memory retrieval processes; they are learning to predict the presence of words in a contiguous string of text, given other linguistic cues available in that text. It was anticipated that these models would be able to replicate the main prediction of Anderson’s rational analysis of memory. Namely, that estimates of needs probability derived from them would be linearly related to lexical access times. We further validated these estimates by comparing them to other measures of average need (log CD/WF).

CBOW results were consistent with predictions. These findings lend support for the hypothesis that CBOW and human memory are solving a same, or similar, computational problem of cue-memory association. Results from the skip-gram model were more complicated. Specifically, the skip-gram model appears to consistently overestimate the average need of uncommon words, resulting in a u-shaped relationship between average need derived from the models and log CD/WF.

When uncommon words were removed from analyses, the skip-gram model provided a superior fit to measures of lexical access and log CD/WF. When instead only uncommon words were analyzed, the skip-gram model also provided better fits than CBOW, but in the direction opposite what was expected. These findings lead to the conclusion that, although skip-gram is forming useful cue-memory associations, CBOW’s computational description may be closer to processes underlying the optimization of human memory retrieval than the skip-gram model. CBOW better fits lexical access times across the full range of common and uncommon words. This finding was expected; the learning problem that CBOW is solving (predict a word from context) is more similar in description to needs probability than the learning problem that skip-gram is solving (predict context from a word).

What was not expected was the shape of the relationship between skip-gram average needs and lexical access times. The nonlinearity seen between skip-gram average need and log CD/WF is not translating to nonlinearity between skip-gram and lexical access times; it is merely diminishing the magnitude of the effect. It is unclear what this lack of transitivity implies about the skip-gram model and its relationships to log WF/CD and lexical access measures.

We considered the possibility that skip-gram is organizing words according to their informativeness of contexts of appearance. However, when average needs estimated from skip-gram were compared to tf-idf measures, relatively weak correlations were observed. No compelling evidence was found that skip-gram is organizing words according to context informativeness as measured by tf-idf.

We note that the results pertaining to average need are not tautological. First, in line with the second prediction of this experiment, relationships between measures of average need and the various dependent measures employed in this study were only observed in cases where nonrandom word embeddings were used. Second, consistent with the third prediction of this experiment, the quality of fits depended on the size of the corpus models were trained on. Embeddings learned from large corpora provide better fits, not because the corpora are larger per se, but because larger corpora allow for the learning of more differentiated word meanings. This effect is most clearly seen when comparing the nested subsets of the Subtitles corpus. Consider doubling the size of a corpus by exactly duplicating its content. This does not change the statistical properties of word co-occurrences and consequently should have no impact on learned embeddings. Although not described in the Results section, we verified this by comparing model a trained on a corpus constructed out of duplicating the content of the Subtitles-180 M corpus (360 million token in size, total) to models derived from (1) the full Subtitles corpus (365 million tokens in size, total) and (2) a single copy of the Subtitles-180 M corpus (180 million tokens in size, total). Average need measures were calculated from the resulting CBOW model and regressed on ELP lexical decision data using polynomial regression. The linear term for the duplicate-content Subtitles-180 M corpus accounted for 20.74% of the variance in lexical decision times—in line with the 20.67% seen for the regular Subtitles-180 M corpus, and lower than the 22.45% seen for the full Subtitles corpus (see Table 1). The marginal 0.07% difference in fit seen between the duplicate-content and regular Subtitles-180 M corpus is likely due to what effectively amounts to adding an additional training epoch over the input data. It is the statistical properties of a corpus, not its size, that determines the content of learned embeddings. All other things being equal, larger corpora will generally contain more variability of word use than smaller corpora (Baayen, 2001; Heaps, 1978). This increased variability in word use leads to the learning of better structured and more differentiated word embeddings, which are required to produce behavior consistent with predictions from J. R. Anderson’s (1991) rational analysis of memory.

The reported results depend on the existence embeddings that carry information about a word’s expected use. This suggests that psycholinguistic effects captured by other measures of average need (i.e., log WF/CD) are semantic in nature; effects of these variables reflect the fact that we dynamically organize semantic knowledge in accordance to its needs probability, given contextual cues present in a memory retrieval context. A similar claim has been made by others who have studied needs probability (e.g., Jones et al., 2012) and results of this nature provide a challenge for memory models that account for frequency effects, as Baayen (2010) put it, by appealing to meaningless “counters in the head” (e.g., Coltheart et al., 2001; Murray & Forster, 2004; Seidenberg & McClelland, 1989).

DSMs typically throw away information about vector magnitude; embeddings are normalized to unit length. Johns, Jones, and Recchia (2012) have argued that when embeddings encode for the various contexts of use of a word, an embedding’s vector magnitude becomes informative of the semantic distinctiveness of that word. Information about semantic distinctiveness is encoded as the vector magnitude of word embeddings. Thus, something akin to average need may be explicitly represented in memory and used to prioritize access to knowledge contained within semantic memory, explaining semantic distinctiveness, WF, and CD effects.

The current results demonstrate that information about average need can also be recovered from certain classes of DSMs at the point of memory retrieval, even when information about average need is not explicitly encoded. When embeddings are learned by applying a learning objective that is consistent with the principle of likely need, e.g., CBOW, it is not necessary for average need to be encoded in memory to account for effects having to do with average need, such as CD and WF (at least, as they pertain to lexical access). The observable effects of these variables can be explained as the consequence of the distributional properties of retrieval cues interacting with the distributional properties of semantic memory during the process of memory retrieval. Such effects are a free lunch from processes of memory retrieval when semantic representations are learned by applying a learning objective that is consistent with the principle of likely need; commonly occurring words are more likely to be needed than uncommon words, given an arbitrary set of retrieval cues.

Measures of average need produced by CBOW have a linear relationship with measures of lexical access. This is a prediction that comes directly from J. R. Anderson’s (1991) rational analysis of memory, and the main focus of this current research. The two other measures motivated by Anderson’s concept of needs probability, contextual diversity (Adelman et al., 2006) and semantic distinctiveness (Jones et al., 2012), both have logarithmic relationships with lexical access times. Although these measures are capturing variation that is relevant for lexical access, the lack of linear relationship suggests that underlying models and motivations for these measures have a qualitative mismatch with Anderson’s theory. However, the fact that CBOW correctly models the shape of this relationship (1) encourages psychologists to treat CBOW as a psychologically plausible computational model of semantics, and consequently (2) supports the argument that we may wish to conceptualize CD/WF effects, not as being explicitly encoded in memory structures, but as being the byproduct of retrieval cues interacting with memory structures that are shaped to be optimally accessible with respect to needs probability over an historic distribution of memory retrieval contexts.

As a final point, CBOW average needs had a linear relationship with lexical access times and that fit was moderate in magnitude. However, the amount of variance accounted for was still substantially less than log CD/WF (see Table 1). Thus the possibility arises that, although it does capture the qualitative effect that was expected, quantitatively CBOW may be a poor model of memory retrieval. In the next experiment, we argue the reason for this is that our calculation of average need treats each dimension in an embedding vector as equally relevant and this is an unreasonable constraint on model testing.

Experiment 2

Word embeddings specify the dimensions along which the uses of words are most clearly distinguished. Conceptually, embeddings are feature vectors that represent a word’s meaning. However, these feature vectors are learned from experience without supervision rather than being specified by behavioral research findings (e.g., McRae et al., 2005) or pseudorandomly generated (e.g., Hintzman, 1988). Interestingly, even though embedding methods are never instructed on what types of features to learn, what they do learn is consistent with the primary dimensions over which humans make semantic distinctions: valence, arousal, dominance, concreteness, animacy. This has been demonstrated for the skip-gram model (Hollis & Westbury, 2016; Hollis, Westbury, & Lefsrud, 2016) as well as CBOW (Hollis & Westbury, 2017). Both models are honing in on, to some extent, psychologically plausible semantic spaces in an unsupervised manner.

It is useful to think about context of memory retrieval as the semantic features underlying probe stimuli (e.g., Howard & Kahana, 2002). It is not the word dog that provides context as a probe stimuli. Rather, it is the underlying meaning of dog that provides context. Two physically dissimilar stimuli (e.g., dog; schnauzer) act very similarly as context because they are united by their underlying semantics.

In a multicue retrieval context, not all cues will be equally salient; some will most certainly be attended to more or less than others, depending on the task or goal. Indeed, individual features learned by the skip-gram model vary substantially in how much they contribute to the prediction of forward association strengths (Hollis & Westbury, 2016). Since not all features are equally relevant in any particular task context, we should expect the ability of embedding models in predicting memory retrieval effects to depend on whether the contributions of individual semantic features are equally weighted or left free to vary.

In Experiment 1, needs probability was measured via cosine similarity. One of the limitations of cosine similarity is that it weights each feature equally; as a means of estimating needs probability, cosine similarity treats each available semantic feature as if it were equally relevant to the task being modeled. Consequently, the results of Experiment 1 are likely an underestimate of how well CBOW and skip-gram embeddings fit lexical access data.

The purpose of Experiment 2 was to provide an assessment of how well CBOW and skip-gram embeddings fit behavioral measures of lexical access when the contributions of each embedding dimension could have independently weighted contributions.

Method

Experiment 2 used the same words, embeddings, and dependent measures as Experiment 1. However, average needs were not calculated according to the method of Experiment 1. Instead, we took a regression approach. Embedding dimensions were regressed on dependent measures as a means of assessing the extent to which variation within a particular embedding dimension (i.e., a semantic feature) could account for variation within the dependent measures. This method accommodates for the fact that semantic features may differ in terms of salience or relevance for any particular task and thus may contribute to processes of memory retrieval by varying degrees.

Results

For ease of readability, we only report results for ELP lexical decision data. Patterns of results for the BLP lexical decision data and ELP naming data were similar but differed in the amount of variance that could be accounted for (BLP lexical decision data are generally most predictable, followed by ELP lexical decision data and then ELP naming data). We likewise limit written results to the Subtitles corpus for ease of readability. Summary results for other corpora are presented in tabular form. All results from regression analyses are after model validation using k-fold cross validation (k = 10). All regressions were performed over the same n = 27,056 used in Experiment 1, unless otherwise stated.

Predictive validity of CBOW and skip-gram embeddings

Individual dimensions from CBOW and skip-gram word embeddings (length 300 each) were regressed on ELP lexical decision times as a means of assessing the relevance of each feature to the task of lexical decision.

CBOW embeddings from the Subtitles corpus accounted for 40.47% of the variance in ELP lexical decision times. The value for the CBOW randomization model was much lower: 0.14%. In contrast, CBOW average needs from Experiment 1 only accounted for 22.37% of the variance in ELP lexical decision times. Leaving each dimension of the model free to contribute independently substantially improves the fit of the model. Readers are reminded that all results from regression analyses are after model validation using k-fold cross validation (k = 10), so increases in fit are not due to simply having a regression equation with more degrees of freedom.

To our surprise, skip-gram embeddings derived from the Subtitles corpus also accounted for a large portion of the variance in ELP lexical decision times: 37.52%. The value for the skip-gram randomization model was comparable to the CBOW randomization model: 0.22%. The skip-gram average needs from Experiment 1 accounted for 22.56% of the variance.

We performed a series of regressions to see how much variance CBOW embeddings accounted for beyond skip-gram embeddings and vice versa. Skip-gram embeddings accounted for 4.33% unique variance over CBOW embeddings, whereas CBOW embeddings accounted for 7.29% unique variance over skip-gram embeddings. Despite using converse learning objectives, the two models are honing in on largely the same variance, as it pertains to lexical decision.

An observation from Experiment 1 was that the skip-gram model overestimates the likely need of uncommon words. When words were split according to common and uncommon words, skip-gram provided a better fit to lexical decision times that CBOW on both halves, but in the wrong direction for uncommon words. This led to the conclusion that skip-gram average needs were capturing meaningful variation in lexical decision times, but for perhaps incidental reasons; the model does not display the correct qualitative pattern across the full range of words.

For the current experiments, we limited analyses to only those words that occurred five times or more in each of the five corpora. This necessarily biases analyses away from very uncommon words, which is not ideal because those are the words that are most highly informative for comparing CBOW and skip-gram as models of semantic memory. We thus reran the above regressions using a larger set (n = 35,783) of words. This set composed the intersection between (1) all words that ELP lexical decision data were available for, and (2) all words where CBOW and skip-gram embeddings were available for in the Subtitles corpus. This primarily included very uncommon words and shifted the median word frequency from 2.69 per million (982 occurrences) to 1.52 per million (545 occurrences).

CBOW embeddings accounted for more variance in lexical decision times when the larger word set was used: 41.90% versus 40.47%. In contrast, skip-gram embeddings accounted for less variance when the larger word set was used: 29.36% versus 37.52%. We again tested how much variance CBOW and skip-gram embeddings accounted for, above the other. CBOW accounted for 16.38% of the variance over skip-gram embeddings, whereas skip-gram only accounted for 3.84% unique variance above CBOW embeddings. When effects of length and orthographic neighborhood size were first accounted for, these numbers dropped to 9.14% for CBOW and 2.21% for skip-gram. Over a larger set of words that is more inclusive of very uncommon words, CBOW substantially outperforms the skip-gram model at accounting for lexical access times.

Consistent with analyses from Experiment 1, CBOW provided a much better fit to lexical access data than skip-gram across the full range of common and uncommon words. These effects are not spurious and instead have to do with the information contained within embeddings, as evidenced by the poor performance of randomization models.

Comparing CBOW embeddings to CD and WF

For comparison, log WF calculated from the Subtitles corpus accounted for 35.27% of the variance in ELP lexical decision times and log CD accounted for 35.76% of the variance. This is lower than the 40.47% accounted for by CBOW embeddings.

We predicted that CBOW embeddings should account for little to no variance in lexical access measures above and beyond WF and CD on the thesis that embeddings are feature vectors of word meaning, and that CD and WF are measures of how likely needed a word’s meaning is, aggregated over numerous semantic features about that word’s underlying meaning. Both sources are capturing the effects of many varied semantic sources on lexical access. Likewise, it was expected that neither CD nor WF would account for much variance in lexical access measures beyond that accounted for by CBOW embeddings.

Word length and orthographic neighborhood size are also both measures thought to play an important role in lexical decision. Indeed, a common benchmark for assessing the relevance of measures thought to play a role in word processing is to see if they account for any variance in behavioral measures above and beyond that accounted for by word length, orthographic neighborhood size, and log WF (Adelman, Marquis, Sabatos-DeVito, & Estes, 2013).

In terms of measuring the predictive validity of CBOW embeddings, it is pertinent to test if they account for any variation beyond length and orthographic neighborhood size. The difficulty is, when performing a direct comparison of the ability of CBOW embeddings, WF, and CD to account for unique variance in lexical decision, also including word length and orthographic neighborhood size may unfairly favor CBOW embeddings. It is known that CD and WF are both correlated with length and orthographic neighborhood size, however we have not yet tested the relationship between these orthographic measures and CBOW embeddings; some of the variance that would be accounted for by WF and CD is instead being accounted for by length and orthographic neighborhood size. To accommodate for this possibility, we perform two sets of comparisons between CBOW, WF, and CD: one set including length and orthographic neighborhood size and one set not including these variables. In both sets of analyses, the goal was to identify how much variance each of CBOW, WF, and CD accounts for in lexical decision times above other measures, as well as how much variance each measure accounted for on their own. Results are presented in Table 3.

These model comparisons produced some interesting patterns of results. First, it was very clear that CBOW was much more sensitive to corpus size than WF and CD. When trained on the TASA corpus, CBOW performed relatively poorly at predicting LDRT effects (15.78% variance accounted for by itself), compared to CD and WF (28.89% and 28.80%). However, as corpus size increased, CBOW had consistent performance gains. This can most clearly be seen when looking across the nested subsets of the Subtitles corpus: 35.35% variance of Subtitles-90 M, 38.75% variance on Subtitles-180 M, and 40.47% on the full Subtitles corpus. In contrast, neither CD nor WF showed substantial gains in predictive validity across these three corpora (CD 35.63%, 35.58%, 35.76%; WF 35.06%, 35.12%, 35.27%). These results are consistent with previous findings. Brysbaert and New (2009) found that the predictive validity of word frequency measures quickly plateau in quality after 20 million tokens. However, the quality of CBOW embeddings, as measured on a variety of different tasks, continues to improve well into billions of tokens. CBOW does, however, perform quite poorly on small corpora where insufficient distributional information is available to adequately learn vector representations for words (Lai, Liu, Xu, & Zhao, 2016). To understand this difference in performance between CD, WF, and CBOW, it is important to consider the differences in the ranges of variability between word frequency and co-occurrence frequency: there is far more variability in the distribution of co-occurrences than word frequencies because word frequency is determined by words whereas co-occurrence is determined by Word × Word relationships; with large numbers of elements, there are always vastly more possible combinations of elements than elements themselves. It makes sense for any sort of measure derived from frequencies to arrive at a stable estimate before a measure derived from co-occurrences. It likewise makes sense for co-occurrence measures to perform much poorer on small data sets for the same reasons; for small corpora, co-occurrence information is too sparse despite individual words possibly having occurred numerous times.

A second interesting pattern of results is that CBOW accounts for more unique variance than either WF or CD on the three largest corpora (i.e., Subtitles-180 M, Subtitles, Wikipedia). This is most pronounced on the Wikipedia corpus where CBOW accounts for 25.59% of the variance in lexical access measures above CD and WF (9.84% after accounting for length and orthographic neighborhood size), whereas CD and WF account for 0.00% and 0.16%, above the other two variables, respectively (0.27% and 0.05% after length and orthographic neighborhood size). This is perhaps an unfair comparison, given that CD and WF are effectively the same measure on this corpus and over these n = 27,056 words (r > .99). But even when looking at these variables in a pairwise fashion, the differences in unique variance is very large: 26.12% and 25.79% for CBOW over CD and WF respectively (9.89% and 10.01% after length and orthographic neighborhood size) compared to 6.19% and 6.35% for CD and WF over CBOW respectively (4.30% and 4.08% after length and orthographic neighborhood size).

A third interesting pattern is that as corpus size grows, less unique variance is attributable to CD and WF and more to CBOW. So it is not just that CBOW is consistently capturing more of the variance that would otherwise be attributable to CD and WF as corpus size increases (less unique variance attributable to CD and WF). In large corpora, CBOW is also picking up on additional information that CD and WF do not carry (more unique variance attributable to CBOW). This pattern is clearly seen when looking at the unique variance attributable to each variable on the two-variable comparisons (e.g., CBOW after CD vs. CD after CBOW) over the three Subtitles corpora. The pattern is present whether or not effects due to length and orthographic neighborhood size are accounted for first.

As a final observation, it bears noting that although CBOW and the other two variables have quite a bit of shared variance, CBOW never completely excludes the other two, nor vice versa. Focusing specifically on pairwise comparisons over the Subtitles corpora, where none of CBOW, CD and WF are at large deficits (unlike TASA, which is likely too small of a corpus for CBOW to be effectively trained on, and Wikipedia where CD and WF measures are exceptionally poor), the amount of unique variance attributable to CBOW ranges from 6.73% to 8.92% (3.49% to 4.79% after length and orthographic neighborhood size), versus 4.05% to 7.04% for CD (2.42% to 4.55% after length and orthographic neighborhood size), and 3.72% to 6.44% for WF (1.87 to 3.64% after length and orthographic neighborhood size).

Discussion

The results of Experiment 2 provide support for two conclusions. First, CBOW has more psychological relevance than skip-gram as a model of word meaning. This is evidenced by the fact that CBOW embeddings account for substantially more variance in lexical access times than skip-gram embeddings when considered across the full range of common and uncommon words.

Second, the effects that CD and WF have on lexical access are largely semantic in nature. This is supported by that fact that large portions of the variance in lexical access times accounted for by CD and WF can also be accounted for by embeddings produced by CBOW, which are semantic feature vectors. These results helps reconcile the fact that so few semantic features have been found that impinge strongly on tasks that require access to word meaning, above and beyond WF. We adopt J. R. Anderson’s (1991) stance that the primary consideration of memory retrieval is needs probability. Needs probability is definitionally dependent on semantics. CD and WF are indexing the cumulative effects of numerous semantic factors that influence the need for knowledge across a broad range of contexts. From this theoretical stance, the question of where variation in retrieval times is coming from should actually be approached from a direction that is opposite the typical one: How much variance does WF account for in lexical decision times beyond semantic features? These results suggest that the answer is, at most, very little.

Third, in terms of operationalizations of average need, CBOW embeddings provide the superior account when used with large corpora, whereas CD and WF appear better suited for smaller corpora (less than ~90 million tokens). However, in no cases did CBOW embeddings exclude CD or WF from predicting lexical decision times, or vice versa. It remains unclear whether this is because the measures are actually picking up on unique variation, have differential dependency on corpus size (possibly, CBOW might account for all of the relevant variation with a sufficiently large corpus), or due to lack of parameter optimization of CBOW. All of these options should be pursued in future research. At the current moment, however, we can confidently conclude that CBOW embeddings, WF, and CD all have a large degree of shared variance between them insofar as their ability to account for lexical decision times is concerned. This is to be expected under the thesis that all three measures get their predictive power via their shared relationship to the construct of needs probability.

General discussion

The reported research presents multiple findings and theoretical implications that are of relevance to the study of language and memory.

Differences between CBOW and skip-gram

CBOW and skip-gram differ in the predictive validity of lexical access times, with CBOW providing the superior fit across the full range of common and uncommon words in all cases. CBOW average needs also provided the expected linear fit to logarithmic CD values, whereas those of the skip-gram model displayed a U-shaped relationship. Furthermore, CBOW lent itself to psychological interpretation more readily than skip-gram. Overall, of the two models, CBOW appears to be the one that will be of most interest to psychologists; its behavior and formal learning objective are both consistent with J. R. Anderson’s rational analysis of memory (1991; J. R. Anderson & Milson 1989; J. R. Anderson & Schooler, 1991).

It bears noting that of the two models, skip-gram generally produces more desirable behavior on engineering problems, particularly when very large corpora (multiple millions of tokens) are used to learn embeddings (e.g., Mikolov, Chen, et al., 2013; Mikolov, Sutskever, Chen, Corrado, & Dean, 2013). However, it would be fallacious to claim that higher accuracy on complex tasks necessarily means one model is more in line with actual human performance than another model. Humans are rarely perfectly accurate on anything, and correctly modeling errors is often stronger evidence for a model than correctly modeling nonerrors. Thus, for any task, further gains past some performance threshold start becoming strongly indicative of very unhumanlike behavior.

Experiment 1 demonstrated that, within skip-gram, words with the highest global similarity to all other words are those that are either very frequent or very infrequent. If ‘knife’ is a probe word, then of the near-synonyms of cut, slash, and lacerate, the high-frequency cut and the low-frequency lacerate are expected to be more accessible than the middling-frequency slash. As model benchmarking tests are made more difficult (i.e., by drawing on more obscure, less frequent, test items), skip-gram will necessarily outperform CBOW due to how the two models organize their entries; low-frequency words in skip-gram are, on average, more similar to an arbitrary probe than they are in CBOW (Experiment 1) and are thus more accessible on average.

Skip-gram prioritizes retrieval of information that is as general as possible (high frequency) or as specific as possible (low frequency), downweighting the middle range. For applied problems like returning results from a search engine query, retrieved documents would be optimally useful for users who either want very general, entry-level information or very specific and focused knowledge, ignoring the middle. At face value, this appears to be a desirable property for many information retrieval systems, in contrast to CBOW where very specific information would be difficult to access. So although skip-gram may be better solving an interesting information retrieval problem, it may still not be a more useful model of human semantic memory than CBOW.

Word frequency and contextual diversity effects

The results of both Experiment 1 and Experiment 2 bolster previous claims that WF and CD effects in language processing tasks are likely semantic in nature (e.g., Jones et al., 2012). Experiment 1 motivates this conclusion by demonstrating that average needs calculated from CBOW are strongly correlated with both log WF and log CD. Experiment 2 motivates this conclusion by demonstrating that a large portion of the variability in lexical access measures accounted for by CD and WF can also be accounted for by the semantic features comprising CBOW embeddings; when considering the Subtitles corpus (i.e., the corpus that produces CD and WF measures with the most predictive validity), CD alone accounts for 35.76% of the variance in lexical access times. However, only 2.42% is attributable uniquely to CD, above and beyond the effects of CBOW embeddings, word length, and orthographic neighborhood size. For WF, this number is 1.87%. These results are difficult to reconcile for models that explain CD or WF effects in terms of mechanisms that are indifferent to the semantic content of memory.

It is possible that the 2.42% of variance attributed to Subtitles-based CD and 1.87% to WF per se reported here is an overestimate. Experiments 1 and 2 both identified a trend that as corpus size grew, the amount of variance uniquely attributable to CD and WF was diminished. It is a fair question whether or not this trend continues if larger corpora are employed. This is in addition to the facts that (1) no parameter optimization was performed for CBOW, and (2) it is difficult to know how much one is distorting the relevant co-occurrence structure of texts when preprocessing it to remove nonalphabetical characters: a problem that has no consequence for the calculation of WF and CD but most certainly has consequences for models that learn word meanings from co-occurrence structure.

An objection to the above argument is that humans are unlikely to have much more linguistic exposure than what the models in the currently reported experiments have received. Thus, there is little psychological relevance in considering the effects of corpora larger than are used in the current research. Brysbaert, Stevens, Mandera, and Keuleers (2016) point out that the theoretical maximum linguistic exposure of an individual per year is quite limited. Estimates range between 11.688 million tokens/year (based on the distributions of social interactions and speech in those interactions), 27.26 million tokens/year (watching television 24 hours per day), and 105 million tokens/year (reading 16 hours per day). Thus, a human will have been exposed to, at maximum, between 220 million and 2 billion words by the time they are 20 years old, depending on the source by which linguistic exposure is estimated. It is very likely that corpora used in the current research already exceed the realistic linguistic exposure of most university undergraduates.