Abstract

Here, we investigate how audiovisual context affects perceived event duration with experiments in which observers reported which of two stimuli they perceived as longer. Target events were visual and/or auditory and could be accompanied by nontargets in the other modality. Our results demonstrate that the temporal information conveyed by irrelevant sounds is automatically used when the brain estimates visual durations but that irrelevant visual information does not affect perceived auditory duration (Experiment 1). We further show that auditory influences on subjective visual durations occur only when the temporal characteristics of the stimuli promote perceptual grouping (Experiments 1 and 2). Placed in the context of scalar expectancy theory of time perception, our third and fourth experiments have the implication that audiovisual context can lead both to changes in the rate of an internal clock and to temporal ventriloquism-like effects on perceived on- and offsets. Finally, intramodal grouping of auditory stimuli diminished any crossmodal effects, suggesting a strong preference for intramodal over crossmodal perceptual grouping (Experiment 5).

Conscious perception involves the efficient integration of sensory information from different modalities. On the one hand, crossmodal integration can make perceptual experience richer and more accurate if the different modalities provide complementary information about single objects or events. On the other hand, however, erroneous grouping of crossmodal information (e.g., grouping sources that do not belong together) can lead to distortions of conscious perception. To get around this problem, it is essential that there should be efficient brain mechanisms of intra- and intermodal perceptual grouping that evaluate whether streams of sensory information should be combined into single perceptual constructs or not. Although humans can be aware of some of these mechanisms, other mechanisms may play their prominent role outside of awareness (Repp & Penel, 2002). Research on the unity assumption (i.e., the extent to which observers treat highly consistent sensory streams as belonging to a single event) has demonstrated that successful crossmodal integration of auditory and visual components in speech perception requires conscious perception of the two sensory inputs as belonging together (Vatakis & Spence, 2007). Such dependency has not been found for audiovisual integration with nonspeech stimuli (Vatakis & Spence, 2008). Even within single modalities, subconscious perceptual grouping mechanisms play an important role, since the global perceptual organization of spatially or temporally separated “chunks” of sensory information can have distinct effects on “local” perception (e.g., Klink, Noest, Holten, van den Berg, & van Wezel, 2009; Watanabe, Nijhawan, Khurana, & Shimojo, 2001).

In multimodal integration, the brain typically relies more heavily on the modality that carries the most reliable information (Alais & Burr, 2004; Burr & Alais, 2006; Ernst & Bülthoff, 2004; Recanzone, 2003; Wada, Kitagawa, & Noguchi, 2003; Walker & Scott, 1981; Welch & Warren, 1980; Witten & Knudsen, 2005). The assignment of reliability can be based on intrinsic properties of individual sensory systems or on the signal-to-noise ratio of the available sensory input. The visual system, for example, has a higher spatial resolution than does the auditory system (Witten & Knudsen, 2005). Thus, when visual and auditory information about the location of a single object in space are slightly divergent, the perceived location of the audiovisual object will be closer to the actual visual location than to the actual auditory location (Alais & Burr, 2004; Welch & Warren, 1980; Witten & Knudsen, 2005). Such an “illusory” perceived location is the basis of every successful ventriloquist performance. For the temporal aspects of perception, the auditory system is usually more reliable and, thus, more dominant than the visual system (Bertelson & Aschersleben, 2003; Freeman & Driver, 2008; Getzmann, 2007; Guttman, Gilroy, & Blake, 2005; Morein-Zamir, Soto-Faraco, & Kingstone, 2003; Repp & Penel, 2002). This is strikingly demonstrated by the finding that a single light flash is perceived as a sequence of multiple flashes when it is accompanied by a sequence of multiple auditory tones (Shams, Kamitani, & Shimojo, 2002).

The perception of time or event duration is one specific case where conscious perception often deviates from the physical stimulus characteristics (Eagleman, 2008). Since time is a crucial component of many perceptual and cognitive mechanisms, it may be surprising that the subjective experience of the amount of time passing is distorted in many ways, such as by making saccades (Maij, Brenner, & Smeets, 2009; Morrone, Ross, & Burr, 2005; Yarrow, Haggard, Heal, Brown, & Rothwell, 2001) or voluntary actions (Park, Schlag-Rey, & Schlag, 2003), by the emotional state of the observer (Angrilli, Cherubini, Pavese, & Manfredini, 1997), or by stimulus properties such as magnitude (Xuan, Zhang, He, & Chen, 2007), dynamics (Kanai, Paffen, Hogendoorn, & Verstraten, 2006; Kanai & Watanabe, 2006), or repeated presentation (Pariyadath & Eagleman, 2008; Rose & Summers, 1995). Moreover, if temporal sensory information about duration is simultaneously present in multiple modalities, crossmodal integration can also cause distortions of subjective time perception (e.g., Chen & Yeh, 2009; van Wassenhove, Buonomano, Shimojo, & Shams, 2008). For example, it is known that when sounds and light flashes have equal physical durations, the sounds are subjectively perceived as longer than the light flashes (Walker & Scott, 1981; Wearden, Edwards, Fakhri, & Percival, 1998). Furthermore, when auditory and visual stimuli of equal physical duration are presented simultaneously, the auditory system dominates the visual system and causes the durations of visual stimuli to be perceived as longer than they physically are (Burr, Banks, & Morrone, 2009; Chen & Yeh, 2009; Donovan, Lindsay, & Kingstone, 2004; Walker & Scott, 1981).

Time perception mechanisms are classically explained with (variants of) the scalar expectancy theory (SET; Gibbon, 1977; Gibbon, Church, & Meck, 1984). SET proposes an internal clock mechanism that contains a pacemaker emitting pulses at a certain rate. During an event, a mode switch closes and allows for emitted pulses to be collected into an accumulator. The number of pulses in the accumulator at the end of the timed event is compared against a reference time from memory. This comparison determines the perceived duration in a linear fashion: More accumulated pulses means longer perceptual durations. Whereas SET offers explanations for many aspects of time perception and distortion, it remains unclear how duration information from multiple modalities is integrated to allow a crossmodal estimation of event durations.
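The pacemaker-accumulator idea can be illustrated with a small simulation. This is a minimal sketch, not a model fitted in this study: the Poisson pacemaker, the pulse rates of 50 and 60 Hz, and the function names are assumptions chosen for illustration only.

```python
import random

def count_pulses(duration_ms, rate_hz, rng):
    """Count pacemaker pulses collected while the mode switch is closed.

    Assumption: the pacemaker is a Poisson process, so inter-pulse
    intervals are exponential with mean 1000 / rate_hz milliseconds.
    """
    pulses_per_ms = rate_hz / 1000.0
    elapsed_ms = rng.expovariate(pulses_per_ms)
    pulses = 0
    while elapsed_ms <= duration_ms:
        pulses += 1
        elapsed_ms += rng.expovariate(pulses_per_ms)
    return pulses

def judged_longer(pulses, reference_pulses):
    """More accumulated pulses than the remembered reference reads as 'longer'."""
    return pulses > reference_pulses

rng = random.Random(0)
n_trials = 2000
# Hypothetical rates: a slower and a faster clock timing the same 500-ms event.
mean_slow = sum(count_pulses(500, 50, rng) for _ in range(n_trials)) / n_trials
mean_fast = sum(count_pulses(500, 60, rng) for _ in range(n_trials)) / n_trials
print(round(mean_slow, 1), round(mean_fast, 1))
```

With these illustrative rates, the same physical duration yields more pulses on average under the faster clock, which is how a change in pacemaker rate would translate into a longer perceived duration.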

In general, the perceived duration of an event can directly be influenced by a change in pacemaker rate, a change in mode switch open/close dynamics, or distortions in memory storage and retrieval (Penney, Gibbon, & Meck, 2000). Within the SET framework, the difference in the perceived duration of equally long visual and auditory stimulus durations has been attributed to modality-specific pacemaker rates for visual and auditory time (Wearden et al., 1998). Additionally, the dilation of subjective visual stimulus durations by simultaneously presented auditory stimuli has been explained by changes in pacemaker rate, and not in mode switch latency (Chen & Yeh, 2009). Using a duration bisection procedure, it has also been demonstrated that distortions in the memory stage of SET can occur when a current sensory duration is compared against a previously trained reference duration that is stored in memory (Penney et al., 2000). In this paradigm, observers are trained to discriminate between short- and long-duration signals (both labeled anchor durations). In a subsequent test phase, they judge whether the durations of novel stimuli are closer to the short or to the long anchor duration. If both auditory and visual anchor durations have to be simultaneously kept in memory, a memory-mixing effect occurs: The subjectively long auditory anchor duration and the subjectively short visual anchor duration mix into an intermediate reference duration that is perceived as shorter than the auditory anchor but longer than the visual anchor of equal physical duration (Penney et al., 2000).

Although some authors have attributed a difference in perceived internal clock rate to an attentional effect at the level of the mode switch (Penney, Meck, Roberts, Gibbon, & Erlenmeyer-Kimling, 2005), most have concluded that distortions of subjective time duration do not result from a change in mode switch dynamics but, rather, from a change in the rate of the internal clock (Chen & Yeh, 2009; Penton-Voak, Edwards, Percival, & Wearden, 1996; Wearden et al., 1998). However, since these studies all used auditory and visual stimuli with the same physical on- and offset moments, it cannot be excluded that mode switch dynamics will play a more prominent role in crossmodal time perception when the on- and offsets are not the same. On the contrary, studies showing that the perceived temporal order of multiple visual stimuli can be influenced by the presence of irrelevant sounds (a phenomenon termed temporal ventriloquism; Bertelson & Aschersleben, 2003; Getzmann, 2007; Morein-Zamir et al., 2003) suggest that audiovisual integration may also distort the perceived on- and offset moment of visual events. One way by which temporal ventriloquism might play a role in the perceived duration of a visual event is that it shifts the subjective on- and offset of a visual event toward the on- and offset of an accompanying auditory stimulus. If these shifted subjective visual on- and offsets determine the moment at which the mode switch closes and opens, they could very well modulate the subjective duration of a visual event without changing the rate of the internal clock. Alternatively, the mode switch closing and opening could be determined by the physical, rather than by subjective, on- and offsets. In such a scenario, performance on a visual duration discrimination task should be immune to temporal ventriloquism-like effects.
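The two scenarios for the mode switch can be made concrete with a short sketch. The `capture` weight below is a hypothetical parameter introduced only for illustration: 0 means the switch follows the physical visual on- and offsets, and 1 means it follows the on- and offsets of the accompanying sound. The 50-Hz clock rate is likewise an assumption.

```python
def closed_interval(visual_on, visual_off, sound_on, sound_off, capture):
    """Return (start, end) in ms of the window in which pulses accumulate.

    capture = 0.0: switch timing follows the physical visual on/offset.
    capture = 1.0: switch timing is fully pulled to the sound's on/offset.
    """
    start = visual_on + capture * (sound_on - visual_on)
    end = visual_off + capture * (sound_off - visual_off)
    return start, end

def accumulated_pulses(interval, rate_hz):
    """Expected pulse count for a fixed clock rate over the closed interval."""
    start, end = interval
    return rate_hz * (end - start) / 1000.0

# A 500-ms visual event with a center-aligned 600-ms sound (-50 to 550 ms).
physical = closed_interval(0.0, 500.0, -50.0, 550.0, capture=0.0)
partial = closed_interval(0.0, 500.0, -50.0, 550.0, capture=0.5)
print(accumulated_pulses(physical, 50.0), accumulated_pulses(partial, 50.0))
```

In this sketch the clock rate never changes, yet any nonzero `capture` lengthens the accumulation window and therefore the pulse count, which is the sense in which temporal ventriloquism could alter perceived duration without altering the internal clock.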

The experiments presented here provide evidence for the idea that both the rate of the internal clock and the perceived on- and offset of a visual target stimulus are modulated by crossmodal interactions. Below, we discuss a series of human psychophysical experiments on audiovisual duration perception that exploited a two-alternative forced choice, prospective method of duration discrimination (i.e., observers knew that they would report which of two stimuli had a longer duration). In order to investigate both the hypothesized effects of temporal ventriloquism and the previously demonstrated changes in internal clock rate, we presented auditory and visual stimuli both with and without differences in their physical on- and offsets. We started out by testing the hypothesis that an irrelevant auditory stimulus influences the perceived duration of a visual target but that irrelevant visual stimuli do not affect the perceived duration of an auditory target (Experiment 1). Although such an asymmetry has been shown with different experimental approaches (Bruns & Getzmann, 2008; Chen & Yeh, 2009), it has not yet been shown with the experimental paradigm that we used throughout this study. We then continued by testing the hypothesis that for any such crossmodal effect to occur, the onsets and offsets of the auditory and visual stimuli need to be temporally close enough to evoke some kind of subconscious binding (Experiment 2). The possible role of temporal ventriloquism-like effects was explored in more detail in Experiment 3, where the temporal differences between the on- and offsets of the target and nontarget stimuli in the different modalities were systematically varied. 
In Experiment 4, we set out to determine whether the auditory dominance over visual duration discrimination would be reflected in a complete shift of the time perception system from using visual temporal information to using auditory temporal information, or whether some weighted average would be used that relied more heavily on auditory than on visual information. Our fifth and final experiment controlled for an important possible confound in all the other experiments. Any crossmodal effect on reported perceived durations might be due to a truly altered experience of subjective durations in the target modality caused by crossmodal interactions within the time perception system, but it could also represent a behavioral shift toward reporting perceived durations from the irrelevant nontarget modality instead. Using stimulus conditions in which intra- and crossmodal grouping of stimulus elements are to be expected, we demonstrated that subconscious crossmodal grouping of auditory and visual stimuli is necessary for the crossmodal effects on duration discrimination to occur.

Ultimately, our interpretation of the results is summarized in a schematic SET model for crossmodal duration perception (Fig. 6). In the first stage of the model, stimulus features are perceptually grouped within and/or across modalities. The second stage incorporates a multimodal version of the SET that captures temporal ventriloquism effects in the timing of the mode switch and accounts for additional crossmodal influences with modality-dependent internal clock rates.

General method

The basic experimental setup was the same for all the experiments. The differences between the experiments predominantly concerned the precise timing of stimuli and the kind of perceptual judgment observers were asked to report. Those specific details are described in the Method sections of the individual experiments.

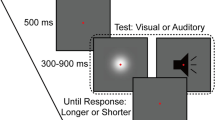

All the stimuli were generated on a Macintosh computer running MATLAB (Mathworks, Natick, MA) with the Psychtoolbox extensions (Brainard, 1997; Pelli, 1997) and were displayed on a 22-in. CRT monitor with a resolution of 1,280 × 1,024 pixels and a refresh rate of 100 Hz. Observers used a head- and chinrest and viewed the screen from a distance of 100 cm. In all the experiments, the observers performed a two-alternative forced choice task; they reported which of two target stimuli they perceived to have a longer duration. The modality of the target stimuli was indicated to the observers on the screen prior to presentation of the stimulus. The visual targets were white circles or squares with a diameter of ~3° of visual angle and with an equal surface area to keep total luminance constant. The luminance of the visual targets was 70 cd/m², and they were presented on a gray background with a luminance of 12 cd/m². The auditory targets were pure tones of 200 Hz, played to the observers through a set of AKG K512 stereo headphones at an SPL of ~64 dB (measured at one of the headphone speakers with a Temna 72-860 sound level meter). All the participants had normal or corrected-to-normal visual acuity and no known auditory difficulties. All the experiments contained randomly interleaved catch trials on which large duration differences (400 ms) were present in the target modality, whereas nontargets were of equal duration. Adequate performance on catch trials was an indication that an observer was performing the tasks correctly. Poor performance on catch trials (less than 75% correct) was reason for exclusion of an observer from the data analysis. For this reason, 6 observers were excluded from Experiment 3, and 2 from Experiment 5. The number of observers that is mentioned in the Method sections of the individual experiments indicates the number of observers who performed adequately on catch trials and whose data were included in the analysis.
All the observers were students or scientific staff in Utrecht University’s departments of psychology and biology, ranging in age from 19 to 35 years.

Experiment 1

Asymmetric audiovisual distortions in duration perception

In this experiment, we investigated whether crossmodal influences between auditory and visual duration perception could be demonstrated with our experimental paradigm. If such effects were found, this experiment would further reveal whether they depended on the temporal properties of nontarget stimuli and/or the temporal relation between the target and nontarget stimuli.

Method

Ten observers (ranging in age from 21 to 30 years, 5 males and 5 females, 2 authors) participated in this experiment. They reported which of two target stimuli they perceived as having a longer duration. Prior to presentation, observers were notified whether the target stimuli would be visual or auditory. The target stimuli were always accompanied by nontarget stimuli in the other modality. Before the actual experiment, all the participants performed a staircase procedure to determine their individual just-noticeable differences (JNDs) for visual and auditory stimuli with a base duration of 500 ms. In this procedure, they essentially performed a task that was the same as the main task—that is, comparing the duration of two stimuli—but here the target stimuli were never accompanied by nontarget stimuli in another modality. The staircase procedure used the Quest algorithm in Psychtoolbox (Watson & Pelli, 1983) and consisted of 25 trials converging on 82% correct, determining the minimal duration difference an observer can reliably detect at a base duration of 500 ms. The staircase was performed 3 times for both modalities, and the average for each modality was taken as the individual observer’s JND. The observer-specific JNDs were then used in the main experiment. The average JND over all observers for auditory stimuli was 78.9 ms (SEM = ±8.9 ms), and for visual stimuli, it was 117.7 ms (SEM = ±8.7 ms).
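One plausible reading of the 82% criterion, offered as an assumption because the Method does not state the psychometric function or its parameters: QUEST is commonly run with a Weibull function, and for a two-alternative task with a 50% guess rate that function passes through roughly 82% correct at its threshold parameter. The sketch below omits the lapse term, and the slope of 3.5 and the 100-ms threshold are illustrative values only.

```python
import math

def weibull_2afc(log_diff, log_threshold, slope=3.5, guess_rate=0.5):
    """Probability correct for a log10 duration difference (no lapse term).

    Assumed form: p = 1 - (1 - guess_rate) * exp(-10 ** (slope * (x - T))).
    """
    growth = 10 ** (slope * (log_diff - log_threshold))
    return 1 - (1 - guess_rate) * math.exp(-growth)

log_jnd = math.log10(100.0)  # hypothetical 100-ms threshold
p_at_threshold = weibull_2afc(log_jnd, log_jnd)
p_at_half = weibull_2afc(math.log10(50.0), log_jnd)
print(round(p_at_threshold, 3))
```

Under these assumptions, a duration difference equal to the threshold is identified correctly on about 82% of trials, whereas a difference half that size falls much closer to the 50% chance level.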

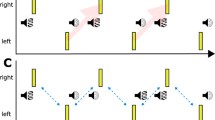

The stimuli in the target modality had a duration of 500 ms ± JND/2, and the order in which the long and short stimuli were presented was counterbalanced. The stimuli in the nontarget modality either could both be 500 ms (δt nontarget = 0) or could be 400 and 600 ms (δt nontarget = 200 ms; see Fig. 1a). When there was a duration difference between the nontarget stimuli, the short nontarget stimulus was always paired with the long target stimulus, and the long nontarget stimulus with the short target stimulus. The temporal midpoints of the target and nontarget stimuli could either be aligned (marked “Center Aligned”) or shifted ±250 ms relative to each other (“Center Shifted”). We aligned stimuli by their midpoint, since we expected temporal ventriloquism to play a role in the perceived on- and offsets of multimodal stimuli. Alignment by midpoints has the benefit of equal temporal deviations between the onsets and offsets of target and nontarget stimuli. The interstimulus interval between the target stimuli, defined as the temporal separation between their midpoints, was 1,500 ms, with a randomly assigned jitter between −50 and +50 ms (Fig. 1a). Experimental conditions were presented in blocks of 40 repetitions. Individual trials started when the observer pressed a designated key on a standard keyboard. The order of these blocks was counterbalanced.
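The timing rules above can be written out as a short sketch that returns on- and offsets for one trial. The function and parameter names are hypothetical, the 100-ms JND is an illustrative value rather than a measured one, and the ±50-ms jitter on the interstimulus interval is omitted for clarity.

```python
BASE_MS = 500.0
ISI_MS = 1500.0  # separation between the two target midpoints (jitter omitted)

def interval(midpoint_ms, duration_ms):
    """Return (onset, offset) in ms for a stimulus centered on midpoint_ms."""
    return (midpoint_ms - duration_ms / 2.0, midpoint_ms + duration_ms / 2.0)

def exp1_trial(jnd_ms, nontarget_diff_ms, center_shift_ms, long_target_first=True):
    """Build the two stimulus pairs of one trial as on/offset tuples."""
    long_target = BASE_MS + jnd_ms / 2.0
    short_target = BASE_MS - jnd_ms / 2.0
    short_nontarget = BASE_MS - nontarget_diff_ms / 2.0
    long_nontarget = BASE_MS + nontarget_diff_ms / 2.0
    # The long target is paired with the short nontarget, and vice versa.
    pairs = [(long_target, short_nontarget), (short_target, long_nontarget)]
    if not long_target_first:
        pairs.reverse()
    trial = []
    for index, (target_ms, nontarget_ms) in enumerate(pairs):
        target_mid = index * ISI_MS
        trial.append({
            "target": interval(target_mid, target_ms),
            "nontarget": interval(target_mid + center_shift_ms, nontarget_ms),
        })
    return trial

aligned = exp1_trial(jnd_ms=100.0, nontarget_diff_ms=200.0, center_shift_ms=0.0)
shifted = exp1_trial(jnd_ms=100.0, nontarget_diff_ms=200.0, center_shift_ms=250.0)
print(aligned[0], aligned[1])
```

In the center-aligned example, the on- and offset deviations between target and nontarget are equal in size within each pair (75 ms for both pairs with these values), which is the property that motivated midpoint alignment.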

Setup and results of Experiment 1. a Example of the experimental setup for Experiment 1. Here, the targets are visual, and observers report which of two visual targets (rectangles in top panel) they perceive to have a longer duration. In this example, the visual targets are paired with auditory nontarget stimuli that are aligned with the visual targets by their temporal “midpoints” and have a duration difference in the opposite direction of the duration difference in the visual targets. b Results of Experiment 1 demonstrate asymmetric crossmodal influences in duration perception. The percentage of correctly identified longer target stimuli is plotted, split by target modality. Visual targets are shown in the top panel, and auditory targets in the bottom panel. Within a modality, a distinction is made between cases in which the target and nontarget stimuli were center aligned (left panels) or center shifted (right panels) with respect to their temporal midpoints. Gray bars represent cases in which there was no duration difference in the nontarget modality, white bars represent cases with a duration difference in the nontarget modality, and error bars represent SEMs

The first 5 observers (including the 2 authors) were asked to indicate whether they perceived either the first or the second stimulus to have the longest duration. Even though observers were instructed to fixate a dot on the screen during the entire duration of the experiment, this specific instruction would, in principle, allow them to completely ignore nontarget visual stimuli by temporarily closing their eyes. None of the observers admitted to adopting such a strategy, but to avoid the possibility altogether, we modified the instructions and asked a second group of 5 observers to report whether they perceived either the circle (accompanied with the sound) or the square (accompanied with the sound) to have a longer duration. Since the order in which the square and circle appeared was pseudorandom, this instruction forced observers to keep looking at the screen. The results from both observer groups were highly similar and, therefore, were combined in the group analysis.

Results and discussion

Figure 1b displays the percentage of trials on which observers correctly identified the longer target stimulus for each experimental condition. If nontarget stimuli have no effect on duration discrimination performance with target stimuli, observers would be expected to perform at a level of 82% correct, which was the threshold level of the staircase procedure that determined their individual JNDs. The first thing that becomes clear from the results in Fig. 1b is that there appears to be an asymmetry in the extent to which visual (top panel) and auditory (bottom panel) duration discrimination performances are influenced by nontarget stimuli in the other modality. A within-subjects ANOVA (factors: target modality visual vs. auditory, center-aligned vs. center-shifted presentation, and nontarget duration different vs. equal) confirmed that there was indeed a significant difference between the target modalities, F(1, 37) = 119.44, p < .001. It also returned significant differences between the cases in which the nontarget stimuli had the same duration (gray bars in Fig. 1b) and the cases in which the nontarget stimuli had a duration difference opposite to that of the target stimuli (white bars in Fig. 1b), F(1, 37) = 40.18, p < .001.

Center-aligned versus center-shifted presentation of the target and nontarget also had a significant effect on performance, F(1, 37) = 23.17, p < .01, but because the interaction between center alignment and target modality was significant as well, F(1, 37) = 15.68, p < .001, we reanalyzed the results for the two target modalities separately (within-subjects ANOVA with factors of center-aligned vs. center-shifted presentation and nontarget duration different vs. equal). This analysis revealed that when target stimuli are visual, there are significant effects both of center-aligned versus center-shifted presentation, F(1, 9) = 27.74, p < .001, and of difference versus no difference in auditory nontarget durations, F(1, 9) = 160.75, p < .001. The interaction between the two was not significant but did show a trend, F(1, 9) = 4.00, p = .08, suggesting that the effect of auditory nontarget duration differences was slightly larger when the temporal midpoints of visual targets and auditory nontargets are aligned. When the targets were auditory, neither of these contrasts reached significance (center-aligned/center-shifted, F(1, 9) = 0.01, p = .92; difference/no-difference in nontarget, F(1, 9) = 0.82, p = .39). Thus, crossmodal distortions in duration perception occurred only for visual targets in an auditory context, not the other way around.

All the different visual target conditions were individually analyzed, revealing that for the center-aligned cases, a duration difference in the auditory nontargets reduced performance by 61.0% (SEM = ±4.4%), bringing it significantly below the individual measured thresholds, t(9) = −13.92, p < .001, and even below chance, t(9) = −5.01, p < .01. This implies a strong bias to report the short visual target stimulus (paired with the long auditory nontarget stimulus) as being subjectively longer than the long visual target stimulus (paired with the short auditory nontarget stimulus). Thus, the presence of sounds does not merely impair performance on a visual duration discrimination task but actually modulates the subjective visual duration. If there is no difference in auditory nontarget duration, performance on visual duration discrimination is still impaired by 20.1% (SEM = ±4.9%), which brings it significantly below the JND threshold, t(9) = −4.15, p < .01, but keeps it significantly higher than chance, t(9) = 3.90, p < .01. In the center-shifted cases, a significant performance reduction of 29.9% (SEM = ±2.8%) was observed when there was an auditory nontarget duration difference, t(9) = −10.81, p < .001, but the 5.5% (SEM = ±3.7%) impairment when there was no duration difference between auditory nontarget stimuli was not significant, t(9) = −1.50, p = .17. In the latter case, performance was still significantly better than chance, t(9) = 9.15, p < .001, suggesting that no appreciable crossmodal effects took place.
None of the individual cases for auditory targets were statistically different from the 82% threshold level [aligned/diff: effect size = −1.8% ± 6.7%, t(9) = −0.27, p = .79; aligned/no_diff: effect size = −1.2% ± 3.1%, t(4) = −0.39, p = .71; shifted/diff: effect size = −6.1% ± 4.7%, t(9) = −1.31, p = .22; shifted/no_diff: effect size = 3.7% ± 3.3%, t(9) = 1.12, p = .29].
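The per-condition comparisons against the 82% threshold level and the 50% chance level appear to be one-sample t tests, given the reported degrees of freedom. A minimal sketch of that computation follows; the scores are hypothetical percent-correct values made up for illustration and are not the study's data.

```python
import math
import statistics

def one_sample_t(scores, reference):
    """Return (t, df) for a one-sample t test of the mean against reference."""
    n = len(scores)
    standard_error = statistics.stdev(scores) / math.sqrt(n)
    t_value = (statistics.mean(scores) - reference) / standard_error
    return t_value, n - 1

# Hypothetical percent-correct scores for 10 observers (not the study's data).
scores = [18.0, 25.0, 12.0, 30.0, 22.0, 15.0, 28.0, 20.0, 10.0, 30.0]
t_threshold, df = one_sample_t(scores, 82.0)  # against the staircase threshold
t_chance, _ = one_sample_t(scores, 50.0)      # against chance performance
print(df, t_threshold < 0, t_chance < 0)
```

A negative t against both reference levels, as in this made-up example, corresponds to the pattern described for the center-aligned visual condition with a nontarget duration difference: performance below threshold and below chance.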

We conclude that visual duration discrimination performance is influenced by the presence of auditory nontarget stimuli but that the extent of impairment depends critically on both the relative on- and offsets and the duration differences between the target and nontarget stimuli in both modalities. If the visual target and auditory nontarget stimuli are center aligned and the auditory nontarget stimuli have a duration difference opposite to that of the visual targets, performance on the visual duration discrimination task will be impaired most. When the stimuli are either center shifted with auditory nontarget duration differences or center aligned without auditory nontarget duration differences, performance is impaired less. Finally, there is no significant impairment of visual duration discrimination performance when both stimuli are center shifted and auditory nontarget duration differences are absent.

We suspect that while the presence or absence of a duration difference in the auditory nontargets may influence the internal representation of a visual duration through crossmodal interactions, the effect of temporal alignment, and the resulting difference in the on- and offsets of visual and auditory stimuli, will predominantly act upon the likelihood that crossmodal binding occurs and thereby promotes crossmodal interactions in the time perception system. The next experiment tested the hypothesis that temporal proximity of the on- and offsets of the target and nontarget stimuli in the different modalities is indeed required for crossmodal effects to occur in our duration discrimination task.

Experiment 2

The need for crossmodal on- and offset proximity

The asymmetric crossmodal effects demonstrated in Experiment 1 raise several questions with regard to the critical stimulus aspects that evoke the distortions of subjective stimulus duration. Do additional nontarget sounds influence all subjective duration judgments or only the duration judgments for visual stimuli? Furthermore, do the differences between the center-aligned and center-shifted conditions result from a mere difference in the amount of temporal overlap between the visual targets and auditory nontargets, or does the actual timing of the on- and offsets of the stimuli play the crucial role we predicted? In this second experiment, we addressed these questions by combining auditory and visual duration judgments with contextual background sounds that, if present, started well before and ended well after target presentation, resulting in equal amounts of crossmodal temporal overlap but very large crossmodal on- and offset differences.

Method

The same ten observers who participated in Experiment 1 also performed in this experiment. They reported which of two target stimuli they perceived to have a longer duration. The target stimuli could either be visual (circles or squares) or auditory (pure tones at 200 Hz). Nontarget background stimuli were always auditory (pure tones at 100 Hz), with significantly longer durations than the target stimuli. Prior to Experiment 1, all the participants had performed a staircase experiment to determine their individual JNDs for visual and auditory stimuli with a base duration of 500 ms. These individual JNDs were also used in this experiment. Stimuli in the target modality had a duration of 500 ms ± JND/2, and the order of the long and short target stimuli was counterbalanced. Auditory background stimuli were 2,500 ms in duration, and their midpoint was temporally aligned with the midpoint of the target stimuli, resulting in an on- and offset difference of minimally 1,000 ms − JND/4, which should be more than enough to prevent audiovisual integration (Jaekl & Harris, 2007). Auditory background sounds, if present, could be played in conjunction with the short target stimulus only, the long target stimulus only, or both target stimuli (Fig. 2a). The interstimulus interval between target stimuli, defined as the temporal separation between their midpoints, was 2,500 ms, with a randomly assigned jitter between −50 and +50 ms. Observers were familiarized with the stimuli and task before the experiment started, and all of them indicated that the auditory target stimuli could be easily distinguished from the auditory background sound. Each combination of target/nontarget stimuli was presented 40 times, resulting in 320 trials that were distributed over four blocks of trials in a counterbalanced way to allow observers to have short breaks. Individual trials started when observers pressed a designated key on a standard keyboard.
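The minimal on- and offset difference and the trial count can be checked with a few lines of arithmetic. The 100-ms JND is an illustrative value, and the "none" entry in the background condition list is an inference from the reported 320 trials rather than something stated explicitly in this paragraph.

```python
BACKGROUND_MS = 2500.0
BASE_MS = 500.0
REPETITIONS = 40

def onset_gap_ms(target_ms, background_ms=BACKGROUND_MS):
    """Gap between background and target onsets when midpoints coincide.

    With aligned midpoints the offset gap has the same size.
    """
    return (background_ms - target_ms) / 2.0

jnd_ms = 100.0  # hypothetical observer JND
gap_long_target = onset_gap_ms(BASE_MS + jnd_ms / 2.0)   # smallest gap
gap_short_target = onset_gap_ms(BASE_MS - jnd_ms / 2.0)  # largest gap

target_modalities = ["visual", "auditory"]
# Assumption: a no-background condition completes the set of four.
background_conditions = ["none", "short target only", "long target only", "both"]
total_trials = len(target_modalities) * len(background_conditions) * REPETITIONS
print(gap_long_target, gap_short_target, total_trials)
```

The smallest gap occurs for the long target and equals 1,000 ms − JND/4, because half of the extra JND/2 in target duration is taken from each side of the aligned midpoint.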

Setup and results of Experiment 2. a Observers reported which of two target stimuli they perceived to have a longer duration. Target stimuli could be either visual circles or squares or auditory tones (200 Hz), and the duration difference between the targets matched with individually determined just-noticeable differences (JNDs) for each observer. Target stimuli could be accompanied by long background sounds (100 Hz) that could be paired with the short target stimulus, the long target stimulus, or both. b Results are plotted as the percentage correctly identified longer targets for each experimental condition. No significant effects were found of either target modality or nontarget condition. Error bars represent SEMs

Results and discussion

Figure 2b plots the percentage of correctly identified longer targets for each experimental condition. None of the experimental conditions appear to have had any effect on the observers’ performance. A statistical analysis of the data (within-subjects ANOVA) confirmed that there was no significant difference between visual targets (left panel) and auditory targets (right panel), F(1, 27) = 0.54, p = .48, nor was there an effect of nontarget stimulus timing, F(3, 27) = 1.50, p = .24. The only two distinctions between the auditory nontarget stimuli that did have an effect in Experiment 1 and the auditory background stimuli that had no effect in Experiment 2 were their frequency and the difference in on- and offset timing between target stimuli and nontarget sounds. It seems highly unlikely that auditory influences on perceived visual duration would be crucially different for sounds of 100 Hz and sounds of 200 Hz or that an observer’s capability of blocking the auditory influence with attention would depend on such a minor frequency difference. Comparing the results from Experiments 1 and 2, we conclude that an auditory nontarget affects the perceived duration of a visual target only when the on- and offsets of the target and nontarget stimuli are close enough in time. Such temporal proximity of target and nontarget on- and offsets might merely allow the subconscious binding of auditory and visual stimuli, thereby promoting crossmodal distortions of duration perception. Alternatively, a temporal ventriloquism-like effect on the perceived on- and offsets of the target stimuli may also play a role in the construction of perceived event durations. This possibility was explored in the next experiment.

Experiment 3

A parametric approach to auditory distortions of visual duration

The first two experiments demonstrated that duration judgments about a visual target stimulus are distorted by the presence of auditory nontarget stimuli with on- and offsets that are close in time to the on- and offsets of the visual target stimulus. It remains unclear, however, whether these distortions occurred because the sounds increased the perceived duration of the short visual stimulus, decreased the perceived duration of the long visual stimulus, or both. Furthermore, it is unclear whether the temporal proximity of these on- and offsets merely promotes crossmodal interactions, or whether the temporal difference in on- and offset has a more systematic effect (as we would expect from temporal ventriloquism). Our third experiment employed a parametric method to investigate the influence of auditory stimuli on visual duration judgments. Two visual stimuli of equal duration had to be compared, while one of the two was accompanied by an auditory nontarget stimulus that could have either a shorter or a longer duration than the visual stimulus it was paired with.

Method

Twelve observers (ranging in age from 19 to 35 years, 7 males and 5 females) participated in this experiment. Two of these observers had also participated in Experiments 1 and 2. They reported which of two visual target stimuli they perceived to have a longer duration. One of the target stimuli (which could be the first or the second, randomly assigned and counterbalanced) was paired with an auditory nontarget stimulus. Both of the visual target stimuli had a duration of 500 ms, whereas the auditory nontarget stimuli had pseudorandomly assigned durations ranging from 150 to 850 ms in 50-ms steps (Fig. 3a). This resulted in duration differences between the visual target stimuli and auditory nontarget stimuli ranging from −350 to +350 ms. The target and nontarget stimuli were temporally aligned by the midpoint of their duration. The interstimulus interval between target stimuli, defined as the temporal separation between their midpoints, was 1,500 ms, with a randomly assigned jitter between −50 and +50 ms. Each stimulus combination was repeated 16 times in pseudorandom, counterbalanced order (yielding a total of 240 trials for each observer). Individual trials started when observers pressed a designated key on a standard keyboard.
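The trial structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' experiment code: the names (`build_trials`, `sound_on_first`), the uniform integer jitter, and the fixed random seed are assumptions introduced here, and catch trials are not modeled.

```python
import random

VISUAL_MS = 500                           # both visual targets
AUDITORY_MS = list(range(150, 851, 50))   # 15 nontarget durations, 150 to 850 ms
REPEATS = 16                              # repetitions per stimulus combination


def build_trials(seed=0):
    """Return a shuffled trial list for the design described in the text.

    Assumptions (not from the source): jitter is drawn as a uniform integer
    in [-50, 50] ms, and counterbalancing alternates the interval that
    carries the sound across repetitions.
    """
    rng = random.Random(seed)
    trials = []
    for auditory_ms in AUDITORY_MS:
        for rep in range(REPEATS):
            trials.append({
                "auditory_ms": auditory_ms,
                "difference_ms": auditory_ms - VISUAL_MS,  # -350 to +350 ms
                "sound_on_first": rep % 2 == 0,            # counterbalanced
                "isi_ms": 1500 + rng.randint(-50, 50),     # midpoint to midpoint
            })
    rng.shuffle(trials)
    return trials


trials = build_trials()
```

With 15 nontarget durations and 16 repetitions each, the list contains the 240 trials per observer reported above.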

Setup and results of Experiment 3. a Observers reported which of two visual target stimuli they perceived to have a longer duration. One of the two target stimuli was accompanied by an auditory nontarget stimulus with a variable duration (this was counterbalanced between the first and second stimuli). Visual stimuli always had a duration of 500 ms, while durations of the auditory nontarget stimuli ranged from 150 ms (350 ms shorter than the visual target) to 850 ms (350 ms longer than the visual target). b The results of the experiment are plotted as the percentage of trials on which the visual target stimulus with an auditory nontarget was perceived to have the longest duration of the two visual stimuli. The thick black line plots the averaged data of 12 observers. Error bars represent SEMs

Since we did not inform our observers that most of the visual stimuli had equal physical durations (physical duration differences were present only on catch trials), we subjected them to an extensive debriefing procedure after the experiment. All the observers reported that they considered duration judgments on some trials to be a lot easier than on other trials, but they also claimed that even on the relatively hard trials, they usually had a reasonable idea of which stimulus had the longest duration. None of the observers reported having been aware of the fact that almost all the visual stimuli had no actual duration difference.

Results and discussion

The results of Experiment 3 are shown in Fig. 3b. The percentage of trials on which the visual target with an auditory nontarget was perceived as longer than the visual target without an auditory nontarget stimulus is plotted against the difference in duration between an auditory nontarget and a visual target. A first thing to note is that the presence of auditory nontargets can result in both longer and shorter perceived visual durations, depending on the relative duration of the nontarget sounds. This effect of nontarget duration was significant, F(14, 165) = 14.27, p < .001. If visual targets are paired with auditory nontargets of equal or longer physical duration, the perceived duration of this visual target is increased (Fig. 3b) (t tests on individual points: t(11) between 2.44 and 7.15, all p values < .04). Interestingly, even when the auditory nontarget stimulus with which the visual target was paired was of exactly the same duration, the visual target was still perceived to have a longer duration than the visual-only stimulus of equal duration on 66.8% (SEM = ±4.7%) of the trials, which is significantly above chance, t(11) = 3.59, p < .01. When, however, the auditory nontargets were between 100 and 350 ms shorter than the visual targets they were paired with, the visual targets were significantly more often perceived to be shorter than they physically were (ranging from 59.5 ± 3.2% to 75.0 ± 3.8% of the time), ts(11) between −5.52 and −2.54, all p values < .03.

From the perspective of SET, a change in perceived duration can occur because of a change in pacemaker rate, a change in the duration that the mode switch is closed and pulses are fed into the accumulator, or distortions in the translation of information from the accumulator stage to the reference memory. Previous studies have demonstrated that when an auditory and a visual stimulus have the same physical duration, the auditory stimulus is perceived to be longer than the visual stimulus (Penney et al., 2000; Walker & Scott, 1981; Wearden et al., 1998). This effect has been attributed to an auditory pacemaker rate that is faster than the visual pacemaker rate (Penney et al., 2000; Wearden et al., 1998). When auditory and visual stimuli are grouped crossmodally, there could be an audiovisual pacemaker rate that is faster than the visual pacemaker rate, yet slower than the auditory pacemaker rate.

Whereas this explanation holds well for a perceived dilation of visual duration, it cannot explain the observed perceived shortening of visual durations when visual targets are paired with significantly shorter auditory nontargets. To be able to account for this effect, we have to incorporate an audiovisual-integration-driven, temporal ventriloquism-like change in mode switch timing. In temporal order judgment studies, typically used to investigate temporal ventriloquism, it has been shown that the temporal order discrimination performance for two subsequently presented visual stimuli greatly improves when the first visual stimulus is preceded by an auditory tone and the second visual stimulus followed by another tone (Bertelson & Aschersleben, 2003; Getzmann, 2007; Morein-Zamir et al., 2003). The predominant explanation for this effect is that the temporal onsets of the auditory stimuli capture the onsets of the visual stimuli, thereby effectively shifting their perceived temporal position further apart. A similar thing could happen in our experiments, where the actual on- and offsets of visual targets might have been involuntarily captured by the on- and offsets of the auditory nontargets and perceptually shifted toward them. In SET, this would result in an altered closing time of the mode switch. An alternative, more trivial explanation for our results might be that our observers strategically switched to reporting differences between the auditory nontarget duration and the visual target duration when they were unable to reach a decision about a difference between visual target durations. This seems unlikely since, upon debriefing, observers reported not to have been aware of the fact that the vast majority of visual targets actually had equal durations. 
If the above-mentioned strategy still played a role in observers’ reports, it would thus not have been a consciously initiated strategy but, rather, a subconscious neural process of which the observer was not aware (Repp & Penel, 2002). This may, in fact, be just another way of suggesting that the brain’s time perception mechanism is subjected to crossmodal influences that change its functional “strategies.”

When visual targets were paired with an auditory nontarget of equal duration (point 0 on the x-axis in Fig. 3b), they were usually perceived to have a longer duration than their visual-only companion targets. Since temporal ventriloquism-like effects are unlikely to play a significant role here (on- and offset moments are the same for both modalities), the cause of this distortion may be suspected to lie in an altered rate of the internal clock, or in SET terminology, the rate of the pacemaker. The idea that auditory distractors may influence the rate of the pacemaker for visual duration judgments has been proposed before (Chen & Yeh, 2009; Penton-Voak et al., 1996; Wearden et al., 1998), but it remains unclear whether the time perception system just switches from a slow visual to a fast auditory pacemaker, or whether there could be something like an audiovisual pacemaker running at an intermediate rate. These possibilities were tested in Experiment 4.

Experiment 4

The relative rates of the pacemaker

From a scalar timing theory point of view, the finding that the perceived duration of a visual target stimulus increases in the presence of an auditory nontarget stimulus of equal physical duration (Experiment 3) could be attributed to an increased pulse rate of a central amodal pacemaker (Chen & Yeh, 2009; Penton-Voak et al., 1996; Wearden et al., 1998), to a difference in the intrinsic rates of independent modality-specific pacemakers (Mauk & Buonomano, 2004; van Wassenhove et al., 2008), or to modality-specific accumulator dynamics dealing with pulses from a central amodal pacemaker (Rousseau & Rousseau, 1996). Since our present experiments cannot explicitly distinguish between these possibilities, we will discuss our data using a more general terminology of modality-specific pacemaker rates, rather than attribute any effect to actual modality-specific pacemakers or accumulators. In this fourth experiment, we set out to unravel whether any differences can be observed between supposedly pure visual, pure auditory, and audiovisual pacemaker rates.

Method

Eleven observers (ranging in age from 21 to 28 years, 7 males and 4 females, 2 authors) participated in this experiment. Three of these observers (including the authors) also had participated in Experiments 1 and 2, whereas 3 others also had participated in Experiment 3. The observers were presented with a visual and an auditory target stimulus, and they reported which of the two they perceived to have a longer duration (probing the auditory vs. visual pacemaker rate). The duration of the visual stimulus was always 500 ms, while the duration of the auditory stimulus varied from 400 to 600 ms in steps of 50 ms. Each pair of stimuli was presented 40 times in pseudorandom order, and individual trials started when observers pressed a designated key on a standard keyboard. The order of the visual and auditory stimuli within a single trial was pseudorandomly chosen to prevent fixed order effects (Grondin & McAuley, 2009).

Three of the above-mentioned observers (1 author) and 8 additional observers (ranging in age from 20 to 28 years, 2 males and 3 females) also performed two additional conditions in which they compared (1) the durations of a pure auditory target stimulus and a visual target stimulus that was paired with an auditory nontarget stimulus of equal physical duration (probing the auditory vs. audiovisual pacemaker rate) and (2) the durations of a pure visual target stimulus and a visual target stimulus that was paired with an auditory nontarget stimulus of equal physical duration (probing the visual vs. audiovisual pacemaker rate). The unimodal stimuli were always 500 ms, while the crossmodal stimulus pairings varied in duration from 400 to 600 ms in steps of 50 ms. Each stimulus pair was presented 40 times in pseudorandom order. The order of the stimuli within a trial was pseudorandom as well, and individual trials started when observers pressed a designated key on a standard keyboard.

Results and discussion

The results of Experiment 4 are displayed in Fig. 4. We fitted the data of individual observers to a Weibull function using the psignifit 2.5.6 toolbox for MATLAB (see http://bootstrap-software.org/psignifit/) that implements a maximum-likelihood method (Wichmann & Hill, 2001) to estimate the point of subjective equality (PSE). At the PSE, observers are equally likely to label either of two stimuli as longer, indicating that their subjective durations can be regarded as equal. The group-averaged psychometric curves are plotted in Fig. 4a–c as thick black lines. Figure 4a demonstrates a significant effect of duration difference on the percentage of auditory longer responses, F(4, 50) = 17.62, p < .001. When auditory and visual stimuli had the same physical duration, the auditory stimulus was perceived to have a longer duration on 65.3% (SEM = ± 4.8%) of the trials. This percentage is significantly above chance, t(10) = 3.20, p < .01. The average PSE was −48.8 ms (SEM = ± 13.2 ms), which is significantly different from zero, t(10) = −3.70, p < .01, indicating that, on average, observers perceived our visual and auditory targets as having equal duration when the visual stimulus was, in fact, about 50 ms longer.
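To make the PSE estimate concrete, the following Python sketch fits a psychometric function by maximum likelihood and reads off the 50% point. It is an illustration under stated assumptions and not the analysis used here: it substitutes a cumulative Gaussian and a coarse grid search for the Weibull fit of the psignifit toolbox, and the response counts are hypothetical values chosen only to resemble the pattern in Fig. 4a.

```python
import math


def cumulative_gaussian(x, mu, sigma):
    """Probability of an 'auditory longer' response at duration difference x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def fit_pse(diffs_ms, n_longer, n_trials):
    """Grid-search maximum-likelihood fit; returns (PSE in ms, sigma in ms).

    The PSE is the mean of the fitted cumulative Gaussian, that is, the
    duration difference at which both responses are equally likely.
    """
    best = None
    for mu in range(-150, 151):            # candidate PSEs, 1-ms steps
        for sigma in range(10, 301, 5):    # candidate slopes
            log_lik = 0.0
            for x, k, n in zip(diffs_ms, n_longer, n_trials):
                p = cumulative_gaussian(x, mu, sigma)
                p = min(max(p, 1e-6), 1.0 - 1e-6)  # guard against log(0)
                log_lik += k * math.log(p) + (n - k) * math.log(1.0 - p)
            if best is None or log_lik > best[0]:
                best = (log_lik, mu, sigma)
    return best[1], best[2]


# Hypothetical single-observer data: auditory minus visual duration (ms),
# number of 'auditory longer' responses out of 40 presentations per level.
diffs_ms = [-100, -50, 0, 50, 100]
n_longer = [8, 20, 26, 34, 38]
n_trials = [40] * 5

pse_ms, sigma_ms = fit_pse(diffs_ms, n_longer, n_trials)
```

A negative fitted PSE corresponds to a leftward shift of the curve: the sound must be physically shorter than the visual stimulus for the two to be judged equal.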

Results of Experiment 4. a The duration of a sound is compared with that of a visual stimulus. The group-averaged psychometric curve (thick black line) is shifted to the left, indicating that when a sound and a visual stimulus were of equal physical duration, the sound was significantly more often perceived to have a longer duration than the visual stimulus. Data points represent the average data of 11 observers (error bars are SEMs). b The duration of a target sound is compared with that of a visual target stimulus that was paired with a nontarget sound of equal physical duration as the visual stimulus. When the two targets were of equal physical duration, observers performed at chance level. Data points represent the average data of 11 observers (error bars are SEMs), and the thick black line is the group-averaged psychometric function. c The duration of a visual target stimulus is compared with that of a second visual target stimulus paired with a nontarget sound of equal physical duration. The group-averaged psychometric curve (thick black line) is shifted to the left, indicating that a visual stimulus was perceived to have a longer duration when it was paired with a sound of equal duration. Data points represent the average data of 11 observers (error bars are SEMs). d Comparison of the shifts in the point of subjective equality (PSE) for the experiments presented in panels a to c. Significant deviations from zero are observed for visual versus auditory targets (white bar) and visual versus visual targets with auditory nontargets (dark gray bar), but not for auditory versus visual targets with auditory nontargets (light gray bar). Error bars indicate SEMs

Figure 4b plots how the perceived duration of a visual target stimulus paired with an equally long nontarget sound compared with the perceived duration of purely auditory target stimuli. Data points represent the average data for 11 observers. There was a significant effect of duration difference on the percentage of visual longer responses, F(4, 50) = 69.63, p < .001. When the audiovisual stimulus was of equal physical duration as the purely auditory stimulus, observers did not significantly perceive either of the two as longer, t(10) = −1.07, p = .31. This notion is confirmed by the fact that the average PSE (−5.0 ms, SEM = ± 5.6 ms) was not significantly different from zero, t(10) = −0.88, p = .40.

In Fig. 4c, purely visual target durations are compared with the duration of visual target stimuli that were paired with equally long nontarget sounds. There was again a significant effect of duration difference on the percentage of visual-only longer responses, F(4, 50) = 37.03, p < .001. Also, when the visual target stimuli had the same physical duration, the one paired with the sound was perceived to have a longer duration on 64.8% (SEM = ± 3.0%) of the trials. This percentage is significantly above chance, t(10) = 5.00, p < .01. The average PSE was −40.3 ms (SEM = ± 6.7 ms), which is significantly different from zero, t(10) = −5.98, p < .01, indicating that, on average, observers perceived the targets as having equal duration when the visual stimulus paired with the sound was, in fact, about 40 ms shorter.

To directly compare the supposed effects of purely auditory, purely visual, and audiovisual pacemaker rates, we plotted the average PSEs for the different conditions in Fig. 4d. The difference in PSE for comparing pure visual target durations with pure auditory target durations (white bar) and comparing pure visual target durations with visual target durations paired with a sound (dark gray bar) was not significant, F(1, 20) = 0.33, p = .57, while both these conditions did significantly differ in PSE from the condition in which pure auditory target durations and visual target durations paired with sounds were compared (light gray bar), F(1, 20) = 9.33, p < .01, and F(1, 20) = 16.17, p < .01, respectively. From the perspective of SET, these results suggest either that the time perception system automatically switches to the auditory pacemaker rate when sounds are present or that there is an additional audiovisual pacemaker rate that is highly similar to the auditory pacemaker rate.

It is possible that in all the experimental conditions in which observers were asked to use the duration of a visual target stimulus for comparison in a duration discrimination task and, at the same time, ignore the auditory nontarget that it was paired with, observers, in fact, subconsciously switched to using the duration of the auditory nontarget (Repp & Penel, 2002). In order to distinguish such an explanation from the more tentative hypothesis that the observed changes in duration discrimination performance were due to subconscious crossmodal grouping and its consequential influences on the brain’s time perception system, we performed a fifth experiment. In that experiment, we manipulated the likelihood of intramodal and crossmodal stimulus grouping to investigate how this would affect the previously demonstrated crossmodal distortions in duration discrimination.

Experiment 5

Intramodal perceptual grouping prevents crossmodal duration effects

Although the results of all the previous experiments strongly suggest that the crossmodal grouping of auditory and visual stimuli is an essential prerequisite for the occurrence of crossmodal interactions in duration perception, we cannot exclude an alternative hypothesis according to which observers exclusively use the duration of auditory nontargets in their duration discrimination tasks. This fifth experiment examined whether auditory influences on visual duration perception would persist if we disturbed the supposed crossmodal grouping by allowing the auditory stimuli to be grouped intramodally, rather than crossmodally. This manipulation should have no effect on a behavioral switch toward using the auditory nontargets in the duration comparison. It should, however, affect changes in perceived duration based on crossmodal interactions of grouped stimuli in the time perception system. The fact that intramodal perceptual grouping reduces or abolishes crossmodal effects has been shown with other experimental paradigms before (Bruns & Getzmann, 2008; Keetels, Stekelenburg, & Vroomen, 2007; Lyons, Sanabria, Vatakis, & Spence, 2006; Penton-Voak et al., 1996; Sanabria, Soto-Faraco, Chan, & Spence, 2004, 2005; Vroomen & de Gelder, 2000), but if we want to use the argument of subconscious grouping in the context of our own findings, we need to demonstrate that it is also true for the duration discrimination paradigm we used in our experiments.

Method

Ten observers (ranging in age from 20 to 28 years, 7 males and 3 females, 1 author) participated in this experiment. Two of these observers also had participated in Experiments 1, 2, and 4, 1 had participated in Experiments 3 and 4, 3 had participated in Experiments 1 and 2, and 4 observers had not participated in any of the other experiments. Prior to the main experiment, observers were subjected to a staircase procedure in order to determine their discrimination thresholds for visual durations. The staircase procedure used the Psychtoolbox Quest algorithm and consisted of 25 trials, converging on 82% correct. It was performed 3 times, and the average of the three obtained threshold values was taken as the individual observer’s JND. These JNDs (average, 135.6 ms ± 9.8 ms SEM) were then used in the main experiment, where observers were asked to judge which of two visual stimuli with a duration difference equal to their individually determined JND had a longer duration. Three conditions were tested. In the first condition, the visual target stimuli were the only stimuli presented. In the second condition, the visual target stimuli were paired with auditory nontarget stimuli that had a difference in duration opposite to that of the visual stimuli (as in Experiment 1). The third condition was similar to the second, but now the critical stimulus presentations were preceded by three unimodal repetitions of the nontarget sound stimuli (Fig. 5a). The visual target stimuli had durations of 500 ms ± JND/2, and the order in which the long and short stimuli were presented was counterbalanced. Auditory nontarget stimuli had durations of 400 ms (paired with the long visual stimulus) and 600 ms (paired with the short visual stimulus). The visual target stimuli and auditory nontarget stimuli were aligned by their temporal midpoints, and the interstimulus interval between target stimuli was 1,500 ms.
In the condition with the preceding sounds, the pair of short and long tones was played 3 times, with the tones in the same order as they would eventually have when paired with the visual targets. In addition, these pairs were presented with fixed interstimulus intervals of 1,500 ms (between the midpoints) to create the vivid experience of a consistent auditory stream. Each experimental condition was repeated 20 times in pseudorandom order.

Setup and results of Experiment 5. a The experiment comprised three conditions: (1) a purely visual duration discrimination task (L = long, S = short); (2) a visual duration discrimination task with the visual targets paired with auditory nontargets that had a duration difference with an opposite sign, as compared with the duration difference of the visual targets; and (3) the same condition as in 2, but this time with the comparison preceded by a stream of auditory nontargets that were rhythmically consistent with the two nontarget sounds in the task. b Visual discrimination performance (bar marked 1) is at the predetermined level for individual just-noticeable differences (JNDs). In the presence of nontarget sounds, performance drops significantly (bar marked 2), but when a stream of nontarget sounds preceded the task, performance goes back up (bar marked 3). Error bars indicate SEMs

Results and discussion

The results of this experiment are plotted in Fig. 5b. When observers discriminate durations of two purely visual targets (Fig. 5a), they perform at the same level that was used to determine their individual JNDs (light gray bar marked with 1 in Fig. 5b) (80.7% ± 1.9% correct), t(9) = −0.72, p = .49. This is not surprising, since they are essentially performing the same task as that in the preceding staircase procedure. When the visual targets are paired with auditory nontargets having opposite duration differences (Fig. 5a), observers are performing the same task as the observers in Experiment 1. As was found in Experiment 1, performance on identifying the longer visual stimulus was significantly impaired by the presence of auditory nontargets (middle bar marked with 2 in Fig. 5b) (59.7% ± 7.8% correct), t(9) = −2.88, p < .02. However, if this condition was preceded by a stream of irrelevant auditory nontargets, performance went back up and was indistinguishable from the 82% threshold level (77.5% ± 4.7% correct), t(9) = −0.96, p = .36. We therefore conclude that a subconscious intramodal grouping of auditory nontargets into a consistent auditory stream prevents the subconscious crossmodal binding that is necessary for the crossmodal influence of auditory nontargets on the discrimination performance of visual target durations. These results clearly support the idea that the distortions of visual duration discrimination performance by irrelevant auditory stimuli presented in this study were based on interactions between crossmodally grouped stimuli within the time perception system, instead of a mere behavioral switch toward reporting the durations of auditory nontargets rather than the durations of visual targets.

General discussion

Adequate estimation of event durations is critical for both behavioral and cognitive performance, but how does the brain estimate event durations? Perceiving the duration of an event is, in a sense, a classic cue combination problem (e.g., Landy, Maloney, Johnston, & Young, 1995). In order to be as accurate as possible, the brain will rely on all available relevant cues and weigh their influences on the basis of their relative reliabilities, determined by a multitude of factors such as signal-to-noise ratio and intrinsic resolution. But how does the brain “know” whether different cues provide information about a single perceptual objective and, thus, should be combined? This question is particularly interesting when the different cues come from different sensory modalities (Driver & Spence, 1998, 2000; Kanai, Sheth, Verstraten, & Shimojo, 2007; Sugita & Suzuki, 2003; Vroomen, Keetels, de Gelder, & Bertelson, 2004; Wallace et al., 2004). In a series of five experiments, we explored the interactions between vision and audition in the perception of event duration. Since the auditory system is thought to represent time more reliably than does the visual system, we would expect the brain to recruit auditory temporal information when it needs to resolve visual temporal problems (as in a visual duration discrimination task). Visual information, on the other hand, should not be used in resolving auditory temporal tasks. This expectation is confirmed by our experiments, which provide clues about when and how these crossmodal effects may occur.
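Reliability-based weighting of cues is commonly formalized as inverse-variance weighting, and a short Python sketch may help to make the idea concrete. This is the standard textbook form of reliability-weighted cue combination, shown under assumptions; it is not a model fitted to the present data, and the duration estimates and noise levels below are hypothetical.

```python
import math


def combine_cues(estimates, sigmas):
    """Combine independent cue estimates, weighting each by 1 / sigma**2.

    Returns the combined estimate, the normalized weights, and the standard
    deviation of the combined estimate.
    """
    reliabilities = [1.0 / (s * s) for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_sigma = math.sqrt(1.0 / total)
    return combined, weights, combined_sigma


# Hypothetical values: an auditory duration estimate of 600 ms with low noise
# and a visual duration estimate of 500 ms with higher noise.
combined_ms, weights, combined_sigma_ms = combine_cues([600.0, 500.0], [30.0, 60.0])
```

With these hypothetical noise levels, the more reliable auditory cue receives most of the weight, so the combined estimate lies closer to the auditory than to the visual duration.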

Experiment 1 confirms the asymmetric nature of crossmodal influences on duration discrimination with simultaneous auditory and visual sensory input. Performance on a visual duration discrimination task was significantly impaired by the presence of auditory nontargets that had either an opposite duration difference, as compared with the visual stimuli they were paired with, or no duration difference at all. Conversely, visual nontargets did not influence performance on an auditory duration discrimination task at all. This asymmetry in crossmodal audiovisual influences confirms previous results (Bruns & Getzmann, 2008; Chen & Yeh, 2009; but the opposite has also been shown: van Wassenhove et al., 2008) and is most likely caused either by asymmetries in involuntary crossmodal grouping or by asymmetries in modality-specific temporal reliabilities. The former suggests that irrelevant sounds are automatically grouped with relevant visual targets, whereas irrelevant visual stimuli are not automatically grouped with relevant sounds. If this is the case, it could very well be due to asymmetries in the modality-specific reliability of temporal information. Since the auditory system has a much higher temporal resolution than does the visual system, it is not improbable that the brain would, by default, employ available auditory information when it estimates visual durations (and not the other way around). This becomes even more likely if we realize that crossmodal duration distortions occur only when stimulus onsets are in close temporal proximity to each other. When, in Experiment 1, auditory nontargets were slightly shifted in time relative to the visual targets they were paired with, their influence on visual duration discrimination performance significantly decreased.

The results of Experiment 2 add further support to this idea. When the same visual duration discrimination task as that in Experiment 1 was performed while visual targets were paired with auditory nontargets that started well before and ended well after the visual targets were presented, performance was not influenced by the sounds. Consequently, the mere presence of sound is not enough to evoke changes in subjective visual durations. If the onsets and offsets of the auditory and visual stimuli are distinctly different, this could be interpreted as a no-go signal for crossmodal binding.

Whereas the crossmodal difference in on- and offset may function as an important prerequisite for the mere occurrence of crossmodal effects (i.e., when it is within the range of on- and offset asynchronies where crossmodal effects do occur), the actual size of this difference in on- and offset may also be important. In a related phenomenon termed temporal ventriloquism, the performance on a visual temporal order judgment task is influenced by the presence of irrelevant auditory stimuli (Bertelson & Aschersleben, 2003; Getzmann, 2007; Morein-Zamir et al., 2003). If a first nontarget sound is played before a first visual target and a second nontarget sound is played after a second visual target, observers are able to detect much smaller temporal differences in the onset of the two visual targets than when these two nontarget sounds are played in between two visual targets. The prevailing explanation of temporal ventriloquism suggests that visual onsets are “pulled” toward the auditory onsets, thereby creating a perceived audiovisual onset that is a weighted average of the visual and auditory onsets but leans more toward the auditory than toward the visual onset moment. In a similar way, the on- and offsets of auditory nontargets may shift the perceived on- and offsets of visual targets and may influence the subjective visual target duration.

Theories of duration perception often boil down to variations on SET or scalar timing theory (Gibbon, 1977; Gibbon et al., 1984). Basically, this theory states that in order to perceive durations, a neural mode switch closes, allowing pulses from a pacemaker to be collected in an accumulator. After the switch is reopened, the number of pulses in the accumulator is compared against a reference memory to establish a perceived duration. From an SET point of view, the temporal ventriloquism-like explanation of altered subjective duration could be interpreted as an altered duration of mode switch closure (closed upon perceived onset, opened upon perceived offset). Alternatively, changes in subjective duration could also be caused by a change in SET’s pacemaker rate or the translation of the accumulator state to the reference memory (memory mixing; Penney et al., 2000).
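The two SET-based accounts can be made concrete with a toy pacemaker-accumulator sketch in Python. It is a deterministic illustration only: the pacemaker rates, the auditory capture weight, and all function names are hypothetical choices made for this example, and the sketch is not fitted to any of the data reported here.

```python
VISUAL_RATE_HZ = 100.0       # hypothetical pacemaker rate for visual-only events
AUDIOVISUAL_RATE_HZ = 110.0  # hypothetical, faster rate when a sound is present
AUDITORY_WEIGHT = 0.7        # hypothetical pull of perceived on/offsets toward the sound


def captured_time(visual_ms, auditory_ms, auditory_weight):
    """Perceived event time as a weighted average of visual and auditory times."""
    return auditory_weight * auditory_ms + (1.0 - auditory_weight) * visual_ms


def accumulated_pulses(onset_ms, offset_ms, rate_hz):
    """Pulses collected while the mode switch is closed."""
    closure_ms = max(0.0, offset_ms - onset_ms)
    return rate_hz * closure_ms / 1000.0


def perceived_pulses(visual_ms, sound_ms=None):
    """Accumulator count for a visual target, optionally paired with a sound.

    The sound is aligned with the visual target at the temporal midpoint.
    """
    v_on, v_off = 0.0, float(visual_ms)
    if sound_ms is None:
        return accumulated_pulses(v_on, v_off, VISUAL_RATE_HZ)
    midpoint = visual_ms / 2.0
    a_on, a_off = midpoint - sound_ms / 2.0, midpoint + sound_ms / 2.0
    onset = captured_time(v_on, a_on, AUDITORY_WEIGHT)
    offset = captured_time(v_off, a_off, AUDITORY_WEIGHT)
    return accumulated_pulses(onset, offset, AUDIOVISUAL_RATE_HZ)


visual_only = perceived_pulses(500)
equal_sound = perceived_pulses(500, 500)    # rate change only
short_sound = perceived_pulses(500, 300)    # shorter closure outweighs faster rate
long_sound = perceived_pulses(500, 700)     # longer closure and faster rate
```

In this sketch, an equally long sound increases the pulse count through the pacemaker rate alone, whereas a sufficiently shorter sound reduces it because the switch is closed for less time.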

Conventional approaches to disentangling the effects of the mode switch and the pacemaker rate have focused on the latency of the switch by calculating the intercept and slope of a linear regression through the relationship between a range of physical and perceived base durations (Penton-Voak et al., 1996; Wearden et al., 1998). This analysis method has generally demonstrated that audiovisual duration distortions result from a change in pacemaker rate, and not from changes in switch latency (Chen & Yeh, 2009; Penton-Voak et al., 1996; Wearden et al., 1998). Furthermore, it has been repeatedly shown that auditory events are generally perceived to have a longer duration than visual events of equal physical duration (Penney et al., 2000; Walker & Scott, 1981; Wearden et al., 1998; but see Boltz, 2005, for the absence of this effect if naturalistic stimuli are used, or Grondin, 2003, for a review of the specific circumstances for intermodal effects on timing deviations). Studies in which visual and auditory targets of equal physical duration have been used generally have reached the conclusion that the auditory pacemaker rate is faster than the visual pacemaker rate and that the auditory distortions of perceived visual durations should be attributed to these changes in the rate of the internal clock (Chen & Yeh, 2009; Penton-Voak et al., 1996; Wearden et al., 1998).
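The logic of the slope and intercept analysis can be illustrated with a least-squares sketch in Python. The duration values are hypothetical and noise-free, chosen only to show how a pacemaker rate change and a switch latency change would separate in such a regression; they are not taken from any of the cited studies.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


physical_ms = [200.0, 400.0, 600.0, 800.0]  # hypothetical base durations

# Hypothetical pacemaker rate change: perceived duration scales with physical duration.
rate_change_ms = [1.1 * d for d in physical_ms]
# Hypothetical switch latency change: perceived duration shifts by a constant.
latency_change_ms = [d + 40.0 for d in physical_ms]

rate_slope, rate_intercept = fit_line(physical_ms, rate_change_ms)
latency_slope, latency_intercept = fit_line(physical_ms, latency_change_ms)
```

A rate change appears as a change in slope with an unchanged intercept, whereas a latency change appears as a shifted intercept with an unchanged slope, which is why a range of base durations is needed to tell the two apart.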

In our approach, we cannot calculate intercepts and slopes, because we use a single base duration. However, our results from Experiment 3 do suggest that the temporal ventriloquism-like effects on mode switch latency and the previously demonstrated variable internal clock rates may both play a role. When we paired a visual target with an auditory nontarget whose duration ranged from shorter than, via equally long as, to longer than the visual target, we observed that when this visual target was accompanied by an equally long or longer sound, it was perceived as longer than an equally long visual target without a sound. This confirms previous findings and suggests that the rate of the internal clock indeed plays a role. However, we also observed a significant shortening of the subjective visual duration when the visual target was paired with a shorter auditory nontarget. Such shortening cannot be explained by a pacemaker rate account, but it does fit the prediction of temporal ventriloquism-like effects on the mode switch latency.

The collective results of the first three experiments raise two important questions. First, does the time perception system switch between a visual and an auditory pacemaker rate, or does it dynamically scale its pacemaker rate in the context of crossmodal sensory evidence? This question was addressed in Experiment 4, where we compared the relative pacemaker rates derived from duration discrimination experiments in which observers compared visual target durations against auditory target durations, unimodal visual target durations against auditory target durations paired with visual nontargets, and unimodal auditory target durations against auditory target durations paired with visual nontargets. The results confirm the idea that both the auditory pacemaker rate and the hypothesized audiovisual pacemaker rate are higher than the visual pacemaker rate (Penton-Voak et al., 1996; Wearden et al., 1998), but they also suggest that if an audiovisual pacemaker rate even exists, it cannot be distinguished from a purely auditory pacemaker rate.

The second question that arises from our experiments concerns the nature of the demonstrated auditory influences on visual duration discrimination performance. Are the effects truly caused by crossmodal interactions in the time perception system, or are observers (subconsciously) switching from using visual target durations to using auditory nontarget durations in the visual duration discrimination task? Knowing that a global temporal context might influence perceived interval duration (Jones & McAuley, 2005), we created experimental conditions in which we manipulated the likelihood of crossmodal grouping of auditory and visual stimuli by introducing a global context that promoted intramodal grouping of auditory stimulus elements instead (Experiment 5). Such a manipulation should not affect performance if observers simply used auditory instead of visual duration in their task, but we expected it to have strong effects if the demonstrated crossmodal influences on duration discrimination depended on crossmodal grouping of auditory and visual stimuli. Our results demonstrate that the crossmodal effects of auditory stimuli on visual duration discrimination performance are abolished when the auditory stimuli can be intramodally grouped with a preceding stream of sounds and confirm previous studies that demonstrated a precedence of intramodal over crossmodal grouping that prevents crossmodal influences (see also Bruns & Getzmann, 2008; Keetels & Vroomen, 2007; Lyons et al., 2006; Penton-Voak et al., 1996; Sanabria et al., 2004, 2005; Vroomen & de Gelder, 2000).

When all the results are taken into account, the general rule emerges that auditory temporal information is recruited to improve the accuracy of visual duration judgments, but only if there is sufficient reason for the brain to assume that the information from the two senses is about the same event. In none of our experiments was there an a priori reason why pure tones and visual squares or circles should be considered as independent information sources of a single event, but their temporal co-occurrence was apparently enough to evoke subconscious crossmodal binding.

We can schematically depict our interpretation of the results in a functional crossmodal SET model (Fig. 6). Such a scheme consists of an intra- and crossmodal grouping stage where stimuli are perceptually grouped, followed by temporal ventriloquism-like effects on the control of the mode switch, and a pacemaker running at a weighted crossmodal pulse rate that, under audiovisual conditions, is heavily (if not completely) dominated by the “auditory rate of time.”

Fig. 6 Functional scalar expectancy model of audiovisual duration perception. Visual and auditory sensory input first enters the brain as separate sources of information. It is then processed by a perceptual-grouping stage that determines whether intra- or crossmodal grouping will occur. If crossmodal grouping does not occur, a unimodally defined mode switch is closed to allow pulses from a pacemaker with a unimodally defined rate to be collected in an accumulator. If the auditory and visual inputs are, however, grouped crossmodally, the period that the mode switch is closed is determined by the weighted contribution (W) of both modalities (temporal ventriloquism), with a stronger emphasis on the auditory information ($W_{S(A)} > W_{S(V)}$). The rate at which pulses are then collected in the accumulator is again a weighted average of the unimodal auditory and visual pulse rates. Here, the dominance of audition is even stronger (in Experiment 4, the audiovisual pacemaker rate was not statistically different from the auditory pacemaker rate), resulting in the weight factor asymmetry $W_{P(A)} \ggg W_{P(V)}$.
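The functional model can be made concrete with a small noise-free sketch. All numbers are hypothetical: the weights (0.7 for the switch, 0.95 for the pacemaker) and the rates (100 and 120 pulses/s) are assumptions chosen only so that audition dominates, and the function name is ours. None of these values were estimated from the data.

```python
def crossmodal_duration(visual, auditory, grouped=True,
                        w_switch_aud=0.7, w_rate_aud=0.95,
                        rate_vis=100.0, rate_aud=120.0):
    """Subjective duration (s) of a visual target, read out in visual-rate units.

    `visual` and `auditory` are (onset, offset) tuples in seconds.
    """
    v_on, v_off = visual
    if not grouped:
        # No crossmodal grouping: unimodal switch and unimodal pacemaker rate.
        return rate_vis * (v_off - v_on) / rate_vis
    a_on, a_off = auditory
    # Temporal ventriloquism: perceived on- and offset are weighted averages.
    onset = w_switch_aud * a_on + (1.0 - w_switch_aud) * v_on
    offset = w_switch_aud * a_off + (1.0 - w_switch_aud) * v_off
    # The pacemaker rate is a weighted average dominated by audition.
    rate = w_rate_aud * rate_aud + (1.0 - w_rate_aud) * rate_vis
    return rate * max(0.0, offset - onset) / rate_vis


target = (0.0, 0.5)  # hypothetical 0.5-s visual target
shorter_sound = crossmodal_duration(target, (0.1, 0.4))
equal_sound = crossmodal_duration(target, (0.0, 0.5))
longer_sound = crossmodal_duration(target, (-0.1, 0.6))
ungrouped = crossmodal_duration(target, (0.1, 0.4), grouped=False)
print(shorter_sound, equal_sound, longer_sound, ungrouped)
```

With these assumed values, an equally long or longer sound lengthens the subjective visual duration, a shorter sound shortens it because the reduced switch closure outweighs the faster rate, and an ungrouped sound has no effect. This matches the qualitative pattern of Experiments 3 and 5.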

An alternative explanation that seems to fit our data equally well at first sight incorporates the process of memory mixing, instead of crossmodal pacemaker rates, as a source for a change in the subjective rate of the internal clock (Penney et al., 2000). The memory-mixing hypothesis is usually tested using a duration bisection paradigm in which target stimuli are judged to be closer in duration to either a short or a long trained reference duration that is kept in memory. If the remembered reference durations for auditory and visual targets are similar, distortions occur that indicate that these reference durations become a mixture of a fast-rate auditory and a slow-rate visual pulse count. Consequently, stimuli with a physical duration equal to the reference memory duration are perceived as longer when they are auditory and as shorter when they are visual (Penney et al., 2000). In our paradigm, observers are asked to make shorter–longer judgments between sequentially presented stimuli, without having to keep a trained, standard reference in long-term memory. Memory mixing may, however, still play a role in the immediate retrieval of interval duration information for perceptual judgments. In this explanation, the crossmodal effects would not happen during the encoding of target duration, where the visual and auditory pacemakers each feed their pulses into a unimodal accumulator but, rather, would occur upon retrieval of the encoded target duration. The short-term unimodal memories could become mixed, resulting in distortions of the subjective crossmodal interval duration.
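The core prediction of memory mixing can be sketched in a few lines. The rates, the equal mixing proportion, and the function name are hypothetical assumptions for illustration. The sketch covers only the direction of the predicted distortion, not the bisection procedure of Penney et al. (2000).

```python
def relative_to_mixed_reference(duration, rate_aud=120.0, rate_vis=100.0,
                                mix_aud=0.5):
    """Pulse counts for a target, expressed relative to a mixed memory reference.

    A ratio above 1 means the target is judged longer than the reference,
    and a ratio below 1 means it is judged shorter.
    """
    aud_count = duration * rate_aud
    vis_count = duration * rate_vis
    # The reference memory holds a mixture of auditory and visual pulse counts.
    mixed_reference = mix_aud * aud_count + (1.0 - mix_aud) * vis_count
    return {"auditory": aud_count / mixed_reference,
            "visual": vis_count / mixed_reference}


ratios = relative_to_mixed_reference(0.5)
print(ratios)
```

Under these assumptions, an auditory target of reference duration exceeds the mixed reference and a visual target falls below it, which is the asymmetry described above.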

In conclusion, we demonstrated that the brain automatically uses temporal information from irrelevant sounds to judge durations of visual events, provided that the temporal characteristics of the two sensory streams of information are such that crossmodal binding is feasible. The distortions of visual duration perception through the crossmodal influence of audition are caused both by shifts in the perceived onset and offset of the visual stimuli (a temporal ventriloquism-like effect for interval duration) and by the integrated activity of a functional pacemaker during this period.

Interesting objectives for future studies include investigations of the perceptual grouping process (what are the critical criteria for intra- and crossmodal grouping?), the apparent asymmetry in crossmodal influences (will lower auditory signal-to-noise ratios allow visual influences on auditory duration perception?), and the possible role of memory mixing and a search for activity shifts in pacemaker-like neural substrates under different uni- and multimodal conditions (Buhusi & Meck, 2005). Despite a long history of time perception research, much effort is still needed before we can begin to understand how the brain accomplishes the seemingly effortless perception of the temporal aspects of our multimodal surroundings.

References

Alais, D., & Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Current Biology, 14, 257–262. doi:10.1016/j.cub.2004.01.029

Angrilli, A., Cherubini, P., Pavese, A., & Manfredini, S. (1997). The influence of affective factors on time perception. Perception & Psychophysics, 59, 972–982.

Bertelson, P., & Aschersleben, G. (2003). Temporal ventriloquism: Crossmodal interaction on the time dimension: 1. Evidence from auditory–visual temporal order judgment. International Journal of Psychophysiology, 50, 147–155. doi:10.1016/S0167-8760(03)00130-2

Boltz, M. G. (2005). Duration judgments of naturalistic events in the auditory and visual modalities. Perception & Psychophysics, 67, 1362–1375.

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10, 433–436. doi:10.1163/156856897X00357

Bruns, P., & Getzmann, S. (2008). Audiovisual influences on the perception of visual apparent motion: Exploring the effect of a single sound. Acta Psychologica, 129, 273–283. doi:10.1016/j.actpsy.2008.08.002

Buhusi, C. V., & Meck, W. H. (2005). What makes us tick? Functional and neural mechanisms of interval timing. Nature Reviews. Neuroscience, 6, 755–765. doi:10.1038/nrn1764

Burr, D., & Alais, D. (2006). Combining visual and auditory information. Progress in Brain Research, 155, 243–258. doi:10.1016/S0079-6123(06)55014-9

Burr, D., Banks, M., & Morrone, M. C. (2009). Auditory dominance over vision in the perception of interval duration. Experimental Brain Research, 198, 49–57. doi:10.1007/s00221-009-1933-z

Chen, K., & Yeh, S. (2009). Asymmetric cross-modal effects in time perception. Acta Psychologica, 130, 225–234. doi:10.1016/j.actpsy.2008.12.008

Donovan, C. L., Lindsay, D. S., & Kingstone, A. (2004). Flexible and abstract resolutions to crossmodal conflicts. Brain and Cognition, 56, 1–4. doi:10.1016/j.bandc.2004.02.019

Driver, J., & Spence, C. (1998). Cross-modal links in spatial attention. Philosophical Transactions of the Royal Society B, 353, 1319–1331. doi:10.1098/rstb.1998.0286

Driver, J., & Spence, C. (2000). Multisensory perception: Beyond modularity and convergence. Current Biology, 10, 731–735. doi:10.1016/S0960-9822(00)00740-5

Eagleman, D. M. (2008). Human time perception and its illusions. Current Opinion in Neurobiology, 18, 131–136. doi:10.1016/j.conb.2008.06.002

Ernst, M. O., & Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends in Cognitive Sciences, 8, 162–169. doi:10.1016/j.tics.2004.02.002

Freeman, E., & Driver, J. (2008). Direction of visual apparent motion driven solely by timing of a static sound. Current Biology, 18, 1262–1266. doi:10.1016/j.cub.2008.07.066

Getzmann, S. (2007). The effect of brief auditory stimuli on visual apparent motion. Perception, 36, 1089–1103.

Gibbon, J. (1977). Scalar expectancy theory and Weber's law in animal timing. Psychological Review, 84, 279–325.

Gibbon, J., Church, R. M., & Meck, W. H. (1984). Scalar timing in memory. Annals of the New York Academy of Sciences, 423, 52–77. doi:10.1111/j.1749-6632.1984.tb23417.x

Grondin, S. (2003). Sensory modalities and temporal processing. In H. Helfrich (Ed.), Time and mind: II. Information processing perspectives (pp. 61–77). Toronto: Hogrefe & Huber.

Grondin, S., & McAuley, J. D. (2009). Duration discrimination in crossmodal sequences. Perception, 38, 1542–1559.

Guttman, S. E., Gilroy, L. A., & Blake, R. (2005). Hearing what the eyes see: Auditory encoding of visual temporal sequences. Psychological Science, 16, 228–235. doi:10.1111/j.0956-7976.2005.00808.x

Jaekl, P. M., & Harris, L. R. (2007). Auditory–visual temporal integration measured by shifts in perceived temporal location. Neuroscience Letters, 417, 219–224. doi:10.1016/j.neulet.2007.02.029

Jones, M. R., & McAuley, J. D. (2005). Time judgments in global temporal contexts. Perception & Psychophysics, 67, 398–417.

Kanai, R., & Watanabe, M. (2006). Visual onset expands subjective time. Perception & Psychophysics, 68, 1113–1123.

Kanai, R., Paffen, C. L. E., Hogendoorn, H., & Verstraten, F. A. J. (2006). Time dilation in dynamic visual display. Journal of Vision, 6(12), 1421–1430. doi:10.1167/6.12.8

Kanai, R., Sheth, B. R., Verstraten, F. A. J., & Shimojo, S. (2007). Dynamic perceptual changes in audiovisual simultaneity. PLoS ONE, 2, e1253. doi:10.1371/journal.pone.0001253