Improved Central Attention Network-Based Tensor RX for Hyperspectral Anomaly Detection

Abstract

1. Introduction

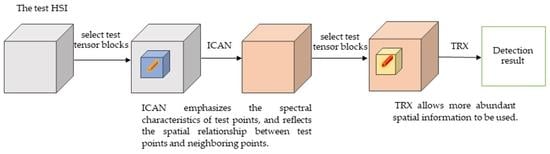

- Hyperspectral AD relies mainly on the spectral features of the test point in an HSI, but neighboring points similar to the test point also carry discriminative information. ICAN emphasizes the spectral information of the test point, while the spatial information reflects the similarity between the test point and its neighboring points, so the extracted spectral–spatial features are more reasonable;

- In ICAN, the test point at the center of a test tensor block is used as a convolution kernel and convolved with the block itself, so the output reflects the similarity between the test point and its neighboring points. Because this kernel is determined directly by the test data, ICAN avoids the selection of training samples required by a CNN;

- Because ICAN takes a test tensor block as input, TRX is applied after ICAN, which allows richer spatial information to be exploited for AD.
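The second contribution treats the central test point as a convolution kernel applied to its own test tensor block. As a minimal sketch, assuming the kernel is the 1 × 1 × B central spectrum and that Equation (1) (not reproduced in this text) reduces to a valid spatial convolution with no padding or normalization, the operation becomes an inner product between the central spectrum and every spectrum in the block. The function name `central_similarity` is hypothetical, and the key-tensor and weight-matrix steps of ICAN are not modeled here.

```python
import numpy as np


def central_similarity(block: np.ndarray) -> np.ndarray:
    """Convolve a test tensor block with its own central spectrum.

    block: array of shape (w, w, B) with odd w (rows, columns, bands).
    Returns a (w, w) map whose entry (i, j) is the inner product of the
    central spectrum with the spectrum at (i, j).
    """
    if block.ndim != 3 or block.shape[0] != block.shape[1] or block.shape[0] % 2 == 0:
        raise ValueError("expected a (w, w, B) block with odd w")
    c = block.shape[0] // 2
    kernel = block[c, c, :]  # 1 x 1 x B kernel taken from the test point itself
    # Sliding a 1 x 1 x B kernel over every spatial position (no padding,
    # full spectral depth) reduces to a dot product along the band axis.
    return np.tensordot(block, kernel, axes=([2], [0]))


# Usage: a 3 x 3 block with 5 bands.
rng = np.random.default_rng(0)
block = rng.random((3, 3, 5))
block[0, 0] = block[1, 1]  # a neighbor identical to the test point gets the center's score
sim = central_similarity(block)
```

Because no training samples are involved, the kernel changes with every test point, which is the property the contribution above emphasizes.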

2. The Proposed Methods

2.1. DBN

2.2. Improved Central Attention Module

2.3. Tensor RX for HSI

2.4. ICAN-TRX for HSI

| Algorithm 1: ICAN-TRX |
|---|
| Input: HSI and test tensor . |
| (1) is first transformed into a pixelwise two-dimensional matrix (), and DBN is employed to reconstruct Y. The reconstructed matrix () is then transformed into a tensor . |
| (2) The central tensor in is used as a convolution kernel and convolved with by Equation (1); the resulting tensor, serving as the key tensor, is transformed into the weight matrix Z. |
| (3) The tensor is the pointwise product of , serving as the value tensor, and Z, and the tensor is obtained by pointwise dividing the central tensor in by the central point in Z. |
| (4) is used as a convolution kernel and convolved with by Equation (2) to give the result , which is the HSI transformed by ICAN. |
| (5) TRX is applied to the HSI transformed by ICAN using Equation (11) to obtain the final AD result. |
| Output: AD result. |
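Step (5) applies TRX through Equation (11), which is not reproduced in this text. As a simplified, pixel-level stand-in rather than the tensor detector itself, the sketch below implements the classical dual-window local RX, i.e. the LRX baseline whose (Win, Wout) windows appear in the parameter table. Each pixel x is scored by its squared Mahalanobis distance (x − μ)ᵀΣ⁻¹(x − μ) from the mean μ and covariance Σ of the background ring between the inner and outer windows. The function `local_rx`, the reflect padding at image borders, and the ridge term `eps` are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def local_rx(hsi: np.ndarray, w_in: int, w_out: int, eps: float = 1e-6) -> np.ndarray:
    """Classical dual-window local RX (LRX) score for every pixel.

    hsi: (H, W, B) cube; w_in, w_out: odd inner/outer window sizes, w_in < w_out.
    Background = spectra inside the outer window but outside the inner window.
    """
    if w_in % 2 == 0 or w_out % 2 == 0 or w_in >= w_out:
        raise ValueError("need odd window sizes with w_in < w_out")
    H, W, B = hsi.shape
    r_out, r_in = w_out // 2, w_in // 2
    # Reflect-pad the spatial axes so border pixels also get a full outer window.
    padded = np.pad(hsi, ((r_out, r_out), (r_out, r_out), (0, 0)), mode="reflect")
    # Boolean mask of the background ring within a w_out x w_out window.
    ring = np.ones((w_out, w_out), dtype=bool)
    ring[r_out - r_in:r_out + r_in + 1, r_out - r_in:r_out + r_in + 1] = False
    scores = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            bg = padded[i:i + w_out, j:j + w_out][ring]  # (n_bg, B) background spectra
            mu = bg.mean(axis=0)
            # Small ridge term keeps the covariance invertible for few samples.
            cov = np.cov(bg, rowvar=False) + eps * np.eye(B)
            d = hsi[i, j] - mu
            scores[i, j] = d @ np.linalg.solve(cov, d)  # squared Mahalanobis distance
    return scores


# Usage: Gaussian background with one implanted anomalous pixel.
rng = np.random.default_rng(1)
cube = rng.normal(size=(12, 12, 4))
cube[6, 6] += 20.0
scores = local_rx(cube, w_in=3, w_out=7)
```

The inner window keeps the test pixel and its immediate neighbors out of the background statistics, which is the same role the window pairs in the parameter table play for the local and tensor detectors.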

3. Experimental Results

3.1. Datasets

3.2. Experiment

3.3. Parameter Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Data | Location | Spatial Resolution | Sensor | Experimental Size (rows × columns × bands) | Flight Time |
|---|---|---|---|---|---|
| Data L | Los Angeles | 7.1 m | AVIRIS | 100 × 100 × 205 | 11/9/2011 |
| Data C | Cat Island | 17.2 m | AVIRIS | 150 × 150 × 189 | 9/12/2010 |
| Data P | Pavia | 1.3 m | ROSIS-03 | 150 × 150 × 102 | Unknown |
| Data T | Texas Coast | 17.2 m | AVIRIS | 100 × 100 × 204 | 8/29/2010 |
| Data S | San Diego | 3.5 m | AVIRIS | 120 × 120 × 126 | Unknown |

| Data | LRX (Win, Wout) | KRX (c, Win, Wout) | FrFE-RX (p) | FrFE-LRX (p, Win, Wout) | PCA-TRX (d, Wt, Wb) | FrFT-TRX (p, Wt, Wb) | ICAN-TRX (Wa, Wt, Wb) (ne, be, le) |
|---|---|---|---|---|---|---|---|
| Data L | (7, 9) | (10⁻⁵, 5, 9) | 0.2 | (0.2, 7, 9) | (10, 7, 9) | (1, 7, 9) | (3, 3, 41) (10, 40, 2) |
| Data C | (25, 77) | (10⁻², 5, 7) | 0.2 | (0.2, 25, 77) | (10, 3, 37) | (0.2, 3, 37) | (5, 5, 15) (6, 25, 2) |
| Data P | (25, 81) | (10⁻¹, 25, 29) | 1 | (1, 25, 77) | (20, 3, 37) | (1, 3, 37) | (3, 3, 35) (10, 20, 2) |
| Data T | (7, 9) | (10⁻², 7, 9) | 1 | (1, 5, 7) | (8, 7, 9) | (0.2, 7, 9) | (5, 5, 9) (10, 40, 2) |
| Data S | (7, 9) | (10⁻², 7, 9) | 0.9 | (0.9, 7, 9) | (9, 3, 31) | (0.9, 3, 31) | (21, 11, 25) (5, 5, 2) |

| Data | GRX | LRX | KRX | FrFE-RX | FrFE-LRX | PCA-TRX | FrFT-TRX | ICAN-TRX |
|---|---|---|---|---|---|---|---|---|
| Data L | 1.43 | 27.79 | 56.67 | 11.35 | 39.08 | 4.97 | 32.54 | 88.46 |
| Data C | 0.71 | 421.21 | 25.87 | 22.35 | 430.72 | 2.92 | 140.65 | 70.36 |
| Data P | 0.63 | 236.19 | 1418.01 | 11.86 | 218.95 | 5.56 | 44.78 | 39.36 |
| Data T | 0.58 | 26.95 | 20.92 | 10.96 | 38.06 | 5.64 | 33.13 | 33.80 |
| Data S | 0.59 | 15.05 | 26.21 | 9.37 | 24.89 | 1.80 | 33.61 | 75.66 |

| Wt \ Wb | 33 | 35 | 37 | 39 | 41 | 43 | 45 | 47 |
|---|---|---|---|---|---|---|---|---|
| 3 | 0.9527 | 0.9530 | 0.9507 | 0.9529 | 0.9538 | 0.9531 | 0.9487 | 0.9436 |
| 5 | 0.9274 | 0.9298 | 0.9263 | 0.9304 | 0.9307 | 0.928 | 0.9207 | 0.9129 |
| 7 | 0.8746 | 0.8782 | 0.8814 | 0.8787 | 0.8815 | 0.8752 | 0.8629 | 0.8488 |

| Wt \ Wb | 11 | 13 | 15 | 17 | 19 | 21 | 23 | 25 |
|---|---|---|---|---|---|---|---|---|
| 3 | 0.9994 | 0.9996 | 0.9997 | 0.9997 | 0.9997 | 0.9997 | 0.9996 | 0.9996 |
| 5 | 0.9995 | 0.9996 | 0.9997 | 0.9997 | 0.9997 | 0.9997 | 0.9996 | 0.9996 |
| 7 | 0.9995 | 0.9995 | 0.9996 | 0.9996 | 0.9996 | 0.9996 | 0.9996 | 0.9995 |

| Wt \ Wb | 33 | 35 | 37 | 39 | 41 | 43 | 45 | 47 |
|---|---|---|---|---|---|---|---|---|
| 3 | 0.9977 | 0.9979 | 0.9979 | 0.9968 | 0.9959 | 0.9955 | 0.9956 | 0.9953 |
| 5 | 0.9959 | 0.9958 | 0.9954 | 0.9945 | 0.9934 | 0.9933 | 0.9932 | 0.9928 |
| 7 | 0.9915 | 0.9915 | 0.9912 | 0.9897 | 0.9885 | 0.9871 | 0.9872 | 0.9965 |

| Wt \ Wb | 9 | 11 | 13 | 15 | 17 |
|---|---|---|---|---|---|
| 3 | 0.9812 | 0.9839 | 0.9856 | 0.9798 | 0.9774 |
| 5 | 0.9958 | 0.9930 | 0.9882 | 0.9750 | 0.9648 |
| 7 | 0.9926 | 0.9911 | 0.9785 | 0.9650 | 0.9351 |

| Wt \ Wb | 19 | 21 | 23 | 25 | 27 | 29 |
|---|---|---|---|---|---|---|
| 9 | 0.9679 | 0.9777 | 0.9841 | 0.9875 | 0.9894 | 0.9888 |
| 11 | 0.9868 | 0.9907 | 0.9926 | 0.9937 | 0.9933 | 0.9910 |
| 13 | 0.9888 | 0.9915 | 0.9933 | 0.9934 | 0.9923 | 0.9869 |
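The parameter-analysis tables report one score per (Wt, Wb) pair, but the name of the metric did not survive extraction. The values lie in [0, 1] and approach 1, which is consistent with the area under the ROC curve (AUC) commonly used to evaluate hyperspectral AD; that reading is an assumption. Under it, a minimal sketch of computing AUC from a detection map and a binary ground-truth mask via the Mann-Whitney U statistic is shown below. The function name `auc_score` is hypothetical, and the paper may have computed its ROC curves differently (for example, by thresholding the normalized detection map).

```python
import numpy as np


def auc_score(scores, labels) -> float:
    """Area under the ROC curve via the Mann-Whitney U statistic.

    scores: detection map (any shape); labels: binary ground truth of the
    same shape (1 = anomaly). Tied scores receive average ranks, which
    counts each tied anomaly/background pair as half a correct ordering.
    """
    s = np.asarray(scores, dtype=float).ravel()
    y = np.asarray(labels).ravel().astype(bool)
    n_pos, n_neg = int(y.sum()), int((~y).sum())
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ground truth must contain both classes")
    order = np.argsort(s, kind="mergesort")
    sorted_s = s[order]
    ranks = np.empty(s.size)
    i = 0
    while i < s.size:
        j = i
        while j + 1 < s.size and sorted_s[j + 1] == sorted_s[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    u = ranks[y].sum() - n_pos * (n_pos + 1) / 2
    return u / (n_pos * n_neg)


# Usage on a toy 2 x 2 detection map with two anomalous pixels.
auc = auc_score([[0.1, 0.4], [0.35, 0.8]], [[0, 0], [1, 1]])
```

The rank formulation equals the fraction of (anomaly, background) pairs in which the anomaly scores higher, so it needs no explicit threshold sweep.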

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, L.; Ma, J.; Fu, B.; Lin, F.; Sun, Y.; Wang, F. Improved Central Attention Network-Based Tensor RX for Hyperspectral Anomaly Detection. Remote Sens. 2022, 14, 5865. https://doi.org/10.3390/rs14225865

Zhang L, Ma J, Fu B, Lin F, Sun Y, Wang F. Improved Central Attention Network-Based Tensor RX for Hyperspectral Anomaly Detection. Remote Sensing. 2022; 14(22):5865. https://doi.org/10.3390/rs14225865

Chicago/Turabian Style: Zhang, Lili, Jiachen Ma, Baohong Fu, Fang Lin, Yudan Sun, and Fengpin Wang. 2022. "Improved Central Attention Network-Based Tensor RX for Hyperspectral Anomaly Detection" Remote Sensing 14, no. 22: 5865. https://doi.org/10.3390/rs14225865