Farmland Parcel Mapping in Mountain Areas Using Time-Series SAR Data and VHR Optical Images

Abstract

1. Introduction

- The high heterogeneity of mountainous areas produces many small farmland parcels with irregular shapes. These parcels, planted with multiple crops, form a complex planting structure, which makes it difficult to obtain accurate boundaries of ground objects.

- Widespread cloud cover in mountainous areas, caused by rainy and hot weather occurring in the same season, means that optical data acquired at suitable times are often unavailable, which makes it difficult to distinguish farmland parcels from other parcel types.

- Using CNNs, a hierarchical extraction scheme based on VHR optical images was developed to obtain an accurate map of image objects in complex scenes such as mountainous areas.

- By combining multiple time-series SAR variables with LSTM networks, this study explores their potential for identifying farmland parcels in cloudy and rainy areas.

2. Study Area and Dataset

2.1. Study Site

2.2. Remote Sensing Data

2.3. Field Sampling Data

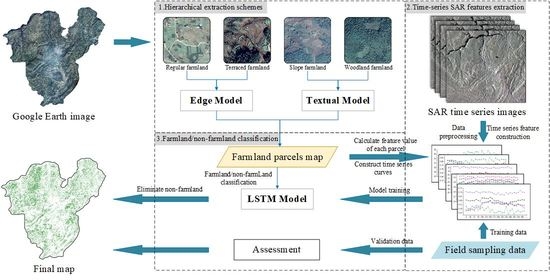

3. Method

- a hierarchical extraction scheme using VHR optical images, consisting of a farmland parcel classification system and CNN-based farmland parcel extraction;

- time-series SAR feature extraction from the Sentinel-1A (S1A) dataset;

- farmland/non-farmland classification using time series SAR data, consisting of parcel-level time-series feature construction and LSTM-based classification.

3.1. Hierarchical Extraction Scheme Using VHR Optical Images

3.1.1. Farmland Classification System Based on Geographical Divisions

3.1.2. Parcel-Stratified Extraction Based on CNNs

- The deep learning algorithm was selected according to the distinctive features of each target object. In this study, the Richer Convolutional Features (RCF) model [53] was used to extract parcels with clear boundaries (regular farmland and terraced farmland), and the D-LinkNet model [54] was used to extract texture-based parcels (slope farmland and woodland farmland). Because we focus on the effect of the hierarchical extraction scheme in complex landscapes, improvements to deep learning models tailored to the characteristics of target objects are not discussed in this paper.

- CNN training requires a relatively large amount of labelled data. The training data for the four farmland types were cropped from the VHR satellite images in areas where sampled data were not available, and were manually delineated through visual interpretation. The regular farmland and terraced farmland samples were used to train an edge model, while the slope farmland and woodland farmland samples were used to train two separate texture models. Through iterative training, parcel extraction models covering all four farmland types were generated from these samples.

- Because regular farmland and terraced farmland are highly similar, they were extracted together first. These results were then used as a mask to extract slope farmland from the remaining area. Next, all parcels obtained so far were used together as a mask, and woodland farmland was extracted last, so that as much farmland as possible was captured. Finally, through sample addition and iterative training, missed and misclassified areas were supplemented and corrected to improve the final map.
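The masking order described above can be sketched on rasterized model outputs. This is a minimal illustration, not the authors' implementation: it assumes each stage's CNN output has already been converted to a boolean raster (`edge_pred` from the edge model, `slope_pred` and `woodland_pred` from the two texture models; all names and label codes are hypothetical), and it ignores the vector parcel objects and the iterative correction step.

```python
import numpy as np


def hierarchical_extract(edge_pred, slope_pred, woodland_pred):
    """Combine three stage outputs (boolean rasters) into one label map.

    Hypothetical label codes: 0 = unassigned, 1 = regular/terraced farmland
    (edge model), 2 = slope farmland, 3 = woodland farmland.
    """
    labels = np.zeros(edge_pred.shape, dtype=np.uint8)
    # Stage 1: regular and terraced farmland are extracted together.
    labels[edge_pred] = 1
    # Stage 2: slope farmland only where Stage 1 assigned nothing (Stage 1 acts as a mask).
    free = labels == 0
    labels[free & slope_pred] = 2
    # Stage 3: woodland farmland only in the area left over by Stages 1 and 2.
    free = labels == 0
    labels[free & woodland_pred] = 3
    return labels


edge = np.array([[True, False, False], [False, False, False]])
slope = np.array([[True, True, False], [False, False, False]])
wood = np.array([[True, True, True], [True, False, False]])
result = hierarchical_extract(edge, slope, wood)
print(result)
```

Pixels claimed by an earlier stage are never reassigned by a later one, which mirrors how the earlier results serve as masks for the later extractions.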

3.2. Time-Series SAR Feature Extraction
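Section 5 compares linear polarization variables (VV, VH) with polarization decomposition variables. As a hedged sketch of how such variables are commonly derived from Sentinel-1 dual-pol single-look complex data, the code below converts linear backscatter to decibels and computes entropy H, anisotropy A and mean alpha angle α from the eigenvalues of the 2 × 2 covariance matrix, following the Cloude–Pottier approach. The function names are hypothetical, the anisotropy formula is an assumed dual-pol form, and the authors' actual processing chain (software, speckle filtering, window size) is not described in this section.

```python
import numpy as np


def to_db(sigma0):
    """Convert linear backscatter (sigma0) to decibels."""
    return 10.0 * np.log10(sigma0)


def dual_pol_h_a_alpha(s_vv, s_vh):
    """Entropy H, anisotropy A and mean alpha angle (degrees) from complex
    dual-pol samples S_VV and S_VH taken over one averaging window."""
    k = np.vstack([s_vv, s_vh])                   # 2 x N scattering vectors
    c2 = k @ k.conj().T / k.shape[1]              # 2 x 2 sample covariance matrix
    eigvals, eigvecs = np.linalg.eigh(c2)         # Hermitian: ascending eigenvalues
    eigvals = np.clip(eigvals[::-1], 0.0, None)   # descending order, drop round-off negatives
    eigvecs = eigvecs[:, ::-1]
    p = eigvals / eigvals.sum()                   # pseudo-probabilities
    nz = p > 0
    entropy = float(-np.sum(p[nz] * np.log2(p[nz])))  # log base 2 keeps H in [0, 1]
    anisotropy = float((eigvals[0] - eigvals[1]) / eigvals.sum())  # assumed dual-pol form
    first = np.clip(np.abs(eigvecs[0, :]), 0.0, 1.0)
    alpha_i = np.degrees(np.arccos(first))        # one alpha angle per eigenvector
    alpha = float(np.sum(p * alpha_i))            # probability-weighted mean alpha
    return entropy, anisotropy, alpha


# Uncorrelated VV and VH of equal power: maximally random scattering.
h, a, alpha = dual_pol_h_a_alpha(np.array([1, 1], dtype=complex),
                                 np.array([1, -1], dtype=complex))
vv_db = to_db(np.array([1.0, 0.1]))
```

In this toy window H = 1, A = 0 and α = 45°, whereas a window with no VH signal gives H = 0 and α = 0°; real inputs would be averaged over a spatial window of speckled pixels.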

3.3. Farmland/Non-Farmland Classification Using Time Series SAR Data

3.3.1. Time Series Features Construction
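The Discussion states that each parcel's feature value is the mean of all pixels inside it, which reduces the influence of SAR speckle. A minimal NumPy sketch of that parcel-level averaging follows; it assumes the parcels have been rasterized to an integer ID map aligned with the SAR stack (0 for background, 1..P for parcels, every ID present), and the function name is hypothetical. Whether the authors averaged in linear power or in dB is not stated here; averaging in linear units before converting to dB is a common choice.

```python
import numpy as np


def parcel_time_series(stack, parcel_ids):
    """Mean value of every parcel at every date.

    stack: array (T, H, W) holding one SAR variable over T dates.
    parcel_ids: int array (H, W); 0 = background, 1..P = parcel IDs.
    Returns an array (P, T) whose row p - 1 is the time series of parcel p.
    """
    n_parcels = int(parcel_ids.max())
    flat_ids = parcel_ids.ravel()
    # Pixel count per parcel (index 0 = background is dropped).
    counts = np.bincount(flat_ids, minlength=n_parcels + 1)[1:]
    series = np.empty((n_parcels, stack.shape[0]))
    for t, band in enumerate(stack):
        # Sum of pixel values per parcel at date t, then divide by the pixel count.
        sums = np.bincount(flat_ids, weights=band.ravel(), minlength=n_parcels + 1)[1:]
        series[:, t] = sums / counts
    return series


ids = np.array([[1, 1, 2],
                [1, 2, 2]])
stack = np.stack([np.arange(6, dtype=float).reshape(2, 3), np.ones((2, 3))])
ts = parcel_time_series(stack, ids)
```

Each row of `ts` is one parcel's time profile, which is the parcel-level input a sequence classifier would consume.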

3.3.2. Classification Model Based on LSTM
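The LSTM classifier is not specified in this section, so the following is only a sketch of the standard LSTM recurrence (input, forget, candidate and output gates) applied to one parcel's time series, followed by a logistic output for P(farmland). It is written in plain NumPy to stay self-contained; the class name, layer sizes and random initialization are assumptions, and training (backpropagation through time) is omitted, so in practice a framework such as PyTorch or TensorFlow would normally be used.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class TinyLSTMClassifier:
    """Hypothetical single-layer LSTM over a parcel time series with a logistic output."""

    def __init__(self, n_features, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_hidden)
        # Stacked weights for the input (i), forget (f), candidate (g) and output (o) gates.
        self.W = rng.uniform(-scale, scale, (4 * n_hidden, n_features))
        self.U = rng.uniform(-scale, scale, (4 * n_hidden, n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.w_out = rng.uniform(-scale, scale, n_hidden)
        self.b_out = 0.0
        self.n_hidden = n_hidden

    def predict_proba(self, x):
        """x: array (T, n_features) for one parcel; returns P(farmland) (untrained weights)."""
        n = self.n_hidden
        h = np.zeros(n)
        c = np.zeros(n)
        for x_t in x:
            z = self.W @ x_t + self.U @ h + self.b
            i = sigmoid(z[:n])            # input gate
            f = sigmoid(z[n:2 * n])       # forget gate
            g = np.tanh(z[2 * n:3 * n])   # candidate cell values
            o = sigmoid(z[3 * n:])        # output gate
            c = f * c + i * g             # cell state update
            h = o * np.tanh(c)            # hidden state
        # The final hidden state summarizes the whole sequence.
        return float(sigmoid(self.w_out @ h + self.b_out))


model = TinyLSTMClassifier(n_features=2, n_hidden=8)
rng = np.random.default_rng(1)
parcel_series = rng.normal(size=(30, 2))   # hypothetical 30 dates x (VV, VH), standardized
p_farmland = model.predict_proba(parcel_series)
p_zero = model.predict_proba(np.zeros((30, 2)))
```

With 30 acquisitions (as in the Sentinel-1A date table) and two variables such as VV and VH, each parcel becomes a 30 × 2 sequence; more variables simply widen `n_features`.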

3.4. Accuracy Assessment
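The results tables report producer's accuracy (PA), user's accuracy (UA), F1 score and overall accuracy (OA). The sketch below uses the textbook per-class definitions computed from reference and predicted labels; the function name and the 1/0 encoding of farmland/non-farmland are assumptions, and the paper's exact aggregation (for example, per parcel or per pixel) may differ.

```python
import numpy as np


def accuracy_report(y_true, y_pred, positive=1):
    """PA, UA and F1 for the `positive` class, plus overall accuracy (OA)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == positive) & (y_pred == positive))
    fn = np.sum((y_true == positive) & (y_pred != positive))
    fp = np.sum((y_true != positive) & (y_pred == positive))
    pa = tp / (tp + fn)              # producer's accuracy (recall)
    ua = tp / (tp + fp)              # user's accuracy (precision)
    f1 = 2 * pa * ua / (pa + ua)     # harmonic mean of PA and UA
    oa = np.mean(y_true == y_pred)   # share of correctly labelled samples
    return {"PA": pa, "UA": ua, "F1": f1, "OA": oa}


y_true = [1, 1, 1, 0, 0]   # 1 = farmland, 0 = non-farmland (assumed encoding)
y_pred = [1, 1, 0, 0, 1]
farmland = accuracy_report(y_true, y_pred, positive=1)
non_farmland = accuracy_report(y_true, y_pred, positive=0)
```

Calling the function once per class gives the paired farmland and non-farmland columns, while OA is the same for both.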

4. Results

4.1. Overall Farmland Parcel Extraction Results

4.2. Hierarchical Extraction Results

4.3. Time Series Feature Construction Results

4.4. Farmland/Non-Farmland Classification Results

4.5. Parameter Setting in the LSTM-Based Classification Model

5. Discussion

- The advantages of VHR optical data and time series SAR data are combined. VHR Google satellite optical imagery is used for obtaining basic parcels for farmland mapping, and the S1A SAR dataset provides time-series features for identifying the farmland parcels.

- The hierarchical extraction scheme, based on a divide-and-stratify strategy, greatly reduces the difficulty of obtaining farmland parcels in complex scenes. Because ground objects are divided layer by layer, each is extracted at a relatively simple level, so the models can be designed and trained at a lower cost. Note, however, that the distinguishability between the extracted object classes affects extraction accuracy: when it is low, the same object may be extracted by different models, which lowers the accuracy of the results. This is also why the overall accuracy of Stage 2 shown in Table 4 barely increased compared with Stage 1 (0.8756 vs. 0.8747). However, the aim of this step is to obtain parcel objects that contain all the farmland, which are then identified through the subsequent time-series classification. Therefore, this problem does not affect the results of the proposed method.

- Unlike previous object segmentation methods, the CNN-based parcel extraction method yields parcel objects that more closely match the shapes of ground objects.

- Recent studies [56,61] have shown the great potential of polarization decomposition variables for crop classification. In this study, however, linear polarization variables produced higher accuracy. This is because polarization decomposition variables, which reflect the different scattering mechanisms of land covers, are better suited than linear polarization variables to identifying high-biomass crops, but offer no such advantage in complex agricultural landscapes, such as mountainous areas, where many crop types are mixed.

- Unlike pixel-level methods, this study assigned the mean value of all pixels in a parcel as that parcel's feature value, thereby reducing the influence of SAR speckle noise.

- In this experiment, farmland types are distinguished by visual features only, so other types of parcels are extracted as well. Other geographic features (such as elevation, slope, and water content) should be considered to improve discrimination for more detailed classifications; better type discrimination should yield more accurate and complete extraction results.

- Some extremely fragmented, small farmland parcels in mountainous areas are hard to extract, and their temporal behavior is also difficult to construct from the S1A dataset. The parcel extraction model therefore needs further optimization to better capture small boundaries, and SAR data with higher spatial resolution should be used to generate time profiles for these parcels.

- In smallholder agricultural areas, crop temporal behavior varies from field to field because of differences in crop types and planting times. This shifts the farmland time-series curves that the model fits, thus reducing classification accuracy.

- We also need to obtain more features of farmland parcels from multi-source data, so as to identify farmland in a higher dimensional space and achieve greater accuracy.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VHR | Very High Resolution |
| SAR | Synthetic Aperture Radar |
| CNNs | Convolutional Neural Networks |
| RNNs | Recurrent Neural Networks |
| LSTM | Long Short-Term Memory |

References

- Ashourloo, D.; Shahrabi, H.S.; Azadbakht, M.; Aghighi, H.; Nematollahi, H.; Alimohammadi, A.; Matkan, A.A. Automatic canola mapping using time series of sentinel 2 images. ISPRS J. Photogramm. Remote Sens. 2019, 156, 63–76.

- Skakun, S.; Kussul, N.; Shelestov, A.; Kussul, O. The use of satellite data for agriculture drought risk quantification in Ukraine. Geomat. Nat. Hazards Risk 2016, 7, 901–917.

- Sulik, J.J.; Long, D.S. Spectral considerations for modeling yield of canola. Remote Sens. Environ. 2016, 184, 161–174.

- Persello, C.; Tolpekin, V.A.; Bergado, J.R.; de By, R.A. Delineation of agricultural fields in smallholder farms from satellite images using fully convolutional networks and combinatorial grouping. Remote Sens. Environ. 2019, 231, 111253.

- Burke, M.; Lobell, D.B. Satellite-based assessment of yield variation and its determinants in smallholder African systems. Proc. Natl. Acad. Sci. USA 2017, 114, 2189–2194.

- Kontgis, C.; Schneider, A.; Ozdogan, M. Mapping rice paddy extent and intensification in the Vietnamese Mekong River Delta with dense time stacks of Landsat data. Remote Sens. Environ. 2015, 169, 255–269.

- Massey, R.; Sankey, T.T.; Congalton, R.G.; Yadav, K.; Thenkabail, P.S.; Ozdogan, M.; Sánchez Meador, A.J. MODIS phenology-derived, multi-year distribution of conterminous U.S. crop types. Remote Sens. Environ. 2017, 198, 490–503.

- Zhong, L.; Hu, L.; Le, Y.; Gong, P.; Biging, G.S. Automated mapping of soybean and corn using phenology. ISPRS J. Photogramm. Remote Sens. 2016, 119, 151–164.

- Bouvet, A.; Le Toan, T. Use of ENVISAT/ASAR wide-swath data for timely rice fields mapping in the Mekong River Delta. Remote Sens. Environ. 2011, 115, 1090–1101.

- Wardlow, B.D.; Egbert, S.L. Large-area crop mapping using time-series MODIS 250 m NDVI data: An assessment for the U.S. Central Great Plains. Remote Sens. Environ. 2008, 112, 1096–1116.

- Sun, C.; Bian, Y.; Zhou, T.; Pan, J. Using of Multi-Source and Multi-Temporal Remote Sensing Data Improves Crop-Type Mapping in the Subtropical Agriculture Region. Sensors 2019, 19, 2401.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Castillejo-González, I.L.; López-Granados, F.; García-Ferrer, A.; Manuel Peña-Barragán, J.; Jurado-Expósito, M.; Sánchez de la Orden, M.; González-Audicana, M. Object- and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery. Comput. Electron. Agric. 2009, 68, 207–215.

- McCarty, J.; Neigh, C.; Carroll, M.; Wooten, M. Extracting smallholder cropped area in Tigray, Ethiopia with wall-to-wall sub-meter WorldView and moderate resolution Landsat 8 imagery. Remote Sens. Environ. 2017, 202, 142–151.

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2017, 204, 509–523.

- Jin, Z.; Azzari, G.; You, C.; Di Tommaso, S.; Aston, S.; Burke, M.; Lobell, D.B. Smallholder maize area and yield mapping at national scales with Google Earth Engine. Remote Sens. Environ. 2019, 228, 115–128.

- Yang, J.; Price, B.; Cohen, S.; Lee, H.; Yang, M.H. Object Contour Detection with a Fully Convolutional Encoder-Decoder Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 193–202.

- Xie, S.; Tu, Z. Holistically-Nested Edge Detection. Int. J. Comput. Vis. 2017, 125, 3–18.

- Richter, G.M.; Agostini, F.; Barker, A.; Costomiris, D.; Qi, A. Assessing on-farm productivity of Miscanthus crops by combining soil mapping, yield modelling and remote sensing. Biomass Bioenergy 2016, 85, 252–261.

- Rosentreter, J.; Hagensieker, R.; Waske, B. Towards large-scale mapping of local climate zones using multitemporal Sentinel 2 data and convolutional neural networks. Remote Sens. Environ. 2020, 237, 111472.

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. A new deep convolutional neural network for fast hyperspectral image classification. ISPRS J. Photogramm. Remote Sens. 2018, 145, 120–147.

- Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. An object-based convolutional neural network (OCNN) for urban land use classification. Remote Sens. Environ. 2018, 216, 57–70.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Castelluccio, M.; Poggi, G.; Sansone, C.; Verdoliva, L. Land Use Classification in Remote Sensing Images by Convolutional Neural Networks. arXiv 2015, arXiv:1508.00092v1. Available online: https://arxiv.org/pdf/1508.00092.pdf (accessed on 1 August 2015).

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep Convolutional Neural Networks for Hyperspectral Image Classification. J. Sens. 2015, 2015, 12.

- Gong, Y.; Xiao, Z.; Tan, X.; Sui, H.; Xu, C.; Duan, H.; Li, D. Context-Aware Convolutional Neural Network for Object Detection in VHR Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 34–44.

- Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Ding, P.; Zhang, Y.; Deng, W.J.; Jia, P.; Kuijper, A. A light and faster regional convolutional neural network for object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 141, 208–218.

- Nogueira, K.; Penatti, O.A.; dos Santos, J.A. Towards better exploiting convolutional neural networks for remote sensing scene classification. Pattern Recognit. 2017, 61, 539–556.

- Hu, F.; Xia, G.S.; Hu, J.; Zhang, L. Transferring Deep Convolutional Neural Networks for the Scene Classification of High-Resolution Remote Sensing Imagery. Remote Sens. 2015, 7, 14680–14707.

- Zhang, L.; Zhang, L.; Du, B. Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Huang, B.; Zhao, B.; Song, Y. Urban land-use mapping using a deep convolutional neural network with high spatial resolution multispectral remote sensing imagery. Remote Sens. Environ. 2018, 214, 73–86.

- Wu, B.; Li, Q. Crop planting and type proportion method for crop acreage estimation of complex agricultural landscapes. Int. J. Appl. Earth Obs. Geoinf. 2012, 16, 101–112.

- Fenske, K.; Feilhauer, H.; Förster, M.; Stellmes, M.; Waske, B. Hierarchical classification with subsequent aggregation of heathland habitats using an intra-annual RapidEye time-series. Int. J. Appl. Earth Obs. Geoinf. 2020, 87, 102036.

- Haest, B.; Vanden Borre, J.; Spanhove, T.; Thoonen, G.; Delalieux, S.; Kooistra, L.; Mücher, C.; Paelinckx, D.; Scheunders, P.; Kempeneers, P. Habitat Mapping and Quality Assessment of NATURA 2000 Heathland Using Airborne Imaging Spectroscopy. Remote Sens. 2017, 9, 266.

- Potgieter, A.B.; Apan, A.; Hammer, G.; Dunn, P. Early-season crop area estimates for winter crops in NE Australia using MODIS satellite imagery. ISPRS J. Photogramm. Remote Sens. 2010, 65, 380–387.

- Steele-Dunne, S.C.; McNairn, H.; Monsivais-Huertero, A.; Judge, J.; Liu, P.; Papathanassiou, K. Radar Remote Sensing of Agricultural Canopies: A Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2249–2273.

- Song, P.; Mansaray, L.R.; Huang, J.; Huang, W. Mapping paddy rice agriculture over China using AMSR-E time series data. ISPRS J. Photogramm. Remote Sens. 2018, 144, 469–482.

- Veloso, A.; Mermoz, S.; Bouvet, A.; Le Toan, T.; Planells, M.; Dejoux, J.F.; Ceschia, E. Understanding the temporal behavior of crops using Sentinel-1 and Sentinel-2-like data for agricultural applications. Remote Sens. Environ. 2017, 199, 415–426.

- Jiao, X.; Kovacs, J.M.; Shang, J.; Mcnairn, H.; Walters, D.; Ma, B.; Geng, X. Object-oriented crop mapping and monitoring using multi-temporal polarimetric RADARSAT-2 data. ISPRS J. Photogramm. Remote Sens. 2014, 96, 38–46.

- Homayouni, S.; Mcnairn, H.; Hosseini, M.; Jiao, X.; Powers, J. Quad and compact multitemporal C-band PolSAR observations for crop characterization and monitoring. Int. J. Appl. Earth Obs. Geoinf. 2019, 74, 78–87.

- Deschamps, B.; Mcnairn, H.; Shang, J.; Jiao, X. Towards operational radar-only crop type classification: Comparison of a traditional decision tree with a random forest classifier. Can. J. Remote Sens. 2012, 38, 60–68.

- Sun, Y.; Luo, J.; Wu, T.; Zhou, Y.; Liu, H.; Gao, L.; Dong, W.; Liu, W.; Yang, Y.; Hu, X.; et al. Synchronous Response Analysis of Features for Remote Sensing Crop Classification Based on Optical and SAR Time-Series Data. Sensors 2019, 19, 4227.

- Williams, R.; Zipser, D. A Learning Algorithm for Continually Running Fully Recurrent Neural Networks. Neural Comput. 1989, 1, 270–280.

- Graves, A.; Mohamed, A.R.; Hinton, G. Speech Recognition with Deep Recurrent Neural Networks. ICASSP IEEE Int. Conf. Acoust. Speech Signal Process.-Proc. 2013, 38.

- Mikolov, T.; Karafiát, M.; Burget, L.; Cernocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the 11th Annual Conference of the International Speech Communication Association, INTERSPEECH, Makuhari, Chiba, Japan, 26–30 September 2010; pp. 1045–1048.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Sun, Z.; Di, L.; Fang, H. Using long short-term memory recurrent neural network in land cover classification on Landsat and Cropland data layer time series. Int. J. Remote Sens. 2018, 40, 1–22.

- Ndikumana, E.; Ho Tong Minh, D.; Baghdadi, N.; Courault, D.; Hossard, L. Deep Recurrent Neural Network for Agricultural Classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217.

- Zhou, Y.; Luo, J.; Feng, L.; Yang, Y.; Chen, Y.; Wu, W. Long-short-term-memory-based crop classification using high-resolution optical images and multi-temporal SAR data. GISci. Remote Sens. 2019, 56, 1170–1191.

- Ho Tong Minh, D.; Ienco, D.; Gaetano, R.; Lalande, N.; Ndikumana, E.; Osman, F.; Maurel, P. Deep Recurrent Neural Networks for mapping winter vegetation quality coverage via multi-temporal SAR Sentinel-1. IEEE Geosci. Remote Sens. Lett. 2018, 15, 464–468.

- Liu, Y.; Cheng, M.M.; Hu, X.; Bian, J.W.; Zhang, L.; Bai, X.; Tang, J. Richer Convolutional Features for Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1939–1946.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Li, H.; Zhang, C.; Zhang, S.; Atkinson, P.M. Crop classification from full-year fully-polarimetric L-band UAVSAR time-series using the Random Forest algorithm. Int. J. Appl. Earth Obs. Geoinf. 2020, 87, 102032.

- Guo, J.; Wei, P.L.; Liu, J.; Jin, B.; Su, B.F.; Zhou, Z.S. Crop Classification Based on Differential Characteristics of H/α Scattering Parameters for Multitemporal Quad-and Dual-Polarization SAR Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6111–6123.

- Bazzi, H.; Baghdadi, N.; El Hajj, M.; Zribi, M.; Minh, D.H.T.; Ndikumana, E.; Courault, D.; Belhouchette, H. Mapping Paddy Rice Using Sentinel-1 SAR Time Series in Camargue, France. Remote Sens. 2019, 11, 887.

- Whelen, T.; Siqueira, P. Use of time-series L-band UAVSAR data for the classification of agricultural fields in the San Joaquin Valley. Remote Sens. Environ. 2017, 193, 216–224.

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- Shuai, G.; Zhang, J.; Basso, B.; Pan, Y.; Zhu, X.; Zhu, S.; Liu, H. Multi-temporal RADARSAT-2 polarimetric SAR for maize mapping supported by segmentations from high-resolution optical image. Int. J. Appl. Earth Obs. Geoinf. 2019, 74, 1–15.

| Date | Day of Year | Date | Day of Year | Date | Day of Year |
|---|---|---|---|---|---|
| 4 January 2019 | 4 | 4 May 2019 | 124 | 1 September 2019 | 244 |
| 16 January 2019 | 16 | 16 May 2019 | 136 | 13 September 2019 | 256 |
| 28 January 2019 | 28 | 28 May 2019 | 148 | 25 September 2019 | 268 |
| 9 February 2019 | 40 | 9 June 2019 | 160 | 7 October 2019 | 280 |
| 21 February 2019 | 52 | 21 June 2019 | 172 | 19 October 2019 | 292 |
| 5 March 2019 | 64 | 3 July 2019 | 184 | 31 October 2019 | 304 |
| 17 March 2019 | 76 | 15 July 2019 | 196 | 12 November 2019 | 316 |
| 29 March 2019 | 88 | 27 July 2019 | 208 | 24 November 2019 | 328 |
| 10 April 2019 | 100 | 8 August 2019 | 220 | 6 December 2019 | 340 |
| 22 April 2019 | 112 | 20 August 2019 | 232 | 18 December 2019 | 352 |
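The table lists a regular 12-day Sentinel-1A revisit from 4 January to 18 December 2019. As a quick standard-library sketch, the dates and the day-of-year column can be regenerated from the first acquisition, assuming no acquisition is missing (which is what the table shows):

```python
from datetime import date, timedelta

first = date(2019, 1, 4)   # first acquisition in the table
revisit = timedelta(days=12)

# 30 acquisitions at a fixed 12-day interval.
acquisitions = [first + k * revisit for k in range(30)]
doy = [d.timetuple().tm_yday for d in acquisitions]   # day of year, 1-based

for d, n in zip(acquisitions, doy):
    print(f"{d.day} {d:%B %Y}: {n}")
```

Because the interval is fixed, the day of year of acquisition k is simply 4 + 12k.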

| Class | Subclass | Number of Parcels | Area (m²) | Average Area (m²) |
|---|---|---|---|---|
| Farmland | | 501 | 435,549.88 | 869.36 |
| Non-farmland | Urban | 98 | 683,677.30 | 6976.30 |
| | Woodland | 148 | 1,668,752.88 | 11,275.36 |
| | Grassland | 129 | 595,287.23 | 4614.63 |
| | Water | 37 | 248,308.34 | 6711.04 |
| Total | | 913 | 3,631,575.63 | 3977.63 |
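The Average Area column is the total area divided by the number of parcels. The short check below recomputes it from the table's own figures; it also makes the main point of the table explicit: farmland parcels average about 869 m², far smaller than any non-farmland class.

```python
# (class, number of parcels, total area in m², reported average area in m²),
# copied from the table above.
rows = [
    ("Farmland", 501, 435_549.88, 869.36),
    ("Urban", 98, 683_677.30, 6976.30),
    ("Woodland", 148, 1_668_752.88, 11_275.36),
    ("Grassland", 129, 595_287.23, 4614.63),
    ("Water", 37, 248_308.34, 6711.04),
]

mismatches = []
for name, n_parcels, area, reported_avg in rows:
    avg = area / n_parcels   # average parcel area in m²
    if abs(avg - reported_avg) > 0.005:   # tolerance of half a rounding unit
        mismatches.append(name)
    print(f"{name:<10} {avg:>10.2f} m²")

total_parcels = sum(r[1] for r in rows)
total_area = sum(r[2] for r in rows)
print(f"{'Total':<10} {total_area / total_parcels:>10.2f} m²")
```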

| Level 1 Geographic Area | Level 2 Farmland Type | Features |
|---|---|---|
| Plain area | Regular farmland | Regular shape, clear boundaries, uniform internal texture, and neat spatial distribution. |
| Hillside area | Terraced farmland | Small and narrow shape, clear boundaries, uniform internal texture, and dense spatial distribution. |
| | Slope farmland | Various shapes, fuzzy boundaries, rough but obvious texture, and mixed with trees and grass. |
| Forest area | Woodland farmland | Various shapes, fuzzy boundaries, grass-like texture, and scattered between woodland and grassland. |

| Stage | Farmland PA | Farmland UA | Farmland F1 | Non-farmland PA | Non-farmland UA | Non-farmland F1 | OA |
|---|---|---|---|---|---|---|---|
| Stage 1 | 0.8899 | 0.8882 | 0.8829 | 0.8698 | 0.8716 | 0.8653 | 0.8747 |
| Stage 2 | 0.9638 | 0.8307 | 0.8930 | 0.7715 | 0.9482 | 0.8515 | 0.8756 |
| Stage 3 | 0.8584 | 0.8203 | 0.8389 | 0.9744 | 0.9806 | 0.9775 | 0.9605 |

| Variables | Farmland PA | Farmland UA | Farmland F1 | Non-farmland PA | Non-farmland UA | Non-farmland F1 | OA |
|---|---|---|---|---|---|---|---|
| VV + VH + H + A + α | 0.8902 | 0.7880 | 0.8360 | 0.7087 | 0.8415 | 0.7694 | 0.8083 |
| VV + VH | 0.9002 | 0.7631 | 0.8260 | 0.6602 | 0.8447 | 0.7411 | 0.7918 |
| H + A + α | 0.8902 | 0.7508 | 0.8146 | 0.6408 | 0.8276 | 0.7223 | 0.7776 |
| α | 0.8802 | 0.7449 | 0.8070 | 0.6335 | 0.8131 | 0.7121 | 0.7689 |
| H | 0.8842 | 0.7347 | 0.8025 | 0.6117 | 0.8129 | 0.6981 | 0.7612 |
| VH | 0.8463 | 0.7452 | 0.7925 | 0.6481 | 0.7762 | 0.7063 | 0.7568 |
| A | 0.8503 | 0.7435 | 0.7933 | 0.6432 | 0.7794 | 0.7048 | 0.7568 |
| VV | 0.8822 | 0.7038 | 0.7830 | 0.5485 | 0.7930 | 0.6485 | 0.7317 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, W.; Wang, J.; Luo, J.; Wu, Z.; Chen, J.; Zhou, Y.; Sun, Y.; Shen, Z.; Xu, N.; Yang, Y. Farmland Parcel Mapping in Mountain Areas Using Time-Series SAR Data and VHR Optical Images. Remote Sens. 2020, 12, 3733. https://doi.org/10.3390/rs12223733