Moreau-Enhanced Total Variation and Subspace Factorization for Hyperspectral Denoising

Abstract

1. Introduction

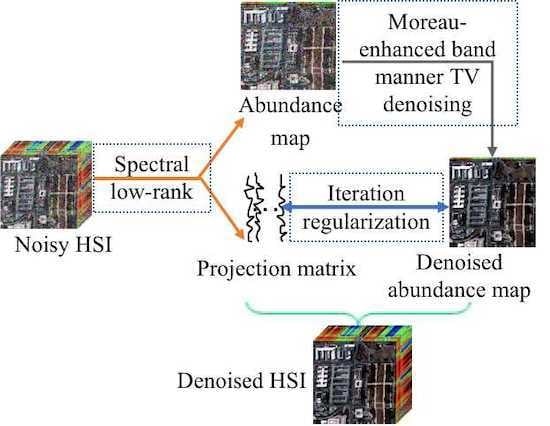

- The low-rank structure of HSI data is exploited via subspace representation, realized by decomposing the HSI into the product of a projection matrix and abundance maps. To separate out the noise, the projection matrix is constrained to be orthogonal.

- Moreau-enhanced TV, a non-convex regularizer, is applied to each abundance map; it is expected to exploit spatial information more effectively and thus further improve denoising performance. Furthermore, subspace representation and the -norm are incorporated into our denoising framework.

- An algorithm based on the augmented Lagrangian method (ALM) is designed to solve the proposed model. Extensive experiments confirm that our method offers excellent recovery performance compared with many popular HSI denoising methods. More importantly, the proposed algorithm requires less computation time than most of the compared methods.
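
The subspace representation in the first contribution can be illustrated with a minimal NumPy sketch. It assumes the H × W × B cube has been unfolded into a B × N matrix Y (N = H·W pixels) and uses a truncated SVD as a stand-in for HySime [48], which the method uses to estimate the projection matrix. The second helper is the standard orthogonal Procrustes solution, one way to keep A orthonormal when it is re-estimated for a fixed M; it is not necessarily the update of Equation (22). The function names `subspace_factorize` and `update_projection` are hypothetical.

```python
import numpy as np


# Hypothetical helpers; truncated SVD replaces HySime here (assumption).
def subspace_factorize(Y, k):
    """Factor a B x N matrix Y (bands x pixels) as Y ~ A @ M with A^T A = I_k.

    The columns of A are the k leading left singular vectors of Y.
    """
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    A = U[:, :k]   # B x k projection matrix with orthonormal columns
    M = A.T @ Y    # k x N abundance maps: least-squares fit, since A^T A = I
    return A, M


def update_projection(Y, M):
    """Orthogonal Procrustes: argmin_A ||Y - A @ M||_F subject to A^T A = I."""
    U, _, Vt = np.linalg.svd(Y @ M.T, full_matrices=False)
    return U @ Vt  # polar factor of Y M^T: B x k with orthonormal columns


# Usage on a synthetic rank-3 cube of 20 x 20 pixels and 50 bands.
rng = np.random.default_rng(0)
H, W, B, k = 20, 20, 50, 3
clean = (rng.standard_normal((H * W, k)) @ rng.standard_normal((k, B))).reshape(H, W, B)
noisy = clean + 0.1 * rng.standard_normal((H, W, B))

Y = noisy.reshape(-1, B).T        # unfold H x W x B into B x (H*W)
A, M = subspace_factorize(Y, k)
X = (A @ M).T.reshape(H, W, B)    # fold the low-rank estimate back into a cube
A_new = update_projection(Y, M)   # re-estimate A with M held fixed
```

Because A has orthonormal columns, M = AᵀY is the least-squares abundance estimate, and projecting onto the k-dimensional signal subspace discards the noise energy that lies outside it.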

2. Background Formulation

2.1. Problem Formulation

2.2. Global Low-Rank Matrix Factorization

3. Proposed Method

3.1. Moreau-Enhanced TV Restoration Model

3.2. Subspace-Based Moreau-Enhanced TV Restoration Model

3.3. Optimization Procedure

| Algorithm 1: SMTVSF algorithm. |
|---|
| Require: The degraded HSI Y, the dimension of subspace k, and the model parameters |
| Return: Denoised HSI X |
| 1: Initialize: S = 0; |
| 2: Estimate projection matrix A from Y via HySime [48]; |
| 3: Compute abundance maps M; |
| 4: Denoise M via ‘Moreau-enhanced’ TV regularization; |
| 5: Repeat until convergence |
| 6: Update S as Equation (19) |
| 7: Update M as Equation (21) |
| 8: Update A as Equation (22) |
| 9: Obtain the denoised HSI X = AM. |
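
Step 4 of Algorithm 1 can be illustrated with a minimal NumPy sketch of Moreau-enhanced TV denoising for a single 2-D abundance map. It follows the forward-backward iteration given by Selesnick [50], x ← tvd(y + λα(x − tvd(x, 1/α)), λ), where tvd(·, λ) is ordinary TV denoising with weight λ. Everything else is an assumption rather than the paper's setting: anisotropic TV, an inexact tvd computed by projected gradient on the dual problem, fixed iteration counts, illustrative values of λ and α, and the helper names `grad`, `grad_adj`, `tvd` and `mtv_denoise`, which are hypothetical.

```python
import numpy as np

# Sketch only: anisotropic TV, inexact dual solver, illustrative lam/alpha,
# hypothetical helper names (all assumptions, not the paper's settings).


def grad(x):
    """Forward differences of a 2-D map (zero at the last row/column)."""
    gh = np.zeros_like(x)
    gv = np.zeros_like(x)
    gh[:, :-1] = x[:, 1:] - x[:, :-1]
    gv[:-1, :] = x[1:, :] - x[:-1, :]
    return gh, gv


def grad_adj(ph, pv):
    """Adjoint operator D^T of grad, applied to a pair of dual fields."""
    out = np.zeros_like(ph)
    out[:, :-1] -= ph[:, :-1]
    out[:, 1:] += ph[:, :-1]
    out[:-1, :] -= pv[:-1, :]
    out[1:, :] += pv[:-1, :]
    return out


def tvd(y, lam, n_iter=100):
    """Approximate anisotropic TV denoising,
    argmin_x 0.5*||y - x||^2 + lam*||D x||_1,
    by projected gradient on the dual with step 1/8 (||D||^2 <= 8)."""
    ph = np.zeros_like(y)
    pv = np.zeros_like(y)
    for _ in range(n_iter):
        gh, gv = grad(y - grad_adj(ph, pv))      # D x for the current primal x
        ph = np.clip(ph + gh / 8.0, -lam, lam)   # project onto |p| <= lam
        pv = np.clip(pv + gv / 8.0, -lam, lam)
    return y - grad_adj(ph, pv)


def mtv_denoise(y, lam, alpha, n_outer=30):
    """Moreau-enhanced TV denoising via forward-backward iterations:
        z = y + lam*alpha*(x - tvd(x, 1/alpha)),   x = tvd(z, lam).
    The overall cost stays convex for 0 <= alpha <= 1/lam; this code needs alpha > 0."""
    assert 0 < alpha <= 1.0 / lam
    x = y.copy()
    for _ in range(n_outer):
        z = y + lam * alpha * (x - tvd(x, 1.0 / alpha))  # gradient step on smooth part
        x = tvd(z, lam)                                  # prox step on lam*||D x||_1
    return x


# Usage on one synthetic piecewise-constant "abundance map".
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[8:24, 8:24] = 1.0
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
denoised = mtv_denoise(noisy, lam=0.1, alpha=5.0)   # lam * alpha = 0.5
```

Keeping λα ≤ 1 makes the overall cost convex even though the regularizer itself is non-convex. Setting α = 0 in the formula recovers ordinary TV denoising, while larger α penalizes large jumps less, which is the motivation for the Moreau-enhanced penalty.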

4. Experiment Results

4.1. Simulated Experiments

4.2. Real Experiments

4.3. Parameter Sensitivity

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B

References

- Goetz, A.F.H. Three decades of hyperspectral remote sensing of the earth: A personal view. Remote Sens. Environ. 2009, 113, S5–S16.

- Willett, R.M.; Duarte, M.F.; Davenport, M.A.; Baraniuk, R.G. Sparsity and structure in hyperspectral imaging: Sensing, reconstruction, and target detection. IEEE Signal Process. Mag. 2014, 31, 116–126.

- Peng, Y.; Meng, D.; Xu, Z.; Gao, C.; Yang, Y.; Zhang, B. Decomposable nonlocal tensor dictionary learning for multispectral image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2949–2956.

- Wu, Z.; Shi, L.; Li, J.; Wang, Q.; Sun, L.; Wei, Z.; Plaza, J.; Plaza, A. GPU parallel implementation of spatially adaptive hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1131–1143.

- Feng, R.; Wang, L.; Zhong, Y. Joint Local Block Grouping with Noise-Adjusted Principal Component Analysis for Hyperspectral Remote-Sensing Imagery Sparse Unmixing. Remote Sens. 2019, 11, 1223.

- Sun, L.; Wu, Z.; Xiao, L.; Liu, J.; Wei, Z.; Dang, F. A novel ℓ1/2 sparse regression method for hyperspectral unmixing. Int. J. Remote Sens. 2013, 34, 6983–7001.

- Xu, Y.; Wu, Z.; Li, J.; Plaza, A.; Wei, Z. Anomaly detection in hyperspectral images based on low-rank and sparse representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1990–2000.

- Zhang, L.; Zhang, Y.; Yan, H.; Gao, Y.; Wei, W. Salient object detection in hyperspectral imagery using multi-scale spectral-spatial gradient. Neurocomputing 2018, 291, 215–225.

- Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

- Yuan, Q.; Zhang, L.; Shen, H. Hyperspectral image denoising employing a spectral-spatial adaptive total variation model. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3660–3677.

- Zhang, H. Hyperspectral image denoising with cubic total variation model. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 7, 95–98.

- Qian, Y.; Ye, M. Hyperspectral imagery restoration using nonlocal spectral-spatial structured sparse representation with noise estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 499–515.

- Letexier, D.; Bourennane, S. Multidimensional Wiener filtering using fourth order statistics of hyperspectral images. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 917–920.

- Maggioni, M.; Katkovnik, V.; Egiazarian, K.; Foi, A. Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans. Image Process. 2013, 22, 119–133.

- Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM 2011, 58, 1–37.

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Hyperspectral Image Denoising via Noise-Adjusted Iterative Low-Rank Matrix Approximation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3050–3061.

- Xie, Y.; Qu, Y.; Tao, D.; Wu, W.; Yuan, Q.; Zhang, W. Hyperspectral image restoration via iteratively regularized weighted Schatten p-norm minimization. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4642–4659.

- Chen, Y.; Guo, Y.; Wang, Y.; Wang, D.; Peng, C.; He, G. Denoising of hyperspectral images using nonconvex low rank matrix approximation. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5366–5380.

- Sun, L.; Jeon, B.; Zheng, Y.; Wu, Z. Hyperspectral image restoration using low-rank representation on spectral difference image. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1151–1155.

- Song, X.; Wu, L.; Hao, H.; Xu, W. Hyperspectral Image Denoising Based on Spectral Dictionary Learning and Sparse Coding. Electronics 2019, 8, 86.

- Fan, F.; Ma, Y.; Li, C.; Mei, X.; Huang, J.; Ma, J. Hyperspectral image denoising with superpixel segmentation and low-rank representation. Inf. Sci. 2017, 397/398, 48–68.

- Zhu, R.; Dong, M.; Xue, J.H. Spectral Nonlocal Restoration of Hyperspectral Images With Low-Rank Property. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3062–3067.

- Guo, X.; Huang, X.; Zhang, L.; Zhang, L. Hyperspectral image noise reduction based on rank-1 tensor decomposition. ISPRS J. Photogramm. Remote Sens. 2013, 83, 50–63.

- Xie, Q.; Zhao, Q.; Meng, D.; Xu, Z.; Gu, S.; Zuo, W.; Zhang, L. Multispectral Images Denoising by Intrinsic Tensor Sparsity Regularization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1692–1700.

- Wu, Z.; Wang, Q.; Jin, J.; Shen, Y. Structure tensor total variation-regularized weighted nuclear norm minimization for hyperspectral image mixed denoising. Signal Process. 2017, 131, 202–219.

- Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.-W. Nonlocal Low-Rank Regularized Tensor Decomposition for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5174–5189.

- Kong, X.; Zhao, Y.; Xue, J.; Chan, J.C.-W. Hyperspectral Image Denoising Using Global Weighted Tensor Norm Minimum and Nonlocal Low-Rank Approximation. Remote Sens. 2019, 11, 2281.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Total-variation-regularized low-rank matrix factorization for hyperspectral image restoration. IEEE Trans. Geosci. Remote Sens. 2016, 54, 178–188.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

- Wu, Z.; Wang, Q.; Wu, Z.; Shen, Y. Total variation-regularized weighted nuclear norm minimization for hyperspectral image mixed denoising. J. Electron. Imag. 2016, 25, 1–21.

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted Nuclear Norm Minimization with Application to Image Denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

- Sun, L.; Zhan, T.; Wu, Z.; Jeon, B. A Novel 3D Anisotropic Total Variation Regularized Low Rank Method for Hyperspectral Image Mixed Denoising. ISPRS Int. J. Geo-Inf. 2018, 7, 412.

- Aggarwal, H.K.; Majumdar, A. Hyperspectral Image Denoising Using Spatio-Spectral Total Variation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 442–446.

- He, W.; Zhang, H.; Shen, H.; Zhang, L. Hyperspectral Image Denoising Using Local Low-Rank Matrix Recovery and Global Spatial–Spectral Total Variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 713–729.

- Li, Q.; Zhong, R.; Wang, Y. A Method for the Destriping of an Orbita Hyperspectral Image with Adaptive Moment Matching and Unidirectional Total Variation. Remote Sens. 2019, 11, 2098.

- Lefkimmiatis, S.; Roussos, A.; Maragos, P.; Unser, M. Structure tensor total variation. SIAM J. Imaging Sci. 2015, 8, 1090–1122.

- Zhuang, L.; Bioucas-Dias, J.M. Fast Hyperspectral image Denoising based on low rank and sparse representations. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1847–1850.

- Zhuang, L.; Bioucas-Dias, J.M. Hyperspectral image denoising based on global and non-local low-rank factorizations. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1900–1904.

- He, W.; Yao, Q.; Li, C.; Yokoya, N.; Zhao, Q. Non-local Meets Global: An Integrated Paradigm for Hyperspectral Denoising. arXiv 2018, arXiv:1812.04243.

- Yang, Y.; Zheng, J.; Chen, S.; Zhang, M. Hyperspectral image restoration via local low-rank matrix recovery and Moreau-enhanced total variation. IEEE Geosci. Remote Sens. Lett. 2019.

- Qian, Y.; Shen, Y.; Ye, M.; Wang, Q. 3D nonlocal means filter with noise estimation for hyperspectral imagery denoising. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 1345–1348.

- Xue, J.; Zhao, Y.; Liao, W.; Kong, S.G. Joint Spatial and Spectral Low-Rank Regularization for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1940–1958.

- Zhuang, L.; Bioucas-Dias, J.M. Fast hyperspectral image denoising and inpainting based on low-rank and sparse representations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 730–742.

- Rasti, B.; Scheunders, P.; Ghamisi, P.; Licciardi, G.; Chanussot, J. Noise reduction in hyperspectral imagery: Overview and application. Remote Sens. 2018, 10, 482.

- Bioucas-Dias, J.M.; Nascimento, J.M. Hyperspectral Subspace Identification. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2435–2445.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011; pp. 561–575.

- Selesnick, I. Total Variation Denoising Via the Moreau Envelope. IEEE Signal Process. Lett. 2017, 24, 216–220.

- He, W.; Zhang, H.; Zhang, L. Total variation regularized reweighted sparse nonnegative matrix factorization for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3909–3921.

- Lin, Z.; Chen, M.; Ma, Y. The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

- Yang, J.; Yin, W.; Zhang, Y.; Wang, Y. A Fast Algorithm for Edge-Preserving Variational Multichannel Image Restoration. SIAM J. Imaging Sci. 2009, 2, 569–592.

- A Freeware Multispectral Image Data Analysis System. Available online: https://engineering.purdue.edu/~biehl/MultiSpec/hyperspectral.html (accessed on 30 December 2019).

- Hyperspectral Remote Sensing Scenes. Available online: http://www.ehu.es/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 30 December 2019).

- AVIRIS Indian Pines Dataset. Available online: http://www.ehu.eus/ccwintco/index.php (accessed on 15 May 2019).

- HYDICE Urban Dataset. Available online: https://www.researchgate.net/publication/315725239_Code_of_NonLRMA (accessed on 15 May 2019).

- Combettes, P.L.; Pesquet, J.-C. Proximal splitting methods in signal processing. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Bauschke, H.H., Burachik, R.S., Combettes, P.L., Elser, V., Luke, D.R., Wolkowicz, H., Eds.; Springer: New York, NY, USA, 2011; Volume 49, pp. 185–212.

- Cai, J.F.; Candès, E.J.; Shen, Z. A Singular Value Thresholding Algorithm for Matrix Completion. SIAM J. Optim. 2008, 20, 1956–1982.

| Data | Noise Case | Metrics | Noisy | LRMR | LRTV | FastHyDe | GLF | NM-Meet | LLRGTV | LLRMTV | STVSF | SMTVSF |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| WDC | Case 1 | MPSNR (dB) | 26.02 | 39.04 | 38.27 | 40.54 | 40.82 | 39.90 | 40.51 | 39.93 | 40.22 | 41.43 |
| | | MSSIM | 0.6929 | 0.9774 | 0.9758 | 0.9859 | 0.9878 | 0.9824 | 0.9826 | 0.9865 | 0.9850 | 0.9886 |
| | | ERGAS | 194.29 | 42.63 | 46.71 | 37.08 | 36.18 | 40.65 | 35.93 | 40.66 | 38.24 | 33.86 |
| | Case 2 | MPSNR (dB) | 15.86 | 34.61 | 34.68 | 30.41 | 30.54 | 29.03 | 35.85 | 35.63 | 34.46 | 36.27 |
| | | MSSIM | 0.2869 | 0.9427 | 0.9430 | 0.9148 | 0.9163 | 0.8440 | 0.9531 | 0.9416 | 0.9421 | 0.9552 |
| | | ERGAS | 632.75 | 69.58 | 69.22 | 128.60 | 128.34 | 149.90 | 60.21 | 69.44 | 70.81 | 65.91 |
| | Case 3 | MPSNR (dB) | 14.16 | 33.53 | 34.81 | 27.00 | 27.53 | 26.80 | 36.85 | 36.31 | 35.50 | 37.20 |
| | | MSSIM | 0.2335 | 0.9360 | 0.9509 | 0.8646 | 0.8797 | 0.8545 | 0.9606 | 0.9557 | 0.9531 | 0.9731 |
| | | ERGAS | 843.87 | 89.40 | 74.11 | 218.27 | 214.35 | 227.83 | 63.91 | 62.44 | 68.79 | 48.67 |
| | Case 4 | MPSNR (dB) | 13.87 | 33.38 | 34.41 | 26.11 | 26.56 | 26.05 | 34.81 | 35.09 | 33.73 | 35.58 |
| | | MSSIM | 0.2285 | 0.9356 | 0.9447 | 0.8416 | 0.8579 | 0.8432 | 0.9505 | 0.9487 | 0.9300 | 0.9550 |
| | | ERGAS | 856.52 | 99.61 | 79.69 | 244.56 | 236.70 | 238.96 | 140.05 | 85.05 | 183.90 | 74.31 |
| | | Time (s) | | 240 | 103 | 14 | 807 | 95 | 672 | 727 | 18 | 44 |
| Pavia | Case 1 | MPSNR (dB) | 26.02 | 38.49 | 38.96 | 40.82 | 41.58 | 39.02 | 39.27 | 39.10 | 40.21 | 41.42 |
| | | MSSIM | 0.7209 | 0.9758 | 0.9775 | 0.9864 | 0.9886 | 0.9787 | 0.9795 | 0.9798 | 0.9844 | 0.9898 |
| | | ERGAS | 184.54 | 44.89 | 43.44 | 33.92 | 31.01 | 42.78 | 40.90 | 40.15 | 36.25 | 30.28 |
| | Case 2 | MPSNR (dB) | 15.86 | 33.20 | 34.62 | 29.89 | 29.95 | 26.94 | 34.14 | 34.33 | 34.10 | 35.03 |
| | | MSSIM | 0.2858 | 0.9319 | 0.9426 | 0.9084 | 0.9104 | 0.7618 | 0.9388 | 0.9454 | 0.9297 | 0.9595 |
| | | ERGAS | 596.67 | 81.63 | 73.85 | 119.68 | 117.78 | 187.20 | 73.48 | 71.66 | 77.50 | 69.23 |
| | Case 3 | MPSNR (dB) | 13.99 | 32.46 | 33.87 | 26.09 | 26.70 | 24.65 | 34.39 | 34.85 | 33.90 | 34.77 |
| | | MSSIM | 0.2200 | 0.9202 | 0.9365 | 0.8462 | 0.8715 | 0.7549 | 0.9478 | 0.9441 | 0.9357 | 0.9458 |
| | | ERGAS | 796.17 | 99.36 | 101.87 | 200.54 | 189.66 | 269.95 | 78.56 | 82.76 | 81.32 | 68.17 |
| | Case 4 | MPSNR (dB) | 13.80 | 31.21 | 33.88 | 24.75 | 25.52 | 24.30 | 34.16 | 34.22 | 33.37 | 34.56 |
| | | MSSIM | 0.2371 | 0.9158 | 0.9276 | 0.7880 | 0.8393 | 0.7595 | 0.9599 | 0.9537 | 0.9584 | 0.9634 |
| | | ERGAS | 824.19 | 140.04 | 155.72 | 251.02 | 236.27 | 284.28 | 82.01 | 86.12 | 85.50 | 74.55 |
| | | Time (s) | | 67 | 21 | 8 | 474 | 52 | 33 | 115 | 4 | 22 |
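
The MPSNR and ERGAS indices in the table can be sketched in NumPy for H × W × B cubes using their common definitions. The peak value of 1.0 (data scaled to [0, 1]) and the ERGAS resolution ratio of 1.0 are assumptions, and the exact normalization behind the reported numbers is not given here, so the sketch is not expected to reproduce them. MSSIM, usually the band-averaged SSIM, is omitted to keep the sketch dependency-free; scikit-image provides `skimage.metrics.structural_similarity` for that purpose.

```python
import numpy as np

# Assumes data scaled so that peak = 1.0, and ERGAS ratio = 1.0 for denoising.


def mpsnr(ref, est, peak=1.0):
    """Mean PSNR over bands for H x W x B cubes, using a common peak value."""
    mse = np.mean((ref - est) ** 2, axis=(0, 1))  # one MSE per band
    return float(np.mean(10.0 * np.log10(peak ** 2 / mse)))


def ergas(ref, est, ratio=1.0):
    """ERGAS = 100 * ratio * sqrt(mean over bands of (RMSE_b / mean_b)^2).

    ratio is the resolution ratio used in fusion; 1.0 is assumed for denoising."""
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(0, 1)))  # per-band RMSE
    band_mean = np.mean(ref, axis=(0, 1))                    # per-band reference mean
    return float(100.0 * ratio * np.sqrt(np.mean((rmse / band_mean) ** 2)))


ref = np.ones((4, 4, 3))
est = np.full((4, 4, 3), 0.9)
print(mpsnr(ref, est), ergas(ref, est))
```

For a reference cube of ones and an estimate equal to 0.9 everywhere, the two functions return 20 dB and 10, up to floating-point rounding.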

| HSI Data | LRMR | LRTV | FastHyDe | GLF | NM-Meet | LLRGTV | LLRMTV | SMTVSF |
|---|---|---|---|---|---|---|---|---|
| Indian Pines | 74 | 28 | 6 | 273 | 25 | 220 | 76 | 20 |
| HYDICE Urban | 149 | 51 | 10 | 475 | 56 | 463 | 108 | 24 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, Y.; Chen, S.; Zheng, J. Moreau-Enhanced Total Variation and Subspace Factorization for Hyperspectral Denoising. Remote Sens. 2020, 12, 212. https://doi.org/10.3390/rs12020212


Yang, Yanhong, Shengyong Chen, and Jianwei Zheng. 2020. "Moreau-Enhanced Total Variation and Subspace Factorization for Hyperspectral Denoising" Remote Sensing 12, no. 2: 212. https://doi.org/10.3390/rs12020212