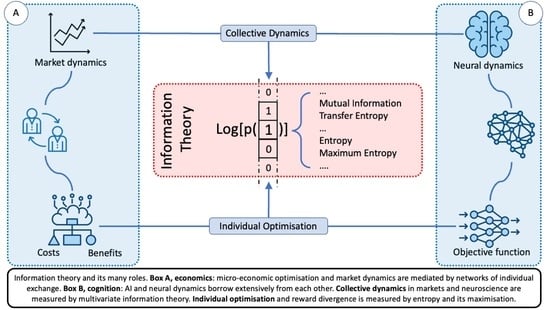

Information Theory for Agents in Artificial Intelligence, Psychology, and Economics

Abstract

1. Introduction

It is not widely understood, however, that the tools made available by communication theory are useful for analyzing data, whether or not we believe that the human organism is best described as a communications system.

In early experiments, mean response time appeared to grow linearly with uncertainty, but glitches soon became evident. The deepest push in the response time direction was Donald Laming’s (1968) subtle Information Theory of Choice-Reaction Times, although he later stated: “This idea does not work. While my own data (1968) might suggest otherwise, there are further unpublished results that show it to be hopeless” (Laming, 2001, p. 642).
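The linear relation described in this passage is usually called the Hick–Hyman law: mean response time is modelled as RT ≈ a + b·H, where H is the stimulus uncertainty in bits (log2 n for n equally likely alternatives). The sketch below computes H and the predicted RT. The intercept `a` and slope `b` are hypothetical values chosen only for illustration. They are not fitted to any data discussed in this article.

```python
import math

def stimulus_entropy(probs):
    """Shannon entropy (bits) of the stimulus distribution."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def hick_hyman_rt(probs, a=0.2, b=0.15):
    """Predicted mean response time in seconds: a + b * H.

    a (intercept, s) and b (s per bit) are hypothetical illustrative values.
    """
    return a + b * stimulus_entropy(probs)

# Equally likely alternatives: H = log2(n), so predicted RT rises linearly in log2(n).
for n in (1, 2, 4, 8):
    uniform = [1.0 / n] * n
    print(n, round(stimulus_entropy(uniform), 3), round(hick_hyman_rt(uniform), 3))

# Unequal probabilities lower H below log2(n), and with it the predicted RT.
print(round(stimulus_entropy([0.9, 0.1]), 3))
```

The "glitches" in the text are departures from this straight line that were observed in data. The sketch encodes only the idealised law.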

The enthusiasm—nay, faddishness—of the times is hard to capture now. Many felt that a very deep truth of the mind had been uncovered. Yet, Shannon was skeptical [...] Myron Tribus (1979, p. 1) wrote: “In 1961 Professor Shannon, in a private conversation, made it quite clear to me that he considered applications of his work to problems outside of communication theory to be suspect and he did not attach fundamental significance to them.” These skeptical views strike me as appropriate.

However, in my opinion, the most important answer lies in the following incompatibility between psychology and information theory. The elements of choice in information theory are absolutely neutral and lack any internal structure; the probabilities are on a pure, unstructured set whose elements are functionally interchangeable.
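Luce's point that the elements of choice are "functionally interchangeable" can be shown directly. Shannon entropy depends only on the multiset of probabilities. Reassigning those probabilities among psychologically very different stimuli therefore leaves it unchanged. The stimulus labels below are hypothetical.

```python
import math
from itertools import permutations

def entropy_bits(dist):
    """Shannon entropy (bits) of a dict mapping outcome label -> probability."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)

labels = ["red light", "loud tone", "pictured face"]  # hypothetical stimuli
probs = [0.5, 0.25, 0.25]

# Assign the same probabilities to the stimuli in every possible way.
values = set()
for perm in permutations(probs):
    dist = dict(zip(labels, perm))
    values.add(round(entropy_bits(dist), 12))

print(values)  # {1.5}: one value, whatever the stimuli are
```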

2. Economic Rationality and Expected Utility

Economists after Bentham [1748–1832] became increasingly uncomfortable, not only with the naïve hypothesis that our brains are machines for generating utility, but with all attempts to base economics on psychological foundations. The theory of revealed preference therefore makes a virtue of assuming nothing whatever about the psychological causes of our choice behavior [...] Modern decision theory succeeds in accommodating the infinite variety of the human race within a single theory simply by denying itself the luxury of speculating about what is going on inside someone’s head. Instead, it pays attention only to what people do. ([52] Chapter 1: Revealed Preferences)

In revealed-preference theory, it isn’t true that [an agent] chooses b rather than a because the utility of b exceeds the utility of a. This is the Causal Utility Fallacy. It isn’t even true that [an agent] chooses b rather than a because she prefers b to a. On the contrary, it is because [an agent] chooses b rather than a that we say that [they] prefer b to a, and assign b a larger utility. ([52] Chapter 1: Revealed Preferences)
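On the revealed-preference reading, utility is a bookkeeping device constructed from observed choices. It is not a cause of them. The sketch below assumes choices are recorded as (chosen, rejected) pairs over a finite set of hypothetical options. If the revealed relation has no cycles, any topological order yields an ordinal utility consistent with every observed choice. If there is a cycle, no such utility exists. The function name `revealed_utility` is hypothetical, and the standard-library `graphlib` module requires Python 3.9 or later.

```python
from graphlib import CycleError, TopologicalSorter

def revealed_utility(choices):
    """Build an ordinal utility from observed (chosen, rejected) pairs.

    Returns a dict option -> integer rank in which every chosen option gets a
    strictly larger number than the option it was chosen over, or None if the
    observed choices contain a cycle (no consistent utility exists).
    """
    graph = {}
    for chosen, rejected in choices:
        # 'rejected' is a predecessor of 'chosen': it must be ranked lower.
        graph.setdefault(chosen, set()).add(rejected)
        graph.setdefault(rejected, set())
    try:
        order = list(TopologicalSorter(graph).static_order())
    except CycleError:
        return None
    return {option: rank for rank, option in enumerate(order)}

consistent = [("b", "a"), ("c", "b")]          # b chosen over a, c chosen over b
cyclic = [("b", "a"), ("c", "b"), ("a", "c")]  # intransitive choices
print(revealed_utility(consistent))
print(revealed_utility(cyclic))
```

The numbers carry only order information. Any strictly increasing relabelling of them describes the same choices equally well, which is the sense in which utility is assigned *because* of the choices rather than the other way round.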

The rationality of expected utility theory requires that an agent’s preferences satisfy Axioms 1–4, that they are stable over time, and that they are revealed through the agent’s behaviour. From these premises an observer can infer, by Savage’s theorems, that the agent’s preferences can be represented by a numerical utility function in the form of Equation (1) and that the agent’s behaviour is optimal with respect to this inferred utility function. However, this does not mean that a utility function exists or that the agent uses one to optimise their decisions. Optimisation is inferred from the consistency of preferences, and the agent only behaves as though a utility function exists and as though they are using it to optimise the choices they make.
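Equation (1) is not reproduced in this section. The sketch below assumes it has the standard subjective expected utility form $EU(f) = \sum_s p(s)\,u(f(s))$, where an act $f$ maps states $s$ to outcomes. It plays the role of an observer who has already inferred $p$ and $u$ and predicts that the agent chooses the act with the highest expected utility. It also shows why the inferred utility is only an "as if" construct. A positive affine rescaling $a\,u + b$ with $a > 0$ predicts exactly the same choices, so no particular numerical scale is singled out. The states, acts, probabilities and utilities are hypothetical.

```python
def expected_utility(act, prob, utility):
    """Sum over states of p(s) * u(outcome the act yields in s)."""
    return sum(prob[s] * utility[act[s]] for s in prob)

def predicted_choice(acts, prob, utility):
    """The act an expected-utility maximiser is predicted to choose."""
    return max(acts, key=lambda name: expected_utility(acts[name], prob, utility))

# Hypothetical small world: two states, two acts mapping states to outcomes.
prob = {"rain": 0.3, "dry": 0.7}
acts = {
    "take umbrella": {"rain": "dry and encumbered", "dry": "dry and encumbered"},
    "leave umbrella": {"rain": "wet", "dry": "dry and free"},
}
utility = {"dry and encumbered": 0.8, "wet": 0.0, "dry and free": 1.0}

# A positive affine transformation represents the same preferences.
rescaled = {outcome: 3.0 * u + 7.0 for outcome, u in utility.items()}

print(predicted_choice(acts, prob, utility))
print(predicted_choice(acts, prob, rescaled))
```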

3. Free Energy

3.1. Free Energy in Physics

3.2. Free Utility in Economics

- Using MaxEnt to form constrained distributions over decision variables is compatible with Bayes optimal decisions,

- It brings maximising the entropy into correspondence with maximising the expected utility when the utility is a log-loss function,

- These methods can be generalised to finding other optimal, constrained strategies using a variety of loss functions.
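Two standard facts make the correspondence in this list concrete. The notation here is an assumption and may differ from the article's own equations. First, consider all distributions $p$ over a finite set of actions. The one that maximises a "free utility" $\mathbb{E}_p[U] + \frac{1}{\beta}H(p)$ (entropy in nats) is the Gibbs, or logit, distribution $p^*(a) \propto e^{\beta U(a)}$. Its maximal value is $\frac{1}{\beta}\ln\sum_a e^{\beta U(a)}$. Second, take the log-loss utility $\ln q(x)$ for reporting a distribution $q$ when $x \sim p$. Its expected value is maximised by the truthful report $q = p$, where it equals $-H(p)$ (Gibbs' inequality). The utilities, $\beta$ and $p$ below are hypothetical.

```python
import math
import random

def entropy_nats(p):
    return sum(-pi * math.log(pi) for pi in p if pi > 0)

def free_utility(p, utils, beta):
    """E_p[U] + H(p) / beta, with entropy measured in nats."""
    return sum(pi * u for pi, u in zip(p, utils)) + entropy_nats(p) / beta

def gibbs(utils, beta):
    """Softmax (logit) choice distribution: p(a) proportional to exp(beta * U(a))."""
    m = max(utils)  # subtract the maximum for numerical stability
    w = [math.exp(beta * (u - m)) for u in utils]
    z = sum(w)
    return [wi / z for wi in w]

utils = [1.0, 0.5, 0.0]  # hypothetical utilities of three actions
beta = 2.0               # hypothetical inverse temperature (degree of rationality)

p_star = gibbs(utils, beta)
log_partition = math.log(sum(math.exp(beta * u) for u in utils)) / beta
print(p_star, free_utility(p_star, utils, beta), log_partition)

# No randomly drawn distribution achieves a higher free utility.
rng = random.Random(0)
for _ in range(1000):
    w = [rng.random() for _ in utils]
    total = sum(w)
    q = [wi / total for wi in w]
    assert free_utility(q, utils, beta) <= free_utility(p_star, utils, beta) + 1e-12

def expected_log_score(p, q):
    """Expected log-loss utility E_p[ln q(X)] of reporting q when X ~ p."""
    return sum(pi * math.log(qi) for pi, qi in zip(p, q))

p = [0.6, 0.3, 0.1]
print(expected_log_score(p, p), -entropy_nats(p))  # truthful report: equals -H(p)
print(expected_log_score(p, [1 / 3, 1 / 3, 1 / 3]))  # any other report scores lower
```

As $\beta \to \infty$ the Gibbs distribution concentrates on the utility-maximising action. As $\beta \to 0$ it approaches the maximum-entropy (uniform) distribution. This is one way of reading the trade-off between expected utility and entropy that the list describes.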

4. The Logic of Jaynes’ Learning Robot

In order to direct attention to constructive things and away from controversial irrelevancies, we shall invent an imaginary being. Its brain is to be designed by us, so that it reasons according to certain definite rules. These rules will be deduced from simple desiderata which, it appears to us, would be desirable in human brains; i.e., we think that a rational person, should he discover that he was violating one of these desiderata, would wish to revise their thinking. ([64] Chapter 1: The thinking computer)

5. Friston’s Free Energy Principle

- A finite set of observations about the external world.

- A finite set of actions A the agent can take in the external world.

- A finite set of hidden states S of the external world.

- A finite set of control states U that the agent can choose from in order to act in the external world.
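For finite sets like these, the quantity minimised under the free energy principle can be written in a generic textbook form. This is not necessarily the exact parameterisation used by Friston and colleagues. For an observation $o$ and an approximate posterior $q(s)$ over hidden states, $F(q, o) = \sum_s q(s)\,[\ln q(s) - \ln p(o, s)]$. $F$ is an upper bound on surprise, $-\ln p(o)$, and equals it exactly when $q$ is the true posterior $p(s \mid o)$. The two-state prior and likelihood below are hypothetical. Actions and control states are omitted for brevity.

```python
import math

# Hypothetical generative model: 2 hidden states, 2 possible observations.
prior = {"s0": 0.7, "s1": 0.3}           # p(s)
likelihood = {                           # p(o | s)
    "s0": {"o0": 0.9, "o1": 0.1},
    "s1": {"o0": 0.2, "o1": 0.8},
}

def joint(o, s):
    """p(o, s) = p(s) * p(o | s)."""
    return prior[s] * likelihood[s][o]

def free_energy(q, o):
    """Variational free energy F = sum_s q(s) [ln q(s) - ln p(o, s)], in nats."""
    return sum(qs * (math.log(qs) - math.log(joint(o, s))) for s, qs in q.items() if qs > 0)

def posterior(o):
    """Exact Bayesian posterior p(s | o)."""
    evidence = sum(joint(o, s) for s in prior)
    return {s: joint(o, s) / evidence for s in prior}

o = "o1"
surprise = -math.log(sum(joint(o, s) for s in prior))  # -ln p(o)
exact = posterior(o)

# F is never below the surprise; it touches it only at the exact posterior.
for q in ({"s0": 0.5, "s1": 0.5}, {"s0": 0.1, "s1": 0.9}, exact):
    print(q, round(free_energy(q, o), 4), ">=", round(surprise, 4))
```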

6. Collecting the Threads

6.1. Do Not Look under the Hood!

Thus, the assumptions of utility or profit maximization, on the one hand, and the assumption of substantive rationality, on the other, freed economics from any dependence upon psychology. ([77] p. 66)

6.2. OK, Should We Look under the Hood Anyway?

1. A good used car drives safely, economically, and comfortably. (over-simplified premise)

2. The only test of whether a used car is a good used car is whether it drives safely, economically, and comfortably. (invalidly from 1)

3. Anything one discovers by opening the hood and checking the separate components of a used car is irrelevant to its assessment. (trivially from 2)

6.3. How Large Is Our World?

There is no consensus on where such a logical prior comes from, but scholars who see Bayesianism as embodying the essence of rational learning usually argue that we should place the choice of prior at a time in the past when Alice is completely ignorant. An appeal is then sometimes made to the “Harsanyi doctrine”, which says that different rational agents independently placed in a situation of complete ignorance will necessarily formulate the same common prior (Harsanyi 1977). However, even if one accepts this doubtful principle, one is left wondering what this “rational prior” would look like.

In practice, some version of Laplace’s principle of insufficient reason is usually employed. Others take this position further by arguing that the complete ignorance assumption implies, for example, that the rational prior will maximize entropy (Jaynes and Bretthorst 2003). We are then as far from Savage’s view on constructing priors as it is possible to be. Instead of using all potentially available information in the small world to be studied in formulating a prior, one treats all such information as irrelevant.

The literature offers many examples that draw attention to the difficulties implicit in appeals to versions of Laplace’s principle. Similar objections can be made to any proposal for choosing a logical prior that depends only on the state space. Even in small worlds we seldom know the “correct” state space. In a large world, we have to update our state space as we go along—just as physicists are trying to do right now as they seek to create a theory of everything.

In brief, Bayesianism has no solid answer to the question of where logical priors come from. Attempts to apply versions of the principle of insufficient reason fail because they take for granted that the state space is somehow known a priori beyond any need for revision. The same goes for any proposal that makes the prior a function of the state space alone. However, how can Alice be so certain about the state space when she is assumed to know nothing whatever about how likely different states may be?
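A toy example illustrates the objection that a prior depending only on the state space inherits the arbitrariness of how that space is carved up. With no constraints beyond normalisation, maximising entropy gives the uniform distribution, as Laplace's principle does. The probability this assigns to a fixed event changes when the same world is described more finely. The weather partitions are hypothetical.

```python
import math
import random

def entropy(p):
    """Shannon entropy in nats."""
    return sum(-x * math.log(x) for x in p if x > 0)

def max_entropy_prior(states):
    """With only the normalisation constraint, MaxEnt gives the uniform prior."""
    return {s: 1 / len(states) for s in states}

# Check: no randomly drawn distribution on three states has higher entropy.
rng = random.Random(1)
uniform3 = list(max_entropy_prior(["a", "b", "c"]).values())
for _ in range(1000):
    w = [rng.random() for _ in range(3)]
    total = sum(w)
    assert entropy([x / total for x in w]) <= entropy(uniform3) + 1e-12

# The same event, "it stays dry", under two descriptions of the state space.
coarse = max_entropy_prior(["rain", "dry"])
fine = max_entropy_prior(["rain", "snow", "dry"])
print(coarse["dry"], fine["dry"])  # 0.5 versus 0.333...
```

Nothing in the "complete ignorance" starting point says which partition is correct, and this is the gap Binmore points to.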

Kersten et al. (2004) provide an excellent review of object perception as Bayesian inference and ask a fundamental question, ‘Where do the priors come from? Without direct input, how does image-independent knowledge of the world get put into the visual system?’ In §3(e), we answer this question and show how empirical Bayes allows priors to be learned and induced online during inference. [29]

Empirical Bayes allows these priors to be induced by sensory input, using hierarchies of backward and lateral projections that prevail in the real brain. In short, hierarchical models of representational learning are a natural choice for understanding real functional architectures and, critically, confer a necessary role on backward connections.

The problem with fully Bayesian inference is that the brain cannot construct the prior expectation and variability [...] de novo. They have to be learned and also adapted to the current experiential context. This is a solved problem in statistics and calls for empirical Bayes, in which priors are estimated from data. Empirical Bayes harnesses the hierarchical structure of a generative model, treating the estimates at one level as priors on the subordinate level (Efron & Morris 1973). This provides a natural framework within which to treat cortical hierarchies in the brain, each level providing constraints on the level below. This approach models the world as a hierarchy of systems where supraordinate causes induce and moderate changes in subordinate causes. These priors offer contextual guidance towards the most likely cause of the input.
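The empirical Bayes idea described here, in which priors are estimated from the data rather than fixed in advance, can be sketched with a two-level Gaussian model in the spirit of Efron and Morris. This is a generic statistical illustration of a method-of-moments variant, not a model of cortical hierarchies. Its assumptions are not taken from the quoted text. Each unit $i$ has an unknown mean $\theta_i \sim N(\mu, \tau^2)$ and one noisy observation $x_i \sim N(\theta_i, \sigma^2)$, with $\sigma^2 > 0$ known. The higher level ($\mu, \tau^2$) is estimated from all observations, and each unit's estimate is then shrunk towards the learned prior mean. All numbers are simulated.

```python
import random
import statistics

def empirical_bayes_means(x, sigma2):
    """Posterior means under a prior N(mu, tau2) whose parameters are estimated from x.

    Assumes a known observation noise variance sigma2 > 0.
    """
    mu_hat = statistics.fmean(x)
    # Method of moments: Var(x) = tau2 + sigma2, so tau2 = Var(x) - sigma2 (floored at 0).
    tau2_hat = max(statistics.pvariance(x) - sigma2, 0.0)
    shrink = sigma2 / (sigma2 + tau2_hat)  # weight given to the learned prior mean
    return [mu_hat + (1 - shrink) * (xi - mu_hat) for xi in x]

rng = random.Random(42)
mu, tau, sigma = 10.0, 1.0, 2.0                   # hypothetical simulation settings
theta = [rng.gauss(mu, tau) for _ in range(200)]  # true unit-level means
x = [rng.gauss(t, sigma) for t in theta]          # one noisy observation per unit

eb = empirical_bayes_means(x, sigma ** 2)
mse_raw = statistics.fmean((xi - t) ** 2 for xi, t in zip(x, theta))
mse_eb = statistics.fmean((ei - t) ** 2 for ei, t in zip(eb, theta))
print(round(mse_raw, 3), round(mse_eb, 3))  # shrinkage typically cuts the error sharply
```

The estimates at the upper level ($\hat\mu, \hat\tau^2$) act as the prior for the level below, which is the structure the quoted passage describes.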

At the end of the story, the situation is as envisaged by Bayesianites: the final “massaged” posteriors can indeed be formally deduced from a final “massaged” prior using Bayes’ rule. This conclusion is guaranteed by the use of a complex adjustment process that operates until consistency is achieved. [...]

Notice that it is not true in this story that [an agent] is learning when the massaged prior is updated to yield the massaged posteriors, or that rational learning consists of no more than applying Bayes’ rule. On the contrary, Bayesian updating only takes place after all learning is over. [...] Bayesianites therefore have the cart before the horse. Insofar as learning consists of deducing one set of beliefs from another, it is the massaged prior that is deduced from the unmassaged posteriors [86].

6.4. Where to for the Future?

It may be that the pure information processing metaphor is not sufficient, and that other frameworks might give the insights that we need. Some proposals for such frameworks include free energy principles, control theory, dynamical systems theory, and ideas from biological development. It may be that an entirely new framework is needed for a full account of cognition.

Funding

Data Availability Statement

Conflicts of Interest

References

1. Georgescu-Roegen, N. The entropy law and the economic problem. In Valuing the Earth: Economics, Ecology, Ethics; MIT Press: Cambridge, MA, USA, 1993; pp. 75–88.

2. Wilson, A. Entropy in Urban and Regional Modelling; Routledge: London, UK, 2011; Volume 1.

3. Crosato, E.; Prokopenko, M.; Harré, M.S. The polycentric dynamics of Melbourne and Sydney: Suburb attractiveness divides a city at the home ownership level. Proc. R. Soc. A 2021, 477, 20200514.

4. Crosato, E.; Nigmatullin, R.; Prokopenko, M. On critical dynamics and thermodynamic efficiency of urban transformations. R. Soc. Open Sci. 2018, 5, 180863.

5. Harré, M.; Bossomaier, T. Phase-transition–like behaviour of information measures in financial markets. EPL Europhys. Lett. 2009, 87, 18009.

6. Bossomaier, T.; Barnett, L.; Steen, A.; Harré, M.; d’Alessandro, S.; Duncan, R. Information flow around stock market collapse. Account. Financ. 2018, 58, 45–58.

7. Harré, M. Entropy and Transfer Entropy: The Dow Jones and the Build Up to the 1997 Asian Crisis. In Proceedings of the International Conference on Social Modeling and Simulation, Plus Econophysics Colloquium 2014; Springer: Cham, Switzerland, 2015; pp. 15–25.

8. Bossomaier, T.; Barnett, L.; Harré, M. Information and phase transitions in socio-economic systems. Complex Adapt. Syst. Model. 2013, 1, 1–25.

9. Matsuda, H.; Kudo, K.; Nakamura, R.; Yamakawa, O.; Murata, T. Mutual information of Ising systems. Int. J. Theor. Phys. 1996, 35, 839–845.

10. Barnett, L.; Lizier, J.T.; Harré, M.; Seth, A.K.; Bossomaier, T. Information flow in a kinetic Ising model peaks in the disordered phase. Phys. Rev. Lett. 2013, 111, 177203.

11. Prokopenko, M.; Barnett, L.; Harré, M.; Lizier, J.T.; Obst, O.; Wang, X.R. Fisher transfer entropy: quantifying the gain in transient sensitivity. Proc. R. Soc. A Math. Phys. Eng. Sci. 2015, 471, 20150610.

12. Aoki, M. New Approaches to Macroeconomic Modeling. In Cambridge Books; Cambridge University Press: Cambridge, UK, 1998.

13. Wolpert, D.H.; Harré, M.; Olbrich, E.; Bertschinger, N.; Jost, J. Hysteresis effects of changing the parameters of noncooperative games. Phys. Rev. E 2012, 85, 036102.

14. Harré, M.S.; Bossomaier, T. Strategic islands in economic games: Isolating economies from better outcomes. Entropy 2014, 16, 5102–5121.

15. Lizier, J.T. JIDT: An information-theoretic toolkit for studying the dynamics of complex systems. Front. Robot. AI 2014, 1, 11.

16. Bossomaier, T.; Barnett, L.; Harré, M.; Lizier, J.T. An Introduction to Transfer Entropy; Springer International Publishing: Cham, Switzerland, 2016; Volume 65.

17. Laming, D. Statistical information and uncertainty: A critique of applications in experimental psychology. Entropy 2010, 12, 720–771.

18. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

19. Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81–97.

20. McGill, W. Multivariate information transmission. Trans. IRE Prof. Group Inf. Theory 1954, 4, 93–111.

21. Luce, R.D. Whatever happened to information theory in psychology? Rev. Gen. Psychol. 2003, 7, 183–188.

22. Harré, M.S.; Bossomaier, T.; Gillett, A.; Snyder, A. The aggregate complexity of decisions in the game of Go. Eur. Phys. J. B 2011, 80, 555–563.

23. Harré, M.; Bossomaier, T.; Snyder, A. The development of human expertise in a complex environment. Minds Mach. 2011, 21, 449–464.

24. Harré, M.; Snyder, A. Intuitive expertise and perceptual templates. Minds Mach. 2012, 22, 167–182.

25. Wollstadt, P.; Lizier, J.T.; Vicente, R.; Finn, C.; Martinez-Zarzuela, M.; Mediano, P.; Novelli, L.; Wibral, M. IDTxl: The Information Dynamics Toolkit xl: A Python package for the efficient analysis of multivariate information dynamics in networks. J. Open Source Softw. 2019.

26. Wibral, M.; Vicente, R.; Lindner, M. Transfer entropy in neuroscience. In Directed Information Measures in Neuroscience; Springer: Berlin, Germany, 2014; pp. 3–36.

27. Wibral, M.; Lizier, J.; Vögler, S.; Priesemann, V.; Galuske, R. Local active information storage as a tool to understand distributed neural information processing. Front. Neuroinform. 2014, 8, 1.

28. Cramer, B.; Stöckel, D.; Kreft, M.; Wibral, M.; Schemmel, J.; Meier, K.; Priesemann, V. Control of criticality and computation in spiking neuromorphic networks with plasticity. Nat. Commun. 2020, 11, 1–11.

29. Friston, K. A theory of cortical responses. Philos. Trans. R. Soc. B Biol. Sci. 2005, 360, 815–836.

30. Genewein, T.; Leibfried, F.; Grau-Moya, J.; Braun, D.A. Bounded rationality, abstraction, and hierarchical decision-making: An information-theoretic optimality principle. Front. Robot. AI 2015, 2, 27.

31. Braun, D.A.; Ortega, P.A.; Theodorou, E.; Schaal, S. Path integral control and bounded rationality. In Proceedings of the 2011 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), Paris, France, 11–15 April 2011; pp. 202–209.

32. Ortega, P.A.; Braun, D.A. Thermodynamics as a theory of decision-making with information-processing costs. Proc. R. Soc. A Math. Phys. Eng. Sci. 2013, 469, 20120683.

33. Friston, K.J.; Daunizeau, J.; Kiebel, S.J. Reinforcement learning or active inference? PLoS ONE 2009, 4, e6421.

34. Friston, K.; Schwartenbeck, P.; FitzGerald, T.; Moutoussis, M.; Behrens, T.; Dolan, R.J. The anatomy of choice: Active inference and agency. Front. Hum. Neurosci. 2013, 7, 598.

35. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin, Germany, 2006.

36. Friston, K.; Kilner, J.; Harrison, L. A free energy principle for the brain. J. Physiol. 2006, 100, 70–87.

37. Oizumi, M.; Albantakis, L.; Tononi, G. From the phenomenology to the mechanisms of consciousness: Integrated information theory 3.0. PLoS Comput. Biol. 2014, 10, e1003588.

38. Veness, J.; Ng, K.S.; Hutter, M.; Uther, W.; Silver, D. A Monte-Carlo AIXI approximation. J. Artif. Intell. Res. 2011, 40, 95–142.

39. Bolon-Canedo, V.; Remeseiro, B. Feature selection in image analysis: A survey. In Artificial Intelligence Review; Springer: Berlin, Germany, 2019; pp. 1–27.

40. Solorio-Fernández, S.; Carrasco-Ochoa, J.A.; Martínez-Trinidad, J.F. A review of unsupervised feature selection methods. Artif. Intell. Rev. 2020, 53, 907–948.

41. Hall, M.A.; Smith, L.A. Practical feature subset selection for machine learning. In Proceedings of the 21st Australasian Computer Science Conference ACSC’98, Perth, Australia, 4–6 February 1998.

42. Huang, H.; Huang, J.; Feng, Y.; Zhang, J.; Liu, Z.; Wang, Q.; Chen, L. On the improvement of reinforcement active learning with the involvement of cross entropy to address one-shot learning problem. PLoS ONE 2019, 14, e0217408.

43. Kamatani, N. Genes, the brain, and artificial intelligence in evolution. J. Hum. Genet. 2020.

44. Ferrucci, D.A. Introduction to “This is Watson”. IBM J. Res. Dev. 2012, 56, 1.

45. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

46. Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 2018, 362, 1140–1144.

47. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

48. Mitchell, M. Can GPT-3 Make Analogies? Medium 2020. Available online: https://medium.com/@melaniemitchell.me/can-gpt-3-make-analogies-16436605c446 (accessed on 3 March 2021).

49. Mitchell, M. Artificial intelligence hits the barrier of meaning. Information 2019, 10, 51.

50. Danielson, P.; Audi, R.; Bicchieri, C. The Oxford Handbook of Rationality; Oxford University Press: Cary, NC, USA, 2004.

51. Steele, K.; Stefánsson, H.O. Decision Theory. In The Stanford Encyclopedia of Philosophy; Zalta, E.N., Ed.; Metaphysics Research Lab.: Stanford, CA, USA, 2020.

52. Binmore, K. Rational Decisions; Princeton University Press: Princeton, NJ, USA, 2008.

53. Savage, L.J. The Foundations of Statistics; Courier Corporation: Chelmsford, MA, USA, 1972.

54. Friedman, M. The Methodology of Positive Economics; Cambridge University Press: Cambridge, UK, 1953.

55. Mäki, U. The Methodology of Positive Economics: Reflections on the Milton Friedman Legacy; Cambridge University Press: Cambridge, UK, 2009.

56. Moscati, I. Retrospectives: How economists came to accept expected utility theory: The case of Samuelson and Savage. J. Econ. Perspect. 2016, 30, 219–236.

57. Wolpert, D.H. Predictive Game Theory; Massachusetts Institute of Technology (MIT): Cambridge, MA, USA, 2005.

58. Wolpert, D.H.; Wheeler, K.R.; Tumer, K. Collective intelligence for control of distributed dynamical systems. EPL Europhys. Lett. 2000, 49, 708.

59. Harré, M.S.; Atkinson, S.R.; Hossain, L. Simple nonlinear systems and navigating catastrophes. Eur. Phys. J. B 2013, 86, 289.

60. McKelvey, R.D.; Palfrey, T.R. Quantal response equilibria for normal form games. Games Econ. Behav. 1995, 10, 6–38.

61. Niven, R.K. Jaynes’ MaxEnt, steady state flow systems and the maximum entropy production principle. In AIP Conference Proceedings; American Institute of Physics: College Park, MD, USA, 2009; Volume 1193, pp. 397–404.

62. Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620.

63. Grünwald, P.D.; Dawid, A.P. Game theory, maximum entropy, minimum discrepancy and robust Bayesian decision theory. Ann. Stat. 2004, 32, 1367–1433.

64. Jaynes, E.T. Probability Theory: The Logic of Science; Cambridge University Press: Cambridge, UK, 2003.

65. Jaynes, E.T. Bayesian Methods: General Background; Citeseer: New York, NY, USA, 1986.

66. Ramstead, M.J.; Kirchhoff, M.D.; Friston, K.J. A tale of two densities: Active inference is enactive inference. Adapt. Behav. 2020, 28, 225–239.

67. Friston, K.; Rigoli, F.; Ognibene, D.; Mathys, C.; Fitzgerald, T.; Pezzulo, G. Active inference and epistemic value. Cogn. Neurosci. 2015, 6, 187–214.

68. Pearl, J. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference; Elsevier: Amsterdam, The Netherlands, 2014.

69. Pearl, J. Causes of effects and effects of causes. Sociol. Methods Res. 2015, 44, 149–164.

70. Pearl, J. Graphical models for probabilistic and causal reasoning. In Quantified Representation of Uncertainty and Imprecision; Springer: Berlin, Germany, 1998; pp. 367–389.

71. Da Costa, L.; Parr, T.; Sajid, N.; Veselic, S.; Neacsu, V.; Friston, K. Active inference on discrete state-spaces: A synthesis. J. Math. Psychol. 2020, 99, 102447.

72. Mullainathan, S.; Thaler, R.H. Behavioral Economics; Technical Report; National Bureau of Economic Research: Cambridge, MA, USA, 2000.

73. Tversky, A.; Kahneman, D. Advances in prospect theory: Cumulative representation of uncertainty. J. Risk Uncertain. 1992, 5, 297–323.

74. Gilboa, I.; Schmeidler, D. Maxmin expected utility with non-unique prior. J. Math. Econ. 1989, 18, 141–153.

75. Hausman, D.M. Why look under the hood. Philos. Econ. Anthol. 1994, 217–221.

76. Binmore, K. Rationality. In Handbook of Game Theory with Economic Applications; Elsevier: Amsterdam, The Netherlands, 2015; Volume 4, pp. 1–26.

77. Simon, H.A. From substantive to procedural rationality. In 25 Years of Economic Theory; Springer: Berlin, Germany, 1976; pp. 65–86.

78. Li, W. Mutual information functions versus correlation functions. J. Stat. Phys. 1990, 60, 823–837.

79. Cellucci, C.J.; Albano, A.M.; Rapp, P.E. Statistical validation of mutual information calculations: Comparison of alternative numerical algorithms. Phys. Rev. E 2005, 71, 066208.

80. Wang, Q.; Shen, Y.; Zhang, J.Q. A nonlinear correlation measure for multivariable data set. Phys. D Nonlinear Phenom. 2005, 200, 287–295.

81. Harré, M.S. Strategic information processing from behavioural data in iterated games. Entropy 2018, 20, 27.

82. Arthur, W.B. Foundations of complexity economics. Nat. Rev. Phys. 2021, 3, 136–145.

83. Moran, R.J.; Campo, P.; Symmonds, M.; Stephan, K.E.; Dolan, R.J.; Friston, K.J. Free energy, precision and learning: The role of cholinergic neuromodulation. J. Neurosci. 2013, 33, 8227–8236.

84. Gilboa, I.; Schmeidler, D. A Theory of Case-Based Decisions; Cambridge University Press: Cambridge, UK, 2001.

85. Binmore, K. On the foundations of decision theory. Homo Oeconomicus 2017, 34, 259–273.

86. Binmore, K. Rational decisions in large worlds. In Annales d’Economie et de Statistique; GENES: New York, NY, USA, 2007; pp. 25–41.

87. Parkes, D.C.; Wellman, M.P. Economic reasoning and artificial intelligence. Science 2015, 349, 267–272.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Harré, M.S. Information Theory for Agents in Artificial Intelligence, Psychology, and Economics. Entropy 2021, 23, 310. https://doi.org/10.3390/e23030310