Surface Electromyography-Based Action Recognition and Manipulator Control

Abstract

Featured Application

1. Introduction

2. Method and Mathematical Background

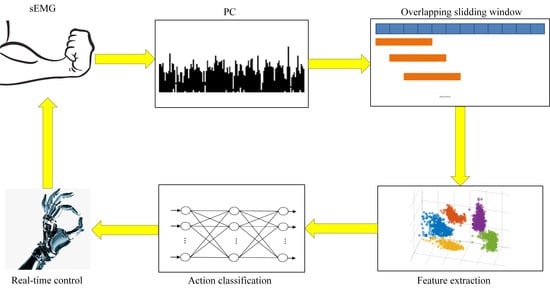

2.1. System Architecture

2.2. Data Preprocessing

2.3. Feature Extraction

2.3.1. Time-Domain Feature

2.3.2. Frequency-Domain Feature

2.3.3. Wavelet Feature

2.4. Feature Selection

2.5. Selection of Classifier

2.5.1. BP Neural Network

2.5.2. Genetic-Algorithm-Based Support Vector Machine (GA–SVM)

2.6. Evaluation of Classification

3. Experiment

3.1. Design of sEMG Detection and Action Recognition System

3.2. Subjects and Training Session

3.3. Experimental Protocol and Procedure

4. Results

4.1. Comparison of Features

4.2. Comparison of Online Classification

4.3. Performance of Real-Time Control

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| sEMG | Surface electromyography |
| SNR | Signal-to-noise ratio |
| DOF | Degrees of freedom |
| LAN | Local area network |
| MAV | Mean absolute value |
| WL | Waveform length |
| RMS | Root mean square |
| PSD | Power spectral density |
| ZC | Zero crossing |
| MF | Median frequency |
| MPF | Mean power frequency |
| BP | Back propagation |
| RBF | Radial basis function |
| GA | Genetic algorithm |
| SVM | Support vector machine |
| PGA | Programmable gain amplifier |
| UDP | User Datagram Protocol |

| Feature | Average | Fist Clenching | Hand Opening | Wrist Flexion | Wrist Extension | Calling Me |
|---|---|---|---|---|---|---|
| MAV | 90.0% | 100% | 97% | 79% | 93% | 81% |
| ZC | 27.4% | 21% | 19% | 17% | 47% | 33% |
| WL | 87.0% | 98% | 81% | 77% | 93% | 86% |
| RMS | 87.2% | 95% | 93% | 76% | 91% | 83% |
| MF | 38.8% | 22% | 21% | 34% | 71% | 46% |
| MPF | 37.2% | 18% | 47% | 19% | 59% | 43% |
| Wavelet coefficient | 71.6% | 87% | 71% | 62% | 75% | 63% |

| Classifier | Average | Fist Clenching | Hand Opening | Wrist Flexion | Wrist Extension | Calling Me |
|---|---|---|---|---|---|---|
| BP | 93.2% | 100% | 92% | 91% | 96% | 87% |
| GA-SVM | 83.0% | 100% | 64% | 92% | 93% | 66% |

| Action | Fist Clenching | Hand Opening | Wrist Flexion | Wrist Extension | Calling Me |
|---|---|---|---|---|---|
| Fist clenching | 950 | 0 | 0 | 0 | 0 |
| Hand opening | 9 | 876 | 17 | 27 | 21 |
| Wrist flexion | 16 | 22 | 865 | 29 | 18 |
| Wrist extension | 5 | 12 | 15 | 911 | 7 |
| Calling me | 20 | 25 | 43 | 36 | 826 |

| Action | Fist Clenching | Hand Opening | Wrist Flexion | Wrist Extension | Calling Me |
|---|---|---|---|---|---|
| Fist clenching | 950 | 0 | 0 | 0 | 0 |
| Hand opening | 34 | 606 | 49 | 171 | 90 |
| Wrist flexion | 8 | 22 | 877 | 27 | 16 |
| Wrist extension | 10 | 16 | 34 | 883 | 7 |
| Calling me | 38 | 76 | 120 | 89 | 627 |
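The per-action and average accuracies in the classifier comparison table can be recomputed from the two confusion matrices. The sketch below is illustrative only. It assumes that rows are the performed actions and columns the recognized actions (each row sums to 950 trials, which is consistent with that reading), and that the first matrix belongs to the BP network and the second to GA-SVM, since their diagonals reproduce the corresponding table rows. The function and variable names are hypothetical and do not come from the paper.

```python
import numpy as np

ACTIONS = ["Fist clenching", "Hand opening", "Wrist flexion",
           "Wrist extension", "Calling me"]

# Counts copied from the two confusion matrices above.
# Assumption: rows = performed action, columns = recognized action.
cm_first = np.array([
    [950,   0,   0,   0,   0],
    [  9, 876,  17,  27,  21],
    [ 16,  22, 865,  29,  18],
    [  5,  12,  15, 911,   7],
    [ 20,  25,  43,  36, 826],
])
cm_second = np.array([
    [950,   0,   0,   0,   0],
    [ 34, 606,  49, 171,  90],
    [  8,  22, 877,  27,  16],
    [ 10,  16,  34, 883,   7],
    [ 38,  76, 120,  89, 627],
])


def per_class_accuracy(cm):
    """Share of each row's trials that land on the diagonal."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


def overall_accuracy(cm):
    """Correctly recognized trials divided by all trials."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()


for name, cm in [("first matrix", cm_first), ("second matrix", cm_second)]:
    print(name, f"overall = {overall_accuracy(cm):.1%}")
    for action, acc in zip(ACTIONS, per_class_accuracy(cm)):
        print(f"  {action}: {acc:.0%}")
```

Because every action has the same number of trials, the overall accuracy equals the mean of the per-action accuracies, which is why it can be compared directly with the "Average" column.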

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cao, T.; Liu, D.; Wang, Q.; Bai, O.; Sun, J. Surface Electromyography-Based Action Recognition and Manipulator Control. Appl. Sci. 2020, 10, 5823. https://doi.org/10.3390/app10175823