Attention Enhanced U-Net for Building Extraction from Farmland Based on Google and WorldView-2 Remote Sensing Images

Abstract

1. Introduction

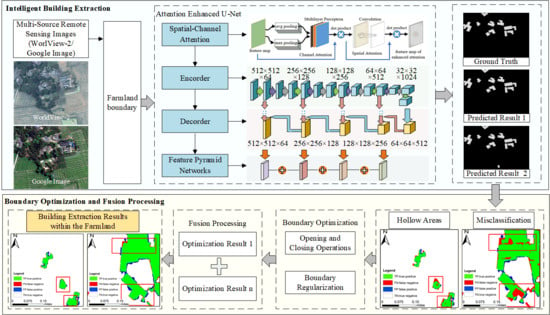

2. Methodology

2.1. Overall Framework

2.2. Improvement of the Attention-Enhanced U-Net Network

2.3. Building Extraction from Multi-Source Remote Sensing Images under Boundary Constraints

2.4. Building Boundary Optimization and Fusion Processing

3. Case Experiment Analysis

3.1. Case Area and Dataset

3.2. Experimental Environment and Parameter Setting

3.3. Experimental Results and Analysis

3.4. Discussion

3.4.1. Comparative Experiments of Building Extraction

3.4.2. Comparative Experiments of Boundary Optimization and Fusion

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Method | Accuracy | F1 | Recall | IoU |
|---|---|---|---|---|
| Our model | 96.96% | 81.47% | 82.72% | 68.72% |
| Post-processing | 97.47% | 85.61% | 93.02% | 74.85% |

| Method | Accuracy | F1 | Recall | IoU |
|---|---|---|---|---|
| U-Net | 88.99% | 51.84% | 73.31% | 34.99% |
| FCN8 | 95.70% | 68.18% | 57.02% | 42.88% |
| Attention_UNet | 94.42% | 70.14% | 81.07% | 54.01% |
| DeepLabv3+ | 96.60% | 77.60% | 72.78% | 63.39% |
| Our model | 96.96% | 81.47% | 82.72% | 68.72% |

| Method | Accuracy | F1 | Recall | IoU |
|---|---|---|---|---|
| U-Net | 89.56% | 53.55% | 87.22% | 38.16% |
| FCN8 | 96.88% | 80.28% | 84.27% | 67.30% |
| Attention_UNet | 94.40% | 72.06% | 89.78% | 57.14% |
| DeepLabv3+ | 96.88% | 80.57% | 86.94% | 67.79% |
| Our model | 97.47% | 85.61% | 93.02% | 74.85% |
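The tables report Accuracy, F1, Recall and IoU, but this excerpt does not define them. The sketch below assumes the usual pixel-wise definitions, with building pixels as the positive class and a single confusion matrix accumulated over the evaluation area. `binary_metrics` is a hypothetical helper, not the authors' evaluation code, and the paper may aggregate differently (for example, averaging per tile).

```python
from typing import Dict, Sequence


def binary_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Pixel-wise metrics for a binary building mask (hypothetical helper).

    Both inputs are flattened masks of equal length whose values are
    1 (building, the positive class) or 0 (background).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must contain the same number of pixels")
    if not pred:
        raise ValueError("masks must not be empty")
    if any(v not in (0, 1) for v in pred) or any(v not in (0, 1) for v in truth):
        raise ValueError("mask values must be 0 or 1")

    # Confusion-matrix counts over all pixels.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)

    def ratio(num: int, den: int) -> float:
        # Return 0.0 instead of dividing by zero (e.g. a tile with no buildings).
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        # F1 is the harmonic mean of precision and recall.
        "f1": 2 * precision * recall / (precision + recall) if precision + recall else 0.0,
        # IoU (Jaccard index) between predicted and reference building pixels.
        "iou": ratio(tp, tp + fp + fn),
    }


if __name__ == "__main__":
    # Toy 8-pixel example: 3 TP, 1 FP, 1 FN, 3 TN.
    m = binary_metrics([1, 1, 0, 0, 1, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 0])
    print({k: round(v, 3) for k, v in m.items()})
    # {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75, 'iou': 0.6}
```

The tables express these ratios as percentages. When F1 and IoU come from the same pixel counts, they are linked by IoU = F1 / (2 - F1) (here 0.75 / 1.25 = 0.6). Averaging per-tile scores need not preserve that identity.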

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Fu, L.; Zhu, Q.; Zhu, J.; Fang, Z.; Xie, Y.; Guo, Y.; Gong, Y. Attention Enhanced U-Net for Building Extraction from Farmland Based on Google and WorldView-2 Remote Sensing Images. Remote Sens. 2021, 13, 4411. https://doi.org/10.3390/rs13214411
