Machine Learning Classification Ensemble of Multitemporal Sentinel-2 Images: The Case of a Mixed Mediterranean Ecosystem

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Data

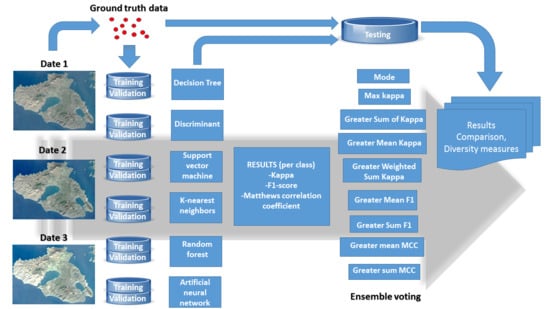

2.2. Methodology

2.2.1. Ground Truth Data Collection

2.2.2. Base Classifiers

2.2.3. Ensemble Voting Methods

- ‘Mode’: This voting method selects the class suggested most frequently among the six suggestions of the base classifiers. In case of a tie, it selects the class with the higher sum of kappa.

- ‘Max kappa’: This voting method selects the suggestion of the classifier with the highest kappa.

- ‘Greater Sum of Kappa’: This voting method selects the suggestion with the greatest sum of kappa. The kappa values of classifiers that give identical suggestions are summed, and the sums are compared across suggestions.

- ‘Greater Mean Kappa’: This method selects the suggestion with the greatest average kappa. The kappa values of classifiers that give identical suggestions are averaged, and the averages are compared across suggestions.

- ‘Greater Weighted Sum Kappa’: For each group of identical suggestions, this method calculates a weighted sum of kappa, i.e., the sum of kappa of the group multiplied by the frequency of that suggestion. It then selects the suggestion with the greatest weighted sum of kappa.

- ‘Greater mean F1’: This voting method selects the suggestion with the greatest average F1-score. After grouping identical suggestions, we compute the average F1-score per group and compare the results; the result is the suggestion group with the greatest average F1-score.

- ‘Greater sum F1’: This voting method selects the suggestion with the greatest sum of F1-scores. After grouping identical suggestions, we calculate the sum of F1-scores per group and compare the results; the result is the suggestion group with the greatest sum of F1-scores.

- ‘Greater mean MCC’: This voting method selects the suggestion group with the greatest mean Matthews correlation coefficient (MCC). After grouping identical suggestions, we average their MCC values and compare the results; the result is the suggestion group with the greatest average MCC.

- ‘Greater sum MCC’: This last voting method selects the suggestion with the greatest sum of MCC. After grouping identical suggestions, we compute the sum of MCC per group and compare the results; the result is the suggestion group with the greatest sum of MCC.
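The nine voting rules above differ only in how the suggestions of the base classifiers are grouped and which score is aggregated. The following is a minimal Python sketch, assuming each base classifier is summarized by one kappa, F1 and MCC value (for instance, computed from its validation confusion matrix); this section does not state whether overall or class-specific values were used, and class-specific values for the suggested class could be passed in the same way. All function names, the `VOTING_METHODS` table and the metric values in the example are hypothetical. Ties left after the Mode tie-break, and ties in the other rules, are resolved here by taking the first class encountered, which is an additional assumption.

```python
from collections import defaultdict
from statistics import mean


def group_scores(suggestions):
    """Group the scores of base classifiers that suggest the same class.

    suggestions: list of (class_label, score) pairs, one per base classifier.
    """
    groups = defaultdict(list)
    for label, score in suggestions:
        groups[label].append(score)
    return groups


def vote_mode(suggestions):
    # Most frequent class; ties are broken by the higher sum of scores (kappa).
    groups = group_scores(suggestions)
    return max(groups, key=lambda c: (len(groups[c]), sum(groups[c])))


def vote_max(suggestions):
    # Class suggested by the single classifier with the highest score.
    return max(suggestions, key=lambda s: s[1])[0]


def vote_sum(suggestions):
    # Class whose group of identical suggestions has the greatest summed score.
    groups = group_scores(suggestions)
    return max(groups, key=lambda c: sum(groups[c]))


def vote_mean(suggestions):
    # Class whose group of identical suggestions has the greatest mean score.
    groups = group_scores(suggestions)
    return max(groups, key=lambda c: mean(groups[c]))


def vote_weighted_sum(suggestions):
    # Summed score of each group multiplied by the group's frequency.
    groups = group_scores(suggestions)
    return max(groups, key=lambda c: sum(groups[c]) * len(groups[c]))


# Aggregation rule and metric behind each of the nine voting methods.
VOTING_METHODS = {
    "Mode": (vote_mode, "kappa"),
    "Max kappa": (vote_max, "kappa"),
    "Greater Sum of Kappa": (vote_sum, "kappa"),
    "Greater Mean Kappa": (vote_mean, "kappa"),
    "Greater Weighted Sum Kappa": (vote_weighted_sum, "kappa"),
    "Greater mean F1": (vote_mean, "f1"),
    "Greater sum F1": (vote_sum, "f1"),
    "Greater mean MCC": (vote_mean, "mcc"),
    "Greater sum MCC": (vote_sum, "mcc"),
}


def ensemble_vote(method, predictions, metrics):
    """Combine the base-classifier predictions for one pixel.

    predictions: one class label per base classifier.
    metrics: one dict per base classifier with its 'kappa', 'f1' and 'mcc'.
    """
    rule, metric = VOTING_METHODS[method]
    return rule([(label, m[metric]) for label, m in zip(predictions, metrics)])


# Hypothetical example: six base classifiers with illustrative metric values.
predictions = ["PB", "PB", "OG", "MS", "OG", "PB"]
metrics = [
    {"kappa": 0.79, "f1": 0.80, "mcc": 0.78},
    {"kappa": 0.83, "f1": 0.84, "mcc": 0.82},
    {"kappa": 0.91, "f1": 0.92, "mcc": 0.90},
    {"kappa": 0.87, "f1": 0.88, "mcc": 0.86},
    {"kappa": 0.88, "f1": 0.89, "mcc": 0.87},
    {"kappa": 0.60, "f1": 0.62, "mcc": 0.59},
]

for name in VOTING_METHODS:
    print(f"{name}: {ensemble_vote(name, predictions, metrics)}")
```

With these illustrative values, the frequency- and sum-based rules return PB (three suggestions, one of them from a weak classifier), whereas ‘Max kappa’ and the mean-based rules return OG, which shows how the rules can disagree on the same pixel.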

3. Results and Discussion

3.1. Base Classifiers Training

3.2. Classification Ensemble

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 617 | 82 | 23 | 0 | 10 | 0 | 1 | 9 | 0 | 46 | 0 | 1 | 0 | 789 | 0.78 |
| OF | 47 | 178 | 1 | 0 | 1 | 8 | 2 | 30 | 0 | 5 | 14 | 4 | 0 | 290 | 0.61 |
| BW | 39 | 0 | 1012 | 10 | 0 | 0 | 0 | 0 | 4 | 92 | 0 | 1 | 0 | 1158 | 0.87 |
| BU | 4 | 2 | 2 | 225 | 0 | 0 | 0 | 1 | 9 | 1 | 0 | 1 | 0 | 245 | 0.92 |
| PB | 33 | 2 | 0 | 0 | 1034 | 3 | 47 | 26 | 0 | 0 | 11 | 0 | 0 | 1156 | 0.89 |
| CF | 1 | 16 | 0 | 0 | 2 | 164 | 0 | 35 | 0 | 0 | 10 | 0 | 0 | 228 | 0.72 |
| PN | 3 | 0 | 0 | 0 | 13 | 0 | 32 | 10 | 0 | 0 | 2 | 0 | 0 | 60 | 0.53 |
| MS | 5 | 29 | 0 | 0 | 3 | 12 | 5 | 119 | 0 | 0 | 6 | 0 | 0 | 179 | 0.66 |
| BL | 0 | 0 | 0 | 9 | 0 | 0 | 0 | 0 | 41 | 0 | 0 | 0 | 0 | 50 | 0.82 |
| GL | 51 | 16 | 26 | 1 | 0 | 2 | 0 | 0 | 0 | 177 | 0 | 7 | 0 | 280 | 0.63 |
| OB | 1 | 0 | 0 | 0 | 2 | 5 | 0 | 3 | 0 | 0 | 13 | 1 | 0 | 25 | 0.52 |
| AL | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 10 | 2 | 167 | 0 | 183 | 0.91 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 267 | 267 | 1 |
| Total | 801 | 327 | 1064 | 246 | 1065 | 194 | 87 | 234 | 54 | 331 | 58 | 182 | 267 | 4910 | |
| PA | 0.77 | 0.54 | 0.95 | 0.91 | 0.97 | 0.85 | 0.37 | 0.51 | 0.76 | 0.53 | 0.22 | 0.92 | 1 | OA = 0.82 | |
| kappa | 0.73 | 0.55 | 0.88 | 0.91 | 0.91 | 0.77 | 0.43 | 0.56 | 0.79 | 0.55 | 0.31 | 0.91 | 1 | kappa = 0.79 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 674 | 63 | 9 | 1 | 3 | 0 | 0 | 8 | 0 | 17 | 0 | 0 | 0 | 775 | 0.87 |
| OF | 17 | 171 | 0 | 0 | 0 | 9 | 0 | 20 | 0 | 3 | 13 | 5 | 0 | 238 | 0.72 |
| BW | 32 | 1 | 967 | 10 | 0 | 0 | 0 | 0 | 6 | 42 | 0 | 0 | 0 | 1058 | 0.91 |
| BU | 0 | 0 | 2 | 212 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 216 | 0.98 |
| PB | 42 | 3 | 0 | 0 | 1053 | 0 | 44 | 31 | 0 | 1 | 18 | 0 | 0 | 1192 | 0.88 |
| CF | 1 | 27 | 0 | 0 | 0 | 164 | 0 | 16 | 0 | 0 | 8 | 3 | 0 | 219 | 0.75 |
| PN | 3 | 3 | 0 | 0 | 5 | 0 | 40 | 4 | 0 | 0 | 0 | 0 | 0 | 55 | 0.73 |
| MS | 5 | 36 | 0 | 0 | 4 | 20 | 3 | 152 | 0 | 0 | 7 | 0 | 0 | 227 | 0.67 |
| BL | 0 | 1 | 1 | 23 | 0 | 0 | 0 | 0 | 46 | 0 | 0 | 0 | 0 | 71 | 0.65 |
| GL | 26 | 14 | 85 | 0 | 0 | 0 | 0 | 0 | 0 | 263 | 1 | 10 | 0 | 399 | 0.66 |
| OB | 1 | 8 | 0 | 0 | 0 | 1 | 0 | 3 | 0 | 0 | 11 | 0 | 0 | 24 | 0.46 |
| AL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 164 | 0 | 169 | 0.97 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 267 | 267 | 1 |
| Total | 801 | 327 | 1064 | 246 | 1065 | 194 | 87 | 234 | 54 | 331 | 58 | 182 | 267 | 4910 | |
| PA | 0.84 | 0.52 | 0.91 | 0.86 | 0.99 | 0.85 | 0.46 | 0.65 | 0.85 | 0.79 | 0.19 | 0.9 | 1 | OA = 0.85 | |
| kappa | 0.83 | 0.58 | 0.89 | 0.91 | 0.91 | 0.79 | 0.56 | 0.64 | 0.73 | 0.7 | 0.26 | 0.93 | 1 | kappa = 0.83 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 743 | 35 | 8 | 0 | 4 | 0 | 0 | 7 | 0 | 13 | 1 | 1 | 0 | 812 | 0.92 |
| OF | 23 | 259 | 0 | 0 | 0 | 4 | 0 | 15 | 0 | 2 | 1 | 1 | 0 | 305 | 0.85 |
| BW | 5 | 1 | 1033 | 2 | 0 | 0 | 0 | 0 | 2 | 38 | 0 | 0 | 0 | 1081 | 0.96 |
| BU | 3 | 0 | 0 | 242 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 250 | 0.97 |
| PB | 6 | 0 | 0 | 0 | 1039 | 0 | 14 | 4 | 0 | 0 | 1 | 0 | 0 | 1064 | 0.98 |
| CF | 0 | 2 | 0 | 0 | 0 | 172 | 0 | 13 | 0 | 0 | 5 | 0 | 0 | 192 | 0.9 |
| PN | 1 | 0 | 0 | 0 | 12 | 0 | 72 | 2 | 0 | 0 | 1 | 0 | 0 | 88 | 0.82 |
| MS | 3 | 24 | 0 | 0 | 7 | 16 | 1 | 186 | 0 | 0 | 10 | 1 | 0 | 248 | 0.75 |
| BL | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 47 | 0 | 0 | 0 | 0 | 49 | 0.96 |
| GL | 17 | 6 | 23 | 0 | 0 | 0 | 0 | 0 | 0 | 275 | 1 | 6 | 0 | 328 | 0.84 |
| OB | 0 | 0 | 0 | 0 | 3 | 2 | 0 | 7 | 0 | 0 | 37 | 0 | 0 | 49 | 0.76 |
| AL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 1 | 173 | 0 | 177 | 0.98 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 267 | 267 | 1 |
| Total | 801 | 327 | 1064 | 246 | 1065 | 194 | 87 | 234 | 54 | 331 | 58 | 182 | 267 | 4910 | |
| PA | 0.93 | 0.79 | 0.97 | 0.98 | 0.98 | 0.89 | 0.83 | 0.79 | 0.87 | 0.83 | 0.64 | 0.95 | 1 | OA = 0.93 | |
| kappa | 0.91 | 0.81 | 0.95 | 0.97 | 0.97 | 0.89 | 0.82 | 0.76 | 0.91 | 0.82 | 0.69 | 0.96 | 1 | kappa = 0.91 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 673 | 45 | 2 | 4 | 1 | 0 | 2 | 7 | 0 | 22 | 0 | 2 | 0 | 758 | 0.89 |
| OF | 34 | 253 | 0 | 0 | 0 | 15 | 0 | 34 | 0 | 5 | 11 | 2 | 0 | 354 | 0.71 |
| BW | 27 | 1 | 1038 | 15 | 0 | 0 | 0 | 0 | 3 | 50 | 0 | 0 | 0 | 1134 | 0.92 |
| BU | 2 | 1 | 0 | 221 | 0 | 0 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 231 | 0.96 |
| PB | 17 | 1 | 0 | 0 | 1047 | 0 | 24 | 13 | 0 | 2 | 9 | 0 | 2 | 1115 | 0.94 |
| CF | 0 | 2 | 0 | 0 | 0 | 161 | 0 | 17 | 0 | 0 | 16 | 0 | 0 | 196 | 0.82 |
| PN | 1 | 0 | 0 | 0 | 10 | 0 | 58 | 6 | 0 | 0 | 1 | 0 | 0 | 76 | 0.76 |
| MS | 9 | 20 | 0 | 0 | 6 | 18 | 3 | 157 | 0 | 0 | 9 | 1 | 0 | 223 | 0.7 |
| BL | 0 | 0 | 1 | 6 | 0 | 0 | 0 | 0 | 44 | 0 | 0 | 0 | 0 | 51 | 0.86 |
| GL | 38 | 3 | 23 | 0 | 0 | 0 | 0 | 0 | 0 | 247 | 0 | 7 | 0 | 318 | 0.78 |
| OB | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 12 | 0 | 0 | 13 | 0.92 |
| AL | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 170 | 0 | 176 | 0.97 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 265 | 265 | 1 |
| Total | 801 | 327 | 1064 | 246 | 1065 | 194 | 87 | 234 | 54 | 331 | 58 | 182 | 267 | 4910 | |
| PA | 0.84 | 0.77 | 0.98 | 0.9 | 0.98 | 0.83 | 0.67 | 0.67 | 0.81 | 0.75 | 0.21 | 0.93 | 0.99 | OA = 0.89 | |
| kappa | 0.84 | 0.72 | 0.93 | 0.92 | 0.95 | 0.82 | 0.71 | 0.67 | 0.84 | 0.74 | 0.34 | 0.95 | 1 | kappa = 0.87 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 724 | 54 | 8 | 0 | 4 | 0 | 3 | 14 | 0 | 38 | 1 | 1 | 0 | 847 | 0.85 |
| OF | 22 | 235 | 0 | 0 | 0 | 7 | 0 | 18 | 0 | 3 | 3 | 2 | 0 | 290 | 0.81 |
| BW | 21 | 1 | 1027 | 3 | 0 | 0 | 0 | 0 | 3 | 49 | 0 | 0 | 0 | 1104 | 0.93 |
| BU | 4 | 1 | 2 | 242 | 0 | 0 | 0 | 0 | 12 | 0 | 0 | 2 | 0 | 263 | 0.92 |
| PB | 10 | 0 | 0 | 0 | 1049 | 0 | 27 | 15 | 0 | 0 | 7 | 0 | 0 | 1108 | 0.95 |
| CF | 1 | 6 | 0 | 0 | 0 | 173 | 0 | 14 | 0 | 0 | 15 | 0 | 0 | 209 | 0.83 |
| PN | 1 | 0 | 0 | 0 | 3 | 0 | 57 | 3 | 0 | 0 | 1 | 0 | 0 | 65 | 0.88 |
| MS | 5 | 24 | 0 | 0 | 8 | 14 | 0 | 169 | 0 | 0 | 10 | 0 | 0 | 230 | 0.73 |
| BL | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 39 | 0 | 0 | 0 | 0 | 40 | 0.97 |
| GL | 13 | 4 | 27 | 0 | 0 | 0 | 0 | 0 | 0 | 234 | 0 | 8 | 0 | 286 | 0.82 |
| OB | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 20 | 0 | 0 | 22 | 0.91 |
| AL | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 | 1 | 169 | 0 | 179 | 0.94 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 267 | 267 | 1 |
| Total | 801 | 327 | 1064 | 246 | 1065 | 194 | 87 | 234 | 54 | 331 | 58 | 182 | 267 | 4910 | |
| PA | 0.9 | 0.72 | 0.97 | 0.98 | 0.98 | 0.89 | 0.66 | 0.72 | 0.72 | 0.71 | 0.34 | 0.93 | 1 | OA = 0.90 | |
| kappa | 0.85 | 0.75 | 0.93 | 0.95 | 0.96 | 0.85 | 0.75 | 0.71 | 0.83 | 0.74 | 0.5 | 0.93 | 1 | kappa = 0.88 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 717 | 55 | 7 | 2 | 5 | 0 | 0 | 3 | 0 | 28 | 0 | 1 | 0 | 818 | 0.88 |
| OF | 25 | 220 | 0 | 1 | 0 | 9 | 0 | 37 | 0 | 3 | 8 | 2 | 0 | 305 | 0.72 |
| BW | 11 | 0 | 1044 | 3 | 0 | 0 | 0 | 0 | 3 | 43 | 0 | 2 | 0 | 1106 | 0.94 |
| BU | 1 | 1 | 7 | 225 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 | 242 | 0.93 |
| PB | 8 | 0 | 0 | 0 | 1034 | 0 | 39 | 12 | 0 | 0 | 13 | 0 | 0 | 1106 | 0.93 |
| CF | 0 | 3 | 0 | 0 | 0 | 163 | 0 | 24 | 0 | 0 | 11 | 3 | 0 | 204 | 0.8 |
| PN | 1 | 0 | 0 | 0 | 12 | 0 | 52 | 0 | 0 | 0 | 1 | 0 | 0 | 66 | 0.79 |
| MS | 5 | 23 | 0 | 0 | 10 | 16 | 1 | 143 | 0 | 0 | 7 | 0 | 0 | 205 | 0.7 |
| BL | 0 | 1 | 0 | 8 | 0 | 0 | 0 | 0 | 51 | 0 | 0 | 0 | 0 | 60 | 0.85 |
| GL | 23 | 5 | 27 | 1 | 0 | 0 | 0 | 1 | 0 | 243 | 0 | 3 | 0 | 303 | 0.8 |
| OB | 0 | 2 | 0 | 0 | 1 | 3 | 0 | 9 | 0 | 0 | 18 | 1 | 0 | 34 | 0.53 |
| AL | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 7 | 3 | 169 | 0 | 182 | 0.93 |
| AB | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 279 | 280 | 1 |
| Total | 792 | 311 | 1085 | 241 | 1063 | 191 | 92 | 229 | 62 | 324 | 61 | 181 | 279 | 4911 | |
| PA | 0.91 | 0.71 | 0.96 | 0.93 | 0.97 | 0.85 | 0.57 | 0.62 | 0.82 | 0.75 | 0.3 | 0.93 | 1 | OA = 0.89 | |
| kappa | 0.87 | 0.7 | 0.94 | 0.93 | 0.94 | 0.82 | 0.65 | 0.64 | 0.83 | 0.76 | 0.37 | 0.93 | 1 | kappa = 0.87 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 740 | 35 | 7 | 2 | 6 | 1 | 0 | 10 | 1 | 21 | 0 | 1 | 0 | 824 | 0.90 |
| OF | 27 | 271 | 0 | 1 | 0 | 2 | 0 | 9 | 0 | 5 | 1 | 0 | 0 | 316 | 0.86 |
| BW | 8 | 0 | 1031 | 3 | 0 | 0 | 0 | 0 | 0 | 34 | 0 | 0 | 0 | 1076 | 0.96 |
| BU | 0 | 0 | 1 | 246 | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 | 0 | 257 | 0.96 |
| PB | 2 | 0 | 0 | 0 | 1062 | 0 | 15 | 3 | 0 | 1 | 4 | 0 | 0 | 1087 | 0.98 |
| CF | 0 | 1 | 0 | 0 | 0 | 152 | 0 | 11 | 0 | 0 | 5 | 0 | 0 | 169 | 0.90 |
| PN | 0 | 0 | 0 | 0 | 10 | 0 | 66 | 0 | 0 | 0 | 0 | 0 | 0 | 76 | 0.87 |
| MS | 4 | 12 | 0 | 0 | 9 | 17 | 1 | 189 | 0 | 1 | 6 | 0 | 0 | 239 | 0.79 |
| BL | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 54 | 0.93 |
| GL | 15 | 4 | 49 | 1 | 0 | 0 | 0 | 0 | 0 | 250 | 0 | 2 | 0 | 321 | 0.78 |
| OB | 1 | 2 | 0 | 0 | 3 | 6 | 0 | 4 | 0 | 0 | 34 | 0 | 0 | 50 | 0.68 |
| AL | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 185 | 0 | 187 | 0.99 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 254 | 254 | 1 |
| Total | 797 | 327 | 1088 | 257 | 1090 | 178 | 82 | 226 | 61 | 312 | 50 | 188 | 254 | 4910 | |
| PA | 0.93 | 0.83 | 0.95 | 0.96 | 0.97 | 0.85 | 0.80 | 0.84 | 0.82 | 0.80 | 0.68 | 0.98 | 1 | OA = 0.92 | |
| kappa | 0.9 | 0.83 | 0.94 | 0.95 | 0.97 | 0.87 | 0.83 | 0.8 | 0.87 | 0.78 | 0.68 | 0.99 | 1 | kappa = 0.91 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 727 | 42 | 5 | 3 | 4 | 0 | 1 | 7 | 1 | 25 | 0 | 0 | 0 | 815 | 0.89 |
| OF | 20 | 244 | 0 | 0 | 0 | 2 | 0 | 13 | 0 | 6 | 5 | 0 | 0 | 290 | 0.84 |
| BW | 15 | 0 | 1037 | 3 | 0 | 0 | 0 | 0 | 3 | 35 | 0 | 1 | 0 | 1094 | 0.95 |
| BU | 0 | 0 | 1 | 248 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 | 257 | 0.96 |
| PB | 6 | 0 | 0 | 0 | 1074 | 0 | 26 | 9 | 0 | 2 | 7 | 0 | 0 | 1124 | 0.96 |
| CF | 0 | 3 | 0 | 0 | 0 | 159 | 0 | 16 | 0 | 0 | 12 | 0 | 0 | 190 | 0.84 |
| PN | 0 | 0 | 0 | 0 | 1 | 0 | 55 | 0 | 0 | 0 | 0 | 0 | 0 | 56 | 0.98 |
| MS | 9 | 28 | 0 | 0 | 10 | 17 | 0 | 179 | 0 | 1 | 5 | 0 | 0 | 249 | 0.72 |
| BL | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 | 0 | 52 | 0.94 |
| GL | 20 | 7 | 44 | 1 | 0 | 0 | 0 | 0 | 0 | 241 | 0 | 4 | 0 | 317 | 0.76 |
| OB | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 19 | 0 | 0 | 23 | 0.83 |
| AL | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 | 183 | 0 | 189 | 0.97 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 254 | 254 | 1 |
| Total | 797 | 327 | 1088 | 257 | 1090 | 178 | 82 | 226 | 61 | 312 | 50 | 188 | 254 | 4910 | |
| PA | 0.91 | 0.75 | 0.95 | 0.96 | 0.99 | 0.89 | 0.67 | 0.79 | 0.80 | 0.77 | 0.38 | 0.97 | 1 | OA = 0.91 | |
| kappa | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.85 | 0.68 | 0.74 | 0.85 | 0.73 | 0.40 | 0.96 | 1 | kappa = 0.89 | |

| Predicted \ Reference | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB | Total | UA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 727 | 42 | 5 | 3 | 4 | 0 | 1 | 7 | 1 | 25 | 0 | 0 | 0 | 815 | 0.89 |
| OF | 20 | 244 | 0 | 0 | 0 | 2 | 0 | 13 | 0 | 6 | 5 | 0 | 0 | 290 | 0.84 |
| BW | 15 | 0 | 1037 | 3 | 0 | 0 | 0 | 0 | 3 | 35 | 0 | 1 | 0 | 1094 | 0.95 |
| BU | 0 | 0 | 1 | 248 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 | 0 | 257 | 0.96 |
| PB | 6 | 0 | 0 | 0 | 1074 | 0 | 26 | 9 | 0 | 2 | 7 | 0 | 0 | 1124 | 0.96 |
| CF | 0 | 3 | 0 | 0 | 0 | 159 | 0 | 16 | 0 | 0 | 12 | 0 | 0 | 190 | 0.84 |
| PN | 0 | 0 | 0 | 0 | 1 | 0 | 55 | 0 | 0 | 0 | 0 | 0 | 0 | 56 | 0.98 |
| MS | 9 | 28 | 0 | 0 | 10 | 17 | 0 | 179 | 0 | 1 | 5 | 0 | 0 | 249 | 0.72 |
| BL | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 | 0 | 52 | 0.94 |
| GL | 20 | 7 | 44 | 1 | 0 | 0 | 0 | 0 | 0 | 241 | 0 | 4 | 0 | 317 | 0.76 |
| OB | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 19 | 0 | 0 | 23 | 0.83 |
| AL | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 | 183 | 0 | 189 | 0.97 |
| AB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 254 | 254 | 1 |
| Total | 797 | 327 | 1088 | 257 | 1090 | 178 | 82 | 226 | 61 | 312 | 50 | 188 | 254 | 4910 | |
| PA | 0.91 | 0.75 | 0.95 | 0.96 | 0.99 | 0.89 | 0.67 | 0.79 | 0.80 | 0.77 | 0.38 | 0.97 | 1 | OA = 0.91 | |
| kappa | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.85 | 0.68 | 0.74 | 0.85 | 0.73 | 0.40 | 0.96 | 1 | kappa = 0.89 | |
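The UA, PA and OA values in these confusion matrices follow directly from the counts (rows are predicted classes, columns are reference classes). The following minimal Python sketch recomputes them, together with the overall Cohen's kappa, for the first matrix in this appendix. How the per-class kappa row was obtained is not stated in this section; the one-vs-rest Cohen's kappa used below (each class against all others) is an assumption, although it reproduces the reported values for the classes checked (0.73 for OG, 0.55 for OF and 0.91 for BU in the first matrix). The function and variable names are illustrative.

```python
def accuracy_measures(cm):
    """Accuracy measures from a square confusion matrix.

    cm[i][j] counts samples predicted as class i whose reference class is j
    (rows = predicted, columns = reference, as in the tables above).
    """
    k = len(cm)
    n = sum(map(sum, cm))
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    diag = [cm[i][i] for i in range(k)]

    ua = [diag[i] / row_tot[i] for i in range(k)]  # user's accuracy
    pa = [diag[j] / col_tot[j] for j in range(k)]  # producer's accuracy
    oa = sum(diag) / n  # overall accuracy
    # Overall Cohen's kappa: chance agreement estimated from the marginals.
    pe = sum(r * c for r, c in zip(row_tot, col_tot)) / n ** 2
    kappa = (oa - pe) / (1 - pe)

    # Assumed per-class kappa: Cohen's kappa of each class's one-vs-rest 2x2 table.
    class_kappa = []
    for i in range(k):
        tp = diag[i]
        fp = row_tot[i] - tp
        fn = col_tot[i] - tp
        tn = n - tp - fp - fn
        num = 2 * (tp * tn - fp * fn)
        den = (tp + fp) * (fp + tn) + (tp + fn) * (fn + tn)
        class_kappa.append(num / den)
    return ua, pa, oa, kappa, class_kappa


CLASSES = ["OG", "OF", "BW", "BU", "PB", "CF", "PN", "MS", "BL", "GL", "OB", "AL", "AB"]
# First confusion matrix of this appendix (rows = predicted, columns = reference).
CM = [
    [617, 82, 23, 0, 10, 0, 1, 9, 0, 46, 0, 1, 0],
    [47, 178, 1, 0, 1, 8, 2, 30, 0, 5, 14, 4, 0],
    [39, 0, 1012, 10, 0, 0, 0, 0, 4, 92, 0, 1, 0],
    [4, 2, 2, 225, 0, 0, 0, 1, 9, 1, 0, 1, 0],
    [33, 2, 0, 0, 1034, 3, 47, 26, 0, 0, 11, 0, 0],
    [1, 16, 0, 0, 2, 164, 0, 35, 0, 0, 10, 0, 0],
    [3, 0, 0, 0, 13, 0, 32, 10, 0, 0, 2, 0, 0],
    [5, 29, 0, 0, 3, 12, 5, 119, 0, 0, 6, 0, 0],
    [0, 0, 0, 9, 0, 0, 0, 0, 41, 0, 0, 0, 0],
    [51, 16, 26, 1, 0, 2, 0, 0, 0, 177, 0, 7, 0],
    [1, 0, 0, 0, 2, 5, 0, 3, 0, 0, 13, 1, 0],
    [0, 2, 0, 1, 0, 0, 0, 1, 0, 10, 2, 167, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 267],
]

ua, pa, oa, kappa, class_kappa = accuracy_measures(CM)
print(f"OA = {oa:.2f}, kappa = {kappa:.2f}")  # OA = 0.82, kappa = 0.79
for name, k_i in zip(CLASSES, class_kappa):
    print(f"{name}: kappa = {k_i:.2f}")
```

The same function can be applied to any of the other matrices by replacing `CM`; the overall kappa uses the marginal totals of the whole matrix, while the per-class values collapse the matrix to one class against the rest.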

References

- Shen, H.; Lin, Y.; Tian, Q.; Xu, K.; Jiao, J. A comparison of multiple classifier combinations using different voting-weights for remote sensing image classification. Int. J. Remote Sens. 2018, 39, 3705–3722. [Google Scholar] [CrossRef]

- Maulik, U.; Chakraborty, D. Remote Sensing Image Classification: A survey of support-vector-machine-based advanced techniques. IEEE Geosci. Remote Sens. Mag. 2017, 5, 33–52. [Google Scholar] [CrossRef]

- Fathizad, H.; Hakimzadeh Ardakani, M.A.; Mehrjardi, R.T.; Sodaiezadeh, H. Evaluating desertification using remote sensing technique and object-oriented classification algorithm in the Iranian central desert. J. Afr. Earth Sci. 2018, 145, 115–130. [Google Scholar] [CrossRef]

- Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72. [Google Scholar] [CrossRef] [Green Version]

- Chen, K.S.; Tzeng, Y.C.; Chen, C.F.; Kao, W.L.; Ni, C.L. Classification of multispectral imagery using dynamic learning neural network. In Proceedings of the IGARSS ’93—IEEE International Geoscience and Remote Sensing Symposium, Tokyo, Japan, 18–21 August 2013; IEEE: Piscataway, NJ, USA, 1994; pp. 896–898. [Google Scholar]

- Lawrence, R. Classification of remotely sensed imagery using stochastic gradient boosting as a refinement of classification tree analysis. Remote Sens. Environ. 2004, 90, 331–336. [Google Scholar] [CrossRef]

- Sohn, Y.; Rebello, N.S. Supervised and unsupervised spectral angle classifiers. Photogramm. Eng. Remote Sens. 2002, 68, 1271–1280. [Google Scholar]

- Strahler, A.H. The use of prior probabilities in maximum likelihood classification of remotely sensed data. Remote Sens. Environ. 1980, 10, 135–163. [Google Scholar] [CrossRef]

- Foody, G.M.; Mathur, A. A relative evaluation of multiclass image classification by support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1335–1343. [Google Scholar] [CrossRef] [Green Version]

- Collins, M.J.; Dymond, C.; Johnson, E.A. Mapping subalpine forest types using networks of nearest neighbour classifiers. Int. J. Remote Sens. 2004, 25, 1701–1721. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817. [Google Scholar] [CrossRef] [Green Version]

- Qian, Y.; Zhou, W.; Yan, J.; Li, W.; Han, L. Comparing Machine Learning Classifiers for Object-Based Land Cover Classification Using Very High Resolution Imagery. Remote Sens. 2014, 7, 153–168. [Google Scholar] [CrossRef]

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A.J. Advanced Spectral Classifiers for Hyperspectral Images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32. [Google Scholar] [CrossRef] [Green Version]

- Ballanti, L.; Blesius, L.; Hines, E.; Kruse, B. Tree Species Classification Using Hyperspectral Imagery: A Comparison of Two Classifiers. Remote Sens. 2016, 8, 445. [Google Scholar] [CrossRef] [Green Version]

- Seetha, M.; Muralikrishna, I.V.; Deekshatulu, B.L.; Malleswari, B.L.; Hegde, P. Artificial Neural Networks and Other Methods of Image Classification. Theor. Appl. Inf. Technol. 2008, 4, 1039–1053. [Google Scholar]

- Thanh Noi, P.; Kappas, M. Comparison of Random Forest, k-Nearest Neighbor, and Support Vector Machine Classifiers for Land Cover Classification Using Sentinel-2 Imagery. Sensors 2017, 18, 18. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Huang, C.; Davis, L.S.; Townshend, J.R.G. An assessment of support vector machines for land cover classification. Int. J. Remote Sens. 2002, 23, 725–749. [Google Scholar] [CrossRef]

- Lapini, A.; Pettinato, S.; Santi, E.; Paloscia, S.; Fontanelli, G.; Garzelli, A. Comparison of Machine Learning Methods Applied to SAR Images for Forest Classification in Mediterranean Areas. Remote Sens. 2020, 12, 369. [Google Scholar] [CrossRef] [Green Version]

- Ge, G.; Shi, Z.; Zhu, Y.; Yang, X.; Hao, Y. Land use/cover classification in an arid desert-oasis mosaic landscape of China using remote sensed imagery: Performance assessment of four machine learning algorithms. Glob. Ecol. Conserv. 2020, 22, e00971. [Google Scholar] [CrossRef]

- Dang, V.-H.; Hoang, N.-D.; Nguyen, L.-M.-D.; Bui, D.T.; Samui, P. A Novel GIS-Based Random Forest Machine Algorithm for the Spatial Prediction of Shallow Landslide Susceptibility. Forests 2020, 11, 118. [Google Scholar] [CrossRef] [Green Version]

- Abdi, A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GISci. Remote Sens. 2020, 57, 1–20. [Google Scholar] [CrossRef] [Green Version]

- Tonbul, H.; Colkesen, I.; Kavzoglu, T. Classification of poplar trees with object-based ensemble learning algorithms using Sentinel-2A imagery. J. Geod. Sci. 2020, 10, 14–22. [Google Scholar] [CrossRef]

- Langley, S.K.; Cheshire, H.M.; Humes, K.S. A comparison of single date and multitemporal satellite image classifications in a semi-arid grassland. J. Arid Environ. 2001, 49, 401–411. [Google Scholar] [CrossRef]

- Yuan, F.; Sawaya, K.E.; Loeffelholz, B.C.; Bauer, M.E. Land cover classification and change analysis of the Twin Cities (Minnesota) Metropolitan Area by multitemporal Landsat remote sensing. Remote Sens. Environ. 2005, 98, 317–328. [Google Scholar] [CrossRef]

- Hütt, C.; Koppe, W.; Miao, Y.; Bareth, G. Best Accuracy Land Use/Land Cover (LULC) Classification to Derive Crop Types Using Multitemporal, Multisensor, and Multi-Polarization SAR Satellite Images. Remote Sens. 2016, 8, 684. [Google Scholar] [CrossRef] [Green Version]

- Eisavi, V.; Homayouni, S.; Yazdi, A.M.; Alimohammadi, A. Land cover mapping based on random forest classification of multitemporal spectral and thermal images. Environ. Monit. Assess. 2015, 187, 291. [Google Scholar] [CrossRef] [PubMed]

- Tigges, J.; Lakes, T.; Hostert, P. Urban vegetation classification: Benefits of multitemporal RapidEye satellite data. Remote Sens. Environ. 2013, 136, 66–75. [Google Scholar] [CrossRef]

- Alcantara, C.; Kuemmerle, T.; Prishchepov, A.V.; Radeloff, V.C. Mapping abandoned agriculture with multi-temporal MODIS satellite data. Remote Sens. Environ. 2012, 124, 334–347. [Google Scholar] [CrossRef]

- Key, T. A Comparison of Multispectral and Multitemporal Information in High Spatial Resolution Imagery for Classification of Individual Tree Species in a Temperate Hardwood Forest. Remote Sens. Environ. 2001, 75, 100–112. [Google Scholar] [CrossRef]

- Kamusoko, C. Image Classification. In Remote Sensing Image Classification in R; Springer: Singapore, 2019; pp. 81–153. [Google Scholar]

- Rujoiu-Mare, M.-R.; Olariu, B.; Mihai, B.-A.; Nistor, C.; Săvulescu, I. Land cover classification in Romanian Carpathians and Subcarpathians using multi-date Sentinel-2 remote sensing imagery. Eur. J. Remote Sens. 2017, 50, 496–508. [Google Scholar] [CrossRef] [Green Version]

- Sharma, A.; Liu, X.; Yang, X. Land cover classification from multi-temporal, multi-spectral remotely sensed imagery using patch-based recurrent neural networks. Neural Netw. 2018, 105, 346–355. [Google Scholar] [CrossRef] [Green Version]

- Pal, M.; Mather, P.M. A comparison of decision tree and backpropagation neural network classifiers for land use classification. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Toronto, ON, Canada, 24–28 June 2002. [Google Scholar]

- Rogan, J.; Franklin, J.; Stow, D.; Miller, J.; Woodcock, C.; Roberts, D. Mapping land-cover modifications over large areas: A comparison of machine learning algorithms. Remote Sens. Environ. 2008, 112, 2272–2283. [Google Scholar] [CrossRef]

- Pal, M.; Mather, P.M. Support vector machines for classification in remote sensing. Int. J. Remote Sens. 2005, 26, 1007–1011. [Google Scholar] [CrossRef]

- Du, P.; Xia, J.; Zhang, W.; Tan, K.; Liu, Y.; Liu, S. Multiple Classifier System for Remote Sensing Image Classification: A Review. Sensors 2012, 12, 4764–4792. [Google Scholar] [CrossRef]

- Briem, G.J.; Benediktsson, J.A.; Sveinsson, J.R. Multiple classifiers applied to multisource remote sensing data. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2291–2299. [Google Scholar] [CrossRef] [Green Version]

- Aguilar, R.; Zurita-Milla, R.; Izquierdo-Verdiguier, E.; de By, R.A. A Cloud-Based Multi-Temporal Ensemble Classifier to Map Smallholder Farming Systems. Remote Sens. 2018, 10, 729. [Google Scholar] [CrossRef] [Green Version]

- Foody, G.M.; Boyd, D.S.; Sanchez-Hernandez, C. Mapping a specific class with an ensemble of classifiers. Int. J. Remote Sens. 2007, 28, 1733–1746. [Google Scholar] [CrossRef]

- Lei, G.; Li, A.; Bian, J.; Yan, H.; Zhang, L.; Zhang, Z.; Nan, X. OIC-MCE: A Practical Land Cover Mapping Approach for Limited Samples Based on Multiple Classifier Ensemble and Iterative Classification. Remote Sens. 2020, 12, 987. [Google Scholar] [CrossRef] [Green Version]

- Amani, M.; Salehi, B.; Mahdavi, S.; Brisco, B.; Shehata, M. A Multiple Classifier System to improve mapping complex land covers: A case study of wetland classification using SAR data in Newfoundland, Canada. Int. J. Remote Sens. 2018, 39, 7370–7383. [Google Scholar] [CrossRef]

- Doan, H.T.X.; Foody, G.M. Increasing soft classification accuracy through the use of an ensemble of classifiers. Int. J. Remote Sens. 2007, 28, 4609–4623. [Google Scholar] [CrossRef]

- Giacinto, G.; Roli, F. Ensembles of Neural Networks for Soft Classification of Remote Sensing Images. In Proceedings of the European Symposium on Intelligent Techniques, European Network for Fuzzy Logic and Uncertainty Modelling in Information Technology, Bari, Italy, 20–21 March 1997; pp. 166–170. [Google Scholar]

- Drucker, H.; Cortes, C.; Jackel, L.D.; LeCun, Y.; Vapnik, V. Boosting and Other Ensemble Methods. Neural Comput. 1994, 6, 1289–1301. [Google Scholar] [CrossRef]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef] [Green Version]

- Pal, M. Ensemble of support vector machines for land cover classification. Int. J. Remote Sens. 2008, 29, 3043–3049. [Google Scholar] [CrossRef]

- Pal, M. Ensemble Learning with Decision Tree for Remote Sensing Classification. World Acad. Sci. Eng. Technol. 2007, 36, 258–260. [Google Scholar]

- Battiti, R.; Colla, A.M. Democracy in neural nets: Voting schemes for classification. Neural Netw. 1994, 7, 691–707. [Google Scholar] [CrossRef]

- Oza, N.C.; Tumer, K. Classifier ensembles: Select real-world applications. Inf. Fusion 2008, 9, 4–20. [Google Scholar] [CrossRef]

- Puletti, N.; Chianucci, F.; Castaldi, C. Use of Sentinel-2 for forest classification in Mediterranean environments. Ann. Silvic. Res. 2018, 42, 32–38. [Google Scholar]

- Chatziantoniou, A.; Psomiadis, E.; Petropoulos, G. Co-Orbital Sentinel 1 and 2 for LULC Mapping with Emphasis on Wetlands in a Mediterranean Setting Based on Machine Learning. Remote Sens. 2017, 9, 1259. [Google Scholar] [CrossRef] [Green Version]

- Henderson, M.; Kalabokidis, K.; Marmaras, E.; Konstantinidis, P.; Marangudakis, M. Fire and society: A comparative analysis of wildfire in Greece and the United States. Hum. Ecol. Rev. 2005, 12, 169–182. [Google Scholar]

- ESA Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/ (accessed on 10 February 2020).

- Hill, R.A.; Wilson, A.K.; George, M.; Hinsley, S.A. Mapping tree species in temperate deciduous woodland using time-series multi-spectral data. Appl. Veg. Sci. 2010, 13, 86–99. [Google Scholar] [CrossRef]

- Guirado, E.; Tabik, S.; Alcaraz-Segura, D.; Cabello, J.; Herrera, F. Deep-learning Versus OBIA for Scattered Shrub Detection with Google Earth Imagery: Ziziphus lotus as Case Study. Remote Sens. 2017, 9, 1220. [Google Scholar] [CrossRef] [Green Version]

- Li, Q.; Qiu, C.; Ma, L.; Schmitt, M.; Zhu, X.X. Mapping the Land Cover of Africa at 10 m Resolution from Multi-Source Remote Sensing Data with Google Earth Engine. Remote Sens. 2020, 12, 602. [Google Scholar] [CrossRef] [Green Version]

- Bwangoy, J.-R.B.; Hansen, M.C.; Roy, D.P.; De Grandi, G.; Justice, C.O. Wetland mapping in the Congo Basin using optical and radar remotely sensed data and derived topographical indices. Remote Sens. Environ. 2010, 114, 73–86. [Google Scholar] [CrossRef]

- Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870. [Google Scholar] [CrossRef]

- Friedl, M.A.; Brodley, C.E. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409. [Google Scholar] [CrossRef]

- Almuallim, H. An efficient algorithm for optimal pruning of decision trees. Artif. Intell. 1996, 83, 347–362. [Google Scholar] [CrossRef] [Green Version]

- Aylmer Fisher FRS, R. Summary for Policymakers. In Climate Change 2013—The Physical Science Basis; Intergovernmental Panel on Climate Change, Ed.; Cambridge University Press: Cambridge, UK, 1936; pp. 1–30. ISBN 9788578110796. [Google Scholar]

- Bandos, T.V.; Bruzzone, L.; Camps-Valls, G. Classification of Hyperspectral Images with Regularized Linear Discriminant Analysis. IEEE Trans. Geosci. Remote Sens. 2009, 47, 862–873. [Google Scholar] [CrossRef]

- Feret, J.-B.; Asner, G.P. Tree Species Discrimination in Tropical Forests Using Airborne Imaging Spectroscopy. IEEE Trans. Geosci. Remote Sens. 2013, 51, 73–84. [Google Scholar] [CrossRef]

- Clark, M.; Roberts, D.; Clark, D. Hyperspectral discrimination of tropical rain forest tree species at leaf to crown scales. Remote Sens. Environ. 2005, 96, 375–398.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Kavzoglu, T.; Colkesen, I. A kernel functions analysis for support vector machines for land cover classification. Int. J. Appl. Earth Obs. Geoinf. 2009, 11, 352–359.

- Franco-Lopez, H.; Ek, A.R.; Bauer, M.E. Estimation and mapping of forest stand density, volume, and cover type using the k-nearest neighbors method. Remote Sens. Environ. 2001, 77, 251–274.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Mas, J.F.; Flores, J.J. The application of artificial neural networks to the analysis of remotely sensed data. Int. J. Remote Sens. 2008, 29, 617–663.

- Atkinson, P.M.; Tatnall, A.R.L. Introduction Neural networks in remote sensing. Int. J. Remote Sens. 1997, 18, 699–709.

- Yuan, H.; Van Der Wiele, C.; Khorram, S. An Automated Artificial Neural Network System for Land Use/Land Cover Classification from Landsat TM Imagery. Remote Sens. 2009, 1, 243–265.

- Kavzoglu, T.; Mather, P.M. The use of backpropagating artificial neural networks in land cover classification. Int. J. Remote Sens. 2003, 24, 4907–4938.

- Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and Segmentation of Satellite Orthoimagery Using Convolutional Neural Networks. Remote Sens. 2016, 8, 329.

- Pires de Lima, R.; Marfurt, K. Convolutional Neural Network for Remote-Sensing Scene Classification: Transfer Learning Analysis. Remote Sens. 2019, 12, 86.

- Hoeser, T.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part I: Evolution and Recent Trends. Remote Sens. 2020, 12, 1667.

- Zuo, Z.; Shuai, B.; Wang, G.; Liu, X.; Wang, X.; Wang, B.; Chen, Y. Convolutional recurrent neural networks: Learning spatial dependencies for image representation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 18–26.

- Zhao, W.; Du, S. Spectral–Spatial Feature Extraction for Hyperspectral Image Classification: A Dimension Reduction and Deep Learning Approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554.

- Lv, X.; Ming, D.; Chen, Y.; Wang, M. Very high resolution remote sensing image classification with SEEDS-CNN and scale effect analysis for superpixel CNN classification. Int. J. Remote Sens. 2019, 40, 506–531.

- Kuncheva, L.I.; Whitaker, C.J. Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 2003, 51, 181–207.

- Petrakos, M.; Atli Benediktsson, J.; Kanellopoulos, I. The effect of classifier agreement on the accuracy of the combined classifier in decision level fusion. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2539–2546.

- Dietterich, T.G. An Experimental Comparison of Three Methods for Constructing Ensembles of Decision Trees: Bagging, Boosting, and Randomization. Mach. Learn. 2000, 40, 139–157.

- Edwards, A.L. Note on the “correction for continuity” in testing the significance of the difference between correlated proportions. Psychometrika 1948, 13, 185–187.

- Kavzoglu, T. Object-Oriented Random Forest for High Resolution Land Cover Mapping Using Quickbird-2 Imagery. In Handbook of Neural Computation; Academic Press: London, UK, 2017; ISBN 9780128113196.

- Environmental Systems Research Institute. ESRI ArcGIS Desktop: Release 10; Environmental Systems Research Institute: Redlands, CA, USA, 2013.

- The MathWorks Inc. MATLAB; The MathWorks Inc.: Natick, MA, USA, 2018. Available online: https://www.Mathworks.com/Products/Matlab (accessed on 10 February 2020).

- R Development Core Team. R: A Language and Environment for Statistical Computing; R Development Core Team: Vienna, Austria, 2017.

- Wickham, H.; Francois, R. The Dplyr Package; R Core Team: Vienna, Austria, 2016.

- Bache, S.M.; Wickham, H. Package ‘magrittr’: A Forward-Pipe Operator for R. Available online: https://CRAN.R-project.org/package=magrittr (accessed on 10 February 2020).

- Kuhn, M. Building Predictive Models in R Using the caret Package. J. Stat. Softw. 2008, 28, 1–26.

- Gidudu, A.; Hulley, G.; Marwala, T. Image classification using SVMs: One-against-One vs. One-against-All. In Proceedings of the 28th Asian Conference on Remote Sensing 2007, ACRS 2007, Kuala Lumpur, Malaysia, 12–16 November 2007.

- Daengduang, S.; Vateekul, P. Enhancing accuracy of multi-label classification by applying one-vs-one support vector machine. In Proceedings of the 2016 13th International Joint Conference on Computer Science and Software Engineering (JCSSE), Khon Kaen, Thailand, 13–15 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Marquardt, D.W. An Algorithm for Least-Squares Estimation of Nonlinear Parameters. J. Soc. Ind. Appl. Math. 1963, 11, 431–441.

- Møller, M.F. A scaled conjugate gradient algorithm for fast supervised learning. Neural Netw. 1993, 6, 525–533.

- Shang, X.; Chisholm, L.A. Classification of Australian Native Forest Species Using Hyperspectral Remote Sensing and Machine-Learning Classification Algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2481–2489.

- Pirotti, F.; Sunar, F.; Piragnolo, M. Benchmark of machine learning methods for classification of a sentinel-2 image. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B7, 335–340.

- Salas, E.A.L.; Subburayalu, S.K.; Slater, B.; Zhao, K.; Bhattacharya, B.; Tripathy, R.; Das, A.; Nigam, R.; Dave, R.; Parekh, P. Mapping crop types in fragmented arable landscapes using AVIRIS-NG imagery and limited field data. Int. J. Image Data Fusion 2020, 11, 33–56.

- Gauquelin, T.; Michon, G.; Joffre, R.; Duponnois, R.; Génin, D.; Fady, B.; Bou Dagher-Kharrat, M.; Derridj, A.; Slimani, S.; Badri, W.; et al. Mediterranean forests, land use and climate change: A social-ecological perspective. Reg. Environ. Chang. 2018, 18, 623–636.

- Vasilakos, C.; Kalabokidis, K.; Hatzopoulos, J.; Kallos, G.; Matsinos, Y. Integrating new methods and tools in fire danger rating. Int. J. Wildl. Fire 2007, 16, 306.

- Vasilakos, C.; Kalabokidis, K.; Hatzopoulos, J.; Matsinos, I. Identifying wildland fire ignition factors through sensitivity analysis of a neural network. Nat. Hazards 2009, 50, 125–143.

- Bajocco, S.; De Angelis, A.; Perini, L.; Ferrara, A.; Salvati, L. The impact of Land Use/Land Cover Changes on land degradation dynamics: A Mediterranean case study. Environ. Manag. 2012, 49, 980–989.

- Otero, I.; Marull, J.; Tello, E.; Diana, G.L.; Pons, M.; Coll, F.; Boada, M. Land abandonment, landscape, and biodiversity: Questioning the restorative character of the forest transition in the Mediterranean. Ecol. Soc. 2015.

- Song, C.; Pons, A.; Yen, K. Sieve: An Ensemble Algorithm Using Global Consensus for Binary Classification. AI 2020, 1, 16.

| Band | Central Wavelength (nm) | Bandwidth (nm) | Spatial Resolution (m) |
|---|---|---|---|
| Band 2 (Blue) | 490 | 65 | 10 |
| Band 3 (Green) | 560 | 35 | 10 |
| Band 4 (Red) | 665 | 30 | 10 |
| Band 5 (Vegetation red edge) | 705 | 15 | 20 |
| Band 6 (Vegetation red edge) | 740 | 15 | 20 |
| Band 7 (Vegetation red edge) | 783 | 20 | 20 |
| Band 8 (Near infrared) | 842 | 115 | 10 |
| Band 8A (Narrow near infrared) | 865 | 20 | 20 |
| Band 11 (Shortwave infrared) | 1610 | 90 | 20 |
| Band 12 (Shortwave infrared) | 2190 | 180 | 20 |

| Class | Number of Polygons | Training Samples | Testing Samples | Total Samples |
|---|---|---|---|---|
| Olive grove | 342 | 3203 | 797 | 4000 |
| Oak forest | 72 | 1309 | 327 | 1636 |
| Brushwood | 73 | 4254 | 1088 | 5342 |
| Built up | 107 | 984 | 257 | 1241 |
| Pinus brutia | 113 | 4257 | 1090 | 5347 |
| Chestnut forest | 31 | 778 | 178 | 956 |
| Pinus nigra | 20 | 349 | 82 | 431 |
| Maquis-type shrubland | 107 | 936 | 226 | 1162 |
| Barren land | 44 | 212 | 61 | 273 |
| Grassland | 127 | 1327 | 312 | 1639 |
| Other broadleaves | 8 | 234 | 50 | 284 |
| Agricultural land | 54 | 729 | 188 | 917 |
| Aquatic bodies | 21 | 1070 | 254 | 1324 |
| Total | 1119 | 19642 | 4910 | 24552 |
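The sample counts in the class table split each class into training and testing sets of roughly 80% and 20%. The short sketch below simply re-enters those counts (the dictionary name `samples` is illustrative, not from the paper), checks that every row and the totals are internally consistent, and reports the testing share per class.

```python
# Counts copied from the class table: class -> (training, testing, total)
samples = {
    "Olive grove": (3203, 797, 4000),
    "Oak forest": (1309, 327, 1636),
    "Brushwood": (4254, 1088, 5342),
    "Built up": (984, 257, 1241),
    "Pinus brutia": (4257, 1090, 5347),
    "Chestnut forest": (778, 178, 956),
    "Pinus nigra": (349, 82, 431),
    "Maquis-type shrubland": (936, 226, 1162),
    "Barren land": (212, 61, 273),
    "Grassland": (1327, 312, 1639),
    "Other broadleaves": (234, 50, 284),
    "Agricultural land": (729, 188, 917),
    "Aquatic bodies": (1070, 254, 1324),
}

# Every row should satisfy training + testing == total
for name, (train, test, total) in samples.items():
    assert train + test == total, name

n_train = sum(tr for tr, _, _ in samples.values())
n_test = sum(te for _, te, _ in samples.values())
test_fraction = {name: te / tot for name, (_, te, tot) in samples.items()}

print(n_train, n_test, n_train + n_test)                      # 19642 4910 24552
print(f"overall test share: {n_test / (n_train + n_test):.3f}")  # overall test share: 0.200
lo, hi = min(test_fraction.values()), max(test_fraction.values())
print(f"per-class range: {lo:.3f}-{hi:.3f}")                  # per-class range: 0.176-0.223
```

The overall testing share is 20.0%, while individual classes range from about 17.6% (other broadleaves) to 22.3% (barren land), so the split is close to, but not exactly, 80/20 within each class.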

| | Ci correct (1) | Ci wrong (0) |
|---|---|---|
| Ck correct (1) | N11 | N10 |
| Ck wrong (0) | N01 | N00 |
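The counts N11, N10, N01 and N00 are the inputs to the pairwise diversity measures described by Kuncheva and Whitaker. The sketch below builds the counts from two label vectors and a reference vector, then computes four common measures: the Q statistic, the correlation coefficient ρ, the disagreement measure and the double-fault measure. Which of these correspond to panels (a) to (d) of the diversity table is not stated in this section and is only an assumption; the function names and toy labels are hypothetical.

```python
import math

def oracle_counts(pred_k, pred_i, truth):
    """Return (N11, N10, N01, N00) for classifiers C_k and C_i.

    First digit: is C_k correct? Second digit: is C_i correct?
    (follows the row/column layout of the contingency table above)
    """
    counts = {"11": 0, "10": 0, "01": 0, "00": 0}
    for k_label, i_label, ref in zip(pred_k, pred_i, truth):
        counts[f"{int(k_label == ref)}{int(i_label == ref)}"] += 1
    return counts["11"], counts["10"], counts["01"], counts["00"]

def diversity(n11, n10, n01, n00):
    """Pairwise diversity measures; all four are symmetric in N10 and N01."""
    n = n11 + n10 + n01 + n00
    num = n11 * n00 - n01 * n10
    q_den = n11 * n00 + n01 * n10
    rho_den = math.sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    return {
        "Q": num / q_den if q_den else 0.0,        # Yule's Q (0.0 if undefined: an assumption)
        "rho": num / rho_den if rho_den else 0.0,  # correlation coefficient (same convention)
        "disagreement": (n01 + n10) / n,           # share where exactly one classifier is right
        "double_fault": n00 / n,                   # share where both classifiers are wrong
    }

# Toy example: 10 hypothetical test pixels, three classes
truth  = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2]
pred_k = [0, 0, 0, 1, 1, 1, 0, 2, 2, 2]
pred_i = [0, 0, 1, 0, 1, 1, 1, 0, 2, 1]
counts = oracle_counts(pred_k, pred_i, truth)
print(counts)              # (5, 2, 2, 1)
print(diversity(*counts))  # Q = 1/9, rho = 1/21, disagreement = 0.4, double_fault = 0.1
```

Because the measures are symmetric in N10 and N01, the choice of which classifier indexes the rows does not change the results. Lower Q, ρ and double fault, and higher disagreement, indicate a more diverse pair.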

| Class | DT UA | DT PA | DIS UA | DIS PA | SVM UA | SVM PA | KNN UA | KNN PA | RF UA | RF PA | ANN UA | ANN PA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OG | 0.77 | 0.78 | 0.84 | 0.87 | 0.93 | 0.92 | 0.84 | 0.89 | 0.90 | 0.85 | 0.91 | 0.88 |
| OF | 0.54 | 0.61 | 0.52 | 0.72 | 0.79 | 0.85 | 0.77 | 0.71 | 0.72 | 0.81 | 0.71 | 0.72 |
| BW | 0.95 | 0.87 | 0.91 | 0.91 | 0.97 | 0.96 | 0.98 | 0.92 | 0.97 | 0.93 | 0.96 | 0.94 |
| BU | 0.91 | 0.92 | 0.86 | 0.98 | 0.98 | 0.97 | 0.90 | 0.96 | 0.98 | 0.92 | 0.93 | 0.93 |
| PB | 0.97 | 0.89 | 0.99 | 0.88 | 0.98 | 0.98 | 0.98 | 0.94 | 0.98 | 0.95 | 0.97 | 0.93 |
| CF | 0.85 | 0.72 | 0.85 | 0.75 | 0.89 | 0.90 | 0.83 | 0.82 | 0.89 | 0.83 | 0.85 | 0.80 |
| PN | 0.37 | 0.53 | 0.46 | 0.73 | 0.83 | 0.82 | 0.67 | 0.76 | 0.66 | 0.88 | 0.57 | 0.79 |
| MS | 0.51 | 0.66 | 0.65 | 0.67 | 0.79 | 0.75 | 0.67 | 0.70 | 0.72 | 0.73 | 0.62 | 0.70 |
| BL | 0.76 | 0.82 | 0.85 | 0.65 | 0.87 | 0.96 | 0.81 | 0.86 | 0.72 | 0.97 | 0.82 | 0.85 |
| GL | 0.53 | 0.63 | 0.79 | 0.66 | 0.83 | 0.84 | 0.75 | 0.78 | 0.71 | 0.82 | 0.75 | 0.80 |
| OB | 0.22 | 0.52 | 0.19 | 0.46 | 0.64 | 0.76 | 0.21 | 0.92 | 0.34 | 0.91 | 0.30 | 0.53 |
| AL | 0.92 | 0.91 | 0.90 | 0.97 | 0.95 | 0.98 | 0.93 | 0.97 | 0.93 | 0.94 | 0.93 | 0.93 |
| AB | 1 | 1 | 1 | 1 | 1 | 1 | 0.99 | 1 | 1 | 1 | 1 | 1 |

Overall accuracy: DT 0.82, DIS 0.85, SVM 0.93, KNN 0.89, RF 0.90, ANN 0.89.

UA, user's accuracy; PA, producer's accuracy. Class codes: OG, olive grove; OF, oak forest; BW, brushwood; BU, built up; PB, Pinus brutia; CF, chestnut forest; PN, Pinus nigra; MS, maquis-type shrubland; BL, barren land; GL, grassland; OB, other broadleaves; AL, agricultural land; AB, aquatic bodies.
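User's accuracy (UA) and producer's accuracy (PA) come from a confusion matrix of the test set: UA divides each diagonal count by its row (map) total and PA by its column (reference) total. The sketch below assumes rows are classified labels and columns are reference labels; it also computes overall accuracy, Cohen's kappa, and the per-class F1-score (the harmonic mean of UA and PA) that some of the voting rules rely on. The function name and the 3 × 3 matrix are hypothetical and not data from this study.

```python
def accuracy_report(cm):
    """cm[i][j]: test pixels classified as class i whose reference class is j.

    Assumed layout: rows = classified (map), columns = reference.
    Returns per-class UA, PA, F1, plus overall accuracy and Cohen's kappa.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    row_tot = [sum(cm[i]) for i in range(k)]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]

    ua = [d / r if r else 0.0 for d, r in zip(diag, row_tot)]  # user's accuracy
    pa = [d / c if c else 0.0 for d, c in zip(diag, col_tot)]  # producer's accuracy
    f1 = [2 * u * p / (u + p) if u + p else 0.0 for u, p in zip(ua, pa)]

    oa = sum(diag) / total
    # Expected chance agreement from the row and column marginals
    pe = sum(r * c for r, c in zip(row_tot, col_tot)) / total ** 2
    kappa = (oa - pe) / (1 - pe) if pe < 1 else 0.0
    return {"UA": ua, "PA": pa, "F1": f1, "OA": oa, "kappa": kappa}

# Hypothetical 3-class confusion matrix (not from the paper)
cm = [[50, 5, 0],
      [10, 40, 5],
      [0, 5, 45]]
report = accuracy_report(cm)
print(report["OA"], round(report["kappa"], 4))  # 0.84375 0.7654
```

Note that libraries differ in orientation (some place reference labels on the rows), so UA and PA swap if the matrix is transposed; the F1-score computed here equals 2·TP/(2·TP + FP + FN).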

| | OG | OF | BW | BU | PB | CF | PN | MS | BL | GL | OB | AL | AB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DT | 0.72 | 0.61 | 0.88 | 0.90 | 0.93 | 0.81 | 0.40 | 0.59 | 0.77 | 0.54 | 0.32 | 0.91 | 1.00 |
| DIS | 0.84 | 0.59 | 0.88 | 0.91 | 0.91 | 0.75 | 0.51 | 0.64 | 0.75 | 0.62 | 0.05 | 0.93 | 1.00 |
| SVM | 0.90 | 0.83 | 0.94 | 0.95 | 0.97 | 0.87 | 0.83 | 0.80 | 0.87 | 0.78 | 0.68 | 0.99 | 1.00 |
| KNN | 0.84 | 0.74 | 0.91 | 0.89 | 0.95 | 0.82 | 0.72 | 0.70 | 0.78 | 0.71 | 0.35 | 0.98 | 1.00 |
| RF | 0.84 | 0.75 | 0.93 | 0.94 | 0.96 | 0.84 | 0.73 | 0.72 | 0.84 | 0.72 | 0.54 | 0.96 | 1.00 |
| ANN | 0.86 | 0.71 | 0.92 | 0.94 | 0.95 | 0.82 | 0.53 | 0.69 | 0.86 | 0.70 | 0.33 | 0.95 | 1.00 |
| Mode | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.85 | 0.68 | 0.74 | 0.85 | 0.73 | 0.40 | 0.96 | 1.00 |
| MaxK | 0.82 | 0.74 | 0.91 | 0.94 | 0.90 | 0.80 | 0.42 | 0.69 | 0.75 | 0.58 | 0.00 | 0.93 | 1.00 |
| GSK | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.84 | 0.68 | 0.74 | 0.85 | 0.73 | 0.30 | 0.96 | 1.00 |
| GMK | 0.79 | 0.64 | 0.90 | 0.93 | 0.90 | 0.75 | 0.42 | 0.68 | 0.72 | 0.58 | 0.04 | 0.93 | 1.00 |
| GWSK | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.85 | 0.68 | 0.74 | 0.85 | 0.73 | 0.40 | 0.96 | 1.00 |
| GMF1 | 0.78 | 0.63 | 0.89 | 0.89 | 0.90 | 0.75 | 0.40 | 0.68 | 0.72 | 0.54 | 0.04 | 0.93 | 1.00 |
| GSF1 | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.84 | 0.68 | 0.74 | 0.85 | 0.73 | 0.30 | 0.96 | 1.00 |
| GMMCC | 0.79 | 0.64 | 0.90 | 0.93 | 0.90 | 0.75 | 0.42 | 0.68 | 0.73 | 0.59 | 0.04 | 0.93 | 1.00 |
| GSMCC | 0.87 | 0.76 | 0.93 | 0.96 | 0.95 | 0.84 | 0.68 | 0.74 | 0.85 | 0.73 | 0.35 | 0.96 | 1.00 |
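The ensemble rows above (Mode, MaxK, GSK, GMK, GWSK, GMF1, GSF1, GMMCC, GSMCC) apply the voting rules of Section 2.2.3 to the six base-classifier suggestions for each pixel. The sketch below implements the kappa-based rules for a single pixel under two assumptions: each classifier carries one precomputed score (kappa here; passing F1 or MCC scores instead gives the F1 and MCC variants), and the "weighted sum" is the group's score sum multiplied by its frequency, which is one reading of the description. The function names and scores are hypothetical.

```python
from collections import defaultdict

def group_scores(votes, scores):
    """Group identical suggestions: label -> (frequency, sum of scores, mean score)."""
    groups = defaultdict(list)
    for label, s in zip(votes, scores):
        groups[label].append(s)
    return {lab: (len(v), sum(v), sum(v) / len(v)) for lab, v in groups.items()}

def vote(votes, scores, rule):
    """Combine one pixel's suggestions from several classifiers."""
    g = group_scores(votes, scores)
    if rule == "mode":          # most frequent label; ties broken by larger score sum
        return max(g, key=lambda lab: (g[lab][0], g[lab][1]))
    if rule == "max":           # label of the single best-scoring classifier
        return max(zip(votes, scores), key=lambda pair: pair[1])[0]
    if rule == "sum":           # greater sum of scores per group
        return max(g, key=lambda lab: g[lab][1])
    if rule == "mean":          # greater mean score per group
        return max(g, key=lambda lab: g[lab][2])
    if rule == "weighted_sum":  # assumption: score sum multiplied by group frequency
        return max(g, key=lambda lab: g[lab][1] * g[lab][0])
    raise ValueError(f"unknown rule: {rule}")

# One pixel, six classifiers (DT, DIS, SVM, KNN, RF, ANN); kappa values are made up
votes = ["PN", "PN", "PB", "PB", "MS", "PN"]
kappas = [0.70, 0.75, 0.95, 0.85, 0.80, 0.72]
for rule in ["mode", "max", "sum", "mean", "weighted_sum"]:
    print(rule, vote(votes, kappas, rule))
# mode PN / max PB / sum PN / mean PB / weighted_sum PN
```

In this toy case the max and mean rules follow the single strongest classifier, while the frequency- and sum-based rules follow the larger group of agreeing classifiers.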

(a)

| | DT | DIS | SVM | KNN | RF |
|---|---|---|---|---|---|
| DIS | 0.523 | - | - | - | - |
| SVM | 0.356 | 0.367 | - | - | - |
| KNN | 0.540 | 0.483 | 0.477 | - | - |
| RF | 0.583 | 0.515 | 0.509 | 0.671 | - |
| ANN | 0.511 | 0.602 | 0.493 | 0.532 | 0.574 |

(b)

| | DT | DIS | SVM | KNN | RF |
|---|---|---|---|---|---|
| DIS | 0.895 | - | - | - | - |
| SVM | 0.870 | 0.871 | - | - | - |
| KNN | 0.930 | 0.896 | 0.924 | - | - |
| RF | 0.962 | 0.926 | 0.935 | 0.971 | - |
| ANN | 0.915 | 0.951 | 0.931 | 0.922 | 0.943 |

(c)

| | DT | DIS | SVM | KNN | RF |
|---|---|---|---|---|---|
| DIS | 0.130 | - | - | - | - |
| SVM | 0.142 | 0.132 | - | - | - |
| KNN | 0.113 | 0.122 | 0.092 | - | - |
| RF | 0.099 | 0.110 | 0.081 | 0.064 | - |
| ANN | 0.120 | 0.094 | 0.089 | 0.096 | 0.083 |

(d)

| | DT | DIS | SVM | KNN | RF |
|---|---|---|---|---|---|
| DIS | 0.097 | - | - | - | - |
| SVM | 0.052 | 0.050 | - | - | - |
| KNN | 0.086 | 0.075 | 0.051 | - | - |
| RF | 0.087 | 0.074 | 0.050 | 0.078 | - |
| ANN | 0.083 | 0.089 | 0.052 | 0.068 | 0.068 |

| | MaxK | GSK | GMK | GWSK | GMF1 | GSF1 | GMMCC | GSMCC |
|---|---|---|---|---|---|---|---|---|
| Mode | 13.483 | 0 | 1.028 | 0 | 1.125 | 0 | 1.936 | 0 |
| MaxK | - | 13.483 | 36.860 | 13.483 | 36.423 | 14.095 | 40.830 | 13.483 |
| GSK | - | - | 1.028 | 0 | 1.125 | 0 | 1.954 | 0 |
| GMK | - | - | - | 1.028 | 0 | 0.921 | 3.063 | 1.028 |
| GWSK | - | - | - | - | 1.125 | 0 | 1.954 | 0 |
| GMF1 | - | - | - | - | - | 1.028 | 1.895 | 1.125 |
| GSF1 | - | - | - | - | - | - | 1.787 | 0 |
| GMMCC | - | - | - | - | - | - | - | 1.936 |
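The pairwise values in the last table appear to be McNemar chi-square statistics comparing ensemble outputs on the same test pixels, which would be consistent with the reference list citing Edwards' continuity correction; that reading is an assumption. The sketch computes the corrected statistic χ² = (|b − c| − 1)² / (b + c), where b and c count pixels that only one of the two maps classifies correctly, and an upper-tail p-value from the chi-square distribution with one degree of freedom (critical value about 3.84 at the 5% level). Function names and toy data are hypothetical.

```python
import math

def mcnemar_chi2(pred_a, pred_b, truth, continuity=True):
    """McNemar statistic for two classifications of the same reference samples."""
    b = sum(1 for x, y, t in zip(pred_a, pred_b, truth) if x == t and y != t)  # only A correct
    c = sum(1 for x, y, t in zip(pred_a, pred_b, truth) if x != t and y == t)  # only B correct
    if b + c == 0:
        return 0.0  # assumption: no discordant samples -> report no difference
    diff = abs(b - c) - 1 if continuity else abs(b - c)  # Edwards' correction subtracts 1
    return diff ** 2 / (b + c)

def chi2_1df_pvalue(stat):
    """Upper-tail p-value of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))

truth  = [0] * 10
pred_a = [0] * 8 + [1] * 2   # correct on 8 of 10 hypothetical pixels
pred_b = [0] * 3 + [1] * 7   # correct on 3 of 10
stat = mcnemar_chi2(pred_a, pred_b, truth)
print(stat, round(chi2_1df_pvalue(stat), 3))  # 3.2 0.074
```

With the correction, the statistic is 0 both when the two maps have no discordant pixels and when |b − c| = 1, so a value of 0 does not by itself mean the two maps are identical.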

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vasilakos, C.; Kavroudakis, D.; Georganta, A. Machine Learning Classification Ensemble of Multitemporal Sentinel-2 Images: The Case of a Mixed Mediterranean Ecosystem. Remote Sens. 2020, 12, 2005. https://doi.org/10.3390/rs12122005
