1. Introduction

Image registration aims to align two or more images that have overlapping scenes and are captured by the same or different sensors at different times or from different viewpoints, which is a basic and essential step of remote sensing image processing. The accuracy of registration has a considerable influence on subsequent processing, such as image fusion, image retrieval, object recognition, and change detection. However, high-accuracy remote sensing image registration still faces many difficulties and challenges, especially for urban areas.

Urban scenes, which are often used for urban planning, environmental assessment, and change detection, are widely studied in the field of remote sensing. High-accuracy registration is required to achieve good processing results. Urban scenes contain many man-made objects, such as roads, buildings, and airports. Salient features can be extracted easily, but the localization of features is substantially affected by image variations. Natural disasters, such as earthquakes and floods, may greatly damage the contours of objects or even global geometric structures. With the wide application of high-resolution satellite remote sensing, rich details of images, such as shadows, twigs, and road signs, introduce interference to registration. In addition, the positions of tall buildings are sensitive to viewpoint changes. These issues bring challenges for high-accuracy registration. Therefore, designing a registration algorithm that is robust against background variations according to the characteristics of satellite remote sensing images of urban areas is of great significance.

Most registration methods are feature-based. These methods extract local features from images, such as point features and line features, and then use their neighborhood information or global structure to design matching strategies. The main steps of a feature-based registration method are feature extraction, feature description, feature matching, and estimation of the transformation model.

Point features are the most widely used. The most representative method is scale-invariant feature transform (SIFT) [1], and many improved methods are based on it, such as SURF [2], PCA-SIFT [3], ASIFT [4], UR-SIFT [5], and SAR-SIFT [6]. However, many outliers are obtained after matching with local feature descriptors. Thus, new methods adopt the graph-based matching strategy using spatial relations of matches, instead of traditional methods, such as RANSAC [7]. For example, Aguilar et al. [8] proposed a K-nearest neighbors (KNN)-based algorithm named graph transformation matching to construct the local adjacent structure of features. Liu et al. [9] proposed restricted spatial-order constraints, which use local structure and global information to eliminate outliers in each iteration. Zhang et al. [10] combined KNN and triangle area representation (TAR) [11] on the basis of an affine property for descriptor calculation; the resulting method is insensitive to background variations. Shi et al. [12] used shape context as global structure constraint and TAR for spatial consistency measurement after SIFT feature extraction. The recovery and filtering vertex trichotomy matching [13] algorithm filters outliers and retains inliers by designing a vertex trichotomy descriptor that is based on the geometric relations between any of the vertices and lines; this algorithm can remove nearly all outliers and is faster than RANSAC.
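The affine property that TAR-style spatial constraints rely on can be checked numerically: an affine map scales every signed triangle area by the determinant of its linear part, so ratios of triangle areas are unchanged. The sketch below is only an illustration of that property, not the descriptor of any cited method; the key points, the matrix `m`, and the translation `t` are made-up values.

```python
def signed_area(p, q, r):
    """Signed area of triangle pqr (positive when counter-clockwise)."""
    return 0.5 * ((q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1]))


def apply_affine(m, t, p):
    """Map point p with the 2x2 matrix m followed by the translation t."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + t[0],
            m[1][0] * p[0] + m[1][1] * p[1] + t[1])


# Hypothetical key points and affine model, chosen only for illustration.
points = [(0.0, 0.0), (4.0, 0.0), (1.0, 3.0), (5.0, 4.0)]
m, t = [[1.2, 0.3], [-0.2, 0.9]], (10.0, -5.0)
warped = [apply_affine(m, t, p) for p in points]

det = m[0][0] * m[1][1] - m[0][1] * m[1][0]

# A single area is scaled by det(m) ...
area_before = signed_area(*points[:3])
area_after = signed_area(*warped[:3])

# ... so the ratio of two triangle areas is preserved.
ratio_before = signed_area(*points[:3]) / signed_area(*points[1:])
ratio_after = signed_area(*warped[:3]) / signed_area(*warped[1:])

print(area_after / area_before, det)
print(ratio_before, ratio_after)
```

A correct set of matches therefore keeps these ratios (and the signs of the areas) consistent between the two images, whereas a mismatched point generally does not, which is what makes area-based measures usable for spatial consistency checks.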

Given the characteristics of remote sensing images in urban areas, line features are more semantic and constrained in spatial structures compared with point features. Methods based on line features usually extract edges and then fit line segments. For example, line segment detector (LSD) [14] and edge drawing lines (EDLines) [15] are increasingly used in remote sensing image registration. On the basis of line segments, feature description is constructed, and matching strategies are designed with their spatial relations. Wang et al. [16] extracted EDLines and designed the mean–standard deviation line descriptor (MSLD), but without scale invariance. The line band descriptor (LBD) proposed by Zhang et al. [17] combines the local appearance and geometric properties of line segments and achieves a more stable performance compared with MSLD. Shi et al. [18] proposed a novel line segment descriptor with a new histogram binning strategy; this descriptor is robust to global geometric distortions. On the basis of LSD detection, Yammine et al. [19] designed a neighboring lines descriptor for map registration without texture information. Long et al. [20] introduced a Gaussian mixture model and the expectation–maximization algorithm into line segment registration; only spatial relations between line segments are used to realize high-accuracy matching. Zhao et al. [21] proposed a multimodality robust line segment descriptor that is based on LSD; this descriptor extracts line support region by calculating phase consistency and direction. Other methods design new line extraction strategies to describe shape contours, such as the improved level line descriptors [22] and the optimum number of well-distributed ground control information selection [23].

For images with background variations caused by reconstruction or disasters, line segments can be broken, and the locations of endpoints will be inconsistent, thereby rendering accurate transformation model estimation difficult. Therefore, some methods combine line segments and point features for registration. For example, Fan et al. [24] proposed a line matching method leveraged by point correspondences (LP); this method uses an affine invariant derived from one line and two coplanar points to calculate the similarity of two line segments. Zhao et al. [25] implemented iterative line support region segmentation as a geometric constraint for SIFT matching. Meanwhile, line intersections are another kind of point feature; they can be obtained conveniently, and their locations are less sensitive to background variations. Sui et al. [26] extracted LSD line segments and utilized their intersections for Voronoi integrated spectral point matching. Li et al. [27] built the line-junction-line (LJL) structure with two line segments and their intersecting junction for constructing descriptors and designing matching strategies. The registration with line segments and their intersections (RLI) algorithm, which was proposed by Lyu et al. [28], selects triplets of intersections of matching lines to estimate the affine transformation iteratively; this algorithm has good robustness.
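The claim that intersections are less sensitive than endpoints to broken line segments follows from the fact that an intersection depends only on the supporting lines, not on where each segment happens to end. The following is a minimal sketch of that idea; `line_intersection` and the building-edge coordinates are hypothetical and are not taken from any of the cited methods.

```python
def line_intersection(seg_a, seg_b, eps=1e-12):
    """Intersect the infinite lines that support two segments.

    Each segment is ((x1, y1), (x2, y2)). Returns None when the
    supporting lines are (nearly) parallel.
    """
    (x1, y1), (x2, y2) = seg_a
    (x3, y3), (x4, y4) = seg_b
    dax, day = x2 - x1, y2 - y1
    dbx, dby = x4 - x3, y4 - y3
    denom = dax * dby - day * dbx  # cross product of the two directions
    if abs(denom) < eps:
        return None
    # Parameter s along seg_a at which the two supporting lines meet.
    s = ((x3 - x1) * dby - (y3 - y1) * dbx) / denom
    return (x1 + s * dax, y1 + s * day)


# Two edges of a hypothetical building corner, detected in full ...
edge_a_full = ((0.0, 0.0), (10.0, 0.0))
edge_b_full = ((12.0, -2.0), (12.0, 8.0))
# ... and the same edges after occlusion shortens both segments.
edge_a_broken = ((0.0, 0.0), (6.0, 0.0))
edge_b_broken = ((12.0, 3.0), (12.0, 8.0))

corner_full = line_intersection(edge_a_full, edge_b_full)
corner_broken = line_intersection(edge_a_broken, edge_b_broken)
print(corner_full, corner_broken)
```

In this toy case the endpoints move by several pixels while both calls return the same corner, because the truncated segments still lie on the same supporting lines. In real images the fitted line direction also changes slightly when a segment is shortened, so the intersection is more stable than the endpoints rather than perfectly fixed.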

With the development of deep learning, deep learning-based methods have emerged in recent years [29,30,31]. MatchNet, proposed by Han et al. [29], is a typical architecture, which consists of a deep convolutional network that extracts features from patches and a network of three fully connected layers that computes a similarity between the extracted features. In the field of remote sensing, He et al. [32] proposed a Siamese convolutional neural network for multiscale patch comparison, which is combined with the S-Harris corner detector to improve the matching performance for remote sensing images with complex background variations. Yang et al. [33] generated robust multi-scale feature descriptors utilizing high-level convolutional information while preserving some localization capabilities, and designed a gradually increasing selection of inliers for registration. Deep learning-based descriptors can be robust to large background variations, but they have relatively low localization accuracy and need traditional strategies to improve registration performance.

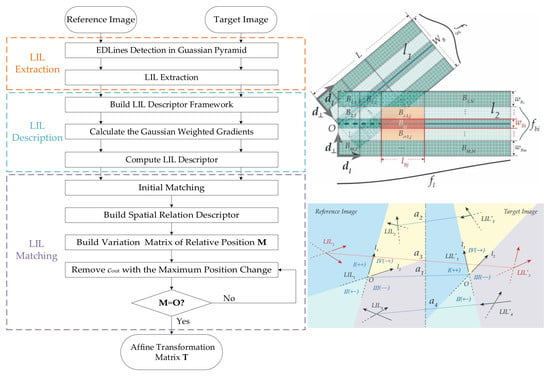

Considering the rich line features in satellite remote sensing images of urban areas and the fact that intersections are more stably located than endpoints, this paper proposes a high-accuracy remote sensing image registration algorithm that is based on the line-intersection-line (LIL) structure, which consists of two lines and their intersection. First, multi-scale line segments are detected in a Gaussian pyramid, and constraints are set to filter line pairs and compute intersections, so that scale-invariant and accurately located LIL structures are extracted. Second, a new LIL local descriptor is constructed by using pixel gradients in the two line support regions and is used for initial matching. Then, a graph-based LIL outlier removal method is applied using the LIL structures and changes in the geometric relations between matches. A variation matrix of relative position is built with a spatial relation descriptor based on affine properties and graph theory. Outliers are eliminated successively until the matrix becomes a zero matrix. Finally, a high-accuracy affine transformation is estimated with the inliers.
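The successive elimination of outliers until the variation matrix is zero can be illustrated with a strongly simplified stand-in. The sketch below is not the spatial relation descriptor of the proposed algorithm: it assumes that each match carries an intersection and the direction of only one of its lines, that the transformation is an orientation-preserving affine map, and that "relative position" means the side of a directed line on which another intersection lies. The greedy removal rule, the function names, and all coordinates are assumptions made for illustration.

```python
def side(origin, direction, point):
    """Sign of the cross product: side of a directed line on which point lies."""
    cross = (direction[0] * (point[1] - origin[1])
             - direction[1] * (point[0] - origin[0]))
    return (cross > 0) - (cross < 0)


def variation_matrix(matches):
    """V[i][j] = 1 when match j changes side relative to the line of match i."""
    n = len(matches)
    v = [[0] * n for _ in range(n)]
    for i, (p_i, d_i, q_i, e_i) in enumerate(matches):
        for j, (p_j, _, q_j, _) in enumerate(matches):
            if i != j and side(p_i, d_i, p_j) != side(q_i, e_i, q_j):
                v[i][j] = 1
    return v


def remove_outliers(matches):
    """Greedily drop the most inconsistent match until the matrix is all zeros."""
    kept = list(range(len(matches)))
    while True:
        v = variation_matrix([matches[k] for k in kept])
        scores = [sum(v[i]) + sum(row[i] for row in v) for i in range(len(kept))]
        if not scores or max(scores) == 0:
            return kept
        kept.pop(scores.index(max(scores)))


def warp(vec, offset=(0.0, 0.0)):
    """Hypothetical ground-truth affine map (positive determinant)."""
    return (1.0 * vec[0] + 0.2 * vec[1] + offset[0],
            -0.1 * vec[0] + 1.1 * vec[1] + offset[1])


shift = (5.0, 3.0)
# (intersection, line direction) pairs in the reference image; made-up values.
structures = [((0.0, 0.0), (1.0, 0.3)), ((10.0, 1.0), (0.2, 1.0)),
              ((9.0, 11.0), (-1.0, 0.1)), ((-1.0, 8.0), (0.1, -1.0)),
              ((4.0, 5.0), (1.0, 1.0))]
matches = [(p, d, warp(p, shift), warp(d)) for p, d in structures]
# One false match: the intersection (5, 2) is paired with a wrong location.
matches.append(((5.0, 2.0), (0.0, 1.0), warp((20.0, -10.0), shift), warp((0.0, 1.0))))

inlier_ids = remove_outliers(matches)
print(inlier_ids)
```

Under an affine map with positive determinant the sign of every cross product is preserved, so correct matches never disagree with each other and the matrix restricted to them is zero. A false match typically conflicts with several others, which is why removing the highest-scoring row and column first is a plausible heuristic here.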

The main contribution of this study is the design of a double-rectangular local descriptor and a spatial relationship-based outlier removal strategy on the basis of the LIL structure. Compared with similar methods, the LIL descriptor is finer and therefore more resistant to large background variations, and the outlier removal strategy is simpler and more effective, making full use of the features' structure and adjacency relations with simple affine properties.

In our experiments, the proposed algorithm achieves sub-pixel registration accuracy and high precision, and is robust to scale, rotation, illumination, occlusion, and even large background variations before and after disasters.

4. Discussion

The proposed LIL-based registration algorithm for satellite remote sensing images performs well on simulated and real images with urban scenes.

On images with simulated transformations, the LIL structure shows strong scale and rotation invariance. Compared with the algorithms based on line matching, i.e., LP and MSLD, the intersections used by the proposed algorithm are more robust to edge fragmentation and occlusion, and their positioning accuracy is higher. The proposed algorithm can resist large scale, rotation, and illumination variations as well as occlusion, achieving registration with sub-pixel accuracy and high precision on general images. In addition, the matching results show that the LIL local descriptor has higher discriminability than the SIFT descriptor.

For real images with large background variations, the average registration error and precision of the proposed algorithm are 0.78 and 99.1%, respectively. By contrast, SIFT, LP and MSLD, which use only local gradient information and local structural constraints, have relatively low registration accuracy or are even unable to register images with large global geographic structure variations. The robustness of RLI is poor. RLI iteratively selects triplets of intersections of matching lines for registration, so the performance of intersection matching depends on line matching. For images with small variations, high-accuracy registration can be achieved. However, for images with large background variations, if the matches used for initial model estimation have a large registration error, then the iterative refinement of the transformation model easily diverges. LJL performs second best among all methods, verifying the stability of the LJL structure, but the LJL descriptor is weak in resisting large radiation variations, as in image pair 4. In addition, although LJL can detect numerous correct matches, it has high computational complexity and is time consuming in description and matching. Each LJL constructed in the original images is adjusted to all images in the pyramids and is described there, whereas the proposed algorithm describes each LIL only once. The LJL match propagation also needs more calculation steps, including local homography estimation and individual line segment matching, whereas the LIL outlier removal only uses simple affine properties and propagates once. For example, on a 2.8 GHz Intel(R) Core(TM) processor with 16 GB of RAM, LJL takes approximately 2 h to register a pair of remote sensing images with complex textures containing about 5000 and 4000 LJLs, whereas LIL takes around 1 min 10 s, which is a great reduction in computational cost.
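The sensitivity of a triplet-based estimate to errors in its initial matches can be seen in a small numerical experiment. The sketch below solves the six affine parameters exactly from three correspondences with Cramer's rule and then measures the root-mean-square error (RMSE) on check points. It is a generic illustration, not the RLI procedure; the ground-truth model, the point coordinates, and the 3-pixel perturbation are made-up values.

```python
import math


def affine_from_triplet(src, dst):
    """Solve the six affine parameters exactly from three correspondences."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")
    rows = []
    for k in range(2):  # k = 0 solves the x' row, k = 1 the y' row
        u1, u2, u3 = dst[0][k], dst[1][k], dst[2][k]
        a = (u1 * (y2 - y3) - y1 * (u2 - u3) + (u2 * y3 - u3 * y2)) / det
        b = (x1 * (u2 - u3) - u1 * (x2 - x3) + (x2 * u3 - x3 * u2)) / det
        c = (x1 * (y2 * u3 - y3 * u2) - y1 * (x2 * u3 - x3 * u2)
             + u1 * (x2 * y3 - x3 * y2)) / det
        rows.append((a, b, c))
    return rows


def rmse(model, src, dst):
    """Root-mean-square residual of the model over all correspondences."""
    (a, b, c), (d, e, f) = model
    total = 0.0
    for (x, y), (u, v) in zip(src, dst):
        total += (a * x + b * y + c - u) ** 2 + (d * x + e * y + f - v) ** 2
    return math.sqrt(total / len(src))


def truth(p):
    """Hypothetical ground-truth transformation used to generate test data."""
    return (0.9 * p[0] - 0.1 * p[1] + 20.0, 0.1 * p[0] + 0.9 * p[1] - 5.0)


check_src = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0), (50.0, 20.0)]
check_dst = [truth(p) for p in check_src]

triplet_src = check_src[:3]
clean_dst = check_dst[:3]
# Same triplet, but the second match is displaced by 3 pixels in x.
noisy_dst = [clean_dst[0], (clean_dst[1][0] + 3.0, clean_dst[1][1]), clean_dst[2]]

rmse_clean = rmse(affine_from_triplet(triplet_src, clean_dst), check_src, check_dst)
rmse_noisy = rmse(affine_from_triplet(triplet_src, noisy_dst), check_src, check_dst)
print(rmse_clean, rmse_noisy)
```

Because three correspondences determine the model with no redundancy, a localization error in a single match is carried directly into the parameters, and in this toy setup it pushes the RMSE on the check points above one pixel. Any refinement that starts from such a model begins with a biased estimate.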

The reasons for the excellent performance of the proposed algorithm are as follows: (1) The LIL structure describes the contours of objects in the image well, and intersections reduce the impact of broken line segments and are positioned more accurately under large background variations; (2) The LIL local feature description utilizes the neighborhood information of two lines and their intersection, and the division of the descriptor region is more detailed than that of LBD and RLI. Besides, unlike traditional circular descriptors such as SIFT and LJL, the double-rectangular descriptor contains more structural information; (3) The LIL outlier removal strategy, which uses the LIL structure and the relative position changes between LILs, can eliminate nearly all false matches that do not conform to the spatial relations, even when the matching set contains many stubborn outliers. The proposed algorithm performs well on most remote sensing images of urban areas.

The proposed algorithm still has some limitations. (1) Intersections tend to be unevenly distributed because they follow the distribution of line segments. When they cluster in some areas, many similar points at close positions make matching difficult; (2) The division and dimension of the descriptor are not optimal. The width and length of blocks are not adaptive; instead, they depend on empirical values. Moreover, the division of the line support region along the direction of the line segment leads to excessive descriptor dimensions and increases computational complexity; (3) The proposed algorithm has many false matches in the initial matching set and relies too much on the LIL outlier removal algorithm. Future work includes optimizing the structure and parameters of the descriptor to retain more significant features.