Quantifying Multiscale Habitat Structural Complexity: A Cost-Effective Framework for Underwater 3D Modelling

Abstract

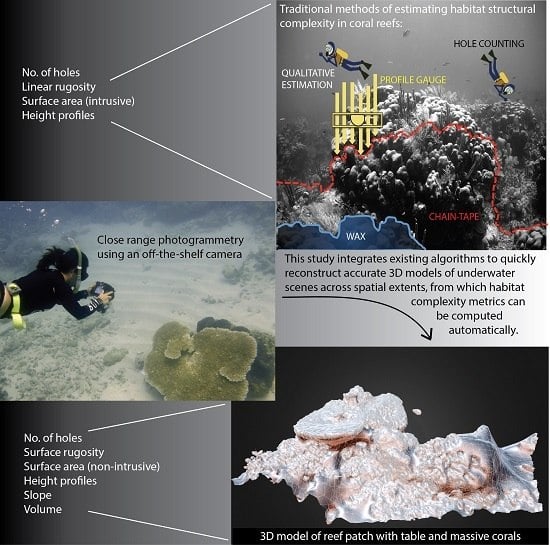

1. Introduction

- (i) It works for images recorded in moderately turbid waters with non-uniform lighting.

- (ii) It does not assume scene rigidity; moving features are automatically detected and extracted from the scene. If a moving object appears in more than a few frames, the reconstructed scene will contain occluded regions.

- (iii) It accommodates large datasets and allows structural complexity to be investigated at multiple extents and resolutions (mm² to km²).

- (iv) It allows non-intrusive in situ data acquisition and can be applied to historical datasets.

- (v) It enables deployment from multiple imaging platforms.

- (vi) It can obtain measurement accuracies <1 mm, provided that at least one landmark of known size is present to extract scale information. Note that resolution decreases as the reconstruction is performed over a larger area.
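The scale-extraction idea in point (vi) can be illustrated with a minimal sketch. It assumes that the reconstruction is in arbitrary model units and that the two endpoints of a landmark of known length have been identified in the model. The function names (`scale_factor`, `apply_scale`) and all coordinates are hypothetical and chosen only for illustration; this is not the authors' implementation.

```python
import math

def scale_factor(p_a, p_b, known_length_mm):
    """Return the factor converting model units to millimetres.

    p_a, p_b: 3D endpoints of the landmark in model coordinates.
    known_length_mm: real-world length of the landmark.
    """
    model_length = math.dist(p_a, p_b)
    if model_length == 0:
        raise ValueError("landmark endpoints coincide; scale is undefined")
    return known_length_mm / model_length

def apply_scale(points, s):
    """Uniformly scale every 3D point by s."""
    return [tuple(s * c for c in p) for p in points]

# Hypothetical landmark: 5 model units long, known to be 100 mm in reality.
landmark_a = (0.0, 0.0, 0.0)
landmark_b = (0.0, 3.0, 4.0)
s = scale_factor(landmark_a, landmark_b, 100.0)

# Hypothetical reconstructed points in model units.
cloud = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
scaled = apply_scale(cloud, s)

# Lengths scale by s, surface areas by s**2 and volumes by s**3.
model_area = 2.0                 # hypothetical surface area in model units squared
area_mm2 = model_area * s ** 2
```

Because the scaling is uniform, a single landmark is enough to fix the scale in this sketch. Any error in locating the landmark endpoints propagates linearly to lengths and at higher order to areas and volumes.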

2. Materials and Methods

2.1. Data Acquisition

2.1.1. Calibrated Data: Coral Colony

2.1.2. Uncalibrated Data: Reef Area and Reef Transect

2.2. Image Processing

2.3. Reconstruction Overview

2.3.1. Structure-from-Motion (SfM)

2.3.2. Depth of Field-of-View

2.3.3. Implicit Surface Reconstruction

2.4. Model Reconstruction and Validation

2.4.1. Branching Coral Colony 3D Model

2.4.2. Reef Area and Reef Transect 3D Model

Reef Area Validation Data

Reef Area Underwater 3D Model Reconstruction

Reef Area Underwater 3D Model Validation

Reef Transect Underwater 3D Model Reconstruction

Reef Transect Underwater 3D Model Validation

3. Results

3.1. Branching Coral: Laser-Scanned Model vs. Underwater 3D Model

3.2. Reef Area: In Situ Metrics vs. Underwater 3D Model Metrics

3.3. Reef Transect: In Situ Metrics vs. Underwater 3D Model Metrics

4. Discussion

4.1. Methodological Accuracy and Validation

4.2. Advantages of This Framework

4.3. Limitations and Further Improvements of This Framework

4.4. Ecological Applications

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ferrari, R.; McKinnon, D.; He, H.; Smith, R.N.; Corke, P.; González-Rivero, M.; Mumby, P.J.; Upcroft, B. Quantifying Multiscale Habitat Structural Complexity: A Cost-Effective Framework for Underwater 3D Modelling. Remote Sens. 2016, 8, 113. https://doi.org/10.3390/rs8020113