An Improved Convolution Neural Network-Based Model for Classifying Foliage and Woody Components from Terrestrial Laser Scanning Data

Abstract

1. Introduction

- (1) Develop a CNN-based model that separates foliage and woody components by combining the 3-D geometric and LRI information recorded in TLS data.

- (2) Investigate the time efficiency and classification accuracy of the proposed model for foliage and woody components in coniferous and broadleaf plots.

- (3) Explore how LRI correction and the optimization of hyper-parameters (learning rate, batch size, and validation split rate) affect the final classification results, and assess the applicability of the proposed classification model to data from mixed forest stands.
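The hyper-parameter exploration named in objective (3) can be illustrated with a minimal random-sampling sketch. This is not the authors' procedure: the search ranges, the function names `sample_config` and `random_search`, and the `evaluate` callback (standing in for a train-and-validate run of the model) are all assumptions introduced for illustration.

```python
import math
import random

# Hypothetical search ranges; the values actually explored are not stated here.
SEARCH_SPACE = {
    "learning_rate": (1e-4, 1e-1),      # sampled log-uniformly
    "batch_size": [32, 64, 128, 256],   # sampled from discrete choices
    "validation_split": (0.1, 0.4),     # sampled uniformly
}


def sample_config(rng):
    """Draw one hyper-parameter set from SEARCH_SPACE."""
    lr_lo, lr_hi = SEARCH_SPACE["learning_rate"]
    vs_lo, vs_hi = SEARCH_SPACE["validation_split"]
    return {
        "learning_rate": 10 ** rng.uniform(math.log10(lr_lo), math.log10(lr_hi)),
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
        "validation_split": rng.uniform(vs_lo, vs_hi),
    }


def random_search(evaluate, n_trials, seed=0):
    """Return the sampled configuration with the highest score.

    `evaluate` is a placeholder for training the classifier with the given
    configuration and returning a validation score (higher is better).
    """
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


if __name__ == "__main__":
    # Toy objective for demonstration only: prefers learning rates near 0.01.
    toy = lambda cfg: -abs(math.log10(cfg["learning_rate"]) + 2.0)
    print(random_search(toy, n_trials=50))
```

The learning rate is sampled on a log scale because plausible values span several orders of magnitude; the other two parameters are sampled on their natural scales.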

2. Materials and Methods

2.1. Study Sites

2.2. TLS Data

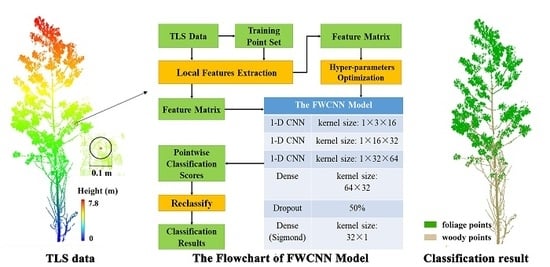

2.3. A CNN-Based Foliage and Woody Separation Model (FWCNN)

2.3.1. The Architecture of FWCNN Model

2.3.2. Training Point Sets Selection

2.3.3. Point Feature Extraction

2.3.4. Hyper-Parameters Determination

2.4. Sensitivity Analysis

2.5. Accuracy Assessment

3. Results

3.1. Extracted Point Features

3.2. Determination of the Optimal Hyper-Parameter Set

3.3. Point-Wise Classification Results

3.4. The Results of Sensitivity Analysis

4. Discussion

4.1. Effects of Hyper-Parameter Selection

4.2. Factors Affecting Classification Accuracy

4.2.1. Searching Radius

4.2.2. Point Density

4.2.3. LRI Correction

4.2.4. Reclassify the Classification Scores

4.3. Comparisons with Other Classifiers Using LRI Information

4.4. Future Potential of the FWCNN

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Plots | Dimensions (m²) | Number of Trees | Height Mean (m) | Height Std (m) | DBH Mean (m) | DBH Std (m) | Tree Age (Years) | Canopy Cover (%) |
|---|---|---|---|---|---|---|---|---|
| PA | 15 × 15 | 22 | 10.25 | 1.81 | 0.09 | 0.03 | 8 | 57 |
| CP | 15 × 15 | 25 | 6.84 | 0.27 | 0.08 | 0.01 | 7 | 84 |
| PM | 10 × 10 | 54 | 9.72 | 0.74 | 0.11 | 0.02 | 15 | 72 |

| TLS Data | LRI | Classifiers | RT | Time (s) | W-T (Point#) | F-T (Point#) | OA (%) | WPA (%) | FPA (%) | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| PA | corrected | FWCNN | 0.49 | 137 | 816,066 | 639,869 | 98.64 | 98.79 | 98.46 | 0.97 |
| | | GMM | - | 33 | 808,562 | 631,871 | 97.59 | 97.88 | 97.23 | 0.95 |
| | | RF | - | 32 | 813,432 | 622,106 | 97.26 | 98.47 | 95.73 | 0.94 |
| | | SVM | - | 754 | 808,353 | 632,377 | 97.61 | 97.86 | 97.31 | 0.95 |
| | original | FWCNN | 0.49 | 135 | 813,562 | 637,298 | 98.30 | 98.49 | 98.06 | 0.97 |
| | | GMM | - | 31 | 796,810 | 629,770 | 96.66 | 96.46 | 96.91 | 0.93 |
| | | RF | - | 29 | 808,417 | 616,506 | 96.54 | 97.86 | 94.87 | 0.93 |
| | | SVM | - | 671 | 803,259 | 627,360 | 96.93 | 97.24 | 96.54 | 0.94 |
| | | LeWoS | - | - | 685,618 | 583,042 | 85.96 | 82.99 | 89.72 | 0.72 |
| | | LWCLF | - | - | 778,704 | 596,518 | 93.18 | 94.27 | 91.79 | 0.86 |
| CP | corrected | FWCNN | 0.5 | 54 | 239,251 | 639,109 | 96.20 | 90.08 | 98.71 | 0.91 |
| | | GMM | - | 19 | 245,037 | 624,667 | 95.25 | 92.26 | 96.48 | 0.89 |
| | | RF | - | 25 | 249,884 | 615,426 | 94.77 | 94.08 | 95.05 | 0.88 |
| | | SVM | - | 392 | 244,075 | 612,920 | 93.86 | 91.90 | 94.66 | 0.85 |
| | original | FWCNN | 0.49 | 53 | 250,730 | 617,133 | 95.05 | 94.40 | 95.31 | 0.88 |
| | | GMM | - | 24 | 234,537 | 614,167 | 92.95 | 88.31 | 94.85 | 0.83 |
| | | RF | - | 28 | 244,619 | 610,533 | 93.66 | 92.10 | 94.29 | 0.85 |
| | | SVM | - | 457 | 244,012 | 612,913 | 93.85 | 91.87 | 94.66 | 0.85 |
| | | LeWoS | - | - | 242,131 | 515,749 | 83.00 | 91.16 | 79.65 | 0.63 |
| | | LWCLF | - | - | 260,947 | 514,708 | 84.95 | 98.25 | 79.49 | 0.68 |
| PM | corrected | FWCNN | 0.53 | 156 | 557,925 | 332,661 | 94.98 | 98.25 | 89.96 | 0.89 |
| | | GMM | - | 42 | 474,793 | 337,737 | 86.65 | 83.61 | 91.33 | 0.73 |
| | | RF | - | 61 | 555,355 | 309,153 | 92.20 | 97.79 | 83.60 | 0.83 |
| | | SVM | - | 712 | 550,389 | 315,621 | 92.36 | 96.92 | 85.35 | 0.84 |
| | original | FWCNN | 0.51 | 152 | 555,381 | 320,991 | 93.46 | 97.80 | 86.80 | 0.86 |
| | | GMM | - | 34 | 457,293 | 320,237 | 82.92 | 80.53 | 86.60 | 0.65 |
| | | RF | - | 58 | 550,377 | 303,886 | 91.10 | 96.92 | 82.18 | 0.81 |
| | | SVM | - | 875 | 545,353 | 310,619 | 91.29 | 96.03 | 84.00 | 0.81 |
| | | LeWoS | - | - | 438,141 | 272,671 | 75.80 | 77.15 | 73.73 | 0.50 |
| | | LWCLF | - | - | 405,122 | 289,273 | 74.05 | 71.34 | 78.22 | 0.48 |
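The OA, per-class accuracy, and Kappa columns above can be related to a two-class confusion matrix. The sketch below shows the standard definitions of overall accuracy and Cohen's kappa for a wood/foliage problem. It assumes that W-T and F-T denote correctly classified woody and foliage points and that WPA and FPA are the corresponding per-class accuracies; the table itself does not define these abbreviations, and the function name, argument names, and example counts are made up for illustration.

```python
def accuracy_metrics(wood_as_wood, wood_as_foliage,
                     foliage_as_foliage, foliage_as_wood):
    """Overall accuracy, per-class accuracy, and Cohen's kappa (2 classes).

    Arguments are point counts from a confusion matrix, named
    <reference class>_as_<predicted class>.
    """
    ref_wood = wood_as_wood + wood_as_foliage            # reference woody points
    ref_foliage = foliage_as_foliage + foliage_as_wood   # reference foliage points
    if ref_wood == 0 or ref_foliage == 0:
        raise ValueError("both reference classes must contain points")
    total = ref_wood + ref_foliage

    pred_wood = wood_as_wood + foliage_as_wood           # points predicted woody
    pred_foliage = foliage_as_foliage + wood_as_foliage  # points predicted foliage

    # Observed agreement: share of all points classified correctly.
    oa = (wood_as_wood + foliage_as_foliage) / total
    # Agreement expected by chance from the row and column totals.
    pe = (ref_wood * pred_wood + ref_foliage * pred_foliage) / total ** 2
    kappa = (oa - pe) / (1 - pe)

    return {
        "OA": oa,
        "wood_accuracy": wood_as_wood / ref_wood,
        "foliage_accuracy": foliage_as_foliage / ref_foliage,
        "kappa": kappa,
    }


if __name__ == "__main__":
    # Illustrative counts only, not taken from the table.
    for name, value in accuracy_metrics(90, 10, 80, 20).items():
        print(f"{name}: {value:.2f}")
```

Kappa discounts the agreement expected by chance, which is why it falls faster than OA when one class dominates or when errors concentrate in a single class.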

| TLS Data | RT | W-T (Point#) | F-T (Point#) | OA (%) | WPA (%) | FPA (%) | Kappa |
|---|---|---|---|---|---|---|---|
| PA | 0.5 | 814,229 | 635,852 | 98.25 | 98.57 | 97.84 | 0.96 |
| | 0.49 | 816,066 | 639,869 | 98.64 | 98.79 | 98.46 | 0.97 |
| PM | 0.5 | 544,513 | 320,606 | 92.26 | 95.88 | 86.70 | 0.84 |
| | 0.53 | 557,925 | 332,661 | 94.98 | 98.25 | 89.96 | 0.89 |

| TLS Data | Training Set | W-T (Point#) | F-T (Point#) | OA (%) | WPA (%) | FPA (%) | Kappa |
|---|---|---|---|---|---|---|---|
| PA | MIX | 808,562 | 630,191 | 97.48 | 97.88 | 96.97 | 0.95 |
| | CP | 807,402 | 632,377 | 97.55 | 97.74 | 97.31 | 0.95 |
| | PM | 808,553 | 607,588 | 95.95 | 97.88 | 93.49 | 0.92 |
| CP | MIX | 252,495 | 576,751 | 90.82 | 95.07 | 89.08 | 0.79 |
| | PA | 253,708 | 557,155 | 88.81 | 95.52 | 86.05 | 0.75 |
| | PM | 257,096 | 512,303 | 84.26 | 96.80 | 79.12 | 0.67 |
| PM | MIX | 550,389 | 316,556 | 92.46 | 96.92 | 85.60 | 0.84 |
| | PA | 550,393 | 316,358 | 92.43 | 96.92 | 85.55 | 0.84 |
| | CP | 549,117 | 319,396 | 92.62 | 96.69 | 86.37 | 0.84 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, B.; Zheng, G.; Chen, Y. An Improved Convolution Neural Network-Based Model for Classifying Foliage and Woody Components from Terrestrial Laser Scanning Data. Remote Sens. 2020, 12, 1010. https://doi.org/10.3390/rs12061010
