Embodied Spatial Knowledge Acquisition in Immersive Virtual Reality: Comparison to Map Exploration

- 1Institute of Cognitive Science, University of Osnabrück, Osnabrück, Germany

- 2Department of Neurophysiology and Pathophysiology, University Medical Center Hamburg-Eppendorf, Hamburg, Germany

Investigating spatial knowledge acquisition in virtual environments allows studying different sources of information under controlled conditions. Therefore, we built a virtual environment in the style of a European village and investigated spatial knowledge acquisition by experience in the immersive virtual environment and compared it to using an interactive map of the same environment. The environment was well explored, with both exploration sources covering the whole village area. We tested knowledge of cardinal directions, building-to-building orientation, and judgment of direction between buildings in a pointing task. The judgment of directions was more accurate after exploration of the virtual environment than after map exploration. The opposite results were observed for knowledge of cardinal directions and relative orientation between buildings. Time for cognitive reasoning improved task accuracies after both exploration sources. Further, an alignment effect toward the north was only visible after map exploration. Taken together, our results suggest that the source of spatial exploration differentially influenced spatial knowledge acquisition.

Introduction

According to theories of embodied and enacted cognition, spatial navigation unfolds in the navigator’s interaction with the real-world surroundings (O’Regan and Noë, 2001; Engel et al., 2013). Bodily movement provides information about visual, motor, kinesthetic, and vestibular changes, which are essential for spatial cognition (Grant and Magee, 1998; Riecke et al., 2010; Ruddle et al., 2011a; Ruddle et al., 2011b; Waller et al., 2004). Interaction with the environment is crucial for acquiring spatial knowledge and is increasingly considered in spatial navigation research (Gramann, 2013).

When acquiring knowledge of a large-scale real-world environment, people combine direct experience during exploration with spatial information from indirect sources such as cartographical maps (Thorndyke and Hayes-Roth, 1982; Richardson et al., 1999). While moving in an environment, people develop knowledge about landmarks and their connecting routes, which is rooted in an egocentric reference frame (Siegel and White, 1975; Thorndyke and Hayes-Roth, 1982; Montello, 1998; Shelton and McNamara, 2004; Meilinger et al., 2013). Through the integration of this spatial knowledge, a map-like mental representation called survey knowledge may develop (Siegel and White, 1975; Montello, 1998). The acquisition of survey knowledge is supported by using maps and is thought to be coded in an allocentric reference frame (Thorndyke and Hayes-Roth, 1982; Taylor, Naylor, and Chechile, 1999; Montello et al., 2004; Meilinger et al., 2013). Previous studies have also found that spatial knowledge acquired from a cartographical map is learned with respect to a specific orientation, whereas orientation specificity is much less consistent in spatial knowledge gained by direct experience (Evans and Pezdek, 1980; Presson and Hazelrigg, 1984; Shelton and McNamara, 2001; McNamara, 2003; Montello et al., 2004; Meilinger, Riecke, and Bülthoff, 2006; Sholl et al., 2006; Burte and Hegarty, 2014). Thus, the acquired spatial knowledge is shaped by the employed source of spatial information, but which spatial knowledge of a large-scale environment derives from direct experience or indirect sources such as maps is still not fully understood.

Investigations of spatial knowledge acquisition in large-scale real-world settings are challenging to perform because of the complexity and reproducibility problems. The rapid progress of technology allows for the design of immersive virtual realities (VRs) to reduce the gap between classical lab conditions and real-world conditions (Jungnickel and Gramann, 2016; Coutrot et al., 2019). In VR, modern head-mounted displays (HMDs) provide the user with a feeling of presence and immersion in the virtual environment. These VR environments might be considered as “primary” spaces (Presson and Hazelrigg, 1984; Montello et al., 2004), which are directly experienced environments instead of environments indirectly experienced through, for example, maps. Essential for a direct experience of the environment is the amount of naturalistic movement. While exploring the VR environment, different degrees of translational and rotational movements, up to walking and body turns in a large, real indoor space or on an omnidirectional treadmill, are realized. These means push the limits of VR and provide sensory information closer to real-world conditions (Darken et al., 1997; Ruddle et al., 1999; Ruddle and Lessels, 2006; Riecke et al., 2010; Ruddle et al., 2011a; Byagowi et al., 2014; Kitson et al., 2015; Nabiyouni et al., 2015; Kitson et al., 2018; Liang et al., 2018; Aldaba and Moussavi, 2020). However, sensory information gathered by experience in a VR environment is mainly visual and in varying degrees vestibular. In contrast, navigation in the real world is a multisensory process that includes visual, auditory, motor, kinesthetic, and vestibular information (Montello et al., 2004). Another difference between virtual and real environments is the size of the environment used to investigate spatial learning. 
Virtual environments range from containing a few corridors to complex virtual layouts and virtual cities (e.g., Mallot et al., 1998; Gramann et al., 2005; Goeke et al., 2013; Ehinger et al., 2014; Zhang et al., 2014; Coutrot et al., 2018; Starrett et al., 2019). However, motion sickness gets more severe with an increased time spent in VR (Aldaba and Moussavi, 2020) and negatively affects the user experience (Somrak et al., 2019). This limits the time available for spatial exploration and thus restricts the virtual environment’s size such that it is still small compared to real-world environments. Nevertheless, VR experiments with immersive setups can investigate spatial learning under controlled conditions, considering more embodied interaction than classic lab conditions. In summary, despite the differences concerning real-world navigated environments, immersive VR environments might be viewed as directly experienced environments that allow comparison of spatial knowledge acquisition by direct and indirect sources under controlled conditions with an increased resemblance to spatial learning in the real world.

In the present article, we therefore use a virtual village named Seahaven (Clay et al., 2019) and an interactive map of the same environment (König et al., 2019) for a comparison of spatial knowledge acquisition by experience in an immersive VR or by using cartographic material, respectively. For this purpose, one group of participants explored the virtual environment in the VR, and another group of different participants used the interactive map for spatial learning. Seahaven consists of 213 buildings and covers an area of 216,000 m². Thus, it is a relatively large and complex virtual environment. With the help of an HTC Vive headset, participants had an immersive experience of the environment from a pedestrian perspective during the free exploration in VR. While participants explored the village, we investigated their viewing behavior by measuring eye and head movements (Clay et al., 2019). Alternatively, during the free exploration of the interactive map of Seahaven, another group of participants was provided with a two-dimensional north-up city map from a bird’s-eye perspective and could view the buildings with the help of an interactive feature (König et al., 2019). Thus, we investigated spatial learning with different sources of the same virtual environment.

After the free exploration of Seahaven, we tested three spatial tasks to investigate which spatial knowledge is acquired via different sources. The absolute orientation task evaluated knowledge of orientations of single buildings to cardinal directions. The relative orientation task assessed the relative orientation of two buildings, and the pointing task investigated judgments of straight-line interbuilding directions (König et al., 2017). To investigate intuitive and slow deductive cognitive processes (dual-process theories) that might be used for solving the spatial tasks, we tested all tasks in two response-time conditions, a response window that required a response within 3 s and an infinite-time condition that allowed unlimited time for a response. All participants, equally in both groups, performed three consecutive full measurement sessions. In addition to the three tasks, we explored whether spatial-orientation strategies based on egocentric or allocentric reference frames that are learned in everyday navigation, measured with the Fragebogen Räumlicher Strategien (FRS, translated as the “German Questionnaire of Spatial Strategies”) (Münzer et al., 2016a; Münzer and Hölscher, 2011), were related to the learning of spatial properties tested in our tasks after exploring a virtual village.

Therefore, our research question for the present study is whether and how spatial learning in a virtual environment is influenced by different sources for spatial knowledge acquisition, comparing experience in the immersive virtual environment with learning from an interactive city map of the same environment.

Methods

We built an immersive virtual village and an interactive map of the same environment. Participants freely explored either the virtual environment or the interactive map. To investigate spatial knowledge acquisition during the exploration phase, we performed the same three spatial tasks for both groups, measuring the knowledge of cardinal north, building-to-building orientation, and judging direction between two buildings. For a more detailed description of the design, please see the sections below.

Participants

In this study, we measured spatial knowledge acquisition of 50 participants, divided into 28 participants exploring an immersive virtual environment and 22 participants exploring an interactive map. Due to motion sickness in VR, we excluded two participants of that group after the first session. Four further participants of the VR group had to be excluded because of technical problems in collecting the eye-tracking data or saving them during their measurements. This resulted in 22 valid participants (11 females, mean age of 22.9 years, SD = 6.7) who explored our virtual environment Seahaven with experience in immersive VR in three sessions with complete data available. To compare these data with those after exploring a map, 22 different participants (11 females, mean age of 23.8 years, SD = 3.1) explored an interactive map of the virtual environment repeatedly in three sessions (König et al., 2019). This matches the target of 22 valid subjects per group as determined by power analysis (G*Power 3.1, effect size 0.25, determined by a previous study (König et al., 2017), power 0.8, significance level 0.05, contrasts for ANOVA: repeated measures, within-between interaction).

All 22 participants of the VR group and the 22 participants of the map group performed three repeated full sessions resulting in a total of 90 min of exploration time. Both groups were tested with the same spatial tasks repeatedly after each exploration phase (Table 1). The three sessions were conducted within ten days. The data that we report here are based on the accuracy of the third and final session’s spatial tasks for each participant in both groups. At the beginning of the experiment, participants were informed about the investigation’s purpose and procedure and gave written informed consent. Each participant was reimbursed with nine euros per hour or earned an equivalent amount of “participant hours”, which are a requirement in most students’ study programs at the University of Osnabrück. Overall, each session took about 2 h. The Ethics Committee of the University of Osnabrück approved the study following the Institutional and National Research Committees’ ethical standards.

VR Village Design

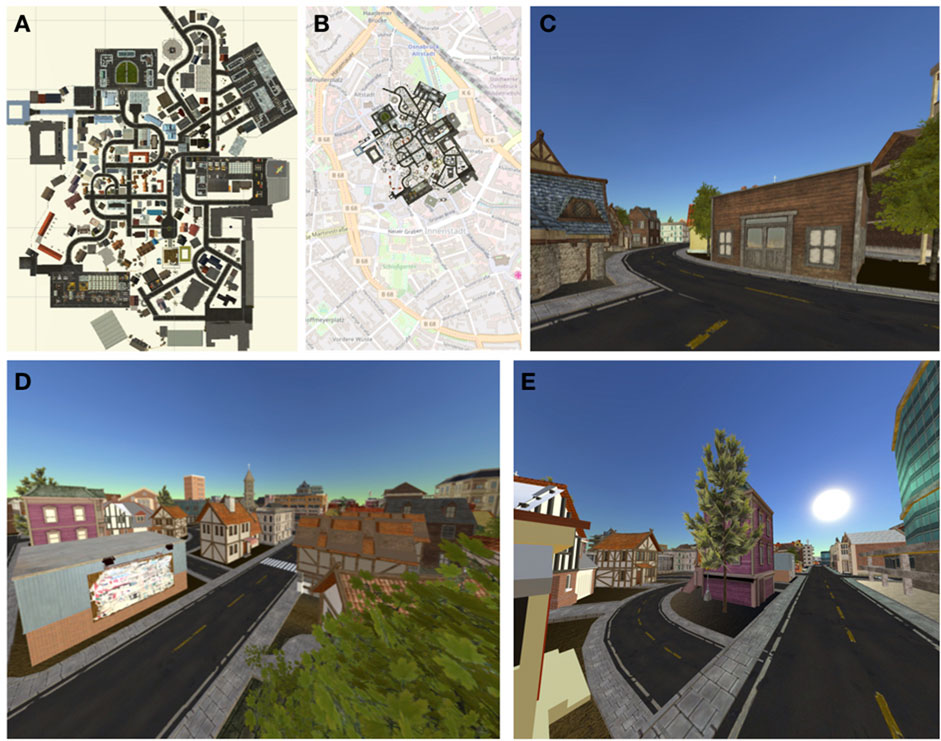

To minimize the gap between the investigation of spatial tasks under laboratory conditions and in real-world environments, we built a virtual environment (Figure 1) named Seahaven. Seahaven is located on an island to keep participants in the village area. Seahaven was built in the Unity game engine using buildings, streets, and landscape objects acquired from the Unity asset store. Seahaven consists of 213 unique buildings, which had to be distinguishable for the spatial tasks and thus have different styles and appearances. The number of buildings sharing a specific orientation toward the north was approximately equally distributed in steps of 30° (0°–330°). The street layout includes smaller and bigger streets and paths resembling a typical European small village without an ordered grid structure and specific districts. Seahaven would cover about 216,000 m² in real-world measure and is designed for pedestrian use. For a virtual environment, Seahaven is relatively large and complex compared to many VR environments used for spatial-navigation research (e.g., Goeke et al., 2013; Gramann et al., 2005; Riecke et al., 2002; Ruddle et al., 2011). Its effective size was limited by the time needed to explore it and because more time spent in the VR increases the risk of motion sickness (Aldaba and Moussavi, 2020).

FIGURE 1. Seahaven as a map and in VR: the map of Seahaven (A) is overlaid onto the city map of central Osnabrück for size comparison (B). The remaining photographs show three examples of views in the village from a pedestrian or oblique perspective (C–E).

As we wanted to compare spatial learning after experience in VR and after map use, we provided the information of cardinal directions also during VR exploration, as this information is available on a map. As the Sun’s position is a primary means to infer cardinal directions in natural surroundings, we implemented this cue using a light source in the virtual environment. During the exploration in VR, information about cardinal directions could thus be deduced from the trajectory of the light source representing the Sun over the course of one virtual day. The Sun moved on a regular trajectory from east to west with an inclination matching the latitude of Osnabrück at the equinox. The light source’s position indicated a sunrise in the east at the beginning of the exploration, the Sun’s trajectory during the day, and the sunset in the west at the end of the same exploration session. As the Sun’s position in the sky changed during the session, the shadows of the buildings and other objects in the virtual village changed in relation to the Sun’s position as in a real environment.
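The Sun cue described above can be pictured as a direction vector that sweeps from east to west over one session. The following Python sketch is only an illustration of that geometry and is not the Unity implementation used in the study. It assumes an approximate latitude of 52.28° N for Osnabrück, a solar declination of 0° (equinox), and a linear mapping of the 30 min session onto the hour angle from sunrise to sunset; the function names are hypothetical.

```python
import math

LATITUDE_DEG = 52.28  # assumption: approximate latitude of Osnabrück
SESSION_MIN = 30.0    # exploration time per session, as stated in the text


def sun_direction(elapsed_min, latitude_deg=LATITUDE_DEG, session_min=SESSION_MIN):
    """Return an (east, north, up) unit vector pointing toward the Sun.

    Assumes the equinox (declination 0 deg) and maps the session linearly
    onto the hour angle: -90 deg at sunrise, 0 deg at noon, +90 deg at sunset.
    """
    frac = min(max(elapsed_min / session_min, 0.0), 1.0)
    hour_angle = math.radians(-90.0 + 180.0 * frac)
    lat = math.radians(latitude_deg)
    east = -math.sin(hour_angle)
    north = -math.sin(lat) * math.cos(hour_angle)
    up = math.cos(lat) * math.cos(hour_angle)
    return east, north, up


def azimuth_elevation(elapsed_min):
    """Compass azimuth (0 = north, 90 = east) and elevation, both in degrees."""
    east, north, up = sun_direction(elapsed_min)
    azimuth = math.degrees(math.atan2(east, north)) % 360.0
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, up))))
    return azimuth, elevation


if __name__ == "__main__":
    for minute in (0, 15, 30):
        az, el = azimuth_elevation(minute)
        print(f"t = {minute:2d} min: azimuth {az:6.1f} deg, elevation {el:5.1f} deg")
```

Under these assumptions, the Sun rises due east, culminates in the south at an elevation of 90° minus the latitude, and sets due west, so both the light source and the shadows it casts carry information about cardinal directions.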

Interactive Map Design

The virtual environment’s interactive map resembled a traditional city map with a north-up orientation and a bird’s-eye view (Figures 1A,B). It was implemented using HTML, jQuery, and CSS. By adding an interactive component, participants were also provided with screenshots of front-on views of 193 buildings in Seahaven from a pedestrian perspective which were used as spatial task stimuli (see below). To view the buildings’ screenshots, participants moved over the map using a mouse. When hovering over a building, a red dot appeared on one side of the respective building. The red dot indicated the side of the building that was displayed in the screenshot. By clicking on the building, the respective screenshot of the building’s façade was shown.

Stimuli

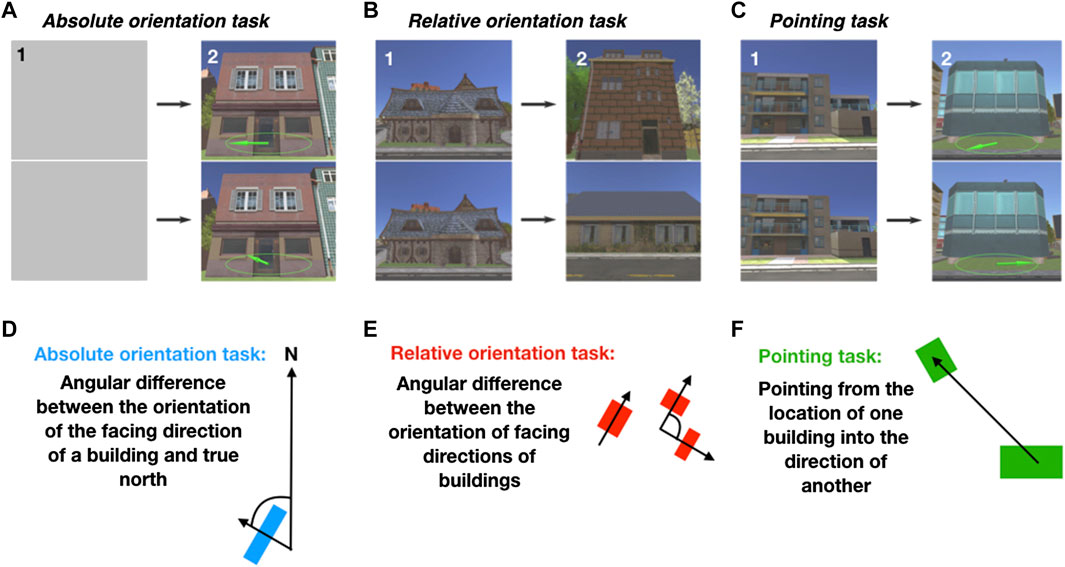

The spatial tasks stimuli were the same for the map and the VR group and were front-on screenshots of 193 buildings (examples are shown in Figure 2). The screenshots were taken at random times during the day in the virtual village, specifically avoiding consistent lighting conditions that could have been used for orientation information. We compared the orientations of buildings toward the north (absolute orientation task) or two buildings’ relative orientation (relative orientation task) in the spatial tasks. As the orientation of buildings can be ambiguous, we took the facing direction of the buildings, which is the direction from which the photographer took the screenshots, as our defined orientation of the buildings (Figure 2). The photographer took the screenshots in the virtual environment from a pedestrian viewpoint that would reflect an approximately 5 m distance to the corresponding building from a position on a street or path in the VR village. For some buildings, this was not possible, so they were excluded as stimuli. Furthermore, a few buildings were overly similar in appearance and therefore had to be excluded as well. All screenshots were shown in full screen with a resolution of 1920 × 1080 pixels on one screen of a six-screen monitor. For the prime stimuli in the relative orientation and pointing tasks, we used the screenshots of 18 buildings that were most often viewed in a VR pilot study. These prime buildings’ orientations were equally distributed over the required orientations from 0° to 330° to cardinal north, increasing in steps of 30°, and were distributed evenly across the village. Each prime building was used twice in the tasks. The screenshots that were used as target stimuli were only used once.

FIGURE 2. Task design: the top row shows the design of the spatial tasks with example trials: absolute orientation task (A), relative orientation task (B), and pointing task (C). The bottom row depicts schemata of the tasks in blue (D), red (E), and green (F), respectively. First, a prime stimulus (1) is shown for 5 s in the relative and pointing tasks, which is substituted by a gray screen in the absolute orientation task to fit the other tasks’ trial layout. Then, a target stimulus (2) is shown until the button press either in a maximum of 3 s or unlimited time to respond. In the absolute orientation task, participants were required to choose the compass needle depicted on the stimuli that correctly pointed to the north. In the relative orientation task, they were required to select the target building with the same orientation as the prime building. In the pointing task, participants were required to choose the target stimulus in which the compass needle pointed correctly to the prime building’s location (adapted from König et al., 2019). In the schemata, the arrows through the blue and red squares (buildings) depict the respective buildings’ facing directions.

Spatial Tasks

Our study wanted to investigate the learning of allocentric knowledge in terms of cardinal directions, the relative orientation of two objects, and straight-line interobject directions. Therefore, we designed three spatial tasks. Our choice of stimuli for our spatial tasks was motivated by a previous study, which examined the same tasks (König et al., 2017). There, we used photographs of buildings and streets of a real-world city as stimuli as buildings and streets are important landmarks for spatial navigation in real-world cities. For comparability reasons, we also focused on buildings in the presented study and performed the same spatial navigation tasks with screenshots of buildings of the VR.

Participants were required to perform the same three spatial tasks after VR exploration or map exploration, respectively. All tasks were designed as two-alternative forced-choice (2AFC) tasks, requiring selecting the correct solution out of two possibilities. Thus, the answers were either correct or wrong (Figure 2). In the absolute orientation and the pointing task, the choice options were depicted as compasses. One compass needle pointed in the correct direction and the other in a wrong direction. These correct and wrong directions deviated from each other in different angular degrees in steps of 30° from 0° to 330°. In the relative orientation task, the two choices were buildings with different orientations. One building had the same (correct) orientation as the prime building. The other building had a different (incorrect) orientation. Again, angular differences between correct and wrong orientation varied in steps of 30° from 0° to 330°. The angular differences between choice options in all tasks provided the information from which we calculated the angular differences and the alignment to the north. For the analysis, we combined deviations resulting from clockwise or anticlockwise rotations; specifically, matching bins from the 0°–180° and 180°–360° deviations were collapsed. Due to small variations in the buildings’ orientations in the virtual village design, the angular differences in the relative orientation task deviated by at most ±5° from the nominal steps, which started at a minimum of 30° and increased in steps of 30°.
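The collapsing of clockwise and anticlockwise deviations described above can be sketched as follows. This is a minimal Python illustration, not the analysis code of the study; the function names are hypothetical, and snapping to the nearest 30° step is an assumption made here to absorb the small deviations of up to ±5° in the relative orientation task.

```python
def collapse_angular_difference(diff_deg):
    """Fold an angular difference into the range 0-180 degrees.

    A deviation of 330 degrees (30 degrees the other way round) is treated
    like a deviation of 30 degrees.
    """
    d = diff_deg % 360.0
    return 360.0 - d if d > 180.0 else d


def bin_to_step(diff_deg, step=30.0):
    """Snap a folded difference to the nearest multiple of `step` (assumption)."""
    folded = collapse_angular_difference(diff_deg)
    return step * round(folded / step)


def accuracy_per_bin(trials):
    """Fraction of correct answers per collapsed bin.

    `trials` is an iterable of (angular_difference_deg, is_correct) pairs.
    """
    counts = {}
    for diff_deg, is_correct in trials:
        key = bin_to_step(diff_deg)
        n_correct, n_total = counts.get(key, (0, 0))
        counts[key] = (n_correct + int(is_correct), n_total + 1)
    return {key: c / n for key, (c, n) in sorted(counts.items())}


if __name__ == "__main__":
    example = [(30, True), (330, False), (150, True), (210, True), (180, False)]
    print(accuracy_per_bin(example))
```

With this folding, trials at 30° and 330° contribute to the same 30° bin, and trials at 150° and 210° contribute to the same 150° bin.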

In the absolute orientation task, participants were required to judge single buildings’ orientation with respect to the cardinal north (Figures 2A,D). In one trial of the absolute orientation task, two screenshots of the same building were overlaid with a compass. One compass needle pointed correctly toward the cardinal north. The other one pointed randomly into another direction deviating from the north in steps of 30° (Figure 2A). Out of the two images of the building, participants were required to choose the image on which the compass needle pointed correctly to the cardinal north.

In the relative orientation task, participants judged the orientation of buildings relative to the orientation of another building (Figures 2B,E). In one trial of the relative orientation task, participants saw a prime building followed by two different target buildings that differed in their orientation in steps of 30° from each other (Figure 2B). Out of the two buildings, participants were required to choose the target building that had the same orientation as the prime building: in other words, the target building whose orientation was closely aligned with the prime building’s orientation.

In the pointing task, participants had to judge the straight-line direction from one building’s location to the location of another building (Figures 2C,F). In one trial of the pointing task, a screenshot of a prime building was presented first, followed by two screenshots of the same target building. This target building was depicted twice: once overlaid with a compass needle correctly pointing into the direction of the prime building and once overlaid with a compass needle randomly pointing in another direction deviating from the correct direction in steps of 30° (Figure 2C). Of these two images of the target building, participants were required to choose the target building with the compass needle that correctly pointed in the direction of the prime building’s location.

All three tasks were performed in two response-time conditions, the 3 s condition with a 3 s response window and the infinite-time condition with unlimited time to respond. This resulted in six different conditions that were presented in blocks. Every block consisted of 36 trials. The number of trials, 216 in total, balances data quality and load on participants’ alertness and motivation. The order of blocks was randomized across subjects. For more detailed information, see König et al. (2017) and König et al. (2019).
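The block structure follows directly from crossing the three tasks with the two response-time conditions. The short Python sketch below illustrates this under stated assumptions: the condition labels, the function name, and the use of a per-participant seed are hypothetical and are not taken from the original experiment code.

```python
import random
from itertools import product

TASKS = ("absolute", "relative", "pointing")  # labels are assumptions
TIME_CONDITIONS = ("3s", "infinite")          # labels are assumptions
TRIALS_PER_BLOCK = 36


def block_order(seed):
    """Return the six task-by-time blocks in a randomized order.

    The seed stands in for a participant identifier so that the order is
    reproducible for each participant (an assumption of this sketch).
    """
    rng = random.Random(seed)
    blocks = list(product(TASKS, TIME_CONDITIONS))
    rng.shuffle(blocks)
    return blocks


if __name__ == "__main__":
    order = block_order(seed=1)
    for task, time_condition in order:
        print(f"{task:9s} {time_condition:9s} {TRIALS_PER_BLOCK} trials")
    print("total trials:", len(order) * TRIALS_PER_BLOCK)
```

Six blocks of 36 trials give the 216 trials per session stated above.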

Overview of Experimental Procedure

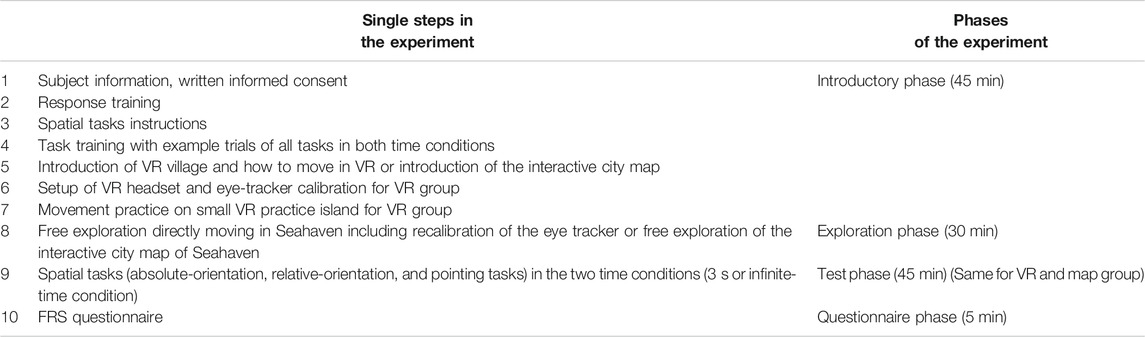

Our experiment consisted of four major phases, the same for both groups, with only small adjustments for the source of spatial exploration, which are described in detail below (Table 1).

The first was the introductory phase, which lasted approximately 45 min. Here, participants were informed about the experiment and gave written informed consent. Further, they performed the response training and received instructions for and an explanation of the spatial tasks. The introduction of the spatial tasks included example trials for all task and time conditions (see sections “Response Training” and “Spatial Task Instructions and Task Training”). Next, participants in the immersive VR group were introduced to VR and instructed how to move in Seahaven. They were especially informed about the risk of motion sickness. After this, an HTC Vive headset with an integrated eye tracker was mounted, giving an immersive VR experience. With this, participants practiced their movement in VR, which was followed by calibration and validation of the eye tracker. Participants in the map group were instead introduced to the interactive map and instructed how to use the interactive feature.

For the second phase, the exploration phase, VR participants were placed in a predefined place in Seahaven from which they started their free exploration for 30 min. Every 5 min, the exploration was briefly interrupted for validation of the eye tracker. The map group freely explored the interactive map of Seahaven, also for 30 min. For this, participants freely moved on the city map with a mouse and, by clicking on a building, were provided with screenshots of the building’s front view through the interactive feature (see section “Exploration of Seahaven’s Interactive City Map” and Figure 3B).

FIGURE 3. Experimental setup: the VR experimental setup includes the HTC Vive, controller, and swivel chair (A). The experimental design with the interactive map is shown in (B) (written informed consent was obtained from the individuals for the publication of these images).

The third (testing) phase lasted for approximately 45 min. Here, participants in the VR and map group were tested on the same three spatial tasks (absolute orientation, relative orientation, and pointing tasks) in the two time conditions (3 s and infinite-time condition; see sections “Stimuli” and “Spatial Tasks”).

Fourth and finally, all participants filled out a questionnaire on spatial strategies (FRS questionnaire), which concluded the experiment.

Response Training

To familiarize participants with the 3 s response window, the interpretation of the directional compass needle on the screen, and the behavioral responses required in the 2AFC tasks, each participant performed a response training. Here, the participants were required to compare two compass needles and then select within 3 s the compass needle that pointed most directly upward on the screen. For this purpose, one compass needle was presented on one screen and another compass needle on another screen. The screens were placed above each other (Figure 3B), and the compass needles pointed in different directions (Figure 2 with examples of spatial tasks). To choose the compass needle shown on the upper screen, participants were required to press the “up” button. To select the compass needle on the lower screen, they were required to press the “down” button. On each trial, they received feedback on whether they decided correctly (green frame), incorrectly (red frame), or failed to respond in time (blue frame). The response training was finished when the participants responded correctly in 48 out of 50 trials (accuracy >95%). This response training ensured that participants were well acquainted with the response mechanism of the 2AFC design that our spatial tasks used.

Spatial Task Instructions and Task Training

A separate pilot study, in which participants did not know the tasks before the exploration phase, revealed that, during their free exploration, they sometimes focused more on aspects that would not support spatial learning, such as detailed building design. It is known that, during spatial learning, paying attention to the environment or the map supports spatial-knowledge acquisition (Montello, 1998). When acquiring knowledge of a new city, knowledge of where important places such as home, work, and a bakery are is crucial for everyday life. It thus motivates learning spatial relations. To support the motivation of spatial exploration in VR, each participant received task instructions and task training before starting the free exploration time in Seahaven to support intentional spatial learning. Note that none of the pilot study subjects is included in the main study and that no subjects of the main study were excluded for reasons of their spatial exploration behavior. The instructions were given using written and verbal explanations accompanied by photographs of buildings in Osnabrück that were used as stimuli in a previous study (König et al., 2017). Participants then performed pretask training with one example of each spatial task in both time conditions to gain a better insight into the actual task requirements. Except for the stimuli, the pretasks exactly resembled the design of the spatial tasks (see sections “Stimuli” and “Spatial Tasks”). We performed the task training to familiarize participants with the type of spatial knowledge that was later tested in the spatial tasks.

Exploration of Seahaven in Immersive VR

To display the virtual environment in an immersive fashion, we used an HTC Vive headset (https://www.vive.com/eu/product/; Figure 3A) with an integrated Pupil Labs eye tracker (https://pupil-labs.com/vr-ar/). The HTC Vive headset provided a field-of-view of 110° that also allowed peripheral vision, which is considered essential for developing survey knowledge (Sholl, 1996). Previous research recommended the HTC Vive because of its easy-to-use handheld controllers and its high-resolution display providing a resolution of 1080 × 1200 pixels per eye (Kose et al., 2017). Participants were instructed about the virtual village and the potential risk of motion sickness. Additionally, they were informed that they could terminate their participation at any time without giving reasons. During the exploration phase, participants were seated on a swivel chair, which allowed for free rotation. For moving in VR, participants were instructed to move forward in the VR environment by touching the forward axis on the HTC Vive’s handheld controller touchpad. To turn, they were required to turn their physical body on the swivel chair in the real world. After these instructions, the HTC Vive headset was mounted, and participants themselves adjusted the interpupillary distance. To support immersion in VR and avoid distractions due to outside noises, participants heard the sound of ocean waves over headphones. Participants started in the VR on a small practice island where they practiced VR movements until they felt comfortable. This was followed by the calibration and validation of the eye tracker (see section “Eye and Position Tracking in Seahaven”). They were then placed in the virtual village at the starting position: the same central main crossing for all participants. From there, they freely explored Seahaven for 30 min by actively moving within the virtual environment.
The participants were informed that it was morning in Seahaven when they began their exploration and that at the end of the 30 min exploration, the Sun would be close to setting (see section “VR Village Design”). We validated the usefulness of this approach by asking the participants to turn toward the north at the end of the VR exploration session. Thus, Seahaven provided a virtual environment for immersive free spatial exploration.

Eye and Position Tracking in Seahaven

We recorded the viewing behavior and the participants’ position in the virtual village during the free exploration in the immersive VR. For directly measuring eye movements in VR, we used the Pupil Labs eye tracker, which is plugged into the HTC Vive headset (Clay et al., 2019). To ensure reliable eye-tracking data, we performed calibration and validation of the eye tracker until a validation error below 2° was achieved. We performed a short validation every 5 min to check the accuracy, as the headset could slip during head movements. If the validation error was above 2°, we repeated the calibration and validation procedure. From the eye-tracking data, we evaluated where participants looked. For this, we calculated a 3D gaze vector and used the ray casting system of Unity, which detects hit points of the gaze with an object collider. With this method, we obtained information about the object that was looked at and its distance to the eye. For the statistical analysis of our eye movement data, we calculated the dwelling time by counting the consecutive hit points on a building recorded by the eye tracker. We considered at least seven consecutive hit points on a building as one gaze event, which sums up to 233 ms as the minimum time for viewing duration. We used median absolute deviation-based outlier detection to remove outlying viewing durations. Furthermore, we defined dwelling time as the cumulative time of such gaze events spent on a particular building by a subject. The virtual environment was differentiated into three categories: buildings, the sky, and everything else. As Unity does not allow a collider’s placement around the light source that we used to simulate the moving Sun, we could not investigate hit points with the Sun. As buildings were our objects of interest, each building was surrounded by an individual collider.
This enabled us to determine how many, how often, for how long, which, and in which order buildings were looked at, giving us information about participants’ exploration behavior. For the position tracking, we recorded all coordinates in Seahaven that a participant visited during the free exploration. Taken together, we measured the walked path and viewing behavior of participants, which enabled us to characterize their exploration behavior in the virtual environment.
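The gaze-event and dwelling-time definitions above can be illustrated with a minimal Python sketch. It is not the analysis code used in the study: the function names, the 30 Hz sampling rate (inferred here from seven samples spanning roughly 233 ms), the use of `None` for samples that hit no building, and the outlier threshold of three scaled median absolute deviations are all assumptions made for illustration.

```python
from itertools import groupby
from statistics import median

SAMPLE_RATE_HZ = 30.0  # assumption: 7 samples / 30 Hz is about 233 ms
MIN_HITS = 7           # minimum consecutive hit points for one gaze event


def gaze_events(hit_labels, min_hits=MIN_HITS, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return (building, duration_s) for each run of >= min_hits consecutive
    hit points on the same building. None marks samples on sky or other objects."""
    events = []
    for label, run in groupby(hit_labels):
        n_hits = sum(1 for _ in run)
        if label is not None and n_hits >= min_hits:
            events.append((label, n_hits / sample_rate_hz))
    return events


def remove_mad_outliers(durations, threshold=3.0):
    """Drop durations more than `threshold` scaled MADs from the median.
    The threshold value is a hypothetical choice, not taken from the study."""
    med = median(durations)
    mad = median(abs(d - med) for d in durations)
    if mad == 0:
        return list(durations)
    return [d for d in durations if abs(d - med) / (1.4826 * mad) <= threshold]


def dwelling_time(events):
    """Sum the durations of all gaze events per building."""
    totals = {}
    for label, duration in events:
        totals[label] = totals.get(label, 0.0) + duration
    return totals


# Toy gaze stream: 7 hits on house_A, a gap, 4 hits on house_B (too short),
# then 9 more hits on house_A.
samples = ["house_A"] * 7 + [None] * 3 + ["house_B"] * 4 + ["house_A"] * 9
events = gaze_events(samples)
dwell = dwelling_time(events)
filtered = remove_mad_outliers([0.3, 0.3, 0.4, 0.5, 0.4, 10.0])
```

In this toy stream, the short run on `house_B` does not qualify as a gaze event, so only `house_A` accumulates dwelling time.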

Exploration of Seahaven’s Interactive City Map

For exploring the interactive map of Seahaven (Figures 1A, 3B), participants sat approximately 60 cm from a six-screen monitor setup in a 2 × 3 screen arrangement. During the exploration, the two-dimensional map of the virtual environment was presented on the two central screens (Figure 3B). To explore the map, participants moved over the map using a mouse. When hovering over a building, a red dot appeared on one side of the respective building. By clicking on this building, the interactive component displayed the building’s screenshot twice on the monitor’s two right screens (the same image above each other) (Figure 3B). These screenshots were the same as those used later in the spatial tasks of both groups in the test phase. We recorded how often each building was clicked on. These click counts were later used to determine participants’ familiarity with the stimuli, and the set of clicked buildings was used to investigate which parts of Seahaven participants visited. The two screens on the left side of the six-screen monitor setup were not used during exploration or testing. In summary, the interactive map provided participants with a city map of Seahaven and front-on views of the majority of Seahaven buildings.

FRS Questionnaire

To compare participants’ abilities in spatial-orientation strategies learned in real-world environments with their accuracy in the spatial tasks after exploring a virtual village, participants filled in the FRS questionnaire (Münzer and Hölscher, 2011) at the end of the measurements. The FRS questionnaire comprises self-report measures of spatial orientation strategies learned in real environments (Münzer and Hölscher, 2011; Münzer et al., 2016a; Münzer et al., 2016b). The “global-egocentric scale” evaluates global orientation abilities and egocentric abilities based on knowledge of routes and directions. The evaluation of allocentric strategies is separated into the “survey scale,” which assesses an allocentric strategy for mental map formation, and the “cardinal directions scale,” which evaluates knowledge of cardinal directions. All scales consist of Likert items with a score ranging from 1 (“I disagree strongly”) to 7 (“I agree strongly”). Thus, the FRS questionnaire enables us to obtain insights into participants’ preferred use of egocentric or allocentric spatial strategies and to investigate whether spatial abilities learned in the real world are used for spatial learning in a virtual environment.

Data Analysis Using Logistic Regression for Binary Answers

As we have binary answers and multiple factors in our 2AFC task design, we performed a logistic regression analysis. The modeling was done using R 3.6.3. We used the glm() function from the lme4 package. This GLM model gives a regression table containing different p-values for the different factors, resulting from a single model. Thus, it does not require multiple comparison correction. The three models can be formalized as follows using the Wilkinson notation (Wilkinson and Rogers, 1973):

Here, “isAccurate” is the accuracy, “time” codes the 3-s or infinite-time condition, “task” codes the absolute, relative, and pointing task, “group” codes VR and map exploration group, “AngularDiff” codes the angular difference of the two response options, and “AngleToNorth” codes the angle of the response to the north,

where X is the design matrix and

We modeled the log odds of being accurate for each trial based on task (absolute, relative, or pointing), time (3 s or infinite-time condition), and exploration group (map or VR) and their interactions. The categorical variables of task, time, and group were coded using simple effect regression coding so that the model coefficients could be directly interpreted as main effects. The model gives log odds ratios for the different levels of each variable with respect to the reference level. Therefore, no separate post-hoc tests are required. We used the same modeling approach for the angular differences between task choices and for the alignment to the north, for which the log odds of being accurate were modeled as per Eqs 2 and 3.
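To make the coding scheme concrete, the following Python sketch builds the contrast values commonly referred to as simple coding: for a factor with k levels, each non-reference level gets its own column, holding (k − 1)/k for that level and −1/k for every other level. With this coding, each coefficient compares one level with the reference level while the intercept corresponds to the mean across levels. The function name and the choice of reference levels are assumptions for illustration; the reference levels used in the study are not stated here.

```python
from fractions import Fraction


def simple_coding(levels, reference):
    """Return a dict mapping each level to its row of simple-coding contrasts.

    One column per non-reference level: (k-1)/k on the level the column
    belongs to, -1/k on all other levels (including the reference).
    """
    k = len(levels)
    non_reference = [lv for lv in levels if lv != reference]
    return {
        lv: [Fraction(k - 1, k) if lv == col else Fraction(-1, k)
             for col in non_reference]
        for lv in levels
    }


# Hypothetical reference levels, chosen only for this illustration.
task_coding = simple_coding(["absolute", "relative", "pointing"], reference="absolute")
time_coding = simple_coding(["3s", "infinite"], reference="3s")

for level, row in task_coding.items():
    print(level, [str(value) for value in row])
```

Each contrast column sums to zero across levels, which is why the coefficients can be read against the overall mean rather than against a single baseline cell.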

Following model fitting, we performed chi-squared tests comparing each model with a null model fitted only with an intercept to evaluate whether the model parameters improved the fit.

Further, we performed an ordinary least squares linear regression for analyzing the distance effect. We used the ols() function of the Python package statsmodels v0.11. The dependent variable was the accuracy of participants for a given prime-target building pair, and the independent variables were the task, time, and group as above.

Results

In this study, we investigated spatial knowledge acquisition by experience in a large-scale immersive virtual village. In close analogy, a previous study used an interactive map for the spatial exploration of the same virtual environment and reported a preliminary analysis of those data (König et al., 2019). Here, data obtained from both experiments are analyzed in depth using identical procedures (generalized linear models) and are fully reported to enable direct comparison between the two spatial learning sources.

Results of Exploration Behavior

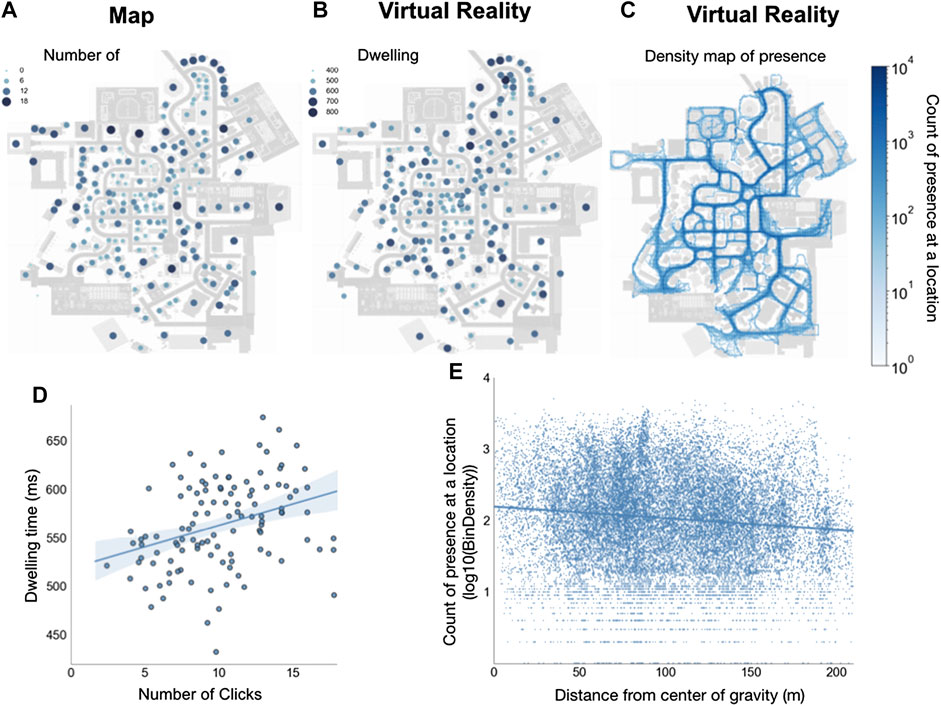

While participants freely explored the virtual village in VR, we measured their viewing behavior and tracked their positions. We characterized the viewing behavior by the time a building was viewed in total. That is, we took the summed dwelling time over the three sessions on a building averaged over subjects as a measure of its familiarity (Figure 4B). For participants who explored the interactive city map of Seahaven, we measured how often a building was clicked on, which displayed the screenshot of the respective building. We took the number of clicks on a building averaged over subjects as a measure of the familiarity of the building after map exploration (Figure 4A). Overall, views of buildings and clicks on buildings revealed the parts of Seahaven visited during VR and map exploration, respectively.

FIGURE 4. Distribution of spatial exploration: the heat maps depict how often participants clicked on buildings with map exploration (A) and how long participants looked at a building with exploration in immersive VR (B) on a gradient going from deep blue (most) to light blue (least). The count of the density distribution of presence at a location during exploration in VR for all participants is plotted onto the village map (C) and reveals which places participants visited during the free exploration on the same gradient from deep blue (most) to light blue (least). (D) depicts the scatter plot of the correlation of dwelling time on buildings during exploration in VR and the number of clicks on buildings with map exploration. Each dot shows a dwelling time × click combination of a building averaged over subjects. The blue line depicts the regression line and the shaded area the 95% confidence interval. (E) shows that the distance from the center of gravity of the city map and the density count in bins of presence at a location are negatively correlated. Blue dots depict single locations visited by a participant, and the blue line is the regression line.

The distribution of the dwelling time on buildings in the village revealed that buildings that were looked at the longest were not centered in a particular village district but were distributed throughout the village (Figure 4B). The same held for the distribution of clicks on buildings during map exploration. To compare familiarity after VR exploration and after exploring the city map, we performed a Spearman’s rank correlation between dwelling time and the number of clicks on buildings. The result revealed a moderate, significant correlation [rho(114) = 0.36, p < 0.001, Figure 4D]. The distribution of looked-at buildings in VR and clicked-on buildings with the interactive map over the virtual village and their correlation suggests that the exploration covered Seahaven well for both exploration sources.
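For readers unfamiliar with the statistic, Spearman’s rank correlation is the Pearson correlation computed on ranks, with tied values receiving their average rank. The standard-library Python sketch below shows the computation on made-up per-building values; the function names and the toy data are illustrative assumptions and do not reproduce the reported analysis.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the block of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # 1-based average rank of the tied block
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data: mean dwelling time (s) and mean clicks for five hypothetical buildings.
dwelling = [12.0, 3.5, 8.1, 8.1, 20.4]
clicks = [4.0, 1.0, 2.0, 3.0, 6.0]
rho = spearman(dwelling, clicks)
```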

To investigate participants' walking paths while exploring the immersive virtual environment, we analyzed all coordinates in Seahaven that a participant visited. We displayed this as a density distribution of the count of the “presence at a location” plotted onto the city map (Figure 4C). We performed a Pearson correlation between distance from the center of gravity of the city map and density count of presence at a location (log10(bin of density count)). We found a weak negative correlation [rho (22,340) = −0.12, p < 0.001] (Figure 4E). The density map of presence at a location revealed that participants visited all possible locations but that central streets were visited slightly more often than peripheral paths.

In summary, the investigation of the exploration behavior showed that participants covered the virtual village well during exploration in VR and when exploring the map. When exploring the virtual environment, central streets were visited slightly more often than peripheral paths.

Spatial Task Results

For the performance in the spatial tasks, we evaluated the accuracy of response choices in the 2AFC tasks after experience in the virtual village. We compared the accuracy after exploration in the immersive VR and after exploring an interactive city map (König et al., 2019). Subsequently, we investigated the influence of how long a building was looked at (familiarity of buildings), alignment of stimuli toward the north, the angular difference between choice options, the distance between tested buildings, and abilities of spatial strategies (FRS questionnaire) on task accuracy.

Task Accuracy in Different Time Conditions Comparing VR and Map Exploration

Following dual-process theories (Evans, 1984; Kahneman et al., 2002; Evans, 2008), we hypothesized that the accuracy with unlimited time for a response would be higher than with restricted time to respond within 3 s. Furthermore, following previous research using this set of tasks (König et al., 2017), we hypothesized that experience in the immersive virtual environment would support action-relevant tasks and reveal a higher accuracy for judging straight-line directions between buildings tested in the pointing task as well.

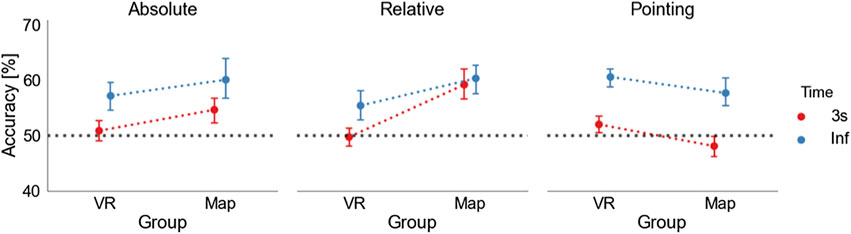

We measured task performance as accuracy, which is the fraction of correctly answered trials within the required time frames to the total number of trials. After VR exploration, the mean accuracies for the different conditions were (each time 3 s, infinite-time, respectively): absolute task 50.88%, 57.20%, relative task 49.75%, 55.43%, and pointing task 52.02%, 60.61%. After exploration with the map, the mean accuracy for the different conditions was absolute task 54.67%, 60.10%, relative task 59.21%, 60.35%, and pointing task 48.11%, 57.70%.

As we investigated different time conditions, we also checked the mean response times in the 3-s and infinite-time conditions. After VR exploration, response times were 1.8 ± 0.5 s and 4.2 ± 2.4 s, and after map exploration, 1.8 ± 0.5 s and 5.5 ± 4 s, in the respective conditions. On average, participants in the infinite-time condition took about two to three times as long as under time pressure (4.2/1.8 ≈ 2.3 after VR exploration and 5.5/1.8 ≈ 3.1 after map exploration).

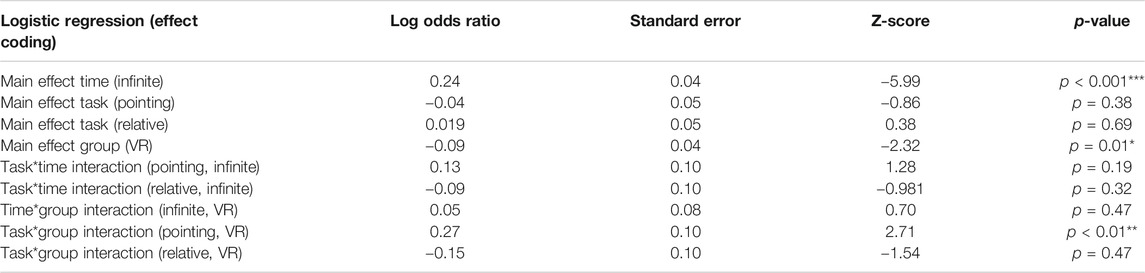

As the spatial tasks require binary answers, we performed a logistic regression analysis on the complete dataset of 44 participants and 9504 single-trial observations, comparing the groups that explored Seahaven in VR or with a map. We modeled the log odds of being accurate for each trial based on task (absolute, relative, pointing), time (3 s, infinite-time condition), and exploration group (VR, map) and their interactions. The categorical variables of task, time, and group were coded using simple effect regression coding so that the model coefficients can be directly interpreted as main effects. The model’s coefficients represent the log odds ratios of the accuracy of one categorical level relative to all other levels.

To test the goodness of fit of our model, we performed a log-likelihood ratio test comparing the full model with the null model fitted only on the intercept. The full model yielded a significantly better model fit (χ2(9) = 68.69, p < 0.001).

The analysis revealed a significant main effect for time (log odds ratio = 0.24, SE = 0.04, df = 9502, z = -6.03, p < 0.001), which showed that the infinite-time condition resulted in overall higher accuracy levels than responding within 3 s. Additionally, we found a significant main effect for group (log odds ratio = −0.09, SE = 0.04, df = 9499, z = 2.33, p = 0.02) with a slightly higher accuracy by 0.09 log odds after map than VR exploration. The logistic regression showed a significant task*group interaction with a significantly higher accuracy in the pointing task in the VR group than in the map group (log odds ratio = 0.27, SE = 0.10, df = 9495, z = −2.71, p < 0.01). We found no further significant effects. All model results are displayed in Table 2.
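As a reading aid for coefficients of this kind, the Python sketch below converts a log odds ratio and its standard error into an odds ratio, a Wald z statistic, and a two-sided p-value from the standard normal distribution. This is a generic textbook calculation under the assumption that the reported tests are Wald tests; the function name is hypothetical, and because the inputs are the rounded values from the text, the outputs only approximate the reported statistics. In this sketch, the sign of z simply follows the sign of the coefficient.

```python
import math


def wald_summary(log_odds_ratio, standard_error):
    """Summarize a logistic-regression coefficient.

    Returns the odds ratio exp(b), the Wald statistic z = b / SE, and the
    two-sided p-value P(|Z| > |z|) under a standard normal distribution.
    """
    z = log_odds_ratio / standard_error
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))
    return {
        "odds_ratio": math.exp(log_odds_ratio),
        "z": z,
        "p": p_two_sided,
    }


# Rounded values for the main effect of time as given in the text.
time_effect = wald_summary(0.24, 0.04)
print(round(time_effect["odds_ratio"], 2), round(time_effect["z"], 1))
```

Read this way, a log odds ratio of 0.24 means that the odds of a correct answer were roughly 1.27 times higher in the infinite-time condition.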

Taken together, the spatial tasks showed a significant difference between the 3-s and infinite-time conditions, with higher accuracy when time for cognitive reasoning was available, and a significant group effect with an overall higher accuracy after map exploration. Importantly, our results revealed a significant task*group interaction with significantly higher accuracy in the pointing task after VR exploration than after map exploration (Figure 5).

FIGURE 5. Task accuracies: accuracy of absolute orientation (left), relative orientation (middle), and pointing task (right) in 3 s (red) and infinite-time (blue) conditions. In each task, the dots on the left depict the mean accuracy level after VR exploration, and the dots on the right depict the mean accuracy level after map exploration. The dotted lines are displayed to visualize the difference between the map and the VR condition. The black dashed line marks the level of 50%. The error bars indicate the standard error of the mean (SEM).

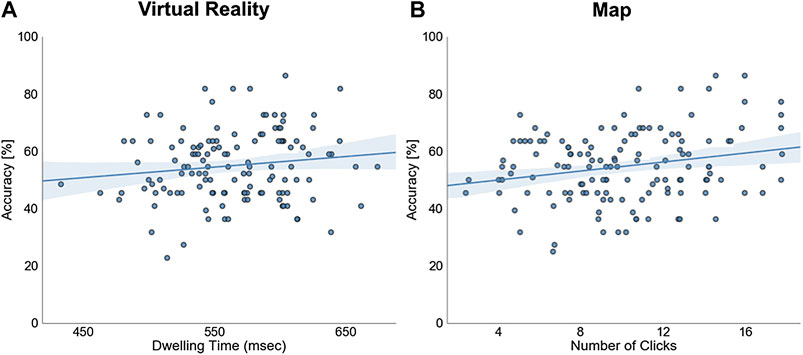

Accuracy as a Function of Familiarity of Buildings Measured as Dwelling Time and Clicks on a Building

We hypothesized that buildings with a longer dwelling time would be more familiar and that this would improve task accuracy. Therefore, we calculated the mean dwelling time of each subject on each building. The absolute orientation task consisted of only one building per trial, and we used the dwelling time on this building as the relevant indicator. However, as each trial in the relative orientation task consisted of a triplet of buildings, we compared the dwelling time on the prime and the two target buildings and considered the smallest value as an estimate of the minimum familiarity. Each trial of the pointing task consisted of a prime and a target building, for which we compared the dwelling time on the two buildings. We then conservatively used the building with the lowest dwelling time in a trial as the relevant indicator for the respective trial in these tasks. With this, we calculated the average accuracy of all trials over subjects with a specific dwelling time. We calculated a Pearson correlation between accuracy and dwelling time on a building over all task and time conditions (Figure 6A). We repeated the same analysis for the data after map exploration, using the number of clicks on a building as a comparable measure of familiarity with a building (Figure 6B). After VR exploration, we found a weak but significant correlation between accuracy and dwelling time [rho(216) = 0.140, p = 0.04], similar to the correlation after map exploration [rho (216) = 0.165, p = 0.02]. In both exploration groups, we thus found a positive correlation between task accuracy and the familiarity of buildings, measured as dwelling time on a building during VR exploration or clicks on a building during map exploration.
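The conservative per-trial familiarity measure described above amounts to taking the minimum dwelling time (or click count) over all buildings shown in a trial. A minimal Python sketch follows; the building names, the values, and the choice to treat a building that was never viewed as having a dwelling time of zero are assumptions for illustration only.

```python
def trial_familiarity(familiarity_by_building, trial_buildings):
    """Return the lowest familiarity value among the buildings of one trial.

    familiarity_by_building maps a building name to a dwelling time (s) or a
    click count; buildings missing from the mapping count as 0.0 (assumption).
    """
    return min(familiarity_by_building.get(name, 0.0) for name in trial_buildings)


# Hypothetical dwelling times (s) of one subject.
dwell = {"bakery": 14.2, "church": 31.0, "mill": 6.5}

absolute_trial = ["church"]                    # one building per trial
relative_trial = ["bakery", "church", "mill"]  # prime and two targets
pointing_trial = ["bakery", "church"]          # prime and target

values = [
    trial_familiarity(dwell, absolute_trial),
    trial_familiarity(dwell, relative_trial),
    trial_familiarity(dwell, pointing_trial),
]
print(values)
```

Using the minimum means that a trial only counts as familiar when every building in it was viewed for a long time, which is why the measure is conservative.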

FIGURE 6. The familiarity of buildings: the scatter plots show the dependence of overall task accuracy on the dwelling time on a building after VR exploration (A) and the number of clicks on a building after map exploration (B). One dot represents all trials’ average accuracy over subjects with a specific dwelling time (A) or the number of clicks (B) on a building. The blue line depicts the regression line and the shaded area the 95% confidence interval.

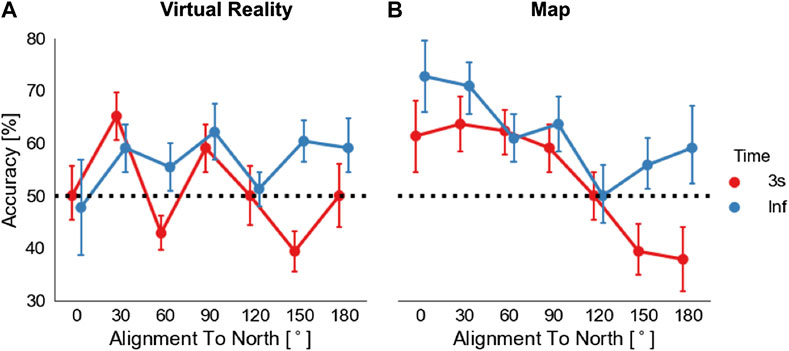

Accuracy as a Function of Alignment to North

To investigate orientation specificity, we tested whether task accuracy was higher when the orientation of the building’s facing direction was aligned with the north. Previous research found a consistent alignment effect after spatial learning with a map (e.g., Montello et al., 2004; König et al., 2019) that was less reliable after spatial learning by direct experience (e.g., Presson and Hazelrigg, 1984; Burte and Hegarty, 2014). Therefore, in contrast to map exploration, we did not expect to find an alignment effect revealing orientation specificity after experience in the immersive virtual village. To obtain clear results, we only considered the absolute orientation task, as only this task required participants to judge single buildings separately.

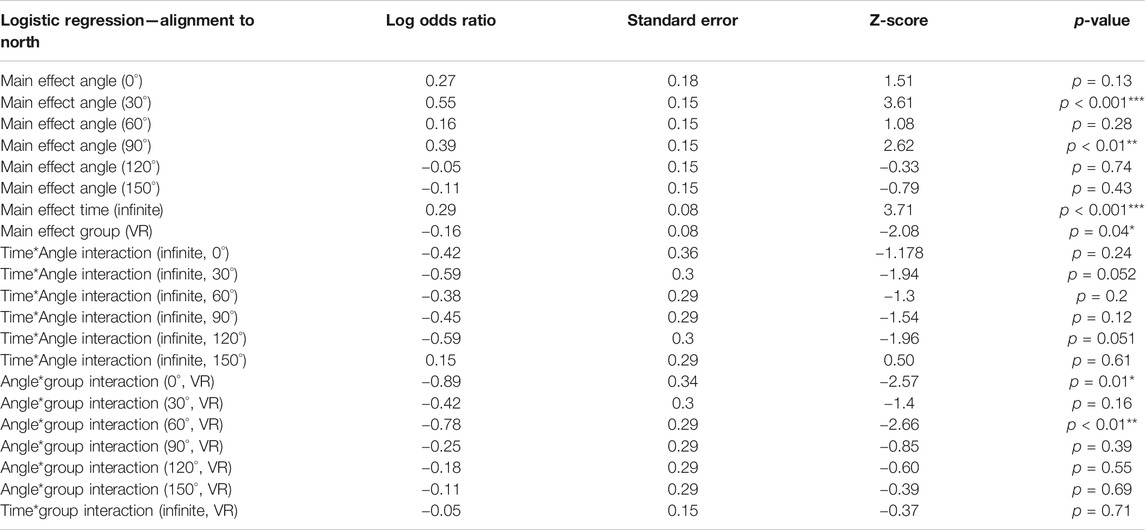

To evaluate the effect of alignment to the north, we used a logistic regression model with effect coding. With this, we modeled the accuracy in single trials of the absolute task given the angle (0°, 30°, 60°, 90°, 120°, 150°, or 180°), time (3 s or infinite-time condition), and exploration group (VR or map) and their interactions.

To test the goodness of fit of our model, we performed a log-likelihood ratio test comparing the full model with the null model fitted only on the intercept. The full model yielded a significantly better model fit (χ2(21) = 87.38, p < 0.001).

The analysis revealed a main effect of angle at 30° (log odds ratio = 0.55, SE = 0.15, df = 3146, z = 3.61, p < 0.001) and 90° (log odds ratio = 0.39, SE = 0.15, df = 3146, z = 2.62, p < 0.01). Additionally, we found a significant angle*group interaction for 0° (log odds ratio = −0.88, SE = 0.34, df = 3146, z = −2.57, p = 0.01) and 60° (log odds ratio = −0.78, SE = 0.29, df = 3146, z = −2.66, p < 0.01) with a significantly higher accuracy for both angles after map exploration than VR exploration (Figure 7). We again confirmed the significant main effects for time (log odds ratio = 0.29, SE = 0.08, df = 3146, z = 3.71, p < 0.001) and group (log odds ratio = −0.16, SE = 0.08, df = 3146, z = −2.10, p < 0.05). We did not find any further significant effects (see Table 3 for all results).

FIGURE 7. Alignment to north effect: The task accuracy in the absolute orientation task is shown in relation to the angular difference between north and tested stimuli orientation (alignment): (A) after VR exploration and (B) after map exploration. Dots depict mean accuracy in respect of 0°, 30°, 60°, 90°, 120°, 150°, and 180° categories. Error bars depict SEM. The black dashed line marks the level of 50%.

Our results revealed a significant main effect for 30° and 90° angular differences to the north. Furthermore, they showed that 0° and 60° differences to the north had significantly higher accuracies after map exploration than after VR exploration, thus suggesting an alignment effect only after spatial learning with a map.

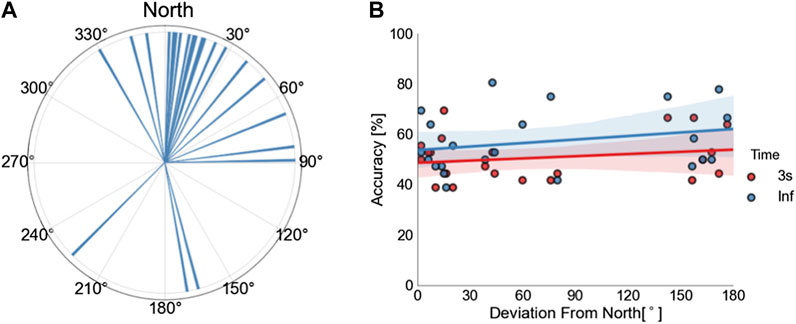

As one reason for the lack of an alignment effect toward the north after VR exploration, we considered that participants might not know where north was in the virtual village. To investigate how well participants deduced the cardinal directions from the virtual Sun’s position in VR, we asked them at the end of each exploration to turn so that they would face their subjectively estimated north (Figure 8A). The estimations of 16 out of 22 participants lay within 45° deviation from true north and six below 3°. Thus, participants’ knowledge of north was remarkably accurate. Nevertheless, we found no correlation between deviation from true north and accuracy (Pearson correlation between the accuracy in the absolute orientation task and deviation from north with 3-s condition: rho(22) = 0.065, p = 0.78 and with infinite-time condition: rho (22) = −0.02, p = 0.94). Note that we collapsed the full circle to angles from 0 to 180° for all analyses (Figure 8B). Therefore, our results suggest that despite having a relatively accurate estimation of the northerly direction in the virtual village, participants could not use it to increase their knowledge of the orientation of buildings in Seahaven.
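Collapsing the full circle to angles from 0 to 180° means treating a deviation to the left and to the right of true north as equivalent. A minimal Python sketch of this folding is given below; the function name and the example headings are illustrative assumptions.

```python
def collapse_to_half_circle(angle_deg):
    """Fold any angle in degrees onto the range 0..180.

    The angle is first wrapped into 0..360; values above 180 are mirrored,
    so that 350 degrees and 10 degrees both give a deviation of 10 degrees.
    """
    wrapped = angle_deg % 360.0
    return 360.0 - wrapped if wrapped > 180.0 else wrapped


# Hypothetical headings relative to true north (0 degrees).
headings = [0.0, 10.0, 350.0, -30.0, 180.0, 190.0]
deviations = [collapse_to_half_circle(h) for h in headings]
print(deviations)
```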

FIGURE 8. The estimate of cardinal directions in VR: the polar histogram with a bin size of 1° shows the subjective estimation of the north after VR exploration by the participants (A). Up is true north. The scatter plot shows the correlation between accuracy in the absolute orientation task and deviation from the north for the 3-s condition in red and the infinite-response condition in blue (B). One dot depicts the combination of accuracy and absolute deviation from the north for one participant. Lines depict regression lines (3-s condition in red and infinite-time condition in blue). Colored shaded areas depict the respective 95% confidence intervals.

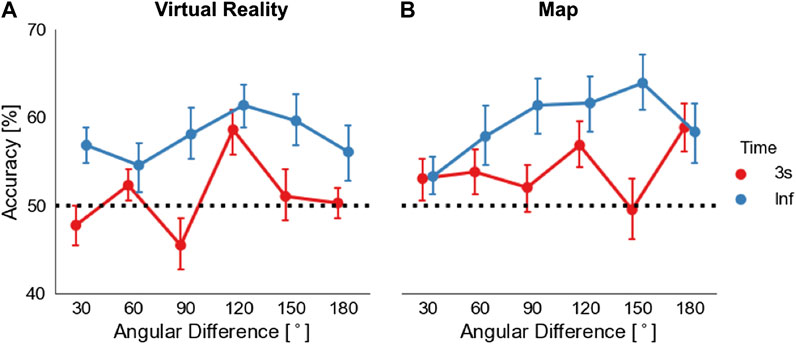

Accuracy as a Function of Angular Difference of Choice Options

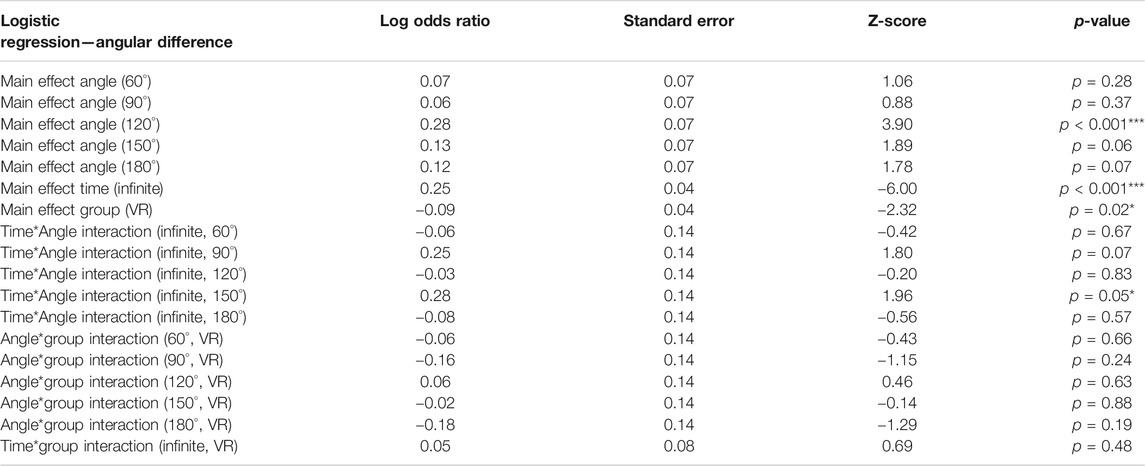

We hypothesized that accuracy would improve with larger angular differences between choice options of our 2AFC tasks independent of whether exploration was in the virtual environment or using the map. All participants were required to choose between two alternative choices that differed from each other in varying angular degrees in steps of 30°. The accuracy was calculated for each bin, combining all tasks but separately for the 3 s and infinite-time conditions.

For statistical analysis, we performed another logistic regression (Table 4) to model the log odds ratio of being accurate based on angle (the angular difference between decision choices: either 30°, 60°, 90°, 120°, 150°, or 180°) and time (3 s or infinite-time condition) and exploration group (VR or map) (Figure 9). As discussed above, the categorical variables were coded with effect coding, and hence the model coefficients can be directly interpreted as main effects.

FIGURE 9. The angular difference of choice options: the plots show the overall task accuracy in relation to the angular difference between choices in the task stimuli after VR exploration (A) and map exploration (B). Dots depict mean accuracy in respect to 30°, 60°, 90°, 120°, 150°, and 180° categories, with a response within 3 s in red and with unlimited time in blue. Error bars represent SEM. The black dashed line marks the level of 50%.

To test the goodness of fit of our model, we performed a log-likelihood ratio test comparing the full model with the null model fitted only on the intercept. The full model yielded a significantly better model fit (χ2(18) = 77.29, p < 0.001).
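The degrees of freedom of such a likelihood ratio test equal the number of parameters that the full model adds to the intercept-only model. The Python sketch below counts these parameters under the assumption that the model contains all main effects and all two-way interactions but no three-way interaction. This structure is our assumption, not a statement from the study, although it does reproduce the 18 degrees of freedom reported here for factors with six, two, and two levels. The function name is hypothetical.

```python
from itertools import combinations


def lr_test_df(levels_per_factor):
    """Count parameters beyond the intercept for a model with all main
    effects and all two-way interactions of categorical factors.

    A factor with k levels contributes k - 1 main-effect parameters; the
    interaction of two factors contributes (k1 - 1) * (k2 - 1) parameters.
    """
    main_effects = sum(k - 1 for k in levels_per_factor)
    two_way = sum((a - 1) * (b - 1) for a, b in combinations(levels_per_factor, 2))
    return main_effects + two_way


# Angular difference (6 levels), time (2 levels), group (2 levels).
df_angular_difference = lr_test_df([6, 2, 2])
print(df_angular_difference)
```

A model that also contained the three-way interaction would add (6 − 1) × (2 − 1) × (2 − 1) = 5 further parameters.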

Our logistic regression analysis showed a main effect for time and for group with a significantly higher accuracy for the infinite-time condition (log odds ratio = 0.25, SE = 0.04, df = 9502, z = −6.0, p < 0.001) and a higher accuracy in the map group (log odds ratio = −0.09, SE = 0.07, df = 9496, z = 2.32, p = 0.02). The analysis also revealed a significantly higher accuracy for 120° angular difference between task choices compared to all other angular differences (log odds ratio = 0.28, SE = 0.07, df = 9497, z = 3.90, p < 0.001). Additionally, we found a significant time*angle interaction with a significantly higher accuracy in the infinite-time condition for 150° angular difference between choice options (log odds ratio = 0.28, SE = 0.14, df = 9491, z = 1.96, p = 0.05). We found no further significant effects (Table 4 and Figure 9).

To summarize, analyzing the effect of the angular difference of choice options on task accuracy, we found a significant main effect of angle, independent of the source of exploration, with higher accuracy at a 120° angular difference between task choices. Additionally, we found a significantly higher accuracy for a 150° difference between task choices in the infinite-time condition than in the 3-s condition.

Accuracy as a Function of Distance

In line with previous research (Meilinger et al., 2015), we hypothesized a distance effect with higher task accuracy for smaller distances between tested buildings after experience in the immersive virtual environment. As this analysis requires the distance between two buildings, we only considered the relative orientation and the pointing task. We had only two buildings in a trial in the pointing task: the prime and the target, whose distance we directly compared. In the relative orientation task, we compared the distance between the prime and the correct target building only. So, distance is defined as the distance between predefined building pairs. For the analysis, we averaged the distance of building pairs over subjects.

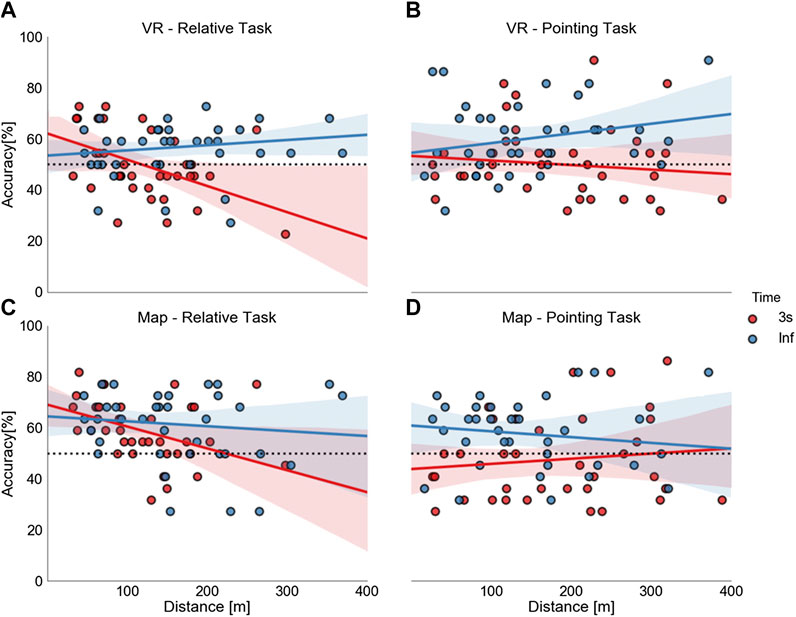

To investigate the dependencies of accuracy on the distance, we calculated an ordinary least squares linear regression model with accuracy as the dependent variable (Figure 10). Here, we focus on the effects of distance and the modulation by time and group as the independent factors. Our linear regression model for the relative orientation task revealed (Table 5 and Figure 10A) a main effect for distance (beta = −0.04, t (137) = −2.89, p = 0.004). This showed that, with a one-unit change of distance, accuracy decreased by 0.04%. Furthermore, we found a two-way interaction for time*distance (beta = 0.08, t (137) = 2.59, p = 0.01), which shows that time conditions affected distance differentially with the 3-s response condition resulting in a larger decrease in accuracy (by 0.08% for one unit change in distance) in comparison to the infinite-time condition. We did not find any further significant results for the relative or pointing tasks (Table 5 and Figure 10B).

FIGURE 10. Effects of distance: the scatter plots show the correlation of distance (abscissa) and task accuracy (ordinate) (A and B: VR; C and D: map). The dots depict the combination of time and distance averaged over subjects for a building pair: the 3-s condition in red and infinite-time condition in blue, on the left side for the relative orientation task (A and C) and on the right side for the pointing task (B and D). The straight lines depict the regression lines and the colored shaded areas the respective 95% confidence intervals. The black dashed line marks the level of 50%.

Taken together, these results unexpectedly showed the same influence pattern of distance after exploration in VR and with a map. In both groups, a distance effect was only visible with higher accuracy for shorter distances between tested buildings in the relative orientation task with a response within 3 s.

Accuracy as a Function of FRS Scaling

To estimate subjectively rated abilities in spatial-orientation strategies learned in real-world environments, we asked subjects to fill out the FRS questionnaire (Münzer et al., 2016; Münzer and Hölscher, 2011). To investigate the influence of these spatial-orientation abilities on task accuracies after exploring a virtual village, we performed the more robust Spearman’s rank correlation analysis of the FRS scales and task accuracies and reanalyzed the map data accordingly. After VR exploration, we did not find significant correlations with any of the task and time accuracies (p > 0.09) for scale 1 (the global-egocentric orientation scale). Similarly, for scale 2, the survey scale, the results showed no significant correlation (p > 0.06). For scale 3, the cardinal directions scale, we found a correlation with the absolute orientation task with unlimited response time [rho(19) = 0.51, p = 0.03]. No other correlations for scale 3 were significant (p > 0.13). After map exploration, we found a correlation between scale 1 and the pointing task with a 3-s response time [rho(19) = 0.488, p = 0.03]. No other correlations were significant (p > 0.07). As the p-values did not survive Bonferroni multiple comparison correction (p < 0.002), we are cautious about interpreting these results any further.

Discussion

In the present study, our research question was whether and how spatial learning is influenced by different knowledge acquisition sources, comparing experience in the immersive virtual environment with the learning of an interactive city map of the same environment (König et al., 2019). Taken together, our results showed a significantly higher accuracy when unlimited time was given for cognitive reasoning than for a response within 3 s, independent of the source of exploration and with a slight overall higher accuracy after map exploration. Furthermore, we found a higher accuracy for judging straight-line directions between buildings after exploration in the immersive VR than after exploration with the map. After map exploration, we found a higher accuracy in tasks testing cardinal directions and building-to-building orientation. The increased familiarity of buildings was weakly correlated with an increase in task accuracy. We found an alignment-to-north effect only after map exploration, in contrast to exploration in immersive VR, where we found no orientation specificity of task accuracies. Our results revealed the same pattern for distances after VR and map exploration, having a distance effect visible with higher accuracy for shorter distances only in judging relative orientations of buildings within 3 s. We found no significant correlation between task accuracies and the scales of the FRS questionnaire in either group. Overall, our results suggest that spatial knowledge acquisition was influenced by the source of spatial exploration with higher accuracy for action-relevant judgment of straight-line directions between buildings after exploration in immersive VR and superior knowledge of cardinal directions and building-to-building orientation after map exploration.

Different Sources of Information in Spatial Cognition

As the information sources for spatial learning provide different information, the learned and memorized spatial knowledge is also expected to differ. Investigating spatial knowledge acquisition after direct experience in real-world surroundings, previous research revealed that landmark, route, and survey knowledge might evolve (Siegel and White, 1975; Thorndyke and Hayes-Roth, 1982; Montello, 1998; Richardson et al., 1999), even though there are large individual differences (Montello, 1998; Ishikawa and Montello, 2006; Wiener et al., 2009; Hegarty et al., 2018). Direct experience supports route knowledge in relation to an egocentric reference frame, whereas spatial learning with a map heightens survey knowledge in relation to an allocentric reference frame (Thorndyke and Hayes-Roth, 1982; Richardson et al., 1999; Taylor et al., 1999; Montello et al., 2004; Shelton and McNamara, 2004; Frankenstein et al., 2012; Meilinger et al., 2013; Meilinger et al., 2015). As a map directly provides a fixed coordinate system and the topography of the environment, survey knowledge is supposed to be preferentially derived from maps (Thorndyke and Hayes-Roth, 1982; Montello et al., 2004). However, it can also be obtained from navigation within an environment (Siegel and White, 1975; Thorndyke and Hayes-Roth, 1982; Klatzky et al., 1990; Montello, 1998). Spatial navigation in the real world is a highly individual and complex activity.

To clarify spatial learning from different sources, Thorndyke and Hayes-Roth (1982) investigated the spatial knowledge of a complex real-world building acquired after navigation therein and compared it to that acquired from map use. Their results revealed that after map learning, participants were more accurate in estimating Euclidean distances between locations and the locations of objects in the tested environment. After navigation experience, participants were more accurate at judging route distances and relative directions from a start to a destination location; the latter task is considered a test of survey knowledge (Montello et al., 2004). In the present study, we performed a judgment-of-direction task between the locations of buildings in a virtual village, similar to Thorndyke and Hayes-Roth (1982). In line with Thorndyke and Hayes-Roth (1982), we found significantly higher accuracy in the judgment-of-direction task after direct exploration of the virtual environment than after map exploration.

Thorndyke and Hayes-Roth (1982) argued that participants using a map were required to perform a perspective switch, from the bird’s-eye perspective of the map to the required ego perspective in the judgment-of-direction task. They assumed that this perspective switch might have been the cause of the lower performance after map learning. Our participants who explored the interactive map had to switch perspective even during exploration, from the bird’s-eye perspective of the map to the ego perspective required for viewing screenshots of the front-on views of the buildings. Participants who explored the immersive VR used the ego perspective during exploration. Both groups used the ego perspective in the judgment-of-direction task. Thus, the argument that the switch of perspective might explain the lower accuracy after map learning also holds for our results. More recent research supported the view that a switch in required perspective caused a reduction in performance (Shelton and McNamara, 2004; McNamara et al., 2008; Meilinger et al., 2013). However, following Thorndyke and Hayes-Roth (1982), who assumed that survey knowledge could also be achieved through navigation experience given enough familiarity with the environment, we suggest that our participants acquired some kind of spatial topographical overview after exploration of our immersive virtual environment.

In contrast, our tasks that evaluated knowledge of cardinal directions and relative orientations between buildings revealed higher accuracies after map exploration, even though participants had to perform the same perspective switch as in the pointing task. We assume that, as a map directly provides the information of cardinal directions and relative orientations, it was easier to learn this spatial information during map exploration than during exploration of the immersive VR. In summary, our results suggest that knowledge of straight-line directions between locations was preferentially acquired through exploration of the immersive VR, whereas knowledge of cardinal directions and building-to-building orientation was preferentially derived from the interactive map.

In real-world spatial navigation studies, orientation specificity and distance between tested objects were found to be differential factors after direct experience in the environment and map use. Orientation specificity is measured as an alignment effect visible in higher accuracy when learned and retrieved orientations were aligned. This was consistently found after spatial learning that provided a fixed reference frame such as cardinal directions of a map or environmental features (McNamara, 2003; McNamara et al., 2003; Montello et al., 2004; Frankenstein et al., 2012; Brunyé et al., 2015). After direct experience in the environment, which might lead to multiple local reference frames (Montello et al., 2004; Meilinger et al., 2006, 2015; Meilinger, 2008), an alignment effect was much less consistently found (Presson and Hazelrigg, 1984; Burte and Hegarty, 2014). In agreement, our results revealed no alignment effect of tested building orientations toward north after experience in the immersive virtual village. Even though participants were remarkably accurate at estimating north after VR exploration, they could not use this knowledge to improve task accuracy. In contrast, we found an alignment effect toward north after map exploration (König et al., 2019). Thus, our results are in line with a differential effect of orientation specificity after experience in immersive VR and map exploration.
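
As an illustration of how orientation specificity of this kind can be quantified, the following minimal Python sketch computes each tested orientation's angular deviation from north and compares mean accuracy for aligned and misaligned trials. It is not the analysis code of the present study: the function names, the 45° alignment threshold, and the example trials are hypothetical and chosen only for illustration.

```python
def deviation_from_north(orientation_deg):
    """Smallest absolute angle in degrees (0..180) between an orientation and north (0 deg)."""
    return abs((orientation_deg + 180.0) % 360.0 - 180.0)


def accuracy_by_alignment(trials, threshold_deg=45.0):
    """Mean accuracy for trials aligned vs. misaligned with north.

    trials: iterable of (orientation_deg, correct) pairs, where `correct` is a bool.
    threshold_deg: hypothetical cut-off; deviations at or below it count as aligned.
    """
    bins = {"aligned": [], "misaligned": []}
    for orientation_deg, correct in trials:
        aligned = deviation_from_north(orientation_deg) <= threshold_deg
        bins["aligned" if aligned else "misaligned"].append(1.0 if correct else 0.0)
    # An empty bin yields NaN rather than raising a division error.
    return {
        name: (sum(values) / len(values) if values else float("nan"))
        for name, values in bins.items()
    }


# Hypothetical example trials: (building orientation in degrees, response correct?)
example_trials = [(0, True), (350, True), (20, False),
                  (180, False), (90, True), (200, False)]
alignment_accuracy = accuracy_by_alignment(example_trials)
```

In this sketch, an alignment effect would appear as a higher mean accuracy in the aligned bin than in the misaligned bin; whether such a difference is reliable would still have to be tested statistically.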

Investigating the influence of the distance between tested buildings, previous research in real-world environments indicated that when acquiring knowledge of a large-scale environment by direct experience, smaller distances between tested objects improved task performance, whereas after learning with a map, no such distance effect was found (Loomis et al., 1993; Frankenstein et al., 2012; Meilinger et al., 2015). In contrast, our results revealed the same pattern of distance dependency after village exploration in VR and with a map. In both groups, with unlimited time for cognitive reasoning, task accuracies were not influenced by the distance between tested buildings. When responses within 3 s were required, however, we found a significant negative correlation between accuracy and distance in judging the relative orientation between buildings, but not in judging the direction between building locations in the pointing task. Our virtual village’s spatial extent, which allowed for distances between buildings of up to 400 (virtual) meters, is relatively large for a virtual environment. However, compared to a real-world city, this distance is small and might not be large enough to yield distance effects like those found in a larger real-world city. Thus, our results revealed a comparable pattern for the influence of distance on task accuracy after VR and map exploration, with a distance effect only in judging building-to-building orientation with responses within 3 s, but the extent of our virtual village might not be large enough to investigate this effect reliably.
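
A distance effect of this kind corresponds to a negative correlation between the distance separating the tested buildings and task accuracy. The following minimal Python sketch shows one way such a relationship could be computed with a Pearson correlation coefficient. It is an illustration under stated assumptions, not the analysis of the present study: the function name, the choice of Pearson's r, and the example values are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical example: distances between tested buildings in virtual meters
# and the mean accuracy observed for trials at each distance.
example_distances = [50, 100, 200, 400]
example_accuracies = [0.70, 0.65, 0.60, 0.50]
distance_effect_r = pearson_r(example_distances, example_accuracies)
```

A negative value of `distance_effect_r` indicates that accuracy decreases as distance increases. With real data, the coefficient would be accompanied by a significance test and computed separately for each task and response-time condition.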

Influence of Experiment and Task Design

As an important factor, we have to consider the influence of the experimental design on the results. With our design, we aimed to provide an experience in VR that is close to natural experience, but there are some limitations. We used an HTC Vive headset, an immersive virtual environment setup that has been shown to offer easy handling of the controllers and a high resolution per eye (Kose et al., 2017). Thus, participants felt surrounded by the virtual environment with a 360° panoramic scenery, which supported the feeling of presence in the VR. Furthermore, the HTC Vive headset provided a field of view of 110° that also allowed peripheral vision, which is essential for developing survey knowledge (Sholl, 1996). For the experience of movement, we provided natural visual flow and physical body rotation on a swivel chair combined with forward movement that was controlled by the participants with a handheld controller. We are aware that, with this design, the translational movement provided by a controller does not resemble natural movement, even though this setup was shown by Riecke et al. (2010) to result in performance levels comparable to those of full walking. To provide the experience of translational movement, designs like an omnidirectional treadmill (Darken et al., 1997) or the VRNChair (Byagowi et al., 2014; Aldaba and Moussavi, 2020) could be considered. In our experimental design, we provided the VR group with free exploration in our virtual environment. We suggest that this might be considered as a primary environment, which would allow an experience more similar to the direct experience of a natural environment than indirect sources like maps (Presson and Hazelrigg, 1984; Montello et al., 2004). Our interactive city map provided participants with a north-up city map from a bird’s-eye perspective. Additionally, the interactive feature presented screenshots of the buildings’ front views from a pedestrian perspective.
This interactive feature is not typical for regular city maps. Nevertheless, we consider our interactive city map more like an indirect source than a direct experience for spatial learning. With both exploration sources, participants covered the whole village area well. Buildings that were viewed longer and more often, i.e., buildings with higher familiarity, were associated with improved task accuracies in both groups. Both groups were tested identically on screenshots of the virtual village buildings, which did not provide embodied experience in the test phase. Taken together, although direct experience in the real world differs in many aspects from experience in our virtual environment, we suggest that Seahaven is a valid virtual environment to investigate spatial learning by experience in immersive VR. Further, it allows the comparison to spatial learning with the use of our interactive map of the same VR environment as an indirect source.

The design of the task, in addition to the source of spatial exploration, also has to be considered as an influential factor in spatial knowledge acquisition (Montello et al., 2004). Pointing tasks in spatial research generally test judgment of directions from a specific location to another specified location. Beyond this general structure, there exists a large variety of different designs (e.g., Shelton and McNamara, 1997; Mou and McNamara, 2002; McNamara, 2003; McNamara et al., 2003; Montello et al., 2004; Waller and Hodgson, 2006). In recent research, Zhang et al. (2014) investigated the development of cognitive maps after map and desktop VR learning with two different pointing tasks adapted from previous research (Mou et al., 2004; Holmes and Sholl, 2005; Waller and Hodgson, 2006). They used a scene and orientation-dependent pointing task, which provided egocentric embodied scene and orientation information, and a judgment of relative direction task, which did not give any orientation information and was presumed to be based on remembered knowledge of allocentric relations between landmarks. After spatial exploration in our immersive VR, our pointing task was performed outside the VR and displayed screenshots of the tested buildings in a 2AFC design. With this design, we stayed within the visual domain for testing. This allowed the use of identical test conditions after VR and map learning. Zhang et al. (2014) found superior performance in the scene and orientation-dependent pointing task after spatial learning in VR. In contrast, after map learning of the VR environment, performance in that study was superior in the judgment of relative direction task. We found higher accuracy with our pointing task after exploration in the immersive VR, which agrees with Zhang et al.’s (2014) results.
We suggest that even though the pointing stimuli in the task did not provide scene and orientation information beyond the screenshots taken in VR, the display of these visual images triggered the remembered visual scene information that was available while exploring the virtual environment. As the map exploration solely provided the screenshots of the buildings and the city map from a bird’s-eye view, no additional embodied information was learned. Our results suggest that accuracy in our pointing task was supported by remembered scene and orientation information after exploration in VR, which led to higher accuracy than after map exploration.

In everyday navigation, spontaneous decisions and careful deductive planning are combined. This is in line with dual-process theories (Evans, 1984; Finucane et al., 2000; Kahneman et al., 2002; Evans, 2008), which distinguish rapid, intuitive cognitive processes, “System 1” processes, from slow, deductive, analytical cognitive processes, “System 2” processes. Kahneman et al. (2002) suggested that “System 2” processes, once they achieve greater proficiency, descend to and improve “System 1” processes. Thus, to investigate which kind of cognitive processes were used to solve our tasks, we investigated two response time conditions in the present study. We used a response window that required a response within 3 s, testing a rapid decision, and an infinite-time condition with unlimited time for a response, allowing for analytical cognitive reasoning. After both VR and map exploration, our results revealed significantly higher accuracy with unlimited time to respond, which allowed for slow, deductive cognitive reasoning, than with responses within 3 s. Thus, our results suggest that deductive cognitive reasoning in line with “System 2” processes was important for solving our spatial tasks after VR and map exploration of Seahaven.

Comparison of Spatial Cognition in VR to the Real World