Co-Design of a Trustworthy AI System in Healthcare: Deep Learning Based Skin Lesion Classifier

- 1Frankfurt Big Data Lab, Goethe University Frankfurt, Frankfurt, Germany

- 2Department of Business Management and Analytics, Arcada University of Applied Sciences, Helsinki, Finland

- 3Data Science Graduate School, Seoul National University, Seoul, South Korea

- 4German Research Center for Artificial Intelligence (DFKI), Kaiserslautern, Germany

- 5Health Ethics and Policy Lab, Swiss Federal Institute of Technology (ETH Zurich), Zurich, Switzerland

- 6Department of Dermatology, University Clinic Münster, Münster, Germany

- 7Department of Dermatology, Medical Faculty, Heinrich-Heine University, Düsseldorf, Germany

- 8Section of General Practice and Research Unit for General Practice, Department of Public Health, Faculty of Health and Medical Sciences, University of Copenhagen, Copenhagen, Danemark

- 9Primary Health Care Research Unit, Region Zealand, Denmark

- 10École des médias, Collège André-Laurendeau, Université du Québec à Montréal and Philosophie, Montreal, QC, Canada

- 11Philosophy Department, Pace University, New York, NY, United States

- 12Department of Informatics, Umeå University, Umeå, Sweden

- 13Department of Medicine and Division of Infectious Diseases and Immunology, NYU Grossman School of Medicine, New York, NY, United States

- 14Department of Computer Science, TU Kaiserslautern, Kaiserslautern, Germany

- 15Department of Computer Science (DIKU), University of Copenhagen (UCPH), Copenhagen, Denmark

- 16Department of Mathematics and Computer Science, Eindhoven University of Technology, Eindhoven, Netherlands

- 17Center for Human-Compatible AI, University of California, Berkeley, CA, United States

- 18Department of Biomedical Engineering, Basel University, Basel, Switzerland

- 19The Global AI Ethics Institute, Paris, France

- 20Section for Health Service Research and Section for General Practice, Department of Public Health, University of Copenhagen, Copenhagen, Denmark

- 21Centre for Research in Assessment and Digital Learning, Deakin University, Melbourne, VIC, Australia

- 22Ethics & Philosophy Lab, University of Tuebingen, Tuebingen, Germany

- 23Faculty of Law, University of Cambridge, Cambridge, United Kingdom

- 24Center for the Study of Ethics in the Professions, Illinois Institute of Technology, Chicago, IL, United States

- 25Department of Food and Resource Economics, Faculty of Science, University of Copenhagen, Copenhagen, Denmark

- 26Hautmedizin Bad Soden, Bad Soden, Germany

- 27Charité Lab for AI in Medicine, Charité Universitätsmedizin Berlin, Berlin, Germany

- 28QUEST Center for Transforming Biomedical Research, Berlin Institute of Health (BIH), Charité Universitätsmedizin Berlin, Berlin, Germany

- 29School of Computing and Digital Technology, Faculty of Computing, Engineering and the Built Environment, Birmingham City University, Birmingham, United Kingdom

- 30School of Healthcare and Social Work Seinäjoki University of Applied Sciences (SeAMK), Seinäjoki, Finland

- 31Freelance researcher, writer and consultant in AI Ethics, London, United Kingdom

- 32Division of Cell Matrix Biology and Regenerative Medicine, The University of Manchester, Manchester, United Kingdom

- 33Human Genetics, Wellcome Sanger Institute, England, United Kingdom

- 34Industrial Engineering & Operation Research, UC Berkeley, CA, United States

- 35Intel Labs, Santa Clara, CA, United States

- 36Institute for Ethics and Emerging Technologies, University of Turin, Turin, Italy

- 37Z-Inspection® Initiative, New York, NY, United States

- 38T. H Chan School of Public Health, Harvard University, Cambridge, MA, United States

This paper documents how an ethically aligned co-design methodology ensures trustworthiness in the early design phase of an artificial intelligence (AI) system component for healthcare. The system explains decisions made by deep learning networks analyzing images of skin lesions. The co-design of trustworthy AI developed here used a holistic approach rather than a static ethical checklist and required a multidisciplinary team of experts working with the AI designers and their managers. Ethical, legal, and technical issues potentially arising from the future use of the AI system were investigated. This paper is a first report on co-designing in the early design phase. Our results can also serve as guidance for other early-phase AI-similar tool developments.

Trustworthy Artificial Intelligence Co-Design

Our research work aims to address the need for co-design of trustworthy AI in healthcare using a holistic approach, rather than monolithic ethical checklists. This paper summarizes the initial results of using an ethically aligned co-design methodology to ensure a trustworthy early design of an AI system component. The system is aimed to explain the decisions made by deep learning networks when used to analyze images of skin lesions. Our approach uses a holistic process, called Z-inspection® (Zicari, et al., 2021b), to help assisting engineers in the early co-design of an AI system to satisfy the requirement for Trustworthy AI as defined by the High-Level Expert Group on AI (AI HLEG) set up by the European Commission. One of the key features of the Z-inspection® is the involvement of a multidisciplinary team of experts co-creating together with the AI engineers, their managers to ensure that the AI system is trustworthy. Our results can also serve as guidance for other similar early-phase AI tool developments.

Basic Concepts

Z-inspection® can be considered an ethically aligned co-design methodology, as defined by the work of Robertson et al. (2019) who propose a design process of robotics and autonomous systems using a co-design approach, applied ethics, and values-driven methods. In the following, we illustrate some key concepts.

Co-Design

Co-design is defined as a collective creativity, applied across the whole span of a design process, that engages end-users and other relevant stakeholders (Robertson et al., 2019). In their methodology, Robertson et al. (2019) suggest that the design process is open, in the sense that within this process “interactions occur in a broader socio-technical context”; this is the reason why “stakeholder engagement should not be restricted to end-user involvement but should encourage and support the inclusion of additional stakeholder groups” which are part of the design process or which are impacted by the designed product. The ethical aspects of the process and product must also be considered in relation to the “existing regulatory environment (…) to facilitate the integration of such provisions in the early stages” of the co-design.

Vulnerability

Robertson et al. (2019) mention that “within a socio-technical system where humans interact with partially automated technologies, an end-user is vulnerable to failures from both humans and the technology”. These failures and the risks associated with them are symptomatic of power asymmetries embedded in these technologies. This stresses the importance of an “exposure analysis that employs a metric of end-user exposure capable of attributing variations across measurements to specific contributors (which) can aid the development of designs with reduced end-user vulnerability”.

Exposure

Exposure represents an evaluation of the contact potential between a hazard and a receptor (Robertson et al., 2019), for this reason, the authors state that: “A threat to an end-user, engaging with a technological system is only significant if it aligns with a specific weakness of that system resulting in contact that leads to exposure”. Conversely, every weakness can potentially be targeted by a threat—either external or arising from a component’s failure to achieve “fitness for purpose”—and so the configuration of the system’s weaknesses influences the end-user’s “exposure.” They accordingly emphasize that, in the case of autonomous systems, the “analysis of the “exposure” of the system provides a numerical and defensible measure of the weaknesses” of that system, and thus must be an integral part of the co-design process.

In this paper, we focus on the part of the co-design process that helps to identify the possible exposures when designing a system. In the framework for Trustworthy AI, exposures are defined as ethical, technical, and legal issues related to the use of the AI system.

Trustworthy Artificial Intelligence

Our process is based on the work of the High-Level Expert Group on AI (AI HLEG) set up by the European Commission who published ethics guidelines for trustworthy AI in April 2019 (AI HLEG, 2019). According to the AI HLEG, for an AI to be trustworthy, it needs to be:

Lawful–respecting all applicable laws and regulations,

Robust–both from a technical and social perspective, and

Ethical–respecting ethical principles and values.

The framework makes use of four ethical principles rooted on fundamental rights (AI HLEG, 2019): Respect for human autonomy, Prevention of harm, Fairness, and Explicability.

Acknowledging that these ethical principles cannot give solutions to AI practitioners, the AI HLEG suggested, based on the above principles, seven requirements for Trustworthy AI (AI HLEG, 2019) that should enable the self-assessment of a AI System, namely:

1) Human agency and oversight,

2) Technical robustness and safety,

3) Privacy and data governance,

4) Transparency,

5) Diversity, non-discrimination and fairness,

6) Societal and environmental wellbeing,

7) Accountability.

These guidelines are aimed at a variety of stakeholders, especially guiding practitioners towards more ethical and more robust applications of AI. The interpretation, relevance, and implementation of trustworthy AI, however, depends on the domain and the context where the AI system is used. Although these requirements are a welcome first step towards enabling an assessment of the societal implication of the use of AI systems, there are some challenges in the practical application of requirements, namely:

• The AI guidelines are not domain specific.

• They offer a static checklist and do not offer specific guidelines during design phases.

• There are no available best practices to show how to implement how such requirements can be applied in practice.

Particularly in healthcare, discussions surrounding the need for trustworthy AI have been soaring. In the next two sections, we illustrate the process of co-design that we use for a specific use case in healthcare. Related Work reviews some relevant related work and Conclusion presents some conclusions.

Co-Design of a Trustworthy Artificial Intelligence system in Healthcare: Deep Learning Based Skin Lesion Classifier

In recent years, AI systems statistically reached human-level performances in the diagnosis of malignant melanomas from dermatoscopic images in a visual based experimental setting. Such Computer-Aided Diagnosis (CAD) systems have already yielded higher sensitivity and specificity in diagnosing malignant melanoma analyzing dermatoscopic pictures compared to well-trained dermatologists (Brinker T. J. et al., 2019; Brinker et al., 2019 TJ.). However, the acceptance of these CAD systems in real clinical setups is severely limited primarily because their decision-making process remains largely obscure due to the lack of explainability (Lucieri, et al., 2020a). Moreover, the images used are specific (dermoscopy images), whereas dermatologists will usually palpate, as well as look at the region, to determine the position of the lesion, age, sex, etc.

A team led by Prof. Andreas Dengel at the German Research Center for Artificial Intelligence (DFKI) developed a framework for the domain-specific explanation of arbitrary Neural Network (NN)-based classifiers. Dermatology has been chosen as a first use case for the system. They developed a prototype called Explainable AI in Dermatology (named exAID) (Lucieri, et al., 2020b). exAID combines existing high-performing NNs designed for the classification of skin tumors with concept-based explanation techniques, providing diagnostic suggestions and explanations conforming with the definition of expert approved diagnostic criteria. exAID can therefore be considered in its current status a “trust-component” for existing AI systems. The designers of the exAID hoped to provide dermatologists with an easy-to-understand explanation that can help to guide the diagnostic process (Lucieri, et al., 2020b).

Status: AI System in the early design phase.

The Research Questions

How do we help engineers to design and implement a trustworthy AI system for this use case? What are the potential pitfalls of the AI system and how might they be mitigated at the development stage?

Motivation: This is a co-design conducted by a team of independent experts with the engineering team that performed the initial design of the AI component. The main goal of this research work is to help create a Trustworthy AI roadmap for the design, implementation, and future deployment of AI systems.

Co-Design Framework

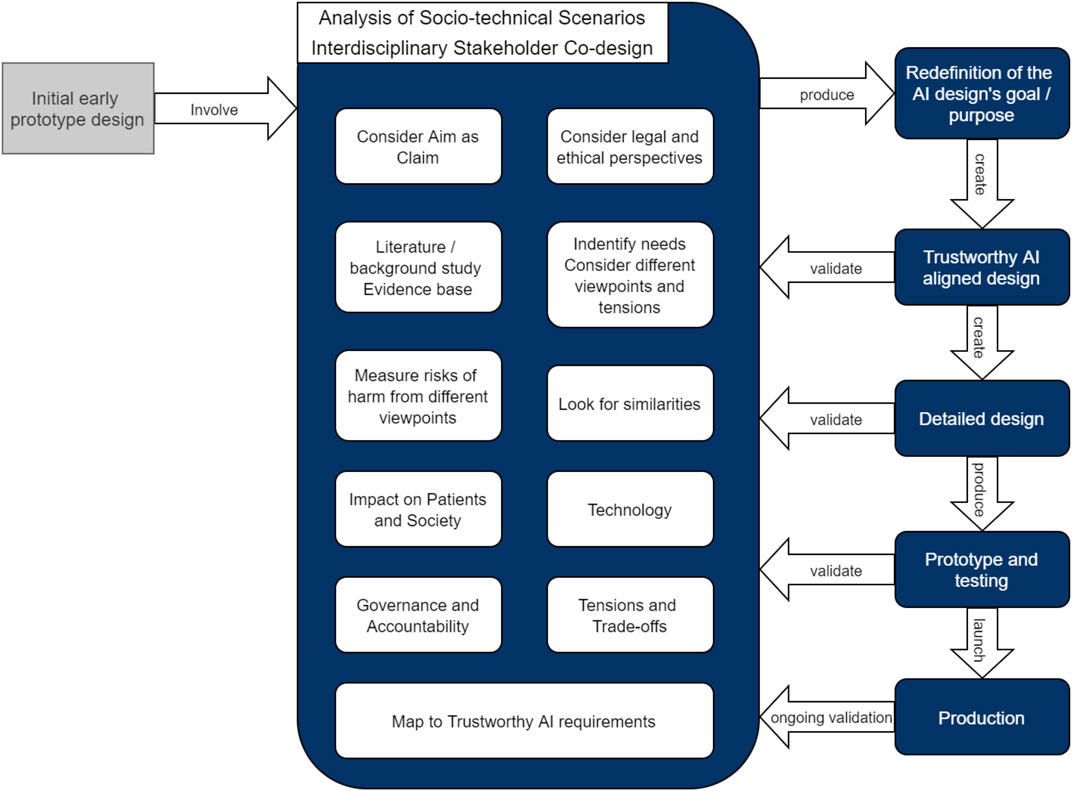

A diagrammatic representation of the proposed co-design framework is offered in Figure 1

For this use case, three Zoom workshops were organized with 35 experts with interdisciplinary background [including philosophers, ethicists, policy makers, social scientists, medical doctors, legal and data protection specialists, computer scientists and machine learning (ML) engineers] where the initial aim of the early prototype AI system was analyzed.

The initial outcome of this co-design process, as described in this paper, is the redefinition of the AI designs goal and purpose. This was achieved by discussing a number of socio-technical scenarios using an approach inspired by Leikas et al. (2019) and modified for the healthcare domain, consisting of the following phases: 1) Definition the boundaries for the AI system; 2) Identification of the main stakeholders; 3) Identification of the needs based on several different viewpoints; 4) Consideration of the Aim of the system as a claim; 5) Literature review and creation of evidence base; 6) Usage situations, Look for similarities; 7)Measure of risks of harms with respect to different viewpoints; 8) Consideration of ethical and legal aspects.

After this initial discussion with the complete team, the co-design process was conducted in parallel with a number of smaller working groups of three to five experts each. The groups worked independently to avoid cognitive bias of the members, followed by a general meeting to assess and merging of the various results from the different working groups, taking into account the Impact on the Patients and Society, the Technology, Governance and Accountability. This resulted in the Identification of Tensions, Trade-offs and then the Mapping to the Trustworthy AI requirements, as presented in Co-Design: Think Holistically.

In the future, we plan to address the other phases of the co-design process depicted in Figure 1, namely: 1) the creation of the initial Trustworthy AI aligned design; 2) the validation of the design by iterating the co-design process; 3) the creation of a detailed design, and the validation of the detailed design by iterating the co-design process; followed by 4) the implementation of the prototype with testing and validation; and finally 5) putting the system in production and ongoing validation.

Socio-Technical Usage Scenarios of the Artificial Intelligence System

Socio-technical usage scenarios is a participatory design tool for achieving a trustworthy AI design and implementation (Leikas et al., 2019). Socio-technical usage scenarios are also a useful tool to describe the aim of the system, the actors, their expectations, the goals of actors’ actions, the technology, and the context, while consequentially fostering moral imagination and providing a common ground where experts from different fields can come together (Lucivero, 2016, p. 160). We use socio-technical scenarios within discussion workshops, where expert groups work together to systematically examine, and elaborate the various tasks with respect to different contexts of AI under consideration.

Socio-technical scenarios can also be used to broaden stakeholders’ understanding of one’s own role in the technology, as well as awareness of stakeholders’ interdependence. The theoretical background behind the socio-technical scenarios as a way to trigger moral imagination and debate is linked to the pragmatist philosophical tradition, which states that ethical debates must be both principles-driven and context-sensitive (Keulartz et al., 2002; Lucivero, 2016, p. 156). This is why these tools are especially interesting for the holistic approach of the Z-inspection®.

In Z-Inspection®, socio-technical usage scenarios are used by a team of experts, to identify a list of potential ethical, technical, and legal issues that need to be further deliberated (Zicari, et al., 2021a). Scenarios are used as part of the assessment of an AI system already deployed, or as a participatory design tool if the AI is in the design phase (as in this case). We have been using socio-technical scenarios within discussion workshops, where expert groups work together to systematically examine and elaborate the various tasks with respect to different contexts of AI.

In this early phase of the design, multiple usages for the same AI technology are possible. Each use of the same technology may pose different challenges. In our approach, we work together with the designers and the prime stakeholders to identify such possible usage of the technology. We consider the pros and cons and evaluate which of the various possible usages is the primary use case. The basic idea is to analyze the AI system using the socio-technical scenarios with relevant stakeholders including designers (when available), domain, technical, legal, and ethics experts (Leikas et al., 2019).

In our process, the concept of ecosystems plays an important role in defining the boundaries of the assessment. We define an ecosystem, as applied to our work, as a set of sectors and parts of society, level of social organization, and stakeholders within a political and economic context. It is important to note that illegal and unethical are not the same thing, and that both law and ethics are context dependent for each given ecosystem. The legal framework is dependent on the geopolitical boundaries of the assessment.

Co-Design: Think Holistically

We report in this section some of the main lessons we learned so far for this use case when assisting the engineers in making the AI design trustworthy. These lessons learned can be considered a useful guideline when assessing how trustworthy an AI design is for similar tool developments.

In the process we did not prioritize discussing different viewpoints or similarities. We began our discussion by building common concepts and discussing the used terminology. In our example we explored our definitions of: 1) early stage vs. localized stage, 2) early diagnosis vs. timely diagnosis, 3) survival vs. mortality, and 4) overdiagnosis vs underdiagnosis. From these discussions similarities and different viewpoints were revealed.

An important aspect of this co-design process is a good balance between sequentiality of actions and freedom of discussion during the workshops. Recurrent, open-minded, and interdisciplinary discussions involving different perspectives of the broad problem definition turned out as extremely valuable core components of the process. Their outcomes turned out to be fertile soil for the identification of various aspects and tensions that could then be transferred to the current state of development of the system within self-contained focus groups to compile action recommendations, streamlining the whole process.

The early involvement of an interdisciplinary panel of experts broadened the horizon of AI designers which are usually focused on the problem definition from a data and application perspective. The co-creation process with its different perspectives highlighted different important aspects, which aided the AI design in a very early phase, benefiting the final system and thus the users. Successful interdisciplinary research is challenging and requires participants to articulate in detail exactly how concepts—including the most basic—are theoretically and practically understood. Using “accuracy of a diagnostic test”, for example, not only requires clarification of how each of the words are understood as scientific concepts but also clarification regarding the accuracy for whom, for what purpose, which context, and under what presumptions, etc.

One of the key lessons learned is that by evaluating the design of trustworthy AI with a holistic co-creative approach, we are able to identify a number of problems that were not possible with traditional design engineering approaches. We list some of them in the rest of this section.

Initial Aim of the ML System

The initial aim of the exAID framework was to act as an add-on component to support dermatologists in clinical practice. Given an existing AI system trained for skin melanoma detection, the add-on component goal is to explain the system’s decisions in terms that dermatologists can understand. With these explanations, the AI system can support the clinician’s decision-making process by providing a qualified second opinion on relevant features in a dermatoscopic image and, therefore, potentially improve diagnostic performance (Tschandl et al., 2020).

The Initial Prototype

Image-based ML algorithms use pattern recognition to derive abstract representations that reveal structures in the image which can be used for automatic classification. This abstract representation can significantly deviate from the human perception of the problem. However, first efforts in decoding the ML representation in skin lesion classification indicate that the process is partially transferable to human understandable domains (Lucieri, et al., 2020a).

The system explanation module transfers the abstract representation of the ML algorithm into expert entrusted concepts to make the ML algorithm’s diagnostic suggestion more understandable by a clinician (Lucieri, et al., 2020a; Lucieri, et al., 2020b). These explanatory concepts are borrowed from the established 7-point checklist algorithm (Argenziano et al., 1998) and include the following: 1) Typical Pigment Network, 2) Atypical Pigment Network, 3) Streaks, 4) Regular Dos & Globules, 5) Irregular Dots & Globules, 6) Blue Whitish Veil.

The presence or absence of each of these concepts is quantified. These quantitative results are leveraged by a rule-based method to generate textual explanations and complemented by heatmaps that localize the criteria in the original dermatoscopic image.

Limitations of the Initial Prototype

The project was in the early design phase and no final target groups which can benefit from it were identified. So far, the use by dermatologists in clinic or practice and self-screening by patients were considered. Furthermore, no clinical trials have been conducted so far.

The ML model currently used for skin lesion classification has been trained on a limited number of publicly available skin images (Mendonça et al., 2013; Codella et al., 2018; Kawahara et al., 2019; Rotemberg et al., 2021). As most of the data was acquired from a small number of distinct research facilities, the dataset suffers from variations in image quality and the occasional presence of artifacts and it cannot be guaranteed that the data is representative of patients’ backgrounds (e.g., age, sex, skin tone distribution). The ISIC dataset (Codella et al., 2018) has also been shown to have significant bias in its images (Bissoto et al., 2019, 2020).

Darker skin tones are absent from the training data. As the system should only be used with skin types that were represented in the training datasets, it is at this stage limited to a population of Fitzpatrick skin phototypes I—III (Fitzpatrick, 1988) and is therefore not appropriate to use for those with darker skin tones.

The training data also only contains lesions from certain body regions and excludes others, e.g. there is no training data from moles on the genitals, nails or the lining of the mouth. As the dermatoscopic features of melanoma/naevi differ between body regions, the system should only be used in areas that the underlying AI was trained for.

The designers chose to use dermatoscopic images and therefore, only images from dermatoscopes should be used, excluding clinical images. In addition, the chosen dataset excludes common skin alterations (e.g. piercings, tattoos, scars, burns, etc. The system’s use should consequently be restricted to cases where none of these alterations are present. What guardrails are in place to ensure that this model is never used outside of its scope? This may require that all users of the model be well informed of its limitations and scope.

Re-Evaluate and Understand What is the “Aim” of the System.

Deciding and defining the aim of the system is obviously important yet surprisingly ambiguous in a research setting of different disciplines and research traditions.

The original motivation for the design of the AI system was to try solving a general problem given the lack of acceptance of deep neural network enabled Computer-Aided Diagnosis (CAD), as its decision-making process remains obscure. The designers wanted to demonstrate that it is possible to explain the results of a deep neural network used as a deep learning based medical image classifier. They have chosen publicly available data sets and open access neural network to classify skin tumors (Lucieri, et al., 2020a).

The first question we raised at the first multi-disciplinary workshop was: Is there a real need for an AI system as initially presented by the designers?

The first thing we did during the first two workshops was to clarify the motivation and the aim of such an AI system between experts. Successful interdisciplinary research is challenging and requires participants to articulate in detail exactly how concepts—including the most basic—are theoretically and practically understood. Using “accuracy of a diagnostic test”, for example, not only requires clarification of how each of the words are understood as scientific concepts but also clarification regarding the accuracy from whom, for what purpose, which context, and under what presumptions, etc.

A relevant aspect we identified up front is whether this AI system is what clinicians and patients want, and if this AI system results in more good than harm. Furthermore, the interdisciplinary exchange brought up several tensions described in detail in subsequent sections.

Consider Different Viewpoints

By considering various viewpoints together with the AI engineers, it is possible to re-evaluate the aim of the system. We present different viewpoints discussed among experts in this subsection.

During the co-creation process, the discussion with different experts in our team, including dermatologists, experts in public health, evidence-based diagnosis, ethics, healthcare, law, and ML, prompted the main stakeholder and owner of the use case, the team of DFKI, to redefine their stated main aim of the system.

When evaluating the design of the use case, it soon became clear that different stakeholders have different scopes, timeframe, and the population in mind. Thanks to the heterogeneity of our team, such differences and tensions were confronted. We present here a summary of different points of view. For each viewpoint, intensive exchange and communication took place between various domain experts, with different knowledge and backgrounds, all of whom form part of our team.

The Dermatologist’s View

We first present the dermatologist’s view as defined by two of the dermatologists in our team. This viewpoint helps clarify what kind of AI tool could help dermatologists during their daily practice.

The dermatologist’s daily routine is to examine skin lesions and to determine if they are benign or if there is a risk of malignancy, thus needing further diagnostic or therapeutic measures. Currently, the diagnostic algorithm mainly consists of patient history, assessment of risk factors, and inspection of the skin with the naked eye. The next step is dermoscopy of suspicious pigmented or not pigmented lesions, sometimes followed by videodermoscopy, which yields higher magnification and high resolution images. Further non-invasive diagnostic measures could be in-vivo confocal microscopy or electric impedance spectroscopy (Malvehy et al., 2014).

The aim of this diagnostic algorithm is to determine if the examined lesions bear a risk of malignancy or not. If this can be ruled out, the lesions will be left in place. If the risk is determined to be high, excisional biopsy or rarely incisional biopsy will be performed. In case of uncertainty, video-dermatoscopic follow-up after 3 months is another option. In the end, the final question is always: is there an indication to remove the lesion or not (a functional diagnosis). As malignancy frequently cannot be ruled out by non-invasive measures this leads to the excision of benign lesions. In the particular case of melanocytic lesions, the ratio of benign nevi detected for each malignant melanoma diagnosed, i.e. the Number Needed to Treat (NNT), is regularly used as an indicator of diagnostic accuracy and efficacy (Sidhu et al., 2012). However, this value depends on the prevalence of the disease and varies according to physician and lesion-related variables (English et al., 2004; Baade et al., 2008; Hansen et al., 2009).

The ratio of total excisions to find one melanoma varies from approximately 6–22 (number needed to treat, NNT) (Petty et al., 2020). A tool that would allow for less unnecessary excisions would thus be very helpful.

The acceptance and use of a diagnostic aid is dependent on many factors at different levels. First there is the medical level. During their training, physicians have learned to seek a second opinion from a colleague if they are uncertain. Here it is usual not only to exchange opinions, but also to include an explanation of why one decides the way one does. Therefore, explainable AI might be very beneficial for the acceptance of CADs since physicians are used to this. Of course, doctors also want to know about the quality of the second opinion. In order to evaluate the meaningfulness of the results of AI for clinical decisions, it is therefore necessary to know about the performance of a technical device (sensitivity, specificity, positive predictive value, negative predictive value, likelihood ratios, ROC (receiver operating characteristic) curve, and Area under the curve (AUC) plus the degree of overdiagnosis (Brodersen et al., 2014).

When it comes to the technical details of the presented prototype, in particular the explainability of dermoscopy criteria, it is necessary to note that uniformly accepted criteria for dermoscopy do not exist. Dermatologists often just attend weekend courses, acquire knowledge from various books or are even self-taught. This can be a problem for the wide acceptance of the discussed device. It would therefore be important that recognized expert groups first establish broadly accepted diagnostic criteria and validate the tool on an expert level. It would also be necessary to check whether existing criteria can be simply transferred to AI-devices.

Then there is a legal level. Many dermatologists fear that they could miss malignant lesions and be sued for them. As a result, lesions are more often removed than left in place. This is also where the personality of the doctor comes into play. Many doctors prefer to be on the safe side. They are also not keen to save data, such as pictures of the lesions, which can later be used against them in lawsuits. Legal questions regarding the use of diagnostic tools must therefore be clarified for the acceptance. It should also not be neglected that economic aspects play an important role. Sometimes technical devices are only used for billing reasons, and the actual output of the device is not taken into account in the decision process.

All these factors should be considered when designing a medical device. The use and acceptance of an AI device is therefore highly dependent on the end user and their training including personality and attitude towards patient care.

The Evidence-Based Medicine View

We now present the Evidence-Based Medicine View as defined by two of our domain experts in our team. This complementary viewpoint helps clarify how the AI tool could help.

In addition to the health threat of melanoma, the phenomenon of overdiagnosis is important to understand given that overdiagnosis affects the interpretation of melanoma detection and treatment effects (Johansson et al., 2019).

Overdiagnosis is about correctly diagnosing a condition that would never harm the patient (Brodersen et al., 2018). The impact of overdiagnosis can be of such magnitude that it increases the incidence, prevalence, and survival rates of the condition. This has been estimated also to be the case with melanoma (Vaccarella et al., 2019). An Australian study has estimated the proportion of overdiagnosis of melanoma in women and men to be 54 and 58% respectively in 2012 (Glasziou et al., 2020).

At the moment, there is no means to establish the potential risk of metastazation in localized stage melanomas. Because of this, most melanomas are excised, and patients are therefore labelled with a diagnosis of having cancer–a malignant disease. Thus, those melanomas that would never turn harmful, therefore counts in cancer statistics as successful (early) detection and 100% treatment effects although they would be as successful if they were not diagnosed in the first place. Therefore, mortality rates are the most valid outcome to evaluate the effect of detection and treatment, while survival rates are invalid.

Overdiagnosis also influences the interpretation of test accuracy. In evidence-based medicine (EBM), test accuracy is traditionally described within the Bayesian diagnostic paradigm as a 2 × 2 table: the result can be either positive or negative and true or false. Due to overdiagnosis, test accuracy requires a 3 × 2 table: either positive or negative harmful condition, positive or negative harmless condition, as well as true or false (Brodersen et al., 2014).

Compared to overdiagnosis, the phenomenon of underdiagnosis is less investigated and less clearly defined in EBM. In the research field of melanoma, underdiagnosis has among others been described as a wrong diagnosis/misdiagnosis (Kutzner et al., 2020) and defined as “melanoma that was initially diagnosed as a naevus or melanoma in situ” (Van Dijk et al., 2007).

Based on the above, the following questions were posed at co-creation: diagnostic precision of what? Is it precision in detecting any melanoma, those melanoma in a certain localized stage or tumor thickness, or only the melanoma that would develop into a metastasizing tumor? Or is it precision in detecting non-melanoma? or potential (non-)melanoma that should be assessed by a dermatologist? Because of overdiagnosis, it is imperative to clarify the precision in detail as it influences the interpretation of the results that follow. If not, sophisticated use of AI may detect more cases of melanoma without benefit to patients, public health and society because these additional detected cases could be due to overdiagnosis and thereby only harmful.

The Population Health View

The Population Health Perspective is provided by three of our domain experts. This viewpoint reframes the questions and goals to highlight how the AI tool can promote population health.

From the population health perspective, it is vital to create an instrument that works towards equity and does not further exacerbate the inequities already present in melanoma diagnosis and treatment (Rutherford et al., 2015). To achieve this, the criteria of inclusivity must be met, which for this use case entails prioritizing the inclusion of the full spectrum of skin tones, as well as age, and socio-economic status, in the training of the AI model. Additionally, while the clinical problem presents as one of overdiagnosis of disease, it is vital to recognize what population this issue pertains to. While melanoma may be most common among white individuals, a diagnosis is far more likely to lead to fatality for people of color. This is driven by the latency of diagnosis for the latter group (Gupta et al., 2016). Therefore, while the aim for one part of the population might be to focus on overdiagnosis, there should be a distinct aim towards addressing underdiagnosis among populations that are non-white.

The Patient View

We now present the patient view as defined by three of our domain experts in our team. This complementary viewpoint helps clarifying how the AI tool could help the patient. Patient expectations and concerns are of main interest during the whole process from design to application of the AI system. For this use case, we included the patient’s view and the general citizen perspective during the various workshops, represented by three of our domain experts in our team. In particular, one of the three team experts, works as a journalist and author on AI acceptance among citizens and had undergone dermatologist checks himself for his own research. No additional patient peer review groups were involved at this stage.

Given that the target audience and purpose of the AI system is not yet clearly defined, it is important to explore several directions and how each of them might impact patients’ health and health service experience. At this stage of the process when we refer to a patient, we did not distinguish between patients at different stages of interactions with clinicians and their relative percentage (which differs country by country): e.g. patients who are worried presenting to a general practitioner (GP) with a skin lesion and maybe being afraid they have malignant melanoma; patients who visits directly a dermatologists or who are referred to a dermatologist by a GP with a mole that is most frequently diagnosed as a benign lesion or in more rare cases as a malignant melanoma—of which many are overdiagnosed.

It is reasonable to assume that the patient’s primary goals are to have their malignant melanoma detected early, and to avoid unnecessary treatment for harmless abnormalities. Any AI system intended as a prevention tool to support lay people in self-screening could represent a significant step towards fostering patients’ active involvement in prevention efforts, especially given the shift towards telemedicine since the beginning of the COVID-19 pandemic. Research also suggests that patients are open to using new digital solutions for performing skin self-examinations, as long as they receive adequate technical support for using these tools (Dieng et al., 2019) and don’t feel that they compromise the doctor-patient relationship (Nelson et al., 2020). However, there are also certain challenges to the introduction of these new tools from the patient’s point of view.

Firstly, it would shift the diagnostic process and responsibility from the healthcare provider to patient, without additional clinical knowledge to confirm the diagnosis. Despite being able to self-screen, the patient would, however, remain dependent on the clinician’s decision to act upon the AI system’s recommendations. In other words, the system might give patients a false sense of autonomy. In cases where patient and clinician have conflicting views about diagnosis, this could strain the doctor-patient relationship. Moreover, it is important to consider that failing to detect a skin lesion, be it due to technical or human error from the patient’s side, may lead to feelings of regret and self-blame (Banerjee et al., 2018; Eways et al., 2020).

Secondly, while some patients may be capable and willing to engage in self-screening practices, others may feel overwhelmed and reluctant to do so, or may simply not be willing to engage in preventive activities (Lau et al., 2014).

Thirdly, from the patient’s point of view cost and reimbursement are important considerations. Specifically, state healthcare systems need to clarify who will bear the costs of such a software, clarify medical legal liability (as normally this would be squarely in the hands of the product producer if being advertised as a medical tool) and subsequent treatments, and whether a self-examination can replace a regular check-up by a doctor or increase its frequency.

Despite these ethical issues, the benefits of this technology are numerous. For many patients, the option of primary self-examination can reduce the effort and cost of visiting a doctor. This is particularly true in countries in which preventive examinations are not paid for by a state healthcare system or when visiting doctors, such as due to COVID-19 limitations, is more difficult. Another benefit has to do with the AI providing a second “opinion”. A well-designed system can offer detailed explanations of its reasoning underlying a diagnosis, which the clinician can communicate to the patient in layman’s language. These explanations can include a statistical probability of the presence of malignant melanoma, and offer a visual comparison of the irregularity under investigation with other cases. This makes it easier for patients to understand why a diagnosis is made.

The patient representative expects that the AI system is designed in a way that its outputs can be easily understood by non-experts, especially by using common every-day language rather than medical terms. Especially if the system is used as a standalone version outside the clinical context as an app, its user interface design must also be easy to understand with clear guidelines on what to do and how to avoid mistakes while operating the software. Medical examinations can be very stressful, even more if the examination detects a potential threat to the patient’s health. At all steps access to medical support, for example in the form of a call center that can explain findings and necessary steps as well as help to cope with the stress, is therefore mandatory.

At the same time, if the above is implemented, we need to consider what are the risks on the scope and limitation of the model. How do we ensure that all users are educated if the system is open-sourced? Who is accountable if the model is misused?

The AI system must also provide clear explanations for statistical probabilities, that put numbers into perspective for the specific patient rather than for an abstract group. If the AI system suggests an action, like extraction of a skin sample, the patient wants to know, why exactly the algorithms came to that suggestion. This can help to minimize patient’s concerns about a “black box” decision from a machine. On a very practical level, patients want to receive all available information as a printed or digital copy in order to seek a second opinion and have evidence for possible discussions with insurance companies. This is an important point supporting explainability.

The patient representative suggests that user-focus-groups with real patients be established during the design of the system in order to gain maximum acceptance of a later use.

While the patient wants to have clear and explained information another addressed potential tension of self-diagnosis is the lack of the human emotional aspect. The patient does not want to be treated as a mere number on which to collect samples from the lab and spit out a 1, 0 diagnosis but wants, also, to receive understanding and rational human support. We, therefore, expect that such self-diagnosis will be complemented with human support. Hence, in the user-focus-groups various designs can be proposed to hamper this lack such as complementing the tool with a support expert team ready to address the various emotional needs arising from its use.

The AI tool would need to be assessed from the patient perspective. Patients may have different viewpoints regarding the tool's use for self-diagnosis and for use by doctors. Some patients may wish to have a more human connection, while others may appreciate remote, self-led, medical diagnosis. Telemedicine has grown during the COVID-19 epidemic and patient perspectives on the use of self-diagnosis and remote tools may also be evolving during this time. The tool would still require the involvement of the clinician and confirmation by a clinician for future treatment, if needed.

Shifting the diagnostic process from doctor to patient can make the process more accessible (home based care, especially as telemedicine has increased during the COVID-19 epidemic) and also add new complexities, affecting cost and liability and especially as the doctor would need to confirm the diagnosis for further treatment.

The pitfalls in shifting from a doctor-based diagnostic process to a self-diagnosing process are many. First of all, if the pre-test probability of disease is decreasing, then the positive predictive value of a positive test result will decrease, resulting in more false positives. Another problem is that spectrum bias will to a large degree affect the diagnostic process so the diagnostic precision will be lower. Finally, empirical evidence strongly supports that screening for malignant melanoma increases overdiagnosis and there is a danger that self-diagnosing will lead to substantial screening, which again will lead to even more overdiagnosis.

According to recent research related to the use of AI in the diagnostics of skin cancer (Nelson et al., 2020), the majority of patients support the use of AI and have high confidence in its use. This might be due to unnecessary and overseen malignancies being expected. It should be noted that in this context, more overidagnosis could be regarded as beneficial, which is against the view point from the experts in evidence based medicine in our team. Patients would prefer a separate assessment by the physician and the AI system and be informed about the respective results.

According to (Jutzi et al., 2020) 75% of patients would recommend an AI system to their families and friends. and 94% wish symbiosis of the physician with AI. The integrity of the physician-patient-relation must be maintained. There is confidence in the accuracy of AI on the one hand, but concerns about the accuracy on the other hand. A marked heterogeneity of patient’s perspectives on the use of AI is noted.

The patient perspective would clearly encompass more than just the self-diagnosis and can be taken in many directions.

It was also noted during the analysis, that in different medical systems than Europe (e.g. US), if this AI system is being used for medical diagnosis without a doctor, this will end up placing the liability in the hands of the designers of the tool in some medical systems. Therefore, perhaps an AI system should not replace the GP or the dermatologist but be used as a decision support system.

Measure the Risk of Harming

For this use case, this is our proposal of an exposure analysis presenting a metric of end-user exposure which can aid in the development of an AI design with reduced end-user vulnerability.

Not harming, or minimizing harming a patient is an important, if not the most important, requirement for an AI for asserting acceptable ethical standards within screening assessment in the medical field. This risk requires a closer look, and quantification of the term “harm” needs to be determined. Further—the result of the quantification will differ depending on the field in which the AI is used.

To verify a superior result of an AI over a standard or current screening method, the considered set of variables must be representative of different stakeholder views, such as the healthcare system in respective countries, physician, and the patient.

For this use-case we have asked the following questions, 1) what ratio of False Positives to False Negatives is reasonable, 2) is there a standard way of quantifying the costs given differing stakeholder perspectives, 3) how do we assess if the AI system is harming or not? These are also relevant for the definition of the fairness criteria, which typically concerns inequalities in e.g. the error rates for different salient groups.

While early detection of melanoma is of prime importance, overassessment is linked with additional medical costs and an unnecessary physical and psychological burden on the affected patients. Measures reducing misdiagnosis while maintaining or increasing sensitivity have the potential to reduce psychological burden and potential consequences arising from unneeded treatments. Further, an acceptable ratio of false positives vs. negatives will also differ between cases where an AI is used. For instance, erroneous brain surgery in the case of a false positive will be far more serious than a false positive in the case of a mole removal that might or might not lead to cancer.

The number of errors must be kept to a minimum, as the number of false positives (the claim that a healthy patient has a specific diagnosis) and false negatives (the claim that a sick patient is healthy) might result in overdetection of patients that are healthy, or not treating patients that are, in fact, unhealthy or ill. It is important to keep the balance between overdetection reducing the global medical and labor costs while still maintaining a high True Positive detection rate hence ensuring people with positive cases are treated rapidly and adequately.

Requirements from a statistical point of view regarding false positives and negatives, could be expressed as: The false positive rate is calculated as

A verified histological assessment should be used as a reference measure for comparing standard methods with that of the AI. The measure confirms the level of error, which in turn must surpass that of the current best practice. The test has four cases with outcomes where only two give an unambiguous answer:

I) FNAI < FNcur and FPAI < FPcur

II) FNAI > FNcur and FPAI < FPcur

III) FNAI < FNcur and FPAI > FPcur

IV) FNAI > FNcur and FPAI > FPcur

Considering the implications of the outcome groups:

• In case I) the AI has a better outcome for both variables than the current method and the AI would be considered to comply with the “Do no harm” requirement.

• In the case of outcome IV) the AI does not pass the test as both variables have a less favorable outcome than the current method.

• Whether outcome II) and III) falls within an acceptable ethical range will depend on the gravity of the harm in which a false claim will result.

Further, testing AI-devices solely on historical–and potentially outdated–data is, in itself, a liability and warrants testing in an actual clinical setting. Thus, doctors can compare their own assessment with the AI-recommendations based on data generated from clinical studies and not limit the data to pools from historical data or data from one or two sites. Restricting data to a few sites may limit the racial and demographic diversity of patients and create unintended bias (Wu et al., 2021). However, combining real world evidence data with clinical studies together with clinical experience might help GPs and dermatologists reduce the chance of harming the patient to an acceptable level and in addition avoid costs for unnecessary surgery.

From an ethical point of view, both clinical and big data statistical population evidence must provide the direction, although, each specific AI case under consideration must also strive to consider other factors, like indication, patient prognosis and potential treatment implications as mentioned above.

Look for Similarities

During co-creation, it is important to look for similarities. This helps when defining the aim of the AI and allows one to see what challenges might exist for similar technologies.

Similarities With Genetic Testing and Other Forms of Clinical Diagnostics.

As with most clinical diagnostics, in this use case it is crucial to understand what a test can and cannot tell. When trying to predict future outcomes, no test has 100% sensitivity and specificity and there can be many other variables and intervening events that can lead to a different outcome.

This test carries many of the issues of other cancer screenings. From pap smears to mammograms to prostate-specific antigen test (PSA test) to screen for prostate cancer, tests can point to increased risk or may overlook risk, but are not crystal balls. Treatments that then follow may not be necessary and may lead to unnecessary interventions, or the test results may give a false sense of security and lead to worse outcomes, as the diagnosis is then overlooked.

In view of the differences between the Dermatologist’s View, the Evidence-based Medicine View and the Patient View and the resulting tensions with regard to overdiagnosis, risk assessment, risk of harming related to early diagnosis, risk communication, and patient understanding of the test result and its implications, one of the ethics team members of the group felt that this use case could benefit from results of a long-standing interdisciplinary debate on the ethical aspects of predictive genetic diagnosis. Aspects addressed in this debate include patient autonomy, the right to know and the right not to know, psychosocial implications of receiving test results, the clinical significance of test results, lifestyle related questions, the question of which treatment options to choose based on predictions, the harm resulting from treatments that may prove unnecessary (Geller et al., 1997; Burgess, 2001; Hallowell et al., 2003; Clarke & Wallgren-Pettersson, 2019). In order to discuss the melanoma use case in question, it could be very helpful to build on this debate and ask: What are the similarities, what are the differences between (predictive) genetic testing and early detection of melanoma?

Undoubtedly, there are parallels of this use case in dermatology to genetic testing administered through medical doctors or geneticists. Insofar, it seems advisable to think about how the concept of counselling could be transferred to this case. Besides helping patients cope with the psychosocial implications of melanoma analysis and treatment, a reflection of what counselling would imply in the context of melanoma diagnosis, prediction and prognosis would help improve patient-doctor communication about risk. For counselling and clinical risk communication with the individual patient is a complex task filled with difficulties and pitfalls, e.g. that lay people might understand the concept of overdiagnosis and that strong pre-assumption among lay people might create a perception gap (Hoffmann and Del Mar, 2015; Moynihan et al., 2015; Byskov Petersen et al., 2020). Counselling could also help increase patient involvement and give patients the opportunity to decide on whether they prefer a process involving or not involving a ML system. Similar to this use case, there are two forms of "uses" of (predictive) genetic testing: 1) testing administered by a medical doctor/geneticist, embedded in genetic counselling; and 2) direct-to-consumer genetic testing, the latter coming with additional practical and ethical issues (Caulfield & McGuire, 2012).

There are also relevant differences between this use case and genetic testing. In particular, in predictive genetic testing for serious disorders that may develop in the more distant future, such as in the case of predictive genetic testing for Huntington’s disease, genetic testing may trigger complex and adverse psychological outcomes. Predictive genetic testing may burden an individual with information about future serious health deterioration or a distant death, or trigger an irreversible intervention, like removal of breasts and ovaries in a young woman following BRCA mutation testing when other life events, including even future treatments, may intervene (Broadstock et al., 2000; Hawkins et al., 2011; Eccles et al., 2015). Moreover, genetics gives us a sense of self and a sense of who we are. In contrast, the melanoma use case discussed here does not have this level of impact. Also, the time scale with melanoma analysis is much shorter and so is not as prone to the problems of predicting distant outcomes. Furthermore, unlike genetic testing for familial disorders, melanoma testing is only about the individual person undergoing diagnosis.

Consider the Aim of the Future Artificial Intelligence System as a Claim

One of the key lessons learned at this point is that there may be tensions when considering what the relevant existing evidence to support a claim is, or, as in this case, to support the choice of a design decision when considering different viewpoints.

At this stage of early design, we suggest to consider the aim of the future AI system as a claim that needs to be validated before the AI system is deployed. It is known from the literature (Brundage et al., 2020) that “Verifiable claims are statements for which evidence and arguments can be brought to bear on the likelihood of those claims being true”. As mentioned by (Brundage et al., 2020) if the AI system is already deployed, “claims about AI development are often too vague to be assessed with the limited information publicly made available”.

There is an opportunity to apply claim-oriented approaches in the early design phase of the AI system. We can use in the design of the AI system the Claims, Arguments, and Evidence (CAE) framework–not as an audit process, but rather as a co-design framework.

If we consider the “aim” of the tool we are co-creating as a claim, then we consider the aim as an assertion that needs to be evaluated somehow. Beyond verification, we define the validation of claims as to the use of appropriate forms of evidence and argument to interpret claims as true or false with respect to the original problem statement. Adapting the framework, we then consider arguments as linking evidence to the aim of the AI system, and evidence as to the basis for justification of the aim. Sources of evidence for our use case include the medical research related to the AI system under design and the various viewpoints.

As is the case for the design of this specific AI component, we may discover a tension between the various arguments linking evidence to the aim of the system. At this stage it is important to note this tension and document it, so that it can be taken into account during the later stages of the AI design, and if possible resolve the tension with a trade off.

We list some of the arguments linking evidence to the aim of the system. For some of the Arguments, i.e. Arguments 2 till arguments 5, tensions between different expert view points were also identified.

Argument 1: Malignant melanoma is a very heterogeneous tumor with a clinical course that is very difficult to predict. To date, there are no reliable biomarkers that predict prognosis with certainty. Therefore, there exist subgroups of melanoma patients with different risk for metastasization, some might never metastasize and diagnosing them would be overdiagnosis.

Tensions

For this use case, there are tensions between the various arguments linking evidence to the aim of the system, derived from the different viewpoints expressed by domain experts.

Argument 2: View Point: The Dermatologist.

The dermatologists in our team consider harms from treatment as rather small because of a generally small exzision with no need for general anaesthesia.

Counter Argument: View Point: The Evidence Based Medicine.

The evidence based experts in our team do consider harms from treatments.

Argument 3: View Point: Patient representative.

Patients should be informed about the consequences of screening and therapy before they opt to do it. And, of course, about the prognosis after a diagnosis has been made.

Counter Argument: View Point: The Evidence Based Medicine; The population health view.

According to the WHO screening principles and many countries’ screening criteria, screening should not be offered before robust evidence from high quality evidence (RCTs) shows that the benefits outweigh the harms of screening for malignant melanomas. One of our experts has co-authored a Cochrane review where they did not find this kind of evidence and until then no screening should be performed. Moreover, making a free evidence-based informed choice of whether to be screened or not is not “free”, is not “informed” and is “framed” (Henriksen et al., 2015; Johansson et al., 2019; Byskov Petersen et al., 2020; Rahbek et al., 2021).

Argument 4: View Point: The Dermatologist.

Early detection of malignant melanoma is critical, as the risk of metastasis with worse prognosis increases the longer melanoma remains untreated.

Counter Argument:View Point: The Evidence Based Medicine.

There are no reliable biomarkers that can predict the prognosis of melanoma before excision. There are patients who survive their localized melanoma without therapy. Therefore, the early diagnosis does not necessarily mean a better prognosis; on the contrary, there is a risk of poor patient care due to overdiagnosis.

Argument 5:View Point: The Dermatologist and AI Engineer.

Screening (in the future with AI-devices with even a higher sensitivity) will detect more early, localized melanomas that would have metastasized in a proportion of patients. The benefit of preventing this in this subgroup of patients outweighs the risk of overtreatment of other patients by a small harm - a small excision.

Counter Argument: View Point: The Evidence Based Medicine.

Pivotal ethical value as a physician when conducting clinical work as a GP is “primum non nuocere”—as stated in the original Hippocratic oath–first, do no harm. Therefore, as long as there is lack of robust evidence of high quality that early diagnosis of, or screening for, the melanoma result in reduced morbidity and mortality it cannot support such approaches. At the same time, screening for melanoma will inevitably result in substantial overdiagnosis. Therefore, there is the tendency to plead against screening for a melanoma, and early diagnosis of melanoma (and plead for timely diagnosis of clinical relevant melanoma) until it is provided with robust evidence of high quality that early diagnosis of, or screening for, a melanoma actually result in reduced morbidity and/or mortality of the disease.

Counter Argument: View Point: Patient representative.

We cannot judge as a clinician what is a “small harm”. This can only be judged by the patient.

Is Bias justifiable?

Observing the current literature on AI fairness, there is a tendency to assume that any presence of bias automatically renders the tool ethically unjustifiable. This is an imperfect assumption, however. From a consequentialist perspective, the presence of bias becomes irremediably objectionable only at the moment the harm of bias outweighs any potential good that the tool might bring. While major bias in gender, race and other sensitive areas may often prove ethically challenging, coming to a final conclusion in any particular case will entail argumentation and potential disagreements. Regardless, conclusions are not automatic, and in this regard the current use case can serve as an interesting example.

At first glance, the fact that the tool was predominantly developed for skin types typically found in Caucasians, and that it exhibited considerable bias against darker skin types might lead to a criticism similar to that levelled by Obermeyer et al. (2019) against a different tool. The authors showed that a commercial tool predicting complex health needs exhibited considerable bias against black patients, i.e. getting the same score, black patients were considerably sicker. From the perspective of classical fairness, black and white patients should typically be treated in the same way with regards to access to healthcare resources, and therefore such a tool raises ethical objections.

The argument could be made, however, that the situation in the skin cancer case is different. In contrast to the Obermeyer example, white and black patients do not have the same resource need when it comes to melanoma. The incidence of melanoma in the black population is for example 20–30 times lower than in the white population (Culp & Lunsford, 2019). This makes melanoma in the black population a rare disease, whereas in the white population it is relatively common and a major public health challenge.

Under such circumstances, with differing needs with regards to access to healthcare resources, bias in the given tool, i.e. a development targeted at lighter skin types, could be justifiable in accordance with classical fairness as unequal patients are treated with proportionally unequal resources. It follows that the tool is fair and ethically justified not despite the bias, but because of it (Brusseau, 2021). This paradox is one of the case’s more remarkable features.

It is outside of the scope of this work to reach a final conclusion with regards to this ethical challenge since further arguments need to be considered. For example the fact that the incidence of melanomas in black people is lower but the mortality is higher (Chao et al., 2017) must be weighed as well. Additionally, some of this difference in incidence might be the cause of different degrees of overdiagnosis–again caused by social inequality in access to healthcare. Overdiagnosis in malignant melanoma has the opposite social inequality: those who are highly educated and the richest are those with the highest degree of overdiagnosis (Welch and Fisher, 2017).

Still, the argument remains that the presence of statistical bias should not trigger the automatic response that a tool must be rejected on ethical grounds.

Even if bias in some AI tools could be ethically justifiable, challenges remain. From the perspective of the individual patient, there is still a bias in the system which needs to be clearly and firmly communicated to patients or recalibrated for specific populations before distribution or otherwise it could lead to considerable harm for some patients, though this will be for a minority.

If we consider a health system like in the US, such an AI tool could come with a considerable risk to its producers, given the known bias and the medical liability that could accompany this if not fully communicated, if marketed as a medical diagnostic tool replacing a doctor’s diagnosis.

We do stress the importance of communicating the risks and benefits of the AI tool in different populations.

Verify if Transparency is a prerequisite for Explanation

Explanation and explainability concerns knowing the rationale on the basis of which an AI produces an output. To explain an algorithmic output, it is not sufficient simply to describe how it produces it. To explain the output requires some account of why the output is produced. It is worth noting that transparency is not well defined in the principles of Trustworthy AI, making the relation to explainability very difficult to establish. While there is still no general consensus on what constitutes explainability, we find that three different notions can usefully be distinguished for the purpose of determining whether transparency is a prerequisite for explanation.

On the basis of an analysis of notions of explainability in AI-related research communities Doran et al. (2017, p. 4) distinguish between three ways in which a user may relate to a system. In opaque systems “the mechanisms mapping inputs to outputs are invisible to the user” (Doran et al., 2017, p. 4). In interpretable systems a user can see, study, and understand “how inputs are mathematically mapped to outputs” (Doran et al., 2017, p. 4). Thus, a necessary condition for interpretability is that the system is transparent as opposed to black-boxed. Finally, Doran et al. characterize a system as comprehensible if it “emits symbols along with its output (…).” These symbols “allow the user to relate properties of the inputs to their output” (Doran et al., 2017, p. 4).

While both comprehensible and interpretable systems may be considered improvements as compared to opaque systems, in that they enable explanations of why features of the input led to the output, the explanations given will still depend on human analysis. Transparency should thus be considered a necessary but not a sufficient condition in these cases. In other words, transparency allows for but does not imply explainability. Only a truly explanatory AI system designed as an autonomous system will produce an explanation by itself independent of a human analyst and the contingencies of their context and background knowledge. Given the autonomous nature of these type of systems, explanations will not require transparency about the “inner mechanisms” of the model (Doran et al., 2017, p. 7).

In this case transparency does not mean understanding the mathematical mapping process but identifying/reconstructing “important” drivers that led the model to make a given prediction, and make this understandable from clinicians. Several state-of-the-art approaches exist to compute local explanations of the predictions and to reconstruct these drivers. One introduced by Simonyan et al., is the saliency map method (Simonyan et al., 2014), that assigns a level of importance to each pixel in the input image, using the gradient of prediction with respect to each pixel. This allows one to localize the region of interest in the image, for this use-case, to localize the melanoma.

Another approach to computing local explanations is to perturb the input image. The idea is to modify part of the image, by replacing some pixels, and observing changes in the prediction. When the parts of the image that are important to the prediction are disturbed, the output is changed, while when they are unimportant, the output does not change much. Deep SHAP (Lundberg and Lee, 2017) was introduced as a combination of Deep Lift (Shrikumar et al., 2017) and Shapley Additive Explanation to leverage the explanation capabilities of SHAP values with deep networks. Using concepts from game theory to evaluate different perturbations of the input, it shows the positive and negative contribution of each pixel to the final prediction.

More recently, Lucieri et al. proposed the concept-based Concept Localization Map (CLM) explanation technique (Lucieri, et al., 2020b) as an improvement of previous saliency-based methods, by allowing to highlights groups of pixels representing individual concepts learned by the AI. This is the approach considered in the use-case. Other notable methodologies used in similar cases include adding an attention module that highlight salient features, as was done by Schlemper et al. who used attention for segmentation in abdominal CT scans. (Schlemper et al., 2019).

Given that the intended purpose of the system at hand is to support clinical decision-making, we argue that transparency is a necessary condition for explanations that are dependent on human analysis.

Involve Patients

exAID currently serves as a “trust-component” for existing AI systems. It provides dermatologists with an easy-to-understand explanation that can help guide the diagnostic process. But how can this information be translated and presented to patients, so as to engage them in the decision-making process? What might a discussion aid for the clinical encounter look like?

From a patient-centric view, we need the input of patients to answer these questions and involve them at every stage of the design process. There is indeed a growing body of evidence, indicating that involving patients in healthcare service design can improve patients’ experiences (Tsianakas et al., 2012; Reay et al., 2017). Here it is particularly important to ensure that the views, needs, and preferences of vulnerable and disadvantaged patient groups are taken into account to avoid exacerbating existing inequalities (Amann and Sleigh, 2021).

When faced with decisions about their health, patients should be provided with all available and necessary information that is relevant for making an informed decision. The effective design of explanations of the AI system intended for the patient must still be the task of future investigations: If the system is communicating statistically correct results and therefore also shows very low and low probabilities for the presence of malignant melanoma, which would unlikely lead to the physician taking a sample, this might cause irritation and leave the patient with the feeling of “not having done everything possible”. If, on the other hand, the system would communicate more clearly and was designed to give an own assessment for a “yes” or “no” sample collection, the message would be more clear and understandable for patients, but shift the decision from the physician to the AI system, which is not intentional. AI diagnostic systems, as they are currently designed, do not generally garner the trust of patients, even when they perform much better than human physicians (Longoni et al., 2019). A patient-centered co-design approach could end up reversing these preferences.

Consider the Legal and Ethical Perspectives: Mapping to the Trustworthy Artificial Intelligence Requirements

This AI tool is being developed for use by clinicians to support their decision making about the necessity of next steps for skin lesions and thereby potentially improving diagnostic performance. There are a variety of legal factors that should be considered at this stage. A non-exhaustive discussion of some of these issues is set out below, under the headings defined by the AI HLEG. Not considered here are the licencing and other regulatory requirements of the jurisdiction in which the ML is to be used.

Transparency

Grote and Berens (2020) note that the deployment of machine learning algorithms might shift the evidentiary norms of medical diagnosis. They note; “as the patient is not provided with sufficient information concerning the confidence of a given diagnosis or the rationale of a treatment prediction, she might not be well equipped to give her consent to treatment decisions”. In other words, when a patient may be harmed by an inaccurate prediction, if no explanation for the resulting decision is possible, their truly informed consent cannot be given. This threatens transparency and thereby evidence-based clinical practice, further research and academic appraisal.

Diversity, Non-discrimination, and Fairness

While the notions of bias and fairness are mentioned as issues relating to epistemological risk in section (2.1), there is also a genuine ethical concern about bias, fairness, and equality with respect to the development and use of ML in healthcare (Larrazabal et al., 2020). In general, AI encodes the same biases present in society (Owens & Walker, 2020). This is true when the data is used as is, but if an engineering team works on transforming the data to remove biases, then AI will encode a subset or even a distorted version of these biases.

It will be necessary to identify the ways this ML responds to different races and genders and how any discriminatory effects can be mitigated.

Issues of bias, fairness and equality relate to the issue of trust. Both clinicians and the public may become skeptical about ML systems in diagnostics as a result of problematic cases of inequality in performance across socially salient groups. The opposite could also occur: the public and clinicians trust the AI despite the lack of evidence of the effect of AI on patients’ prognosis–or even evidence showing that the AI is creating more harm than good.

Human Agency and Oversight

The AI HLEG specifically recognizes a “right not to be subject to a decision based solely on automated processing when this (…) significantly affects them” (AI HLEG, 2019, p. 16). For this case, the ML is used as a support mechanism for the decision making of the clinician. On the face of it that seems unproblematic from a human agency and oversight perspective. However, the design process should ensure that it is possible for those who are impacted by the decisions made by AI to challenge them and that the level of human oversight is sufficient (Hickman and Petrin, 2020).

The design process should consider the extent to which a clinician’s agency and autonomous decision-making are or could be reduced by the AI system. The assumption is that the clinicians would only use the AI system to support their classification but any requirement to use it as support may be eroded over time if clinicians were to consider the AI system highly accurate.

Privacy and Data Governance

According to the General Data Protection Regulation (GDPR), an informed and explicit consent is required for the processing of sensitive data, such as health data. A data protection impact assessment in accordance with Article 35 GDPR will need to be carried out. GDPR requirements are extensive and evolving (European Parliament and Council of European Union, 2016). Specific concerns for this use case include the patient's need to consent that an AI system is included in the process and that personal data, in the form of images with related information, may need to be stored for the development of the ML. The possibility of a right to explanation under the GDPR may also pose difficulties to the extent that human understanding of the AI process is limited. The exAID is trying to mitigate this.

A full legal review will be needed to assess the compliance of the system with the GDPR’s requirements. The goal of the GDPR is the protection of fundamental rights and freedoms of natural persons (Art. 1). These are determined in accordance with the Charter of Fundamental Rights of the European Union and the European Convention on Human Rights. This also includes the right to non-discrimination pursuant to Article 21 Charter of Fundamental Rights. This is relevant for this use case in light of the issues, discussed above, relating to accuracy for different skin colours.

Possible Accountability Issues